Analysis and Research on Spectrogram-Based Emotional Speech Signal Augmentation Algorithm

Abstract

1. Introduction

2. Related Theory

2.1. Spectrogram Generation Principle

2.2. Data Augmentation Algorithms

3. Proposed Method

3.1. Subjective Listening Design

- Changes in Audio Quality: The listeners described differences in clarity, distortion, and other audio qualities between the augmented audio and the original audio. They were asked whether the speech still sounded natural and whether there were any unpleasant effects (e.g., blurring, distortion).

- Noise Perception: The listeners noted whether the augmented audio introduced noise, specifying the type of noise (e.g., background noise, buzzing, echoes) and the severity of its impact (mild/significant/severe).

- Changes in Emotional Expression Intensity: The listeners described whether the emotional expression in the augmented audio had changed, such as whether the emotion had been weakened or enhanced. They were also asked whether they could perceive any changes in the authenticity or intensity of the emotion.

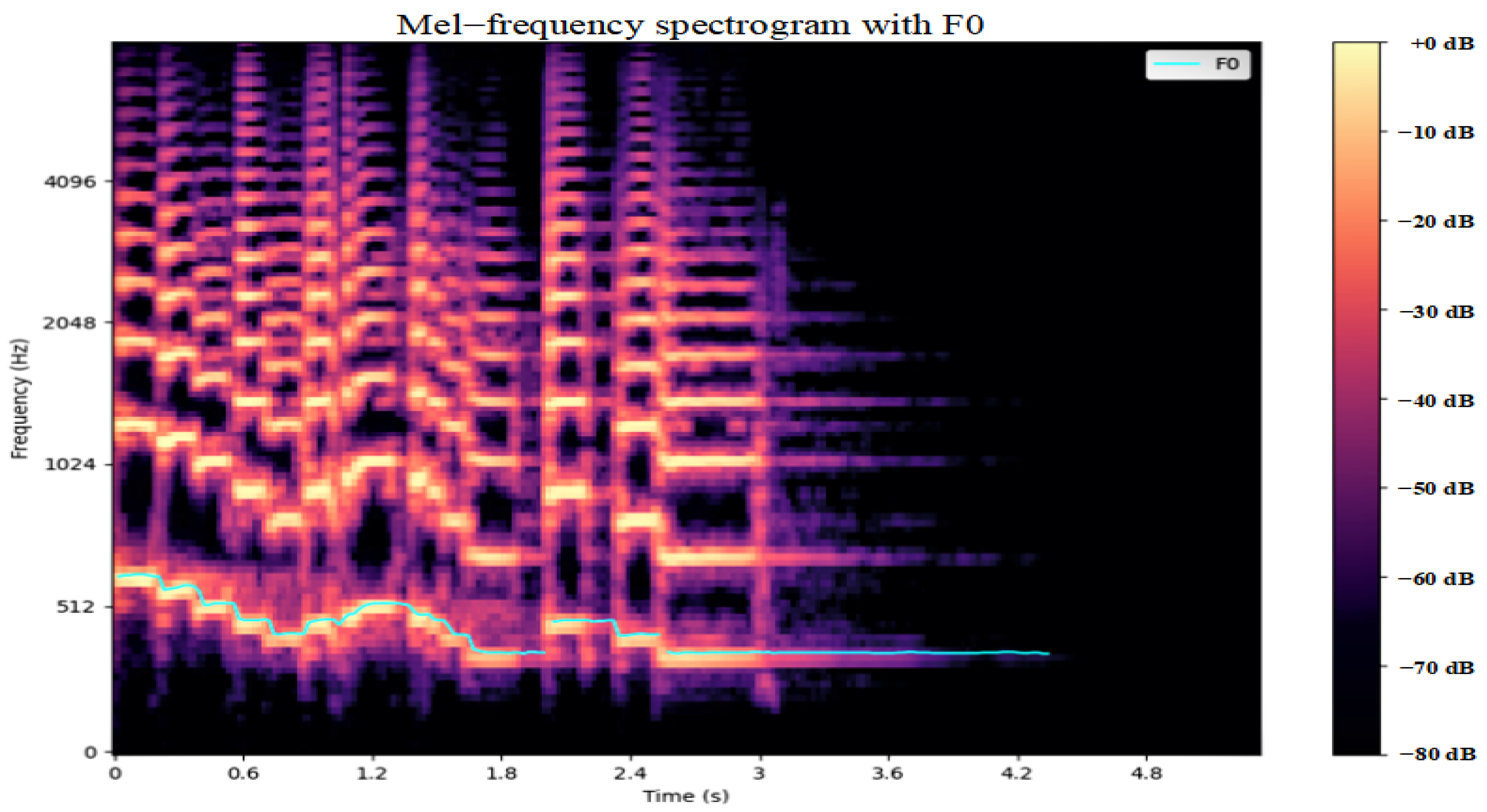

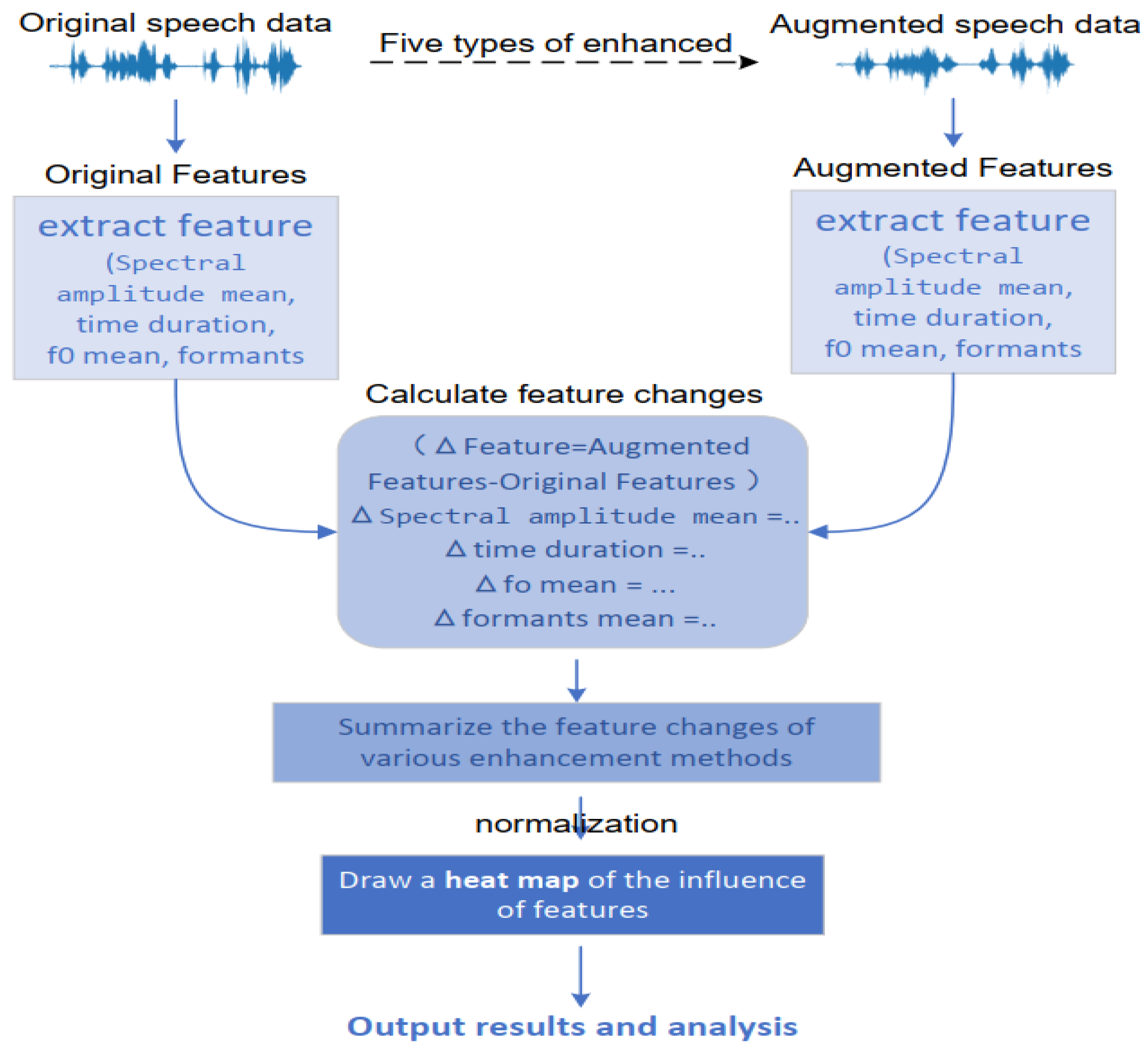

3.2. Spectral Characteristics Analysis and Feature Extraction of the Spectrogram

3.3. Objective Emotion Classification Evaluation Design

4. Experiment Analysis

4.1. Experimental Environment and Dataset

4.2. Subjective Auditory Perception Analysis

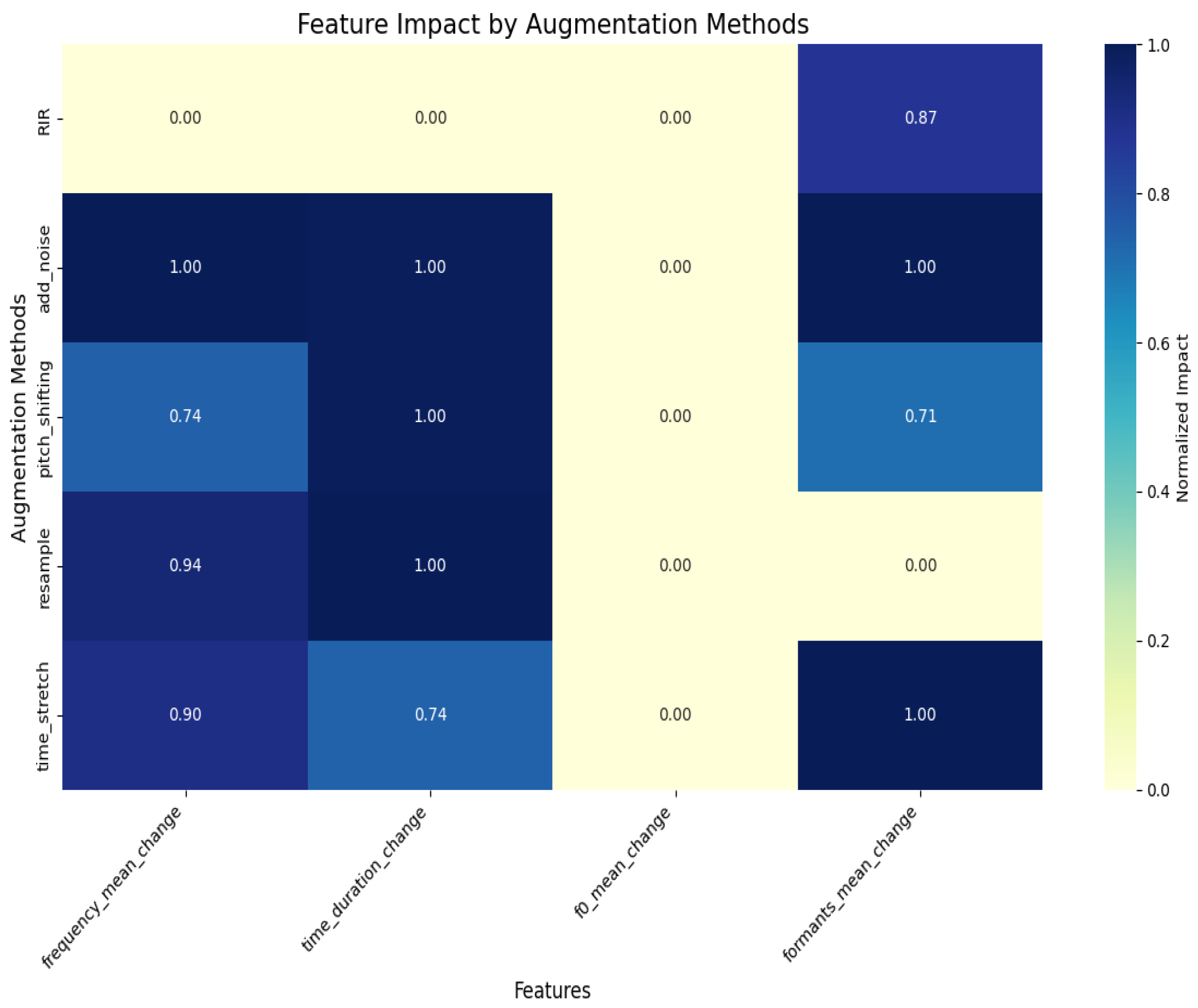

4.3. Impact of Augmentation Algorithms on Spectrogram Spectral Characteristics

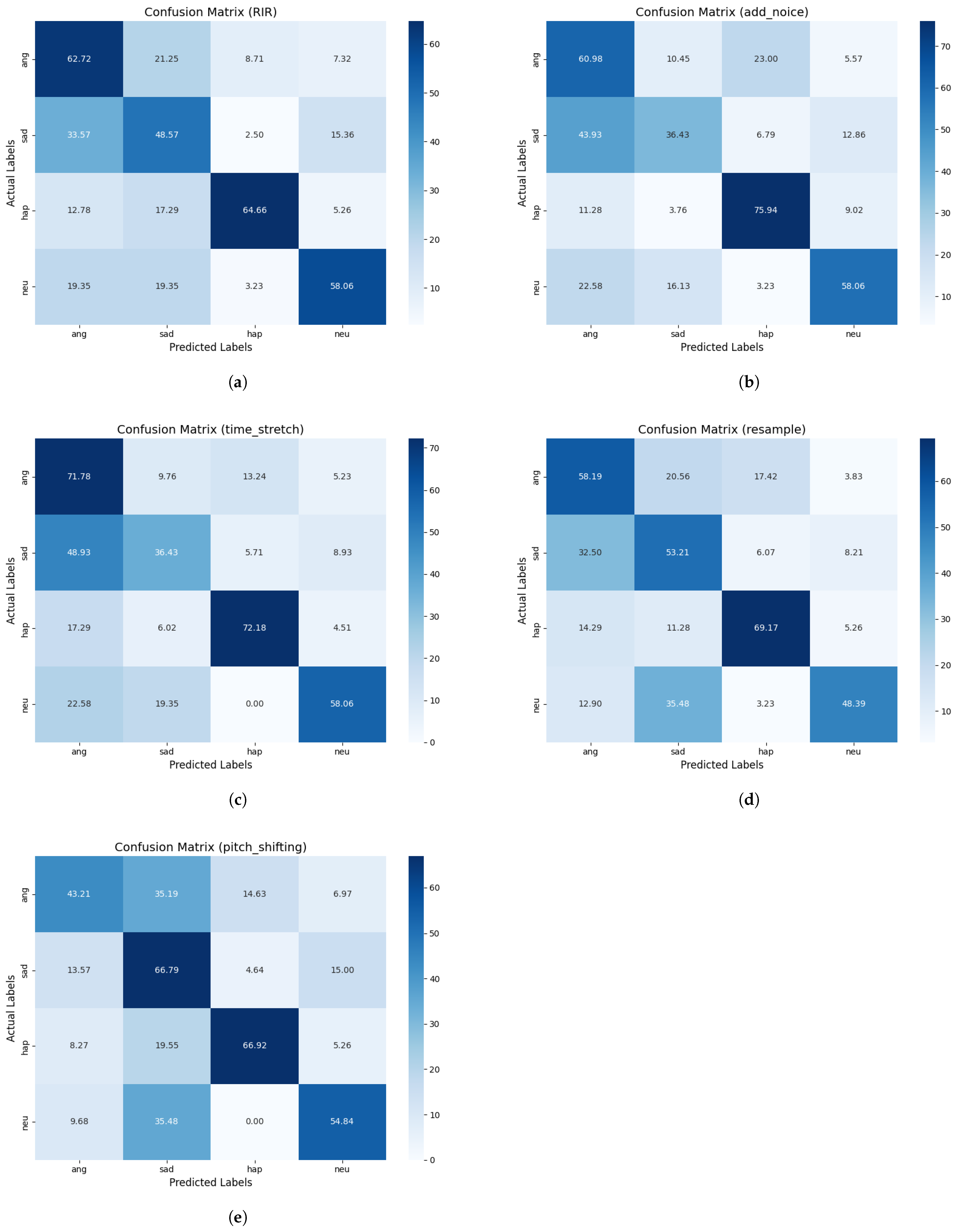

4.4. Objective Emotion Classification Evaluation Results Analysis

- Weighted Accuracy (WA): Compared with the reverberation (RIR) algorithm, the noise addition and time stretching methods are marked `***`, meaning p < 0.001 for the WA metric. This extremely significant difference indicates that their effect on weighted accuracy differs from that of reverberation and is unlikely to be due to chance. The pitch shifting method is marked `**`, meaning p < 0.01, which is a significant difference from reverberation.

- F1-Score: The noise addition, time stretching, and pitch shifting methods are marked `***`, meaning p < 0.001 compared with reverberation, an extremely significant difference. The resampling method is marked `*`, meaning p < 0.05, a significant difference at the 0.05 level. The differences in F1-score between these methods and reverberation are therefore unlikely to be due to chance.

- Precision: The noise addition method is marked `**`, meaning p < 0.01, a significant difference from reverberation. The time stretching and resampling methods are marked `***`, meaning p < 0.001, an extremely significant difference. These methods therefore differ clearly from reverberation in precision, and the differences are unlikely to be explained by random variation alone.
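The star notation above follows the usual thresholds (`***` for p < 0.001, `**` for p < 0.01, `*` for p < 0.05). This section does not state which statistical test produced the p-values, so the sketch below swaps in an exact two-sided permutation test on the difference of mean scores purely as an illustration. The per-run WA values in the usage example are hypothetical, and the `"ns"` label for p ≥ 0.05 is an added convention.

```python
from itertools import combinations
from statistics import mean


def significance_marker(p: float) -> str:
    """Map a p-value to the star notation used in the comparisons above."""
    if not 0.0 <= p <= 1.0:
        raise ValueError(f"p-value must lie in [0, 1], got {p}")
    if p < 0.001:
        return "***"
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return "ns"  # not significant at the 0.05 level (hypothetical label)


def permutation_p_value(a: list[float], b: list[float]) -> float:
    """Exact two-sided permutation test on the difference of means.

    Enumerates every split of the pooled scores into groups of len(a) and
    len(b); this is only feasible for a small number of runs.
    """
    pooled = a + b
    observed = abs(mean(a) - mean(b))
    hits = total = 0
    for idx in combinations(range(len(pooled)), len(a)):
        chosen = set(idx)
        group_a = [pooled[i] for i in idx]
        group_b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        # Small tolerance so floating-point round-off does not drop ties.
        if abs(mean(group_a) - mean(group_b)) >= observed - 1e-12:
            hits += 1
        total += 1
    return hits / total


if __name__ == "__main__":
    # Hypothetical per-run WA scores (%), five runs per augmentation method.
    noise_runs = [67.1, 66.8, 67.5, 67.9, 66.9]
    rir_runs = [62.0, 61.7, 62.4, 62.9, 61.8]
    p = permutation_p_value(noise_runs, rir_runs)
    print(f"p = {p:.4f} -> {significance_marker(p)}")
```

With five runs per method there are only C(10, 5) = 252 distinct splits, so the smallest attainable two-sided p-value is 2/252 ≈ 0.008; reaching the `***` threshold with this particular test would require more runs.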

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Song, M.; Triantafyllopoulos, A.; Yang, Z.; Takeuchi, H.; Nakamura, T.; Kishi, A.; Ishizawa, T.; Yoshiuchi, K.; Jing, X.; Karas, V.; et al. Daily Mental Health Monitoring from Speech: A Real-World Japanese Dataset and Multitask Learning Analysis. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

2. Gao, X.; Song, H.; Li, Y.; Zhao, Q.; Li, W.; Zhang, Y.; Chao, L. Research on Intelligent Quality Inspection of Customer Service Under the “One Network” Operation Mode of Toll Roads. In Proceedings of the 2022 3rd International Conference on Information Science, Parallel and Distributed Systems (ISPDS), Guangzhou, China, 22–24 July 2022; pp. 369–373.

3. Zhang, B.-Y.; Wang, Z.-S. Design Factors Extraction of Companion Robots for the Elderly People Living Alone Based on Miryoku Engineering. In Proceedings of the 2022 15th International Symposium on Computational Intelligence and Design (ISCID), Hangzhou, China, 17–18 December 2022; pp. 110–113.

4. Zhang, K.; Zhou, F.; Wu, L.; Xie, N.; He, Z. Semantic understanding and prompt engineering for large-scale traffic data imputation. Inf. Fusion 2024, 102, 102038.

5. Jackson, P.; Haq, S. Surrey Audio-Visual Expressed Emotion (SAVEE) Database; University of Surrey: Guildford, UK, 2014.

6. Burkhardt, F.; Paeschke, A.; Rolfes, M.; Sendlmeier, W.F.; Weiss, B. A database of German emotional speech. Interspeech 2005, 5, 1517–1520.

7. Atmaja, B.T.; Sasou, A. Effects of data augmentations on speech emotion recognition. Sensors 2022, 22, 5941.

8. Barhoumi, C.; Ayed, Y.B. Improving Speech Emotion Recognition Using Data Augmentation and Balancing Techniques. In Proceedings of the 2023 International Conference on Cyberworlds (CW), Sousse, Tunisia, 3–5 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 282–289.

9. Tao, H.; Shan, S.; Hu, Z.; Zhu, C. Strong generalized speech emotion recognition based on effective data augmentation. Entropy 2022, 25, 68.

10. Arias, P.; Rachman, L.; Liuni, M.; Aucouturier, J.J. Beyond correlation: Acoustic transformation methods for the experimental study of emotional voice and speech. Emot. Rev. 2021, 13, 12–24.

11. Liu, L.; Götz, A.; Lorette, P.; Tyler, M.D. How tone, intonation and emotion shape the development of infants’ fundamental frequency perception. Front. Psychol. 2022, 13, 906848.

12. Bergelson, E.; Idsardi, W.J. A neurophysiological study into the foundations of tonal harmony. Neuroreport 2009, 20, 239–244.

13. Teng, X.; Meng, Q.; Poeppel, D. Modulation Spectra Capture EEG Responses to Speech Signals and Drive Distinct Temporal Response Functions. eNeuro 2021, 8.

14. Luengo, I.; Navas, E.; Hernáez, I. Feature analysis and evaluation for automatic emotion identification in speech. IEEE Trans. Multimed. 2010, 12, 490–501.

15. Badshah, A.M.; Ahmad, J.; Rahim, N.; Baik, S.W. Speech Emotion Recognition from Spectrograms with Deep Convolutional Neural Network. In Proceedings of the 2017 International Conference on Platform Technology and Service (PlatCon), Busan, Republic of Korea, 13–15 February 2017; pp. 1–5.

16. Zhang, S.; Zhao, Z.; Guan, C. Multimodal Continuous Emotion Recognition: A Technical Report for ABAW5. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Vancouver, BC, Canada, 17–24 June 2023; pp. 5764–5769.

17. Biswas, M.; Sahu, M.; Agrebi, M.; Singh, P.K.; Badr, Y. Speech Emotion Recognition Using Deep CNNs Trained on Log-Frequency Spectrograms. In Innovations in Machine and Deep Learning: Case Studies and Applications; Springer Nature: Cham, Switzerland, 2023; pp. 83–108.

18. Li, H.; Li, J.; Liu, H.; Liu, T.; Chen, Q.; You, X. Meltrans: Mel-spectrogram relationship-learning for speech emotion recognition via transformers. Sensors 2024, 24, 5506.

19. Feng, T.; Hashemi, H.; Annavaram, M.; Narayanan, S.S. Enhancing Privacy Through Domain Adaptive Noise Injection For Speech Emotion Recognition. In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 7702–7706.

20. Mujaddidurrahman, A.; Ernawan, F.; Wibowo, A.; Sarwoko, E.A.; Sugiharto, A.; Wahyudi, M.D.R. Speech emotion recognition using 2D-CNN with data augmentation. In Proceedings of the 2021 International Conference on Software Engineering & Computer Systems and 4th International Conference on Computational Science and Information Management (ICSECS-ICOCSIM), Pekan, Malaysia, 24–26 August 2021; pp. 685–689.

21. Braunschweiler, N.; Doddipatla, R.; Keizer, S.; Stoyanchev, S. A Study on Cross-Corpus Speech Emotion Recognition and Data Augmentation. In Proceedings of the 2021 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Cartagena, Colombia, 13–17 December 2021; pp. 24–30.

22. Zhao, W.; Yin, B. Environmental sound classification based on pitch shifting. In Proceedings of the 2022 International Seminar on Computer Science and Engineering Technology (SCSET), Indianapolis, IN, USA, 8–9 January 2022; pp. 275–280.

23. Hailu, N.; Siegert, I.; Nürnberger, A. Improving Automatic Speech Recognition Utilizing Audio-codecs for Data Augmentation. In Proceedings of the 2020 IEEE 22nd International Workshop on Multimedia Signal Processing (MMSP), Tampere, Finland, 21–24 September 2020; pp. 1–5.

24. Ko, T.; Peddinti, V.; Povey, D.; Seltzer, M.L.; Khudanpur, S. A study on data augmentation of reverberant speech for robust speech recognition. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 5220–5224.

25. Lin, W.; Mak, M.W. Robust Speaker Verification Using Population-Based Data Augmentation. In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 7642–7646.

26. Smith, S. Digital Signal Processing: A Practical Guide for Engineers and Scientists; Newnes: Brierley Hill, UK, 2003.

27. Yi, L.; Mak, M.W. Improving speech emotion recognition with adversarial data augmentation network. IEEE Trans. Neural Networks Learn. Syst. 2020, 33, 172–184.

28. Rudd, D.H.; Huo, H.; Xu, G. Leveraged mel spectrograms using harmonic and percussive components in speech emotion recognition. In Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining, Chengdu, China, 16–19 May 2022; Springer International Publishing: Cham, Switzerland, 2022; pp. 392–404.

29. McFee, B.; Raffel, C.; Liang, D. librosa: Audio and Music Signal Analysis in Python. In Proceedings of the Python in Science Conference, Austin, TX, USA, 6–12 July 2015.

30. Mauch, M.; Dixon, S. PYIN: A fundamental frequency estimator using probabilistic threshold distributions. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 659–663.

31. Araujo, A.D.L.; Violaro, F. Formant frequency estimation using a Mel-scale LPC algorithm. In Proceedings of the ITS’98 Proceedings. SBT/IEEE International Telecommunications Symposium (Cat. No. 98EX202), Sao Paulo, Brazil, 9–13 August 1998; IEEE: Piscataway, NJ, USA, 1998; pp. 207–212.

32. Xu, L.; Zhao, F.; Xu, P.; Cao, B. Infrared target recognition with deep learning algorithms. Multimed. Tools Appl. 2023, 82, 17213–17230.

33. Wang, B.; Sun, Y.; Chu, Y.; Min, C.; Yang, Z.; Lin, H. Local discriminative graph convolutional networks for text classification. Multimed. Syst. 2023, 29, 2363–2373.

34. Li, J.; Liu, S.; Gao, Y.; Lv, Y.; Wei, H. UWB (N)LOS identification based on deep learning and transfer learning. IEEE Commun. Lett. 2024, 28, 2111–2115.

35. Liu, Z.T.; Han, M.T.; Wu, B.H.; Rehman, A. Speech emotion recognition based on convolutional neural network with attention-based bidirectional long short-term memory network and multi-task learning. Appl. Acoust. 2023, 202, 109178.

36. Ahmed, S.F.; Alam, M.S.B.; Hassan, M.; Rozbu, M.R.; Ishtiak, T.; Rafa, N.; Gandomi, A.H. Deep learning modelling techniques: Current progress, applications, advantages, and challenges. Artif. Intell. Rev. 2023, 56, 13521–13617.

37. Ullah, R.; Asif, M.; Shah, W.A.; Anjam, F.; Ullah, I.; Khurshaid, T.; Alibakhshikenari, M. Speech emotion recognition using convolution neural networks and multi-head convolutional transformer. Sensors 2023, 23, 6212.

38. Chauhan, K.; Sharma, K.K.; Varma, T. Speech emotion recognition using convolution neural networks. In Proceedings of the 2021 International Conference on Artificial Intelligence and Smart Systems (ICAIS), Coimbatore, India, 25–27 March 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1176–1181.

39. Maas, A.L.; Hannun, A.Y.; Ng, A.Y. Rectifier nonlinearities improve neural network acoustic models. In Proceedings of the 30th International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; Volume 30, p. 3.

40. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

41. Vaessen, N.; Van Leeuwen, D.A. Fine-Tuning Wav2Vec2 for Speaker Recognition. In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 7967–7971.

42. Dai, D.; Wu, Z.; Li, R.; Wu, X.; Jia, J.; Meng, H. Learning Discriminative Features from Spectrograms Using Center Loss for Speech Emotion Recognition. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 7405–7409.

43. Xu, C.; Zhu, Z.; Wang, J.; Wang, J.; Zhang, W. Understanding the role of cross-entropy loss in fairly evaluating large language model-based recommendation. arXiv 2024, arXiv:2402.06216.

44. Sharma, M. Multi-Lingual Multi-Task Speech Emotion Recognition Using wav2vec 2.0. In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 6907–6911.

45. Busso, C.; Bulut, M.; Lee, C.-C.; Kazemzadeh, A.; Mower, E.; Kim, S.; Chang, J.N.; Lee, S.; Narayanan, S.S. IEMOCAP: Interactive Emotional Dyadic Motion Capture Database. Lang. Resour. Eval. 2008, 42, 335–359.

46. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

47. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

48. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

49. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

50. Ibrahim, K.M.; Perzo, A.; Leglaive, S. Towards Improving Speech Emotion Recognition Using Synthetic Data Augmentation from Emotion Conversion. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 10636–10640.

51. Pappagari, R.; Villalba, J.; Żelasko, P.; Moro-Velazquez, L.; Dehak, N. CopyPaste: An Augmentation Method for Speech Emotion Recognition. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 6324–6328.

52. Tiwari, U.; Soni, M.; Chakraborty, R.; Panda, A.; Kopparapu, S.K. Multi-Conditioning and Data Augmentation Using Generative Noise Model for Speech Emotion Recognition in Noisy Conditions. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 7194–7198.

| Data Augmentation Algorithm | Implementation in Spectrograms |
|---|---|
| Time Stretching | Modifies the time axis of the spectrogram to change the speaking rate or rhythm |
| Noise Addition | Adds background or environmental noise to the spectrogram |
| Pitch Shifting | Adjusts the amplitudes and phases of frequency components to shift the frequency distribution, simulating changes in pitch or fundamental frequency |
| Reverberation (RIR) | Adds reverberation in the time domain to simulate different acoustic spaces, making the frequency distribution in the spectrogram more complex and blurring distinct frequency boundaries |
| Resampling | Adjusts the frequency resolution of the spectrogram to simulate speech recorded under different sampling conditions |
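The table describes time stretching as an operation on the spectrogram's time axis. The sketch below is a simplification that assumes a magnitude or Mel spectrogram of shape `(n_freq, n_frames)` and resamples each frequency row onto a shorter or longer frame grid by linear interpolation. It is not necessarily how the paper implements the augmentation: waveform-domain tools such as `librosa.effects.time_stretch` use a phase vocoder instead, and the function name here is hypothetical.

```python
import numpy as np


def stretch_spectrogram_time(spec: np.ndarray, rate: float) -> np.ndarray:
    """Stretch a magnitude (or Mel) spectrogram along its time axis.

    spec has shape (n_freq, n_frames). rate > 1 shortens the output
    (faster speech); rate < 1 lengthens it (slower speech). Each frequency
    row is linearly interpolated onto the new frame grid.
    """
    if rate <= 0:
        raise ValueError("rate must be positive")
    n_freq, n_frames = spec.shape
    n_out = max(1, int(round(n_frames / rate)))
    # Positions of the output frames expressed in input-frame coordinates.
    src = np.linspace(0.0, n_frames - 1, n_out)
    grid = np.arange(n_frames)
    return np.stack([np.interp(src, grid, row) for row in spec])


if __name__ == "__main__":
    mel = np.random.default_rng(0).random((64, 200))  # stand-in 64 x 200 Mel spectrogram
    for rate in (0.8, 2.0):
        print(rate, stretch_spectrogram_time(mel, rate).shape)
```

At rate 0.8 a 200-frame input becomes 250 frames and at rate 2.0 it becomes 100 frames, so a model with a fixed input size such as [B, 1, 64, 200] would need the result cropped or padded back to 200 frames; how the paper handles this is not stated here.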

| Component | Description |
|---|---|
| Input Layer | Receives the preprocessed Mel spectrogram, with input size [B, 1, 64, 200] |
| Convolutional Layer | Two convolutions, with kernel sizes (10, 2) and (2, 8), extract multi-channel features |
| Feature Fusion | The two convolution outputs are concatenated along the channel dimension to obtain an enhanced feature representation |
| Residual Module | Stacked residual modules progressively refine the feature representation; the final output size is [B, 256, 4, 13] |
| Global Average Pooling | Collapses the feature map into a one-dimensional feature vector of size [B, 256] |
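The table gives the input size [B, 1, 64, 200] and the residual output size [B, 256, 4, 13] but not how the spatial dimensions shrink. One configuration consistent with both, assumed here rather than taken from the paper, uses 'same'-padded input convolutions (so their outputs can be concatenated) followed by four stride-2 stages: halving 64 four times gives 4, and halving 200 four times while rounding up gives 13. The channel widths are hypothetical, and the sketch only traces shapes (batch dimension omitted) with the standard convolution output-size formula.

```python
def conv_out(n: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output length along one axis of a convolution (dilation 1)."""
    return (n + 2 * padding - kernel) // stride + 1


def trace_feature_extractor(h: int = 64, w: int = 200):
    """Trace (channels, height, width) through a hypothetical layer stack.

    Assumptions: each input branch outputs 16 channels with 'same' padding,
    and four residual stages each downsample with a 3x3, stride-2,
    padding-1 convolution. Only the input and final sizes come from the table.
    """
    shapes = [("input", (1, h, w))]
    # 'Same' padding keeps H x W, so the (10, 2) and (2, 8) branches align
    # and can be concatenated along the channel axis: 16 + 16 channels.
    shapes.append(("fused conv branches", (32, h, w)))
    for stage, channels in enumerate((32, 64, 128, 256), start=1):
        h = conv_out(h, kernel=3, stride=2, padding=1)
        w = conv_out(w, kernel=3, stride=2, padding=1)
        shapes.append((f"residual stage {stage}", (channels, h, w)))
    shapes.append(("global average pooling", (256,)))
    return shapes


if __name__ == "__main__":
    for name, shape in trace_feature_extractor():
        print(f"{name:26s} {shape}")
```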

| Component | Description |
|---|---|
| Input Layer | Receives the one-dimensional feature vector output by the feature extractor, of size [B, 256] |
| Fully Connected Layer 1 | Maps the features to a 64-dimensional space, followed by ReLU activation and Dropout to prevent overfitting |
| Fully Connected Layer 2 | Outputs a probability distribution over the four emotion classes (Anger, Happiness, Sadness, Neutral) via Softmax |
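A NumPy sketch of the classifier head described in the table: a 256-to-64 fully connected layer with ReLU and dropout, then a 64-to-4 layer with softmax over the four emotion classes. The dropout rate (0.5), the random weights, and the function names are assumptions for illustration; a real model would learn its weights during training.

```python
import numpy as np

EMOTIONS = ("Anger", "Happiness", "Sadness", "Neutral")


def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def classifier_head(x, w1, b1, w2, b2, drop_p=0.5, training=False, rng=None):
    """FC(256 -> 64) + ReLU + Dropout, then FC(64 -> 4) + softmax.

    x: [B, 256] pooled features; returns [B, 4] class probabilities.
    """
    h = np.maximum(x @ w1 + b1, 0.0)  # fully connected layer 1 + ReLU
    if training and drop_p > 0:
        # Inverted dropout: zero each unit with probability drop_p and rescale
        # the survivors so the expected activation is unchanged.
        rng = rng if rng is not None else np.random.default_rng()
        keep = rng.random(h.shape) >= drop_p
        h = h * keep / (1.0 - drop_p)
    return softmax(h @ w2 + b2)  # fully connected layer 2 + softmax


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical, untrained weights; real ones would be learned.
    w1, b1 = rng.normal(0, 0.05, (256, 64)), np.zeros(64)
    w2, b2 = rng.normal(0, 0.05, (64, 4)), np.zeros(4)
    feats = rng.standard_normal((8, 256))  # a batch of 8 pooled feature vectors
    probs = classifier_head(feats, w1, b1, w2, b2)
    print([EMOTIONS[i] for i in probs.argmax(axis=1)])
```

In a framework such as PyTorch, the final softmax is usually left out of the model during training because `torch.nn.CrossEntropyLoss` expects raw logits and applies log-softmax internally; softmax is then applied only at inference time to obtain class probabilities.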

| Data Augmentation Algorithm | Parameter Settings | Fixed Parameters |
|---|---|---|
| Time Stretching | Stretch rates: [0.8, 1.2, 1.5, 1.8, 2.0] | N/A |
| Noise Addition | Signal power; noise coefficient; noisy speech | dB |
| Resampling | Target sampling rate: 20,000 Hz; audio is first resampled from 16,000 Hz to 20,000 Hz and then back to the original sampling rate | Original sampling rate: 16,000 Hz |
| Pitch Shifting | Pitch-shift steps (semitones): [−6, −3, 0, 3, 6]; bins per octave: 12 | N/A |
| Reverberation (RIR) | Source positions: [[1, 1, 1.75], [5, 4, 1.75], [9, 7, 1.75]]; microphone positions: [[0.5, 0.5, 1.2], [4, 3, 1.2], [8, 6, 1.2]] | 3D room dimensions: [10, 8, 3.5] |
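The noise-addition row lost its formulas in extraction. The standard construction its terms name (signal power, a noise coefficient, the noisy signal, and an SNR in dB) is sketched below as an assumption: the signal power P_s is the mean squared amplitude, the target noise power is P_n = P_s / 10^(SNR/10), and unit-variance noise is scaled by a coefficient so its power equals P_n. The SNR used in the paper is not recoverable here, so the 20 dB in the test is hypothetical. The helpers also translate the other rows: a shift of n semitones at 12 bins per octave scales frequency by 2^(n/12), and resampling from 16,000 Hz to 20,000 Hz corresponds to an up/down ratio of 5/4.

```python
import math

import numpy as np

# Parameter values transcribed from the table above.
TIME_STRETCH_RATES = [0.8, 1.2, 1.5, 1.8, 2.0]
PITCH_STEPS = [-6, -3, 0, 3, 6]  # semitones
BINS_PER_OCTAVE = 12
ORIG_SR, RESAMPLE_SR = 16_000, 20_000


def add_noise_snr(x: np.ndarray, snr_db: float, rng=None) -> np.ndarray:
    """Add white Gaussian noise scaled so the output has the requested SNR (dB)."""
    rng = rng if rng is not None else np.random.default_rng()
    signal_power = np.mean(x ** 2)                      # P_s
    noise_power = signal_power / 10 ** (snr_db / 10)    # P_n = P_s / 10^(SNR/10)
    noise = rng.standard_normal(x.shape)
    coeff = np.sqrt(noise_power / np.mean(noise ** 2))  # noise coefficient
    return x + coeff * noise                            # noisy speech


def pitch_ratio(n_steps: float, bins_per_octave: int = BINS_PER_OCTAVE) -> float:
    """Frequency scaling factor for a shift of n_steps: 2 ** (n / bins_per_octave)."""
    return 2.0 ** (n_steps / bins_per_octave)


def resample_factors(orig_sr: int, target_sr: int) -> tuple[int, int]:
    """Smallest integer (up, down) pair with target_sr / orig_sr == up / down."""
    g = math.gcd(orig_sr, target_sr)
    return target_sr // g, orig_sr // g


if __name__ == "__main__":
    print("durations of a 3 s clip:", [round(3.0 / r, 2) for r in TIME_STRETCH_RATES])
    print("pitch ratios:", [round(pitch_ratio(n), 3) for n in PITCH_STEPS])
    print("resample up/down:", resample_factors(ORIG_SR, RESAMPLE_SR))
```

With librosa, these augmentations would typically be applied via `librosa.effects.time_stretch(y, rate=r)`, `librosa.effects.pitch_shift(y, sr=sr, n_steps=n, bins_per_octave=12)` and `librosa.resample(y, orig_sr=16000, target_sr=20000)`; whether the paper uses exactly these calls is not stated in this section.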

| Augmentation Algorithm | Listener’s Audio Quality Evaluation | Listener’s Noise Perception | Listener’s Description of Emotional Expression Changes |
|---|---|---|---|
| Original Audio | Clear, natural, no distortion | No noise | Emotional expression is clear and unchanged; best speech quality |
| Noise Addition | Slightly blurred, mild distortion | Increased (slight) background noise | The noise obscures the finer details of emotional expression |
| Time Stretching | Changed speaking rate, slight distortion | No noticeable noise | An excessively fast speaking rate can cause originally sad speech to be perceived as cheerful |
| Resampling | Slight distortion, frequency variation | No noticeable noise | Distortion impairs emotional expression, especially for intense emotions (e.g., anger) |
| Pitch Shifting | Timbre change, good clarity | No noticeable noise | Timbre changes introduce subtle emotional differences, notably confusion between anger and sadness |
| Reverberation (RIR) | Audible reverberation, slightly blurry | Slight noise | Overlapping reflections smear the speech, hindering clear conveyance of emotion |

| Augmentation Method | Good Recognition Performance | High Misclassification Rate | Other Observations |
|---|---|---|---|
| Time Stretching | “Anger” (71.78%) and “Happiness” (72.18%) | “Sadness” (48.93% misclassified as “Anger”) | “Neutral” performs poorly; its blurred class boundaries significantly reduce model performance |
| Reverberation (RIR) | “Anger” (62.72%) and “Happiness” (64.66%) | “Sadness” (33.57% misclassified as “Anger”), “Neutral” (19.35% misclassified as “Sadness”) | Overall stability is better than that of the other methods |
| Noise Addition | “Happiness” (75.94%) | “Sadness” (43.93% misclassified as “Anger”) | Increases data diversity but substantially affects class boundaries |
| Pitch Shifting | “Sadness” (66.79%) and “Happiness” (66.92%) | “Anger” (35.19% misclassified as “Sadness”) | Confusion between “Anger” and “Sadness” is pronounced |
| Resampling | “Happiness” (69.17%) | “Anger” (20.56% misclassified as “Sadness”), “Neutral” (35.48% misclassified as “Sadness”) | “Neutral” performs worst, with severe confusion |
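Percentages such as “48.93% misclassified as Anger” are row-normalized entries of a confusion matrix (rows: true class, columns: predicted class). The sketch below computes those rates together with weighted accuracy, taken here as overall accuracy following the usual speech-emotion-recognition convention, and macro-averaged precision and F1; whether the paper averages precision and F1 this way is an assumption. The labels in the test are hypothetical, not the paper's data.

```python
import numpy as np

EMOTIONS = ("Anger", "Happiness", "Sadness", "Neutral")


def confusion_matrix(y_true, y_pred, n_classes=len(EMOTIONS)):
    """Rows index the true class, columns the predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm


def summarize(cm):
    """Return row-normalized rates, WA, macro precision and macro F1."""
    cm = cm.astype(float)
    diag = np.diag(cm)
    row_sums, col_sums = cm.sum(axis=1), cm.sum(axis=0)
    # Entry [i, j] is the share of true class i that was predicted as class j.
    rates = np.divide(cm, row_sums[:, None], out=np.zeros_like(cm),
                      where=row_sums[:, None] > 0)
    wa = diag.sum() / cm.sum()  # overall accuracy
    recall = np.divide(diag, row_sums, out=np.zeros_like(diag), where=row_sums > 0)
    precision = np.divide(diag, col_sums, out=np.zeros_like(diag), where=col_sums > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(diag), where=denom > 0)
    return rates, wa, precision.mean(), f1.mean()


def worst_confusions(rates, labels=EMOTIONS):
    """For each true class, the most frequent wrong prediction and its rate."""
    off = rates.copy()
    np.fill_diagonal(off, -1.0)  # exclude correct predictions
    return {labels[i]: (labels[int(j)], float(off[i, j]))
            for i, j in enumerate(off.argmax(axis=1))}


if __name__ == "__main__":
    # Hypothetical labels: 0 = Anger, 1 = Happiness, 2 = Sadness, 3 = Neutral.
    y_true = [0, 0, 0, 0, 1, 1, 1, 1, 2, 2, 2, 2, 3, 3, 3, 3]
    y_pred = [0, 0, 0, 2, 1, 1, 1, 0, 2, 2, 0, 0, 3, 2, 2, 3]
    rates, wa, prec, f1 = summarize(confusion_matrix(y_true, y_pred))
    print(f"WA={wa:.3f} precision={prec:.3f} F1={f1:.3f}")
    print(worst_confusions(rates))
```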

| Network | Baseline WA (%) | Baseline + RIR + Resample WA (%) | Improvement (percentage points) |
|---|---|---|---|
| ResNet18 [46] | 60.28 | 62.39 | +2.11 |
| VGG16 [47] | 52.58 | 60.94 | +8.36 |
| GoogleNet [48] | 56.52 | 59.26 | +2.74 |
| DenseNet [49] | 59.28 | 63.32 | +4.04 |
| Ours | 60.64 | 67.74 | +7.10 |
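The improvement column is the absolute difference between the two WA columns, expressed in percentage points. The short check below recomputes it from the table's own values; `RESULTS` and `improvement_pp` are illustrative names, not part of the paper.

```python
# WA (%) from the table above: (baseline, baseline + RIR + resampling).
RESULTS = {
    "ResNet18": (60.28, 62.39),
    "VGG16": (52.58, 60.94),
    "GoogleNet": (56.52, 59.26),
    "DenseNet": (59.28, 63.32),
    "Ours": (60.64, 67.74),
}


def improvement_pp(baseline: float, augmented: float) -> float:
    """Absolute gain in percentage points, rounded to the table's precision."""
    return round(augmented - baseline, 2)


if __name__ == "__main__":
    for name, (base, aug) in RESULTS.items():
        print(f"{name:10s} {improvement_pp(base, aug):+.2f} pp")
```

By this measure VGG16 gains the most (+8.36 points), while the proposed network reaches the highest WA after augmentation (67.74%).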

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tao, H.; Li, S.; Wang, X.; Liu, B.; Zheng, S. Analysis and Research on Spectrogram-Based Emotional Speech Signal Augmentation Algorithm. Entropy 2025, 27, 640. https://doi.org/10.3390/e27060640

Tao H, Li S, Wang X, Liu B, Zheng S. Analysis and Research on Spectrogram-Based Emotional Speech Signal Augmentation Algorithm. Entropy. 2025; 27(6):640. https://doi.org/10.3390/e27060640

Chicago/Turabian Style: Tao, Huawei, Sixian Li, Xuemei Wang, Binkun Liu, and Shuailong Zheng. 2025. "Analysis and Research on Spectrogram-Based Emotional Speech Signal Augmentation Algorithm" Entropy 27, no. 6: 640. https://doi.org/10.3390/e27060640

APA Style: Tao, H., Li, S., Wang, X., Liu, B., & Zheng, S. (2025). Analysis and Research on Spectrogram-Based Emotional Speech Signal Augmentation Algorithm. Entropy, 27(6), 640. https://doi.org/10.3390/e27060640