DFST-UNet: Dual-Domain Fusion Swin Transformer U-Net for Image Forgery Localization

Abstract

1. Introduction

- A novel Swin Transformer-based U-shaped architecture is proposed for image forgery localization. Swin Transformer blocks are integrated into the U-Net architecture to capture long-range contextual information and to identify forged regions at different scales.

- A dual-stream encoder is proposed to expose forgery traces in both the RGB domain and the frequency domain. A Hierarchical Attention Fusion Module (HAFM) is designed to fuse the dual-domain features.

- Experimental results show that our method outperforms state-of-the-art (SOTA) methods in image forgery localization, particularly in terms of generalization and robustness.
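
To make the window-based processing behind Swin Transformer blocks [37] concrete, the following is a minimal NumPy sketch of non-overlapping window partitioning and the cyclic shift used for shifted windows. It is an illustration only, not the authors' implementation; the function names, the 8 × 8 feature map, and the window size of 4 are assumptions chosen for the example.

```python
import numpy as np

def window_partition(x, window_size):
    """Split an (H, W, C) feature map into non-overlapping square windows."""
    h, w, c = x.shape
    assert h % window_size == 0 and w % window_size == 0
    x = x.reshape(h // window_size, window_size, w // window_size, window_size, c)
    # Bring the two window-grid axes together, then flatten them.
    return x.transpose(0, 2, 1, 3, 4).reshape(-1, window_size, window_size, c)

def cyclic_shift(x, window_size):
    """Roll the map by half a window so the next partition straddles old borders."""
    shift = window_size // 2
    return np.roll(x, shift=(-shift, -shift), axis=(0, 1))

# Hypothetical 8 x 8 single-channel feature map and window size 4.
feature_map = np.arange(8 * 8, dtype=np.float32).reshape(8, 8, 1)
regular_windows = window_partition(feature_map, 4)
shifted_windows = window_partition(cyclic_shift(feature_map, 4), 4)
print(regular_windows.shape, shifted_windows.shape)
```

Self-attention is computed inside each window; alternating regular and shifted partitions lets information cross window borders from one block to the next.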

2. Related Work

2.1. CNN-Based Approaches

2.2. Transformer-Based Approaches

3. Methodology

3.1. Overall Network Architecture

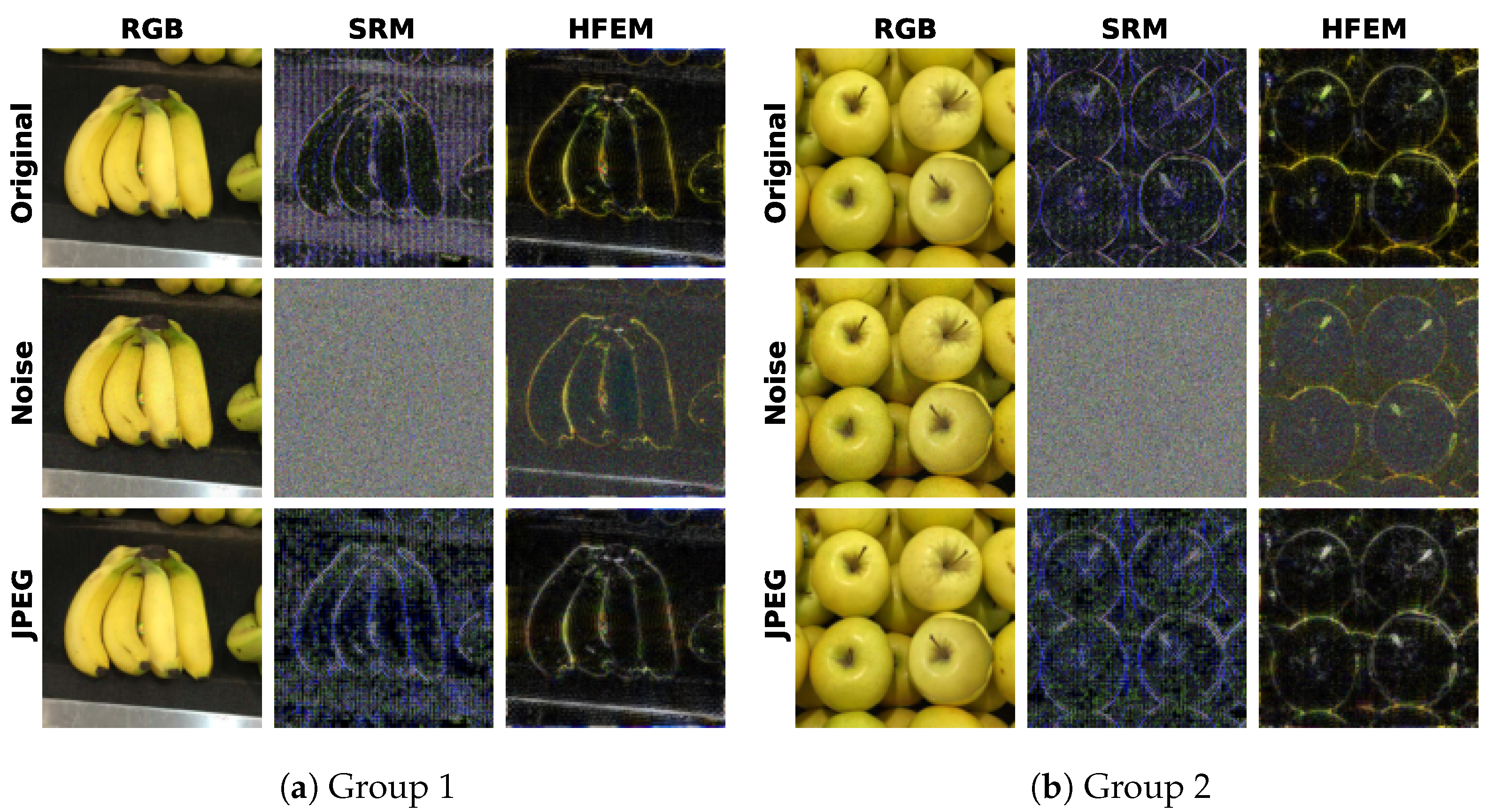

3.2. High-Frequency Feature Extractor

3.3. Hierarchical Attention Fusion Module

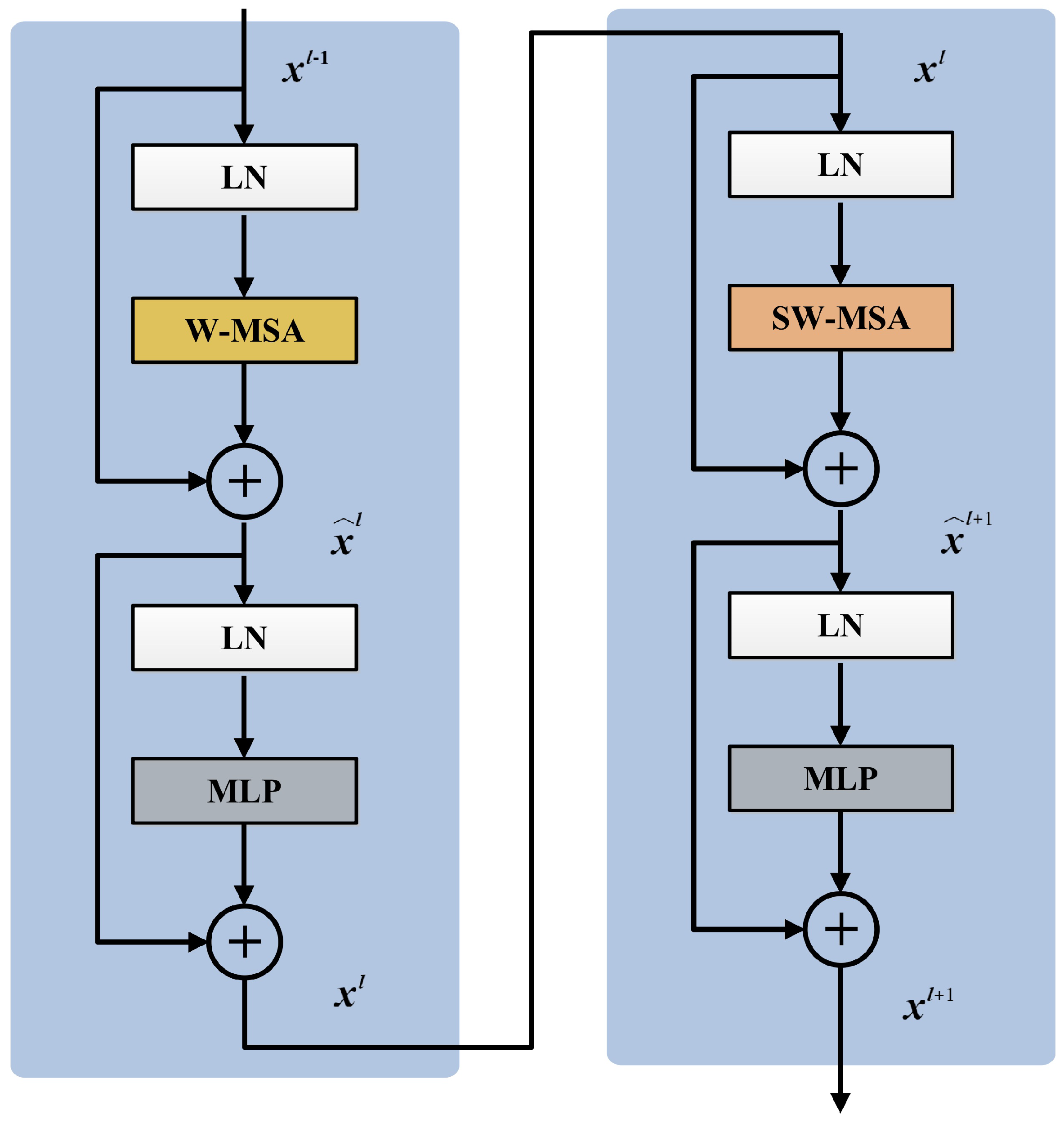

3.4. Swin Transformer Block

3.5. Loss Function

4. Experiment

4.1. Experimental Setup

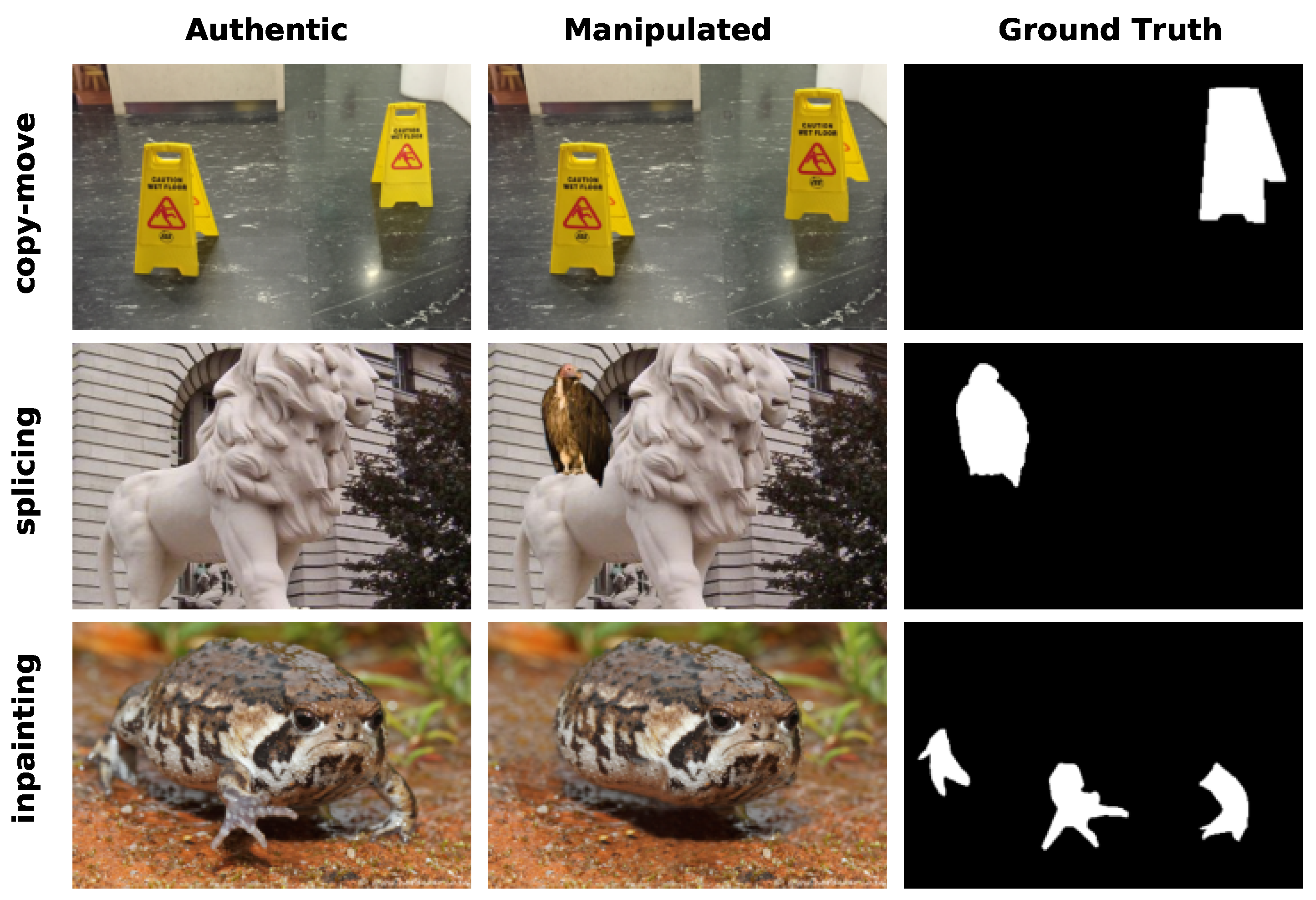

4.1.1. Dataset

4.1.2. Evaluation Metrics

4.1.3. Implementation Details

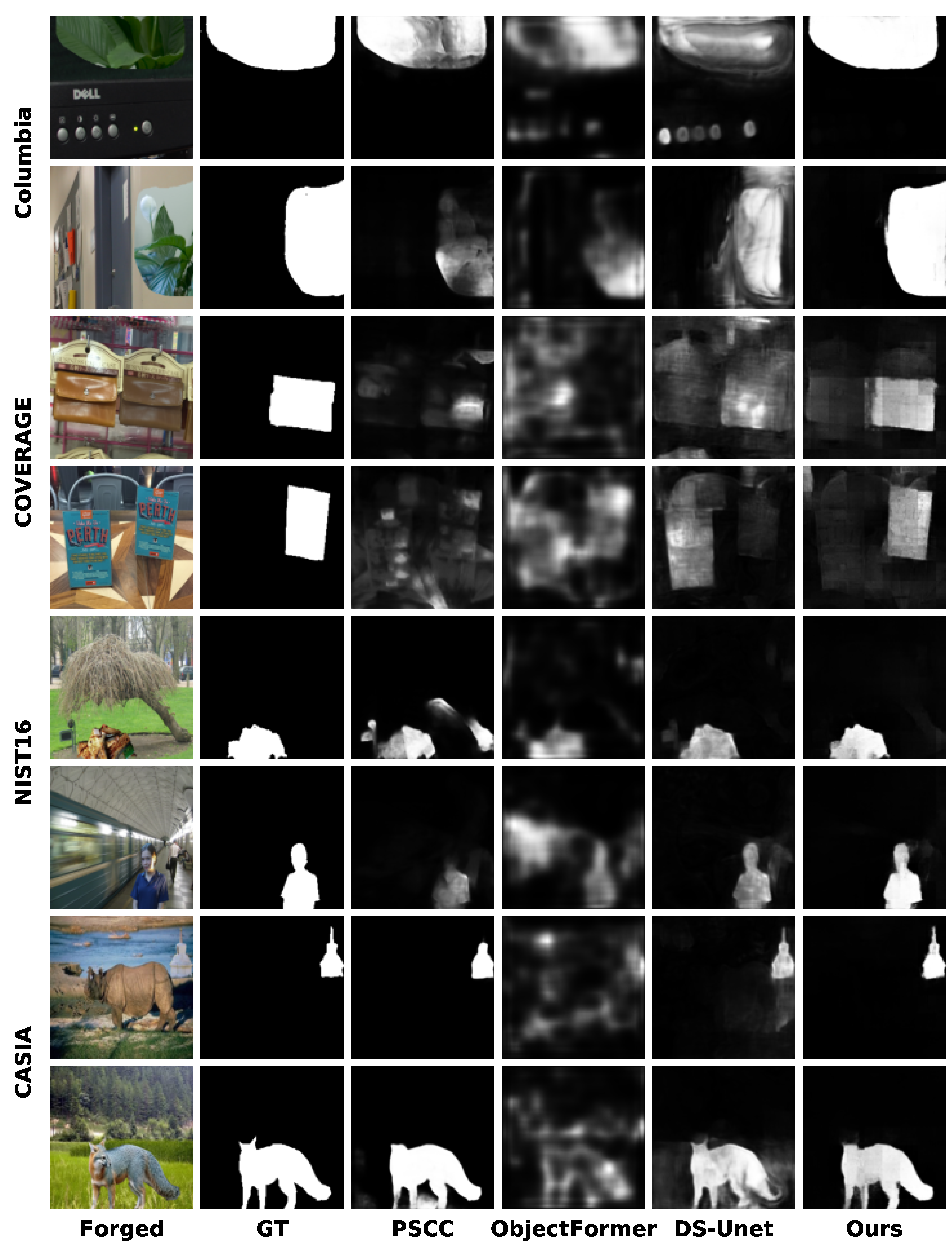

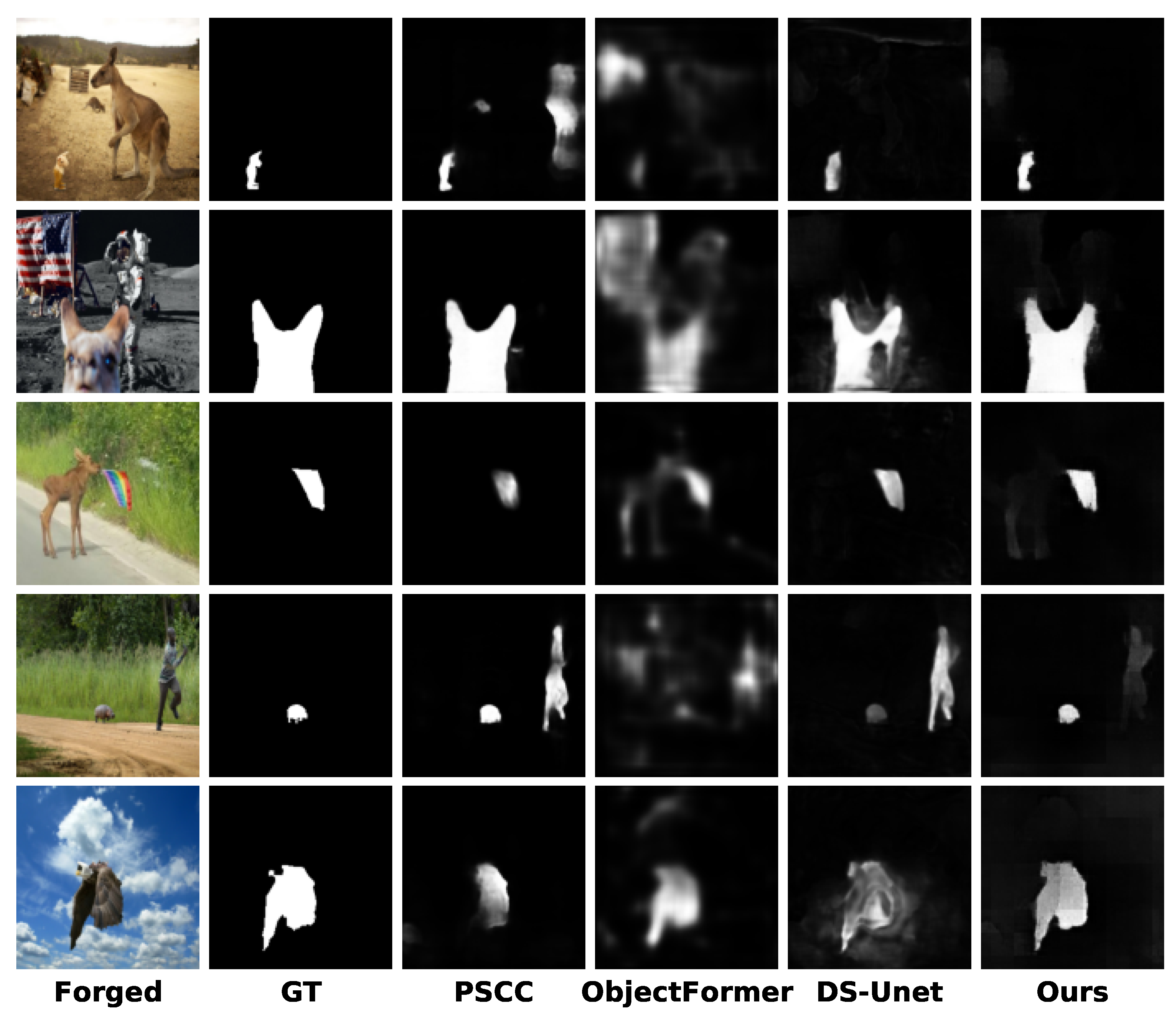

4.2. Comparisons with SOTA Methods

4.2.1. Pre-Training Scenario

4.2.2. Fine-Tuning Scenario

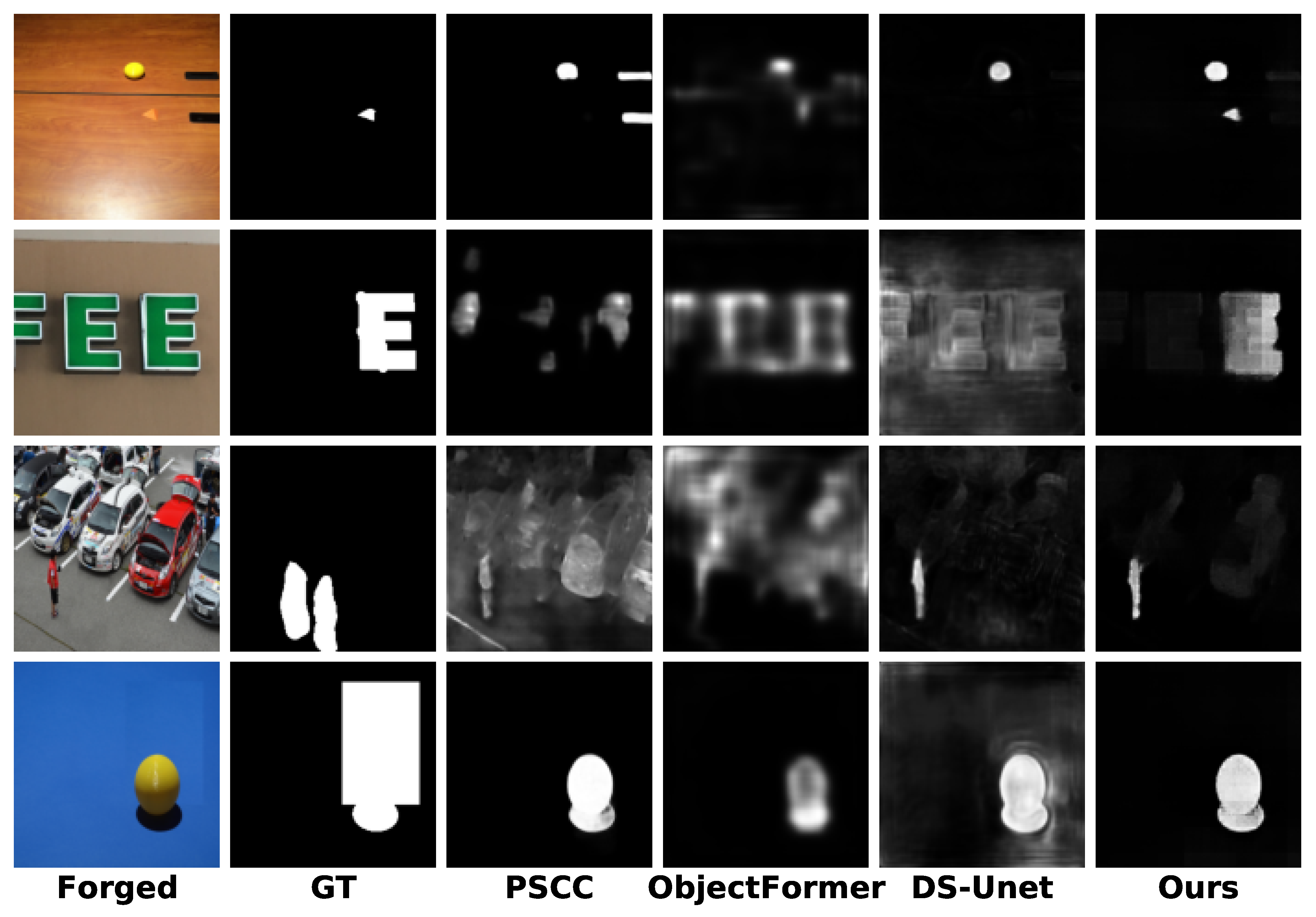

4.3. Visualization of Model Prediction Masks

4.4. Robustness Analysis

4.5. Ablation Analysis

- Baseline: Swin-Unet [46] is used as the baseline model, with only RGB images as input.

- Model-v1: The HFEM of DFST-UNet is replaced with SRM filters.

- Model-v2: The HFEM of DFST-UNet is replaced with Bayar convolutions [47].

- Model-v3: This model is built on the baseline. Instead of RGB images, the high-frequency features generated by the proposed HFEM are used as input.

- Model-v4: The HAFM of DFST-UNet is replaced with element-wise addition.

- Model-v5: The Gaussian high-pass filter in the HFEM is replaced with an ideal high-pass filter, while the HAFM is retained for feature fusion.
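
The difference between the Gaussian and ideal high-pass filters compared in Model-v5 can be sketched in NumPy as below. This is a generic frequency-domain illustration under stated assumptions, not the HFEM itself: the cutoff value, the 64 × 64 random test image, and all function names are hypothetical.

```python
import numpy as np

def radial_distance(h, w):
    """Distance of each frequency bin from the centre of a shifted spectrum."""
    v = np.arange(h) - h // 2
    u = np.arange(w) - w // 2
    return np.sqrt(v[:, None] ** 2 + u[None, :] ** 2)

def gaussian_highpass(h, w, cutoff):
    """Smooth transfer function: 0 at DC, approaching 1 at high frequencies."""
    d = radial_distance(h, w)
    return 1.0 - np.exp(-(d ** 2) / (2.0 * cutoff ** 2))

def ideal_highpass(h, w, cutoff):
    """Hard transfer function: 0 inside the cutoff radius, 1 outside."""
    return (radial_distance(h, w) > cutoff).astype(np.float64)

def apply_filter(image, transfer):
    """Filter one image channel in the frequency domain."""
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    filtered = np.fft.ifft2(np.fft.ifftshift(spectrum * transfer))
    return filtered.real

rng = np.random.default_rng(0)
image = rng.random((64, 64))   # stand-in for one image channel
cutoff = 10.0                  # hypothetical cutoff, not taken from the paper
hf_gaussian = apply_filter(image, gaussian_highpass(64, 64, cutoff))
hf_ideal = apply_filter(image, ideal_highpass(64, 64, cutoff))
```

Both filters suppress the low-frequency image content. The ideal filter has an abrupt cutoff, which is known to cause ringing in the spatial domain, whereas the Gaussian filter rolls off smoothly.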

4.6. Model Complexity and Computational Efficiency Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

1. Ferrara, P.; Bianchi, T.; De Rosa, A.; Piva, A. Image forgery localization via fine-grained analysis of CFA artifacts. IEEE Trans. Inf. Forensics Secur. 2012, 7, 1566–1577.

2. Fan, J.; Cao, H.; Kot, A.C. Estimating EXIF parameters based on noise features for image manipulation detection. IEEE Trans. Inf. Forensics Secur. 2013, 8, 608–618.

3. Chen, Y.L.; Hsu, C.T. Detecting recompression of JPEG images via periodicity analysis of compression artifacts for tampering detection. IEEE Trans. Inf. Forensics Secur. 2011, 6, 396–406.

4. Bianchi, T.; Piva, A. Image forgery localization via block-grained analysis of JPEG artifacts. IEEE Trans. Inf. Forensics Secur. 2012, 7, 1003–1017.

5. Wu, Y.; Abd-Almageed, W.; Natarajan, P. BusterNet: Detecting copy-move image forgery with source/target localization. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 168–184.

6. Zhong, J.L.; Pun, C.M. An end-to-end Dense-InceptionNet for image copy-move forgery detection. IEEE Trans. Inf. Forensics Secur. 2019, 15, 2134–2146.

7. Zhu, Y.; Chen, C.; Yan, G.; Guo, Y.; Dong, Y. AR-Net: Adaptive attention and residual refinement network for copy-move forgery detection. IEEE Trans. Ind. Inform. 2020, 16, 6714–6723.

8. Chen, B.; Tan, W.; Coatrieux, G.; Zheng, Y.; Shi, Y.Q. A serial image copy-move forgery localization scheme with source/target distinguishment. IEEE Trans. Multimed. 2020, 23, 3506–3517.

9. Islam, A.; Long, C.; Basharat, A.; Hoogs, A. DOA-GAN: Dual-order attentive generative adversarial network for image copy-move forgery detection and localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4676–4685.

10. Barni, M.; Phan, Q.T.; Tondi, B. Copy move source-target disambiguation through multi-branch CNNs. IEEE Trans. Inf. Forensics Secur. 2020, 16, 1825–1840.

11. Liu, B.; Pun, C.M. Deep fusion network for splicing forgery localization. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

12. Bi, X.; Wei, Y.; Xiao, B.; Li, W. RRU-Net: The ringed residual U-Net for image splicing forgery detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

13. Cozzolino, D.; Verdoliva, L. Noiseprint: A CNN-based camera model fingerprint. IEEE Trans. Inf. Forensics Secur. 2019, 15, 144–159.

14. Xiao, B.; Wei, Y.; Bi, X.; Li, W.; Ma, J. Image splicing forgery detection combining coarse to refined convolutional neural network and adaptive clustering. Inf. Sci. 2020, 511, 172–191.

15. Liu, B.; Pun, C.M. Exposing splicing forgery in realistic scenes using deep fusion network. Inf. Sci. 2020, 526, 133–150.

16. Zhang, Y.; Zhu, G.; Wu, L.; Kwong, S.; Zhang, H.; Zhou, Y. Multi-task SE-network for image splicing localization. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 4828–4840.

17. Li, H.; Huang, J. Localization of deep inpainting using high-pass fully convolutional network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8301–8310.

18. Wu, H.; Zhou, J. IID-Net: Image inpainting detection network via neural architecture search and attention. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 1172–1185.

19. Zhou, P.; Han, X.; Morariu, V.I.; Davis, L.S. Learning rich features for image manipulation detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1053–1061.

20. Wu, Y.; AbdAlmageed, W.; Natarajan, P. ManTra-Net: Manipulation tracing network for detection and localization of image forgeries with anomalous features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9543–9552.

21. Hu, X.; Zhang, Z.; Jiang, Z.; Chaudhuri, S.; Yang, Z.; Nevatia, R. SPAN: Spatial pyramid attention network for image manipulation localization. In Proceedings of the Computer Vision—ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXI 16. Springer: Berlin/Heidelberg, Germany, 2020; pp. 312–328.

22. Zhuang, P.; Li, H.; Tan, S.; Li, B.; Huang, J. Image tampering localization using a dense fully convolutional network. IEEE Trans. Inf. Forensics Secur. 2021, 16, 2986–2999.

23. Dong, C.; Chen, X.; Hu, R.; Cao, J.; Li, X. MVSS-Net: Multi-view multi-scale supervised networks for image manipulation detection. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 3539–3553.

24. Yin, Q.; Wang, J.; Lu, W.; Luo, X. Contrastive learning based multi-task network for image manipulation detection. Signal Process. 2022, 201, 108709.

25. Das, S.; Islam, M.S.; Amin, M.R. GCA-Net: Utilizing gated context attention for improving image forgery localization and detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 81–90.

26. Liu, X.; Liu, Y.; Chen, J.; Liu, X. PSCC-Net: Progressive spatio-channel correlation network for image manipulation detection and localization. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 7505–7517.

27. Huang, Y.; Bian, S.; Li, H.; Wang, C.; Li, K. DS-UNet: A dual streams UNet for refined image forgery localization. Inf. Sci. 2022, 610, 73–89.

28. Xu, D.; Shen, X.; Shi, Z.; Ta, N. Semantic-agnostic progressive subtractive network for image manipulation detection and localization. Neurocomputing 2023, 543, 126263.

29. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

30. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers); Association for Computational Linguistics: Minneapolis, MN, USA, 2019; pp. 4171–4186.

31. Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. Transformers: State-of-the-art natural language processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Online, 16–20 November 2020; pp. 38–45.

32. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

33. Hao, J.; Zhang, Z.; Yang, S.; Xie, D.; Pu, S. TransForensics: Image forgery localization with dense self-attention. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 15055–15064.

34. Wang, J.; Wu, Z.; Chen, J.; Han, X.; Shrivastava, A.; Lim, S.N.; Jiang, Y.G. ObjectFormer for image manipulation detection and localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2364–2373.

35. Das, S.; Amin, M.R. Learning interpretable forensic representations via local window modulation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 436–447.

36. Ma, X.; Du, B.; Jiang, Z.; Hammadi, A.Y.A.; Zhou, J. IML-ViT: Benchmarking Image Manipulation Localization by Vision Transformer. arXiv 2023, arXiv:2307.14863.

37. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

38. Chen, X.; Dong, C.; Ji, J.; Cao, J.; Li, X. Image manipulation detection by multi-view multi-scale supervision. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 14185–14193.

39. Milletari, F.; Navab, N.; Ahmadi, S.A. V-Net: Fully convolutional neural networks for volumetric medical image segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571.

40. Hsu, Y.F.; Chang, S.F. Detecting image splicing using geometry invariants and camera characteristics consistency. In Proceedings of the 2006 IEEE International Conference on Multimedia and Expo, Toronto, ON, Canada, 9–12 July 2006; pp. 549–552.

41. Wen, B.; Zhu, Y.; Subramanian, R.; Ng, T.T.; Shen, X.; Winkler, S. COVERAGE—A novel database for copy-move forgery detection. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 161–165.

42. Dong, J.; Wang, W.; Tan, T. CASIA image tampering detection evaluation database. In Proceedings of the 2013 IEEE China Summit and International Conference on Signal and Information Processing, Beijing, China, 6–10 July 2013; pp. 422–426.

43. Guan, H.; Kozak, M.; Robertson, E.; Lee, Y.; Yates, A.N.; Delgado, A.; Zhou, D.; Kheyrkhah, T.; Smith, J.; Fiscus, J. MFC datasets: Large-scale benchmark datasets for media forensic challenge evaluation. In Proceedings of the 2019 IEEE Winter Applications of Computer Vision Workshops (WACVW), Waikoloa, HI, USA, 7–11 January 2019; pp. 63–72.

44. Novozamsky, A.; Mahdian, B.; Saic, S. IMD2020: A large-scale annotated dataset tailored for detecting manipulated images. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision Workshops, Snowmass, CO, USA, 1–5 March 2020; pp. 71–80.

45. Zeng, N.; Wu, P.; Zhang, Y.; Li, H.; Mao, J.; Wang, Z. DPMSN: A dual-pathway multiscale network for image forgery detection. IEEE Trans. Ind. Inform. 2024, 20, 7665–7674.

46. Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-Unet: Unet-like pure transformer for medical image segmentation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 205–218.

47. Bayar, B.; Stamm, M.C. Constrained convolutional neural networks: A new approach towards general purpose image manipulation detection. IEEE Trans. Inf. Forensics Secur. 2018, 13, 2691–2706.

| Dataset | #Images | Forgery Type: S | Forgery Type: C | Forgery Type: I | Avg. Resolution |
|---|---|---|---|---|---|
| Columbia [40] | 180 | ✓ | ✗ | ✗ | 892 × 647 |
| COVERAGE [41] | 100 | ✗ | ✓ | ✗ | 476 × 393 |
| CASIA-v1 [42] | 920 | ✓ | ✓ | ✗ | 370 × 270 |
| CASIA-v2 [42] | 5123 | ✓ | ✓ | ✗ | 450 × 344 |
| NIST16 [43] | 564 | ✓ | ✓ | ✓ | 3460 × 2616 |
| IMD2020 [44] | 2010 | ✓ | ✓ | ✓ | 1056 × 849 |

| Dataset | Pre-Training: Train | Pre-Training: Test | Fine-Tuning: Train | Fine-Tuning: Test |
|---|---|---|---|---|
| Columbia [40] | - | 180 | - | - |
| COVERAGE [41] | - | 100 | 75 | 25 |
| CASIA [42] | 12,418 | 920 | 5123 | 920 |
| NIST16 [43] | - | 564 | 404 | 160 |
| IMD2020 [44] | - | 2010 | 1510 | 500 |

| Method | Columbia [40] | COVERAGE [41] | NIST16 [43] | IMD2020 [44] | Average |
|---|---|---|---|---|---|
| ManTra-Net [20] | 82.4 | 81.9 | 79.5 | 74.8 | 79.65 |
| SPAN [21] | 93.6 | 92.2 | 84.0 | 75.0 | 86.20 |
| MVSS++ [23] | 91.3 | 82.4 | 83.7 | - | 85.80 |
| PSCC * [26] | 92.7 | 78.0 | 81.6 | 84.8 | 84.28 |
| DS-UNet * [27] | 93.6 | 80.0 | 83.8 | 84.2 | 85.40 |
| ObjectFormer * [34] | 85.0 | 76.6 | 81.7 | 82.2 | 81.38 |
| ForMoNet [35] | - | 85.2 | 84.6 | 83.9 | 84.57 |
| Ours | 95.6 | 83.7 | 84.5 | 85.5 | 87.33 |

| Method | COVERAGE [41] | NIST16 [43] | IMD2020 [44] | Average |
|---|---|---|---|---|
| RGB-N [19] | 81.7/43.7 | 93.7/72.2 | 74.8/- | 83.40/57.95 |
| SPAN [21] | 93.7/55.8 | 96.1/58.2 | 75.0/- | 88.27/57.00 |
| PSCC * [26] | 91.7/51.8 | 99.1/69.0 | 91.7/46.1 | 94.17/55.63 |
| DS-UNet * [27] | 89.9/31.8 | 99.8/88.7 | 92.8/39.8 | 94.17/53.43 |
| DPMSN [45] | - | 98.9/83.3 | 77.5/29.0 | 88.20/56.15 |
| TransForensics [33] | 88.4/67.4 | - | 84.8/- | 86.60/67.40 |
| ObjectFormer * [34] | 84.5/24.0 | 97.8/57.1 | 84.4/19.6 | 88.90/33.57 |
| ForMoNet [35] | 86.2/65.1 | 95.1/84.7 | 85.0/43.8 | 88.77/64.53 |
| Ours | 97.9/77.8 | 99.9/96.7 | 98.2/80.3 | 98.67/84.93 |
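
Each cell in the table above holds two scores separated by a slash, and some entries are missing ("-"). The Average column is numerically consistent with a per-score mean over the available entries only. The short sketch below reproduces this for the SPAN row; the helper names `parse_cell` and `average_pair` are hypothetical and used only for illustration.

```python
def parse_cell(cell):
    """Return a (first, second) tuple of scores; missing scores become None."""
    if "/" not in cell:
        return (None, None)
    parts = [p.strip() for p in cell.split("/")]
    return tuple(None if p in ("", "-") else float(p) for p in parts)

def average_pair(cells):
    """Average each of the two scores over the entries that are present."""
    pairs = [parse_cell(c) for c in cells]
    means = []
    for idx in range(2):
        values = [p[idx] for p in pairs if p[idx] is not None]
        means.append(sum(values) / len(values) if values else None)
    return tuple(means)

# SPAN [21] row: COVERAGE, NIST16, IMD2020 (second IMD2020 score is missing).
span_row = ["93.7/55.8", "96.1/58.2", "75.0/ -"]
first, second = average_pair(span_row)
print(f"{first:.2f}/{second:.2f}")  # 88.27/57.00
```

Because the averages are taken over different numbers of datasets, methods with missing entries are not strictly comparable on the Average column.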

| Distortion | ManTra [20] | SPAN [21] | PSCC * [26] | ObjectFormer * [34] | DS-UNet * [27] | Ours |
|---|---|---|---|---|---|---|
| w/o distortion | 78.05 | 83.95 | 81.56 | 81.67 | 83.82 | 84.50 |
| Resize 0.78× | 77.43 | 83.24 | 80.66 | 81.52 | 84.02 | 84.40 |
| Resize 0.25× | 75.52 | 80.32 | 75.96 | 80.52 | 81.54 | 83.59 |
| Gaussian blur k = 3 | 77.46 | 83.10 | 80.65 | 81.32 | 83.90 | 83.27 |
| Gaussian blur k = 15 | 74.55 | 79.15 | 66.36 | 80.81 | 82.17 | 81.32 |
| Gaussian noise = 3 | 67.41 | 75.17 | 82.27 | 81.47 | 82.91 | 84.25 |
| Gaussian noise = 15 | 58.55 | 67.28 | 79.09 | 79.64 | 81.87 | 82.08 |
| JPEG compression q = 100 | 77.91 | 83.59 | 77.96 | 81.79 | 83.36 | 84.68 |
| JPEG compression q = 50 | 74.38 | 80.68 | 75.54 | 81.48 | 82.14 | 84.32 |
| Average | 72.90 | 79.07 | 77.31 | 81.07 | 82.74 | 83.49 |
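
The Average row in the table above matches the mean over the eight distorted settings, with the "w/o distortion" row excluded. The sketch below checks this for two columns and also reports the largest drop relative to the undistorted score; the `summarize` helper and the shortened setting labels are hypothetical, and the scores are copied from the table.

```python
SETTINGS = [
    "w/o distortion", "Resize 0.78x", "Resize 0.25x",
    "Gaussian blur k=3", "Gaussian blur k=15",
    "Gaussian noise 3", "Gaussian noise 15",
    "JPEG q=100", "JPEG q=50",
]

# Scores copied from the robustness table, in the order of SETTINGS.
SCORES = {
    "PSCC": [81.56, 80.66, 75.96, 80.65, 66.36, 82.27, 79.09, 77.96, 75.54],
    "Ours": [84.50, 84.40, 83.59, 83.27, 81.32, 84.25, 82.08, 84.68, 84.32],
}

def summarize(scores):
    """Mean over distorted settings and the worst drop from the clean score."""
    clean, distorted = scores[0], scores[1:]
    mean_distorted = sum(distorted) / len(distorted)
    worst_index = min(range(len(distorted)), key=lambda i: distorted[i])
    return {
        "mean_distorted": round(mean_distorted, 2),
        "worst_setting": SETTINGS[1 + worst_index],
        "worst_drop": round(clean - distorted[worst_index], 2),
    }

for method, scores in SCORES.items():
    print(method, summarize(scores))
```

For both columns the hardest setting is Gaussian blur with k = 15, where the drop from the undistorted score is 3.18 points for the proposed method and 15.20 points for PSCC.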

| Model | RGB | High-Frequency: SRM | High-Frequency: Bayar | High-Frequency: Ideal | High-Frequency: HFEM | Fusion: Add | Fusion: HAFM | Columbia: AUC | Columbia: F1 | Columbia: ACC |
|---|---|---|---|---|---|---|---|---|---|---|
| Baseline | ✓ | | | | | | | 91.82 | 45.76 | 84.79 |
| Model-v1 | ✓ | ✓ | | | | | ✓ | 87.92 | 24.95 | 78.08 |
| Model-v2 | ✓ | | ✓ | | | | ✓ | 88.63 | 38.16 | 82.42 |
| Model-v3 | | | | | ✓ | | | 93.43 | 59.51 | 87.47 |
| Model-v4 | ✓ | | | | ✓ | ✓ | | 91.58 | 46.24 | 84.48 |
| Model-v5 | ✓ | | | ✓ | | | ✓ | 95.54 | 66.34 | 88.84 |
| Ours | ✓ | | | | ✓ | | ✓ | 95.59 | 66.68 | 88.95 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, J.; Xie, A.; Mai, T.; Chen, Y. DFST-UNet: Dual-Domain Fusion Swin Transformer U-Net for Image Forgery Localization. Entropy 2025, 27, 535. https://doi.org/10.3390/e27050535
