1. Introduction

Sitting posture recognition is a computer vision task that aims to automatically detect, localize, and classify seated human postures, supporting ergonomics assessment and health-risk monitoring in classroom and office settings [

1,

2,

3]. In modern education and workplace environments, prolonged sitting is pervasive; sustained improper posture is linked to abnormal spinal loading and is an independent risk factor for scoliosis, lumbar disc degeneration, and cardiovascular and cerebrovascular diseases [

4]. Therefore, scalable posture recognition is critical for early risk screening, ergonomic intervention, and longitudinal evaluation, providing objective measurements beyond sporadic manual assessments [

5]. However, in real-world complex scenarios such as classrooms or open offices with multiple people present, frequent occlusions and overlaps between individuals lead to incomplete and ambiguous observations, degrading localization accuracy and complicating fine-grained discrimination. These real-world complexities constitute a key barrier to reliable and scalable posture recognition.

In light of these constraints, early studies predominantly employed wearable sensors or seat-mounted pressure arrays for posture monitoring [

6,

7,

8]. However, limitations in user adherence and scene-level scalability hindered their widespread deployment in classrooms and offices. To reduce intrusiveness and support multi-person coverage, research has gradually shifted toward non-contact, vision-based approaches, among which deep learning has become the core paradigm in recent years due to its consistent performance under occlusion, cross-domain conditions, and fine-grained recognition [

9]. For example, DRHN [

10] enhances robustness to occlusion by employing hierarchical temporal modeling; SitPose [

11] by Jin et al. leverages Kinect for real-time skeletal tracking and joint-angle features, coupled with a soft-voting ensemble to boost accuracy; ASPR [

12] integrates multi-scale spatiotemporal skeletal graph convolution with an RNN on local joint angles to fuse spatial, temporal, and whole-body skeletal cues, achieving high-accuracy abnormal sitting posture recognition from the perspective of postural changes. However, the real-world deployment of deep learning models is fundamentally constrained by their dependence on large-scale, high-quality annotations—a resource rendered scarce in real-world settings due to privacy regulations, pervasive occlusions, and prohibitive costs of fine-grained posture labeling. This data scarcity compels the adoption of few-shot learning paradigms, where methods like LGCSPNet [

13] leverage structural relationship modeling to maintain spinal misalignment detection reliability with minimal supervision, and JEANIE [

14] achieves cross-scenario generalization through temporal–spatial alignment of critical postural transitions both prioritizing diagnostic accuracy for high-risk postures over conventional classification metrics. Yet, in crowded, occlusion-prone environments, relying solely on few-shot methods is insufficient to ensure reliable localization and stable fine-grained discrimination.

Despite these advances, a critical gap persists in real-world multi-person settings: under severe occlusion, limited annotations, and computational constraints, existing vision-based approaches struggle to maintain both spatial fidelity and semantic consistency at the instance level. Specifically, when individuals overlap or are partially visible, detectors often produce imprecise bounding boxes, which in turn corrupt downstream pose parsing and classification. Worse, many deep models—trained with scarce labels—tend to rely heavily on global scene context, inadvertently suppressing discriminative part-level cues (e.g., spine curvature, shoulder alignment) that are essential for distinguishing subtle postural deviations. This leads to two compounding failure modes: (1) error propagation across loosely coupled detection–parsing–classification stages, and (2) degraded robustness to occlusion due to underutilized local structural information. Consequently, even state-of-the-art few-shot methods fail to deliver reliable sitting posture recognition in crowded classrooms or open offices.

From an information-theoretic perspective, these challenges are closely tied to uncertainty and entropy. Occlusion increases uncertainty in the scene, leading to higher entropy in the observations and making the task of detecting and classifying sitting postures more difficult. In particular, when individuals are partially visible or occluded, the mutual information between the input image and the posture labels is reduced, leading to ambiguous or conflicting predictions. Moreover, the scarcity of annotated data compounds this problem, limiting the model’s ability to learn reliable representations and increasing the risk of overfitting to noisy or incomplete labels.

To address these challenges, we propose LAViTSPose, a lightweight cascaded framework for complex multi-person scenes. The pipeline follows a detection–parsing–classification paradigm. In detection, we adopt a YOLOR person detector trained with our proposed Range-aware IoU (RaIoU) loss, which not only yields tight bounding boxes under partial visibility but also helps reduce localization entropy by providing more precise detections in occluded regions, thereby limiting the uncertainty of object detection. In parsing, ESBody, as an entropy filter, with Reno boundary filtering and APF routing, mitigates cross-person leakage and yields part-consistent semantic maps. In classification, a compact Transformer classifier head (MLiT) with Spatial Displacement Contact (SDC) and learnable temperature (LT) strengthens local spatial modeling and stabilizes attention without additional large-scale pretraining beyond standard initialization. While staged for efficiency, the interfaces are explicitly calibrated: segmentation cues serve only for interference suppression and routing, and the classifier consumes skeleton-only inputs, which curbs error propagation between modules. Together, these stages reduce alignment errors and feature contamination from occlusion/overlap and improve sitting posture recognition accuracy and robustness in crowded classrooms/offices under limited annotations, thereby minimizing intra-class entropy and stabilizing attention.

Our contributions can be summarized as follows:

We present LAViTSPose, a lightweight cascaded framework for complex multi-person indoor scenes. By coordinating occlusion-robust detection, semantic body parsing (ESBody), and a compact Transformer classifier, the framework mitigates the effects of partial visibility and cross-person interference, enabling reliable sitting posture recognition.

We introduce stage-specific innovations across detection, parsing, and classification. In detection, we propose RAIoU (Range-aware IoU) as the bounding-box regression loss, improving alignment robustness under partial visibility; in parsing, ESBody, with Reno boundary filtering and APF routing, suppresses cross-person leakage and yields part-consistent regions; in classification, a lightweight ViT head combining Spatial Displacement Contact (SDC), learnable temperature, and local structural-consistency regularization strengthens local modeling and robustness under small-sample regimes, without relying on large-scale pretraining.

We reduce structural uncertainty across the detection–parsing–classification pipeline from an information-theoretic perspective, improving robustness under occlusion and annotation scarcity through entropy-minimizing architectural design.

We validate the method on the USSP dataset through comprehensive experiments, showing improvements over representative baselines in crowded classroom/office scenarios and strong performance under few-shot settings.

In

Section 2, we provide an in-depth review of prior work on sitting posture recognition and related techniques in object detection, semantic segmentation and human parsing, and Transformer-based classification. In

Section 3, we provide a detailed introduction to the proposed model, including its workflow, various modules, and technical details. The experimental results and ablation studies are presented in

Section 4. Finally, the conclusion and future work are outlined in

Section 5.

2. Related Work

In this section, we review recent advances most relevant to our method from four perspectives: (1) sitting posture recognition; (2) object detection and bounding-box regression; (3) semantic segmentation and human parsing; and (4) lightweight Transformer-based classification.

2.1. Sitting Posture Recognition

Sitting posture recognition aims to automatically determine seated postures to support ergonomic assessment in educational and occupational settings. Early systems largely relied on wearable sensors or seat-embedded pressure sensing: for example, Smart Cushion [

15] used seat-cushion FSR arrays for fine-grained recognition; Sensors and Actuators [

16] reported a portable pressure-array system combined with machine learning; and Aminosharieh Najafi [

17] presented a multi-sensor smart chair that employed deep learning to classify multiple postures. However, limitations in device cost, user adherence, comfort, maintenance, and scalable deployment have gradually driven research toward non-contact, vision-based paradigms. In this direction, DRHN [

10] employs hierarchical temporal modeling to mitigate occlusion and limited visibility, improving robustness on RGB-D sequences; SitPose [

11] leverages Kinect for real-time 3D skeletal tracking and joint-angle modeling, coupled with a soft-voting ensemble to improve accuracy; and ASPR [

12] utilizes multi-scale spatiotemporal skeletal graph convolution to fuse spatial, temporal, and whole-body structural cues, achieving fine-grained and abnormal sitting posture recognition in cross-instance overlap scenarios.

Existing studies have not, under few-shot constraints, simultaneously achieved occlusion-robust detection for crowded scenes, interference-suppressed segmentation that isolates body parts amid overlaps, and lightweight classification resilient to residual misalignment. However, when detection, parsing, and classification are stitched together without careful interface alignment and error-suppression mechanisms, localization noise can propagate across stages in crowded scenes. Our framework addresses this by equipping each stage with targeted robustness—occlusion-aware detection, interference-suppressed parsing, and structure-regularized classification—so that errors are contained rather than amplified.

2.2. Object Detection and Bounding Box Regression

In sitting posture recognition, object detection provides critical “human candidate regions” for subsequent segmentation and classification. The quality of these regions directly determines the discriminability and robustness of downstream features. Current methods fall into two categories:

Region-based detectors prioritize high accuracy but incur significant computational cost. For instance, R-CNN [

18] employs Selective Search to generate

region proposals, processes each through a CNN for feature extraction, and classifies them using SVMs while refining bounding boxes via linear regression. Cascade R-CNN [

19] improves localization by cascading multiple detection heads with progressively higher IoU thresholds. R-FCN [

20] enhances efficiency by encoding spatial information into position-sensitive score maps and leveraging position-sensitive region of interest (ROI) pooling, eliminating per-region fully connected layers.

Single-stage detectors emphasize end-to-end dense prediction and real-time performance. YOLO [

21] reformulates detection as a unified regression task from image to grid/anchor coordinates, simultaneously predicting objectness, class labels, and bounding boxes. CenterNet [

22] adopts an anchor-free paradigm, treating objects as center points on heatmaps and regressing offsets and dimensions, thereby bypassing anchor matching.

Beyond detector architectures, localization quality is improved by IoU-family losses such as GIoU [

23] and DIoU/CIoU [

24]. These objectives continuously penalize size/shape deviations for all predictions. RIoU [

25] further rectifies gradient imbalance by up-weighting high-IoU examples and down-weighting low-IoU ones, thereby emphasizing precise localization; however, it still applies continuous penalties regardless of bbox plausibility. Our RaIoU differs by introducing range-aware intervals on log-width/height/aspect-ratio with near-zero gradients inside the intervals, focusing updates on out-of-range, occlusion-induced outliers typical of classroom/office scenes.

While existing detectors assume proposals cover visible targets, sitting scenarios feature desk/peer occlusions and proximity, causing spatially inaccurate proposals (center misalignment, scale mismatch, partial coverage). This induces critically low IoU, unstable gradients, and error propagation—unaddressed by current methods in modeling occlusion/truncation-induced spatial uncertainty. We thus integrate a lightweight detector with robust regression and quality modeling to deliver precise human regions and stable geometric priors for downstream tasks.

2.3. Semantic Segmentation and Human Parsing

Semantic segmentation and human parsing provide pixel-level isolation of individuals in crowded scenes and are commonly used as foundational modules for pose/action recognition. Classic CNN-based approaches such as FCN [

26] remain widely adopted for their stability and ease of deployment, particularly in early-stage pipelines. To better model multi-scale context and boundary details in dense environments, subsequent architectures have introduced advanced mechanisms: DeepLabV3+ [

27] employs atrous spatial pyramid pooling (ASPP) and encoder–decoder structures to capture global context while preserving fine-grained boundaries; PSPNet [

28] leverages pyramid pooling to aggregate multi-region contextual information; HRNet [

29] maintains high-resolution representations throughout the network, enabling superior performance on body part delineation tasks.

For real-time applications, lightweight variants such as Fast-SCNN [

30] and BiSeNet [

31] reduce computational cost through asymmetric encoders or spatial path designs, achieving efficient inference while maintaining reasonable accuracy in multi-person frames. Recent works further integrate semantic segmentation with detection confidence maps or uncertainty estimation—e.g., using adaptive loss weighting [

32] or geometric-aware refinement [

33]—to mitigate misalignment caused by noisy bounding boxes or occlusions.

Despite these advances, most semantic segmentation methods still treat “person” pixels uniformly without distinguishing individuals, making them vulnerable to cross-person interference when used in detection-based pipelines. Moreover, they rarely incorporate explicit mechanisms to suppress inter-instance contamination or to adapt segmentation boundaries conditioned on detector uncertainty—limitations that we directly address in this work. In particular, Reno suppresses boundary-connected interference from nearby subjects, while APF estimates occlusion and head-orientation cues to route samples, yielding anatomy-aware yet interference-resistant part maps tailored for multi-person seated scenes.

2.4. Lightweight Transformer-Based Classification

Transformers, which model long-range dependencies via self-attention, have shown strong performance in image recognition. As the baseline Vision Transformer, ViT [

34] partitions an image into non-overlapping patches and performs global self-attention over the resulting tokens. Building on this, Swin Transformer [

35] adopts a hierarchical windowing scheme with shifted windows that reduces computation while enhancing local representation capacity, and it has been widely used for dense prediction tasks in addition to classification.

However, in real-world sitting posture recognition, training data are typically limited, costly to annotate, and domain-specific. Under such small-sample conditions, a purely global-attention ViT is more susceptible to background clutter and redundant regions, with particularly unstable attention allocation in multi-person scenes [

36]. To improve data efficiency, researchers have explored lightweight/data-efficient Transformers: DeiT [

37] introduces a distillation token and supervised distillation from a CNN teacher, enabling data-efficient ViT training using only ImageNet-1k; T2T-ViT [

38] performs layer-wise tokens-to-token aggregation to explicitly model local structure and shorten the token sequence; LocalViT [

39] injects depthwise separable convolutions into the feed-forward network to strengthen local priors; and LeViT [

40] adopts hybrid or mobile-friendly designs to reduce latency and parameter counts while maintaining accuracy. Nevertheless, these approaches largely focus on structural tweaks to the classification backbone, lacking explicit mechanisms for multi-person occlusion and cross-instance interference, and thus struggle to curb attention drift and misclassification at the source. In contrast, our method adopts a cascaded modular design with stage-specific error suppression mechanisms: occlusion-robust detection, interference-aware parsing, and structure-regularized classification. While the pipeline is staged for clarity and efficiency, each module is explicitly designed to isolate and suppress domain-specific errors (e.g., cross-person leakage, partial visibility) that commonly degrade end-to-end learning in crowded scenes. This targeted design—rather than coupling—enables stable training under limited annotations and achieves robust performance in high-interference, small-sample settings.

3. Methods

In this section, we first give an overview of our proposed method in

Figure 1, which comprises three core modules: (1) object detection, (2) semantic segmentation, and (3) image classification. We then present the key components in detail and finally elaborate on implementation details for both training and inference.

3.1. Overview

In multi-person indoor scenes such as classrooms and offices, vision-based seated posture recognition is both practically valuable and technically challenging. The goal is to reliably determine, from a single-frame image, each individual’s posture category (e.g., Upright, Head-on-desk, Leaning-sideways) to support intelligent education, behavior analytics, and human–computer interaction. In real deployments, local occlusions (e.g., arms occluding the torso, front-row students blocking those behind), inter-person overlap (adjacent subjects causing blurred boundaries), and cluttered backgrounds jointly degrade person detection accuracy, contaminate subsequent regional features, and propagate bias into fine-grained classification.

To address these issues, we propose LAViTSPose, a lightweight cascaded framework that structures the pipeline into three specialized stages—detection, segmentation, and classification—each equipped with stage-specific mechanisms to suppress domain-specific errors. In the detection stage, we adopt a YOLOR-based person detector and introduce a Range-aware IoU (RaIoU) loss. RaIoU injects dataset-derived intervals for box width, height, and aspect ratio, keeps gradients near zero for in-range predictions, and applies a saturating penalty only when boxes violate these intervals—while retaining the IoU and a center-alignment term. This range prior improves alignment under severe scale variation and occlusion, yielding tighter localization and less non-target leakage to downstream stages.

In the semantic segmentation stage, we present ESBody. The Remove Non-current Elements (Reno) module employs explicit context suppression to attenuate interference from other individuals, producing clean, single-person masks; the Analysis of Body Part Feature map (APF) module analyzes body part feature maps to infer lower limb occlusion and head orientation. These semantic cues are used only for routing and do not enter the classifier.

In the classification stage, we design a lightweight Vision Transformer variant, MLiT, tailored for posture classification. To compensate for the limited locality of pure attention, MLiT introduces a Spatial Displacement Contact (SDC) operation that injects local inductive bias via pixel-level spatial displacements; meanwhile, a learnable temperature (LT) term is incorporated into attention to dynamically adjust softmax sharpness, stabilizing training and improving generalization.

Overall, LAViTSPose follows a modular path to robust posture recognition under occlusion and overlap: precise person-centric cropping (YOLOR + RaIoU) → interference-resistant parsing with semantic cues (ESBody + Reno/APF) → skeleton-only classification with stabilized attention.

3.2. Object Detection

Accurate and tightly fitted human bounding boxes are essential for reliable downstream parsing and classification in multi-person sitting posture recognition. In dense indoor environments—such as classrooms or offices—frequent partial occlusions, significant scale variations, and diverse seated postures often lead conventional detectors to produce loose or misaligned bounding boxes. Such inaccuracies contaminate the regions of interest (ROIs) with neighboring individuals or background clutter, degrading subsequent posture analysis.

To address these challenges, we adopt a YOLOR-based object detector, fine-tuned specifically on indoor seated-scene data to better handle occlusion and scale diversity. Furthermore, we propose a Range-aware IoU (RaIoU) loss that incorporates dataset-derived statistical priors on bounding box width, height, and aspect ratio. This loss suppresses gradient updates for predictions falling within empirically observed valid ranges, while actively penalizing outliers. By doing so, the training process is steered to focus on correcting extreme-scale instances and occlusion-induced misalignments, thereby yielding tighter and more robust detections.

3.2.1. Detector Model

Inspired by the efficient design of the YOLO family [

21], we adopt a YOLOR-based [

25] person detector, preserving the canonical backbone–neck–head pipeline with task-oriented configurations: SiLU activation, CBP normalization with learnable parameters, PAN multi-scale feature fusion, and anchor re-estimation so that aspect-ratio and scale priors match classroom/office humans. Given an RGB image

, we preprocess it as

The backbone and neck produce a three-level feature pyramid:

On each level

(

), a decoupled head predicts anchor-wise class logits

, objectness logits

, and bounding-box regressors

:

where

is the anchor set on level

ℓ,

denotes the per-anchor class logits for

C classes,

the per-anchor objectness logits, and

the anchor-relative box regression outputs. The corresponding decoded boxes are

For each anchor, the candidate confidence score is computed as

where

is the logit corresponding to the

person class,

denotes the element-wise sigmoid function, and ⊙ denotes element-wise multiplication.

3.2.2. Range-Aware IoU

Existing IoU-family losses—including continuous size/ratio–augmented variants such as RIoU [

25]—typically impose non-zero gradients on all predictions, even when the predicted box width, height, or aspect ratio already falls within empirically reasonable ranges. For instance, RIoU augments IoU with smooth penalties (e.g., L2 loss on

), which continuously adjusts all scale deviations regardless of their plausibility. In dense, occluded scenes, this leads to shrink/expand bias, under-emphasis of extreme-scale instances, and instability with truncated boxes.

In contrast, our Range-aware IoU (RaIoU) introduces dataset-derived interval priors for

,

, and

, and employs a piecewise zero-gradient design: predictions within the valid interval incur no penalty, while only out-of-range predictions are corrected via a Huber-type saturating loss. This enables RaIoU to selectively refine anomalous boxes (e.g., severely truncated or misaligned due to occlusion) while preserving well-behaved predictions—thereby reducing unnecessary perturbations and improving robustness. RaIoU can be viewed as introducing a spatial attention mechanism that penalizes high-entropy misalignments in bounding box regression, thus improving downstream parsing certainty. Formally, the total loss is

For a predicted box

and its ground truth

, RaIoU is

where

c is the diagonal of the smallest enclosing rectangle of

B and

, and

ensures numerical stability. The interval penalty

is

which acts as a Huber-type saturating term outside the interval and equals zero inside.

The intervals

are estimated once from the training set using robust quantiles of the corresponding ground-truth statistics. Here,

denotes the empirical

p-th quantile (i.e., the inverse CDF evaluated at probability

p) computed over the training set. Specifically, we set

where

and

are the lower and upper quantile levels, respectively. The exact choices of

and other hyperparameters

are provided in the implementation details.

During inference, we merge candidates across pyramid levels and apply thresholding and NMS:

and optionally fuse multi-scale results:

Each retained box

is expanded and cropped:

and the ROIs

are passed to downstream parsing and classification.

3.3. Semantic Segmentation

Individuals often sit in close proximity, leading to overlapping bounding boxes and the inclusion of non-target body parts within the ROI. Additionally, occlusions from desks, chairs, or adjacent individuals complicate recognition, especially when lower-body visibility is compromised.

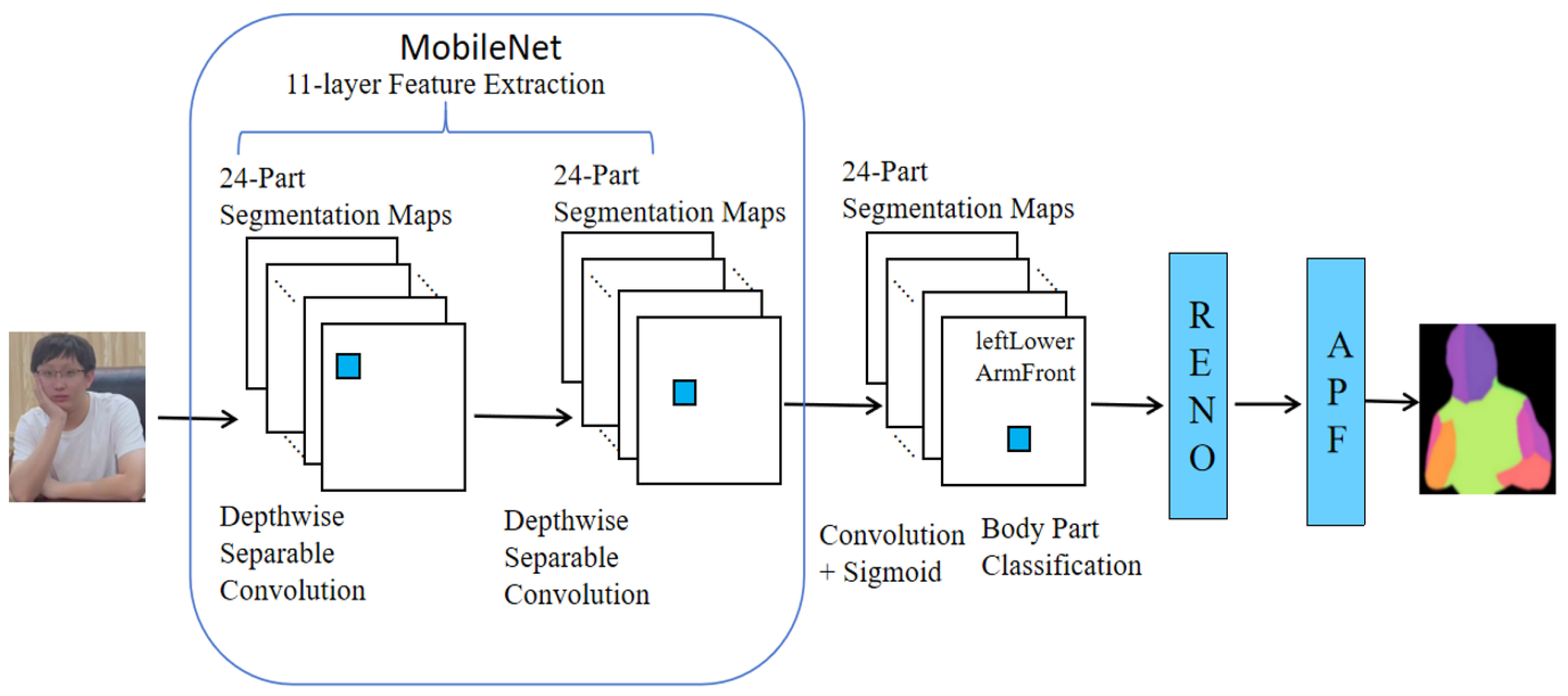

As illustrated in

Figure 2, we propose ESBody: Efficient and Contextual Semantic Body Parsing, a lightweight, training-free post-processing pipeline built upon the pretrained 24-part MobileNet-BodyPix2.0 [

41]. ESBody consists of two modules: Remove Non-current Elements (Reno) and Analysis of Body Part Feature map (APF). Within each detected ROI

, we first produce a binary person mask and retain only the connected component corresponding to the current instance; a thin morphological dilation preserves boundary cues while curbing leakage, yielding a cleaned ROI

. From

, APF converts part probabilities into semantic cues for downstream routing.

3.3.1. Remove Non-Current Elements

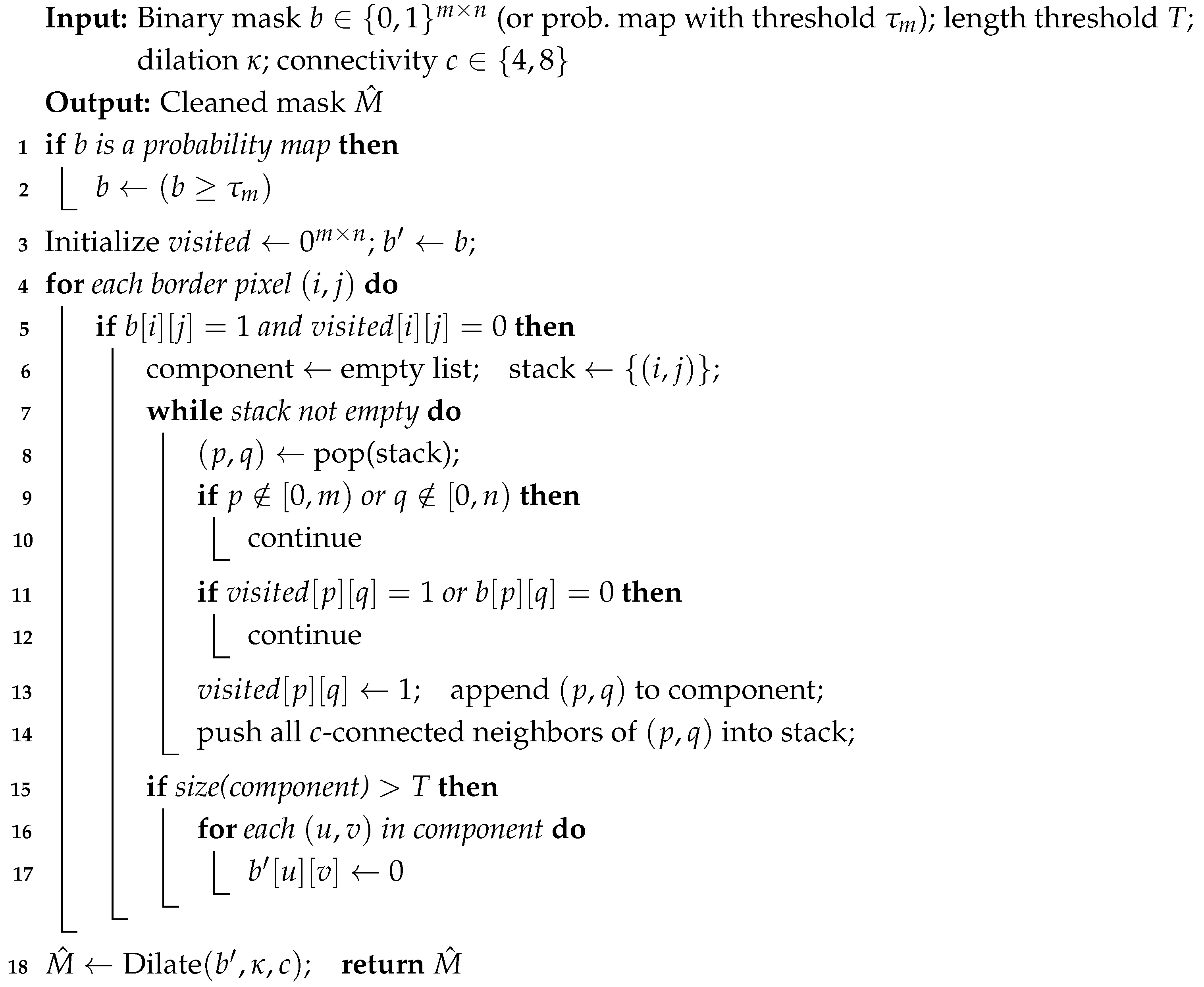

Reno eliminates interference by suppressing boundary-connected foreground components that likely belong to neighboring persons, as detailed in Algorithm 1. Let

be the BodyPix foreground probability within

; we binarize and then apply boundary-driven filtering and a thin dilation:

where

is the binarization threshold,

is the length threshold for boundary-connected components (default

),

is the dilation radius, and ⊙ denotes element-wise masking.

| Algorithm 1: Reno: Boundary-connected component suppression. |

|

If the confidence-weighted torso centroid lies inside the foreground, we first retain the connected component containing before boundary suppression.

3.3.2. Analysis of Body Part Feature Map

APF converts BodyPix’s soft part probabilities on

into two semantic cues and a routing decision, without any training. Let

be the fixed BodyPix output and

where

,

u indexes the

grid, and

ensures numerical stability.

Lower-body visibility ratio. With

the index set of lower-body parts (hips, thighs, calves, feet),

Facial orientation inference. Using left/right facial regions, we derive a coarse orientation by comparing their activations:

where

is the spatial average of the corresponding part attention.

Occlusion-aware routing (APF-only). We declare lower-body occlusion if

and choose the branch

APF cues

are used only for routing and are not concatenated with the classifier input. The branch-specific prediction is

where

and

are two posture classifiers specialized for half-/upper-body and whole-body evidence, respectively. APF itself contains no learnable parameters; it operates entirely on BodyPix outputs using geometric heuristics.

The APF module enriches raw segmentation maps with high-level semantic understanding by analyzing anatomical pixel distributions, inferring occlusion status from lower-body visibility, and estimating facial orientation through asymmetric facial features. These semantic cues enhance downstream feature representation and improve robustness for posture recognition in occlusion-prone environments, without requiring any training.

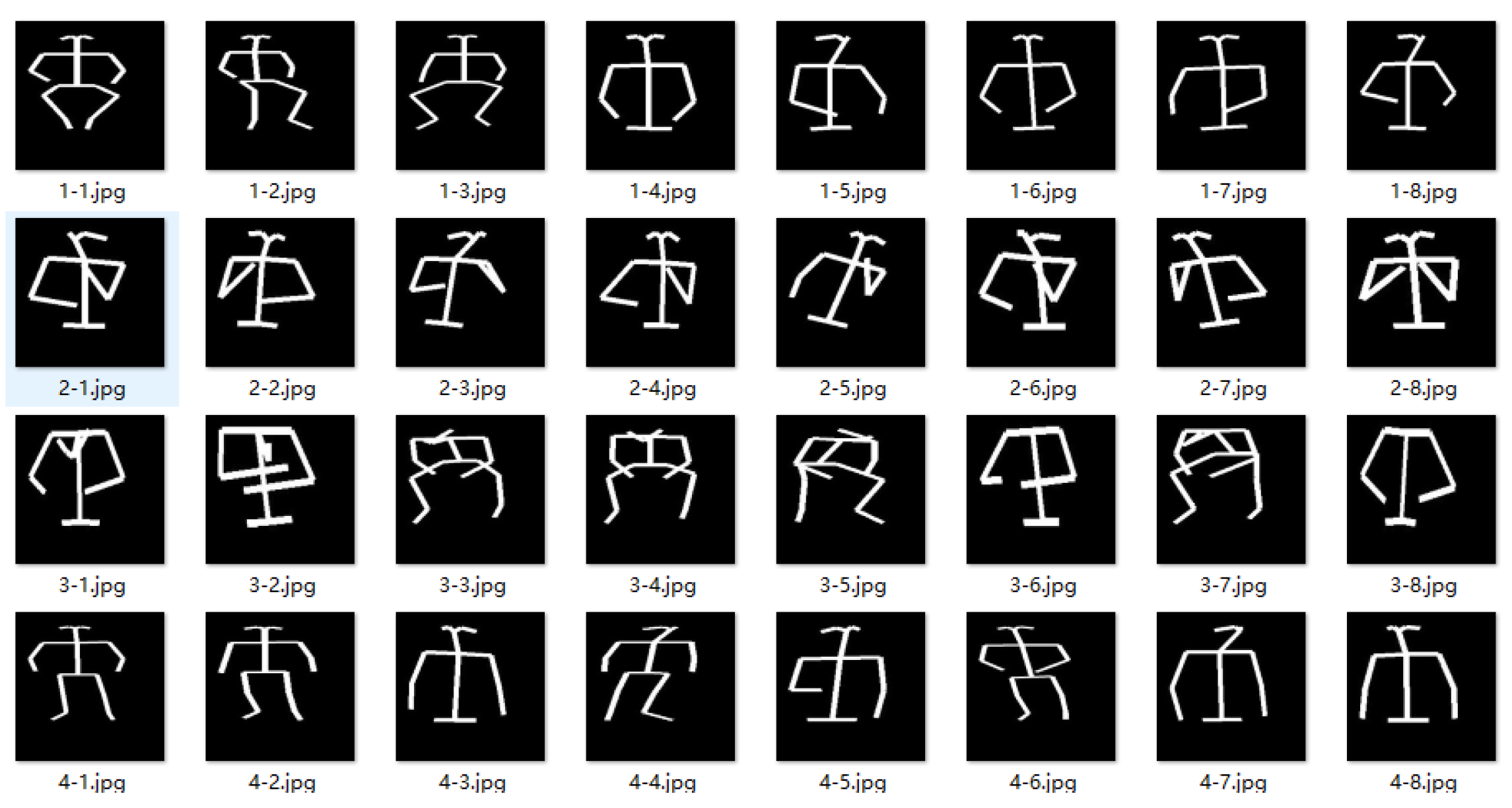

3.3.3. Human Pose Estimation with OpenPose

To address residual ambiguities, we introduce OpenPose as an inference-only geometric prior and skeleton generator: (i) the confidence-weighted torso centroid provides a robust reference point

for Reno; and (ii) the rendered rectangle-based skeleton

is the only input to the classifier. Examples of the generated binary skeletons are shown in

Figure 3, as illustrated below. When the aggregate torso-keypoint confidence is low

, we fall back to the detection-box center for

. The pose estimator is frozen and used only at inference; no gradients are backpropagated.

We define the confidence-weighted torso centroid as

For rendering, we use a rectangle-based skeleton. For each person, we form a tight keypoint box from confident joints

(default

= 0.3). If

, define

otherwise fall back to the detection box (or

). We map keypoints to a 224 × 224 canvas using aspect-preserving letterbox scaling:

For a limb between

and

, the orientation is

and the half-width offsets along the perpendicular direction are

The four vertices are

which are rasterized and filled to obtain a limb mask. The geometric construction is illustrated in

Figure 4, as shown below. Let

denote the standard COCO-18 limb set. We build a binary skeleton image

by filling rectangles for all

whose endpoints are present in

; limb strength can be modulated by

if a grayscale skeleton is desired.

Segmentation remains necessary: Reno reduces cross-person leakage inside the ROI, preventing erroneous keypoint grouping and stabilizing skeletal topology; APF provides lower-body occlusion and coarse head orientation to route samples into HB/WB branches. These cues improve the reliability of the skeleton representation and the choice of the appropriate classifier, without being concatenated with .

3.4. Image Classification

The final stage of LAViTSPose is an image classification module built on a standard Vision Transformer (ViT). Although ViT leverages global self-attention to capture long-range dependencies, it lacks inductive bias toward local spatial structure. To overcome this limitation, we propose MLiT (Modular Lightweight Image Transformer), a lightweight and efficient Transformer-based classifier tailored for small-scale, occlusion-prone datasets. As shown in

Figure 5, MLiT introduces two key innovations: (1) a Spatial Displacement Contact (SDC) module in the patch-embedding stage to enhance local spatial awareness; and (2) a learnable temperature (LT) mechanism to stabilize the attention distribution during training. With these designs, MLiT maintains high classification accuracy while using substantially fewer parameters, demonstrating effectiveness on small datasets commonly encountered in specialized tasks such as sitting posture recognition.

3.4.1. Spatial Displacement Contact

Standard ViTs tokenize an image by non-overlapping patch projection, discarding fine local structures. SDC injects local inductive bias by aggregating a few spatially displaced neighbors before patchifying. Given

and

, define a shift operator

(reflection padding) and form a concatenated feature map:

Tokens are obtained by unfolding

into

patches and a linear projection:

where

,

D is the embedding dimension, and

. We set

= 1 pixel and use the 4-neighborhood by default; the 8-neighborhood is optional. A difference-augmented variant is

which emphasizes local intensity changes useful under occlusion.

In the context of posture recognition, SDC can be viewed as implicitly minimizing intra-class entropy in the feature space. By aggregating local spatial context, SDC enhances the model’s ability to preserve discriminative part-level features, which are crucial for distinguishing subtle postural variations, even under occlusion or annotation noise. This structural-consistency regularization encourages features to cluster tightly within the same postural class, thus improving robustness to occlusion and reducing the uncertainty that arises in information-scarce settings. Consequently, the incorporation of SDC aids in mitigating entropy-related uncertainty in the model’s predictions, thereby enhancing the reliability of posture recognition without the need for large-scale supervision.

3.4.2. Learnable Temperature

Training Transformers on small datasets often produce unstable attention patterns. We therefore introduce a learnable temperature (LT): instead of a fixed temperature,

is a trainable scalar optimized jointly with the network,

allowing the model to adapt attention entropy during training.

3.4.3. Classification Head and Training Objective

Each detected person yields a rectangle-based skeleton image

from OpenPose (inference-only). APF provides routing variables

but these cues are not fed to the classifier. According to

, the sample is routed to the HB/WB branch. Let

be the classifier input and

the token matrix after SDC patch embedding (with positional embeddings and a class token). We stack

L pre-norm Transformer blocks with learnable temperature

:

and take the [CLS] token representation

Logits and probabilities are

where

,

, and

C is the number of posture classes.

With APF routing (

Section 3.3.2), each sample is assigned to a branch

according to

. Let

/

be the predicted distributions from the corresponding MLiT head (same architecture, separate parameters). The training objective is

where

and

. At inference, we output

.

In summary, the framework is designed as a progressive pipeline: YOLOR ensures precise person localization, ESBody removes cross-person interference and identifies occlusion, and OpenPose converts the refined regions into structured skeletons that are finally classified by MLiT. Each module builds upon the previous one, forming a coherent system for robust posture recognition in crowded environments.

4. Experiments

In this section, we conduct extensive experiments on the sitting posture recognition task. We then qualitatively and quantitatively analyze the advantages of our proposed method compared with existing approaches. Furthermore, we evaluate the effectiveness of each key component through ablation studies.

4.1. Datasets

In this work, we employ the University Student Sitting Posture (USSP) dataset [

42], a few-shot real-world benchmark specifically curated for fine-grained sitting posture and head-orientation recognition in multi-person scenes. Collected across diverse indoor environments—including classrooms, dormitories, study rooms, and offices—images typically contain multiple individuals seated in close proximity, with frequent occlusions from desks, chairs, and neighboring people. The dataset contains 2952 annotated images, split 8:2 into 2362 training and 590 test samples. Each visible seated individual is annotated at the instance level (person bounding box and categorical labels for sitting posture and head orientation). All annotations were independently produced by multiple annotators and subsequently validated for consistency, with high inter-annotator agreement. Although compact, USSP spans diverse scenarios and posture variations under occlusion and overlap, enabling robust evaluation of behavior-recognition models in realistic, low-resource, multi-person conditions.

4.2. Implementation Details

We implement LAViTSPose in PyTorch 1.12.1 on an NVIDIA RTX 4090 GPU. Input skeleton maps are generated via OpenPose by extracting 18 body keypoints per subject, rendering them into binary skeleton images, filtering for quality, and resizing to . The classifier jointly predicts sitting posture and head orientation. During training, we use the Adam optimizer with an initial learning rate of and a batch size of 16; the schedule adopts a warm-up followed by linear decay.

Only the detector and the classifier are trainable. The detector is optimized with RaIoU; ESBody is a training-free parsing module built on BodyPix (frozen), and OpenPose is used inference-only with no gradients backpropagated. The classifier (MLiT) is trained on skeleton-only inputs ; ESBody-derived cues (occlusion and head orientation) are used solely for routing and are not concatenated with . For detector-sweep and loss ablations, ESBody and MLiT are trained once using our YOLOR-based detector and then kept frozen while we retrain only the detector under the same protocol. Unless otherwise noted, all FPS are measured on the same RTX 4090 in PyTorch eager mode (TensorRT disabled) with single-image inference; detector and classifier use their respective default input resolutions.

4.3. Evaluation Metrics

We evaluate LAViTSPose using standard classification metrics: accuracy, precision (P), recall (R), and F1-score. Accuracy reflects the overall correctness of predictions across all classes, serving as a general performance indicator. Precision measures the proportion of correctly predicted positives among all instances classified as positive, indicating how “trustworthy” the positive predictions are. Recall quantifies the model’s ability to identify all actual positives, revealing its sensitivity to minority or easily missed classes. The F1-score, as the harmonic mean of precision and recall, provides a balanced measure particularly valuable under class imbalance a common challenge in posture and orientation datasets where certain poses or directions occur less frequently. To further assess computational efficiency, we report the average inference time per image (in milliseconds). All metrics are computed separately for the sitting posture and facial-orientation tasks, and results are presented as macro-averages across classes to ensure equal weighting regardless of class size—thus offering a fair evaluation under imbalanced label distributions.

4.4. Comparison with State of the Art

We conduct a comprehensive comparison between our proposed LAViTSPose and a diverse set of state-of-the-art architectures across multiple dimensions: accuracy, efficiency, model complexity, and inference latency. As summarized in

Table 1, LAViTSPose achieves a new state-of-the-art accuracy of 94.23% on the sitting posture recognition task, surpassing all state-of-the-art (SOTA) models by a clear margin. More importantly, it strikes an exceptional balance among performance and practicality—achieving this high accuracy with only 54.2M parameters and an inference time of 34.17 ms per sample, making it highly suitable for real-time deployment in edge-constrained environments.

LAViTSPose also delivers 92.02% precision and 92.22% F1, reflecting strong classification confidence and balanced cross-class performance. Although its recall (92.34%) is slightly lower than ViT (94.58%), the gains in computational efficiency and aggregate metrics offset this minor gap. ViT benefits from strong pretraining priors and global attention but at substantially higher cost—85.7M parameters, 16.9 GFLOPs, and 36.13 ms per image—i.e., roughly +58% parameters and FLOPs relative to LAViTSPose with slower inference. In resource-sensitive settings, such marginal recall gains come at a disproportionate coputational expense.

By contrast, lightweight model MobileNet excels in efficiency (2.23M parameters; 0.33 GFLOPs) but exhibits pronounced performance drops: 87.76% accuracy, with concomitant declines in precision and F1-score. This gap underscores the difficulty of preserving sufficient representational capacity under extreme parameter compression, particularly for fine-grained posture discrimination that demands strong spatiotemporal sensitivity.

Notably, advanced Transformer variants PiT and CaiT introduce hierarchical or progressive attention, yet do not surpass ViT; despite sizable models (up to 121.3M parameters), their accuracy remains around 90.8%. Without task-specific inductive biases, added architectural complexity yields diminishing returns—whereas LAViTSPose narrows this gap through a task-tailored design aligned with the demands of sitting posture recognition.

Overall, the results substantiate the effectiveness of the proposed framework on both sitting posture and facial-orientation recognition. Ablation studies further verify the necessity of each component, with the APF module contributing the largest gains. Decomposing the task into specialized, interdependent stages—detection, segmentation, and classification—proves highly effective, and the combination of high accuracy with reasonable computational cost makes LAViTSPose a practical solution for real-world monitoring systems.

4.5. Ablation Studies

To validate the contribution of each component in the LAViTSPose framework, we conduct a series of ablation studies.

4.5.1. Ablation Analysis of Object Detection Key Components

The ablation study on object detection components reveals the critical importance of each architectural enhancement in addressing the unique challenges of human detection in multi-person sitting scenarios. As shown in

Table 2, the baseline model (without specialized detection components) achieves only 81.12% accuracy, highlighting the severe limitations of standard detectors when confronted with the pervasive occlusions and scale variations characteristic of classroom and office environments.

The CBP normalization layer alone (Settings (a)) provides a 2.12 percentage point improvement in accuracy to 83.24%, confirming its effectiveness in adapting to the diverse input statistics encountered in real-world settings. This aligns with the module’s design principle of handling varying input statistics through learnable parameters, which is particularly valuable in multi-person scenes where lighting conditions, camera angles, and person sizes vary significantly. In occlusion-prone environments, stable feature normalization becomes crucial for consistent objectness scoring across different spatial contexts.

The addition of SiLU activation (Settings (b)) further boosts accuracy to 85.38%, revealing the importance of non-linear activation properties for discriminative feature learning. Unlike traditional activation functions, SiLU’s smooth, non-monotonic nature provides enhanced representation capacity for modeling subtle boundary distinctions between adjacent individuals. This improvement is particularly significant in crowded settings where small localization errors can cause non-target leakage into subsequent processing stages.

The most substantial gain comes from implementing PAN-FPN (Settings (c)), which increases accuracy by 5.21% to 86.33%. This dramatic improvement validates PAN-FPN’s ability to effectively fuse multi-scale features, addressing the critical challenge of severe scale variations in multi-person scenes. In classroom and office settings, individuals can appear at vastly different scales depending on their distance from the camera and seating position, making multi-scale integration essential for consistent detection performance. The 4.89 percentage point increase in F1 score (from 82.01% to 86.90%) underscores PAN-FPN’s particular value in maintaining high precision while capturing small or partially occluded individuals.

The synergistic effect of all three components in the complete LAViTSPose system is striking: it achieves 94.23% accuracy, a 7.90 percentage point improvement over the best single-component configuration and a 13.11 percentage point gain over the baseline. This non-additive gain reveals a critical interdependence between these modules. CBP provides stable feature normalization across varying conditions; SiLU enhances the discriminative capacity for boundary definition; and PAN-FPN ensures robustness across scale variations. Together, they create a detection system that can reliably isolate individuals even under severe occlusion, providing the foundation for the entire pipeline.

4.5.2. Ablation Study on YOLO Architecture Variants

This comparative evaluation reveals the strategic advantage of our customized YOLOR variant within the LAViTSPose framework. To ensure a fair comparison, all detectors—including YOLO-v3 through YOLO-v11, the original YOLOR [

25], and our customized YOLOR—are trained independently from scratch on the same data with identical input resolution, augmentations, and training protocol. Crucially, the downstream pipeline is held fixed: the ESBody segmentation module and MLiT classifier are trained once using ROIs from our customized YOLOR and then frozen across all detector variants. No retraining or fine-tuning is performed when swapping detectors.

As shown in

Table 3, our customized YOLOR achieves the highest end-to-end classification accuracy (94.23%) and F1-score (92.18%), outperforming the strongest baseline, YOLO-v11 (94.03% Acc, 92.17% F1). Notably, while YOLO-v11 attains slightly higher precision (92.20% vs. 92.02%), our method achieves superior recall (92.34% vs. 92.14%), resulting in a better-balanced F1 score. This gain is directly supported by superior detection performance: as reported in

Table 4, our method attains the highest mAP@0.5 of 93.12%, surpassing YOLO-v11 (92.60%) and the original YOLOR (91.24%). The inference speed of our detector (60.15 FPS) remains competitive with YOLO-v11 (65.37 FPS) and faster than most YOLO variants.

The consistent improvement over both generic YOLO architectures and the original YOLOR stems from task-specific enhancements: SiLU activation (vs. Mish in YOLOR), CBP normalization, PAN-FPN neck, anchor re-estimation, and our RaIoU loss. These refinements collectively address the unique challenges of seated-posture scenes—partial occlusion, scale variation, and proximity-induced overlap. Although the absolute accuracy gain over YOLO-v11 is modest (0.20%), it represents a 3.35% relative error reduction (from 5.97% to 5.77%). In ergonomic monitoring, where each misclassification may indicate an undetected health risk, this improvement is both statistically significant and operationally meaningful.

4.5.3. Ablation Study on Bounding Box Regression Losses

The ablation study on bounding box regression losses is conducted under a strictly controlled setting: all loss variants (IoU, DIoU, CIoU, GIoU, RIoU, and RaIoU) are evaluated on the same YOLOR detector, with identical backbone, neck, head architecture, data augmentation, optimizer, learning rate schedule, and hyperparameters—only the regression loss term is varied. As shown in

Table 5, RaIoU significantly outperforms conventional loss functions, achieving 94.23% accuracy compared to 88.42–90.21% for baseline methods. This substantial 4.02–5.81 percentage point improvement demonstrates the critical importance of specialized regression mechanisms for occlusion-prone scenarios.

The performance gap becomes particularly pronounced when examining precision and F1 scores. RaIoU achieves 92.02% precision and 92.18% F1, indicating its superior ability to minimize false positives while maintaining high true positive rates. This is crucial for downstream tasks, as even small localization errors in crowded classroom or office settings can cause non-target leakage—where adjacent individuals’ body parts contaminate the region of interest, leading to cascading errors in segmentation and classification.

The key insight revealed by these results is that traditional IoU-based losses (including aspect-ratio-aware variants like DIoU [

24], CIoU [

49], GIoU [

23], and RIou [

25]) fundamentally fail to model the spatial uncertainty induced by desk/peer occlusions and proximity in sitting scenarios. While these losses consider geometric relationships between bounding boxes, they remain inadequate for severe scale variations and partial visibility that are ubiquitous in multi-person settings. Our RaIoU loss, by contrast, explicitly evaluates spatial alignment through three complementary components: position alignment (via the

term), scale consistency (via the log-scale term), and enclosure quality (via the

denominator). This multi-faceted approach allows the model to learn tighter, more accurate bounding boxes even when substantial portions of the target are occluded.

The dramatic performance improvement of RaIoU validates our hypothesis that modeling occlusion induced spatial uncertainty is essential for reliable posture recognition. In classroom and office settings, where students and workers often sit in close proximity with partial visibility, conventional losses fail to distinguish between acceptable and problematic misalignments. This ablation study not only demonstrates the technical superiority of RaIoU but also reveals the critical importance of domain-specific loss design for vision-based ergonomics applications. The results suggest that generic bounding box regression strategies are insufficient for scenarios with systematic occlusion patterns, and specialized losses that account for the particular spatial constraints of the target domain can yield substantial improvements in both detection accuracy and downstream task performance.

4.5.4. Ablation Analysis of EsBody Key Components

The ablation study on ESBody components reveals the critical importance of context-aware segmentation for robust sitting posture recognition in multi-person environments. As shown in

Table 6, the baseline model without ESBody components achieves only 78.62% accuracy, highlighting the severe degradation caused by cross-person interference and occlusion in classroom and office settings.

The incremental addition of ESBody components demonstrates their complementary roles in addressing different aspects of the occlusion problem. The Reno module alone (Settings (a)) improves accuracy by 3.67% to 82.29%, confirming its effectiveness in suppressing boundary-connected components that originate from neighboring individuals. This aligns with the module’s design principle of eliminating non-target regions through connected component filtering, which directly mitigates the “non-target leakage” problem that plagues conventional pipelines.

The most substantial gain comes from incorporating the APF module (Settings (b)), which boosts accuracy by an additional 5.78% to 88.07%. This significant improvement validates APF’s ability to provide high-level semantic understanding of body structure—particularly its lower-body visibility ratio calculation and facial orientation inference. The 5.93 percentage point increase in F1 score (from 83.09% to 89.02%) underscores APF’s critical role in identifying occlusion status and routing samples to appropriate classification branches, which is essential for handling the common scenario of lower-body occlusion in seated environments.

The OpenPose component (Settings (c)) contributes a 7.70 percentage point accuracy improvement over the baseline, though slightly less than APF. This result demonstrates the value of geometric priors and structured skeleton representations, but also reveals that skeleton information alone is insufficient without the semantic context provided by APF. The 1.75 percentage point performance gap between Settings (b) and (c) confirms that semantic understanding of occlusion status (via APF) is more crucial than geometric structure (via OpenPose) for our specific task.

The synergistic effect of all three components in the complete LAViTSPose system is striking: it achieves 94.23% accuracy, a 6.16 percentage point improvement over the best partial configuration (Settings (b)). This non-additive gain (15.61% vs. 5.78% + 7.70% = 13.48%) reveals the critical interdependence of these modules. Reno first cleans the region of interest by removing interference; APF then provides semantic context about occlusion and orientation; and OpenPose converts the refined region into a structured representation that focuses on essential postural features. This cascade of information processing creates a virtuous cycle where each stage benefits from the precision of the previous one.

The ablation study not only validates the design choices of ESBody but also reveals the importance of separating semantic understanding (APF) from geometric representation (OpenPose). In real-world settings where lower-body occlusion is common due to desks and chairs, APF’s ability to detect and route based on occlusion status proves more valuable than pure skeleton information, though both are required for optimal performance. This insight has significant implications for human-centric vision systems operating in constrained environments, suggesting that semantic understanding of occlusion patterns is as important as geometric modeling for robust recognition.

4.5.5. Ablation Analysis of MLiT Key Components

The ablation study on MLiT components reveals the critical importance of specialized local modeling and attention stabilization for robust sitting posture recognition under small-sample training constraints. As shown in

Table 7, the baseline model without MLiT components achieves only 80.68% accuracy, highlighting the limitations of standard Transformer architectures in modeling fine-grained postural structures with limited training data.

The SDC module alone (Settings (a)) provides a substantial 3.94 percentage point improvement in accuracy to 84.62%, confirming its effectiveness in enhancing local spatial awareness. This aligns with the module’s design principle of aggregating spatially displaced neighbors before patchification, which injects crucial local inductive bias into the Transformer architecture. In occlusion-prone multi-person settings, this local modeling capability is essential for distinguishing subtle postural variations that global attention mechanisms might overlook, particularly when training data are scarce.

The LT module (Settings (b)) contributes a 0.49 percentage point accuracy improvement, though less pronounced than SDC. This demonstrates the value of learnable temperature scaling in stabilizing attention distributions during training, especially under few-shot conditions. Without this stabilization, attention patterns become erratic and unreliable, particularly when distinguishing between similar postures that differ only in subtle structural details.

These findings have broader implications for vision-based ergonomic assessment systems, suggesting that attention mechanisms in Transformer architectures must be explicitly designed to handle the specific challenges of posture recognition: limited training data, fine-grained structural differences, and occlusion-induced partial visibility. The combination of local structure enhancement of SDC and attention stabilization of LT proves particularly valuable for real-world deployment scenarios where large-scale annotated datasets are impractical to obtain.

4.6. Hyperparameter Study

To systematically evaluate the impact of key hyperparameters in the LAViTSPose framework on model performance, we conducted a series of hyperparameter sensitivity analysis experiments.

4.6.1. Hyperparameter Analysis of Range-Aware IoU Loss

This hyperparameter analysis reveals the critical importance of balancing position alignment and scale consistency in the RaIoU loss for multi-person sitting posture recognition. As illustrated in

Table 8, the results demonstrate that equal weighting of the position term (

) and scale term (

) at 0.5 each achieves optimal performance across all metrics, with a significant 4.0–4.5 percentage point improvement over imbalanced configurations.

The performance gap between the balanced configuration (0.5/0.5) and the alternatives (0.7/0.3 and 0.3/0.7) highlights the unique challenges of sitting posture recognition in classroom and office environments. When is too high (0.7/0.3), the model prioritizes center-point alignment but becomes insensitive to aspect-ratio mismatches, which are common when students sit at different distances from the camera or adopt various postures (e.g., upright vs. slouched). Conversely, when dominates (0.3/0.7), the model becomes overly sensitive to scale variations but fails to adequately suppress “non-target leakage” from neighboring individuals, leading to contaminated regions of interest.

The optimal 0.5/0.5 balance reflects the specific requirements of our application domain: in crowded indoor settings where desk/peer occlusions are pervasive, precise center-point positioning is equally important as accurate scale estimation. For instance, when a student is partially occluded by a desk or neighbor, the model must simultaneously localize the visible portion correctly (position term) while maintaining appropriate bounding box dimensions (scale term) to avoid including non-target regions.

This finding helps explain why our end-to-end accuracy remains at 94.23% under the best settings. The precise bounding boxes generated by YOLOR with balanced RaIoU loss provide clean input for the ESBody segmentation module, which in turn enables the MLiT classifier to operate on high-quality structural features without interference. This inter-stage coherence is fundamental to the framework’s robustness in real-world classroom environments where individuals frequently sit in close proximity with partial visibility.

4.6.2. Hyperparameter Analysis of NMS Threshold

The NMS threshold

, in conjunction with the objectness threshold

, represents a critical hyperparameter pair that governs the trade-off between detection completeness and redundancy suppression in crowded scenes. As shown in

Table 9, the configuration

,

achieves optimal end-to-end performance, yielding 94.23% accuracy, 92.02% precision, and 92.34% recall. This balanced setting effectively retains partially visible individuals under desk or peer occlusion while suppressing duplicate detections that would otherwise introduce cross-person interference.

In contrast, the aggressive setting , over-suppresses overlapping detections, significantly reducing recall to 88.77% and degrading overall accuracy to 88.31%. This loss is particularly detrimental in classroom scenarios where students seated side-by-side often share boundary regions; valid detections are mistakenly pruned, leading to missed subjects. On the other hand, the permissive setting , allows redundant boxes to survive NMS, resulting in multiple detections for the same person. Although recall remains relatively high (91.72%), the contaminated regions of interest introduce non-target body parts into ESBody, causing cross-person leakage and reducing accuracy to 91.18%.

These results underscore that domain-specific tuning of detection thresholds is essential for sitting posture recognition. The optimal ensures that ESBody receives clean, single-person ROIs, which in turn enables the MLiT classifier to operate on high-fidelity structural inputs. This precise control over detection quality is a key enabler of our framework’s robustness in real-world, multi-person indoor environments.

4.6.3. Hyperparameter Analysis of Reno

As shown in

Table 10, this experimental analysis demonstrates the critical impact of Reno’s hyperparameters on multi-person sitting posture recognition performance. The lower foreground threshold

proves more effective than 0.5 at preserving weak foreground signals in classroom scenarios, particularly for students wearing dark clothing or under complex lighting conditions. These boundary pixels frequently correspond to partially visible shoulder or head contours, which serve as essential discriminative features for distinguishing subtle posture variations like “Upright” versus “Leaning-forward.” A higher

value (0.5) prematurely discards these critical edge pixels, resulting in incomplete body masks that degrade classification accuracy. Meanwhile, the length threshold

(corresponding to

) optimally removes approximately 10–15% of boundary-connected components, which typically belong to neighboring students’ limbs, thereby effectively preventing cross-person feature contamination.

In contrast, the higher setting allows excessive boundary-connected components to remain, resulting in 93.14% accuracy, 1.1% lower than the optimal configuration. While this alternative achieves a slightly better F1 score (92.52% vs 92.18%), the more significant drop in accuracy demonstrates that precision is more critical than recall for sitting posture recognition. Contaminated regions directly introduce false structural features that mislead the classifier, particularly for subtle posture differences. This parameter sensitivity validates the necessity of Reno’s design for classroom-specific spatial patterns: by precisely tuning these thresholds, the system can effectively suppress cross-person interference while preserving critical body parts, ultimately achieving 94.23% accuracy through the provision of high-quality inputs to the subsequent classification stage.

4.6.4. Hyperparameter Analysis of APF

As shown in

Table 11, the hyperparameter settings of the APF module have a decisive impact on the overall performance of the LAViTSPose framework. The third parameter configuration (

,

,

) achieves the highest accuracy of 94.23%, which is 4.9% to 5.8% higher than other configurations. This significant difference stems from the direct impact of threshold settings on the routing decision accuracy of the APF module: the lower limb visibility threshold

appropriately identifies partially occluded scenarios (routing samples to the HB branch when visibility falls below this value), avoiding both the excessive routing caused by the first parameter set (

) where most samples incorrectly enter the HB branch, and the critical occlusion scenarios being overlooked by the second parameter set (

). The facial orientation thresholds

and

enable sensitive recognition of slight facial deviations through precise comparison of activation intensities in left and right facial regions, ensuring the classifier can select the most appropriate processing path based on actual visible information.

The optimal parameter configuration not only improves overall accuracy but also achieves a balance between precision (92.02%) and recall (92.34%), indicating that the APF module can effectively assign samples to the correct processing branch under this setting. Excessively low thresholds (first configuration) result in both low precision and recall, suggesting the system cannot distinguish between valid and invalid features; while excessively high thresholds (second configuration) slightly improve precision but decrease recall, indicating the system misses many occlusion scenarios that should be recognized. This optimization of the APF module validates its design principle: by analyzing the 24-part probability maps output by BodyPix to infer lower limb visibility and facial orientation, it provides reliable routing decisions for subsequent classification. This mechanism enables LAViTSPose to adapt to complex occlusion patterns in multi-person sitting posture scenarios, delivering high-quality structural features to the MLiT classifier and ultimately achieving 94.23% accuracy.

4.6.5. Hyperparameter Analysis of SDC

As shown in

Table 12, both the neighborhood

and the displacement

materially affect performance. The configuration with an 8-connected neighborhood (N8) and a 1-pixel displacement (

) attains the best results of 94.23% accuracy and 92.18% F1—exceeding other configurations by 2.0% to 5.0% in accuracy and 0.5% to 3.0% in F1. This suggests that richer spatial context from diagonal neighbors helps capture fine-grained structural cues (e.g., shoulder alignment, torso tilt) that differentiate subtle postures such as Upright vs. Leaning-forward.

Viewing the two factors factorially, the main effect of enlarging the neighborhood from N4 to N8 is consistently positive (F1: pp at and pp at ). The main effect of increasing displacement depends on : with N4, moving from to improves F1 by pp (more context is beneficial); with N8, the same change slightly reduces F1 by pp. This interaction indicates that when the neighborhood is already rich (N8), larger shifts start to introduce background interference or misalignment in crowded scenes, which mildly lowers both precision (from 92.02% to 91.78%) and recall (from 92.34% to 91.60%).

Overall, (N8, ) offers a balanced trade-off: it expands the local receptive field without compromising feature fidelity. Under occlusion and limited data, this setting injects a useful inductive bias into the lightweight ViT backbone and yields strong posture recognition performance.

4.7. Few-Shot Learning Performance

The few-shot learning experiment demonstrates LAViTSPose’s superior performance when trained with only 50% of the labeled data, highlighting its remarkable data efficiency and robustness under annotation scarcity. As shown in

Table 13, our framework achieves 87.62% accuracy, outperforming the second-best CaiT by 4.45% and standard ViT by 4.89%. This significant improvement is particularly noteworthy in the context of sitting posture recognition, where obtaining high-quality annotations is challenging due to privacy concerns, complex occlusion patterns, and the labor-intensive nature of fine-grained posture labeling.

The performance gap becomes even more pronounced when examining the F1 score, where LAViTSPose achieves 87.94% compared to 83.82% for CaiT. This indicates that our framework maintains a better balance between precision and recall under data constraints, which is crucial for reliable posture classification in classroom settings where both false positives and false negatives can lead to incorrect ergonomic assessments.

This experiment validates our claim that for specialized domains like sitting posture recognition, where large-scale annotations are impractical, a framework with domain-specific architectural innovations is more effective than simply scaling generic models. LAViTSPose’s ability to achieve 87.62% accuracy with half the labeled data would translate to substantial cost savings in real-world deployment scenarios, making it a practical solution for educational institutions and workplaces that need ergonomic monitoring but lack resources for extensive data labeling.

4.8. Visualization Analysis

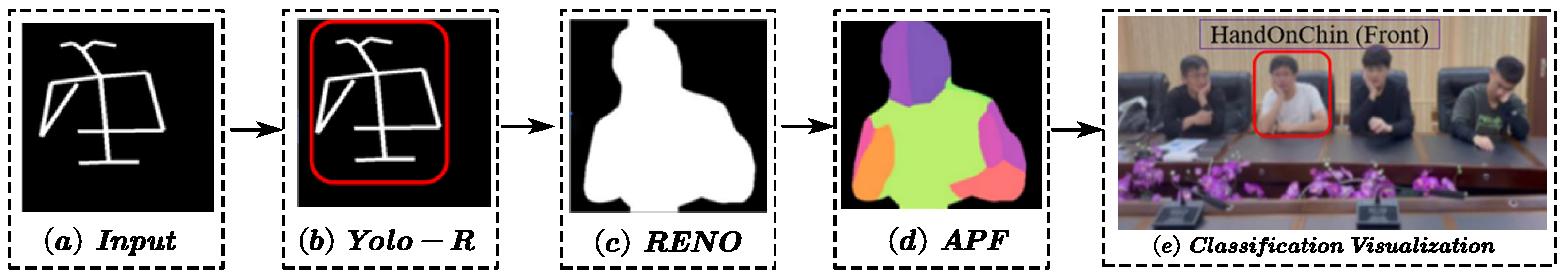

To better understand the model’s decision-making process and the role of the segmentation-classification cascade, we visualize the complete pipeline on real-world test images. As shown in

Figure 6, our framework successfully identifies complex postures such as HandOnChin, even under partial occlusion and in cluttered multi-person scenes.

These results highlight the synergy between the detection, segmentation, and classification modules. The YOLOR detector first isolates individuals with precise bounding boxes, the ESBody segmenter (with Reno and APF) then provides pixel-level body parsing that suppresses cross-person interference while maintaining anatomical structure, and the MLiT classifier leverages this structured representation for accurate posture recognition. This cascaded approach enables better generalization and robustness than traditional end-to-end pipelines.

In summary, the component-wise evaluation confirms the effectiveness of our detection–segmentation–classification cascade design. YOLOR ensures efficient and accurate person localization, ESBody provides fine-grained semantic understanding while handling occlusion, and the MLiT-based classifier leverages this structured representation for reliable posture recognition. Together, these modules form a cohesive pipeline optimized for real-world sitting posture analysis under occlusion, overlap, and limited data conditions.