The ADMM-based distributed logistic regression framework decomposes the centralized optimization task into $n$ subproblems, enabling collaborative training across $n$ edge nodes. Although this architecture reduces network bandwidth requirements and offers partial protection for raw user data, privacy risks persist during parameter transmission: adversaries could reconstruct training data by analyzing the historical model parameters uploaded to the central server. To address this vulnerability, a differential privacy mechanism that injects calibrated noise into local parameter updates is introduced, thereby strengthening the algorithm’s privacy guarantees. The injected noise increases the entropy of the transmitted parameters, making it harder for adversaries to reconstruct sensitive training data from the observed model updates. The proposed DLGP algorithm is detailed below.

4.1. $\ell_2$-Sensitivity Analysis

To implement $(\varepsilon, \delta)$-differential privacy via the Gaussian mechanism, a sensitivity analysis must first be conducted to quantify how much the local parameter update can change when the input dataset is minimally modified. In the DLGP algorithm, the $\ell_2$-sensitivity of the local parameter $\mathbf{x}_i$ is analyzed. It is defined as the maximum $\ell_2$-norm difference between the parameter updates derived from adjacent datasets differing in exactly one sample:

$$\Delta_2 = \max_{D_i,\, D_i' \text{ adjacent}} \big\| \mathbf{x}_i(D_i) - \mathbf{x}_i(D_i') \big\|_2 .$$

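Consider two adjacent local datasets $D_i$ and $D_i'$ at edge node $i$, each containing $m_i$ samples $(\mathbf{a}_j, b_j)$ with labels $b_j \in \{-1, +1\}$, where $D_i$ and $D_i'$ differ in exactly one sample. Without loss of generality, the discrepancy is assumed to occur in the first sample, so that $(\mathbf{a}_1, b_1) \in D_i$ is replaced by $(\mathbf{a}_1', b_1') \in D_i'$. The local parameters computed from $D_i$ and $D_i'$ are denoted as $\mathbf{x}_i$ and $\mathbf{x}_i'$, respectively, and are obtained by minimizing slightly different objective functions:

$$\mathbf{x}_i = \arg\min_{\mathbf{x}} J_i(\mathbf{x}), \qquad \mathbf{x}_i' = \arg\min_{\mathbf{x}} \big[ J_i(\mathbf{x}) + g(\mathbf{x}) \big],$$

where $J_i(\mathbf{x})$ represents the local cost function from Section 3, and

$$g(\mathbf{x}) = \frac{1}{m_i} \Big[ \ln\big(1 + e^{-b_1' \mathbf{x}^\top \mathbf{a}_1'}\big) - \ln\big(1 + e^{-b_1 \mathbf{x}^\top \mathbf{a}_1}\big) \Big]$$

captures the logistic loss difference introduced by the modified sample.

Since both objective functions are differentiable, the gradients at their respective minima satisfy $\nabla J_i(\mathbf{x}_i) = \mathbf{0}$ and $\nabla J_i(\mathbf{x}_i') + \nabla g(\mathbf{x}_i') = \mathbf{0}$. Given the $\mu$-strong convexity of $J_i$, the inequality

$$\big( \nabla J_i(\mathbf{x}_i) - \nabla J_i(\mathbf{x}_i') \big)^\top (\mathbf{x}_i - \mathbf{x}_i') \ge \mu \, \| \mathbf{x}_i - \mathbf{x}_i' \|_2^2 \tag{21}$$

is satisfied. Applying the Cauchy–Schwarz inequality to the left-hand side yields

$$\big( \nabla J_i(\mathbf{x}_i) - \nabla J_i(\mathbf{x}_i') \big)^\top (\mathbf{x}_i - \mathbf{x}_i') \le \big\| \nabla J_i(\mathbf{x}_i) - \nabla J_i(\mathbf{x}_i') \big\|_2 \, \| \mathbf{x}_i - \mathbf{x}_i' \|_2 . \tag{22}$$

Combining Equations (21) and (22), dividing by $\| \mathbf{x}_i - \mathbf{x}_i' \|_2$ (the case $\mathbf{x}_i = \mathbf{x}_i'$ is trivial), and substituting $\nabla J_i(\mathbf{x}_i) - \nabla J_i(\mathbf{x}_i') = \nabla g(\mathbf{x}_i')$ from the optimality conditions leads to

$$\| \mathbf{x}_i - \mathbf{x}_i' \|_2 \le \frac{\| \nabla g(\mathbf{x}_i') \|_2}{\mu} . \tag{23}$$

By the triangle inequality, the numerator term is bounded as

$$\| \nabla g(\mathbf{x}_i') \|_2 \le \frac{1}{m_i} \Big( \big\| \phi(-b_1' \mathbf{x}_i'^\top \mathbf{a}_1') \, b_1' \mathbf{a}_1' \big\|_2 + \big\| \phi(-b_1 \mathbf{x}_i'^\top \mathbf{a}_1) \, b_1 \mathbf{a}_1 \big\|_2 \Big),$$

where $\phi(t) = 1/(1 + e^{-t})$ is the sigmoid function. Each term is bounded by 1 because $\phi(t) \in (0, 1)$, $|b_j| = 1$, and the feature vectors are assumed to be normalized so that $\| \mathbf{a}_j \|_2 \le 1$, resulting in

$$\| \nabla g(\mathbf{x}_i') \|_2 \le \frac{2}{m_i} . \tag{24}$$

Substituting Equation (24) into Equation (23) gives the $\ell_2$-sensitivity of the local parameter update $\mathbf{x}_i$ as

$$\Delta_2 = \max_{D_i,\, D_i'} \| \mathbf{x}_i - \mathbf{x}_i' \|_2 \le \frac{2}{m_i \mu} . \tag{25}$$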

This bound quantifies the largest change in a released local parameter that a single-sample modification can cause, and it provides the basis for calibrating the noise required to achieve the desired entropy increase in the output distribution.
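The bound in Equation (25) can be checked numerically. The sketch below is a minimal illustration under stated assumptions, not the authors’ implementation: it replaces the Section 3 cost $J_i$ with a hypothetical stand-in (the average logistic loss plus $(\mu/2)\|\mathbf{x}\|_2^2$, which is $\mu$-strongly convex), assumes labels in $\{-1, +1\}$ and features normalized to $\|\mathbf{a}_j\|_2 \le 1$, and uses illustrative helper names (`fit_local`, `sensitivity_bound`).

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def fit_local(A, b, mu, iters=30):
    """Newton's method on a HYPOTHETICAL stand-in for J_i:
    (1/m) * sum_j log(1 + exp(-b_j x^T a_j)) + (mu / 2) * ||x||^2."""
    m, d = A.shape
    x = np.zeros(d)
    for _ in range(iters):
        s = sigmoid(-b * (A @ x))                      # phi(-b_j x^T a_j) per sample
        grad = -(A.T @ (b * s)) / m + mu * x
        H = (A.T * (s * (1.0 - s))) @ A / m + mu * np.eye(d)
        x = x - np.linalg.solve(H, grad)               # full Newton step
    return x

def sensitivity_bound(m, mu):
    """Equation (25): Delta_2 <= 2 / (m * mu)."""
    return 2.0 / (m * mu)

# Synthetic local dataset with ||a_j|| <= 1 and labels in {-1, +1}
rng = np.random.default_rng(0)
m, d, mu = 50, 5, 0.5
A = rng.normal(size=(m, d))
A /= np.maximum(1.0, np.linalg.norm(A, axis=1, keepdims=True))
b = np.where(rng.random(m) < 0.5, -1.0, 1.0)

# Adjacent dataset: only the first sample is replaced (its feature vector is negated)
A_adj = A.copy()
A_adj[0] = -A[0]

gap = np.linalg.norm(fit_local(A, b, mu) - fit_local(A_adj, b, mu))
bound = sensitivity_bound(m, mu)
print(f"||x_i - x_i'|| = {gap:.5f}, bound 2/(m*mu) = {bound:.5f}")
```

The observed gap is typically well below the bound, since Equation (24) uses the worst case in which both sigmoid factors approach 1.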

4.2. Dynamic Noise Generation and Perturbation Implementation

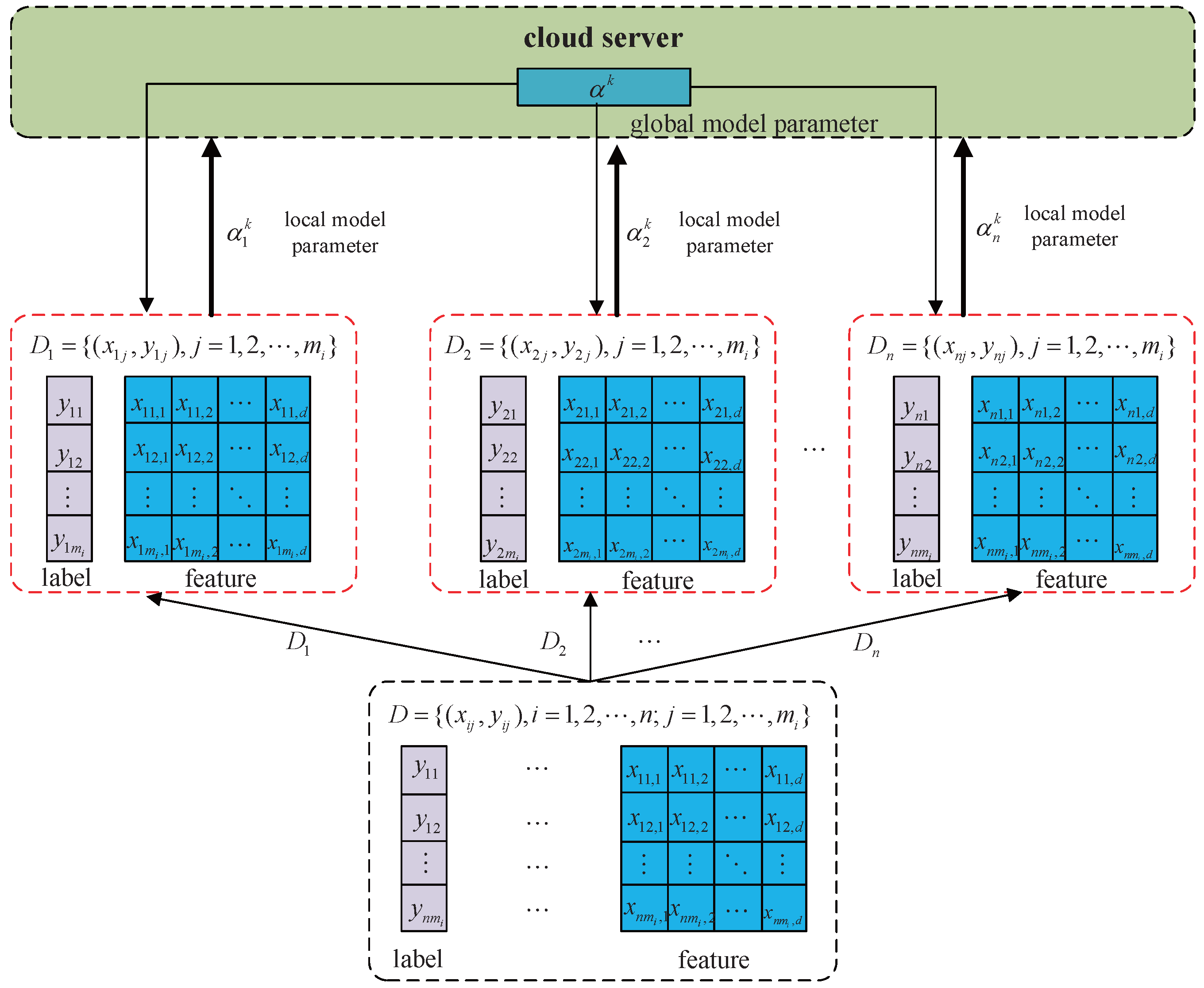

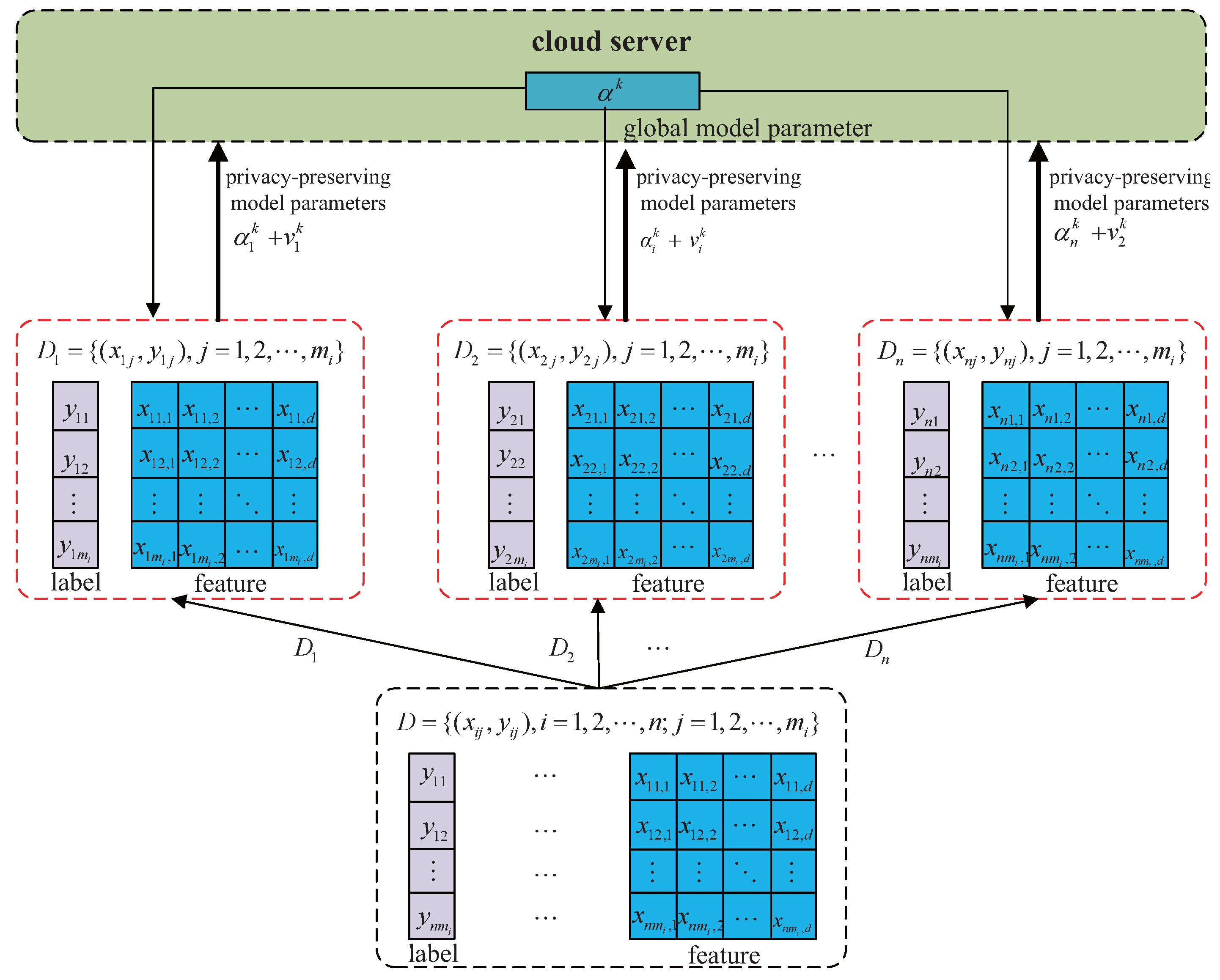

The workflow of the DLGP algorithm is illustrated in Figure 2. Computational tasks are distributed by the central server to $n$ edge nodes. During the $k$-th iteration, each edge node computes its local parameters using Newton iterations as described in Section 3. To prevent privacy leakage, calibrated Gaussian noise is injected into these local parameters prior to transmission to the server.

Based on the Gaussian mechanism for differential privacy and the sensitivity result in Equation (25), the noise added to each local parameter is drawn from the Gaussian distribution $\boldsymbol{\eta}_i^k \sim \mathcal{N}(\mathbf{0}, \sigma_i^2 \mathbf{I}_d)$, where the standard deviation $\sigma_i$ is calibrated as

$$\sigma_i = \frac{\Delta_2 \sqrt{2 \ln(1.25/\delta)}}{\varepsilon}, \qquad \Delta_2 = \frac{2}{m_i \mu}, \tag{26}$$

which, for $0 < \varepsilon < 1$, ensures that the perturbed parameter $\tilde{\mathbf{x}}_i^k = \mathbf{x}_i^k + \boldsymbol{\eta}_i^k$ satisfies $(\varepsilon, \delta)$-differential privacy for each update step. The Gaussian distribution is chosen because it is the maximum-entropy distribution for a given variance, meaning it provides the greatest uncertainty under a given noise-power constraint. This property makes Gaussian noise particularly effective at obscuring the original parameter values for a fixed noise power, and hence for a fixed impact on utility.
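A minimal sketch of the per-node perturbation step follows. It assumes Equation (26) takes the classical Gaussian-mechanism form $\sigma_i = \Delta_2 \sqrt{2\ln(1.25/\delta)}/\varepsilon$ with $\Delta_2 = 2/(m_i \mu)$, stated for $0 < \varepsilon < 1$; the function names and the argument `mu` (the strong-convexity constant of $J_i$) are illustrative assumptions.

```python
import math
import numpy as np

def gaussian_sigma(sensitivity, epsilon, delta):
    """Classical Gaussian-mechanism scale: Delta_2 * sqrt(2 ln(1.25/delta)) / epsilon."""
    if not (0.0 < epsilon < 1.0 and 0.0 < delta < 1.0):
        raise ValueError("classical calibration requires 0 < epsilon < 1 and 0 < delta < 1")
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon

def perturb_local(x_local, m_local, mu, epsilon, delta, rng):
    """Return (x_tilde, sigma) with x_tilde = x_local + eta, eta ~ N(0, sigma^2 I_d)."""
    sensitivity = 2.0 / (m_local * mu)                             # bound from Equation (25)
    sigma = gaussian_sigma(sensitivity, epsilon, delta)
    eta = rng.normal(loc=0.0, scale=sigma, size=x_local.shape)     # d independent draws
    return x_local + eta, sigma

rng = np.random.default_rng(1)
x_local = np.array([0.3, -1.2, 0.8, 0.0])
x_tilde, sigma = perturb_local(x_local, m_local=1000, mu=0.5, epsilon=0.5, delta=1e-5, rng=rng)
print(sigma, x_tilde)
```

Only the perturbed vector `x_tilde` would leave the edge node; the clean `x_local` stays local.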

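Once privacy protection has been applied at all edge nodes, the perturbed values $\tilde{\mathbf{x}}_i^k$, $i = 1, \dots, n$, are uploaded to the central server for global aggregation. The global parameter update rule is adjusted for perturbed local parameters as

$$\mathbf{z}^k = \frac{1}{n} \sum_{i=1}^{n} \Big( \tilde{\mathbf{x}}_i^k + \frac{1}{\rho} \mathbf{y}_i^{k-1} \Big), \tag{27}$$

where $\rho > 0$ is the ADMM penalty parameter. Subsequently, each edge node updates its dual variable using the perturbed local parameter and the aggregated global parameter:

$$\mathbf{y}_i^k = \mathbf{y}_i^{k-1} + \rho \big( \tilde{\mathbf{x}}_i^k - \mathbf{z}^k \big). \tag{28}$$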

The updated dual variables and global parameter are then used in the $(k+1)$-th iteration of local parameter optimization. This process continues until $K$ iterations have been completed, at which point the final global parameter $\mathbf{z}^K$ is returned. Because fresh noise is injected in every round, no unperturbed local parameter ever leaves an edge node during training. Each round, however, releases a new perturbed parameter, so the per-round guarantees compose across iterations: under the basic composition theorem, the $K$ releases of a node jointly satisfy $(K\varepsilon, K\delta)$-differential privacy with respect to its local data. The complete DLGP algorithm is summarized in Algorithm 2.
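The server and dual updates can be sketched as follows, assuming Equations (27) and (28) take the standard consensus-ADMM form shown above with penalty parameter $\rho$; the function names are hypothetical. Stacking the $n$ perturbed vectors as rows of an $n \times d$ array keeps both updates at $O(nd)$.

```python
import numpy as np

def global_update(x_tilde, y_prev, rho):
    """z^k = (1/n) * sum_i (x_tilde_i^k + y_i^{k-1} / rho); x_tilde, y_prev have shape (n, d)."""
    return np.mean(x_tilde + y_prev / rho, axis=0)

def dual_update(y_prev, x_tilde, z, rho):
    """y_i^k = y_i^{k-1} + rho * (x_tilde_i^k - z^k), applied to all n rows at once."""
    return y_prev + rho * (x_tilde - z)

rng = np.random.default_rng(2)
n, d, rho = 4, 3, 1.0
x_tilde = rng.normal(size=(n, d))   # stand-in for the uploaded perturbed parameters
y = np.zeros((n, d))                # zero-initialized dual variables
z = global_update(x_tilde, y, rho)
y = dual_update(y, x_tilde, z, rho)
print(z, y.sum(axis=0))
```

Under this assumed form, summing the dual update over $i$ shows that $\sum_i \mathbf{y}_i^k = \mathbf{0}$ after every update, so with zero-initialized duals the global update reduces to the plain average of the perturbed local parameters.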

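**Algorithm 2.** Pseudocode for the DLGP algorithm

- **Require:** Local datasets $D_1, \dots, D_n$.
- **Ensure:** Final global model parameter $\mathbf{z}^K$.
- 1: Initialize $\mathbf{x}_i^0$, $\mathbf{y}_i^0$, and $\mathbf{z}^0$.
- 2: **for** $k = 1$ **to** $K$ **do**
- 3: **for** $i = 1$ **to** $n$ **do**
- 4: **for** $t = 1$ **to** $T$ **do**
- 5: Construct the local cost function using Equation (14).
- 6: Update the gradient using Equation (16).
- 7: Update the Hessian matrix using Equation (17).
- 8: Update the step size and local parameter using Equation (15).
- 9: **end for**
- 10: Set the local parameter $\mathbf{x}_i^k$ to the result of the Newton iterations.
- 11: Compute the sensitivity $\Delta_2$ using Equation (25).
- 12: Calculate the Gaussian noise standard deviation $\sigma_i$ using Equation (26).
- 13: Generate Gaussian noise $\boldsymbol{\eta}_i^k \sim \mathcal{N}(\mathbf{0}, \sigma_i^2 \mathbf{I}_d)$.
- 14: Apply perturbation: $\tilde{\mathbf{x}}_i^k = \mathbf{x}_i^k + \boldsymbol{\eta}_i^k$.
- 15: **end for**
- 16: Update the global parameter $\mathbf{z}^k$ using Equation (27).
- 17: **for** $i = 1$ **to** $n$ **do**
- 18: Update the dual variable $\mathbf{y}_i^k$ using Equation (28).
- 19: **end for**
- 20: **end for**
- 21: **return** Final global model parameter $\mathbf{z}^K$.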
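Algorithm 2 can be sketched end to end in NumPy for experimentation. This is a minimal sketch under explicit assumptions, not the authors’ code: the local cost of Equation (14) is replaced by a hypothetical augmented Lagrangian $\frac{1}{m_i}\sum_j \ln(1 + e^{-b_j \mathbf{x}^\top \mathbf{a}_j}) + \frac{\lambda}{2}\|\mathbf{x}\|_2^2 + \mathbf{y}_i^\top(\mathbf{x} - \mathbf{z}) + \frac{\rho}{2}\|\mathbf{x} - \mathbf{z}\|_2^2$, which is $(\lambda + \rho)$-strongly convex, so $\mu = \lambda + \rho$ is used in Equation (25); Newton steps use a unit step size instead of the step-size rule of Equation (15); and Equations (26) to (28) are assumed to take the classical Gaussian-mechanism and consensus-ADMM forms. The comments refer to the line numbers of Algorithm 2.

```python
import math
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def local_newton(A, b, z, y_i, lam, rho, x0, T):
    """Lines 4-9: T Newton steps on the ASSUMED local augmented Lagrangian."""
    m, d = A.shape
    x = x0.copy()
    for _ in range(T):
        s = sigmoid(-b * (A @ x))
        grad = -(A.T @ (b * s)) / m + lam * x + y_i + rho * (x - z)     # O(md)
        H = (A.T * (s * (1.0 - s))) @ A / m + (lam + rho) * np.eye(d)   # O(md^2)
        x = x - np.linalg.solve(H, grad)                                # O(d^3), unit step
    return x

def dlgp(datasets, K, T, lam, rho, epsilon, delta, seed=0):
    """Sketch of Algorithm 2; datasets is a list of (A_i, b_i) with rows ||a_j|| <= 1."""
    rng = np.random.default_rng(seed)
    n, d = len(datasets), datasets[0][0].shape[1]
    x = np.zeros((n, d))        # line 1: initialize x_i^0, y_i^0, z^0
    y = np.zeros((n, d))
    z = np.zeros(d)
    mu = lam + rho              # strong convexity of the assumed local cost
    for _ in range(K):          # line 2
        x_tilde = np.empty((n, d))
        for i, (A, b) in enumerate(datasets):                                 # line 3
            x[i] = local_newton(A, b, z, y[i], lam, rho, x[i], T)             # lines 4-10
            sens = 2.0 / (A.shape[0] * mu)                                    # line 11, Eq. (25)
            sigma = sens * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon  # line 12, Eq. (26)
            x_tilde[i] = x[i] + rng.normal(0.0, sigma, size=d)                # lines 13-14
        z = np.mean(x_tilde + y / rho, axis=0)   # line 16, assumed Eq. (27)
        y = y + rho * (x_tilde - z)              # line 18, assumed Eq. (28)
    return z                                     # line 21

# Usage on synthetic data split across three hypothetical edge nodes
rng = np.random.default_rng(42)
w_true = np.array([1.0, -2.0, 0.5, 0.0])
datasets = []
for _ in range(3):
    A = rng.normal(size=(200, 4))
    A /= np.maximum(1.0, np.linalg.norm(A, axis=1, keepdims=True))
    b = np.where(A @ w_true > 0, 1.0, -1.0)
    datasets.append((A, b))

z = dlgp(datasets, K=20, T=5, lam=0.01, rho=1.0, epsilon=0.5, delta=1e-5)
accuracy = np.mean([np.mean(np.sign(A @ z) == b) for A, b in datasets])
print(z, accuracy)
```

Warm-starting each node’s Newton iterations from its previous $\mathbf{x}_i$ is a design choice of this sketch; Algorithm 2 does not specify the starting point.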

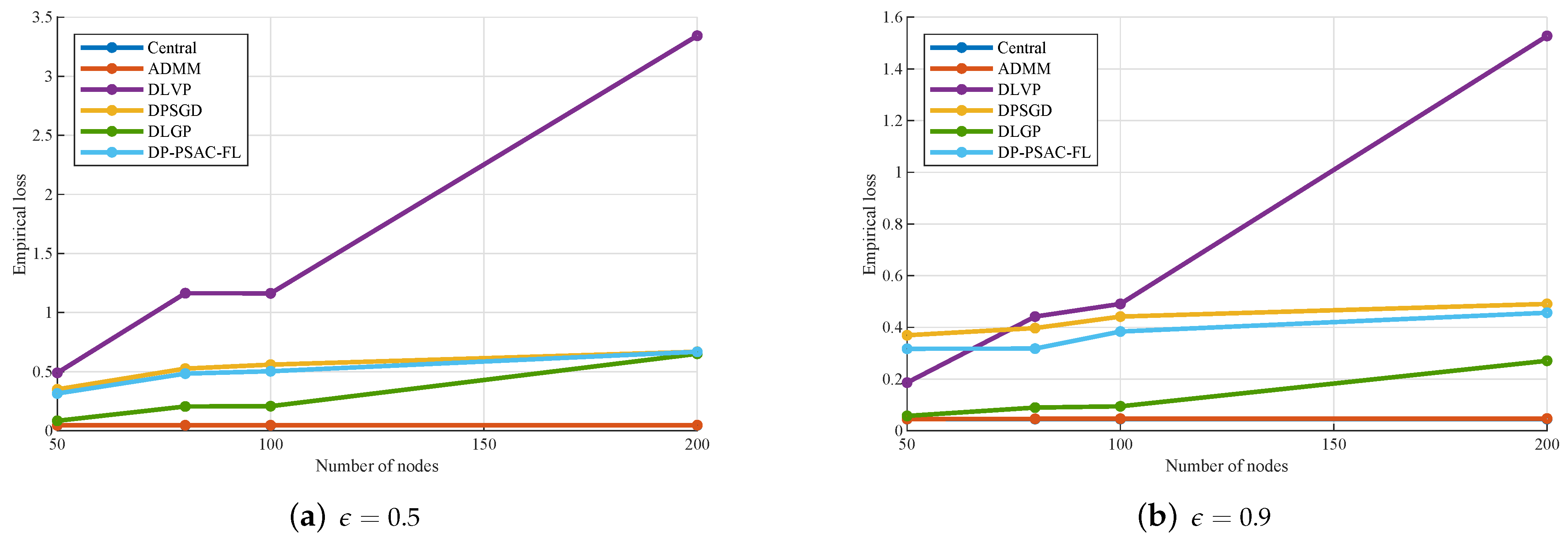

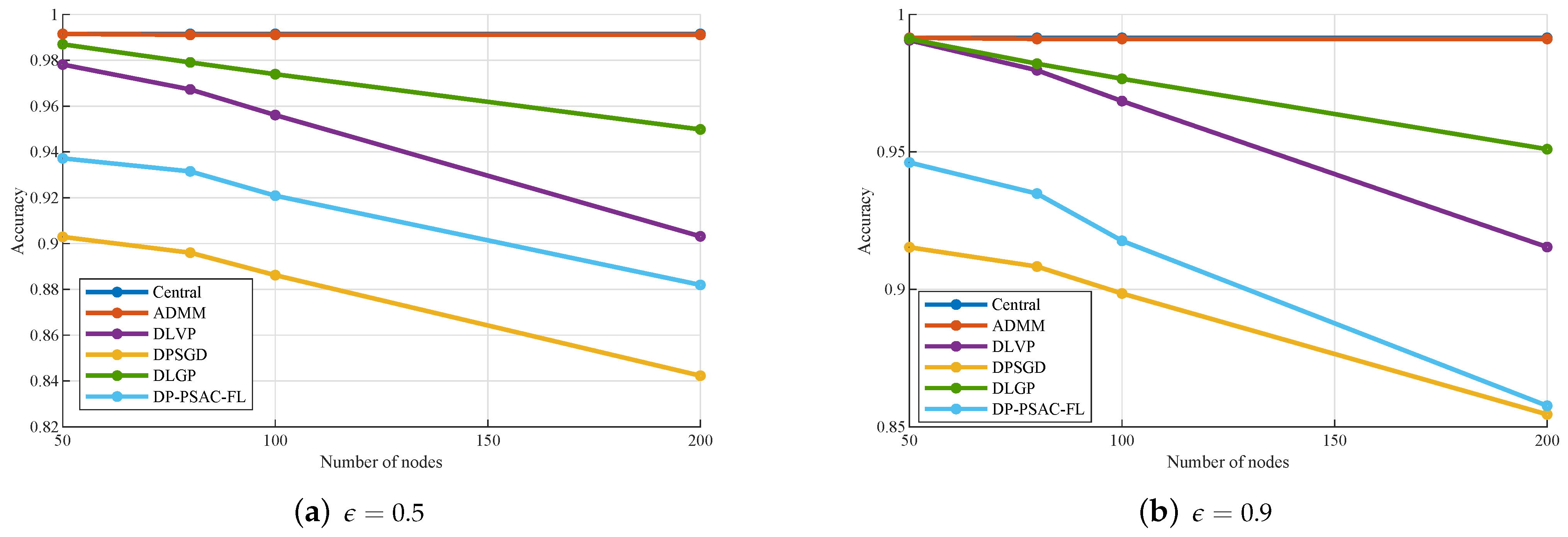

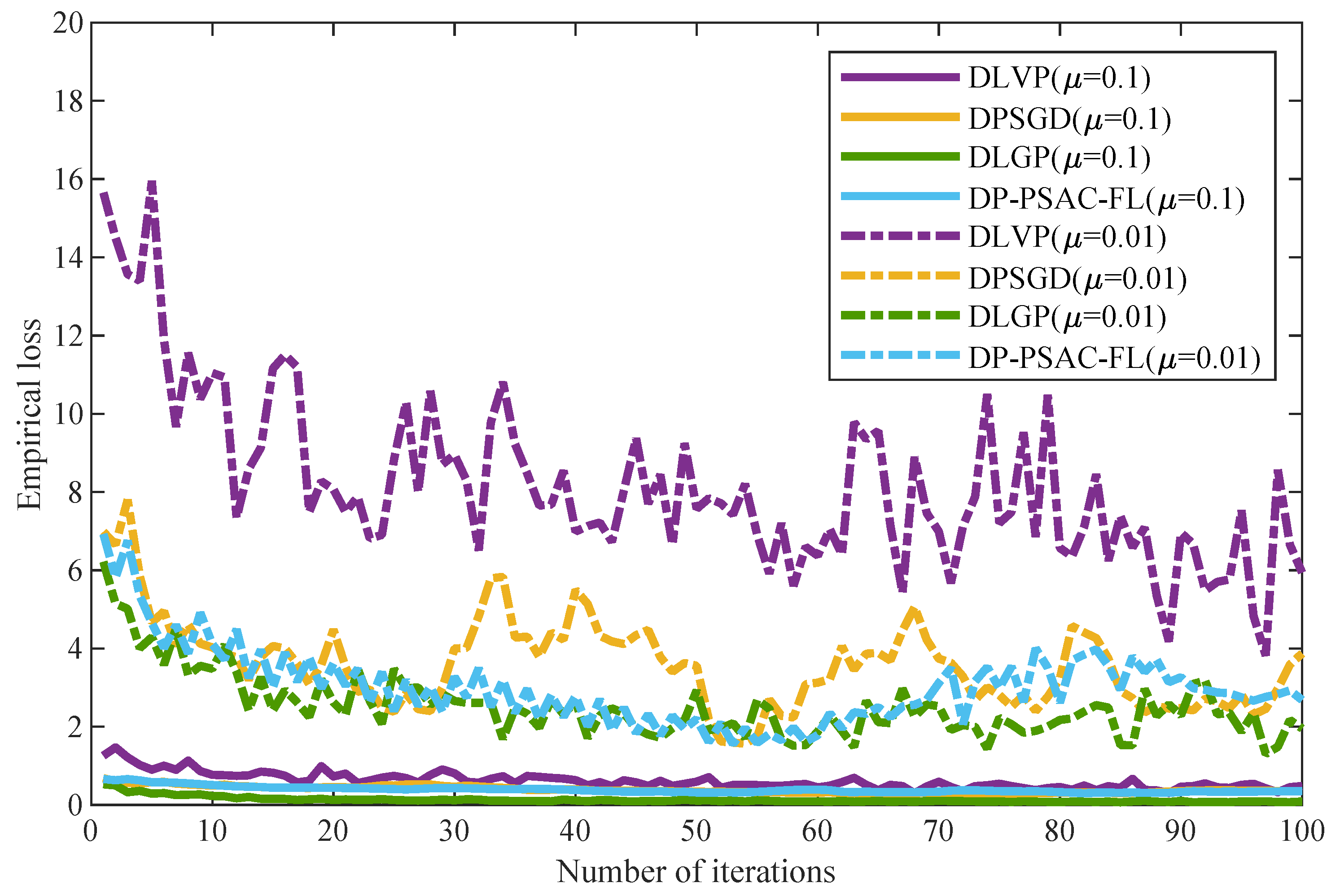

In practical deployments, the choice of the privacy budget $\varepsilon$ critically influences the trade-off between privacy guarantees and model utility. A smaller $\varepsilon$ enforces stronger privacy protection by increasing the noise scale according to Equation (26), which can degrade model accuracy by obscuring the true parameter updates. For larger datasets, however, the impact of the noise is mitigated by the reduced $\ell_2$-sensitivity per data point: because the sensitivity is inversely proportional to the local dataset size $m_i$, a tenfold increase in $m_i$ reduces the noise standard deviation tenfold at the same $\varepsilon$ and $\delta$. Consequently, in data-rich environments, stringent privacy settings can be adopted with a much smaller loss in utility, as the model benefits from greater statistical stability.
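The scaling described above follows directly from Equations (25) and (26). A quick numerical illustration, assuming the classical Gaussian-mechanism calibration and the illustrative (hypothetical) values $\mu = 1$ and $\delta = 10^{-5}$:

```python
import math

def noise_std(m, mu, epsilon, delta):
    """sigma = (2 / (m * mu)) * sqrt(2 ln(1.25 / delta)) / epsilon."""
    return (2.0 / (m * mu)) * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon

sizes = (100, 1_000, 10_000)
for epsilon in (0.1, 0.5, 0.9):
    stds = [noise_std(m, mu=1.0, epsilon=epsilon, delta=1e-5) for m in sizes]
    print(f"epsilon={epsilon}: " + ", ".join(f"m={m}: {s:.2e}" for m, s in zip(sizes, stds)))
```

Each tenfold increase in $m_i$ divides $\sigma_i$ by ten, while tightening $\varepsilon$ from 0.5 to 0.1 multiplies it by five.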

4.3. Computational Complexity Analysis

The computational complexity of DLGP stems primarily from local parameter updates using Newton’s method, global parameter aggregation, dual variable updates, and the additional privacy protection mechanism. Consider a federated system with $n$ edge nodes, each maintaining a local dataset of size $m_i$ (assumed equal to $m$ for simplicity), feature dimension $d$, $K$ ADMM iterations, and at most $T$ Newton iterations per ADMM round.

The local parameter update is the most computationally intensive component of the algorithm. At each edge node during every ADMM iteration, Newton’s method is employed to minimize the local augmented Lagrangian function. The gradient computation according to Equation (16) requires evaluating the logistic loss gradient, regularization term, and constraint penalties across all $m$ local samples, resulting in $O(md)$ complexity. The Hessian matrix calculation following Equation (17) involves summing the outer products of all samples, yielding $O(md^2)$ complexity. The Newton update step in Equation (15) requires solving a $d \times d$ linear system through matrix inversion, which incurs $O(d^3)$ complexity. With $T$ Newton iterations per ADMM round, the total local computation complexity per node becomes $O\big(T(md^2 + d^3)\big)$.

The privacy protection mechanism introduces additional computational overhead that must be quantified. The $\ell_2$-sensitivity derived in Equation (25) is a closed-form expression computable in $O(1)$ time. Gaussian noise generation according to Equation (26) requires sampling $d$ independent random values, resulting in $O(d)$ complexity. Although these privacy operations add incremental costs, they are negligible compared to the local optimization overhead.

Global aggregation and dual variable updates contribute moderately to the overall computational burden. The server-side global parameter aggregation in Equation (27) computes the average of $n$ perturbed local parameters, requiring $O(nd)$ operations. The dual variable updates at each edge node following Equation (28) involve simple vector operations with $O(d)$ complexity per node, totaling $O(nd)$ across all nodes.

The overall computational complexity of DLGP across $K$ ADMM iterations combines all these components. The dominant factor is the local Newton optimization, which scales as $O\big(KnT(md^2 + d^3)\big)$. The privacy protection and coordination operations contribute $O(Knd)$, which is negligible for practical problem sizes, where $md^2$ or $d^3$ far exceeds $d$. The specific complexity characteristics depend on the relationship between the feature dimension $d$ and the sample size $m$. When $d < m$ (typically $d \ll m$), the $O(KnTmd^2)$ term dominates, making the complexity linear in the sample size. When $d \ge m$, the $O(KnTd^3)$ term from matrix inversion becomes predominant.
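To see which term dominates for concrete problem sizes, the leading-order counts above can be tabulated with constant factors dropped. This is an illustrative cost model only (the helper names are hypothetical); it compares asymptotic terms, not measured runtime.

```python
def dlgp_cost_terms(n, m, d, K, T):
    """Leading-order operation counts from Section 4.3 (constant factors dropped)."""
    return {
        "gradient": K * n * T * m * d,          # Equation (16)
        "hessian": K * n * T * m * d ** 2,      # Equation (17)
        "newton_solve": K * n * T * d ** 3,     # Equation (15)
        "privacy": K * n * d,                   # O(1) sensitivity + O(d) noise per node
        "coordination": K * n * d,              # Equations (27) and (28)
    }

def dominant_term(n, m, d, K, T):
    costs = dlgp_cost_terms(n, m, d, K, T)
    return max(costs, key=costs.get)

print(dominant_term(n=10, m=10_000, d=50, K=100, T=5))   # d < m: "hessian"
print(dominant_term(n=10, m=100, d=1_000, K=100, T=5))   # d > m: "newton_solve"
```

The crossover follows from $md^2 \ge d^3 \iff m \ge d$.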

Communication overhead is another critical aspect of distributed algorithm performance. In each ADMM iteration, every edge node uploads a perturbed $d$-dimensional parameter vector and downloads the $d$-dimensional global parameter, resulting in $O(nd)$ communication per round. The total communication overhead over $K$ iterations is $O(Knd)$, which is identical to that of the baseline ADMM algorithm without privacy protection. The privacy mechanism therefore does not increase communication overhead.
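As a concrete illustration of the $O(Knd)$ count, the sketch below tallies transmitted scalars for hypothetical sizes, assuming 8-byte (float64) parameters. Because the noise is added in place, the perturbed vector has the same length as the clean one, so the tally is the same as for non-private ADMM.

```python
def communication_scalars(n, d, K):
    """Scalars exchanged over K rounds: each of n nodes uploads d and downloads d per round."""
    per_round = n * d + n * d
    return K * per_round

scalars = communication_scalars(n=10, d=100, K=50)
print(scalars, "scalars =", scalars * 8 / 1e6, "MB at float64")
```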

Therefore, compared to the standard ADMM framework, DLGP introduces minimal additional computational burden. The privacy operations add only $O(Knd)$ to the overall complexity, which is dominated by the local optimization costs.

The computational complexity analysis shows that the DLGP algorithm remains practically efficient in edge computing environments. As summarized in Table 2, the dominant computational cost stems from the local Newton optimization, specifically the Hessian computation ($O(md^2)$) and matrix inversion ($O(d^3)$) operations. The privacy protection mechanism introduces only linear overhead ($O(d)$ per node per iteration), which is negligible compared to the polynomial terms of the local optimization. Importantly, the communication overhead remains at $O(nd)$ per iteration, involving only the transmission of parameter vectors between the edge nodes and the central server. This complexity profile supports the algorithm’s scalability while retaining the differential privacy guarantees.