1. Introduction

In recent years, the rapid advancement of the Internet of Things (IoT) and Artificial Intelligence (AI) technologies has significantly promoted the widespread adoption of voice-enabled AI assistants in various IoT terminals, such as smart homes and wearable devices [1]. To further enhance the battery life of portable IoT terminals, Keyword Spotting (KWS) has emerged as a critical technology. Serving as the intelligent wake-up engine for AI assistants, KWS ensures that IoT terminals can intelligently switch between sleep and wake states during user operation intervals, making it an indispensable component [2].

As KWS operates continuously, its power consumption constitutes a major portion of the energy expenditure of AI assistants and the entire IoT terminal [2]. Achieving high recognition accuracy while effectively reducing KWS power consumption is a significant challenge actively explored by both academia and industry. Early KWS systems primarily relied on traditional machine learning methods, such as Gaussian Mixture Models (GMMs) and Hidden Markov Models (HMMs), for speech recognition. These methods are susceptible to interference from noise, accent variations, or speaker changes, resulting in relatively low recognition rates. Recently, with the rise of Deep Neural Networks (DNNs), DNN techniques with superior recognition capabilities have become the mainstream trend in the KWS field [3]. However, DNNs are inherently computation- and memory-intensive. Deploying DNN-KWS modules on hardware resource-constrained portable IoT terminals is an extremely challenging task [4]. This challenge aligns with the core trade-off in Rate-Distortion Theory, where one seeks the minimal bit-rate (model size/complexity) for a given level of accuracy (distortion).

Network quantization is an effective method for compressing DNN models and addressing deployment difficulties [5]. It can be viewed as an efficient encoding strategy guided by information theory: network parameters and activations are represented with as few bits as possible while limiting the resulting information loss. M. Shah et al. [6] first proposed a quantized three-layer Convolutional Neural Network (CNN) design for KWS tasks. Compared with a floating-point three-layer CNN, the quantized CNN achieved 89.5% recognition accuracy using only 6-bit weights and 16-bit fixed-point computations, consuming merely 14% of the power of the full-precision model. This work strongly demonstrates the potential of quantization in reducing DNN-KWS power consumption.

Binary network quantization can simplify the complex multiply–accumulate (MAC) operations in convolution into efficient XNOR logic operations, thereby maximizing the reduction in computational complexity and memory resource consumption in DNN-KWS. Binary Neural Networks (BNNs) have therefore become a focal point for researchers in the DNN-KWS field [7]. Binarization can be conceptualized as transmitting original high-precision information through an extremely low-capacity channel. The core challenge is to maintain sufficiently high mutual information between the input features and the final decision under this constraint. Zheng et al. [8] proposed a BNN-KWS design based on a two-layer fully connected network. This design reduced the computational load of the KWS module by 94% through network binarization while maintaining 91% recognition accuracy. To further reduce the number of XNOR logic units in BNN-KWS, Liu et al. [9] introduced approximate computing into the BNN-KWS design. Their design incorporated a Signal-to-Noise Ratio (SNR) prediction module, enabling adaptive dual-mode configuration of standard and approximate computation, thereby reducing the computational complexity of BNN-KWS to approximately 1.2% of the full-precision model. However, the approximate computing strategy caused the recognition accuracy of BNN-KWS to drop to 87.9%. To improve the computational precision of BNN-KWS, Gong et al. [10] introduced a quality assessment mechanism into the approximate computing BNN-KWS design, achieving 88.1% recognition accuracy with only 1.6% of the computational complexity of the full-precision model. Subsequently, Lin et al. [11] increased the KWS recognition rate to 95% by appropriately increasing the network depth.

These network binarization methods effectively reduce DNN-KWS power consumption by lowering the computational complexity of the DNN module. However, since both parameters and activations in binarized networks are represented by only 1 bit, the model’s expressive power is limited, and optimization is challenging [12]. Consequently, the recognition rates of most existing BNN-KWS modules do not exceed 95% [13]. From an information theory standpoint, the 1-bit representation severely constrains the amount of information that can be transmitted per layer, potentially creating an information bottleneck during the forward pass. This leads to the loss of critical information and often results in output probability distributions with high Shannon entropy, indicating significant uncertainty. In practical applications, KWS modules with lower recognition rates experience frequent false wake-ups, increasing the average power consumption of AI assistants [13]. To solve this problem, this paper proposes a speech-enhanced BNN model, namely the Probability Smoothing Enhanced Binarized Neural Network for KWS (PSE-BNN), which improves BNN-KWS recognition accuracy by employing smoothing filtering to reduce noise during the recognition process. The design is inspired by the information processing principle of utilizing temporal correlation to reduce uncertainty. The main innovative contributions of this work are as follows:

Probability Smoothing Enhanced Binarized Neural Network (PSE-BNN): We propose a novel PSE-BNN model featuring a two-layer hierarchical architecture. The first layer takes Mel-Frequency Cepstral Coefficients (MFCCs) as inputs to extract preliminary probability features. The second layer leverages temporal correlations between consecutive speech frames to apply smoothing filtering to the outputs of the first layer. It acts as an SNR enhancer, using the mutual information between adjacent frames to reduce the conditional entropy of the current frame’s output and thereby enhance keyword recognition accuracy. Evaluated on the open-source Google Speech Commands Dataset (GSCD) [14], the PSE-BNN achieves a recognition accuracy of 97.29%. The entropy of the output probability distribution is significantly reduced after smoothing.

FPGA-Based Hardware Implementation: We designed and implemented an efficient hardware circuit for the PSE-BNN model on an FPGA. Optimizations include employing approximate computing and the Coordinate Rotation Digital Computer (CORDIC) algorithm so that the MAC operations in the network and in the newly added smoothing filter layer are replaced with logic operations. Implemented on the Xilinx VC707 development board (Xilinx, Inc., San Jose, CA, USA), the PSE-BNN module occupies only 0.64% of LUTs and 0.14% of FFs, and uses no DSP resources. This design achieves efficient low-power information processing, aligning with the fundamental principles of information theory concerning energy, computation, and information.

Comprehensive Evaluation: We present detailed software and hardware-in-the-loop (HIL) test results, demonstrating the model’s high accuracy and low resource utilization, significantly outperforming state-of-the-art designs in both metrics (accuracy +1.93%, resources −65%).

4. FPGA-Based Hardware Implementation of PSE-BNN

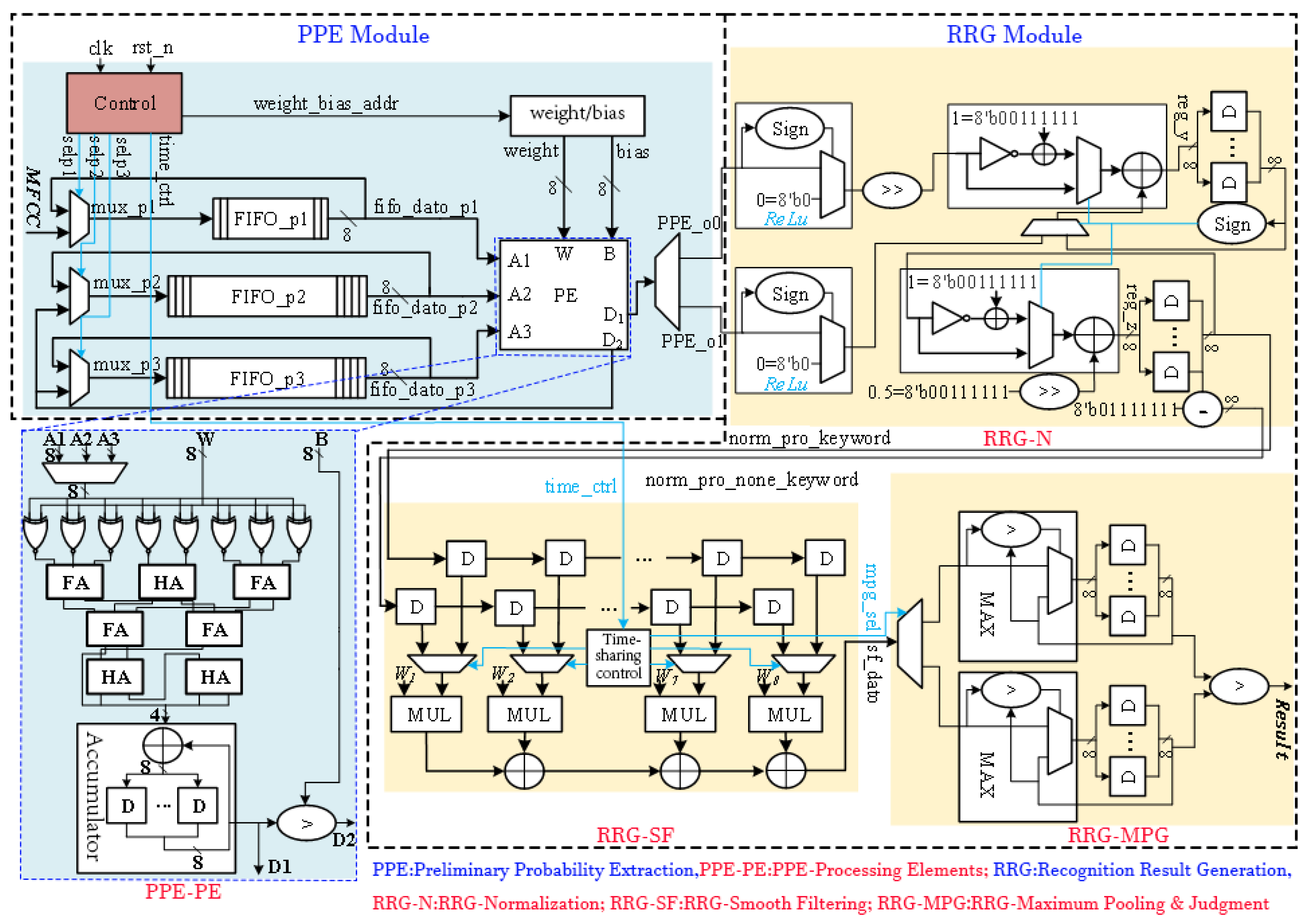

This section presents the hardware design of the proposed PSE-BNN model, with the specific circuit architecture shown in Figure 3. The goal of the hardware implementation is to physically realize the aforementioned information processing flow efficiently, ensuring reliable processing of the information stream under low-power and low-latency constraints.

4.1. PPE Module Implementation

The PPE module receives MFCC data and generates preliminary probabilities, handling the computations of the Preliminary Probability Extraction layer. A circular buffer module caches the input data and the intermediate data from the FC1 and FC2 layers. This buffer is pivotal for enabling the sequential reuse of a single Processing Element (PE) across all three FC layers: by temporarily storing the intermediate results, it provides the data interface needed for the time-multiplexed execution of FC1, FC2, and FC3, together with the intermediate Sign layers, on the same physical hardware. This reuse strategy exemplifies resource-optimized allocation in information processing, using limited hardware resources to handle a defined information processing task.

The circular buffer module consists of one FIFO with a depth of 50 and two FIFOs with a depth of 256 each, caching the input data and the FC1/FC2 intermediate data, respectively. A multiplexer (MUX) controls data reads and writes. While a single PSE-BNN computation is ongoing, each FIFO recirculates its own output data by writing it back to its input. Upon completion, new audio feature values are loaded into the FIFOs.

The PE unit primarily performs the accumulation and comparison operations described by Equation (3), as shown in the circuit structure within Figure 3. Input signals A1, A2, and A3 correspond to the audio feature input vector and the intermediate data vectors from the FC1 and FC2 layers, respectively. W and B are the input weight vector and bias term. Output signals include the raw output D1 from FC3 and the binary activation output D2, which is reused for the FC1/FC2 layers. A Control module manages a MUX to route D2 to the appropriate FIFO. Within the PE: (1) An array of 8 XNOR gates performs single-bit vector multiplication between inputs and weights; (2) A Wallace tree adder (composed of 3 half-adders and 4 full-adders) sums the product vectors; (3) An accumulator sums multiple groups of these sums to yield the convolution result D1; (4) A numerical comparator compares D1 with the bias B to generate the single-bit output D2. The XNOR parallel array enables high-speed, low-power forward propagation of information.
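The four PE stages listed above can be mirrored in a short software model. The following is a minimal Python sketch, not the authors' RTL: the function names (`xnor_popcount8`, `pe_neuron`, `signed_dot`), the packing of inputs into 8-bit words, the encoding of bit 1 as +1 and bit 0 as −1, and the use of `>=` in the comparator are all assumptions made for illustration.

```python
def xnor_popcount8(a: int, w: int) -> int:
    """XNOR two 8-bit words and count the matching bit positions."""
    matches = ~(a ^ w) & 0xFF          # XNOR, restricted to 8 bits
    return bin(matches).count("1")     # popcount (role of the adder tree)


def pe_neuron(a_words, w_words, bias):
    """Accumulate the 8-bit group sums, then compare against the bias.

    Returns (d1, d2): d1 is the accumulated sum, d2 the 1-bit activation.
    """
    d1 = 0
    for a, w in zip(a_words, w_words):
        d1 += xnor_popcount8(a, w)     # accumulator over groups of 8 bits
    d2 = 1 if d1 >= bias else 0        # comparator (assumed >= convention)
    return d1, d2


def signed_dot(a_words, w_words):
    """Reference dot product with bits mapped to +1/-1 (assumed encoding)."""
    total = 0
    for a, w in zip(a_words, w_words):
        for k in range(8):
            x = 1 if (a >> k) & 1 else -1
            y = 1 if (w >> k) & 1 else -1
            total += x * y
    return total


a = [0b10110010, 0b01101100]
w = [0b10010110, 0b11101001]
d1, d2 = pe_neuron(a, w, bias=8)
# For n bits in total, the signed dot product equals 2 * popcount - n.
assert signed_dot(a, w) == 2 * d1 - 16
```

The final assertion checks the identity that lets an XNOR-and-count datapath stand in for a multiply-accumulate over ±1 values.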

4.2. RRG Module Implementation

The RRG module implements the Recognition Result Generation layer (Figure 2), comprising three submodules: Normalization (RRG-N), Smooth Filtering (RRG-SF), and Maximum Pooling and Judgment (RRG-MPG). These three submodules work together to perform information refinement on the preliminary probability sequence.

The RRG-N submodule first performs the ReLU transformation through sign bit determination and multiplexer circuits, where negative inputs are set to 0 and non-negative inputs remain unchanged. Subsequently, normalization is applied to the output. Since the PSE-BNN classification has two categories, the normalized probability can be obtained through a single division operation. Normalization ensures that the probability values are valid (summing to 1), allowing for valid entropy calculation. In the circuit design, this division is implemented using an 8-iteration CORDIC algorithm. Each CORDIC iteration operates on the dividend and the divisor under a directional control factor, and the iteration result constitutes the quotient. In our design, the CORDIC division circuit achieves 2× numerical scaling through shifters, shares circuit resources between addition and subtraction using configurable adder-subtractor units, and selects the specific arithmetic operation through control signals. Notably, subtraction is realized by adding the two’s complement of the input. After 8 iterations, the optimized normalization process described in Equation (4) completes, yielding the normalized keyword and non-keyword probabilities.
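To make the shift-and-add division concrete, the sketch below uses the textbook linear-mode (vectoring) CORDIC recurrence: at step n the residual is reduced by the divisor scaled by 2^(−n), and the quotient accumulates ±2^(−n) according to the sign of the residual. This is an illustrative assumption; the exact recurrence and scaling of the paper's circuit may differ. The names `cordic_divide` and `normalize_two_class` are hypothetical, and floating-point values stand in for the fixed-point datapath.

```python
def cordic_divide(dividend: float, divisor: float, iterations: int = 8) -> float:
    """Approximate dividend / divisor with shift-and-add CORDIC steps.

    Assumes divisor > 0 and |dividend / divisor| <= 2 (convergence range).
    """
    if divisor <= 0:
        raise ValueError("this sketch assumes a positive divisor")
    y = dividend                           # residual, driven towards zero
    z = 0.0                                # quotient accumulator
    for n in range(iterations):
        step = 2.0 ** -n                   # a right shift by n in hardware
        d = 1 if y >= 0 else -1            # directional control factor
        y -= d * divisor * step            # add or subtract shifted divisor
        z += d * step                      # add or subtract the step itself
    return z


def normalize_two_class(a: float, b: float):
    """Two-class normalization p = a / (a + b) using one CORDIC division."""
    p = cordic_divide(a, a + b)
    return p, 1.0 - p


p_kw, p_non = normalize_two_class(3.0, 1.0)
# With 8 iterations the quotient error is bounded by 2**-7.
assert abs(p_kw - 0.75) <= 2 ** -7
```

Because the two probabilities sum to 1, only one division is needed; the second output follows by subtraction.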

In the RRG-SF submodule, this design employs two shift registers to store 16 independent normalized probabilities (8 consecutive frames for both keyword and non-keyword categories). The shift registers form a short-term memory unit, preserving the temporal context of information. The convolution calculation between probabilities and weight vectors is implemented through multiply–accumulate (MAC) circuits. By utilizing multiplexers to reuse partial computation circuits, time-division processing for dual-category probability filtering is achieved, ultimately implementing the smoothing filter computation defined in Equation (5).
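The behavior of the two shift registers and the shared MAC can be sketched as below. This is a minimal Python model under stated assumptions: the class name `SmoothingFilter` is hypothetical, the registers are assumed to start at zero, and uniform weights of 1/8 are used only for illustration, since the actual weight vector of Equation (5) is not reproduced in this section.

```python
from collections import deque


class SmoothingFilter:
    """Two 8-deep shift registers feeding one shared weighted sum."""

    def __init__(self, weights):
        self.weights = list(weights)
        depth = len(self.weights)
        # One register per category, initialised to zero (assumption).
        self.kw = deque([0.0] * depth, maxlen=depth)
        self.non = deque([0.0] * depth, maxlen=depth)

    def _mac(self, register):
        """Multiply-accumulate of the stored probabilities and weights."""
        acc = 0.0
        for w, p in zip(self.weights, register):
            acc += w * p
        return acc

    def push(self, p_kw, p_non):
        """Shift in one frame and return both smoothed probabilities."""
        self.kw.append(p_kw)      # oldest entry drops out automatically
        self.non.append(p_non)
        # The same MAC routine serves both categories in turn,
        # mirroring the time-division reuse described in the text.
        return self._mac(self.kw), self._mac(self.non)


smoother = SmoothingFilter([0.125] * 8)   # uniform weights (assumed)
for _ in range(8):
    smoothed = smoother.push(1.0, 0.0)
glitch = smoother.push(0.0, 1.0)          # a single disturbed frame
```

In this toy run a single disturbed frame moves the smoothed keyword probability only from 1.0 to 0.875, which is the damping effect the filter is meant to provide.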

The RRG-MPG submodule acquires the maximum value of smoothed probabilities across 4 consecutive frames through a maximum value extraction circuit, then stores this result using register arrays, thereby implementing the confidence score computation specified in Equation (6). Finally, a decision comparator performs comparative analysis between keyword and non-keyword confidence scores to generate the final keyword recognition output (Equation (7)).
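A software analogue of the maximum pooling and judgment stage is sketched below. The class name `MaxPoolDecision` is hypothetical, and the strict `>` comparison is an assumed tie-breaking rule; the precise forms of Equations (6) and (7) are not reproduced in this section.

```python
from collections import deque


class MaxPoolDecision:
    """Sliding maximum over recent frames followed by a comparison."""

    def __init__(self, window: int = 4):
        self.kw = deque(maxlen=window)    # smoothed keyword probabilities
        self.non = deque(maxlen=window)   # smoothed non-keyword probabilities

    def push(self, s_kw: float, s_non: float) -> bool:
        """Store one frame and return True when a keyword is declared."""
        self.kw.append(s_kw)
        self.non.append(s_non)
        conf_kw = max(self.kw)            # confidence score, keyword
        conf_non = max(self.non)          # confidence score, non-keyword
        return conf_kw > conf_non         # decision comparator (assumed >)


detector = MaxPoolDecision()
decisions = [detector.push(k, n)
             for k, n in [(0.2, 0.8), (0.9, 0.1), (0.3, 0.7)]]
```

Taking the maximum over a short window lets one strongly confident frame carry the decision for the frames around it.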

4.3. Timing Characteristics of the PSE-BNN Circuit System

The timing diagram of the proposed PSE-BNN circuit system is illustrated in Figure 4. The system operates in a sequential execution mode rather than a pipelined one. This design choice is a direct consequence of the hardware-reuse strategy employed in the PPE module, where a single Processing Element (PE) is time-multiplexed across the FC1, FC2, and FC3 layers. Consequently, the system must complete the processing of one entire speech frame before commencing computation on the next. The PPE module first requires 514 clock cycles to complete the preliminary probability extraction for a single speech frame. Subsequently, the RRG module performs its normalization, smoothing, and decision-making within 22 clock cycles. Thus, the complete keyword recognition functionality for one frame totals 536 clock cycles. This fixed latency defines the total processing time from information input to decision output. While this non-pipelined approach does not support the concurrent processing of multiple consecutive frames, it achieves the design goal of ultra-low resource utilization. The resulting latency is fully sufficient for real-time KWS applications, as the processing time per frame remains significantly shorter than the typical duration of a speech frame itself, ensuring no data backlog occurs during continuous operation.

It is noteworthy that although the RRG module introduces additional processing steps, it completes its operations in merely 22 clock cycles, accounting for only approximately 4% of the total latency. This demonstrates that the proposed smoothing mechanism delivers a significant improvement in accuracy while introducing negligible latency overhead.
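The cycle budget above can be checked with a few lines of arithmetic. In the Python sketch below, the cycle counts come from the text, while the 1 MHz clock passed to `frame_latency_us` is a purely hypothetical value chosen for illustration, since the operating frequency is not stated in this section.

```python
PPE_CYCLES = 514    # preliminary probability extraction (from the text)
RRG_CYCLES = 22     # normalization, smoothing, and decision (from the text)

total_cycles = PPE_CYCLES + RRG_CYCLES
rrg_share = RRG_CYCLES / total_cycles


def frame_latency_us(clock_hz: float) -> float:
    """Per-frame latency in microseconds at a given clock frequency."""
    return total_cycles * 1e6 / clock_hz


# Hypothetical 1 MHz clock, used only to put the cycle count in time units.
latency_at_1mhz = frame_latency_us(1_000_000)
```

At this assumed clock, one frame takes 536 µs, and the RRG stage contributes about 4.1% of the total cycles.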

5. Experiments and Results Analysis

To verify the effectiveness of the proposed PSE-BNN model, we conducted performance evaluations using the GSCD as the test benchmark.

First, the recognition accuracy of the PSE-BNN model was validated through co-simulation of software and hardware implementations. The software testing was performed on a PC configured with the following specifications:

CPU: Intel® Core™ i7-11800H

RAM: 16 GB

GPU: NVIDIA GeForce RTX 3050 (8 GB VRAM)

The software model was implemented and trained using a custom framework built in Python 3.7.

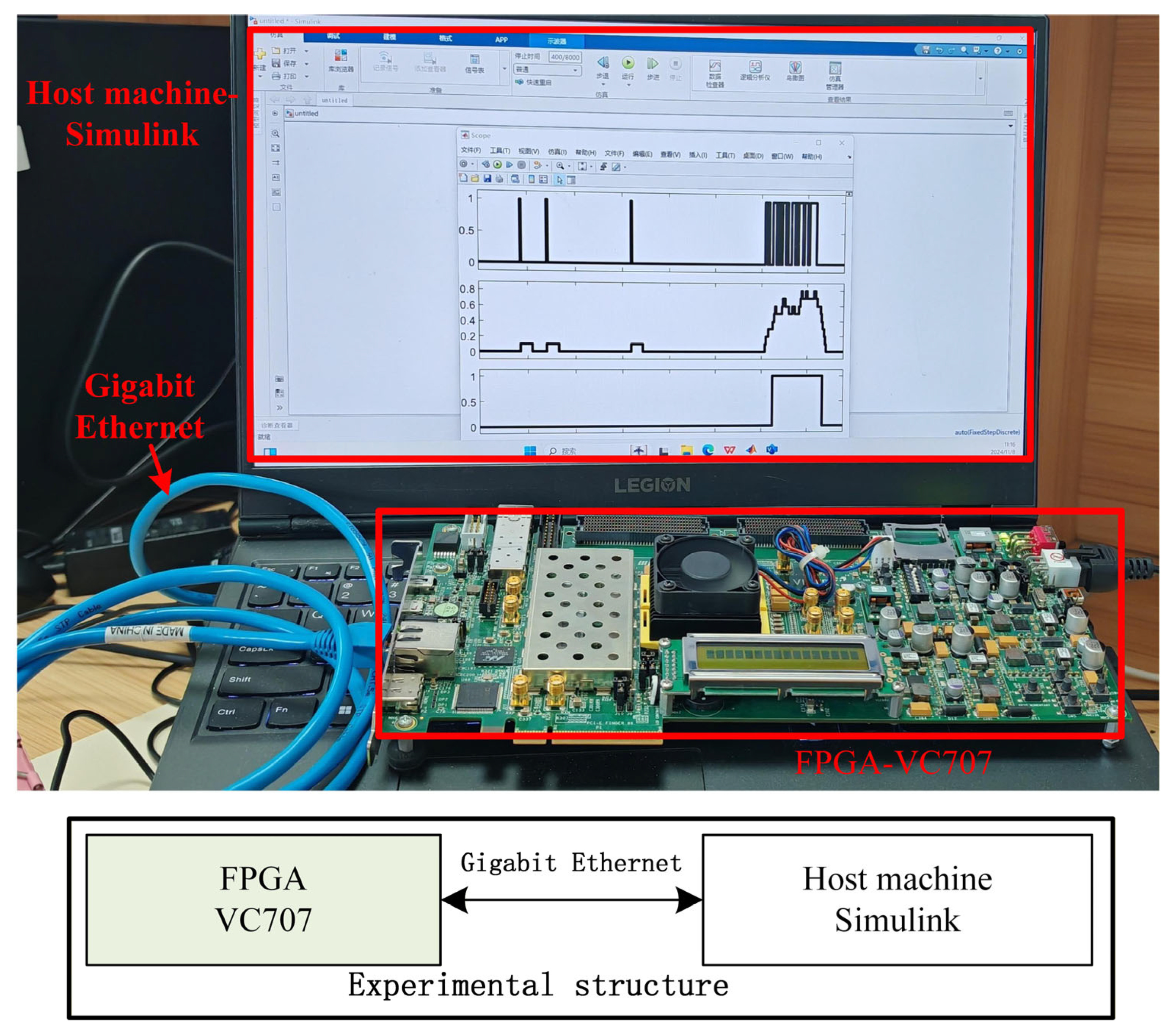

For hardware validation, a Hardware-in-the-Loop (HIL) test platform was implemented, as illustrated in Figure 5. The platform comprises a Xilinx FPGA VC707 development board interfaced with a host PC via Gigabit Ethernet. The PSE-BNN model was deployed on the VC707 FPGA, and the HIL platform was utilized to evaluate the hardware recognition performance of the keyword spotting (KWS) system. Given the low resource utilization of the proposed PSE-BNN on the Virtex-7 FPGA, the design is also suitable for deployment on smaller FPGAs, which are more cost-effective for edge devices.

Subsequently, this work analyzes the hardware implementation performance of the proposed KWS system. The hardware resource utilization of the KWS system is evaluated and compared with existing hardware designs.

5.1. Network Recognition Performance Analysis

5.1.1. Software Testing Performance

In the experiments, we first converted raw audio from the GSCD into binarized MFCC features using the VoiceBox speech processing toolbox in MATLAB. MFCC feature extraction itself is a compression and reshaping of speech spectral information, aiming to preserve the information most important for human hearing or for the classifier. These features were subsequently fed into the PSE-BNN model for processing.

A multi-group experimental strategy was adopted: each of the 30 keywords in the GSCD served sequentially as the positive class, while the remaining keywords and noise samples constituted the negative class. Thirty independent experiments were conducted, with the mean accuracy across all groups serving as the model evaluation metric. During network training, the learning rate was set to 0.001 with 20 epochs and a batch size of 500.
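The one-versus-rest protocol described above can be outlined as follows. This is a minimal Python sketch with hypothetical helper names (`one_vs_rest_labels`, `accuracy`, `mean_accuracy`) and toy data; it is not the authors' custom framework, and the hard-coded predictions are placeholders for the output of a trained model.

```python
def one_vs_rest_labels(labels, positive_keyword):
    """Map multi-class keyword labels to 1 (positive) or 0 (negative)."""
    return [1 if label == positive_keyword else 0 for label in labels]


def accuracy(predictions, targets):
    """Fraction of samples whose prediction matches the binary target."""
    correct = sum(1 for p, t in zip(predictions, targets) if p == t)
    return correct / len(targets)


def mean_accuracy(per_keyword_accuracy):
    """Average the per-keyword accuracies into a single metric."""
    return sum(per_keyword_accuracy.values()) / len(per_keyword_accuracy)


# Toy labels and a stand-in classifier; a real run would train one
# model per keyword and evaluate it on held-out GSCD samples.
labels = ["yes", "no", "noise", "yes"]
results = {}
for keyword in ("yes", "no"):
    targets = one_vs_rest_labels(labels, keyword)
    predictions = [1, 0, 0, 1]          # placeholder model output
    results[keyword] = accuracy(predictions, targets)
overall = mean_accuracy(results)
```

With 30 keywords the loop would run 30 times, and `overall` would correspond to the mean accuracy used as the evaluation metric.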

Figure 6 presents the accuracy statistics of the PSE-BNN model on the GSCD. The results demonstrate that while recognition accuracy varies slightly across different keywords, the overall recognition accuracy reaches 97.29%.

5.1.2. Hardware Testing Performance

To validate the real-time performance of the proposed PSE-BNN model, a real-time HIL test platform was established. During testing, the PC transmits binarized MFCC features to the VC707 FPGA in real-time via MATLAB/Simulink 2022b. The VC707 performs PSE-BNN hardware computations and then transmits the results back to the PC through Ethernet for visualization in MATLAB/Simulink. This closed-loop test validates the correctness and real-time performance of the complete flow of information from the software environment to hardware processing and back.

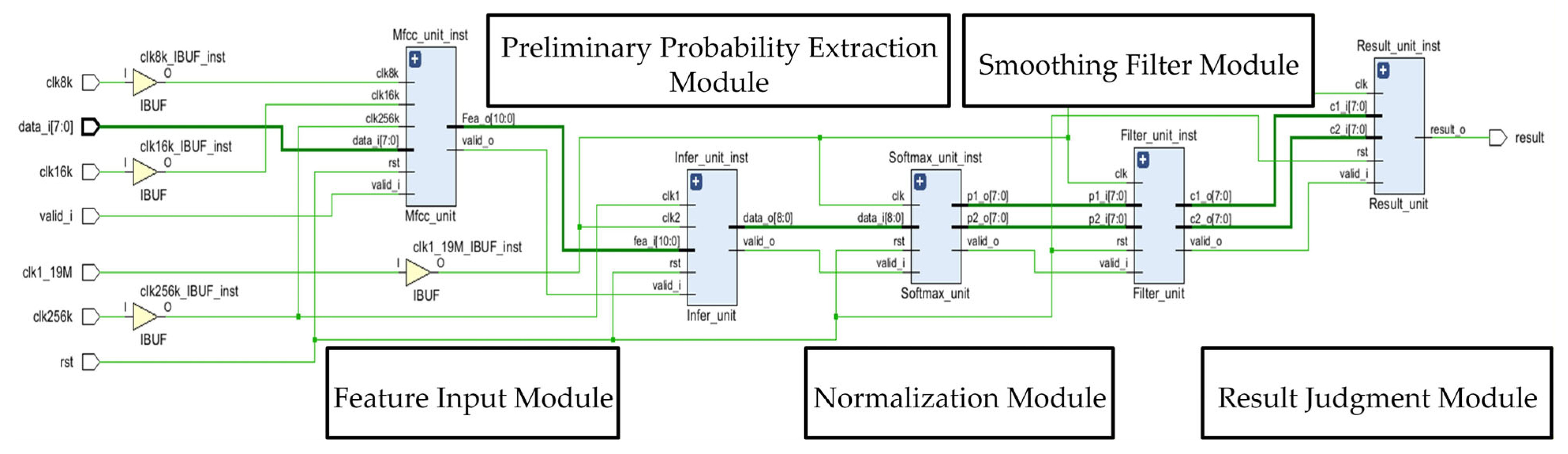

Figure 7 shows the RTL schematic diagram of the proposed system.

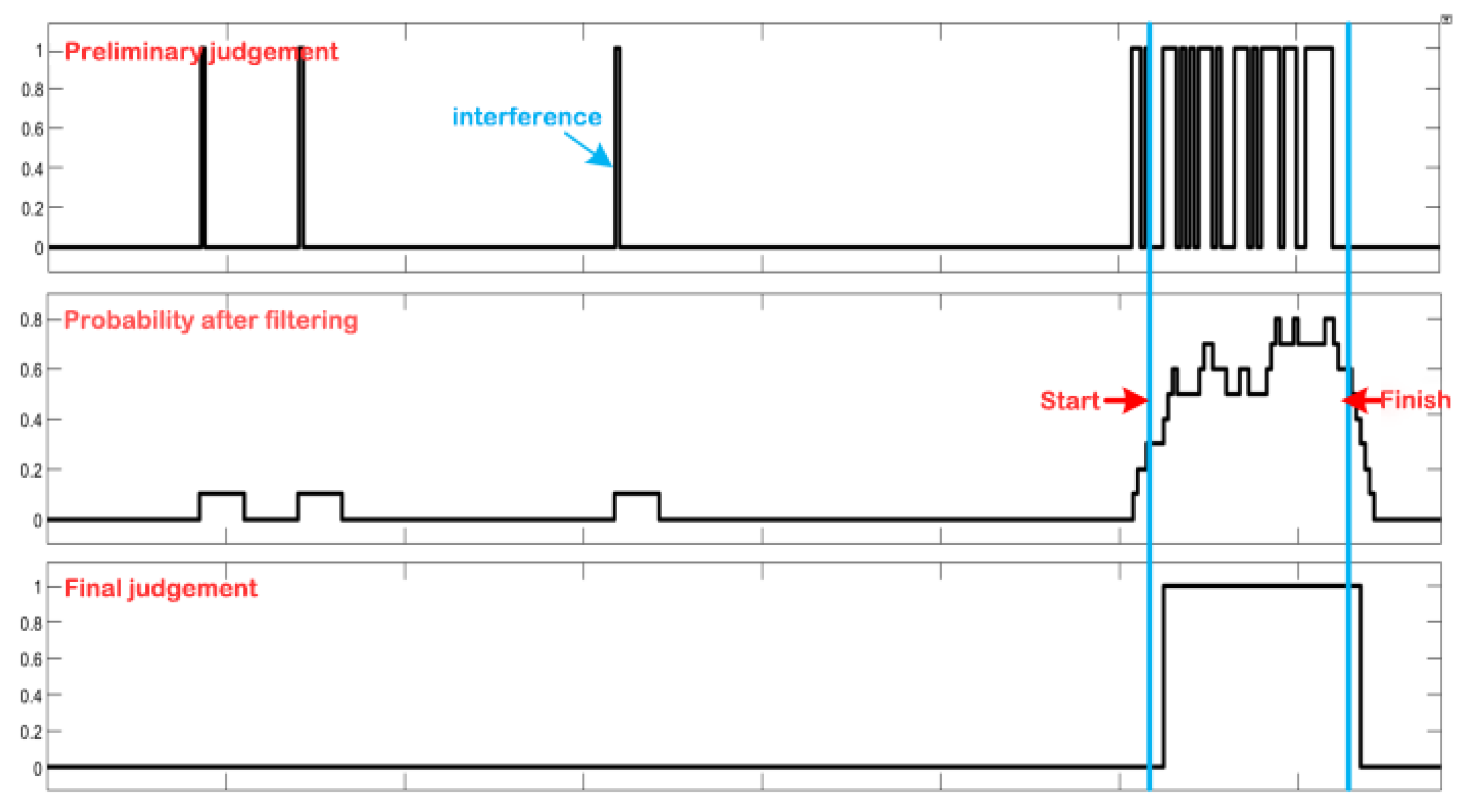

Figure 8 shows the actual processing waveform on the HIL platform. To validate the PSE-BNN model, the outputs of the PPE module, RRG-SF module, and RRG-MPG module in the hardware implementation are transmitted back to the PC in real-time. The waveform demonstrates that the smoothing processing effectively eliminates interference from noise and disturbance signals in continuous speech segments, thereby generating more accurate keyword recognition results. This visually demonstrates the process of information being refined layer by layer and entropy being gradually reduced.
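The entropy argument can be illustrated numerically for the two-class case. The Python sketch below uses invented frame probabilities purely for illustration (they are not measurements from the HIL platform), and a plain average stands in for the smoothing filter. It shows the favorable case in which neighboring frames agree; averaging does not reduce entropy for every possible input sequence.

```python
import math


def binary_entropy(p: float) -> float:
    """Shannon entropy (in bits) of a two-class distribution (p, 1 - p)."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


# Invented per-frame keyword probabilities with two noisy frames.
raw = [0.9, 0.6, 0.95, 0.9, 0.55, 0.92, 0.9, 0.94]
smoothed = sum(raw) / len(raw)            # uniform averaging (assumed)

noisy_frame_entropy = binary_entropy(0.55)
smoothed_entropy = binary_entropy(smoothed)
```

Here the noisy frame at 0.55 is close to maximal uncertainty, while the averaged value lies further from 0.5 and therefore has lower entropy.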

5.2. Analysis of System Hardware Implementation Performance

5.2.1. Hardware Resource Utilization

Table 2 details the resource utilization of the proposed PSE-BNN/KWS system. Since the convolutional operations in the PSE-BNN model involve no multiplication, the design requires no DSP resources. Furthermore, through approximation-optimized design, the implementation occupies only 1939 LUTs, corresponding to a 0.64% overall utilization rate. The single-bit characteristic of the PSE-BNN model significantly reduces memory requirements for the KWS system. By employing data concatenation storage, the design minimizes Block RAM (BRAM) usage, requiring only 6.5 BRAM blocks (equivalent to 234 Kb, assuming 36 Kb blocks). The extremely low resource utilization indicates that this design achieves a very high information processing energy efficiency ratio, i.e., the amount of information processing (or recognition performance achieved) per unit of hardware resource consumed is very high.
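The utilization figures can be cross-checked with simple arithmetic. In the Python sketch below, the used-resource counts come from the text, while the total of 303,600 LUTs is an assumption based on the XC7VX485T device fitted to the VC707 board.

```python
BRAM_BLOCK_KBIT = 36        # block size assumed in the text
BRAM_BLOCKS_USED = 6.5      # from the text
LUTS_USED = 1939            # from the text
DEVICE_LUTS = 303_600       # XC7VX485T on the VC707 (assumption)

bram_kbit = BRAM_BLOCKS_USED * BRAM_BLOCK_KBIT
lut_utilization_pct = 100 * LUTS_USED / DEVICE_LUTS
```

Under these assumptions the numbers agree with the reported 234 Kb of BRAM and 0.64% LUT utilization.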

Figure 9 illustrates the correlation between the number of fully connected (FC) layers and the resulting memory footprint and recognition accuracy. This trend is further corroborated by comparisons with prior implementations. For example, Liu [9] used a 4CONV + 1FC structure to achieve 92.6% accuracy with 21.91 KB memory, while the VLSI’18 [9] design, employing 4CONV + 2FC, reached 95% accuracy at 52 KB. Our architecture, which uses 3FC + 1CONV, attains a notably higher accuracy of 97.29% at 234 KB. These results confirm that augmenting the number of FC layers consistently improves accuracy, though at the cost of increased memory usage. This trade-off is intentional in our design, reflecting a deliberate choice to prioritize recognition accuracy for high-accuracy keyword spotting, even with moderate memory overhead.

5.2.2. Comparison with State-of-the-Art Works and Analysis

Table 3 presents a comprehensive comparative analysis of state-of-the-art hardware designs. Most existing hardware designs primarily utilize the GSCD, either version v1 or v2, focusing on word-level decision-making for practical KWS applications at the edge. Our KWS architecture achieves a classification accuracy of 97.29%, which represents a significant improvement of 1.93 percentage points over the current state-of-the-art design [15], despite utilizing a shallower configuration (3 fully connected layers and 1 convolutional layer) compared to its 7-layer convolutional architecture. This evidence highlights the effectiveness of fully connected layers in optimizing KWS accuracy.

Our trade-offs in the architecture become apparent when examining resource utilization. Our design increases the number of fully connected layers, necessitating a larger allocation of Block RAM (BRAM). To address this, we implemented layer-wise binarization of the three fully connected layers, resulting in a significant reduction in computational complexity while maintaining performance integrity. While binarization typically reduces memory, our PSE-BNN’s increased footprint stems from employing larger, more effective fully connected layers. This architectural choice, facilitated by the computational efficiency of 1-bit operations, allows us to surpass the accuracy of all prior BNN-based works [9,11,15] and even 8-bit non-BNN architectures [16,17]. Crucially, by implementing the model on an FPGA, we leverage distributed on-chip memory, which enables parallel data access and mitigates the typical latency penalty associated with larger models. When combined with pipelined/parallel logic and approximate computing, this approach not only accommodates the larger model but also enables a high throughput of 2.2 GOP/s at a low clock frequency, achieving the best performance in both accuracy and speed. In addition, to counteract any potential degradation in feature representation caused by binarization, we introduced low-bit quantized feature enhancement modules.

Furthermore, our hardware accelerator design incorporates an innovative approximate computing paradigm through algorithm-hardware co-design, which leads to a notable reduction in FPGA LUT utilization. The throughput, evaluated in giga-operations per second (GOP/s), follows the methodology of [18], where each binary XOR, negation, and addition is counted as a single operation. After a series of optimized design iterations, our implementation achieves rapid execution of KWS computations. Experimental results indicate that our design provides a throughput of 2.2 GOP/s, representing a 1.89× improvement over the current state-of-the-art solution [17] that uses GRU + FC.

Recent research has started to explore alternative neural architecture approaches for KWS system development, as seen in [16,17]. While these alternative architectures show reduced computational complexity, their reliance on high-precision arithmetic operations results in lower recognition accuracy and computational throughput compared to our implementation. These findings reinforce the conclusion that Binary Neural Networks (BNNs) continue to be the best architectural choice for edge-optimized digital KWS systems, especially when considering the trade-off between accuracy and throughput in resource-constrained environments.

Overall, the proposed PSE-BNN achieves a better trade-off, offering higher recognition accuracy and throughput while maintaining low hardware cost, making it highly suitable for resource-constrained edge KWS applications.

6. Conclusions

This paper presents a high-precision, high-throughput BNN-based keyword spotting (KWS) system optimized for FPGA implementation. By investigating, from an information theory perspective, the primary causes of limited recognition accuracy in existing BNN-KWS systems, we identified the root cause as information loss and increased uncertainty (entropy) induced by binarization. We introduced smooth filtering into the BNN architecture, resulting in a hardware-friendly Probability Smoothing Enhanced BNN (PSE-BNN) model. This model effectively reduces the entropy of the output probability distribution by utilizing temporal context information, thereby improving recognition confidence. Furthermore, the design incorporates resource-constrained optimization strategies tailored for edge-device deployment, including approximate computing and critical circuit reuse, which significantly reduce hardware complexity. Remarkably, the proposed system achieves a 2.29% improvement in recognition accuracy while operating without DSP resources and utilizing only 1939 LUTs (0.64% utilization rate). Both experimental and information-theoretic analyses demonstrate that the PSE-BNN model not only excels in traditional metrics but also shows significant advantages in the effectiveness and reliability (low entropy output) of information processing. This research provides a novel and theoretically grounded solution for achieving efficient and reliable information processing on edge computing devices with strictly constrained resources.