Abstract

Noisy permutation channels are used to model biological storage systems and communication networks. For noisy permutation channels with strictly positive and full-rank square matrices, this paper gives a new achievability bound that is tighter than existing bounds. To derive this bound, we use ϵ-packing with the Kullback–Leibler divergence as a distance and introduce a novel way to illustrate the overlapping relationship of error events. This new bound shows analytically that for such a matrix W, the logarithm of the achievable code size with a given blocklength n and error probability ϵ is closely approximated by , where , , and is a characteristic of the channel referred to as the channel volume ratio. Our numerical results show that the new achievability bound significantly improves the lower bound of channel coding. Additionally, the Gaussian approximation can replace the complex computations of the new achievability bound over a wide range of relevant parameters.

1. Introduction

The noisy permutation channel, consisting of a discrete memoryless channel (DMC) and a uniform random permutation block, was introduced in [1] and is a point-to-point communication model that captures the out-of-order arrival of packets. Such channels can be used as models of communication networks and DNA storage systems, where the ordering of the codeword does not carry any information. Previously, several advances have been made to asymptotic bounds, including the binary channels in [2], the capacity of full-rank DMCs in [1], and the converse bounds based on divergence covering [3,4].

The code lengths of practical codes in communication systems are on the order of hundreds to thousands of symbols, which invalidates the asymptotic assumptions of classical information theory. We initiate the study of new channel coding bounds to extend the information-theoretic results for noisy permutation channels to finite blocklength analysis. Finite blocklength analysis and finer asymptotics are important branches of research in information theory. Interest in this topic has been growing since the seminal works of [5,6,7]. These works suggest that the channel coding rate in the finite blocklength regime is closely related to the information density [8], i.e., the stochastic measure of the input distribution and channel noise. The second-order approximation of conventional channels involves the variance of the information density, which has been shown to approximate the channel coding rate at short blocklengths.

Because the codeword positions are randomly permuted in noisy permutation channels, conventional analysis techniques, specifically the dependence-testing (DT) bound and the random coding union (RCU) bound ([9] Theorems 17 and 18), become inapplicable. Since the messages are mapped to different probability distributions in noisy permutation channels [1], the only statistical information the receiver can use from the received codeword is which marginal distribution it was drawn from. Therefore, the finer asymptotics differ completely from those of conventional channels.

The main contributions of our work are the following:

- We present a new nonasymptotic achievability bound for noisy permutation channels that have strictly positive square matrices W with full rank. The two main ingredients of our proof are the following: the ϵ-packing [10,11] with Kullback–Leibler (KL) divergence as a distance, and an analysis of the error events that decouples the union of error events from the message set. Additionally, this new bound is stronger than the existing bound ([1] Equation (36)).

- We show that the finite blocklength achievable code size can be approximated by , where , , and is the channel volume ratio.

- To complement these results and assist in understanding them, we particularize all these results to typical DMCs, i.e., BSC and BEC permutation channels. Additionally, our Gaussian approximations, through numerical results, lead to tight approximations of the achievable code size for blocklengths n as short as 100 in these cases.

We continue this section with the motivation and application. Section 2 sets up the system model. In Section 3, we provide methods to construct a set of divergence packing centers (message set) and bounds for packing numbers. In Section 4, we present our new achievability bound and particularize this bound to the typical DMCs. Section 5 studies the asymptotic behavior of the achievability bound using Gaussian approximation analysis and applies it to the typical DMCs. In Section 6, we present numerical results. We conclude this paper in Section 7.

1.1. Motivation and Application

The noisy permutation channel models the scenario where codewords undergo reordering, which occurs in communication networks and DNA storage systems. We briefly outline some applications of this channel.

- (a) Communication Networks: First, noisy permutation channels are a suitable model for the multipath routed network in which packets arrive with different delays [12,13]. In such networks, data packets within the same group often take paths of differing lengths, bandwidths, and congestion levels as they traverse the network to the receiver. Consequently, transmission delays exhibit unpredictable variations, causing these packets to arrive at their destination in a potentially different order from their original sending sequence. Moreover, during transmission, data packets may be lost or corrupted due to reasons such as link failures or buffer overflow. Treating all possible packets as the input alphabet, this scenario fits the noisy permutation channel model.

- (b) DNA Storage Systems: DNA storage systems, known for their high density and reliability over long periods, are another motivation for our research [14,15,16]. Such a system can be seen as an out-of-order communication channel [1,14,17]. The source data is written onto DNA molecules (or codeword strings) consisting of letters from an alphabet of four nucleotides {A, C, G, T}. Due to physical conditions causing random fragmentation of DNA molecules, long-read sequencing technology, such as nanopore sequencing [18], is employed at the receiver to read entire randomly permuted DNA molecules. In the noisy permutation channel, the DMC matrix models potential errors during the synthesis and storage of DNA molecules, followed by a random permutation block that represents the random permutation of DNA molecules. For a comprehensive overview of DNA storage systems, see [1,14]; studies presenting specific DNA-based storage coding schemes include [17,19,20].

1.2. Notation

We use , to represent integer intervals. Let denote the indicator function. For a given and a random variable , we write to indicate that the random variable follows the distribution . Let and denote the random vector and its realization in the n-th Cartesian product , respectively. A -dimensional simplex on is a set of points as follows:

The KL divergence and the total variation are denoted by and , respectively. For a matrix A, we use the notation to represent the rank of matrix A. The probability and mathematical expectation are denoted by and , respectively. The cumulative distribution function of the standard normal distribution is denoted by and is its inverse function.

2. System Model

The code consists of a message set , a (possibly randomized) encoder function , and a (possibly randomized) decoder function , where the notation ‘’ indicates that the decoder chooses “error”. We write for the finite input alphabet and for the finite output alphabet. M denotes the achievable code size of code .

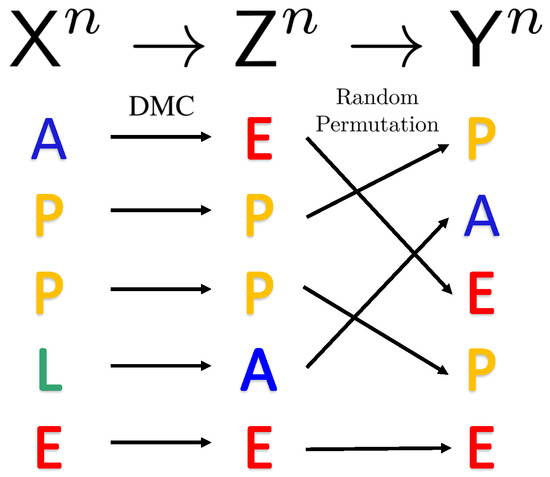

The input alphabet abstracts transmitted codeword symbols in various applications. For instance, in DNA storage applications, denotes the alphabet of four nucleotides, while the n-length codeword represents the DNA molecule formed by the corresponding n nucleotides. The sender uses encoder to encode the message M into a codeword , which is then passed through the DMC W to produce . The DMC is defined by a matrix W, where denotes the probability that the output occurs given input . Finally, passes through a random permutation block to generate . The random permutation block operates as follows. First, a random permutation is denoted as , drawn randomly and uniformly from the symmetric group over . Then, is generated by permuting according to for all . The receiver uses decoder to produce the estimate of the message . We can describe these steps by the following Markov chain:

The channel model of the noisy permutation channel is illustrated in Figure 1. For codewords drawn i.i.d. from , the random permutation block does not change the probability distribution of codewords [1], i.e., if , then and .
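The two stages of the channel can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not the authors' simulation code: the function names `dmc`, `random_permutation`, and `noisy_permutation_channel` are hypothetical, symbols are encoded as integers, and the example matrix is a BSC with crossover probability 0.1. It shows that the permutation block leaves the histogram of the DMC output unchanged, which is the only statistic the receiver can exploit.

```python
import random
from collections import Counter

def dmc(x, W, rng):
    """Pass each symbol of x independently through the DMC with row-stochastic matrix W."""
    outputs = range(len(W[0]))
    return [rng.choices(outputs, weights=W[a])[0] for a in x]

def random_permutation(z, rng):
    """Reorder z with a permutation drawn uniformly from the symmetric group."""
    y = list(z)
    rng.shuffle(y)
    return y

def noisy_permutation_channel(x, W, rng):
    """Return the DMC output z and the permuted channel output y."""
    z = dmc(x, W, rng)
    y = random_permutation(z, rng)
    return z, y

rng = random.Random(0)
delta = 0.1  # assumed crossover probability for the example
W = [[1 - delta, delta], [delta, 1 - delta]]
x = [0] * 30 + [1] * 70  # a codeword; only its composition matters
z, y = noisy_permutation_channel(x, W, rng)

# The permutation changes the order of the symbols but not their counts.
print(Counter(z) == Counter(y))
```

Because only the counts survive, codewords with the same composition are indistinguishable at the receiver, which is why messages are identified with marginal distributions.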

Figure 1.

Illustration of a communication model with a DMC followed by a random permutation.

We say W is strictly positive if all the transition probabilities are greater than 0. We impose the following restrictions on the channel.

Assumption 1.

The channel W is a strictly positive and full-rank square matrix.

For a given code , the average error probability of code is

The achievable code size with a given blocklength and probability of error is denoted by The code rate of the encoder–decoder pair is denoted by

where is the binary logarithm (with base 2) in this paper. Note that the rate for the noisy permutation channel is not the conventional definition where since the noisy permutation channels will have rate 0 under this definition. The capacity for noisy permutation channels is defined as .
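To see why the conventional normalization gives rate 0, a short counting argument helps. The display below is a sketch that assumes the rate convention of [1], with $\mathcal{Y}$ denoting the output alphabet and $\epsilon$ the average error probability (the symbol names are our assumptions). Since the receiver can only use the histogram of the $n$ outputs, the number of reliably distinguishable messages is limited by the number of histograms.

```latex
\begin{align}
R &\triangleq \frac{\log M}{\log n}, \\
(1-\epsilon)\, M &\le \binom{n+|\mathcal{Y}|-1}{|\mathcal{Y}|-1} \le (n+1)^{|\mathcal{Y}|-1}
\;\Longrightarrow\;
\frac{\log M}{n} \le \frac{(|\mathcal{Y}|-1)\log(n+1) - \log(1-\epsilon)}{n} \to 0 .
\end{align}
```

The code size therefore grows at most polynomially in $n$, so normalizing by $\log n$ rather than $n$ is the scaling under which the rate remains informative.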

3. Message Set and Divergence Packing

A divergence packing is a set of centers in simplex such that the KL divergence between any two distinct centers is greater than a prescribed radius. The following definitions formalize the associated packing number.

Definition 1.

The achievability space of the marginal distribution is defined by .

Definition 2.

Let be the set of divergence packing centers. The divergence packing number on is defined by

where is the packing radius.

Here, we provide some intuition for using divergence packing in noisy permutation channels. With the ML decoder, we relate the non-asymptotic channel performance to the likelihood ratio of two distributions (the decoding metric). By the law of large numbers, the normalized decoding metric concentrates around its statistical mean, the KL divergence, as the blocklength grows. Thus, lower bounding the divergence between any two distributions in the packing yields an upper bound on the error probability, and the code size can be analyzed asymptotically via the Berry–Esseen bound (see Section 5). These factors motivate us to use the KL divergence in constructing the message set.

Since the messages correspond to different distributions in noisy permutation channels, the message set can be equivalent to the set of marginal distributions at the receiver (e.g., see [1]). In the sequel, we denote the marginal distribution corresponding to message m by .

Additionally, in Gaussian approximations, we need the following definition.

Definition 3.

Let and be, respectively, the volume of the projection of and from to space , in which the y-th dimension is removed. The channel volume ratio is defined as

Next, we present several lower bounds on packing numbers. In the two-dimensional case, our construction achieves tighter bounds. For higher dimensions, our primary tool is the volume bound for -packing (e.g., see [8] Theorem 27.3). These results form the foundation for constructing marginal probability distributions in subsequent sections, while also playing a key role in the analysis of Gaussian approximations.

3.1. Binary Case

We first give the lower bound of the packing number in the binary case. Consider , where . Define

where .

Then, we have the following result proved in Appendix A.

Proposition 1.

Fix , where and . We can construct a set of packing centers by (8) with and such that

3.2. General Case

Next, we introduce a general method for constructing the set of packing centers and the bounds of their size. Let , and we consider the following set:

The intuition behind constructing this set is that the minimum distance of distributions in this uniform structure can be bounded by the total variation distance. Then, we can obtain the set of divergence packing centers that have a certain radius by applying Pinsker’s inequality.
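The step from a total variation grid to a divergence packing can be checked numerically. The sketch below is an illustration in the binary special case only, under our own assumptions: `uniform_grid` is a hypothetical evenly spaced grid on the interval reachable through a BSC with crossover probability 0.1, and it is not the paper's construction (8) or (10). It verifies that every pair of grid points is separated in KL divergence by at least the value Pinsker's inequality guarantees from the grid spacing.

```python
import math

def kl_bits(p, q):
    """KL divergence D(p||q) in bits for distributions with q strictly positive."""
    return sum(a * math.log2(a / b) for a, b in zip(p, q) if a > 0)

def tv(p, q):
    """Total variation distance between p and q."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))

def uniform_grid(lo, hi, spacing):
    """Binary distributions whose first coordinate lies on an even grid in [lo, hi]."""
    count = int(math.floor((hi - lo) / spacing + 1e-12)) + 1
    return [(lo + i * spacing, 1 - lo - i * spacing) for i in range(count)]

delta = 0.1     # assumed crossover probability; marginals lie in [delta, 1 - delta]
spacing = 0.05  # assumed grid spacing, equal to the minimum TV distance
centers = uniform_grid(delta, 1 - delta, spacing)

# Pinsker: D(P||Q) >= 2 * TV(P, Q)^2 nats = 2 * log2(e) * TV(P, Q)^2 bits.
pinsker_floor = 2 * math.log2(math.e) * spacing ** 2
min_kl = min(kl_bits(p, q) for p in centers for q in centers if p != q)

print(len(centers), min_kl >= pinsker_floor)
```

Choosing the spacing as a function of the target radius therefore turns the grid into a set of divergence packing centers, at the cost of the slack in Pinsker's inequality away from the center of the simplex.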

We have the following lower bound proved in Appendix A.

Theorem 1.

Fix a W that satisfies Assumption 1 and generate . We can construct a set of packing centers by (10) with such that

where is the packing radius.

4. New Bounds on Rate

In this section, we introduce our new bound, which is based on divergence packing and is in the spirit of the RCU bound. The key ingredient is our analysis of error events.

To that end, we introduce some definitions. Suppose we have a set of marginal distributions , which is constructed by (8) or (10) with any . Fixing a , we are often concerned with the divergence packing centers close to P. To do this, we consider , where , and for . K is a constant that has value or 1 when is constructed by (8) or (10), respectively. We define as the neighboring set of P:

In general, the distribution coincides with distributions in the set , except near the boundaries of the simplex where may violate the constraints of the probability space. We use the intersection operation in (12) to make sure all elements of remain within the simplex. For convenience, we use to index , and we say is the neighboring distribution of P. By counting, we have .

For the marginal distribution corresponding to the transmitted message m, we use the log-likelihood ratio to define the following decoding metric:

where .
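A maximum likelihood decoder built on such a metric can be sketched as follows. This is a minimal illustration assuming the metric is the sum of per-symbol log-likelihood ratios between two candidate marginal distributions, as the proof in Appendix E describes; the function names and the three example marginals are hypothetical. The decoder depends on the received sequence only through its histogram, so it is invariant to the permutation.

```python
import math
from collections import Counter

def log_likelihood(counts, p):
    """Log-likelihood (bits) of an output histogram under i.i.d. draws from p."""
    return sum(c * math.log2(p[s]) for s, c in counts.items())

def ml_decode(y, marginals):
    """Index of the marginal distribution that maximizes the likelihood of y."""
    counts = Counter(y)
    scores = [log_likelihood(counts, p) for p in marginals]
    return max(range(len(marginals)), key=scores.__getitem__)

def llr_metric(y, p_m, p_other):
    """Sum over received symbols of log2(p_m(y_i) / p_other(y_i))."""
    return sum(math.log2(p_m[s] / p_other[s]) for s in y)

# Hypothetical message set: three marginal distributions on a binary output alphabet.
marginals = [(0.8, 0.2), (0.5, 0.5), (0.2, 0.8)]
y = [0] * 16 + [1] * 4

decoded = ml_decode(y, marginals)
metric = llr_metric(y, marginals[0], marginals[1])
print(decoded, metric > 0)
```

An error against a competing message occurs exactly when the metric against that message is non-positive, which is the kind of event whose union the following subsections analyze.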

Then, the proof of our main result consists of three parts, each detailed in one of the following subsections. In the first subsection, we introduce a lemma. This lemma shows that the message sets constructed by (8) or (10) have an overlapping relationship for error events. In the second subsection, we use this lemma to give an equivalent expression for the error probability. The third subsection contains our main result. Additionally, we particularize this new bound to BSC and BEC permutation channels in the fourth and fifth subsections, respectively.

4.1. Overlapping of Error Events

Intuitively, the rate of decay of is dominated by the rate of decay of the probability of error in distinguishing neighboring messages. In order to use this intuition mathematically, we need to analyze the relationship between error events. The following lemma, proved in Appendix B, does this and can be used for analyzing random coding bounds.

4.2. Equivalent Expression

In this subsection, we give a lemma tailored to our purposes. It follows directly from Lemma 1.

Lemma 2.

Proof.

Remark 1.

If the transmitted message is m, Lemma 2 shows that the union of error events is equivalent to a smaller union over . Its size depends on the size of the output alphabet.

4.3. Main Result: New Lower Bound

The main result in this section is the following. Please refer to Appendix C for the proof.

Theorem 2.

Remark 2.

Theorem 2 relies on the message set constructed by (8) or (10). We restrict the channel W to be a full-rank square matrix, which makes an equal-dimensional subspace of . Therefore, the evenly spaced grid structure on can be constructed by using (8) or (10). Without this condition, we cannot apply (10) unless we make strong assumptions about W.

Remark 3.

Theorem 2 upper bounds the probability of error with the sum of the probabilities of error events on instead of , which makes our bound much stronger than [1]. In fact, if we do not use Lemma 2 but instead apply the union bound and the second moment method for TV distance ([21] Lemma 4.2(iii)) in the proof of Theorem 2, we can obtain the existing bound ([1] Equation (36)).

4.4. BSC Permutation Channels

In this subsection, we particularize the nonasymptotic bounds to the BSC, i.e., the DMC matrix is

denoted . According to Proposition 1 and Theorem 1, using (8) to construct the set of marginal distributions is better than (10) in the binary case. Therefore, we focus on the former in this subsection. For convenience, we denote . For , let if . Then, for a , we clearly have

Let

and

The following bound is a straightforward generalization of Theorem 2.

Theorem 3

(Achievability). For the BSC permutation channel with crossover probability δ, there exists a code such that

where

and

The set of marginal distributions is constructed by (8) and for the radius r, we have

Proof.

Let us assume the transmitted message is , corresponding to the marginal distribution . In BSC, we focus on the set (22). Using the same argument in the proof of Lemma 1, the term corresponding to in (20) can be computed as

where follows from (A19). Similarly, the term corresponding to in (20) can be computed as

where follows from (A19). Equations (29) and (30) are substituted into Theorem 2 to complete the proof. □

4.5. BEC Permutation Channels

The BEC permutation channel with erasure probability consists of input alphabet and output alphabet , where the conditional distribution is

Moreover, we denote such a channel as for convenience.

Next, we have the following achievability bound.

Proposition 2.

Proof.

The derivations of this proof follow from ([1] Proposition 6), and we include the details for the sake of completeness. We first note that the BSC matrix satisfies the Doeblin minorization condition (e.g., see [1] Definition 5) with and constant . Using ([1] Lemma 6), we find that is a degraded version of . Then, for the encoder and decoder pairs for BSC permutation channels and for BEC permutation channels, the average probability of error satisfies [1] Equation (36).

Then, the argument of the proof of Theorem 3 is repeated. This completes the proof. □
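The degradation relation used in this proof can be verified with a small matrix computation. The sketch below assumes the standard pairing in which a BSC with crossover probability δ is obtained from a BEC with erasure probability 2δ by replacing each erasure with a fair coin flip; the specific constants and the helper names `bec`, `bsc`, and `RESOLVE` are our assumptions for illustration, and the pairing requires δ ≤ 1/2.

```python
def matmul(A, B):
    """Product of two row-stochastic matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def bec(eta):
    """BEC transition matrix with outputs ordered (0, erasure, 1)."""
    return [[1 - eta, eta, 0.0], [0.0, eta, 1 - eta]]

def bsc(delta):
    """BSC transition matrix with crossover probability delta."""
    return [[1 - delta, delta], [delta, 1 - delta]]

# Post-processing channel: keep unerased bits, map an erasure to a uniform bit.
RESOLVE = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]

delta = 0.1
composed = matmul(bec(2 * delta), RESOLVE)
target = bsc(delta)

print(all(abs(composed[i][j] - target[i][j]) < 1e-12
          for i in range(2) for j in range(2)))
```

Since the BSC output can be simulated from the BEC output, any decoder for the BSC permutation channel can be run on the BEC permutation channel after this post-processing, so the BEC error probability is no larger.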

5. Gaussian Approximation

We turn to the asymptotic analysis of the noisy permutation channel for a given blocklength and average probability of error.

5.1. Auxiliary Lemmata

To establish our Gaussian approximation, we will present two lemmata. The first lemma we will exploit is an important tool in the Gaussian approximation analysis:

Lemma 3

(Berry–Esseen, [22] Chapter XVI.5, Theorem 2). Fix a positive integer n. Let indexed by be independent. Then, for any real x and we have

where
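A numerical check makes the content of the Berry–Esseen lemma concrete. The sketch below is an illustration under our own assumptions: it uses i.i.d. Bernoulli summands so that the exact distribution is binomial, and it takes the constant 6 from the classical statement in [22], which we assume is the form intended here. It compares the largest gap between the standardized binomial CDF and the standard normal CDF with the bound.

```python
import math

def normal_cdf(x):
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def max_cdf_gap(n, p):
    """Largest gap between the CDF of the standardized Binomial(n, p) and the normal CDF.

    The binomial CDF is a step function, so the supremum is attained at a jump
    point, either just before or at the jump.
    """
    mean, std = n * p, math.sqrt(n * p * (1 - p))
    gap, prev, cdf = 0.0, 0.0, 0.0
    for k in range(n + 1):
        cdf += math.comb(n, k) * p ** k * (1 - p) ** (n - k)
        phi = normal_cdf((k - mean) / std)
        gap = max(gap, abs(cdf - phi), abs(prev - phi))
        prev = cdf
    return gap

def berry_esseen_bound(n, p, constant=6.0):
    """constant * E|X - p|^3 / (Var(X)^(3/2) * sqrt(n)) for X ~ Bernoulli(p)."""
    variance = p * (1 - p)
    third = p * (1 - p) * ((1 - p) ** 2 + p ** 2)
    return constant * third / (variance ** 1.5 * math.sqrt(n))

for n in (25, 100, 400):
    print(n, max_cdf_gap(n, 0.3), berry_esseen_bound(n, 0.3))
```

The observed gap shrinks roughly like the inverse square root of n and stays well below the bound, which is why the third absolute moment and the variance of the decoding metric are the quantities that must be controlled in Lemma 4.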

To develop the Gaussian approximation, we consider the following definitions. The variance and third absolute moment of log-likelihood ratio between two distributions P and Q are defined as and , respectively. Then, the following lemma is concerned with properties of and , which is proved in Appendix D.

Lemma 4.

Fix a W that satisfies Assumption 1 and generate . Let constructed by (10) with any be the set of packing centers on . If packing radius , for any and , we have

where

and are constants greater than 0. Additionally, we have

5.2. Main Result: Gaussian Approximation

The main result in this section is the following. Please refer to Appendix E for the proof.

Theorem 4.

Fix a strictly positive and full-rank square matrix W for noisy permutation channels. Then, there exists a number , such that

is achievable for all , where , , is the channel volume ratio, and θ is a constant.

This achievable code size (39) is different from the Gaussian approximation of the traditional channel (e.g., see [7]) since our bound is obtained by divergence packing numbers . The packing radius is a key ingredient affecting the lower bound of and affects the error probability.

5.3. Approximation of BSC and BEC Permutation Channels

We apply Theorem 4 to obtain the following approximation.

Corollary 1.

For BSC permutation channels with crossover probability δ, there exists a number , such that

is achievable for all , where θ is a constant.

Proof.

For BSC, we have . By using Lagrange’s formula [23], we have . Substituting this into Theorem 4 yields the result. □

Remark 4.

We remark that the Gaussian approximation shows some properties of the code size with a given blocklength n and probability of error ϵ. In BSC permutation channels, while the channel capacity is only related to the rank of the channel matrix, the rate at which the achievable code size approaches the capacity is affected by crossover probability δ.

The approximation of BSC permutation channels can also be derived from Proposition 1. To use the message set constructed by (8), we need the following lemma, which is proved in Appendix D:

Lemma 5.

Fix a W that satisfies Assumption 1 and generate . Let constructed by (8) with any be the set of packing centers on . Then, there exists a packing radius , such that for all , we have

and

where , and are positive and finite.

Then, we have the following result.

Proposition 3.

For BSC permutation channels with crossover probability δ, there exists a number , such that

is achievable for all , where θ is a constant.

Proof.

Next, for BEC permutation channels, we have the following approximation.

Proposition 4.

For BEC permutation channels with erasure probability η, there exists a number , such that

and

are achievable for all , where θ is a constant.

Proof.

Through Theorem 2, repeat the argument of the proof of Corollary 1 and Theorem 3, replacing with . □

6. Numerical Results

In this section, we perform numerical evaluations to illustrate our results. We first validate the precision of our Gaussian approximation across a wide range of parameters. Secondly, we present the performance of bounds of a binary DNA storage system and compare them with existing bounds.

6.1. Precision of the Gaussian Approximation

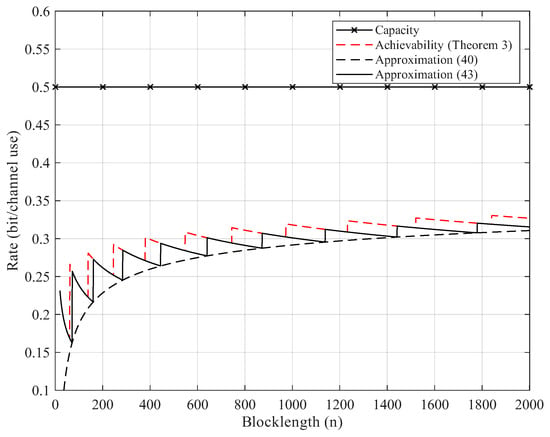

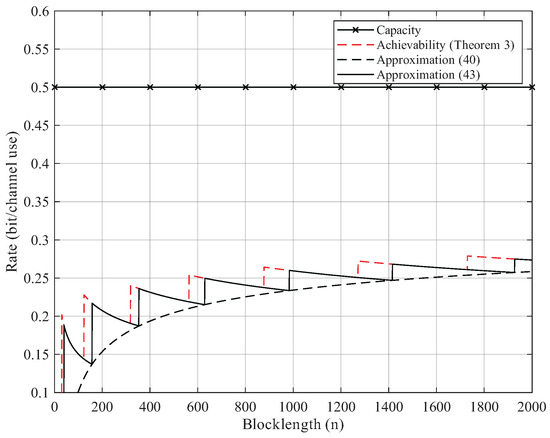

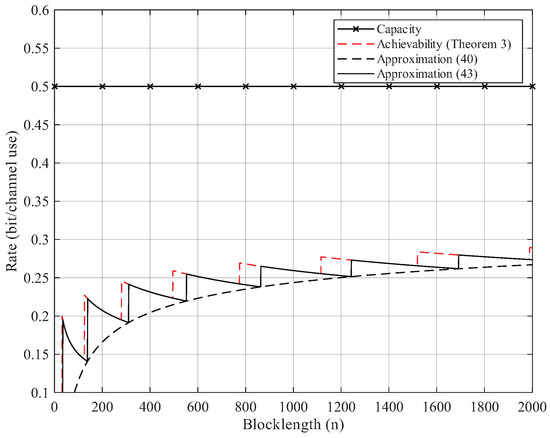

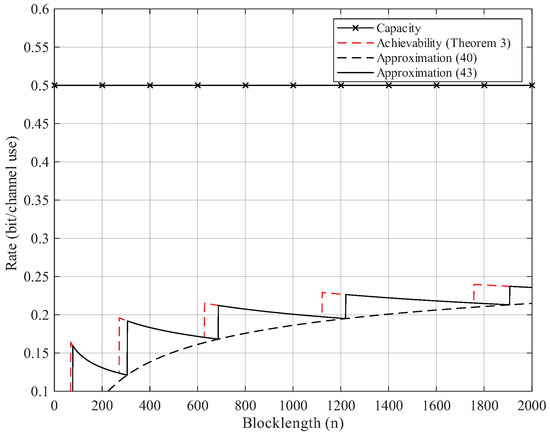

Here, we give the numerical results. According to Proposition 2, the BEC permutation channel with erasure probability can be treated as the BSC permutation channel with crossover probability . Thus, we focus on the numerical results of BSC permutation channels. We use Theorem 3 to compute the non-asymptotic achievability bound: we start searching from until the right side of (25) is less than the error probability . For the Gaussian approximation, we use (40) and (43) but omit the remainder term . As Figures 2–5 show, even with the constant remainder term omitted, the Gaussian approximation is still quite close to the non-asymptotic achievability bound. In fact, for all , the difference between (43) and Theorem 3 is within 1 bit in .

Figure 2.

Rate–blocklength tradeoff for the BSC with crossover probability and average block error rate .

Figure 3.

Rate–blocklength tradeoff for the BSC with crossover probability and average block error rate .

Figure 4.

Rate–blocklength tradeoff for the BSC with crossover probability and average block error rate .

Figure 5.

Rate–blocklength tradeoff for the BSC with crossover probability and average block error rate .

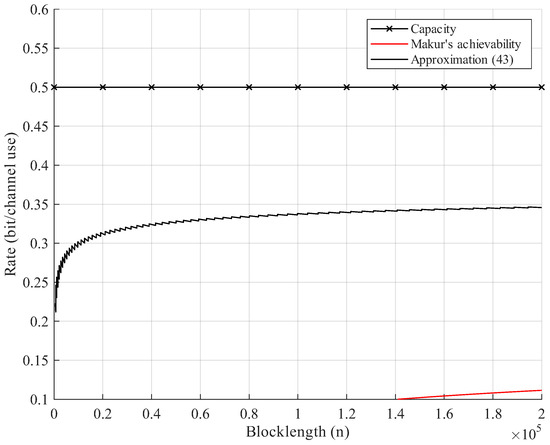

6.2. Comparison with Existing Bound

Additionally, in the context of DNA storage systems, we consider codewords composed of the nucleotides {A, C, G, T}. For simplicity, A and C are regarded as the symbol 0, and G and T are regarded as the symbol 1 in code constructions, giving the binary alphabet {0, 1}. The synthesis errors and random permutation of DNA molecules are modeled as the BSC permutation channel with crossover probability . To reduce the computational complexity, we use approximation (43). Furthermore, we present numerical results for the existing lower bound, namely, Makur’s achievability bound ([1] Equation (36)) for BSC permutation channels. The results show that our new achievability bound is uniformly better than Makur’s bound. In the setup of Figure 6, our bound quickly approaches half of the capacity (). As the blocklength increases, Makur’s bound reaches of the channel capacity at about , as shown in Figure 6. The improvement arises because we exploit the overlapping relationship of error events, which reduces the number of error events when applying the union bound.
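The binary reduction of the nucleotide alphabet described above can be sketched directly. The mapping follows the text (A and C to 0, G and T to 1); the names `NUCLEOTIDE_TO_BIT` and `to_binary` and the example strand are hypothetical.

```python
from collections import Counter

# Mapping stated in the text: A and C are read as 0, G and T are read as 1.
NUCLEOTIDE_TO_BIT = {"A": 0, "C": 0, "G": 1, "T": 1}

def to_binary(strand):
    """Map a nucleotide string to the binary sequence used in the code construction."""
    return [NUCLEOTIDE_TO_BIT[base] for base in strand]

strand = "ACGTTGCA"  # hypothetical example strand
bits = to_binary(strand)
histogram = Counter(bits)

print(bits, dict(histogram))
```

Under this reduction, a substitution within the pair {A, C} or within {G, T} is invisible to the decoder, and only substitutions across the two pairs act as bit flips of the BSC.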

Figure 6.

Rate–blocklength tradeoff for the BSC with crossover probability and average block error rate : example of a DNA storage system.

7. Conclusions and Discussion

In summary, we established a new achievability bound for noisy permutation channels with a strictly positive and full-rank square matrix. The key element is that our analysis indicates that the size of error events in the union is independent of the message set. This allows us to derive a refined asymptotic analysis of the achievable rate. Numerical simulations show that our new achievability bound is stronger than Makur’s achievability bound in [1]. Additionally, our approximation is quite accurate, even though the remainder term is a constant. Finally, the primary future work will generalize the DMC matrix in noisy permutation channels to non-full-rank and non-strictly positive matrices. Other future work may improve asymptotic expansion (e.g., improving the remainder term to ).

Author Contributions

Conceptualization, L.F.; Methodology, L.F.; Software, L.F.; Validation, L.F.; Formal analysis, L.F.; Investigation, L.F.; Resources, L.F.; Data curation, L.F.; Writing—original draft, L.F.; Writing—review and editing, L.F., G.L. and X.L.; Visualization, L.F.; Supervision, G.L.; Project administration, G.L. and Y.J.; Funding acquisition, G.L., X.L. and Y.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Divergence Packing

Appendix A.1. Proof of Proposition 1

We note that constructed by (8) is a subset of . Fix . Then, for we have

Use Pinsker’s inequality ([8] Theorem 7.10) to obtain . Then, we conclude this proof by realizing that .

Appendix A.2. Proof of Theorem 1

To prove Theorem 1, we consider the set

We have the following lemma:

Lemma A1.

Let be constructed by (A2) with any . For , we have

Proof.

For every , we can find a such that for and , we have , . For , we have . Then, we obtain that . □

Fix a radius and fix a constructed by (10). Note that we have . For any , we have

where follows from Pinsker’s inequality and follows from Lemma A1. On the other hand, note that the right inequality of (A4) shows that

Hence, a total variation packing constructed by can be regarded as an -norm packing of with radius . Let be the -norm unit ball. The volume bound ([8] Theorem 27.3) of -norm packing provides the lower bound of as

Here, is the volume of the -norm unit ball; is the volume of the projection of from to the space , consistent with the argument used in the proof of ([24] Proposition 2).

Note that the maximum volume ratio is by Definition 3. We continue the bounding as follows:

where

- (A7) holds since this projection can remove any y-th dimension, where . Consequently, the lower bound is given by taking the maximum of the volume ratio over ;

- (A8) follows by using Lagrange’s formula [23] and the volume formula to obtain and , respectively.

Finally, we conclude the proof by realizing that the left inequality of (A4) implies that gives the set of divergence packing centers. This completes the proof of Theorem 1.

Appendix B. Proof of Lemma 1

We first consider constructed by (10). Fix a and generate corresponding to P. Fix , where . Denote a such that , , , where . Let

where is a constant. Define

If , for and , we have

For and , we find and consider the intersection, i.e., . Then, for , we have (A11) holds for any .

Now we consider ), which is farther from P in Euclidean distance than . For each , we obtain that the Euclidean distance between and is , where .

Since Q is a probability distribution, we have and . Using the inequality , we obtain that

and

Let , , and . For , let . For , let . Then, we have

Due to the constraints of the probability space, we have

Recall that for , (A11) holds for any . Then, by the definition of , for , we have

where and . We combine (A14)–(A16) to obtain that if , then

That is

For , define such that (A17) holds for . We have . Denote by and the complement of and , respectively. We note that if (14) holds, we have . Then, we obtain (15) holds for a since we obviously have . This completes the proof in the case of constructed by (10).

We now give a separate proof for the binary case. Consider constructed by (8). For convenience, let if , where . Fix and . Let

For any and , we have

holds for . Note that is a monotonically increasing function with respect to . Then, we can obtain

since .

Appendix C. Proof of Theorem 2

Since the matrix is full-rank, we obtain the achievability space of the marginal distribution is a -dimensional probability space . We consider constructing the set of marginal distributions by using the divergence packing on space , i.e., let be constructed by (8) or (10) with any .

Assume the transmitted message is m, corresponding to the marginal distribution . After the codeword passes through the DMC matrix W and the random permutation block , we obtain that . Then, the error event of the maximum likelihood decoder is

Then, for drawn i.i.d. from the average probability of error satisfies

where

- (A24) follows because we regard the equality case, , as an error event even though the ML decoder might return the correct message;

- (A25) follows from Lemma 2;

- (A26) follows from the union bound.

Let the message be uniform on . Then, we take the expectation over all codewords to obtain

This completes the proof.

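Appendix D. Properties of the Variance and Third Absolute Moment

This appendix is concerned with the behavior of the variance and third absolute moment of the log-likelihood ratio defined in Section 5.1. We first prove Lemma 4.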

Appendix D.1. Proof of Lemma 4

Since the DMC matrix is strictly positive, each term of marginal distribution P is uniformly bounded away from zero, i.e., there exists a such that for all . Similarly, there exists a such that for all . Clearly, we have and . Without loss of generality, we assume . That is, we have .

We know that

where and .

We consider each term in P separately. If , we obviously get zero. If , we have . Here, since the distributions in have no zero term, we have . Since , we have

Consequently, we have

and

If , consider , where . Applying the same argument, we obtain

Consequently, we have

and

Then, we see that

where

- (A35) simply expands the variance.

Finally, we complete the proof of the lower bound by noting that .

We now turn to bound . For , we have

For , we have

Note that can be bounded by the following:

Using the inequality , we obtain

which establishes (38).

Appendix D.2. Proof of Lemma 5

Then, we prove Lemma 5 for constructed by (8). We first note that

where , , and . Note that holds for small . Repeat the proof of Lemma 4, replacing a with and with . Then, there exists a packing radius , such that for all , we have

and

where , and are positive and finite.

Appendix E. Proof of Theorem 4

Let constructed by (10) with be the set of packing centers on , where is the packing radius and we specify later. For the transmitted message m, passing codeword through the DMC matrix and random permutation block induces a marginal distribution on . Note that the decoding metric is the sum of independent identically distributed variables:

It has the mean , variance , and third absolute moment . Denote

and

According to Theorem 2, there exists a code with average error probability such that

Denote . We continue (A49) as follows:

where

Equating the RHS of (A52) to and noting that , we solve that

where by its definition, and are suitable constants. This can be done since for suitable constants and , we obtain and for n sufficiently large by Lemma 4. Consequently, for large n, can be upper bounded by a suitable constant .

The above arguments indicate that there exists a code with average error probability such that the code size is achievable. Let and let . For n sufficiently large, we obtain that for suitable ,

where

- (A55) holds for suitable by Theorem 1 and Taylor’s formula of .

This completes the proof.
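As a concrete illustration of the channel model used in this proof, the following sketch passes a codeword through a DMC and then through a uniform random permutation. It is a toy simulation under stated assumptions: the binary matrix `W`, the codeword, the seed, and the function name `noisy_permutation_channel` are hypothetical and do not come from the paper.

```python
import random
from collections import Counter

def noisy_permutation_channel(codeword, W, rng):
    """Apply the DMC W letter by letter, then permute the output uniformly at random."""
    # DMC stage: each input letter x is mapped to an output letter drawn from row W[x].
    noisy = [rng.choices(range(len(W[x])), weights=W[x])[0] for x in codeword]
    # Permutation stage: shuffle a copy so both orderings can be compared.
    received = list(noisy)
    rng.shuffle(received)
    return noisy, received

# Hypothetical strictly positive, full-rank binary DMC matrix.
W = [[0.9, 0.1],
     [0.2, 0.8]]
rng = random.Random(1)
codeword = [0] * 6 + [1] * 4

noisy, received = noisy_permutation_channel(codeword, W, rng)
# The permutation destroys the ordering but leaves the letter counts unchanged.
same_histogram = Counter(noisy) == Counter(received)
print(Counter(received), same_histogram)
```

Since only the histogram of the received word survives the permutation, a decoder can rely on the empirical output distribution alone, consistent with the statement in the Introduction that the ordering of the codeword carries no information.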

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).