Information Complexity of Time-Frequency Distributions of Signals in Detection and Classification Problems

Abstract

1. Introduction

- introduction of the concept of the TFD information complexity;

- using Rényi entropy to calculate the information complexity of two-dimensional probability distributions;

- application of the proposed information characteristics to the classification problem of acoustic signals.

2. Statement of Signal Classification Problem

3. Time-Frequency Distributions

3.1. Spectrogram and Wigner–Ville Distribution

3.2. Reassigned Spectrogram

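- at each point $(t, \omega)$ where the value of the spectrogram $S_x(t, \omega)$ is defined, two values $\hat{t}(t, \omega)$ and $\hat{\omega}(t, \omega)$ are also calculated, which define the local centroid of the distribution $W_x$ as seen through the window centered at $(t, \omega)$;

- then the value of the spectrogram is moved from the point $(t, \omega)$ to this centroid $(\hat{t}, \hat{\omega})$, which allows us to determine the reassigned spectrogram as follows: $RS_x(t', \omega') = \iint S_x(t, \omega)\, \delta\big(t' - \hat{t}(t, \omega)\big)\, \delta\big(\omega' - \hat{\omega}(t, \omega)\big)\, dt\, d\omega$, where $\delta$ is the Dirac delta function. In general, the value of the spectrogram at $(t, \omega)$ corresponds to a new position on the time-frequency plane, namely, it is moved to the point $(\hat{t}(t, \omega), \hat{\omega}(t, \omega))$.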
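The second step above can be illustrated on a discrete grid, where the Dirac delta reduces to adding each spectrogram value to the bin of its centroid. The following is only a minimal sketch: the helper name `reassign` and the toy centroid arrays are hypothetical, and the computation of the centroids themselves is not shown.

```python
def reassign(spec, t_hat, f_hat):
    """Move each value spec[i][j] to the bin (t_hat[i][j], f_hat[i][j]).

    spec  : 2D list of non-negative spectrogram values (time x frequency)
    t_hat : 2D list of integer time-bin indices of the centroids (assumed given)
    f_hat : 2D list of integer frequency-bin indices of the centroids (assumed given)
    """
    n_t, n_f = len(spec), len(spec[0])
    out = [[0.0] * n_f for _ in range(n_t)]
    for i in range(n_t):
        for j in range(n_f):
            ti, fj = t_hat[i][j], f_hat[i][j]
            # Discrete analogue of the delta function: the whole value lands in one bin.
            # Centroids that fall outside the grid are dropped in this sketch.
            if 0 <= ti < n_t and 0 <= fj < n_f:
                out[ti][fj] += spec[i][j]
    return out


# Toy spectrogram with a blurred peak around the central bin.
spec = [[1.0, 2.0, 1.0],
        [2.0, 4.0, 2.0],
        [1.0, 2.0, 1.0]]
# Hypothetical centroids: every point is attracted to the central bin (1, 1).
t_hat = [[1] * 3 for _ in range(3)]
f_hat = [[1] * 3 for _ in range(3)]

reassigned = reassign(spec, t_hat, f_hat)
total_before = sum(sum(row) for row in spec)
total_after = sum(sum(row) for row in reassigned)
```

In this toy case all values are collected in the central bin, and the total of the distribution is preserved because no centroid leaves the grid.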

4. Entropy of Time-Frequency Distributions

4.1. Classical Information Criteria and Discrete Distributions

4.2. New Information Criteria and Discrete Distributions

5. Complexity of Time-Frequency Distributions

- Kullback–Leibler divergence $D_{KL}$;

- Rényi divergence $D_\alpha$ (can be considered a generalization of $D_{KL}$, since $D_\alpha$ becomes $D_{KL}$ as $\alpha$ tends to 1);

- Jensen–Shannon divergence for Rényi entropy;

- Euclidean distance;

- Total signed measure of variation.
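A short numerical sketch may help to see how some of the measures listed above behave on discrete distributions. It uses the standard textbook definitions of the Kullback–Leibler divergence, the Rényi divergence and the Euclidean distance; the function names and the two example distributions are hypothetical, and the code assumes that `q` is strictly positive wherever `p` is.

```python
import math


def kl_divergence(p, q):
    """Kullback-Leibler divergence sum_i p_i * ln(p_i / q_i), in nats."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def renyi_divergence(p, q, alpha):
    """Renyi divergence of order alpha (alpha > 0, alpha != 1), in nats."""
    if alpha <= 0 or alpha == 1:
        raise ValueError("alpha must be positive and different from 1")
    s = sum(pi ** alpha * qi ** (1 - alpha) for pi, qi in zip(p, q) if pi > 0)
    return math.log(s) / (alpha - 1)


def euclidean_distance(p, q):
    """Euclidean distance between two distributions viewed as vectors."""
    return math.sqrt(sum((pi - qi) ** 2 for pi, qi in zip(p, q)))


# Two example distributions (illustrative values only).
p = [0.5, 0.3, 0.2]
q = [0.4, 0.4, 0.2]

d_kl = kl_divergence(p, q)
d_renyi_near_one = renyi_divergence(p, q, 1.0001)
d_euclid = euclidean_distance(p, q)
```

For an order close to 1 the Rényi divergence is numerically close to the Kullback–Leibler divergence, which is the limiting behaviour noted in the list.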

5.1. Rényi Divergence

5.2. Jensen–Shannon Divergence for Time-Frequency Distributions

5.3. New Information Characteristics

- Related to Shannon entropy:

- Related to Rényi entropy:

- by $p_i$ for one-dimensional discrete distributions;

- by $p_{ij}$, where $(i, j)$ is a pair of indices, for two-dimensional discrete distributions.
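To make the two-dimensional case concrete, the sketch below normalizes a non-negative time-frequency array into a discrete probability distribution and evaluates its Shannon and Rényi entropies using the standard definitions. It does not reproduce the new characteristics of this section; the function names and the toy arrays are hypothetical, and the input is assumed to be non-negative (as for a spectrogram).

```python
import math


def normalize(tfd):
    """Scale a non-negative 2D array so that its entries sum to 1."""
    total = sum(sum(row) for row in tfd)
    return [[v / total for v in row] for row in tfd]


def shannon_entropy(p):
    """Shannon entropy of a 2D discrete distribution, in bits."""
    return -sum(v * math.log2(v) for row in p for v in row if v > 0)


def renyi_entropy(p, alpha):
    """Renyi entropy of order alpha (alpha > 0, alpha != 1), in bits."""
    s = sum(v ** alpha for row in p for v in row if v > 0)
    return math.log2(s) / (1 - alpha)


# Energy spread evenly over a 4 x 4 grid: the maximum-entropy case.
uniform = normalize([[1.0] * 4 for _ in range(4)])
# All energy in a single bin: the minimum-entropy case.
peaked = normalize([[0.0, 0.0, 0.0, 0.0],
                    [0.0, 8.0, 0.0, 0.0],
                    [0.0, 0.0, 0.0, 0.0],
                    [0.0, 0.0, 0.0, 0.0]])

h_uniform = shannon_entropy(uniform)
r_uniform = renyi_entropy(uniform, 3)
h_peaked = shannon_entropy(peaked)
r_peaked = renyi_entropy(peaked, 3)
```

A one-dimensional distribution can be passed as a single row. The order 3 is used here only because it is a common choice for time-frequency distributions.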

6. Modeling

6.1. Model Signal Description

- harmonic signals;

- linearly frequency-modulated chirp signals (LFM chirp signals);

- model signals of marine vessels.
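The first two signal types in this list can be generated in a few lines. The sketch below is illustrative only: the sampling rate, frequencies and signal length are hypothetical values, not the parameters used in the experiments, and the model signals of marine vessels are not covered.

```python
import math


def harmonic(n, fs, f0, amp=1.0):
    """n samples of a harmonic signal with frequency f0 (Hz) at sampling rate fs (Hz)."""
    return [amp * math.cos(2 * math.pi * f0 * k / fs) for k in range(n)]


def lfm_chirp(n, fs, f_start, f_end, amp=1.0):
    """n samples of a chirp whose frequency changes linearly from f_start to f_end (Hz)."""
    duration = n / fs
    rate = (f_end - f_start) / duration  # frequency sweep rate, Hz per second
    samples = []
    for k in range(n):
        t = k / fs
        # The phase is the integral of the instantaneous frequency f_start + rate * t.
        phase = 2 * math.pi * (f_start * t + 0.5 * rate * t * t)
        samples.append(amp * math.cos(phase))
    return samples


# Hypothetical parameters: 1 s of signal sampled at 1 kHz.
fs = 1000
n = 1000
tone = harmonic(n, fs, f0=50.0)
chirp = lfm_chirp(n, fs, f_start=50.0, f_end=200.0)
```

Noise of a chosen level can then be added to such signals to obtain a prescribed SNR.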

6.2. Description of Real Signals

- Bioacoustic signals;

- Recordings of hydroacoustic background marine noise;

- Hydroacoustic ship signals.

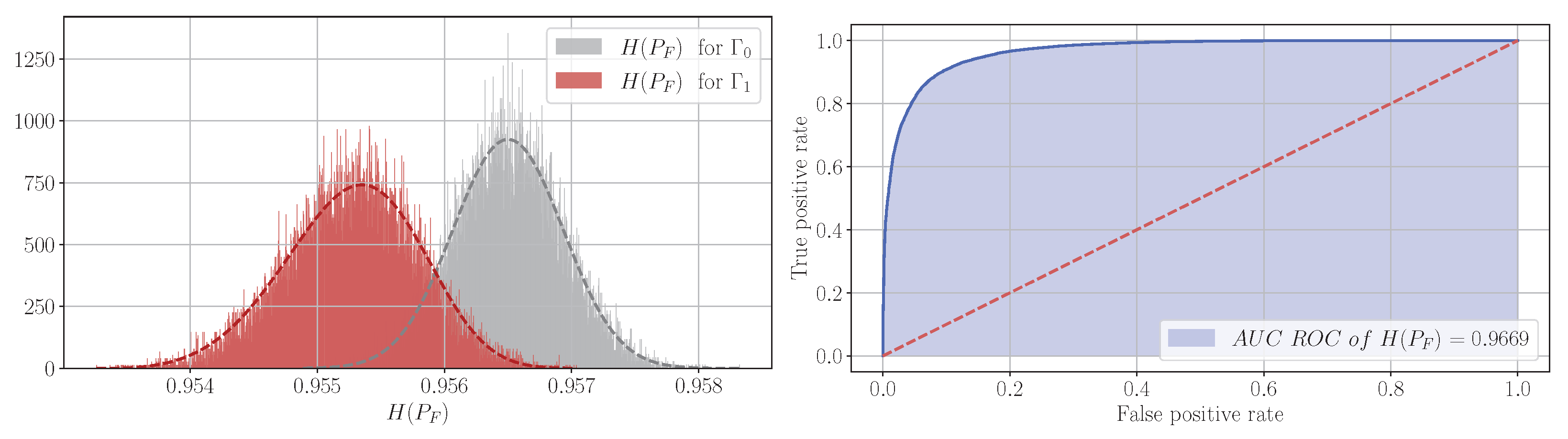

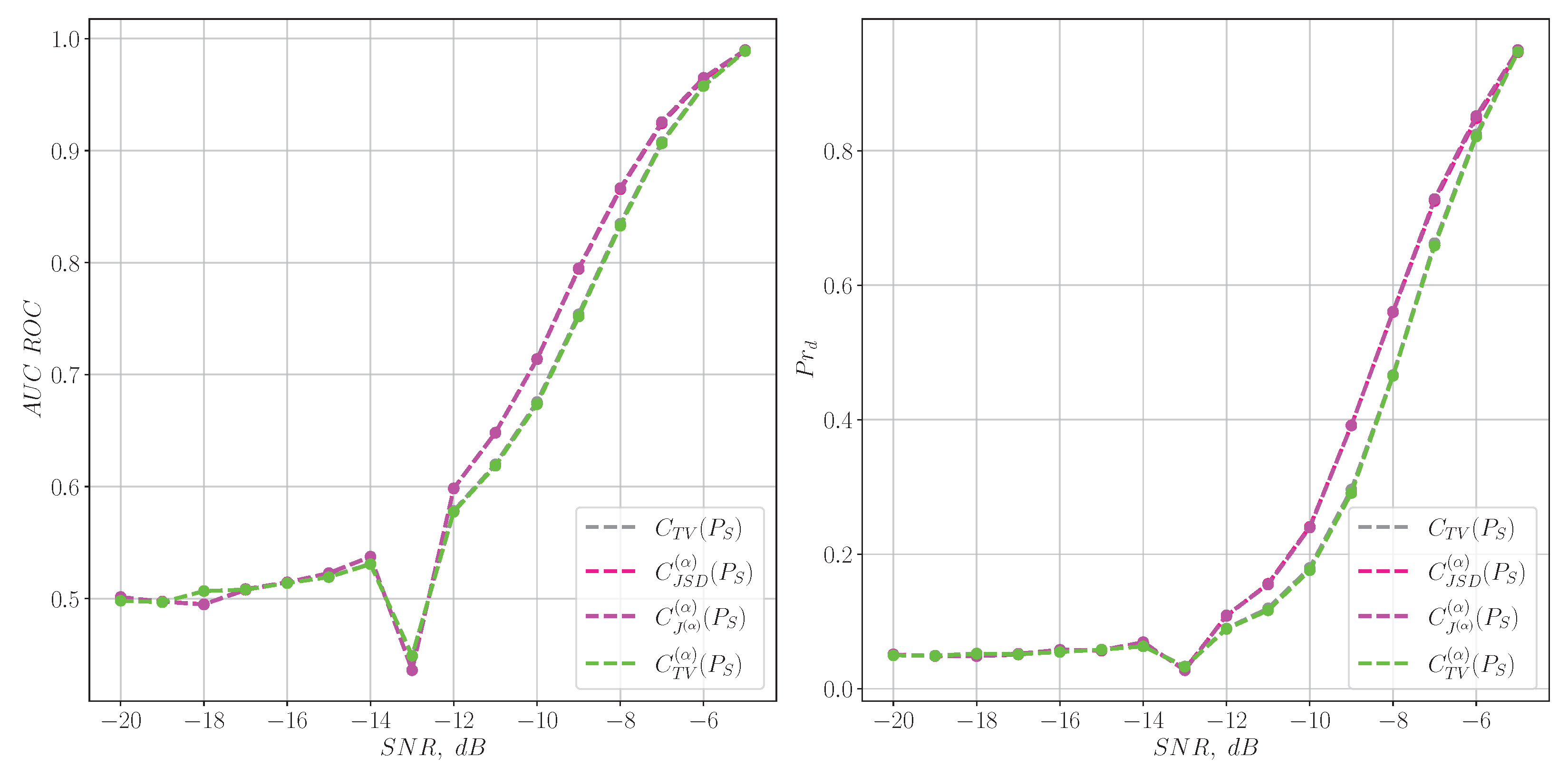

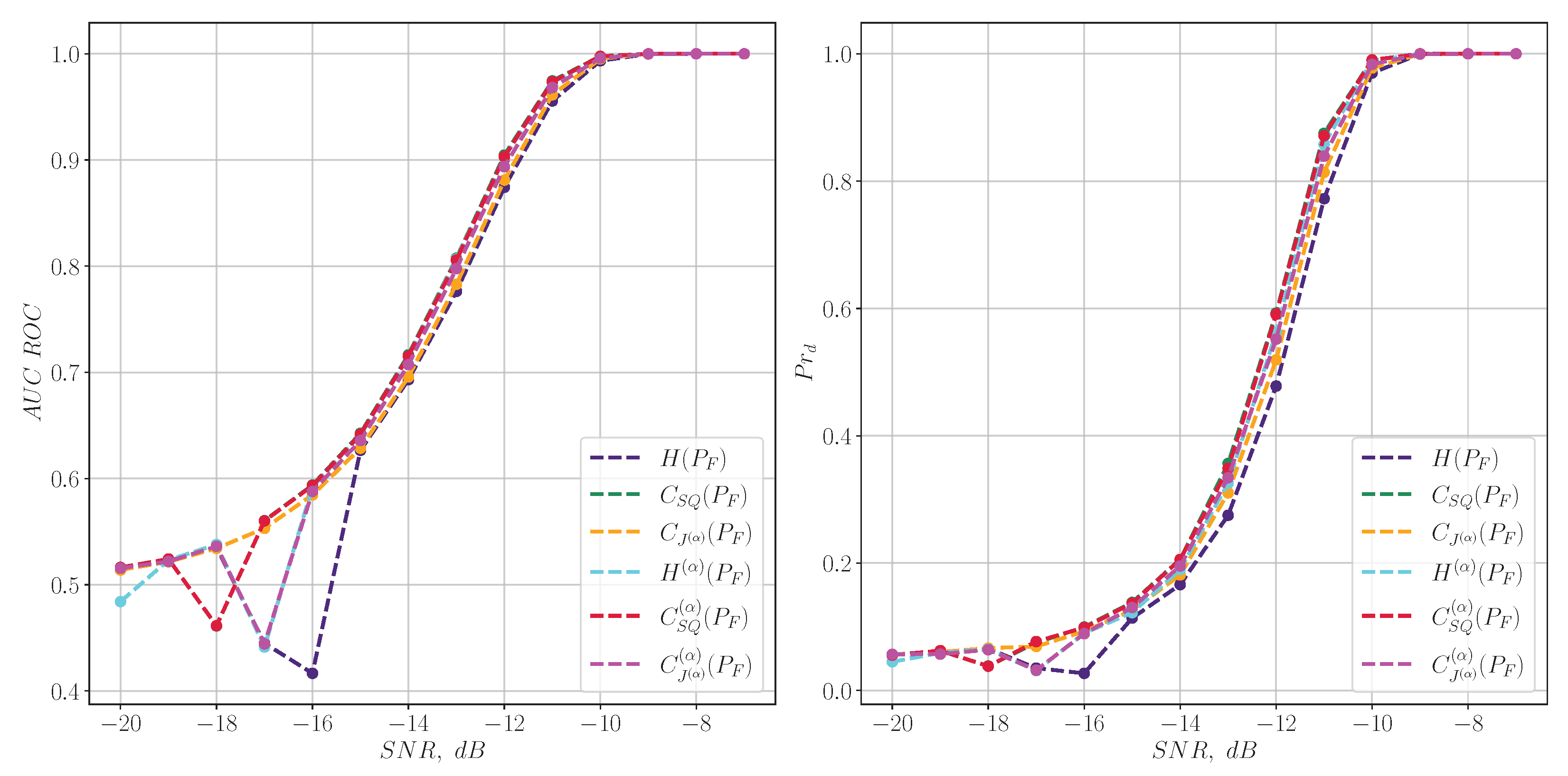

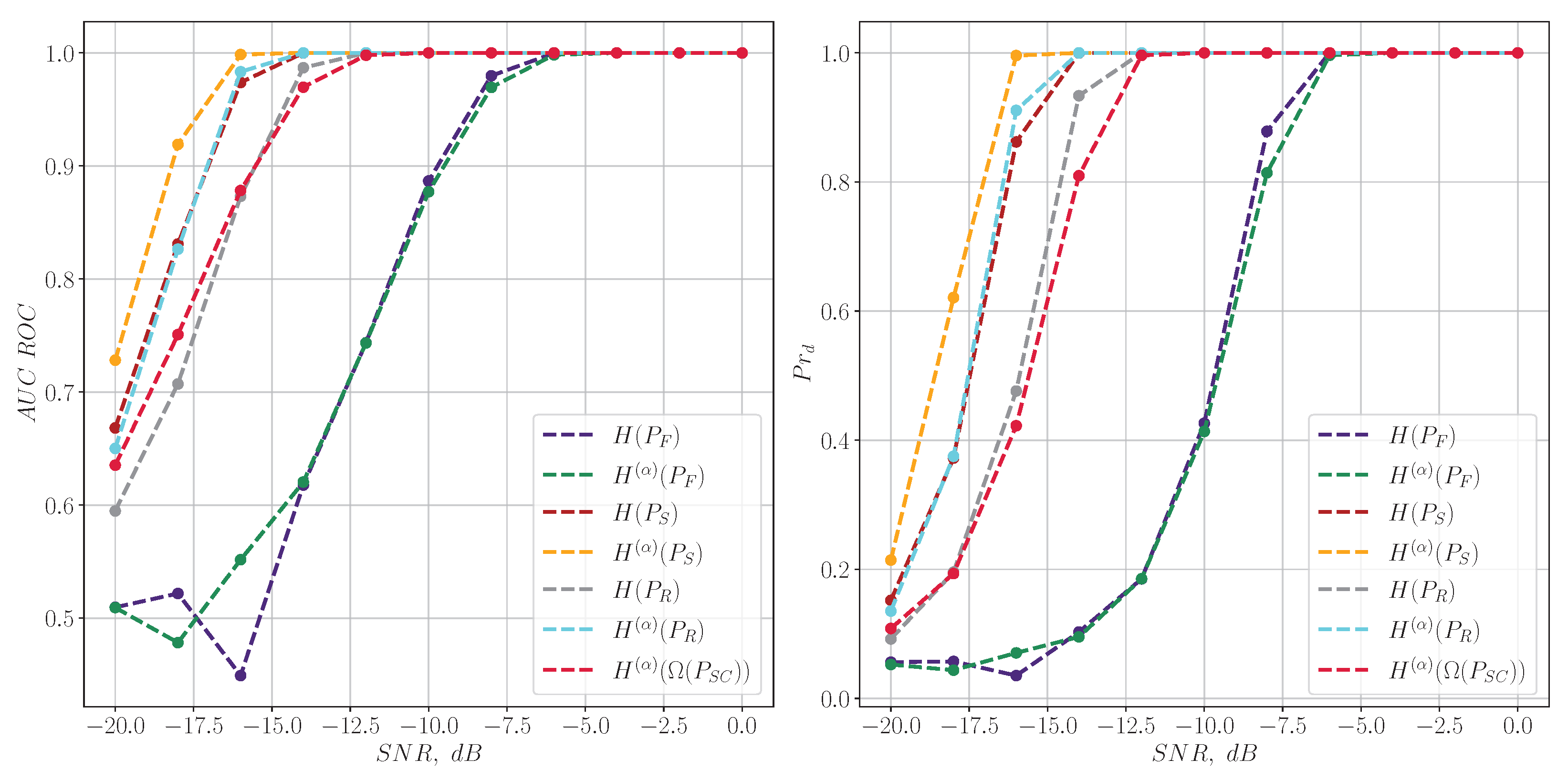

6.3. Statistical Experiments for Detecting Model Signals

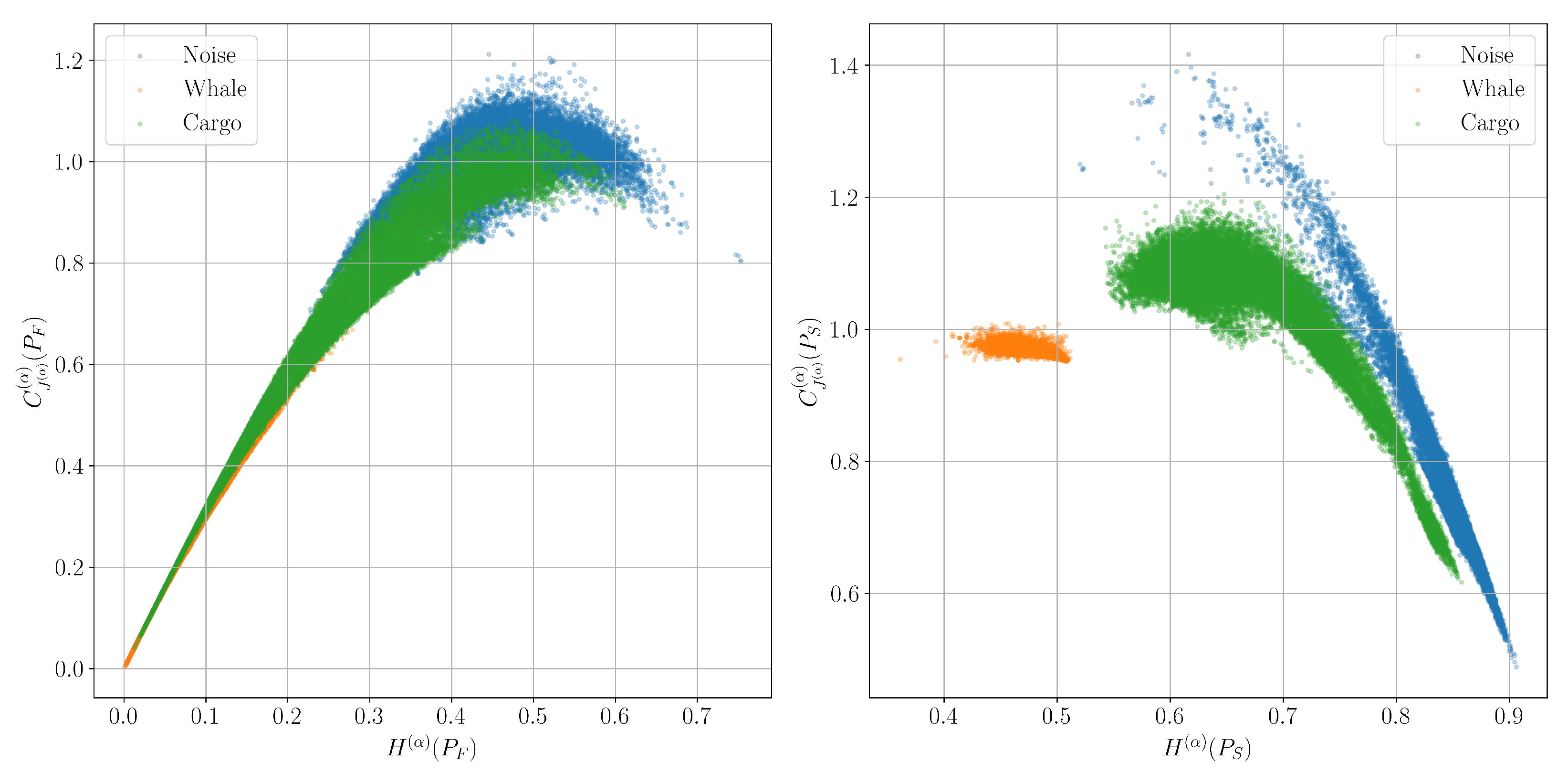

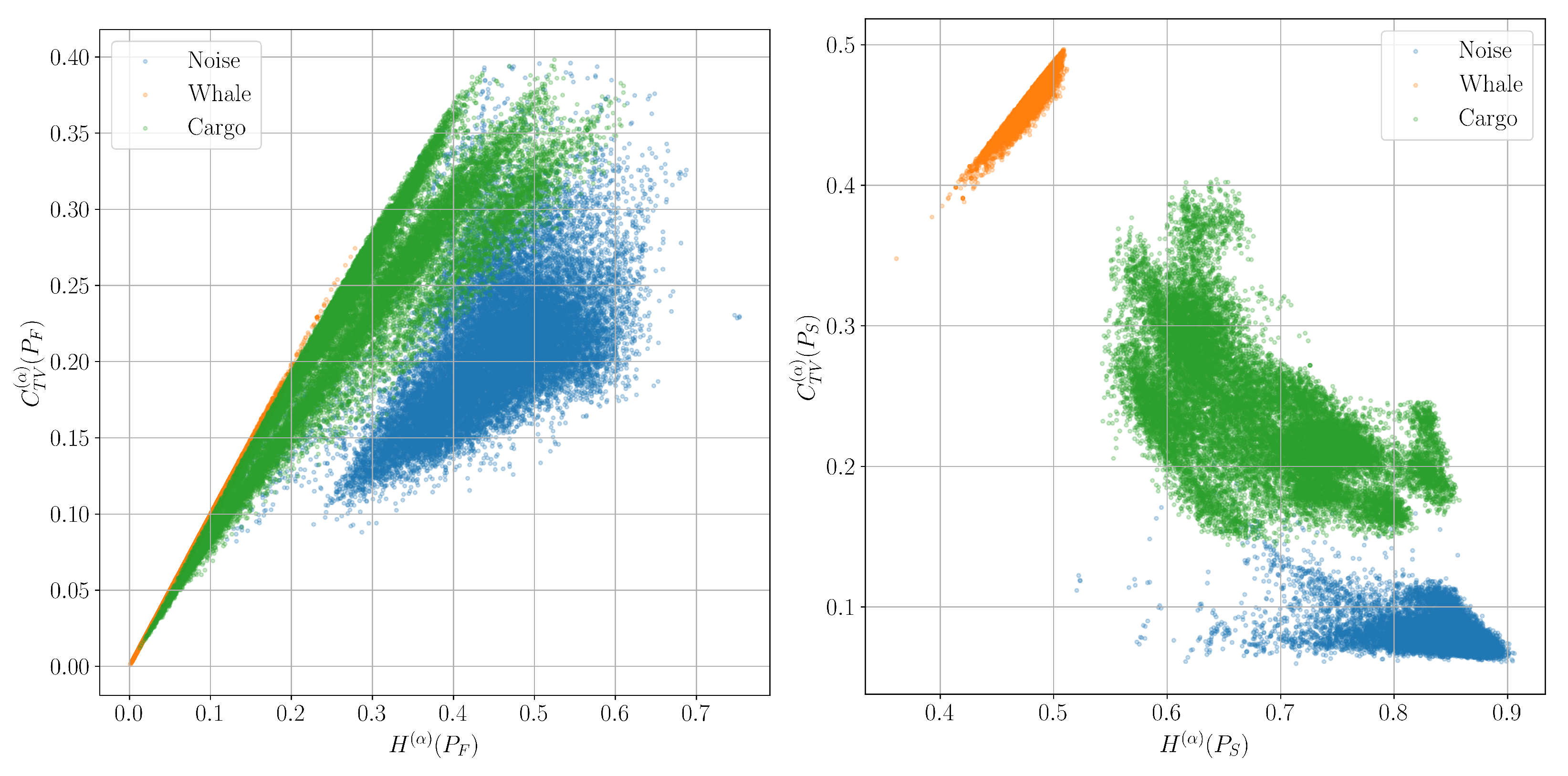

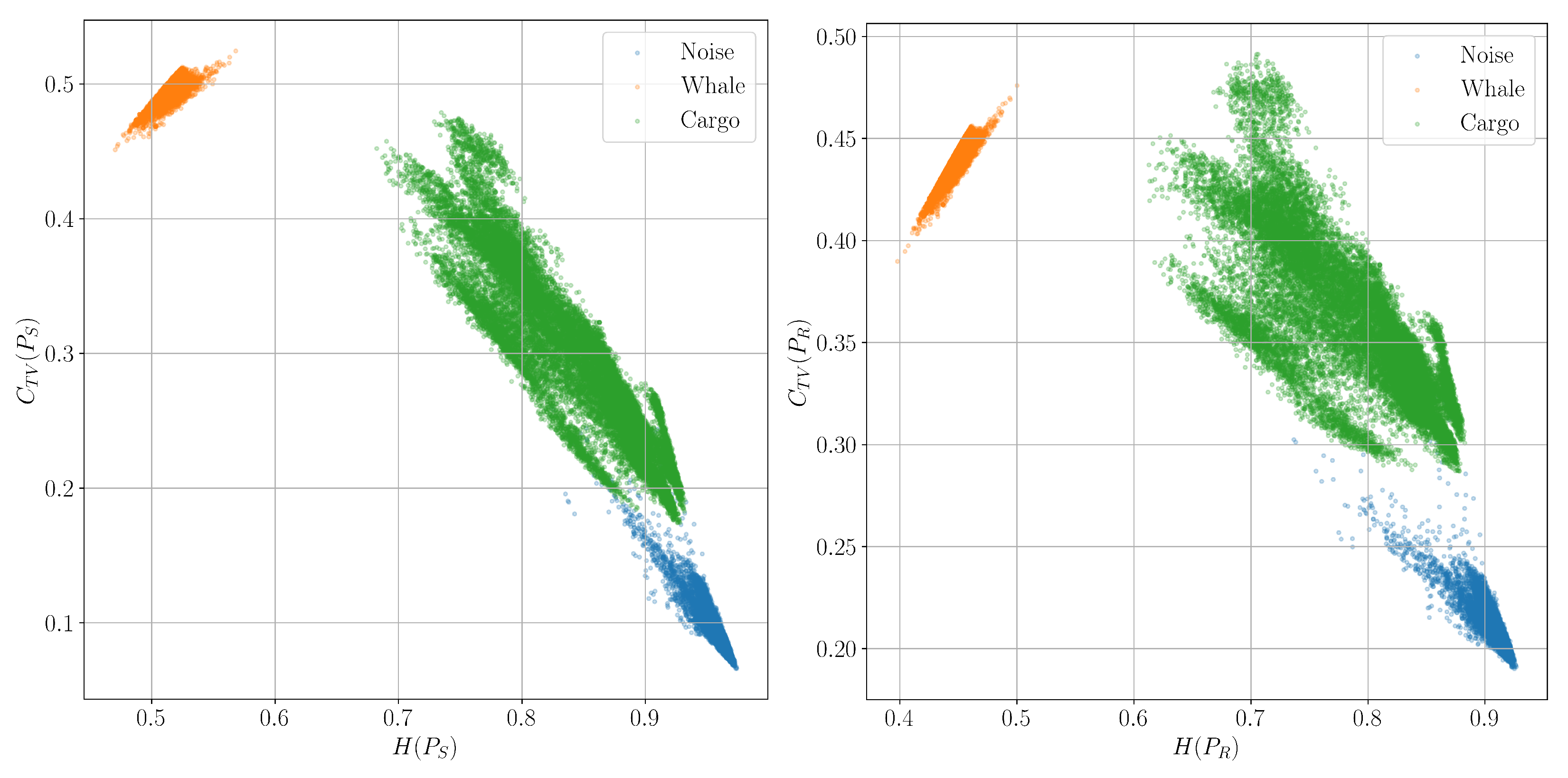

6.4. Plane for Classification of Real Signals

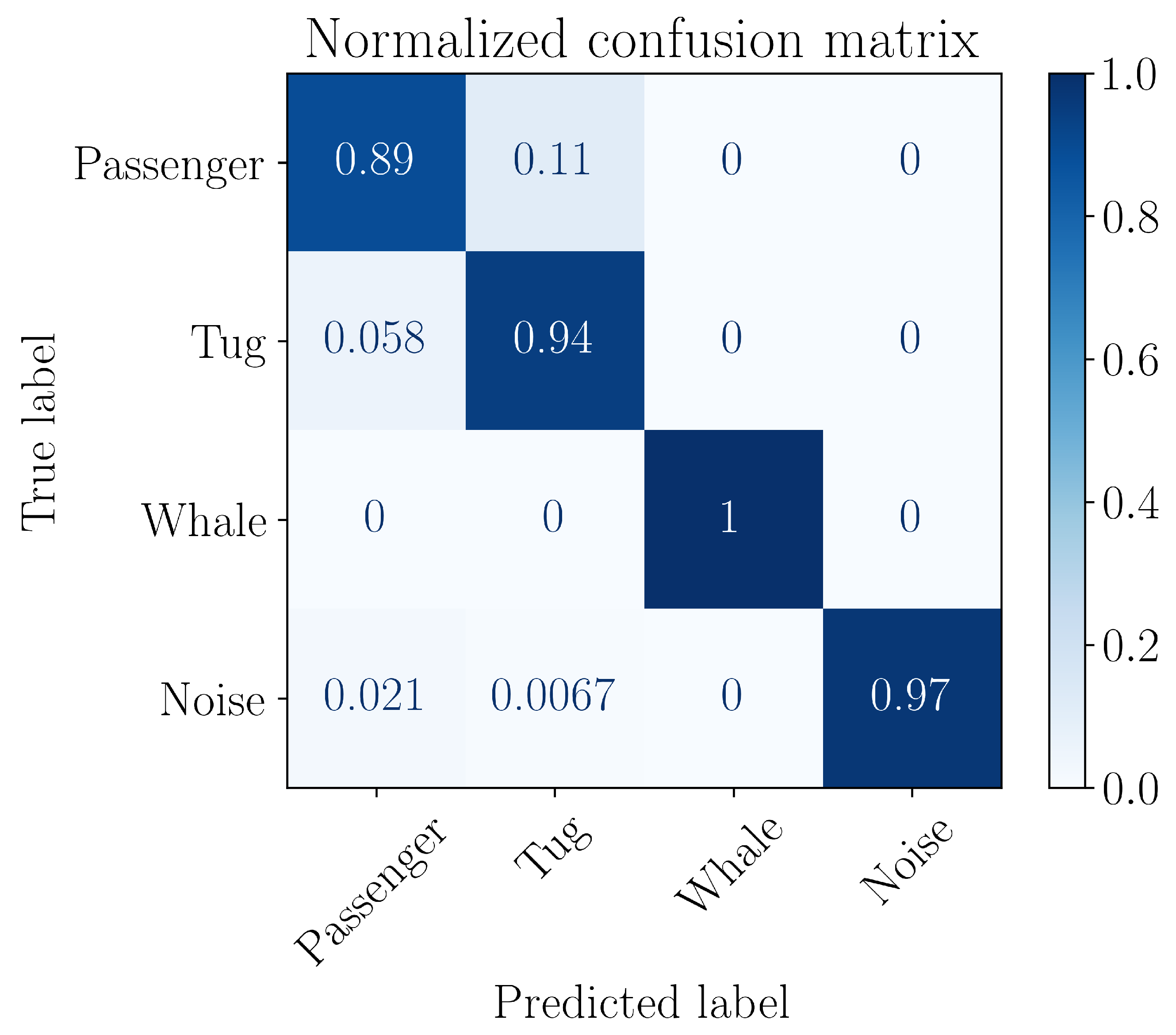

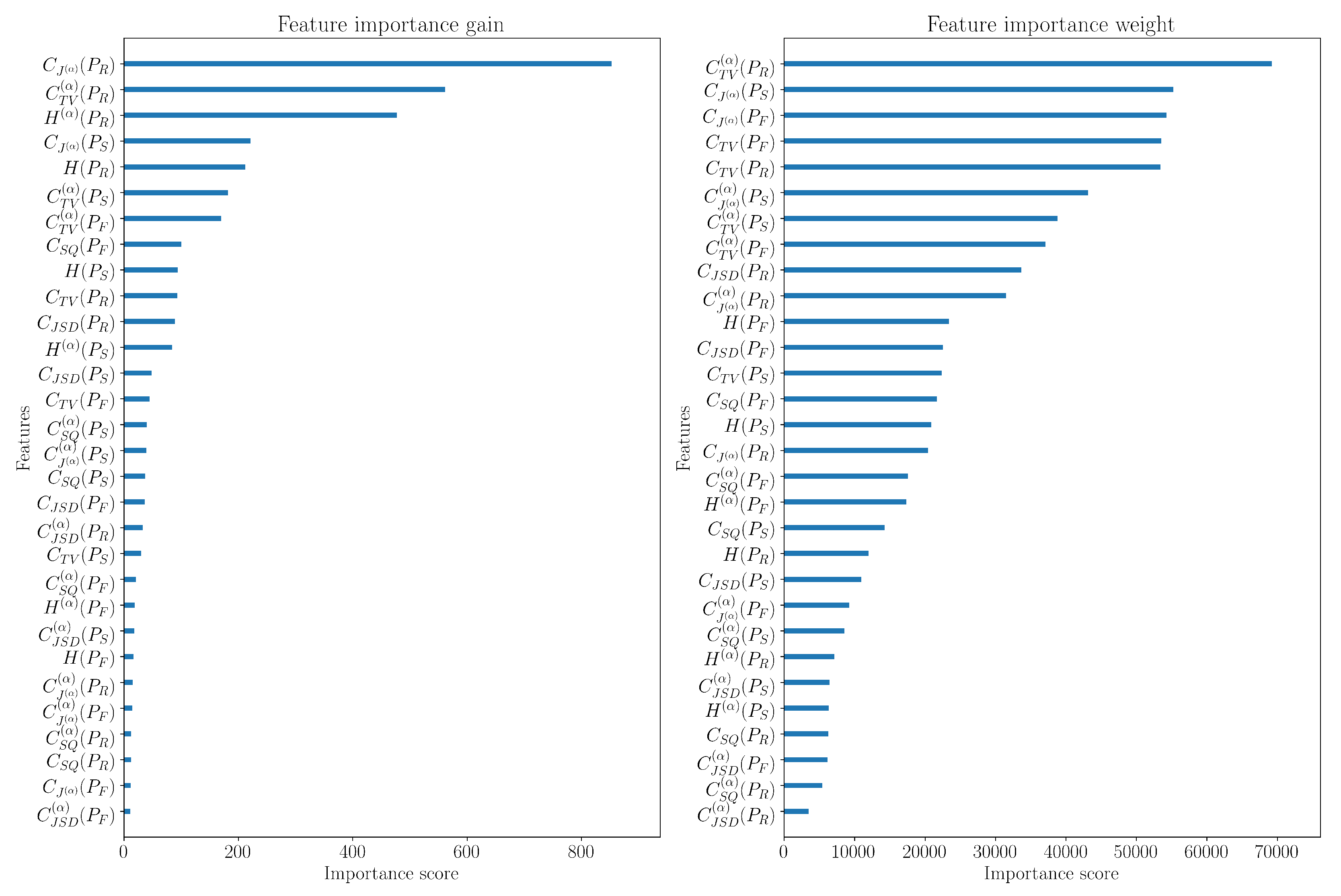

6.5. Using Entropy Features to Classify Signals with Machine Learning Methods

- natural marine background noise (Noise);

- bioacoustic signals of whales (Whale);

- hydroacoustic signals of a tugboat (Tug);

- hydroacoustic signals of a passenger ship (Passenger).

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| AUC ROC | Area Under the Receiver Operating Characteristic Curve |
| CFAR | Constant False Alarm Rate |
| ECG | Electrocardiogram |
| EEG | Electroencephalogram |
| JSD | Jensen–Shannon Divergence |
| LFM | Linear Frequency Modulation |
| SNR | Signal-to-Noise Ratio |
| STFT | Short-Time Fourier Transform |
| TFD | Time-Frequency Distribution |
| VAD | Voice Activity Detection |



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lysenko, P.; Galyaev, A.; Berlin, L.; Babikov, V. Information Complexity of Time-Frequency Distributions of Signals in Detection and Classification Problems. Entropy 2025, 27, 998. https://doi.org/10.3390/e27100998
