Abstract

The two-star random graph is the simplest exponential random graph model with nontrivial interactions between the graph edges. We propose a set of auxiliary variables that control the thermodynamic limit where the number of vertices N tends to infinity. Such ’master variables’ are usually highly desirable in treatments of ‘large N’ statistical field theory problems. For the dense regime when a finite fraction of all possible edges are filled, this construction recovers the mean-field solution of Park and Newman, but with explicit control over the corrections. We use this advantage to compute the first subleading correction to the Park–Newman result, which encodes the finite, nonextensive contribution to the free energy. For the sparse regime with a finite mean degree, we obtain a very compact derivation of the Annibale–Courtney solution, originally developed with the use of functional integrals, which is comfortably bypassed in our treatment.

1. Introduction

Many quantum and statistical systems simplify when the number of degrees of freedom becomes large, for example the number of internal degrees of freedom of fields at each spacetime point, or the size of matrices, etc. One is often talking about ’large N’ limits [1], where N parametrizes the number of degrees of freedom. A ’holy grail’ problem for such large N limits is the search for ’master variables’ or ’master fields’, such that, when the original problem is reformulated through these variables, N becomes a numerical parameter in the action (or in the statistical probability distribution), and changing N no longer affects the set of the degrees of freedom involved. As this parameter tends to infinity, the large N limit is often controlled by an explicit saddle point in terms of the master variables, which condense, as a result, to definite values.

The master variables can be of a different nature than the original variables. In the simplest case of the vector model whose fundamental variables are vectors under rotations, scalar fields constructed from these vectors condense to definite values at large N and play the role of master variables [1]. Similar ’scalar condensation’ via different applications of the Hubbard–Stratonovich transformation occurs in multi-matrix models with a large number of matrices transformed into one another by rotations [2,3,4], or in computations of random tensor eigenvalue distributions in a large number of dimensions [5,6,7]. The situation can be much more exotic, however. For example, for large random matrices of size , the resolvents, which are functions of an external spectral parameter, condense to definite values [8,9]. Thus, while the original variables are numbers, the master variables are functions. This is especially visible in the corresponding treatments of sparse random matrix ensembles [10,11,12,13,14,15]: starting with random matrices, one arrives at explicit functional integrals in terms of the functional master variables that acquire a saddle point structure at large N. Finally, in quantum gauge theories, loop variables make a prominent appearance at large N and are believed to condense to definite values [16,17,18,19]. Those are functionals on the loop space, while the original variables are fields in the physical spacetime.

Motivated by this diversity of large N limits and the master variables that control them, we would like to revisit here the two-star random graph model. This is probably the simplest statistical graph model with nontrivial edge interactions and nontrivial thermodynamics, formulated as a Gibbs-like maximal entropy ensemble with controlled average number of edges and ’2-stars’ (which one can think of as the number of paths of length 2). By contrast, if one only controls the number of edges, one ends up with the edges being filled or not filled randomly and independently, which is known as the Erdös–Rényi random graph. This model is of fundamental importance in the physics of networks [20], providing a point of departure for more sophisticated constructions, but its thermodynamics is trivial (this is for the case of distinguishable vertices; thermodynamics of unlabeled graphs with indistinguishable vertices is nontrivial even within the Gibbs ensemble that only controls the number of edges [21,22]).

We thus turn to the two-star random graph on N vertices and look for a set of master variables that control the large N limit. One generally expects that the master variables are invariants of the symmetries of the theory, and here we must keep in mind that, unlike models, the matrix models defining random graph ensembles are typically invariant under vertex permutations, but not under any form of continuous rotations, leaving a much bigger set of invariants.

In the dense regime (a finite fraction of all possible edges occupied), a mean-field solution of the two-star model was developed by Park and Newman [23]. This solution is simple, elegant and manifestly correct given the comparisons with numerics, but it does not bring under explicit control the corrections, which is where the master variables proposed here lead to improvement. In the sparse regime (a finite average degree), the model was solved using auxiliary fields by Annibale and Courtney [24]. This solution, however, goes through functional integrals at the intermediate stages. The master variables proposed here will recover it in a more compact and elementary fashion. (We mention for additional perspective the mathematical works [25,26] dealing with related topics, as well as some further relevant physics literature on the two-star model and its generalizations [27,28,29].)

Our treatment will proceed with defining the relevant master variables in Section 2, showing how they can be used to reproduce the Park–Newman mean-field solution in the dense regime in Section 3, spelling out the computation of corrections to this solution in Section 4, followed by a very compact derivation of the large N solution in the sparse case in Section 5. We will give a physical interpretation of the condensation of master variables in this solution in Section 6, and then conclude with a brief summary and discussion.

2. The Two-Star Random Graph and Its Auxiliary Field Representation

For analytic considerations, one starts by representing graphs as adjacency matrices. For each graph on N vertices, define a real symmetric zero-diagonal adjacency matrix whose entries equal 1 if there exists an edge between vertices i and j, and 0 otherwise. The probability of each graph in the two-star model is then given by

where implies summing over all possible adjacency matrices, that is, over all graph configurations. This is a typical Gibbs-like maximal entropy ensemble with two ’fugacities’ and . Introducing the vertex degrees , one can understand as twice the number of edges, while . These are the two quantities whose expectation values are controlled by their thermodynamic conjugates and . By linear redefinitions of and , one can equivalently control instead the number of edges and the number of ’two-star’ motifs (ordered triplets of distinct nodes with at least two edges connecting them), given by . While all of these definitions are physically equivalent, the conventions explicitly stated in (1) prove convenient for our subsequent analytic work.

To solve the ensemble (1) in the thermodynamic limit , one needs to evaluate the partition function Z. Direct summation over A is impossible due to the quadratic nonlinearity in H. We start with a simple Hubbard–Stratonovich transform, as in [23]:

We need to introduce one such variable for each value of , obtaining

where we expressed the exponent through the independent entries with . The summation over with then trivializes since the summand is factorized over the entries of the adjacency matrix. One thereby arrives at the following vector model in terms of :

which is often more convenient to represent as

One expects that such vector models simplify when N is large. This is what in fact happens, though the details depend crucially on the N-scalings of the thermodynamic parameters and that determine whether the graph is in the sparse or dense regime (both of which are an option at large N, defining thus different large N limits of the model).

A mean-field solution of (5) in the dense regime was originally presented in [23]. The dense regime corresponds to , at . In this regime, condense to definite values of order (in the current conventions) plus fluctuations of order 1. The idea of [23] is then to expand around this condensation point as and use the Taylor expansion for S:

The mean-field value is chosen to ensure that the term linear in is absent. As an estimate for Z of (5), one then takes times the Gaussian determinant from integrating the exponential of the quadratic terms in (6), with all the higher-order terms neglected.

All the indications are that the results of the above mean-field analysis are exact at , and they compare very well with the corresponding numerics [23]. And yet, why could the higher-order terms in (6) be neglected? Most naively, differentiating S with respect to inserts powers of , and since , this amounts to manifest negative powers of N, which is reassuring. This is, however, not yet the whole story. The number of variables grows with N, and in the standard Feynman-like expansion while evaluating (5) with (6) substituted, one will have multiple sums over indices ranging from 1 to N. This will introduce positive powers of N that will compete with the negative powers. (The ability of fluctuations in a large number of variables to compromise expansions is highlighted in a similar context in Section 8.2 of [16]). More than that, if we repeatedly differentiate S with respect to the same component of , the resulting expression has N terms, contributing further positive factors of N.

Due to all the above ingredients, the story with higher-order corrections to [23], which we briefly sketch in Appendix A, appears somewhat bewildering. While the naive evaluation within the Gaussian approximation produces the correct result at large N that can be verified numerically, the higher-order corrections come with a swarm of positive and negative powers of N, and it is difficult to give a concise argument as to how exactly they are suppressed, and why they do not upset the Gaussian estimate (though it is known empirically that they do not). While one could attempt diagrammatic accounting of the powers of N in these corrections, somewhat in the spirit of random tensor considerations [30,31], it is anything but easy.

Instead of attempting diagrammatic analysis of (5) with the expansion (6), we shall follow here a different strategy, introducing a further set of auxiliary variables, somewhat akin to those recently used for analyzing graphs with prescribed degree sequences [15,32,33]. In this way, conventional saddle points will emerge that control the large N limit of (5), where N becomes a numerical parameter in the ’action,’ and the set of integration variables is N-independent. This will have other useful applications, besides elucidating the analytics behind the mean-field solution of [23].

We first write (5) as

and then expand the logarithm as to obtain

An attractive feature of the last expression is that it can be completely factorized over at the cost of introducing one more Hubbard–Stratonovich transformation with respect to the variables that couple to . In this way, one gets

or more suggestively, since all the integrals are identical to each other,

The integral in the last line gives an effective action , in terms of which one has to deal with a saddle point problem at large N, with N playing no more of a role than providing a large saddle point parameter.

3. The Dense Regime at Leading Order

We now turn to the dense regime of [23], where and , which we write as

These scalings are necessary to ensure a nontrivial infinite N limit with a finite mean connectivity (the fraction of all possible edges that are filled).

For the first-pass treatment in this section, as in [23], we only keep the extensive part of the free energy, that is, contributions to that survive at . For that, we compute up to the order at , so that is computed correctly up to factors that stay finite at . In this way, we reproduce the solution of the model at the precision level of [23], while the subleading corrections will be discussed in the next section.

For constructing a saddle point estimate of (9) and (10), it is important to identify the N-scaling of the variables responsible for the dominant contribution. In view of the mean-field picture of [23] that has been verified by comparisons with numerics, the field condenses to values of order . Since are Hubbard–Stratonovich conjugates of , they are expected to condense at values of order N, since the sum over k consists of N identical terms of order 1. We shall see that this regime , is indeed consistent and produces a saddle point estimate of Z with controlled corrections.

Motivated by the above picture, we write . We will choose to ensure that the integral over is dominated by of order 1. Then, neglecting all terms suppressed by ,

We then choose to ensure that the terms of order that are linear in cancel out, which would guarantee that only values of of order 1 contribute to the integral:

One is then left with an elementary Gaussian integral over that yields

The corrections to this formula are of order , which will give at most multiplicative contributions of order in , and those cannot affect the extensive part of the free energy.

From the above, we write S as

where the leading terms of order N have been separated out explicitly, and is a function of implicitly determined by (13). To construct a saddle point estimate of (9), we look for stationary points of , determined at leading order in N by

With the dominant terms in S collected in (15), this yields

The terms with cancel out in view of (13), leaving the following saddle point configuration:

Note that are consistently of order N. At this saddle point, (13) becomes an explicit equation for

as in the mean-field solution of [23]. If we expand (9) around the saddle point configuration , the Gaussian integral over fluctuations is manifestly of order at large N, as we shall see more explicitly in the next section. Then, nonvanishing contributions to the free energy per vertex may only come from the saddle point exponential evaluated at , that is

From (15), (16) and (18), this is evaluated as

where we have used the evident formulas

and also equation (13) to simplify the terms of order 1. The expression is identical to the result of [23] up to a change of notation: what we call here is in [23], and what we call B is in [23].

4. The Dense Regime: Subleading Corrections

To keep the story clean, we shall take the saddle point values deduced in the previous section as an input for identifying the relevant point of expansion, and restart the derivation independently departing, once again, from the partition function (9) and (10). We will see that this results in an explicit controllable expansion that we will process in a matter-of-fact manner.

In (9) and (10) with , we introduce the following variable redefinitions motivated by the previous section:

To avoid clutter in the sums, we define the shorthand

In the previous section, we computed S up to the order , and then the free energy is computed up to the order , since S enters the expression for Z as and one has to take a logarithm of Z. In order to compute the next correction, which is the nonextensive contribution to the free energy of order , one correspondingly has to compute S up to the order . To do so, with the above notation, we rewrite (10) as

We expand the denominator in the integrand as

and, keeping in mind that , expand the integrand up to order . Importantly, terms involving in the exponent cancel out because of our choice of and , and one is left with the following Gaussian integral (where we omit odd powers of , which integrate to 0):

We will often encounter the following sums, for which we introduce explicit notation:

Evaluating the -integral in (25) then yields

Raising this expression to the power of N, so as to substitute it in (9), and making use of at large N, we arrive at

where all possible corrections to this expression are suppressed by powers of . Evaluating in terms of from (26)—the explicit formulas are tabulated in Appendix B—and also keeping in mind that and , we obtain

From this,

Importantly, the term involving in the first line of (29) cancels out in (30). This leaves in (9) a Gaussian integral over where all integration variables are only allowed to take values of order 1, leading to an explicit finite result. This reflects the suitability of our parametrization in (22) and (23). Had we chosen a different expansion point, large terms of the form would have compromised the usefulness of evaluating the Gaussian integrals at this stage, as they would induce rearrangements in the corrections.

To evaluate (9), it remains to integrate over . To do so, we employ the following Hubbard–Stratonovich transformation for the exponential of the last term in (30):

It is now straightforward, even if somewhat laborious, to evaluate the remaining Gaussian integral over where the quantities defined in (26) appear once again, and can thereafter be explicitly expressed through . The result is

We have once again used the expressions for sums of the form given in Appendix B. To undo the Hubbard–Stratonovich transformation in (31), we need to multiply the last line with and integrate over y. This yields

Finally, we need to gather all the contributions to Z of (9), consisting of the -independent part of (30), the second y-independent line of (32) and (33). This leaves for the free energy

with introduced for compactness. The first line reflects the mean-field result of [23], while the subsequent two lines give the first nontrivial correction that the novel representations developed here allowed us to derive.

As the computations leading to (34) are rather convoluted, it is important to implement some independent checks. One such check is provided by the particle–hole duality respected by the two-star model: in the original partition function (1), one can change the summation variable from the adjacency matrix A to the ’inverted’ adjacency matrix, where all filled edges are replaced by empty ones and vice versa. This manifestly relates partition functions, and hence free energies, at two different values of at any given N. As (34) is expressed through rather than , one has to deal with the corresponding transformation for that maps it to . The subleading corrections in this transformation mix the different orders of N in (34) and since the different contributions must cancel out in the end, this provides a nontrivial check of the subleading corrections in the last two lines of (34). Our formula passes this test. We provide an explicit implementation in Appendix C.

Further validation of (34) is provided by comparisons with numerical Monte Carlo sampling of the two-star model. We follow a strategy similar to what we have previously employed in [34] for more complicated related models, reviewed in Appendix D. In our comparisons between analytics and numerics, we focus on the degree variance. In terms of the free energy , the averages of the mean degree and mean degree squared are expressed as

To compute these derivatives, one also needs the derivatives of with respect to and B:

Then, the variance is

We separate out the contribution due to the extensive part of free energy, given by the first line of (34):

The contribution to the degree variance V coming from is

with . The leading order variance

matches the one derived in [23]. We are keeping the subleading order in (39) as it will combine with the higher-order corrections. The remaining nonextensive piece of the free energy (34) is

Since this expression is bulky, it is more practical to use symbolic computation software for handling its derivatives, and we rely on SymPy [35] for this purpose, followed by evaluating the resulting expressions for each set of parameters. To verify (34) numerically, we then extract point-by-point the degree variance from Monte Carlo simulations, with subtracted, and compare it with

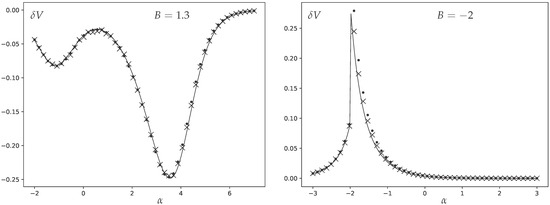

In Figure 1, we present the results of this comparison, showing excellent agreement. We opt for the moderate number of vertices as it allows us to keep the higher-order corrections to the analytics sufficiently small, but at the same time the Monte Carlo equilibration for such moderately sized graphs happens sufficiently fast to attain a very high precision. This high precision is necessary since we are measuring a small subleading contribution to the variance. (We additionally display the results of numerical simulations for .)

Figure 1.

The difference between degree variance and the leading order prediction given by (40) as a function of . The crosses represent numerical measurements from Monte Carlo sampling for graphs with vertices; the dots are the same for vertices, deviating from the analytic curve slightly stronger because of higher corrections, while the solid lines are the analytic prediction (42). Two different values are presented: on the left and on the right.

We have been dealing above with the dominant correction to the mean-field result of [23]. If one is to compute further corrections to the free energy, the process is completely algorithmic, even if it will become more and more laborious with each next order. Indeed, higher-order corrections will manifest themselves as contributions of order and smaller in the formula for on top of what is written explicitly in (27). All of these contributions are furthermore polynomial in . Then, when writing in (9), these corrections will give contributions suppressed by or more in the exponent, all of which can be re-expanded as additive contributions polynomial in . Thus, one will end up with the same Gaussian integrals as in our treatment above, except that they will have extra polynomial insertions in terms of suppressed by powers of N. All such Gaussian integrals with polynomial insertions can be evaluated using the standard formulas, leaving behind an explicit series in powers of that will correct our result in (34).

5. The Sparse Regime

We now turn to the sparse regime,

In this case, the J-sum in (10) works very differently from the dense case considered above, since turns into powers of , introducing stronger and stronger suppression in contributions from higher J. This leads to considerable simplifications.

To identify the relevant scalings of in terms of powers of N explicitly, consider the expansion of given by (10), similar to what has been employed in the previous sections, but with the new large-N scalings (43) of and :

From this, we see that it is self-consistent to assume that . Indeed, if that is so, the leading contribution to from scales as . When raised to the power N to obtain in (9), this will yield , since . Thus, for , the contribution of in (9) is completely negligible at large N, and one is left with an empty Gaussian integral of , so that the fluctuations of are of order 1 as assumed. For , gets a contribution of order 1 that should be integrated together with the Gaussian factor in (9). This integral will just produce a factor of order 1 in the partition function, and hence a nonextensive contribution to the free energy that does not alter the expectation values of the vertex degrees and their squares at large N, so we can ignore it. The fluctuations of are again of order 1 as assumed. The only contribution where the structure is different, and it defines the end result, is that of . Indeed, assuming that is of order 1 is inconsistent, since that would have yielded of the form , and the Gaussian factor would not have been able to keep the fluctuations at order 1 as assumed. The consistent assumption is that is of order , since in that case, is of order 1, and hence is of the form , while the Gaussian factor is of the same order, so that the two factors balance each other self-consistently producing an explicit saddle point, as we shall see immediately.

From the above estimates, for the leading order analysis, S will only depend on at large N, and to incorporate the relevant scaling of , we write . Then,

The first integral has an explicit saddle point structure at large N, and the saddle point is at satisfying

The free energy per vertex f satisfies

where X is the solution of (45), and we have only kept the contributions nonvanishing at large N. It is convenient to represent as a series

From (45),

The mean degree is

The last term vanishes by the saddle point equation, giving

At the same time,

Again, due to the saddle point equation,

Expressing X from (50) and substituting it into (48), multiplied by X, and into (52), we obtain

This is identical to the Annibale–Courtney solution of [24], but now derived using only elementary integrals, and in a few lines rather than a few pages. At any given c and , (53) has to be solved as a nonlinear equation for , whereupon is computed directly from (54).

6. The Sparse Regime: Condensation of the Master Variable

As we have seen in the previous section, the solution of the two-star model in the sparse regime is determined by a saddle point structure in terms of . The Park–Newman variables , on the other hand, do not condense to definite values, since there is a saddle point structure in the integral defining Z in (44), but not in the integral defining S. Likewise, the vertex degrees do not condense, since both mean degree and degree fluctuations are of order 1.

To develop a physical intuition for the condensation of , it is natural to turn to its Hubbard–Stratonovich conjugate . We can compute the expectation value of this observable by inserting it into the partition function (4):

This expression can be processed in two different ways. First, we can introduce the variables, pushing the structure toward (9) and (10), and then apply the saddle point analysis of Section 5. The only difference due to the new factor of is that we should multiply the integrand on the right-hand side of (8) with , since with this extra factor, integrating out precisely reproduces . In this way,

where we have used and the fact that x condenses to the definite value X determined by (45), with fluctuations suppressed by in view of the saddle point considerations of the previous section. Alternatively, we can take (55) and return to the original adjacency matrix variables defining the two-star model. We thus undo the step leading from (3) to (4) to obtain

where is the original two-star Hamiltonian (1) and are the vertex degrees. Note that all vertices are equivalent so we can write as simply . From (56) and (57), we then obtain

In other words, the condensation value of the master variable that controls the sparse regime of the two-star model is proportional to the expectation value of a specific exponential function of the vertex degree in terms of the original random graph variables.

7. Conclusions

We have revisited solutions of the two-star random graph model in the thermodynamic limit, in both dense and sparse regimes. Previous approaches relied on the variables dating back to [23], which are Hubbard–Stratonovich conjugates of the vertex degrees. We pointed out that considerable empowerment of the formalism results from introducing further variables as in (8). These variables can be thought of as Hubbard–Stratonovich conjugates of . The usage of such exponentials of the fields is ubiquitous in conformal field theories [36]—see [37] for a recent discussion—but to the best of our knowledge they have not appeared previously in studies of random graphs.

In the dense regime, it is known that the variables condense themselves to definite values at large N. It is predictable that all variables condense to definite values as well, with small fluctuations. Our representation provides for effective control over these fluctuations, with a straightforward bookkeeping of the factors, which is challenging in the language of . We thus manage to compute the nonextensive part of the free energy in the dense regime. In the sparse regime, the situation is more peculiar: the variables do not condense—the representation for S in (44) does not have a large N saddle point structure—but the variables do, leading to a simple saddle point calculation of the free energy. This calculation is phrased entirely in terms of elementary one-dimensional integrals. The condensation value of the x-variable determining the large N behavior in the sparse regime can be expressed in terms of the original graph geometry as the expectation value of a certain exponential of the vertex degree given by (58).

We schematically summarize the interplay between our work and the previous treatments in [23,24] in Table 1. (We stress that this table only shows the aspects of [23,24] relevant for the considerations here, while those papers additionally consider many other important questions, in particular, in relation to the phenomenology of phase transitions in two-star graphs.)

Table 1.

Comparison with the previously explored approaches:

Besides elucidating the analytic structure of the two-star model, we hope that the techniques presented here will usefully transfer to further related settings. A number of interesting extensions of the two-star model can be seen in the literature [38,39,40,41,42], and they provide an attractive avenue for exploration in this regard.

Author Contributions

Conceptualization, O.E.; Methodology, O.E.; Formal analysis, P.A.-p. and O.E.; Investigation, P.A.-p. and O.E.; Data curation, P.A.-p.; Writing—original draft, P.A.-p. and O.E.; Writing—review & editing, O.E. All authors have read and agreed to the published version of the manuscript.

Funding

O.E. is supported by the C2F program at Chulalongkorn University and by NSRF via grant number B41G680029.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

We thank Sergei Nechaev for valuable discussions on closely related topics.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Naive Expansion Around the Park–Newman Solution

To illustrate the power of our formalism, we would like to briefly contrast it with naive expansion around the Park–Newman solution [23]. In our notation, the representation for the partition function used for obtaining that solution is given by (5), which we reproduce here for convenience:

To derive the mean-field solution, one assumes that the dominant configuration is site-independent, , where satisfies the same equation we derived in Section 3:

One then expands as and writes

Equation (A2) ensures that there are no terms linear in in this expansion. Then, the exponential of all of these terms starting with the cubic one should be expanded in a Taylor series, so that a Gaussian integral over emerges from (A1) with an infinite series of polynomial insertions.

To get a sense of how this expansion works, we write out the general formulas for the derivative tensors

Notice the Kronecker symbols involving only the two indices k, l and one external index , so that the derivative tensors vanish unless there are at most two distinct values present among the indices . The first few orders evaluated at the saddle point are

One can in principle build the standard Feynman perturbation theory from this expansion, with the inverse of the matrix used as the propagator, and the higher-order terms in the -expansion used as vertices. However, the expressions for the diagrams will involve summations over indices taking values from 1 to N, contributing positive powers of N that will compete with the negative powers seen in the denominators of the derivative formulas above. Furthermore, the powers of N, both in the propagator and the vertices, depend on the number of coincident indices. This leads to complicated accounting of the powers of N. By contrast, with the variables and the representation (9) and (10) used in the main text, N is a purely numerical parameter in the action, and the powers of N are recovered straightforwardly—essentially, by means of Taylor expansions.

Appendix B. Some Useful Formulas

While the expressions below can be straightforwardly derived from (26), they are used extensively in our manipulations and we summarize them for the convenience of the reader:

Appendix C. Particle–Hole Duality

The partition function of the two-star model is defined as

We want to change the summation variable as , which precisely corresponds to flipping the state of all the edges (filled to empty, empty to filled). Then,

This means that under this transformation, in the dense regime (substitute ), the free energy changes as

Since we express our results as functions of defined by (22), rather than as functions of and B, it is handy to correspondingly recast the transformation as . To do so, we first express through as

enact the transformation , and then write the equation defining through :

This equation can be solved in terms of an expansion as

With this transformation, we write

This relation is respected by our result (34) up to order , providing a validation of our derivations.

Appendix D. Monte Carlo Sampling

We sample graphs from the two-star ensemble using a particular version of Metropolis Monte Carlo algorithm that we have previously employed in [34] for related models. We summarize it below. General discussion can be found in [43].

We start with an adjacency matrix of an Erdös–Rényi graph generated by randomly connecting any two vertices with probability . Then, for each Monte Carlo step, we propose an update move which flips the current edge (filled to empty, empty to filled) for a randomly picked pair of vertices . The change in the adjacency matrix can be formally written as

This move is then accepted with probability as per the usual Metropolis algorithm.

Computing the full Hamiltonian takes a number of operations of order , which can be time-consuming when one has to take a large number of samples from a big system. One can optimize the numerical process by computing only the change of the Hamiltonian from each proposed update move. For this, one needs to compute the change in the sum of degrees , and in . From (A15), the change in degree of node m is

It follows that

The change in the Hamiltonian is thus

This allows one to compute the acceptance probability with a number of operations of order 1.

We compute the mean degree and the mean degree squared by averaging over Monte Carlo sample points, which are taken at intervals of elementary Monte Carlo steps with the update rule described above. The long interval between the samples ensures that a significant part of the graph is updated by the stochastic evolution before each next sample is taken.

References

- Moshe, M.; Zinn-Justin, J. Quantum field theory in the large N limit: A review. Phys. Rep. 2003, 385, 69–228. [Google Scholar] [CrossRef]

- Hotta, T.; Nishimura, J.; Tsuchiya, A. Dynamical aspects of large N reduced models. Nucl. Phys. B 1999, 545, 543–575. [Google Scholar] [CrossRef]

- Mandal, G.; Mahato, M.; Morita, T. Phases of one dimensional large N gauge theory in a 1/D expansion. J. High Energy Phys. 2010, 2010, 34. [Google Scholar] [CrossRef]

- Evnin, O. Randomized Wilson loops, reduced models and the large D expansion. Nucl. Phys. B 2011, 853, 461–474. [Google Scholar] [CrossRef]

- Sasakura, N. Real eigenvector distributions of random tensors with backgrounds and random deviations. Prog. Theor. Exp. Phys. 2023, 2023, 123A01. [Google Scholar] [CrossRef]

- Delporte, N.; Sasakura, N. The edge of random tensor eigenvalues with deviation. J. High Energy Phys. 2025, 2025, 71. [Google Scholar] [CrossRef]

- Majumder, S.; Sasakura, N. Three cases of complex eigenvalue/vector distributions of symmetric order-three random tensors. Prog. Theor. Exp. Phys. 2024, 2024, 093A01. [Google Scholar] [CrossRef]

- Staudacher, M. Combinatorial solution of the two-matrix model. Phys. Lett. B 1993, 305, 332–338. [Google Scholar] [CrossRef]

- Eynard, B. Master loop equations, free energy and correlations for the chain of matrices. J. High Energy Phys. 2003, 2003, 18. [Google Scholar] [CrossRef][Green Version]

- Rodgers, G.J.; Bray, A.J. Density of states of a sparse random matrix. Phys. Rev. B 1988, 37, 3557. [Google Scholar] [CrossRef]

- Bray, A.J.; Rodgers, G.J. Diffusion in a sparsely connected space: A model for glassy relaxation. Phys. Rev. B 1988, 38, 11461. [Google Scholar] [CrossRef]

- Fyodorov, Y.V.; Mirlin, A.D. On the density of states of sparse random matrices. J. Phys. A 1991, 24, 2219. [Google Scholar] [CrossRef]

- Mirlin, A.D.; Fyodorov, Y.V. Universality of level correlation function of sparse random matrices. J. Phys. A 1991, 24, 2273. [Google Scholar] [CrossRef]

- Akara-pipattana, P.; Evnin, O. Random matrices with row constraints and eigenvalue distributions of graph Laplacians. J. Phys. A 2023, 56, 295001. [Google Scholar] [CrossRef]

- Akara-pipattana, P.; Evnin, O. Statistical field theory of random graphs with prescribed degrees. J. Stat. Mech. 2025, 2025, 053402. [Google Scholar] [CrossRef]

- Polyakov, A.M. Gauge Fields and Strings; Routledge: London, UK, 1987. [Google Scholar] [CrossRef]

- Das, S.R. Some aspects of large N theories. Rev. Mod. Phys. 1987, 59, 235. [Google Scholar] [CrossRef]

- Makeenko, Y. Methods of Contemporary Gauge Theory; Cambridge University Press: Cambridge, UK, 2002. [Google Scholar] [CrossRef]

- Shen, H.; Smith, S.A.; Zhu, R. A new derivation of the finite N master loop equation for lattice Yang-Mills. Electr. J. Prob. 2023, 29, 1–18. [Google Scholar] [CrossRef]

- Newman, M. Networks: An Introduction; Oxford University Press: Oxford, UK, 2018. [Google Scholar] [CrossRef]

- Paton, J.; Hartle, H.; Stepanyants, H.; Hoorn, P.v.; Krioukov, D. Entropy of labeled versus unlabeled networks. Phys. Rev. E 2022, 106, 054308. [Google Scholar] [CrossRef] [PubMed]

- Evnin, O.; Krioukov, D. Ensemble inequivalence and phase transitions in unlabeled networks. Phys. Rev. Lett. 2025, 134, 207401. [Google Scholar] [CrossRef]

- Park, J.; Newman, M.E.J. Solution of the two-star model of a network. Phys. Rev. E 2004, 70, 066146. [Google Scholar] [CrossRef] [PubMed][Green Version]

- Annibale, A.; Courtney, O.T. The two-star model: Exact solution in the sparse regime and condensation transition. J. Phys. A 2015, 48, 365001. [Google Scholar] [CrossRef]

- Radin, C.; Yin, M. Phase transitions in exponential random graphs. Ann. App. Prob. 2013, 23, 2458–2471. [Google Scholar] [CrossRef]

- Mukherjee, S.; Xu, Y. Statistics of the two-star ERGM. Bernoulli 2023, 29, 24–51. [Google Scholar] [CrossRef]

- Bizhani, G.; Paczuski, M.; Grassberger, P. Discontinuous percolation transitions in epidemic processes, surface depinning in random media, and Hamiltonian random graphs. Phys. Rev. E 2012, 86, 011128. [Google Scholar] [CrossRef]

- Gorsky, A.; Valba, O. Finite-size effects in exponential random graphs. J. Compl. Net. 2020, 8, cnaa008. [Google Scholar] [CrossRef]

- Biondini, G.; Moro, A.; Prinari, B.; Senkevich, O. p-star models, mean field random networks and the heat hierarchy. Phys. Rev. E 2022, 105, 014306. [Google Scholar] [CrossRef] [PubMed]

- Gurau, R.; Ryan, J.P. Colored tensor models—A review. SIGMA 2012, 8, 20. [Google Scholar] [CrossRef]

- Gurău, R. Random Tensors; Oxford University Press: Oxford, UK, 2017. [Google Scholar] [CrossRef]

- Kawamoto, T. Entropy of microcanonical finite-graph ensembles. J. Phys. Compl. 2023, 4, 035005. [Google Scholar] [CrossRef]

- Evnin, O.; Horinouchi, W. A Gaussian integral that counts regular graphs. J. Math. Phys. 2024, 65, 093301. [Google Scholar] [CrossRef]

- Akara-pipattana, P.; Chotibut, T.; Evnin, O. The birth of geometry in exponential random graphs. J. Phys. A 2021, 54, 425001. [Google Scholar] [CrossRef]

- Meurer, A.; Smith, C.P.; Paprocki, M.; Čertík, O.; Kirpichev, S.B.; Rocklin, M.; Kumar, A.M.; Ivanov, S.; Moore, J.K.; Singh, S.; et al. SymPy: Symbolic computing in Python. PeerJ Comput. Sci. 2017, 3, e103. [Google Scholar] [CrossRef]

- Francesco, P.; Mathieu, P.; Sénéchal, D. Conformal Field Theory; Section 6; Springer: Berlin/Heidelberg, Germany, 1997. [Google Scholar] [CrossRef]

- Benedetti, D.; Lauria, E.; Mazáč, D.; van Vliet, P. One-dimensional Ising model with 1/r1.99 interaction. Phys. Rev. Lett. 2025, 134, 201602. [Google Scholar] [CrossRef] [PubMed]

- Snijders, T.A.B.; Pattison, P.E.; Robins, G.L.; Handcock, M.S. New specifications for exponential random graph models. Socio. Meth. 2006, 36, 99. [Google Scholar] [CrossRef]

- Avetisov, V.; Gorsky, A.; Maslov, S.; Nechaev, S.; Valba, O. Phase transitions in social networks inspired by the Schelling model. Phys. Rev. E 2018, 98, 032308. [Google Scholar] [CrossRef]

- Bolfe, M.; Metz, F.L.; Guzmán-González, E.; Castillo, I.P. Analytic solution of the two-star model with correlated degrees. Phys. Rev. E 2021, 104, 014147. [Google Scholar] [CrossRef]

- Catanzaro, A.; Patil, S.; Garlaschelli, D. A general solution for network models with pairwise edge coupling. arXiv 2025, arXiv:2503.20805. [Google Scholar] [CrossRef]

- Marzi, M.; Giuffrida, F.; Garlaschelli, D.; Squartini, T. Reproducing the first and second moment of empirical degree distributions. arXiv 2025, arXiv:2505.10373. [Google Scholar] [CrossRef]

- Newman, M.E.J.; Barkema, G.T. Monte Carlo Methods in Statistical Physics; Oxford University Press: Oxford, UK, 1999. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).