1. Introduction

Bitcoin is widely considered the first decentralized digital currency [1], relying on a consensus mechanism and distributed architecture to ensure transaction transparency and stability. While its anonymity [2] protects user privacy, it also enables illicit activities such as money laundering and extortion [3,4,5,6]. These activities not only pose financial risks but also complicate regulatory oversight, making anomaly detection essential for identifying fraud by uncovering hidden illegal transaction patterns.

Recent advances in machine learning [7] and neural networks [8] have significantly improved detection techniques. Bitcoin transaction data, represented as a dynamic graph, evolves over time through correlated subgraphs, motivating research into dynamic graph anomaly detection (DGAD) [9]. In static graph scenarios, graph neural networks (GNNs) [10] have been effective for node classification and edge prediction. In dynamic settings, the integration of graph convolutional networks (GCNs) with temporal feature modeling [11,12,13] has enhanced feature extraction for Bitcoin transaction graphs. Notably, EvolveGCN [14] adapts GCN parameters dynamically through recurrent neural networks (RNNs) [15], capturing evolving graph structures and node attributes. However, EvolveGCN’s unidirectional temporal modeling limits its ability to capture long-range temporal dependencies in transaction data.

Moreover, Bitcoin transaction networks suffer from severe class imbalance, as abnormal transactions are rare compared to normal transactions. This imbalance leads models to favor the majority class, reducing detection accuracy for anomalies. Current solutions, such as GraphSMOTE [16], address this issue by generating synthetic samples based on node connectivity; however, they struggle to detect subtle anomalies whose spatial distributions overlap with those of normal nodes.

To overcome these challenges, we propose the Bidirectional EvolveGCN with Class-Balanced Learning Network (Balanced-BiEGCN) for Bitcoin transaction anomaly detection. The model introduces two key innovations: (1) Bi-EvolveGCN, a bidirectional temporal feature fusion mechanism that enhances the capture of long-range dependencies in dynamic graphs, and (2) a Classifier based on Sample Class Transformation (CSCT), which balances class distributions by converting normal samples into difficult-to-distinguish abnormal samples. The generation of these samples is guided by two novel loss functions: (a) the Adjacency Distance Adaptive Loss function, which optimizes the adjacency relationships of generated anomalies to mimic those of real abnormal nodes, and (b) the Symmetric Space Adjustment Loss function, which adjusts the spatial distribution of hard-to-detect anomalies to improve detection performance.

In summary, the contributions of this paper are as follows:

- We design Balanced-BiEGCN, a novel framework that integrates Bi-EvolveGCN for bidirectional temporal feature extraction with CSCT, which addresses class imbalance through synthetic anomaly sample generation.

- We propose the Adjacency Distance Adaptive Loss function to align the topological properties of synthetic anomalies with those of real anomalous nodes, thereby enhancing anomaly detection.

- We develop the Symmetric Space Adjustment Loss function to improve the detection of subtle anomalies by refining their spatial distribution.

2. Related Work

Bitcoin, owing to its decentralized and cryptographically secured design, has become an innovative medium for financial transactions. However, its anonymity and immutability also enable fraud and illegal transactions, making Bitcoin transaction anomaly detection a crucial area of research in fintech. This task involves analyzing blockchain records to extract graph structural features, node attributes, and temporal dynamics in order to detect anomalous transactions or malicious entities [17].

Current detection methods can be broadly classified into two categories: traditional methods based on feature engineering [18] and methods leveraging deep learning [19,20]. Traditional approaches rely on manually designed features such as transaction frequency, amount, and node connectivity, combined with classical machine learning classifiers such as logistic regression and decision trees. In contrast, deep learning-based methods, particularly Graph Neural Networks (GNNs) and self-supervised learning frameworks, automatically learn latent feature representations from transaction networks, significantly improving detection accuracy and robustness [21,22,23].

Despite these advances, significant challenges remain, including the scarcity of labeled anomalies and the increasing concealment of malicious activities. Dynamic graph anomaly detection (DGAD), which focuses on identifying anomalies in evolving graph structures, introduces further complexities, including dynamic topology modeling, temporal dependency capture, and robustness to noise. In the Bitcoin transaction context, the dynamic nature of transaction relationships requires temporal graph models for accurate representation. Notable approaches include DyGCN [24], which updates GCN parameters dynamically using LSTM [25] and mutual information maximization to capture global structures, enabling the identification of abnormal address-node connections in the transaction network. PI-GNN [26] addresses catastrophic forgetting by freezing stable subgraph parameters and expanding new ones to capture emerging abnormal behaviors. STRIPE [27] decouples transaction features into steady-state topologies (e.g., transaction communities) and dynamic attributes (e.g., single transaction amounts), using memory networks and mutual attention mechanisms to detect anomalies.

Further advances in spatiotemporal joint modeling and efficiency optimization include DGL-LS [28], which uses multi-scale convolutional kernels and attention gates to capture both short-term and long-term temporal dependencies, thereby identifying large transfers and abnormal trends. Dy-SIGN [29] employs spiking neural networks and implicit differentiation, representing transactions as binary spikes and reducing memory consumption to half that of traditional methods.

Existing research in Bitcoin transaction anomaly detection has made notable progress, especially with deep learning methods such as Graph Neural Networks (GNNs) and self-supervised learning, which have improved detection accuracy. However, challenges remain in modeling the dynamic and temporal aspects of transaction networks, as well as in handling class imbalance and the scarcity of labeled anomaly samples. While models such as DyGCN and PI-GNN have made strides in addressing dynamic challenges, capturing long-term temporal dependencies and evolving anomalous behaviors remains an open problem. This paper aims to bridge these gaps by proposing a model that integrates bidirectional temporal feature fusion with class-imbalance handling, improving the detection of subtle and emerging anomalies. The proposed approach strengthens long-term temporal dependency modeling and provides a robust framework for dynamic and complex transaction behaviors.

3. Method

This paper proposes a Bidirectional EvolveGCN with a Class-Balanced Learning Network (Balanced-BiEGCN) to address anomaly detection in dynamic Bitcoin transaction graphs. The method consists of two stages: node feature extraction and classification. First, in the feature extraction stage, as shown in Figure 1a, the Bi-EvolveGCN module learns node representations by aggregating information from both forward and backward temporal subgraphs; technical details are provided in Section 3.1. Second, in the classification stage, as shown in Figure 1b, the CSCT balances the class distribution by synthesizing hard-to-distinguish anomalous samples from normal ones; technical details are given in Section 3.2. Furthermore, Section 3.3 details the loss functions of Balanced-BiEGCN. The adjacency distance adaptive loss and the symmetric space adjustment loss are the core constraint mechanisms, providing critical guidance during the synthesis of a new anomalous node class.

To model the dynamic nature of Bitcoin transaction graphs, Balanced-BiEGCN decomposes the input data into a sequence of $T$ temporal subgraphs, each representing a specific time window. Formally, the snapshot at time point $t$ is denoted as $G_t = (V_t, A_t, X_t)$, where $V_t = \{v_1, \ldots, v_N\}$ is the node set ($N$ is the number of nodes); $A_t \in \{0,1\}^{N \times N}$ is the adjacency matrix, with $A_t[i,j] = 1$ if an edge exists between nodes $v_i$ and $v_j$, and 0 otherwise; and $X_t \in \mathbb{R}^{N \times d}$ contains the $d$-dimensional feature vectors of the nodes (e.g., transaction amount, timestamp, etc.).
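As a concrete illustration of this decomposition, the minimal Python sketch below groups a timestamped edge list into per-time-step snapshots, each holding an ordered node list, a dense 0/1 adjacency matrix, and one feature row per node. The function `build_snapshots` and its input layout (edges as `(src, dst, t)` tuples, features keyed by `(node, t)`) are hypothetical and not taken from the paper; treating edges as undirected is also an assumption.

```python
from collections import defaultdict


def build_snapshots(edges, features):
    """Group timestamped edges into per-time-step snapshots G_t = (V_t, A_t, X_t).

    edges:    iterable of (src, dst, t) tuples (hypothetical input layout).
    features: dict mapping (node, t) -> list of floats.
    Returns a dict t -> (nodes, adjacency, feature_rows).
    """
    by_time = defaultdict(list)
    for src, dst, t in edges:
        by_time[t].append((src, dst))

    snapshots = {}
    for t in sorted(by_time):
        # Node set V_t: every node that appears in an edge at time t, in a fixed order.
        nodes = sorted({n for edge in by_time[t] for n in edge})
        index = {n: i for i, n in enumerate(nodes)}
        size = len(nodes)
        # Dense adjacency A_t: 1 if an edge exists between nodes i and j, else 0.
        adj = [[0] * size for _ in range(size)]
        for src, dst in by_time[t]:
            i, j = index[src], index[dst]
            adj[i][j] = 1
            adj[j][i] = 1  # assumption: transactions treated as undirected links
        # Feature matrix X_t: one row per node, in the same order as `nodes`.
        rows = [features[(v, t)] for v in nodes]
        snapshots[t] = (nodes, adj, rows)
    return snapshots


edges = [("a", "b", 1), ("b", "c", 1), ("a", "c", 2)]
feats = {("a", 1): [1.0], ("b", 1): [2.0], ("c", 1): [3.0], ("a", 2): [4.0], ("c", 2): [5.0]}
snaps = build_snapshots(edges, feats)
nodes, adj, x = snaps[1]
print(nodes, adj)  # ['a', 'b', 'c'] [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
```

A dense matrix keeps the sketch readable; for a graph the size of Elliptic, a sparse representation would be the practical choice.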

3.1. Bi-EvolveGCN

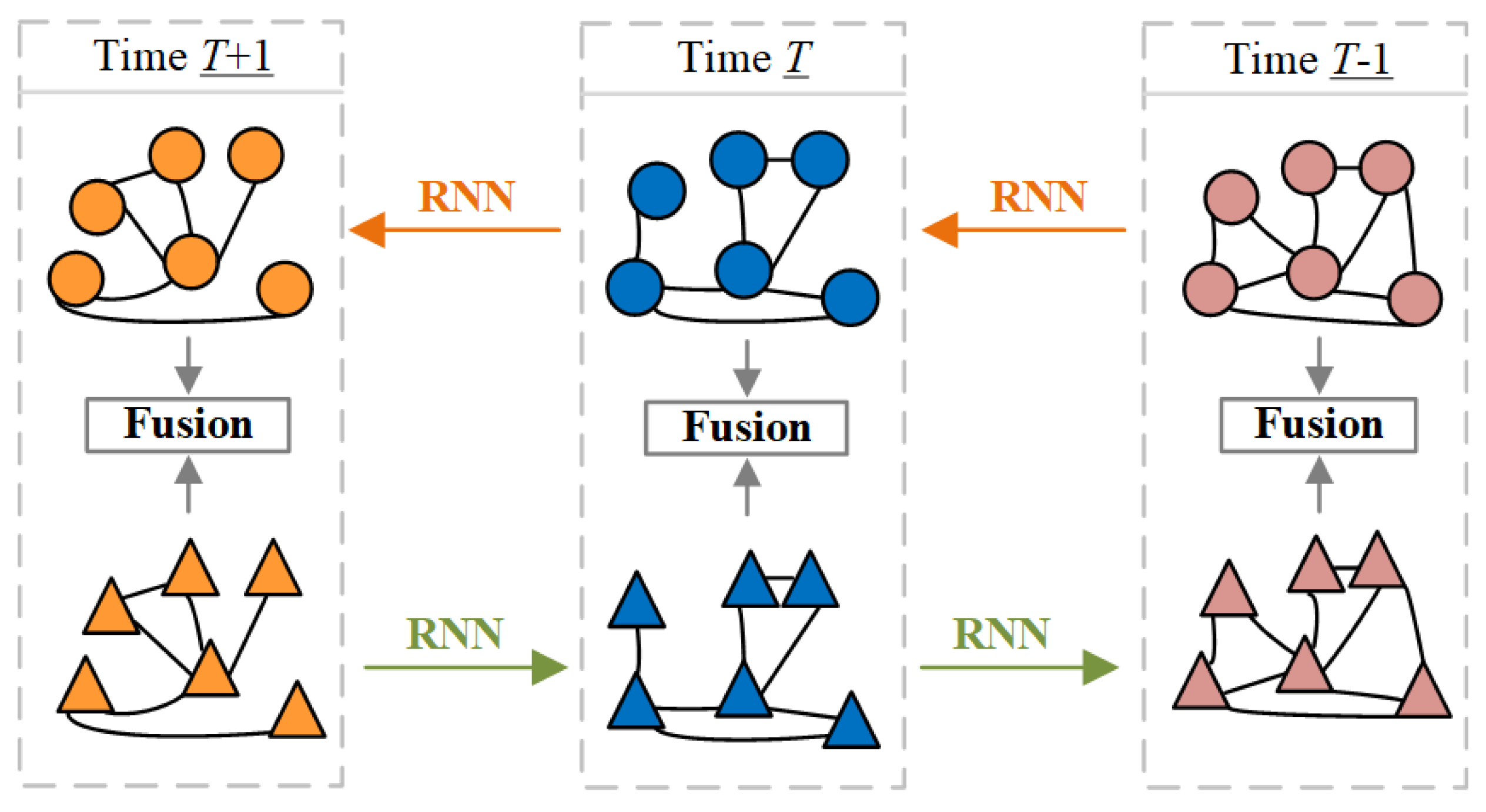

In the feature extraction stage, Balanced-BiEGCN employs a bidirectional temporal feature fusion extractor (Bi-EvolveGCN) built on EvolveGCN. As briefly shown in Figure 2, at three consecutive time points $t-1$, $t$, and $t+1$, Bi-EvolveGCN embeds forward and backward temporal subgraphs into the feature graph (to distinguish the two directions, the subgraph nodes are drawn as circles and triangles, respectively). This design borrows the bidirectional temporal modeling mechanism of Bi-LSTM [30] and is particularly suitable for long-range dependency analysis in dynamic graphs. Specifically, taking time point $t$ as an example, the Bi-EvolveGCN in Figure 1a is composed of two EvolveGCNs, one running forward and one running backward in time. The RNN-based temporal update network of EvolveGCN has two versions, -O and -H, which use LSTM and GRU [31] as their core units, respectively. LSTM is well suited to scenarios with sparse node features but significant dynamic changes in graph structure, which gives the -O version an advantage in Bitcoin transaction anomaly detection. In addition, because EvolveGCN-O evolves only the GCN weights over time, its update is comparatively lightweight, which may help flag suspicious transactions within the narrow window before they are confirmed on the blockchain, whereas attention-based models with heavier computational overhead risk missing this window. Therefore, this paper selects the -O version as the foundation for constructing Bi-EvolveGCN.

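Bi-EvolveGCN employs a bidirectional recurrent update strategy to model the temporal features of the dynamic graph, and each EvolveGCN consists of two consecutive GCN layers. First, we introduce the forward and backward learnable weights $\overrightarrow{W}_t^{(l)}$ and $\overleftarrow{W}_t^{(l)}$ of the GCN at the $l$-th layer. The forward weight is updated as follows:

$$\overrightarrow{W}_t^{(l)} = \mathrm{LSTM}\left(\overrightarrow{W}_{t-1}^{(l)}\right)$$

This forward weight update applies an LSTM (a special variant of the RNN) to capture forward temporal dependencies: the weight at time $t-1$ is fed into the LSTM, which learns the long-term temporal relationships of node features. Similarly, the backward weight is updated from the next time step, $\overleftarrow{W}_t^{(l)} = \mathrm{LSTM}\left(\overleftarrow{W}_{t+1}^{(l)}\right)$. Next, the forward node features are updated based on $\overrightarrow{W}_t^{(l)}$. Taking the forward node feature output by the $l$-th GCN layer as an example, the update formula is:

$$\overrightarrow{H}_t^{(l)} = \sigma\left(A_t \overrightarrow{H}_t^{(l-1)} \overrightarrow{W}_t^{(l)} + b^{(l)}\right)$$

where $\sigma$ denotes the ReLU activation function [32], which introduces nonlinearity to enhance the expressive power of the model; $A_t$ is the adjacency matrix of the subgraph at time $t$; $\overrightarrow{H}_t^{(l-1)}$ is the forward node feature of layer $l-1$ (for $l = 1$, $\overrightarrow{H}_t^{(0)} = X_t$, the input node features); and $b^{(l)}$ is the intercept (bias). The final forward node feature output is $\overrightarrow{H}_t^{(2)}$; similarly, $\overleftarrow{H}_t^{(2)}$ is the final backward node feature output. Finally, the two are merged to obtain the final node feature:

$$H_t = \mathrm{Concat}\left(\overrightarrow{H}_t^{(2)}, \overleftarrow{H}_t^{(2)}\right)$$

which concatenates $\overrightarrow{H}_t^{(2)}$ and $\overleftarrow{H}_t^{(2)}$ along the feature dimension. This process fuses bidirectional temporal information into the node features, supporting the mining of long-range temporal relationships in subsequent tasks.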
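The forward/backward flow can be sketched in plain Python. This is a structural illustration only: `evolve` is a hypothetical placeholder (a fixed elementwise tanh recurrence) standing in for the learned LSTM of EvolveGCN-O, the adjacency matrix is used unnormalized as in the text, and all names are illustrative rather than the authors' implementation. It shows weights being carried forward through time in one direction and backward in the other, two stacked GCN layers per snapshot, and concatenation of the two outputs along the feature dimension.

```python
import math


def matmul(a, b):
    """Multiply two dense matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def gcn_layer(adj, h, w, bias):
    """One GCN layer: ReLU(A_t H W + b), with the bias added to every node row."""
    z = matmul(matmul(adj, h), w)
    return [[max(0.0, v + bias[j]) for j, v in enumerate(row)] for row in z]


def evolve(w):
    """Placeholder for W_t = LSTM(W_prev); NOT a real LSTM, just a fixed recurrence."""
    return [[math.tanh(0.9 * v + 0.1) for v in row] for row in w]


def run_direction(snapshots, init_weights, biases, order):
    """Evolve per-layer weights along `order` and apply the stacked GCN layers per step."""
    weights = init_weights
    outputs = {}
    for t in order:
        adj, x = snapshots[t]
        # Weights at t are derived from those of the previous step in `order`.
        weights = [evolve(w) for w in weights]
        h = x  # H^(0) = X_t
        for w, b in zip(weights, biases):
            h = gcn_layer(adj, h, w, b)
        outputs[t] = h
    return outputs


def bi_evolve_gcn(snapshots, w_fwd, w_bwd, b_fwd, b_bwd):
    """Concatenate forward-in-time and backward-in-time node features per snapshot."""
    times = sorted(snapshots)
    fwd = run_direction(snapshots, w_fwd, b_fwd, times)
    bwd = run_direction(snapshots, w_bwd, b_bwd, times[::-1])
    return {t: [f + g for f, g in zip(fwd[t], bwd[t])] for t in times}


# Usage: two snapshots with 2 nodes, 2 input features and two 2x2 GCN layers per direction.
snaps = {
    1: ([[0, 1], [1, 0]], [[1.0, 0.0], [0.0, 1.0]]),
    2: ([[1, 0], [0, 1]], [[0.5, 0.5], [1.0, 0.0]]),
}
w = [[[0.1, 0.2], [0.3, 0.4]], [[0.5, 0.0], [0.0, 0.5]]]
b = [[0.0, 0.0], [0.0, 0.0]]
fused = bi_evolve_gcn(snaps, w, w, b, b)
print(len(fused[1][0]))  # 4: forward and backward features of width 2 each
```

Even with identical initial weights, the two directions produce different features at a given time step, because the backward pass has evolved its weights through the later snapshots first.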

3.2. Classifier Based on Sample Class Transformation

The CSCT method uses existing abnormal nodes as references and generates new abnormal nodes from a subset of normal nodes, guided by both adjacency relationships and spatial distribution. As shown in Figure 1a, each temporal subgraph deploys a CSCT to perform node classification. Taking an arbitrary temporal subgraph in Figure 1b as an example, the node features input to CSCT are denoted as $H \in \mathbb{R}^{N \times A}$, where $N$ is the total number of nodes in the graph and $A$ is the dimension of a single node feature.

First, a proportion $r$ of the normal nodes is randomly selected, giving features $H_{\mathcal{I}}$ with index set $\mathcal{I}$, as the basis for conversion into new abnormal nodes. Next, two operations are applied to $H_{\mathcal{I}}$ in parallel: neighbor node embedding over the node adjacency matrix yields $H_{\mathcal{I}}^{a}$, and noise addition yields $H_{\mathcal{I}}^{n} = H_{\mathcal{I}} + \epsilon$, where $\epsilon \sim \mathcal{N}(\mu, \sigma^2)$ is a random matrix drawn from a normal distribution with the same dimensions as the features, and the mean $\mu$ and variance $\sigma^2$ jointly determine the specific distribution the noise follows. This noise addition constructs a symmetric feature space that is close to the normal node features and hard to distinguish from them, providing a spatial distribution guide for generating the novel abnormal nodes. Subsequently, $H_{\mathcal{I}}^{a}$ is transformed by a fully connected (FC) layer followed by a rectified linear unit (ReLU) layer, and the results are written back into the original node feature set $H$ at the positions indexed by $\mathcal{I}$:

$$H' = \mathrm{Replace}\left(H, \mathrm{ReLU}\left(\mathrm{FC}\left(H_{\mathcal{I}}^{a}\right)\right), \mathcal{I}\right)$$

where $\mathrm{Replace}(\cdot)$ replaces, in order, the features of the nodes in $H$ whose IDs belong to $\mathcal{I}$ with the corresponding transformed features. Finally, a multi-layer perceptron (MLP) [33] consisting of three FC layers and two ReLU layers performs classification on the replaced node feature set $H'$:

$$\hat{Y} = \mathrm{MLP}(H')$$

where $\mathrm{MLP}(\cdot)$ denotes the multi-layer perceptron and $\hat{Y}$ denotes the predicted node classes.
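The sample class transformation steps can be sketched as follows. Several details are assumptions not fixed by the text: neighbor embedding is taken to be the mean of adjacent nodes' features, label 0 marks normal nodes and converted nodes are relabeled 1, and `sigma` is passed to `random.gauss` as a standard deviation (so the variance is `sigma ** 2`). All function names are hypothetical; in a real model the FC weights would be learned, and the noisy rows would serve as the spatial guide for the symmetric space adjustment loss rather than being written back into the feature set.

```python
import random


def select_normal_nodes(labels, ratio, rng):
    """Randomly choose a fraction `ratio` of normal nodes (label 0) to convert."""
    normal = [i for i, y in enumerate(labels) if y == 0]
    k = min(len(normal), max(1, round(ratio * len(normal)))) if normal else 0
    return sorted(rng.sample(normal, k))


def neighbor_embed(adj, h, idx):
    """Assumed neighbor embedding: mean feature vector of each selected node's neighbours."""
    out = []
    for i in idx:
        nbrs = [j for j, a in enumerate(adj[i]) if a]
        if not nbrs:
            out.append(list(h[i]))  # isolated node: keep its own features
            continue
        out.append([sum(h[j][k] for j in nbrs) / len(nbrs) for k in range(len(h[i]))])
    return out


def add_noise(rows, mu, sigma, rng):
    """Add elementwise Gaussian noise with mean mu and standard deviation sigma."""
    return [[v + rng.gauss(mu, sigma) for v in row] for row in rows]


def fc_relu(rows, w, bias):
    """Fully connected layer (rows @ w + bias) followed by ReLU."""
    return [[max(0.0, sum(r[k] * w[k][j] for k in range(len(r))) + bias[j])
             for j in range(len(bias))] for r in rows]


def replace_rows(h, idx, new_rows):
    """Copy h and overwrite the rows listed in idx, in order, with new_rows."""
    out = [list(r) for r in h]
    for i, r in zip(idx, new_rows):
        out[i] = list(r)
    return out


def relabel(labels, idx):
    """Assumption: converted nodes are treated as abnormal (label 1) in the binary loss."""
    out = list(labels)
    for i in idx:
        out[i] = 1
    return out


# Usage on a 4-node path graph.
rng = random.Random(0)
labels = [0, 0, 0, 1]
adj = [[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]]
h = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 2.0]]
idx = select_normal_nodes(labels, 0.5, rng)
emb = neighbor_embed(adj, h, idx)
guide = add_noise([h[i] for i in idx], 0.0, 0.1, rng)     # spatial guide for the loss
new = fc_relu(emb, [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])  # identity FC for illustration
h_replaced = replace_rows(h, idx, new)
labels_new = relabel(labels, idx)
```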

3.3. Loss Functions

To ensure that the newly generated abnormal nodes resemble real abnormal nodes, CSCT employs the adjacency distance adaptive loss function and the symmetric space adjustment loss function, which constrain the generated abnormal nodes to move toward the real ones. In addition, the standard binary cross-entropy loss function [34] serves as the basic optimization objective for the classification task.

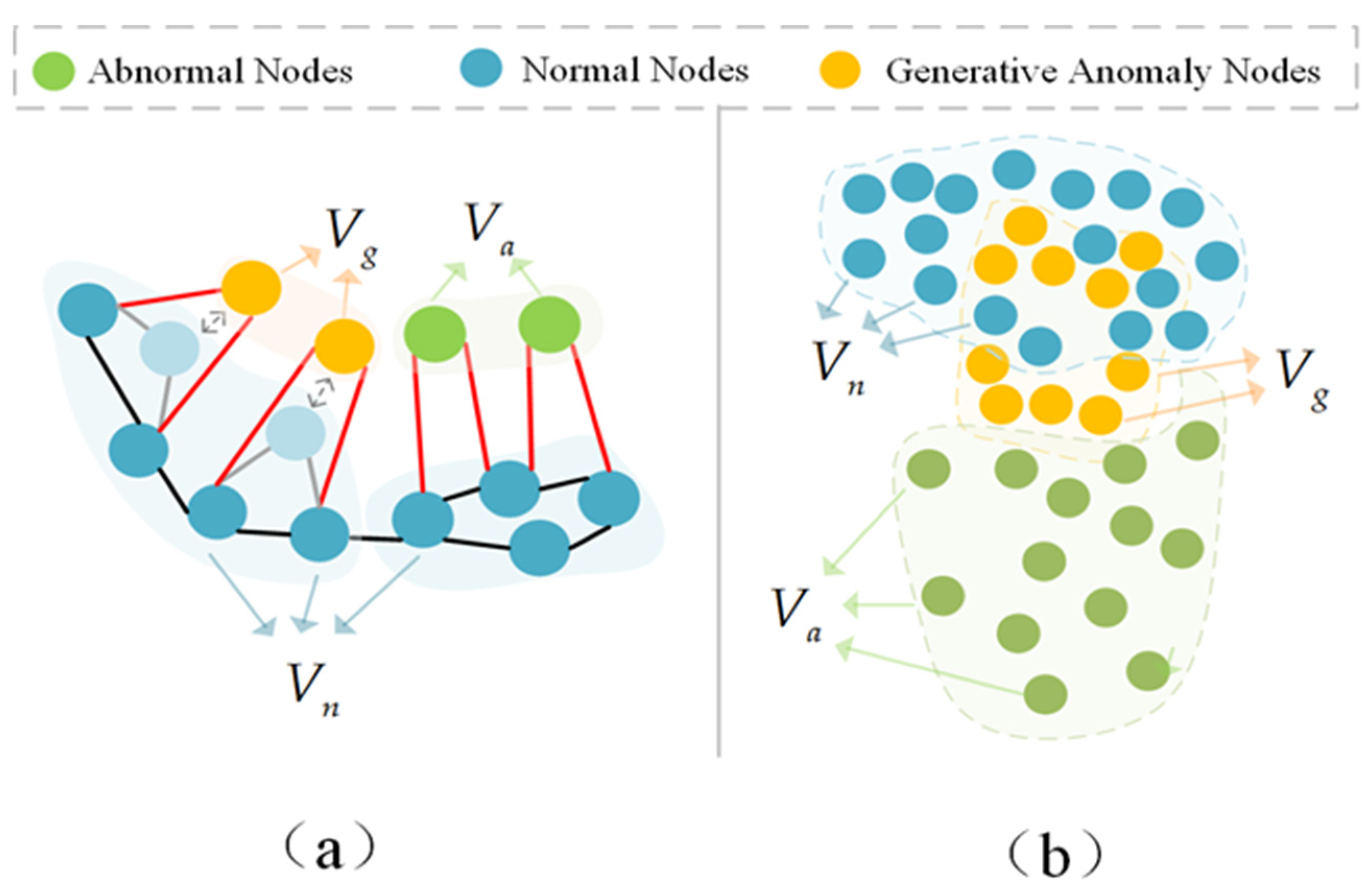

Specifically, Figure 3 shows the design principles of the adjacency distance adaptive loss function and the symmetric space adjustment loss function, where $C_n$ is the normal node class, $C_a$ is the abnormal node class, and $C_g$ is the generated abnormal node class. The adjacency distance adaptive loss function is illustrated in Figure 3a, where one class is closely associated with its adjacent nodes while the other is relatively loosely connected. It aims to generate the abnormal node class $C_g$ by adjusting the adjacency distance of some normal nodes so that their adjacency relationships become closer to those of $C_a$. The symmetric space adjustment loss function is illustrated in Figure 3b. The difficult-to-distinguish abnormal nodes are distributed closer to $C_n$ than to $C_a$ as a whole, and the loss aims to guide $C_g$ toward these hard abnormal nodes in terms of spatial distribution.

3.3.1. Adjacency Distance Adaptive Loss Function

To map the adjacency relationships of existing abnormal nodes onto the generation of novel abnormal nodes, CSCT uses an adjacency distance adaptive loss function that guides the aggregation degree between each newly generated abnormal node and its neighbors toward that of real abnormal nodes.

In the implementation, the feature matrix $H$ of the initial node set is first normalized. For each pair of nodes $i$ and $j$ connected by an edge, their similarity $S_{ij}$ is computed as the inner product of their normalized feature vectors, forming the similarity matrix $S$. Based on $S$, the aggregation degree $c_i$ of each node over its adjacency relationships is then computed, with $\deg(i)$ denoting the number of adjacent nodes of node $i$ counted from the graph adjacency matrix. The higher the value of $c_i$, the closer the association between node $i$ and its neighboring nodes.
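A minimal sketch of the similarity and aggregation-degree computation, under stated assumptions: normalization is taken to be per-node L2 normalization, and the aggregation degree is assumed to be the sum of a node's edge similarities divided by its number of adjacent nodes (the text only states that the neighbor count enters the formula). Function names are hypothetical.

```python
import math


def l2_normalize(rows):
    """Scale each feature vector to unit L2 norm (zero vectors are left as-is)."""
    out = []
    for r in rows:
        norm = math.sqrt(sum(v * v for v in r))
        out.append([v / norm for v in r] if norm > 0 else list(r))
    return out


def edge_similarity(adj, h):
    """S[i][j] = <h_i, h_j> on normalized features when i and j are adjacent, else 0."""
    hn = l2_normalize(h)
    n = len(hn)
    return [[sum(a * b for a, b in zip(hn[i], hn[j])) if adj[i][j] else 0.0
             for j in range(n)] for i in range(n)]


def aggregation_degree(adj, s):
    """Assumed form: mean similarity to adjacent nodes, sum_j S_ij / deg(i)."""
    out = []
    for i, row in enumerate(adj):
        deg = sum(1 for a in row if a)
        # Non-adjacent entries of S are 0, so summing the whole row sums over neighbours.
        out.append(sum(s[i]) / deg if deg else 0.0)
    return out


adj = [[0, 1, 1], [1, 0, 0], [1, 0, 0]]
h = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
print(aggregation_degree(adj, edge_similarity(adj, h)))  # [0.5, 1.0, 0.0]
```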

The adjacency distance adaptive loss $\mathcal{L}_{ada}$ is then obtained by comparing the mean aggregation degree of two node groups: the real abnormal node class, averaged over its $n_a$ nodes identified by their indices in the overall node set, and the generated abnormal node class, averaged analogously over its $n_g$ nodes. $\mathcal{L}_{ada}$ aims to reduce the difference in aggregation degree between the original and the newly generated abnormal node classes.
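Given aggregation degrees for every node, the loss can be sketched as the gap between the two class means. Whether the paper uses an absolute or a squared difference is not recoverable here; this sketch assumes the absolute difference, and `real_idx`/`gen_idx` are hypothetical names for the indices of real and generated abnormal nodes in the overall node set.

```python
def mean_over(values, idx):
    """Average of values[i] for i in idx (0.0 for an empty index list)."""
    return sum(values[i] for i in idx) / len(idx) if idx else 0.0


def adjacency_distance_loss(agg, real_idx, gen_idx):
    """Assumed form: |mean aggregation degree of real anomalies - that of generated ones|."""
    return abs(mean_over(agg, real_idx) - mean_over(agg, gen_idx))


agg = [0.5, 1.0, 0.0, 0.25]
loss = adjacency_distance_loss(agg, real_idx=[1, 3], gen_idx=[0, 2])
print(loss)  # 0.375
```

In training, the same expression would be computed on autodiff tensors so that its gradient flows back into the FC layer that produces the generated nodes.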

3.3.2. Symmetric Space Adjustment Loss Function

In dynamic graph anomaly detection tasks, pseudo-anomalous nodes generated by conventional adjacency aggregation often lack symmetry in the feature space: their distribution struggles to approximate the high-similarity boundary region between real abnormal nodes and normal nodes (i.e., the hard-to-distinguish class boundary region). To solve this problem, this paper proposes the symmetric space adjustment loss function $\mathcal{L}_{ssa}$, which models subtle differences in the feature space to strengthen the model’s ability to learn complex decision boundaries. It is computed in three steps: first, the squared feature differences are summed to amplify subtle deviations, thereby capturing hard-to-distinguish nodes; second, the square root is taken to integrate these differences into a global distance; finally, the distances are averaged over the new abnormal nodes, guiding the model to focus on highly similar abnormal nodes.
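The three steps amount to a mean Euclidean distance. Which feature sets are compared is an assumption here: this sketch pairs each generated abnormal node with a guide vector (for example, the noise-perturbed normal features from Section 3.2), and the function name is hypothetical.

```python
import math


def symmetric_space_loss(generated, guide):
    """Mean Euclidean distance between paired generated and guide feature vectors."""
    if not generated:
        return 0.0
    total = 0.0
    for g, r in zip(generated, guide):
        squared = sum((a - b) ** 2 for a, b in zip(g, r))  # step 1: square sum of differences
        total += math.sqrt(squared)                          # step 2: square root
    return total / len(generated)                            # step 3: average over new nodes


print(symmetric_space_loss([[0.0, 0.0], [1.0, 1.0]], [[3.0, 4.0], [1.0, 1.0]]))  # 2.5
```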

3.3.3. Basic Loss Function

In addition to the adjacency distance adaptive loss and the symmetric space adjustment loss, Balanced-BiEGCN uses a class-weighted binary cross-entropy loss as the basic classification loss, where $p_i$ is the probability, output by the classifier, that node $i$ belongs to the normal category; $y_i$ is the node label, which distinguishes normal nodes from abnormal nodes; and $w_1$ and $w_0$ are the category weights used to balance the loss contributions of positive and negative samples. Finally, to combine the effects of the three losses on model training, a weighted combination controlled by the hyperparameter $\lambda$ balances the three loss functions to form the total loss.
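A sketch of a class-weighted binary cross-entropy and one possible way to combine the three terms. In the text, $p_i$ is the probability of the normal category, so here `probs` would hold that probability and label 1 would mark normal nodes; that encoding, the clamping constant, and the combination `bce + lam * (ada + ssa)` with a single λ are assumptions, since the exact formula is not reproduced here. With the weights from Section 4.2, the majority class would receive 0.35 and the minority class 0.65.

```python
import math


def weighted_bce(probs, labels, w_pos, w_neg, eps=1e-7):
    """Class-weighted binary cross-entropy averaged over nodes.

    probs[i]:     predicted probability that node i belongs to the class encoded as 1.
    w_pos, w_neg: weights for the label-1 and label-0 terms, respectively.
    """
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(w_pos * y * math.log(p) + w_neg * (1 - y) * math.log(1.0 - p))
    return total / len(probs)


def total_loss(l_bce, l_ada, l_ssa, lam):
    """Assumed combination: BCE plus lambda-weighted generation losses."""
    return l_bce + lam * (l_ada + l_ssa)


print(round(weighted_bce([0.5], [1], 1.0, 1.0), 4))  # 0.6931
```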

4. Experiments

4.1. Dataset and Settings

Elliptic [35] is the largest publicly available labeled dataset in Bitcoin transaction analysis and contains 49 consecutive temporal subgraphs. Based on the timestamps of the nodes, the dataset is divided into training (time steps 1–30), validation (31–35), and test (36–49) sets. During training and testing, only the labeled nodes and their associated edges are used. The dataset contains a total of 203,769 nodes, of which 4545 are labeled as illicit and 42,019 as licit, along with 234,355 edges representing transaction relationships.
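The temporal split and the restriction to labeled nodes can be sketched as below. The label strings `'illicit'`, `'licit'`, and `'unknown'` and the dictionary inputs are hypothetical encodings chosen for illustration; only the time-step ranges come from the text.

```python
def assign_split(t, train=(1, 30), val=(31, 35), test=(36, 49)):
    """Return 'train', 'val', or 'test' for time step t using inclusive ranges."""
    for name, (lo, hi) in (("train", train), ("val", val), ("test", test)):
        if lo <= t <= hi:
            return name
    raise ValueError(f"time step {t} is outside the 1-49 range")


def split_labeled_nodes(node_time, node_label):
    """Group labeled nodes by split; nodes labeled 'unknown' (or missing) are dropped."""
    splits = {"train": [], "val": [], "test": []}
    for node, t in node_time.items():
        if node_label.get(node, "unknown") == "unknown":
            continue
        splits[assign_split(t)].append(node)
    return splits


node_time = {"a": 1, "b": 31, "c": 49, "d": 10}
node_label = {"a": "licit", "b": "illicit", "c": "licit", "d": "unknown"}
print(split_labeled_nodes(node_time, node_label))
# {'train': ['a'], 'val': ['b'], 'test': ['c']}
```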

Given that Bitcoin transaction anomaly detection is formulated as a binary classification problem, this study adopts precision (Pre), recall (Re), and the F1 score as the primary performance evaluation metrics, defined as follows:

$$\mathrm{Pre} = \frac{TP}{TP + FP}, \qquad \mathrm{Re} = \frac{TP}{TP + FN}, \qquad F1 = \frac{2 \times \mathrm{Pre} \times \mathrm{Re}}{\mathrm{Pre} + \mathrm{Re}}$$

In these equations, $TP$ (true positives) denotes the number of correctly identified positive (illicit) samples; $FP$ (false positives) refers to negative (licit) samples incorrectly classified as positive; and $FN$ (false negatives) indicates positive samples that are misclassified as negative.
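The three metrics can be computed directly from true and predicted labels, as in this small sketch (the function name is hypothetical; label 1 denotes the positive, illicit class, and a metric whose denominator is zero is reported as 0.0 by convention).

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall and F1 for the positive (illicit) class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    pre = tp / (tp + fp) if tp + fp else 0.0
    rec = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * pre * rec / (pre + rec) if pre + rec else 0.0
    return pre, rec, f1


# 2 true positives, 1 false positive, 1 false negative -> all three metrics equal 2/3.
pre, rec, f1 = precision_recall_f1([1, 1, 0, 0, 1], [1, 0, 1, 0, 1])
```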

4.2. Implementation

The experiments are implemented using the PyTorch 1.12.1 framework and executed on an NVIDIA RTX 3090 GPU. The model is optimized using the Adam optimizer with an initial learning rate set to 0.001. During the data sampling process, subgraphs are randomly selected from five consecutive temporal windows for each training iteration. The training process is conducted over 100 epochs.
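One reading of the sampling scheme, sketched below, draws a run of five consecutive training time steps for each iteration. The function name and the config dictionary are hypothetical; the values (Adam, learning rate 0.001, 100 epochs, window of 5) come from the text, and in PyTorch the optimizer would typically be created with `torch.optim.Adam(model.parameters(), lr=0.001)`.

```python
import random

CONFIG = {"optimizer": "Adam", "lr": 1e-3, "epochs": 100, "window": 5}


def sample_window(train_steps, window, rng):
    """Return `window` consecutive time steps chosen uniformly at random from train_steps."""
    if len(train_steps) < window:
        raise ValueError("not enough time steps for the requested window")
    start = rng.randrange(len(train_steps) - window + 1)
    return train_steps[start:start + window]


rng = random.Random(42)
train_steps = list(range(1, 31))  # training time steps 1-30
for iteration in range(2):  # a full run would loop over CONFIG["epochs"]
    steps = sample_window(train_steps, CONFIG["window"], rng)
    print(iteration, steps)
```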

To address the class imbalance inherent in the dataset, category weights are introduced in the loss function (as described in Equation (12)), with the majority and minority classes assigned weights of 0.35 and 0.65, respectively. This weighting scheme is designed to improve the model’s sensitivity to the minority class and enhance its performance on imbalanced classification tasks.

4.3. Comparison with State-of-the-Art Methods

Table 1 presents the experimental results of Balanced-BiEGCN and some other methods on the Elliptic dataset. To ensure the stability of the experiments, each method was tested 10 times independently, and the average value was taken as the final evaluation benchmark. The experimental results show that Balanced-BiEGCN outperforms other methods in all detection metrics, especially the F1 score, which reaches 0.765.

This section conducts comparative experiments from the perspectives of static and dynamic graphs, selecting 10 mainstream methods, including GCN and Skip-GCN. The core difference between the two settings lies in how time information is embedded: static graphs integrate time as a node feature, while dynamic graphs divide the data into consecutive subgraphs by time to capture temporal dependencies. In the static graph scenario, four methods, including GCN and Skip-GCN, are graph neural networks, among which Skip-GCN and HHLN-GNN perform best; Balanced-BiEGCN improves upon Skip-GCN and HHLN-GNN by +6%/+3.9%@F1 through optimized time information embedding. RF and CTDM are the stronger traditional machine learning algorithms, and Balanced-BiEGCN still achieves +7.1%/+2.7%@F1 improvements over them, indicating that incorporating node relationships and dynamic information effectively enhances detection robustness. In the dynamic graph scenario, EvolveGCN-O and EvolveGCN-H are dynamic graph neural networks, while GRU and LSTM are recurrent neural networks. EvolveGCN-O performs better, highlighting the critical role of feature mining in detection; Balanced-BiEGCN achieves a +4.5%@F1 improvement over EvolveGCN-O. Overall, the advantages of Balanced-BiEGCN stem from two innovations: first, it alleviates class imbalance by converting normal nodes into new abnormal nodes; second, it enhances the ability to mine feature dependencies across long-range subgraphs. Together, these significantly improve anomaly detection performance and validate the effectiveness of integrating dynamic temporal information with graph structure modeling.

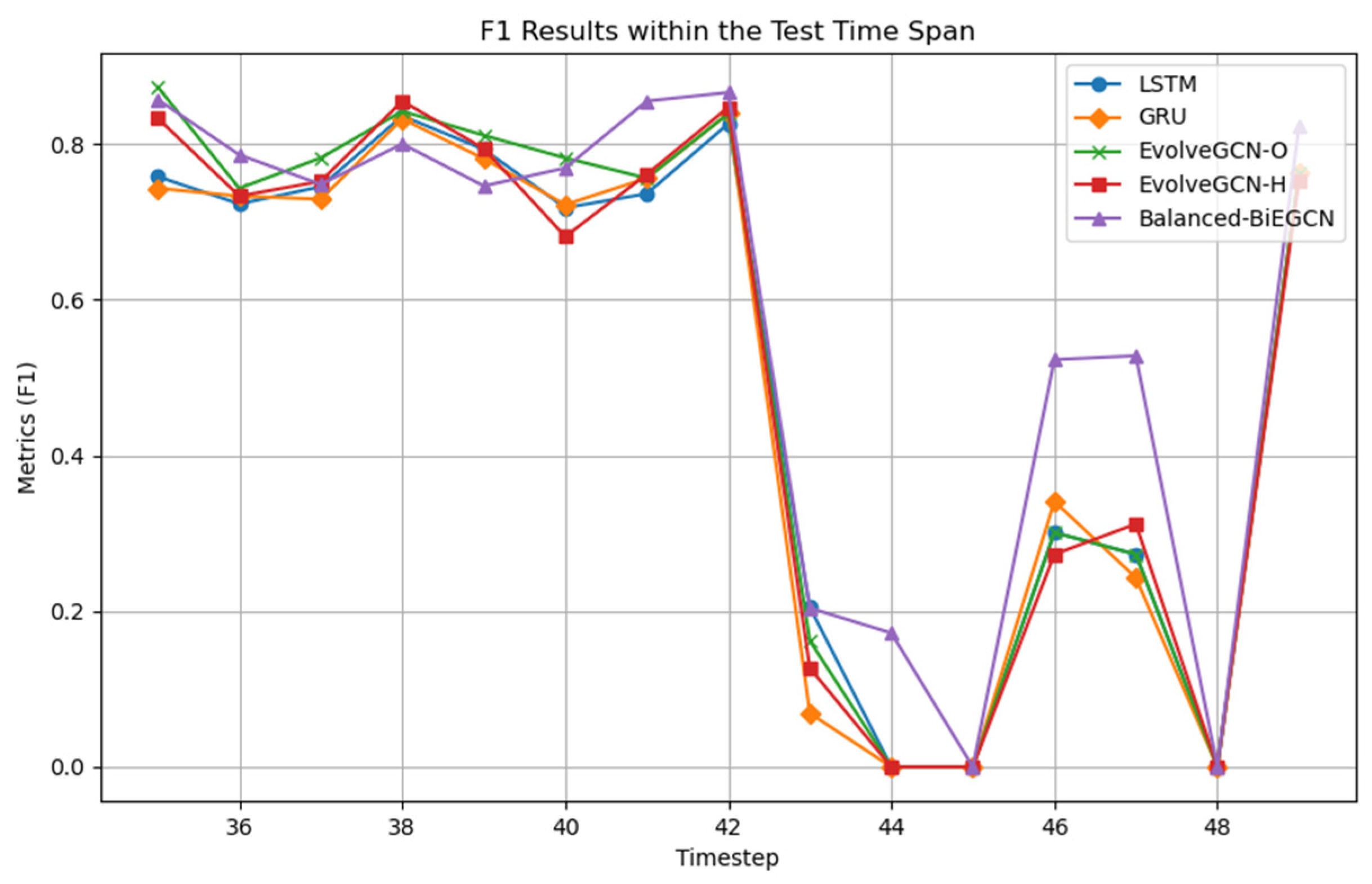

In addition, to explore the detection capabilities of the five dynamic graph approaches in Table 1 at different time points, this section compares them on the subgraphs from time points 35 to 49. The results are shown in Figure 4. All methods perform well in the interval 35–42; however, from time point 43 onward, model performance fluctuates. Notably, Balanced-BiEGCN shows a significant advantage on difficult-to-detect subgraphs such as 44, 46, and 47, demonstrating its strength in handling hard abnormal samples.

4.4. Ablation Study

To better demonstrate the effectiveness of Balanced-BiEGCN and the impact of each innovative component, this section conducts ablation experiments on the test set.

The following variants are compared: (1) Base (EvolveGCN-O); (2) Base + Bi-EvolveGCN (replacing the EvolveGCN in Base with Bi-EvolveGCN); (3) and (4) Balanced-BiEGCN with one of its two generation loss functions removed (the adjacency distance adaptive loss or the symmetric space adjustment loss, one per variant); and (5) the full Balanced-BiEGCN.

According to Table 2, Balanced-BiEGCN significantly outperforms the baseline in key metrics, with improvements of +4.5%@F1, +2.9%@Pre, and +5.1%@Re. Analyzing each module, variants (2), (3), and (4) yield +1.5%, +3.3%, and +2.4%@F1 improvements over the Base model, respectively, showing that each component contributes to overall performance. First, fusing node features under the bidirectional temporal parameter-update mechanism strengthens feature interactions along the temporal dimension, improving the model’s ability to mine deep node features and providing richer features for the subsequent generation of pseudo-anomalous nodes. Second, the adjacency distance adaptive loss plays a key role in node conversion: by explicitly modeling structural dependencies between nodes, it improves the quality of converting normal nodes into new abnormal nodes. Finally, the symmetric space adjustment loss improves the model’s ability to separate fuzzy class boundaries by spatially aligning the novel abnormal node class with the real hard-to-detect abnormal nodes. These complementary modules jointly account for the performance advantage of Balanced-BiEGCN in complex anomaly detection scenarios.

4.5. Quantitative Experiment

This section analyzes the model’s core hyperparameters quantitatively to determine their optimal configuration. Figures 5, 6, 7 and 8 show the experimental results for the four key hyperparameters, followed by a detailed analysis of the design logic and results of each experiment.

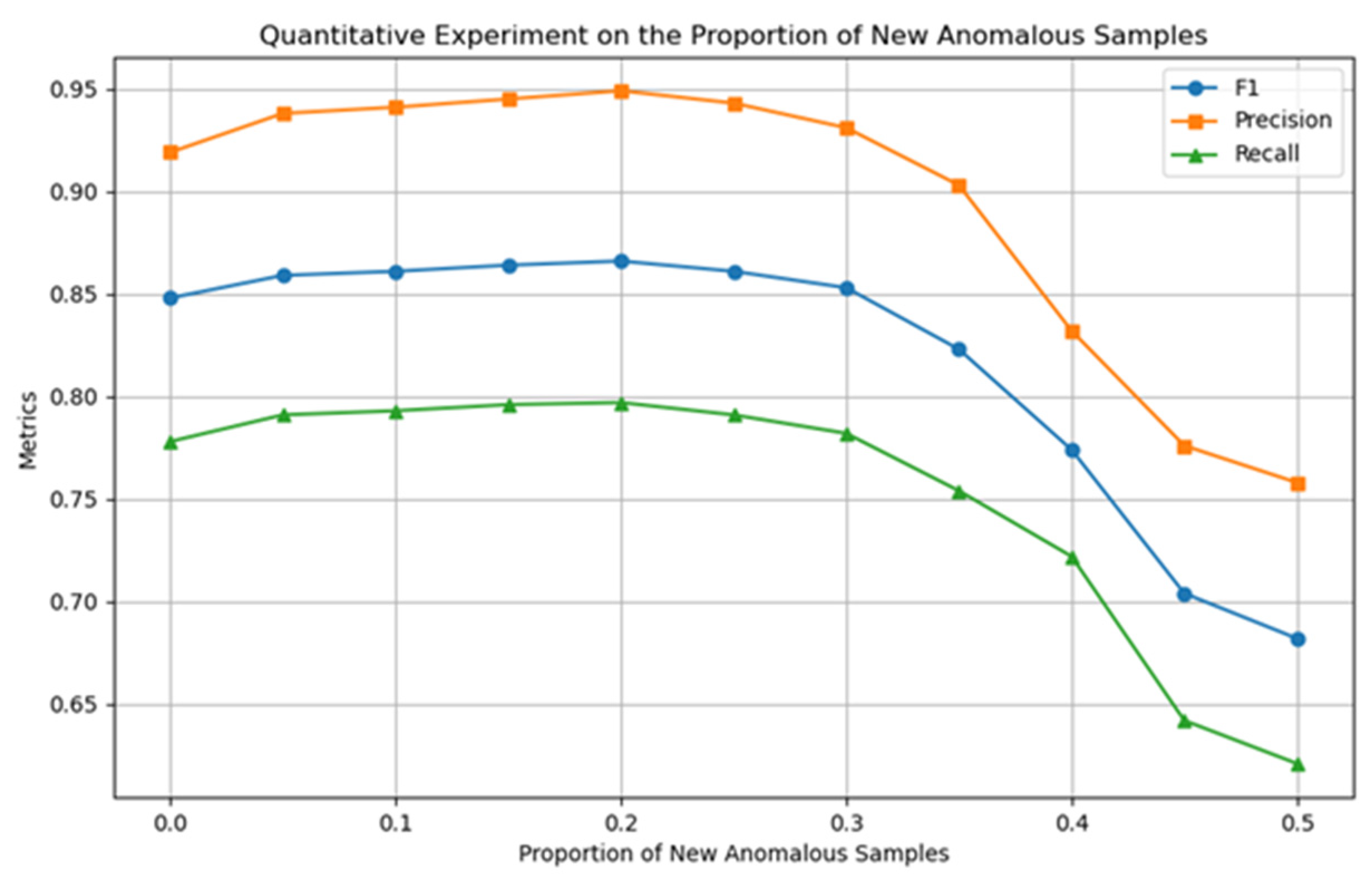

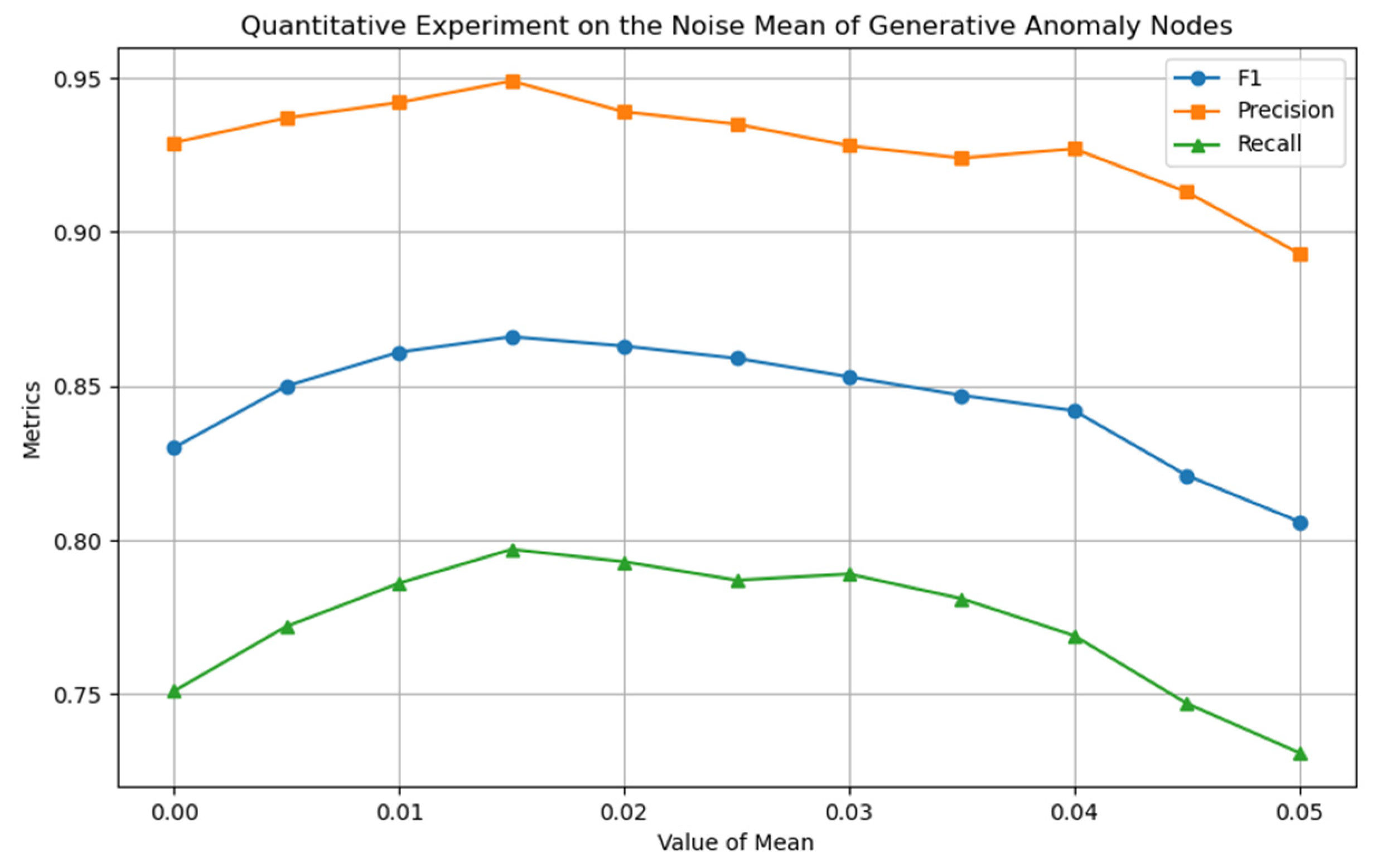

We first quantify how the conversion ratio of normal nodes (defined in Section 3.2), which sets the proportion of newly generated abnormal samples, affects the model’s classification accuracy. As shown in Figure 5, accuracy peaks at a specific ratio; beyond this threshold, performance declines significantly as the proportion of pseudo-abnormal samples increases, so this ratio is adopted.

To construct a symmetric feature distribution between the novel abnormal node class and the normal node class, this study perturbs normal nodes with noise to generate a guiding feature set, so the noise parameters play a central role in constructing pseudo-abnormal samples. The experiment first focuses on the noise variance (Equation (5)), which, as the core factor regulating the feature distribution range of the novel abnormal node class, directly determines how well the novel and real abnormal node classes fit each other. As shown in Figure 6, model performance is optimal at one particular variance setting, indicating that this setting places the novel abnormal nodes at a reasonable balance between the feature space of normal nodes and that of real abnormal nodes. We then analyze the impact of the noise mean, which determines how far the generated novel abnormal node class deviates from the normal node class in the feature space. As shown in Figure 7, the model reaches its peak F1 value at a specific mean, indicating that under this setting the distribution center of the novel abnormal nodes is highly consistent with the feature space position of real abnormal nodes, which effectively enhances the model’s ability to learn abnormal nodes. In summary, the model performs best under the optimal variance and mean identified in Figures 6 and 7; the novel abnormal samples generated with this parameter combination lie closest in the feature space to real abnormal samples, providing more effective learning signals for the model.

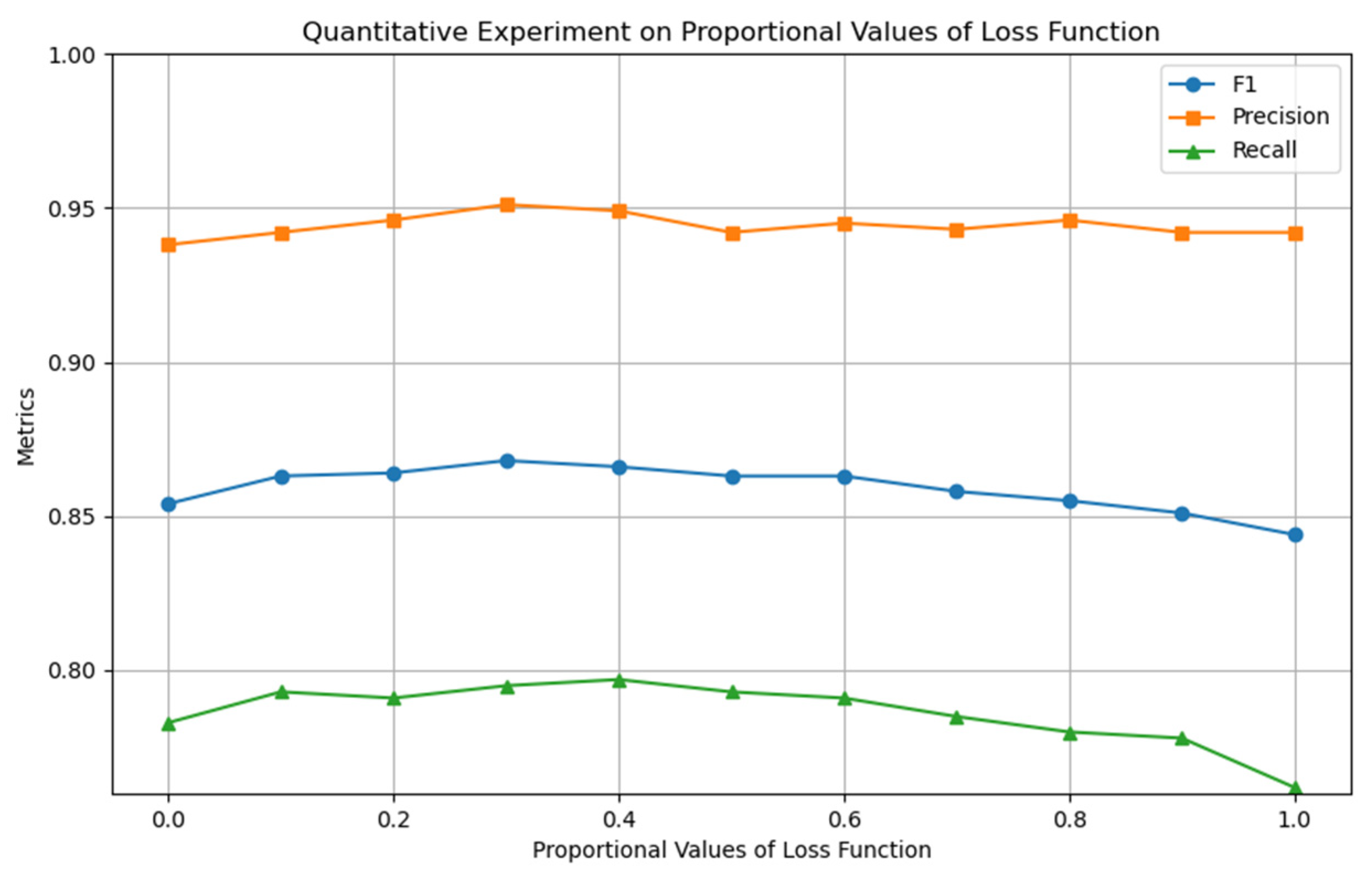

In the training phase, we tune the weight parameter of the loss function (Equation (12)) that coordinates the optimization contributions of the multiple loss terms. As shown in Figure 8, model performance is optimal at a specific weight value, confirming that this weight effectively balances the loss terms.

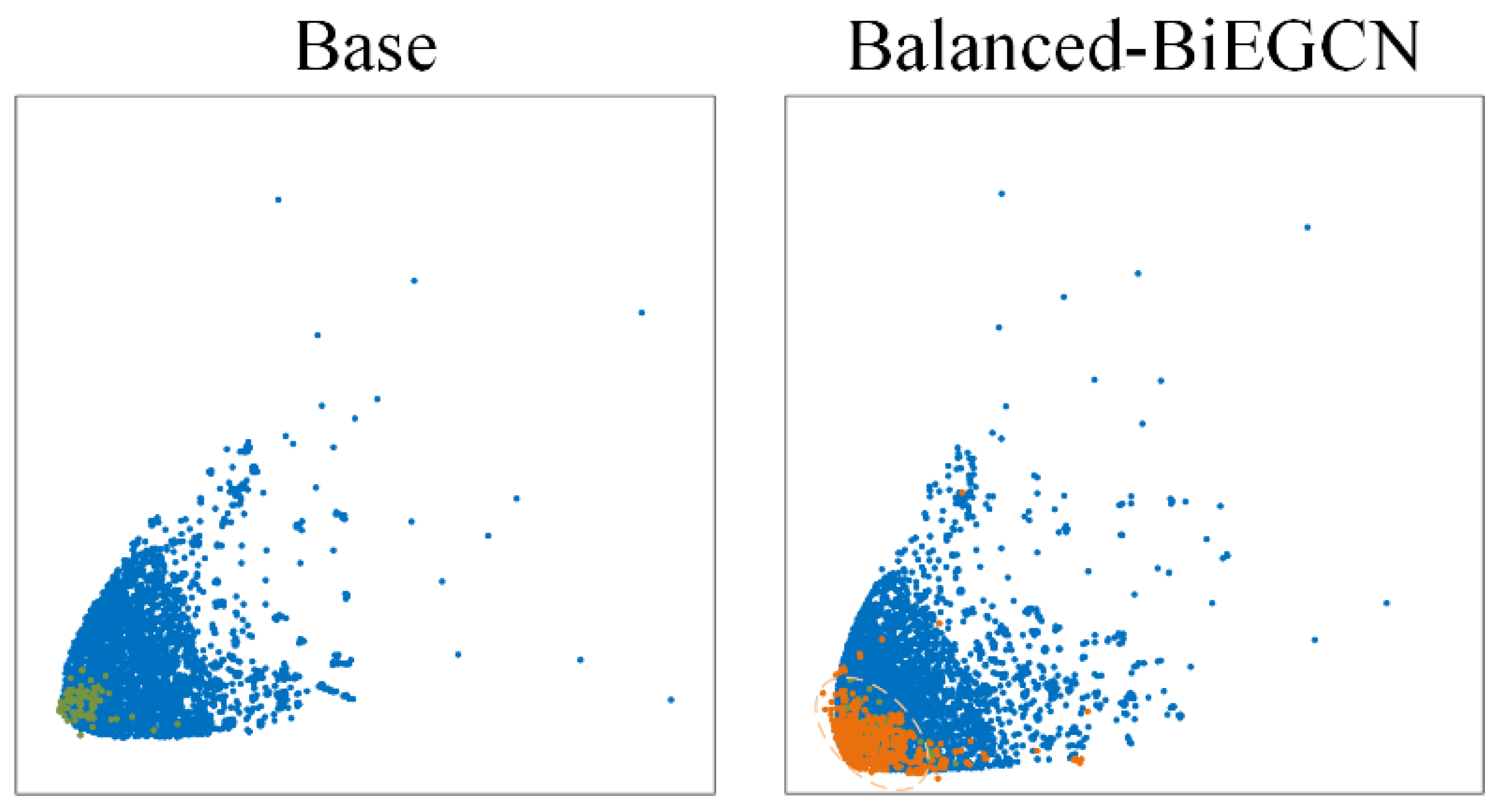

4.6. Visualization Experiment

To further investigate how Balanced-BiEGCN generates novel abnormal nodes, this experiment selects the subgraph at time step 1 of the Elliptic dataset and uses the t-SNE [42] dimensionality reduction algorithm to visualize the feature space. Figure 9 compares the baseline method with Balanced-BiEGCN: normal nodes are marked with blue circles, original abnormal nodes with green circles, and the novel abnormal nodes generated by Balanced-BiEGCN with orange circles. The light orange dashed box outlines the spatial distribution area of the novel generated abnormal nodes.

As Figure 9 shows, the spatial distribution of all nodes under the baseline method forms a clear boundary, and abnormal nodes are mostly concentrated in the region with the highest node density. In Balanced-BiEGCN, the cluster of novel generated abnormal nodes lies close to that of the real abnormal nodes, mainly in the lower left of the overall area. Although most of the novel generated abnormal nodes fall within the distribution range of normal nodes, closer observation shows that they lie deeper inside this shared region.

In summary, the novel generated abnormal nodes produced by Balanced-BiEGCN are highly similar to the real abnormal node set in terms of spatial distribution characteristics and are more difficult to distinguish, demonstrating strong concealment and mining difficulty. This characteristic provides more challenging training samples for the model, effectively improving its ability to identify anomalies in complex scenarios.

5. Conclusions and Outlook

This paper tackles two critical challenges in Bitcoin transaction anomaly detection: the inadequate modeling of temporal dependencies in dynamic graphs and the persistent issue of class imbalance. To address these challenges, we propose the Balanced-BiEGCN, a novel framework that combines the bidirectional EvolveGCN model with advanced class-balanced learning techniques. Our method improves temporal dependency modeling by leveraging a Bi-EvolveGCN architecture that embeds bidirectional temporal subgraphs. This enhances the capture of long-term dependencies in the complex transaction network dynamics of Bitcoin.

In the node classification phase, we introduce the innovative Classifier based on Sample Class Transformation (CSCT). This, paired with our novel symmetric space adjustment loss function and adjacency distance adaptive loss function, facilitates the generation of abnormal samples by transforming normal nodes, thereby effectively balancing the class distribution. This dual approach not only enhances anomaly detection but also overcomes the limitations posed by the scarcity of labeled anomaly data.

Our findings contribute to the field by offering a more robust method for handling the evolving nature of Bitcoin transactions. This work also points to a promising direction for future research: models that require only labeled normal samples, which would further simplify anomaly detection and broaden its applicability. Such a shift toward minimal supervision could make anomaly detection in dynamic and imbalanced datasets more accessible and scalable, and applicable to real-world settings beyond Bitcoin transactions.

Looking ahead, we foresee several potential avenues for further exploration. Future models could integrate more sophisticated temporal dependency structures and fine-tune the generation of abnormal samples to capture increasingly subtle anomalies. Additionally, the framework could be extended to other cryptocurrency networks and dynamic systems with similar challenges, creating a broader impact in anomaly detection for financial and security applications. Thus, the work presented not only addresses current limitations but also opens new pathways for scalable, low-labeled anomaly detection in complex and evolving networks.

Author Contributions

Conceptualization, B.X.; methodology, B.X.; software, B.X.; validation, B.X., W.Y.; formal analysis, B.X. and W.Y.; investigation, B.X.; resources, B.X.; data curation, B.X.; writing—original draft preparation, B.X.; writing—review and editing, W.Y.; visualization, B.X.; supervision, W.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Laboratory of Philosophy and Social Sciences at Universities in Jiangsu Province (Fintech and Big Data Laboratory of Southeast University), No. 7514009282.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The datasets used in this study are publicly available: Elliptic (www.elliptic.co).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Nakamoto, S. Bitcoin: A Peer-To-Peer Electronic Cash System. 2008. Available online: https://bitcoin.org/bitcoin.pdf (accessed on 15 February 2025).

2. Shah, A.F.M.S.; Karabulut, M.A.; Akhter, A.F.M.S.; Mustari, N.; Pathan, A.K.; Rabie, K.M.; Shongwe, T. On the Vital Aspects and Characteristics of Cryptocurrency—A Survey. IEEE Access 2023, 11, 9451–9468.

3. Zheng, B.; Zhu, L.; Shen, M.; Du, X.; Guizani, M. Identifying the Vulnerabilities of Bitcoin Anonymous Mechanism Based on Address Clustering. Sci. China Inf. Sci. 2020, 63, 105–119.

4. Lee, S.; Yoon, C.; Kang, H.; Kim, Y.; Kim, Y.; Han, D.; Son, S.; Shin, S. Cybercriminal Minds: An Investigative Study of Cryptocurrency Abuses in the Dark Web. In Proceedings of the 26th Annual Network and Distributed System Security Symposium (NDSS 2019), San Diego, CA, USA, 24–27 February 2019.

5. Paquet-Clouston, M.; Haslhofer, B.; Dupont, B. Ransomware Payments in the Bitcoin Ecosystem. J. Cybersecur. 2019, 5, tyz003.

6. Liu, J.; Chen, J.; Wu, W.; Wu, Z.; Fang, J.; Zheng, Z. Fishing for Fraudsters: Uncovering Ethereum Phishing Gangs with Blockchain Data. IEEE Trans. Inf. Forensics Secur. 2024, 19, 5179–5193.

7. Sharifani, K.; Amini, M. Machine Learning and Deep Learning: A Review of Methods and Applications. World Inf. Technol. Eng. J. 2023, 10, 3897–3904.

8. Zhang, Y.; Tiňo, P.; Leonardis, A.; Tang, K. A Survey on Neural Network Interpretability. IEEE Trans. Emerg. Top. Comput. Intell. 2021, 5, 726–742.

9. Duan, M.; Zheng, T.; Gao, Y.; Wang, G.; Feng, Z.; Wang, X. DGA-GNN: Dynamic Grouping Aggregation GNN for Fraud Detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 11820–11828.

10. Kipf, T.N.; Welling, M. Semi-Supervised Classification with Graph Convolutional Networks. arXiv 2016, arXiv:1609.02907.

11. Mohan, A.; Sankar, P.; Sankar, P.; Manohar, K.M.; Peter, A. Improving Anti-Money Laundering in Bitcoin Using Evolving Graph Convolutions and Deep Neural Decision Forest. Data Technol. Appl. 2023, 57, 313–329.

12. Zhou, Y.; Luo, X.; Zhou, M.C. Cryptocurrency Transaction Network Embedding from Static and Dynamic Perspectives: An Overview. IEEE/CAA J. Autom. Sin. 2023, 10, 1105–1121.

13. Patel, V.; Rajasegarar, S.; Pan, L.; Liu, J.; Zhu, L. EvAnGCN: Evolving Graph Deep Neural Network Based Anomaly Detection in Blockchain. In Proceedings of the International Conference on Advanced Data Mining and Applications, Nanjing, China, 25–27 November 2022.

14. Pareja, A.; Domeniconi, G.; Chen, J.; Ma, T.; Suzumura, T.; Kanezashi, H.; Kaler, T.; Schardl, T.; Leiserson, C. EvolveGCN: Evolving Graph Convolutional Networks for Dynamic Graphs. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020.

15. Hopfield, J.J. Neural Networks and Physical Systems with Emergent Collective Computational Abilities. Proc. Natl. Acad. Sci. USA 1982, 79, 2554–2558.

16. Zhao, T.; Zhang, X.; Wang, S. GraphSMOTE: Imbalanced Node Classification on Graphs with Graph Neural Networks. In Proceedings of the 14th ACM International Conference on Web Search and Data Mining, Virtual Event, Israel, 8–12 March 2021.

17. Nayyer, N.; Javaid, N.; Akbar, M.; Aldegheishem, A.; Alrajeh, N.; Jamil, M. A New Framework for Fraud Detection in Bitcoin Transactions Through Ensemble Stacking Model in Smart Cities. IEEE Access 2023, 11, 111408–111424.

18. Bergman, L.; Hoshen, Y. Classification-Based Anomaly Detection for General Data. arXiv 2020, arXiv:2005.02359.

19. Patrick, M.; Vukosi, M.; Bhesipho, T. A Multifaceted Approach to Bitcoin Fraud Detection: Global and Local Outliers. In Proceedings of the 2016 15th IEEE International Conference on Machine Learning and Applications (ICMLA), Anaheim, CA, USA, 18–20 December 2016.

20. Alarab, I.; Prakoonwit, S. Graph-Based LSTM for Anti-Money Laundering: Experimenting Temporal Graph Convolutional Network with Bitcoin Data. Neural Process. Lett. 2023, 55, 689–707.

21. Lo, W.W.; Kulatilleke, G.K.; Sarhan, M.; Layeghy, S.; Portmann, M. Inspection-L: Self-Supervised GNN Node Embeddings for Money Laundering Detection in Bitcoin. Appl. Intell. 2023, 53, 19406–19417.

22. Liu, C.; Xu, Y.; Sun, Z. Directed Dynamic Attribute Graph Anomaly Detection Based on Evolved Graph Attention for Blockchain. Knowl. Inf. Syst. 2024, 66, 989–1010.

23. Ekle, O.A.; Eberle, W. Anomaly Detection in Dynamic Graphs: A Comprehensive Survey. ACM Trans. Knowl. Discov. Data 2024, 18, 1–42.

24. Cui, Z.; Li, Z.; Wu, S.; Zhang, X.; Liu, Q.; Wang, L.; Ai, M. DyGCN: Dynamic Graph Embedding with Graph Convolutional Network. arXiv 2021, arXiv:2104.02962.

25. Greff, K.; Srivastava, R.K.; Koutník, J.; Steunebrink, B.R.; Schmidhuber, J. LSTM: A Search Space Odyssey. IEEE Trans. Neural Netw. Learn. Syst. 2016, 28, 2222–2232.

26. Zhang, P.; Yan, Y.; Li, C.; Wang, S.; Xie, X.; Song, G.; Kim, S. Continual Learning on Dynamic Graphs via Parameter Isolation. In Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval, Taipei, Taiwan, 23–27 July 2023.

27. Yin, N.; Wang, M.; Chen, Z.; De Masi, G.; Xiong, H.; Gu, B. Dynamic Spiking Graph Neural Networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024.

28. Jin, Y.; Wei, Y.; Cheng, Z.; Tai, W.; Xiao, C.; Zhong, T. Multi-Scale Dynamic Graph Learning for Time Series Anomaly Detection (Student Abstract). In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024.

29. Liu, J.; Shang, X.; Han, X.; Zheng, K.; Yin, H. Spatial-Temporal Memories Enhanced Graph Autoencoder for Anomaly Detection in Dynamic Graphs. arXiv 2024, arXiv:2403.09039.

30. Jin, R.; Chen, Z.; Wu, K.; Wu, M.; Li, X.; Yan, R. Bi-LSTM-Based Two-Stream Network for Machine Remaining Useful Life Prediction. IEEE Trans. Instrum. Meas. 2022, 71, 3511110.

31. Chung, J.; Gulcehre, C.; Cho, K.H.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. arXiv 2014, arXiv:1412.3555.

32. Yuan, G.; Fang, J.; Sui, Y.; Li, Y.; Wang, X.; Feng, H.; Zhang, Y. Graph Anomaly Detection with Bi-Level Optimization. In Proceedings of the ACM Web Conference 2024, Singapore, 13–17 May 2024.

33. Taud, H.; Mas, J.F. Multilayer Perceptron (MLP). In Geomatic Approaches for Modeling Land Change Scenarios; Springer: Cham, Switzerland, 2018; pp. 451–455.

34. Mao, A.; Mohri, M.; Zhong, Y. Cross-Entropy Loss Functions: Theoretical Analysis and Applications. In Proceedings of the 40th International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023.

35. Weber, M.; Domeniconi, G.; Chen, J.; Weidele, D.K.I.; Bellei, C.; Robinson, T.; Leiserson, C.E. Anti-Money Laundering in Bitcoin: Experimenting with Graph Convolutional Networks for Financial Forensics. arXiv 2019, arXiv:1908.02591.

36. Zhang, S.; Tong, H.; Xu, J.; Maciejewski, R. Graph Convolutional Networks: A Comprehensive Review. Comput. Soc. Netw. 2019, 6, 11.

37. Cates, J.; Lewis, J.; Hoover, R.; Caudle, K. Skip-GCN: A Framework for Hierarchical Graph Representation Learning. In Proceedings of the 2023 IEEE Conference on Neural Networks and Applications, Qingdao, China, 18–21 August 2023.

38. Hamilton, W.L.; Ying, R.; Leskovec, J. Inductive Representation Learning on Large Graphs. Adv. Neural Inf. Process. Syst. 2017, 30, 1025–1035.

39. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

40. Tong, G.; Shen, J. Financial Transaction Fraud Detector Based on Imbalance Learning and Graph Neural Network. Appl. Soft Comput. 2023, 149, 110984.

41. Xiao, L.; Han, D.; Li, D.; Liang, W.; Yang, C.; Li, K.-C.; Castiglione, A. CTDM: Cryptocurrency Abnormal Transaction Detection Method with Spatio-Temporal and Global Representation. Soft Comput. 2023, 27, 11647–11660.

42. Van der Maaten, L.J.P.; Hinton, G. Visualizing Data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).