Abstract

In a percolating system, there are typically exponentially many spanning paths. Here, we study numerically, for a two-dimensional diluted system, restricted to percolating realizations, the number N of directed percolating paths. First, we study the average entropy as a function of the occupation density p and compare with mathematical results from the literature. Furthermore, we investigate the distribution P(S). By using large-deviation approaches, we are able to obtain P(S) down to the very low-probability tail, reaching extremely small probabilities. We consider the percolating phase, the (typically) non-percolating phase, and the critical point. Finally, we also analyze the structure of the realizations for some values of S and p.

1. Introduction

Percolation is a widespread phenomenon [1,2,3,4] where one asks, e.g., whether a porous material allows for the flow of liquids through the bulk of a probe. It finds applications in many fields [3,5] such as graph theory, magnetic systems, analysis of algorithms, social systems, economics, and even games [6]. In general, one distinguishes active or occupied sites from non-active or unoccupied sites. Note that for the case of the porous material, the occupied sites are the pores, which are actually not occupied by the material. Often, one considers given realizations on finite-dimensional lattices, like hyper-cubic ones, and asks whether a percolation path exists or not, for example, from one side of the system to the opposite side, or from one specific site to another specific site. When studying ensembles of realizations, parameterized, e.g., by the density of occupied sites, a phase transition between the percolating phase, with high occupation density, and a non-percolating phase, with low occupation density, emerges. In physics, the properties of these phase transitions are of interest, as described, e.g., by critical exponents.

Nevertheless, in the case of a percolating system, there usually exists not only one percolating path but many, and in most cases, exponentially many [7]. Since the number N of paths, in particular, its logarithm, i.e., the entropy S, plays an important role in statistical mechanics, it makes sense to ask how S behaves in the different phases. Note that this means that in both phases, the analysis is limited to the percolating realizations, which are frequent in the percolating phase and rare in the non-percolating phase. Because the percolation model exhibits disorder, which means that there are many random realizations, S itself is a random quantity. This means that S is described by a distribution whose functional form might depend, e.g., on the fraction p of occupied sites. In particular, we test whether the observed distributions are broadly compatible with Gaussian distributions.

The behavior of the average entropy has received some interest. Recently, for the case of directed percolation [8,9] on hypercubic lattices, the set of paths of a certain length l starting at the origin was considered [10]. It was shown that for a large enough value of p, i.e., deep enough in the percolating phase, the typical number of distinct paths of length l for a given realization grows according to

in the limit of large path lengths, where

and where z is the maximum number of possible directions a directed path can proceed. Note that for the so-far cited samples for dimensional systems, i.e., d spatial and one “time” direction, the case was considered. For the present model in dimensions (see below), we have . Note that in earlier work [11,12], it was only shown that is an upper bound and becomes tight for higher dimensions , in the limit of large occupation .

In this work, we study, by computer simulations, two-dimensional directed percolation. By using a dynamic-programming algorithm, we determine, for each percolating realization, the number of percolating paths. By averaging over the disorder, this yields the average entropy as a function of the occupation density p. Our main effort, however, is devoted to the full distribution P(S). By using Markov chain large-deviation approaches, where the configurations of the Markov chain are the disorder realizations [13,14,15], we are able to obtain P(S) down to exponentially small probabilities, such that we can also determine the large-deviation rate function, which is a fundamental quantity in large-deviation theory [16,17]. In particular, we study the distribution and the rate function in the percolating and non-percolating phases, as well as at the critical point. Note that even in the non-percolating phase, rare percolating realizations exist. These can also be sampled numerically with the Markov chain approach.

The paper is organized as follows. In the following section, we present the model. Next, we explain the dynamic programming algorithm to calculate N and the large-deviation approach to obtain P(S) also in the tails, where the probabilities are extremely small. Then, in Section 4, we show our results for the average entropy and the distribution P(S) and present some analysis of the structure of the disorder realizations in the different phases. Finally, we summarize our results and outline some possible future directions of research.

2. Model

We define, for a two-dimensional lattice of size , a realization of the disorder as a set of occupation values with for with

For the present model, for implementational simplicity, we consider, below, paths of occupied sites between the lower left and the upper right corner. Thus, we choose that these corners are always occupied, i.e., . Each other site is independently occupied with probability p with . An example for such a realization is shown in Figure 1.

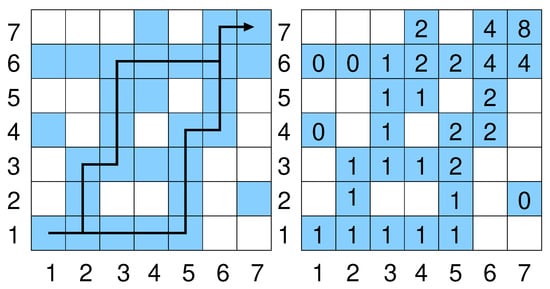

Figure 1.

(left) An example system with size . The shaded sites are occupied; the white sites are empty. Two percolating paths are shown, i.e., connecting with . (right) For each occupied site the number of paths from to is shown, as given by Equation (8). In total, there are percolating paths.

An occupied site is considered connected to a neighboring site , , , if the neighboring site is occupied as well. A directed path from to with and is a sequence , …, of length of connected sites such that the position of the sites increases by one in the x or y direction in each step, i.e., or . Two examples for such directed paths are shown in Figure 1.

A disorder realization which contains a directed path of length from to is called percolating (or spanning), and also the path is called percolating. Thus, the indicator function, whether a realization is percolating or not, is a random variable. We denote by the probability that a realization is percolating and estimate it below numerically as a function of occupation probability p.

For a percolating realization, there might be several directed percolating paths. Note that different paths might share some sites, but they cannot share all sites. We denote, for each disorder realization,

and the entropy

We consider the entropy as the quantity of interest here, because on average, it will not be dominated by rare realizations with extremely large numbers of paths, in contrast to N. Since S is a disorder-dependent random quantity, it is described by a distribution P(S). This distribution depends on the system size L and on the occupation density p; the dependence is omitted from the notation from Section 3 onward for simplicity. Note that this distribution is conditioned on those disorder realizations which are percolating.

We denote the related disorder average by , in particular, is the average entropy constrained to the percolating realizations, i.e., where and, hence, .

From Equations (1) and (5), we expect and define correspondingly, for given size L and given value of p, the finite-size entropy per length as

Many random quantities exhibit the large-deviation property [16,17], i.e., the distribution is exponentially small in the system size, here, the (half) length L of the path, with rate function . For S, this means it has the functional form where denotes contributions which are sub-linear in L. Correspondingly, we define the empirical rate function

Note that in our analysis, we have shifted the rate function such that its minimum occurs at a -value of 0, i.e., we have subtracted the minimum of . This is commonly performed for easier comparison, since the actual must exhibit a minimum at zero, to allow for normalization and for nonzero probabilities.
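The shift described above can be illustrated with a short sketch. It assumes that the rate function is estimated as the negative logarithm of the distribution divided by L and evaluated at the rescaled entropy S/L; this normalisation, the dictionary input format, and the function name `empirical_rate_function` are assumptions made for the example.

```python
def empirical_rate_function(log_prob, L):
    """Turn log-probabilities of S into a shifted empirical rate function.

    log_prob: dict mapping entropy values S to log P(S) (assumed input format;
              an unknown additive constant is harmless because of the shift).
    L:        (half) path length used as the large-deviation scale.
    Returns a dict mapping S / L to -log P(S) / L, shifted so the minimum is 0.
    """
    phi = {S / L: -lp / L for S, lp in log_prob.items()}
    phi_min = min(phi.values())
    return {s: value - phi_min for s, value in phi.items()}


# Synthetic example: a Gaussian-shaped log-probability centred at S = 20.
L = 64
log_prob = {S: -((S - 20) ** 2) / 50.0 for S in range(0, 41)}
phi = empirical_rate_function(log_prob, L)
```

Because only differences of the logarithm enter, the same function can be applied to distributions that are known only up to a constant factor.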

3. Methods

A realization of the disorder with size can be generated directly by iterating through all sites , except and , and independently assigning with probability p and with probability . Thus, the probability for a given realization is , where is the number of sites assigned with 1, including the two always occupied sites and , and the number of sites assigned with 0.

For a given realization x, we define for as the number of directed paths from site to site . Since site is always occupied in our model, we have . The number of directed paths to a given site is zero if the site is not occupied (). Else it is the sum of the number of paths to sites “incoming” to , i.e., which can precede on directed paths. Thus, we have

Thus, starting from the lower left, all values of can be calculated, e.g., in a row by row fashion, leading to a running time of . Such an approach is called dynamic programming in computer science [18]. An example calculation is shown in the right of Figure 1.
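The row-by-row evaluation can be sketched as follows. This is a minimal illustration, assuming that a realization is stored as a square list of lists of 0/1 values with the start site at index [0][0] and the target site at [-1][-1]; the function name `count_directed_paths` is hypothetical.

```python
import math


def count_directed_paths(x):
    """Count directed paths of occupied sites from x[0][0] to x[-1][-1].

    Each step increases exactly one of the two indices by one. The table
    `paths` holds, for every site, the number of directed paths reaching it.
    """
    n = len(x)
    paths = [[0] * n for _ in range(n)]
    paths[0][0] = 1 if x[0][0] else 0
    for i in range(n):
        for j in range(n):
            if (i, j) == (0, 0) or not x[i][j]:
                continue  # start site already set; empty sites keep 0 paths
            from_first = paths[i - 1][j] if i > 0 else 0
            from_second = paths[i][j - 1] if j > 0 else 0
            paths[i][j] = from_first + from_second
    return paths[n - 1][n - 1]


# A fully occupied 5 x 5 lattice: every path has 8 steps, 4 in each direction.
full = [[1] * 5 for _ in range(5)]
num_paths = count_directed_paths(full)
entropy = math.log(num_paths)
```

Python integers have arbitrary precision, so the path count does not overflow even for large lattices, and `math.log` accepts such large integers directly.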

We obtain the number N of percolating paths for each realization as . If , the realization is not percolating, and, therefore, not included in the statistics. For a percolating realization x, the obtained entropy is denoted as .

One can obtain an estimate for the distribution by direct sampling. This means that one generates a certain number K of realizations, say, , applies the dynamic programming approach Equation (8) to each realization and, finally, calculates for the percolating realizations, respectively. Then, a histogram of the values of S can be calculated, which is an approximation of . Clearly, the smallest non-zero frequency one measures in the histogram is at least , e.g., .
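A direct-sampling estimate along these lines might look as follows. The sketch assumes a square lattice stored as a list of lists with the two corner sites always occupied; the function names, the bin width, and the parameter values in the example are illustrative choices, not those of the present study.

```python
import math
import random


def count_directed_paths(x):
    """Dynamic-programming count of directed paths from x[0][0] to x[-1][-1]."""
    n = len(x)
    paths = [[0] * n for _ in range(n)]
    paths[0][0] = 1 if x[0][0] else 0
    for i in range(n):
        for j in range(n):
            if (i, j) != (0, 0) and x[i][j]:
                paths[i][j] = (paths[i - 1][j] if i else 0) + (paths[i][j - 1] if j else 0)
    return paths[-1][-1]


def generate_realization(n, p, rng):
    """Occupy each site independently with probability p; corners are always occupied."""
    x = [[1 if rng.random() < p else 0 for _ in range(n)] for _ in range(n)]
    x[0][0] = x[n - 1][n - 1] = 1
    return x


def sample_entropies(n, p, num_samples, seed=0):
    """Return S = log N for every percolating realization among num_samples draws."""
    rng = random.Random(seed)
    entropies = []
    for _ in range(num_samples):
        num_paths = count_directed_paths(generate_realization(n, p, rng))
        if num_paths > 0:  # non-percolating realizations are discarded
            entropies.append(math.log(num_paths))
    return entropies


def histogram(values, bin_width):
    """Normalised histogram: maps the left bin edge to a probability density."""
    counts = {}
    for v in values:
        k = math.floor(v / bin_width)
        counts[k] = counts.get(k, 0) + 1
    return {k * bin_width: c / (len(values) * bin_width) for k, c in sorted(counts.items())}


entropies = sample_entropies(n=17, p=0.8, num_samples=1000, seed=1)
density = histogram(entropies, bin_width=0.5)
fraction_percolating = len(entropies) / 1000
```

The fraction of percolating realizations obtained as a by-product is the direct-sampling estimate of the percolation probability for the chosen size and occupation probability.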

To obtain the distribution down to the tails, for probabilities here as small as , we employ a large-deviation algorithm [13,14,15] based on Markov chain Monte Carlo sampling. With direct sampling, this would require about samples, which is unfeasible. We explain here, for brevity only, the main steps and mention all necessary details relevant for the present simulation.

The basic idea is to generate realizations x not according to the original probabilities , but according to probabilities where the sampling is concentrated in some region of interest, commonly called importance sampling. Here, we extend by an exponential bias, or tilt

inspired by the Boltzmann distribution controlled by a temperature-like parameter . For , the bias is one, i.e., the original distribution recovered. For , being small, realizations with small values of S are preferred, while for , realizations with a large value of S appear with higher probability.

We implemented this bias by performing a Markov chain of realizations, starting with some initial realization . The initial realization can be a random one, a fully occupied one, etc. We use the Metropolis–Hastings algorithm [19,20] as follows: In each step of the Markov chain, given the current realization , we generate a trial realization by, first, copying to . Then, we randomly select, times, a site , and each time, we reassign its occupation according to the original rules, i.e., with probability p and else. Thus, the parameter controls the number of reassignments performed on to yield (For simplicity, we do not exclude that a site is reassigned more than once within one trial generation. This does not violate detailed balance).

Next, we compute the number of percolating paths for by using the dynamic programming algorithm Equation (8). If , the trial realization is immediately rejected, i.e., . This guarantees that the Markov chain contains only percolating realizations. If , the entropy is obtained. Let be the entropy of the current realization. Now, the trial configuration is accepted, i.e., with probability

Otherwise, i.e., with probability , the trial realization is rejected. This algorithm guarantees, after suitable equilibration, that the sampling of configurations is performed with the total weight , where is the normalization.
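One step of such a biased Markov chain can be sketched as below. The sketch assumes an exponential bias exp(-S/T) with a temperature-like parameter written here as `temperature`, which matches the stated behavior that small positive values favor small S and negative values favor large S. The variable names, the handling of the two corner sites (a selected corner is simply left occupied), and the parameter values in the example are assumptions.

```python
import math
import random


def count_directed_paths(x):
    """Dynamic-programming count of directed paths from x[0][0] to x[-1][-1]."""
    n = len(x)
    paths = [[0] * n for _ in range(n)]
    paths[0][0] = 1 if x[0][0] else 0
    for i in range(n):
        for j in range(n):
            if (i, j) != (0, 0) and x[i][j]:
                paths[i][j] = (paths[i - 1][j] if i else 0) + (paths[i][j - 1] if j else 0)
    return paths[-1][-1]


def biased_step(x, s, p, temperature, num_changes, rng):
    """One Metropolis-Hastings step; returns the new (realization, entropy).

    temperature must be nonzero; math.inf corresponds to unbiased sampling
    restricted to percolating realizations.
    """
    n = len(x)
    trial = [row[:] for row in x]
    for _ in range(num_changes):
        i, j = rng.randrange(n), rng.randrange(n)
        if (i, j) not in ((0, 0), (n - 1, n - 1)):  # corners stay occupied
            trial[i][j] = 1 if rng.random() < p else 0  # redraw by the original rule
    num_paths = count_directed_paths(trial)
    if num_paths == 0:
        return x, s  # non-percolating trial: rejected immediately
    s_trial = math.log(num_paths)
    log_accept = -(s_trial - s) / temperature
    if log_accept >= 0 or rng.random() < math.exp(log_accept):
        return trial, s_trial
    return x, s


# Short chain at negative temperature, started from a fully occupied lattice.
rng = random.Random(42)
n, p, temperature = 9, 0.5, -2.0
x = [[1] * n for _ in range(n)]
s = math.log(count_directed_paths(x))
trace = []
for _ in range(500):
    x, s = biased_step(x, s, p, temperature, num_changes=3, rng=rng)
    trace.append(s)
```

Because the trial sites are redrawn from the original occupation rule, the proposal already satisfies detailed balance with respect to the unbiased weights, so only the bias enters the acceptance probability.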

As a rule of thumb, we attempted to obtain an empirical acceptance rate of the trial realizations of about 50%. We tuned the parameter for each temperature-like parameter accordingly. Therefore, for small , one has smaller values of , while in the limit , where , one can reassign all entries of , which corresponds to direct sampling restricted to percolating realizations.

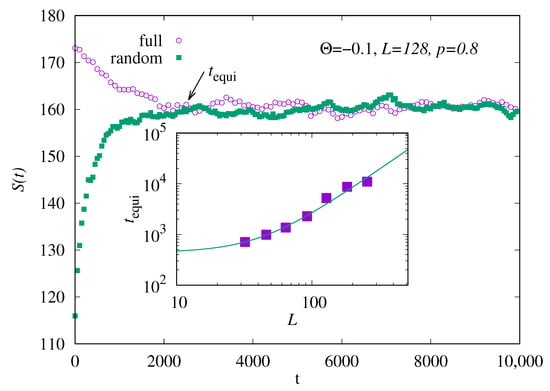

Equilibration, i.e., approximate convergence of the Markov chain to the desired measure, can be easily monitored by starting the Markov chain with different initial realizations, for example, one run with a random but percolating realization, and another run with a completely occupied realization. Then, one monitors for both runs. We consider the Markov chain to be equilibrated, i.e., long enough to sample S according to the desired Boltzmann distribution, when the different time series agree within fluctuations. The corresponding time we denote by . An example is shown in Figure 2 for and . Here, equilibration is achieved for about . To obtain an impression how the equilibration time depends on the system sizes, we have averaged the equilibration time for 10 independent runs and repeated the simulation for six other system sizes . The resulting slightly averaged is shown in the inset of Figure 2. We have fitted a function

to the data, resulting in an exponent , which means that the equilibration time roughly grows quadratically with the system size. For other values of , where is larger, the results look similar, as they do for other values of p, but equilibration is achieved faster and h is somehow smaller.
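A fit of this kind can be sketched with a linear regression in log-log space. The sketch assumes a pure power law, equilibration time equal to a prefactor times L to the power h; the prefactor name `a`, the function name, and the synthetic data are illustrative only.

```python
import math


def fit_power_law(sizes, times):
    """Least-squares fit of log(time) = log(a) + h * log(L); returns (a, h)."""
    xs = [math.log(L) for L in sizes]
    ys = [math.log(t) for t in times]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    h = sxy / sxx
    a = math.exp(mean_y - h * mean_x)
    return a, h


# Synthetic data that grow exactly quadratically with the system size.
sizes = [16, 32, 64, 128, 256]
times = [3.0 * L ** 2 for L in sizes]
a, h = fit_power_law(sizes, times)
```

Fitting in log-log space weights all system sizes equally on a relative scale, which is one common choice; a direct nonlinear fit would weight the large sizes more strongly.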

Figure 2.

Main plot: Entropy S as a function of the Monte Carlo time t for one run with system size , and temperature-like parameter , for two different initial realizations. Inset: equilibration time (see text) as a function of system size L for . The line shows a fit to the function shown in Equation (11).

Thus, for long enough simulations, one can sample realizations x and corresponding values of S. They can be collected in histograms, which approximate the biased distributions .

One can easily show [13] that the desired unbiased distribution can be obtained from the biased one by dividing out the exponential bias and multiplying by the normalization constant of the biased ensemble. This holds for any value of the temperature-like parameter, but the temperature value determines in which interval of S data are actually available. Thus, one typically has to perform simulations for several dozen values of the temperature-like parameter or more, to cover a large range of values of S. Note that the normalization constants can be determined, e.g., by exploiting that the rescaled distributions must agree for pairs of temperature values for which the generated histograms for S overlap [13]. This fixes all relative normalization factors, and the final normalization can be obtained by using the normalization of P(S). Alternatively, one can apply the multi-histogram rescaling approach [21], for which a convenient Python (version 3 and upwards) tool [22] exists. This allows one to obtain P(S) over many decades in probability.
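The matching of overlapping histograms can be sketched for two values of the temperature-like parameter. The sketch assumes a bias of the form exp(-S/T), histograms stored as dictionaries on a common grid of S values, and a relative normalization fixed by the mean log-difference in the overlap region; all function names are hypothetical, and this is not the tool of Ref. [22].

```python
import math


def unbias(hist, temperature):
    """Return log P(S) up to an unknown constant: log P_T(S) + S / T."""
    return {S: math.log(q) + S / temperature for S, q in hist.items() if q > 0}


def glue(log_a, log_b):
    """Shift log_b onto log_a using the overlap region, then merge both."""
    overlap = sorted(set(log_a) & set(log_b))
    if not overlap:
        raise ValueError("histograms do not overlap")
    shift = sum(log_a[S] - log_b[S] for S in overlap) / len(overlap)
    merged = dict(log_a)
    for S, value in log_b.items():
        shifted = value + shift
        merged[S] = 0.5 * (merged[S] + shifted) if S in merged else shifted
    return merged


def normalise(log_p, bin_width=1.0):
    """Fix the remaining constant so that the probabilities sum to one."""
    top = max(log_p.values())
    log_z = top + math.log(sum(math.exp(v - top) for v in log_p.values()) * bin_width)
    return {S: v - log_z for S, v in log_p.items()}


def biased_hist(temperature, window):
    """Synthetic biased histogram for a Gaussian-shaped target (illustration only)."""
    weights = {S: math.exp(-((S - 10) ** 2) / 8.0 - S / temperature) for S in window}
    total = sum(weights.values())
    return {S: w / total for S, w in weights.items()}


hist_low = biased_hist(2.0, range(0, 13))     # positive T: small S favoured
hist_high = biased_hist(-2.0, range(8, 21))   # negative T: large S favoured
glued = normalise(glue(unbias(hist_low, 2.0), unbias(hist_high, -2.0)))
exact = normalise({S: -((S - 10) ** 2) / 8.0 for S in range(21)})
```

With real data the log-differences in the overlap fluctuate, so weighting them by their statistical errors, or using the multi-histogram approach, is generally preferable to the plain average used here.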

This approach is rather general and has been used, e.g., to study non-equilibrium processes like measuring the work distribution for the Ising model [15] or unfolding RNA secondary structures [23], the Kardar–Parisi–Zhang model [24], and traffic flows [25]. Also, like in the present work, large-deviation properties of equilibrium problems have been studied, e.g., properties of random graphs [26,27] and of biological sequence alignment [13,28], or to obtain the partition function of the Potts model [29].

4. Results

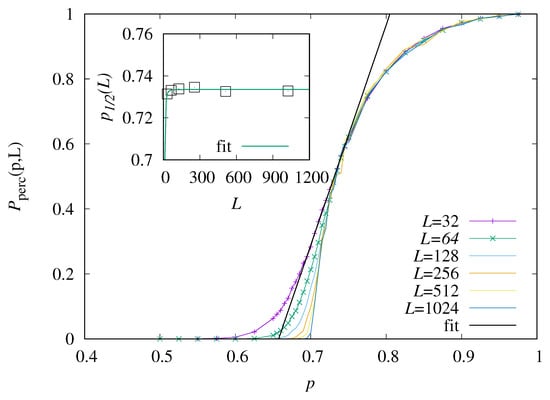

To find out where regions of interest are, first, we have performed direct-sampling simulations, to establish the percolation phase diagram. This means that we generated realizations of the disorder and measured, each time, the number N of paths. If , the realization is percolating. From these data, we have estimated the percolation probability as a function of the occupation probability p, for varying system sizes . The result is shown in Figure 3.

Figure 3.

Estimated percolation probability as function of p, for varying system sizes L. The line shows the fit to Equation (12) for . The inset shows the estimated critical points as function of system size L.

One observes that for small values of p, almost all relevant realizations do not percolate, while for large values of p, most do. For increasing size L, the functions become steeper near . Note that even close to , there is still a finite probability that a realization does not percolate, because the directed paths run from the lower left to the upper right corner, i.e., few non-occupied sites close to these corners are sufficient to prevent a percolating path.

We determined the percolation threshold as the value of p where the percolation probability equals 0.5 in the limit of infinite system size. The choice of the value 0.5 is somewhat arbitrary; we expect the results not to change much, except for choices too close to 1, which would define almost all realizations as non-percolating. To actually obtain the critical value, we fit the linear function

with parameters m and in the range of p values where is close to 0.5. The value of is taken as the finite-size estimate of the percolation point. Finally, we extrapolate to infinite system size by fitting a function to the data, as shown in the inset of Figure 3. This results in the estimate of the critical point. Since the variation of as a function of L is very small, the obtained fit value for f about 3 has an error bar of the same size and, therefore, carries little information. The value found here is near the result obtained by series expansion [30] for a slightly different model. In [30], not only one target site was considered, but instead all target sites with , and . Note that this difference also leads to a different percolation criterion.
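The finite-size estimate can be illustrated with a small least-squares sketch. It assumes the linear function is parameterized so that the percolation probability equals 0.5 at the finite-size threshold, and that only data points within a window around 0.5 enter the fit; the window width, the function names, and the data values below are synthetic choices for illustration.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares line through the points; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def finite_size_threshold(ps, perc_probs, window=0.2):
    """Value of p at which the fitted line crosses a percolation probability of 0.5."""
    points = [(p, q) for p, q in zip(ps, perc_probs) if abs(q - 0.5) <= window]
    if len(points) < 2:
        raise ValueError("need at least two data points near 0.5")
    slope, intercept = linear_fit([p for p, _ in points], [q for _, q in points])
    return (0.5 - intercept) / slope


# Synthetic percolation probabilities for one system size (not measured data).
ps = [0.60, 0.65, 0.68, 0.70, 0.72, 0.75, 0.80]
perc_probs = [0.02, 0.15, 0.38, 0.50, 0.62, 0.85, 0.98]
threshold = finite_size_threshold(ps, perc_probs)
```

Restricting the fit to the neighborhood of 0.5 keeps the curved tails of the percolation probability from biasing the estimated crossing point.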

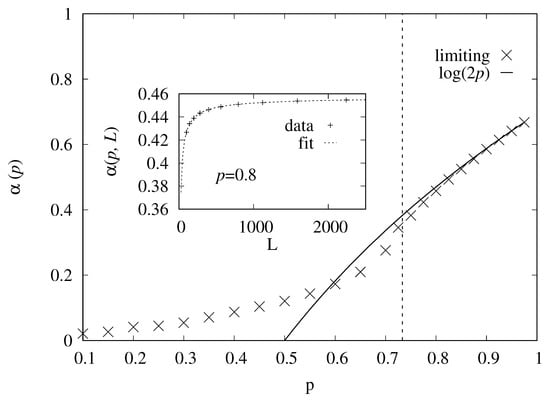

Next, we analyze, for those realizations where , the entropy per length from Equation (6). To determine the limiting value of for infinite system, we performed fits to power laws

An example for such an extrapolation is shown in the inset of Figure 4. The main plot shows the extrapolated value as a function of the occupation probability p. One observes that, indeed, for the asymptotics of Equation (2) is approached, while for smaller values of p, it is indeed an upper bound within the percolating phase .
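Such an extrapolation can be sketched as follows, assuming a power law of the form limiting value plus amplitude times L to a negative exponent. For every trial exponent on a grid, the two remaining parameters follow from a linear least-squares fit, and the exponent with the smallest residual is kept. The functional form, the grid, and all names are assumptions of this sketch.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares line through the points; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def extrapolate(sizes, values, exponents):
    """Fit values = limit + amplitude * L**(-b), scanning b over `exponents`.

    Returns (limit, amplitude, b) for the exponent with the smallest residual.
    Passing a single exponent corresponds to a fit with the exponent held fixed.
    """
    best = None
    for b in exponents:
        u = [L ** (-b) for L in sizes]
        amplitude, limit = linear_fit(u, values)
        residual = sum((limit + amplitude * ui - v) ** 2 for ui, v in zip(u, values))
        if best is None or residual < best[0]:
            best = (residual, limit, amplitude, b)
    return best[1], best[2], best[3]


# Synthetic data approaching 0.3 with a 1/L correction (not measured data).
sizes = [16, 32, 64, 128, 256]
values = [0.3 + 0.5 * L ** (-1.0) for L in sizes]
exponent_grid = [k / 10 for k in range(5, 21)]
limit, amplitude, b = extrapolate(sizes, values, exponent_grid)
```

Scanning the exponent keeps the inner fit linear and therefore stable, which is convenient when the size dependence is weak or noisy.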

Figure 4.

The extrapolated value of as a function of the site-occupation probability p. The solid line shows the analytical result from Equation (2). The vertical dotted line indicates the critical point . The inset shows an example for the extrapolation as function of the system size L, for the case .

We can use the Markov chain simulation without bias, corresponding to , to generate just percolating realizations even deep in the non-percolation phase . For this purpose, we started each Markov chain with a fully occupied realization, and exploited the fact that only moves are accepted that generate still percolating realizations. Thus, after equilibration, we can again measure N and the average entropy. This is restricted to smaller system sizes , due to the higher numerical effort. Note that for , the size dependence of L was rather weak but noisy, so we have used a fit to Equation (13) with fixing . One can see in Figure 4 that becomes a bit steeper near the percolation transition but then becomes almost flat for . Still, the entropy per length (remember that it is an average constrained to the percolating realizations) remains finite for all values of p. Naturally, the function , which estimates only in the upper percolating phase, will vanish at and cannot be an upper bound for smaller values of p.

Next, we consider the distribution of the entropy over the realizations. Some realizations may exhibit many paths, while others few. Also, the functional form of the function may depend on the phase, whether it is percolating or not. Here, we check, in particular, whether the distribution appears to be (almost) Gaussian or not. Here, to reach the tails of the distributions, we have used the data from the large-deviation Markov chain simulations, with the bias Equation (9). Since these Markov chain simulations require a much larger effort than direct sampling, we have restricted the system sizes to .

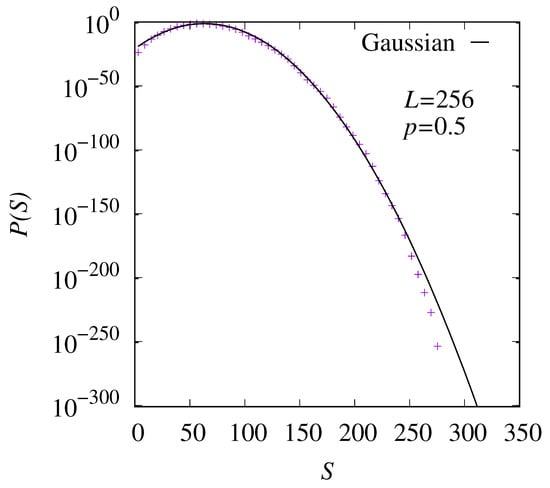

In Figure 5, is shown in the non-percolating phase at for system size . Note that the choice of is rather arbitrary; it is just one representative point in the non-percolating phase. By using the large-deviation approach, we were able to obtain the distribution down to probabilities as small as . The distribution is centered about a rather small entropy , as compared to the maximum entropy for this system size. The functional form of the distribution is almost Gaussian, indicated by the result of a fit to a Gaussian which we have performed, finding a peak at and variance .

Figure 5.

The entropy distribution for the case and size . The line represents a fit to a Gaussian.

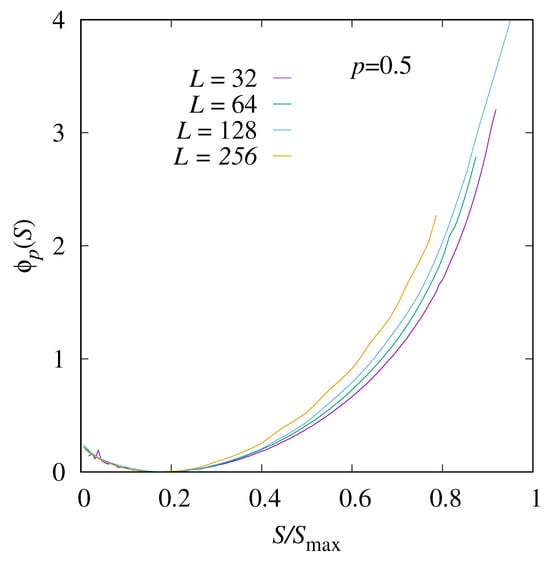

Next, we show the empirical shifted rate function Equation (7) in Figure 6 for different system sizes L. In the left part, for small values of S, the empirical rate functions are basically on top of each other, which means that the limiting rate function exists and is about the same. In total, the Gaussian form is visible for all system sizes. In the right part, one observes considerable finite-size corrections, such that one cannot tell whether a limiting rate function exists and where it runs.

Figure 6.

The shifted rate function for and four system sizes, , and 256.

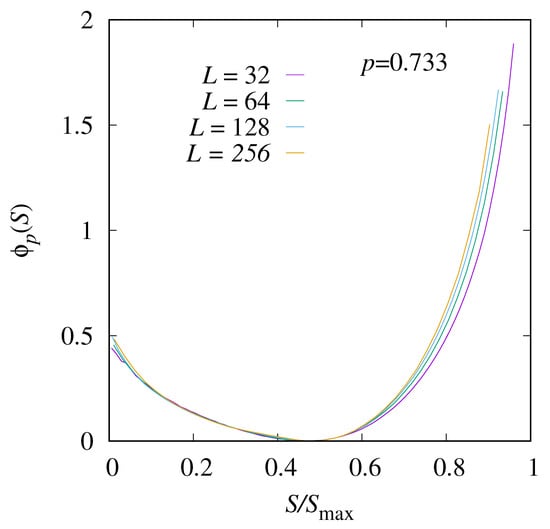

The corresponding rate-function results near the critical point, at , are shown in Figure 7. The position of the minimum has shifted to a larger value of near 0.5, which is natural, because a higher occupation allows for more paths. The overall functional form is not Gaussian anymore. In the interval between 0 and the minimum, only few changes with the system size are visible, and the data should represent the actual limiting rate function. For larger values of S, again, some finite-size corrections are visible and one cannot observe a convergence so far.

Figure 7.

The shifted rate function for and four system sizes and 256.

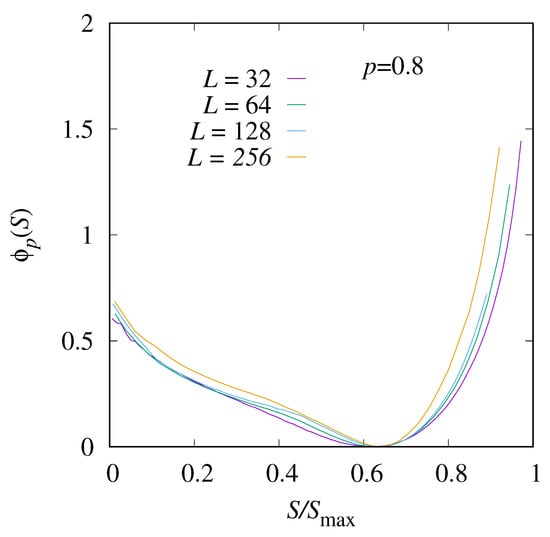

The rate function for a value of p in the percolating region, here, , is shown in Figure 8. Here, the typical value of , i.e., the minimum position of the rate function, appears for an even higher value of S. The functional form of the distribution is very different, in particular, for , the function seems to behave almost linearly, corresponding to an exponential distribution. Nevertheless, the finite-size corrections are even stronger, so a definite statement about the functional form of the limiting rate function cannot be made.

Figure 8.

The shifted rate function for and four system sizes, , and 256.

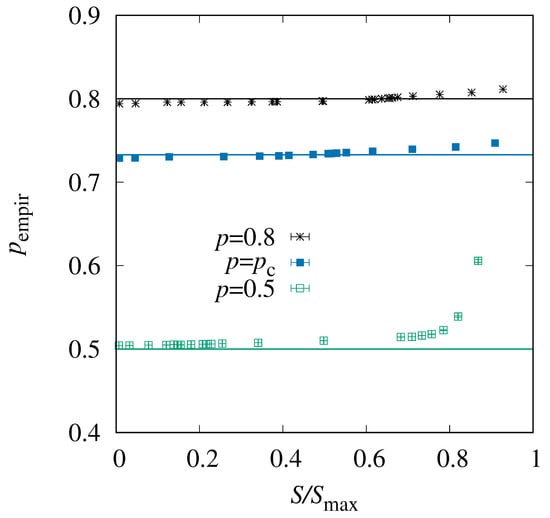

Finally, we consider the actual realizations and try to establish how they influence their values of S. In particular, for any value of p, each realization exhibits an individual empirical density of actually occupied sites. A natural question to ask is whether this density influences the observed entropy S, i.e., whether there is a correlation. One would expect, following the Fortuin–Kasteleyn–Ginibre inequality [31], that realizations with more occupied sites exhibit more paths, i.e., a higher value of S. To verify this, we have, for each value of the temperature-like parameter, individually recorded and averaged S as well as the empirical density over all obtained realizations. The result is shown in Figure 9. One observes that realizations with larger entropy have more occupied sites. For and , small values of S correspond to values , while it is the other way around for large values of S. The change of the empirical density with S is rather moderate. On the other hand, for the same value of S, the empirical occupation increases considerably with p. For the case , all empirical, actually observed occupation densities are larger than p, which makes sense, because one needs a certain number of occupied sites to have a spanning path at all. Interestingly, for most values of S, the value of the empirical density is only slightly larger than 0.5 and well below the percolation threshold. This means the occupation is much lower than necessary, on average, for spanning paths. Thus, the occupied sites have to be concentrated in some region, to allow for a percolating path even with an overall small occupation density. We have tested this explicitly.

Figure 9.

The empirical density of occupied sites as a function of the normalized entropy , for system size . The horizontal lines indicate the values of p, respectively.

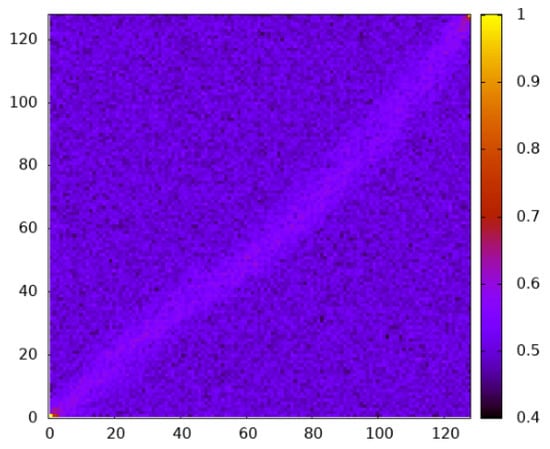

In Figure 10, the average site-dependent empirical occupation values are shown for 9800 independent realizations taken from the MCMC simulation at almost infinite temperature . As usual, these realizations are conditioned to be percolating ones. Still, the high temperature means that the average entropy is typical within the set of conditioned realizations. One observes that the occupation near the diagonal is slightly higher. This explains the existence of percolating paths with a considerable entropy, although the overall empirical occupation is smaller than the percolation threshold.

Figure 10.

Heat map showing the empirical occupation per site for percolating realizations taken at for and .

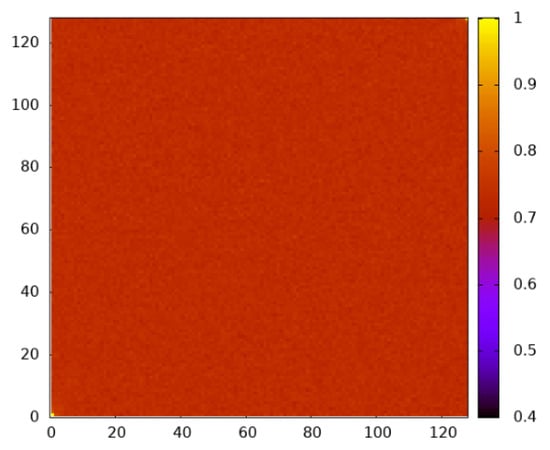

On the other hand, for the case , apart from the fact that the site-dependent occupation is near , the typical configurations do not exhibit any spatial structure, as shown in Figure 11. We observed this also for other values of S, as obtained from the biased sampling. For the case within the percolation regime, , also, no spatial structure could be observed.

Figure 11.

Heat map showing the empirical occupation per site for percolating realizations taken at for and .

5. Summary, Discussion, and Outlook

We have studied by computer simulations the number of percolating paths, in particular, the corresponding entropy S, for two-dimensional directed percolation. The number grows exponentially with the path length in the limit of large l, which can be written as . Our results confirm this scaling according to Equation (2) in the limit .

By using a large-deviation approach, we have been able to obtain not only the disorder-averaged entropy but also the distribution . The distributions exhibit an almost Gaussian distribution in the non-percolating region , while they have a very non-Gaussian form above the percolation threshold. From the study of the rate function, one observes one part which appears to be linear, i.e., an exponential distribution. Interestingly, this is quite different to that found [24] for the related model of directed polymers in random media (DPRM). The reason is probably that for the directed polymers, a Gaussian distribution of the site weights and a finite temperature were considered, so, also, higher energy sites may be contained in the paths. Here, in contrast, the occupied and non-occupied sites correspond to DPRM with a bimodal (Bernoulli) randomness in the limit.

In the percolating regime , realizations with large value of S have a slightly higher empirical occupation than p, while it is the other way around for small values of S. Still, the occupied and non-occupied sites appear to be uniformly distributed within a realization. Below the percolating regime, basically all realizations that exhibit percolating paths, i.e., a nonzero value of S, exhibit a higher empirical occupation than p but smaller than . This is possible because the occupied sites appear with higher probability near the diagonal, i.e., are concentrated within the realization.

For future studies, it would be of interest to also consider higher dimensions d. Here, the Fukushima–Junk bound [10] is expected to be tight, which could be visible for a larger range of values of p than in the present work. Still, nothing is known about the distribution P(S). Other percolation models, particularly non-directed ones, would also be of interest. But here, the number of percolating paths is not so straightforward to define, nor to obtain. In this case, one could consider the number of shortest percolating paths instead. Finally, it would be desirable if our work motivates analytical studies with respect to the distribution P(S), in particular, in the large-deviation regime.

Author Contributions

Conceptualization, A.K.H.; Software, L.S.; Validation, L.S. and A.K.H.; Investigation, L.S.; Data curation, L.S.; Writing—original draft, A.K.H.; Writing—review and editing, L.S.; Visualization, L.S. and A.K.H.; Supervision, A.K.H.; Project administration, A.K.H. All authors have read and agreed to the published version of the manuscript.

Funding

The work was funded by the DFG through its Major Research Instrumentation Program (INST 184/225-1 FUGG) and the Ministry of Science and Culture (MWK) of the Lower Saxony State.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Acknowledgments

The simulations were performed at the HPC cluster ROSA, located at the University of Oldenburg (Germany).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Flory, P.J. Molecular Size Distribution in Three Dimensional Polymers. I. Gelation. J. Am. Chem. Soc. 1941, 63, 3083–3090.

- Broadbent, S.R.; Hammersley, J.M. Percolation processes: I. Crystals and mazes. Math. Proc. Camb. Philos. Soc. 1957, 53, 629–641.

- Stauffer, D.; Aharony, A. Introduction to Percolation Theory; Taylor & Francis: London, UK, 2003.

- Saberi, A.A. Recent advances in percolation theory and its applications. Phys. Rep. 2015, 578, 1–32.

- Sahimi, M. Applications of Percolation Theory; Springer: Cham, Switzerland, 2023.

- Hartmann, A.K. Non-universality for Crossword Puzzle Percolation. Phys. Rev. E 2024, 110, 064138.

- Darling, R.W.R. The Lyapunov exponent for products of infinite-dimensional random matrices. In Lyapunov Exponents, Proceedings of the Conference, Oberwolfach, Germany, 28 May–2 June 1990; Arnold, L., Crauel, H., Eckmann, J.P., Eds.; Springer: Berlin/Heidelberg, Germany, 1991; Volume 1486, pp. 206–215.

- Durrett, R. Oriented percolation in two dimensions. Ann. Probab. 1984, 12, 999–1040.

- Hinrichsen, H. Non-equilibrium critical phenomena and phase transitions into absorbing states. Adv. Phys. 2000, 49, 815–958.

- Fukushima, R.; Junk, S. Number of paths in oriented percolation as zero temperature limit of directed polymer. Prob. Theor. Rel. Fields 2022, 183, 1119–1151.

- Yoshida, N. Phase Transitions for the Growth Rate of Linear Stochastic Evolutions. J. Stat. Phys. 2008, 133, 1033–1058.

- Garet, O.; Gouéré, J.P.; Marchand, R. The number of open paths in oriented percolation. Ann. Prob. 2017, 45, 4071–4100.

- Hartmann, A.K. Sampling rare events: Statistics of local sequence alignments. Phys. Rev. E 2002, 65, 056102.

- Bucklew, J.A. Introduction to Rare Event Simulation; Springer: New York, NY, USA, 2004.

- Hartmann, A.K. High-precision work distributions for extreme nonequilibrium processes in large systems. Phys. Rev. E 2014, 89, 052103.

- Touchette, H. The large deviation approach to statistical mechanics. Phys. Rep. 2009, 478, 1–69.

- Touchette, H. A basic introduction to large deviations: Theory, applications, simulations. In Modern Computational Science 11: Lecture Notes from the 3rd International Oldenburg Summer School; Leidl, R., Hartmann, A.K., Eds.; BIS-Verlag: Oldenburg, Germany, 2011.

- Cormen, T.H.; Leiserson, C.E.; Rivest, R.L.; Stein, C. Introduction to Algorithms; MIT Press: Cambridge, MA, USA, 2001.

- Metropolis, N.; Rosenbluth, A.W.; Rosenbluth, M.N.; Teller, A.; Teller, E. Equation of State Calculations by Fast Computing Machines. J. Chem. Phys. 1953, 21, 1087.

- Hastings, W.K. Monte Carlo sampling methods using Markov chains and their applications. Biometrika 1970, 57, 97–109.

- Ferrenberg, A.M.; Swendsen, R.H. Optimized Monte Carlo Data Analysis. Phys. Rev. Lett. 1989, 63, 1195.

- Werner, P. A Software Tool for “Gluing” Distributions. arXiv 2022, arXiv:2207.08429.

- Werner, P.; Hartmann, A.K. Similarity of extremely rare nonequilibrium processes to equilibrium processes. Phys. Rev. E 2021, 104, 034407.

- Hartmann, A.K.; Le Doussal, P.; Majumdar, S.N.; Rosso, A.; Schehr, G. High-precision simulation of the height distribution for the KPZ equation. Europhys. Lett. 2018, 121, 67004.

- Staffeldt, W.; Hartmann, A.K. Rare-event properties of the Nagel-Schreckenberg model. Phys. Rev. E 2019, 100, 062301.

- Engel, A.; Monasson, R.; Hartmann, A.K. On the large deviation properties of Erdös-Rényi random graphs. J. Stat. Phys. 2004, 117, 387.

- Hartmann, A.K. Large-deviation properties of largest component for random graphs. Eur. Phys. J. B 2011, 84, 627–634.

- Newberg, L. Significance of Gapped Sequence Alignments. J. Comp. Biol. 2008, 15, 1187–1194.

- Hartmann, A.K. Calculation of partition functions by measuring component distributions. Phys. Rev. Lett. 2005, 94, 050601.

- Jensen, I. Low-density series expansions for directed percolation on square and triangular lattices. J. Phys. A Math. Gen. 1996, 29, 7013–7040.

- Fortuin, C.M.; Kasteleyn, P.W.; Ginibre, J. Correlation inequalities on some partially ordered sets. Commun. Math. Phys. 1971, 22, 89–103.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).