1. Introduction

The rapid development of information technology has led to a flood of sound signals in daily life, each carrying valuable information about the physical environment [1]. Processing these signals therefore helps us understand specific physical situations. Among the related tasks, environmental sound classification (ESC) plays a vital role: its goal is to identify the environment from the surrounding sounds [2]. ESC spans a wide range of categories, such as animals, natural soundscapes, human sounds, domestic sounds, and urban noises. It is also used in many practical applications, such as surveillance systems [3,4], machine hearing [5,6], environmental monitoring [7], crime alert systems [8], soundscape assessment [9], and smart cities [10]. Because ESC must classify acoustic signals in complex environments that contain a variety of unknown sound sources, it remains an open research topic. In this work, we address the ESC task and propose a network that performs the classification more accurately.

Owing to the rapid rise of deep learning (DL) and its success in other fields [11], such as biomedical waste management [12], real-time agricultural disease diagnosis [13], and speech enhancement [14], many deep learning models have been applied to ESC in recent years [15,16,17,18,19,20,21]. However, environmental sounds are dynamic and unstructured, which makes it difficult to design appropriate feature extraction networks. To address this issue, we improved the model design and conducted extensive comparative experiments, ultimately arriving at a compact architecture with a small number of parameters but high classification accuracy. The key contributions of this study are as follows:

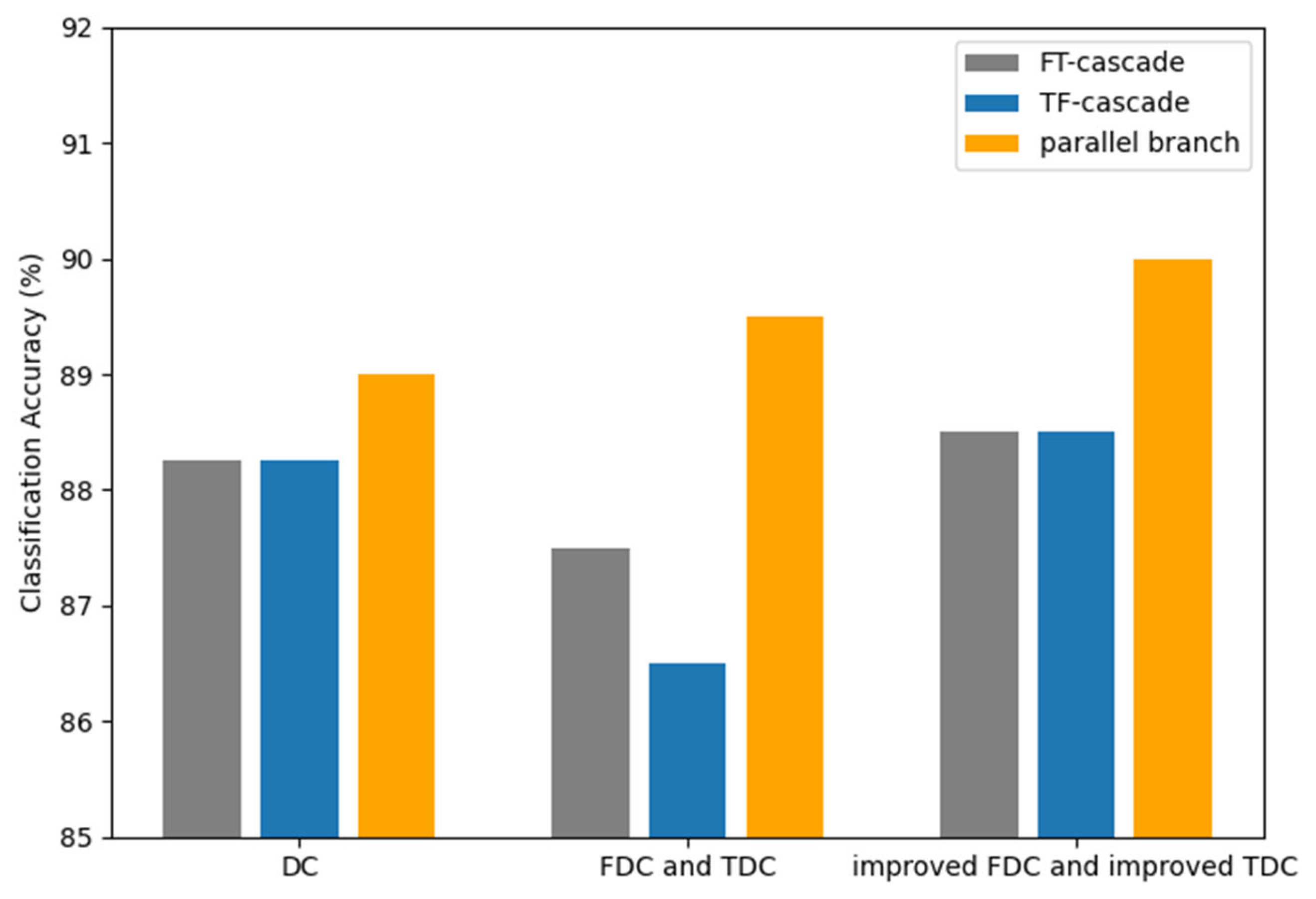

(1) An improved dynamic convolution that extracts dynamic attention by combining a convolution layer and a fully connected layer. This approach captures both global and local correlations while maintaining the shift-invariance characteristic of 2D convolution in the frequency dimension.

(2) Frequency multi-scale attention (FMSA) and temporal multi-scale attention (TMSA) modules, which apply the improved dynamic convolution for multi-scale feature extraction in the frequency and time dimensions, respectively. This enriches the features beyond the limited receptive field of single-scale convolution while ensuring physical consistency.

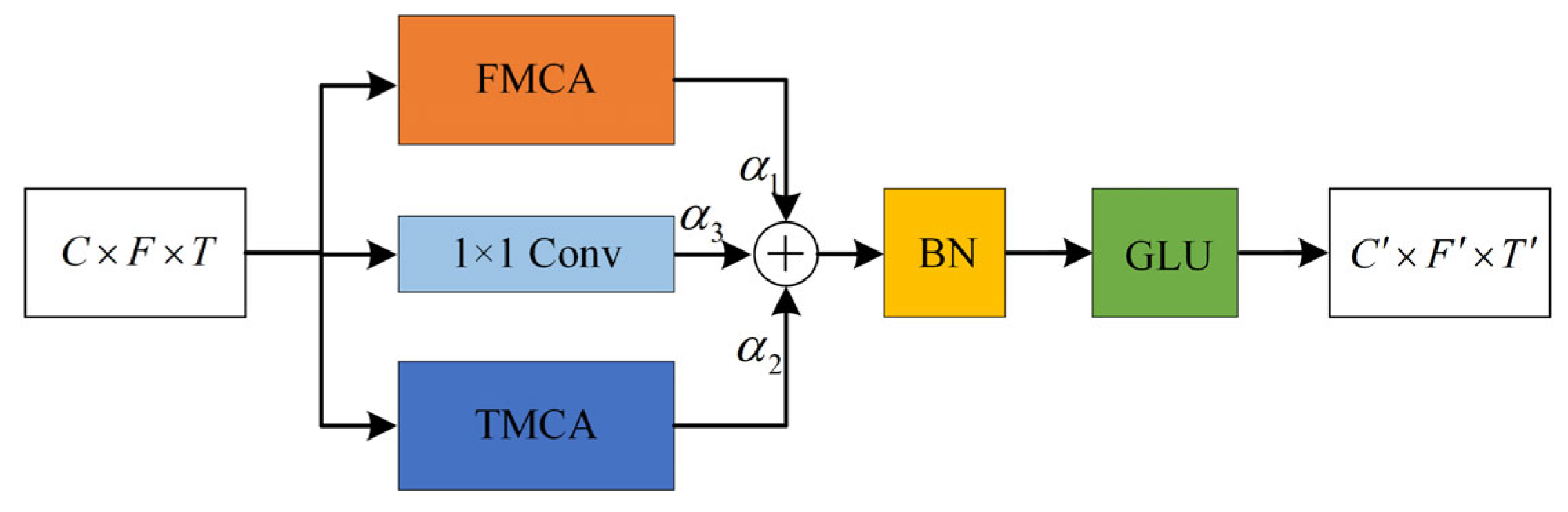

(3) A parallel time-frequency multi-scale attention (PTFMSA) module that extracts temporal and frequency features from audio. It adopts a parallel branch structure to avoid interference between features of different dimensions and learns dynamic parameters that adjust the weights of the branches during training.

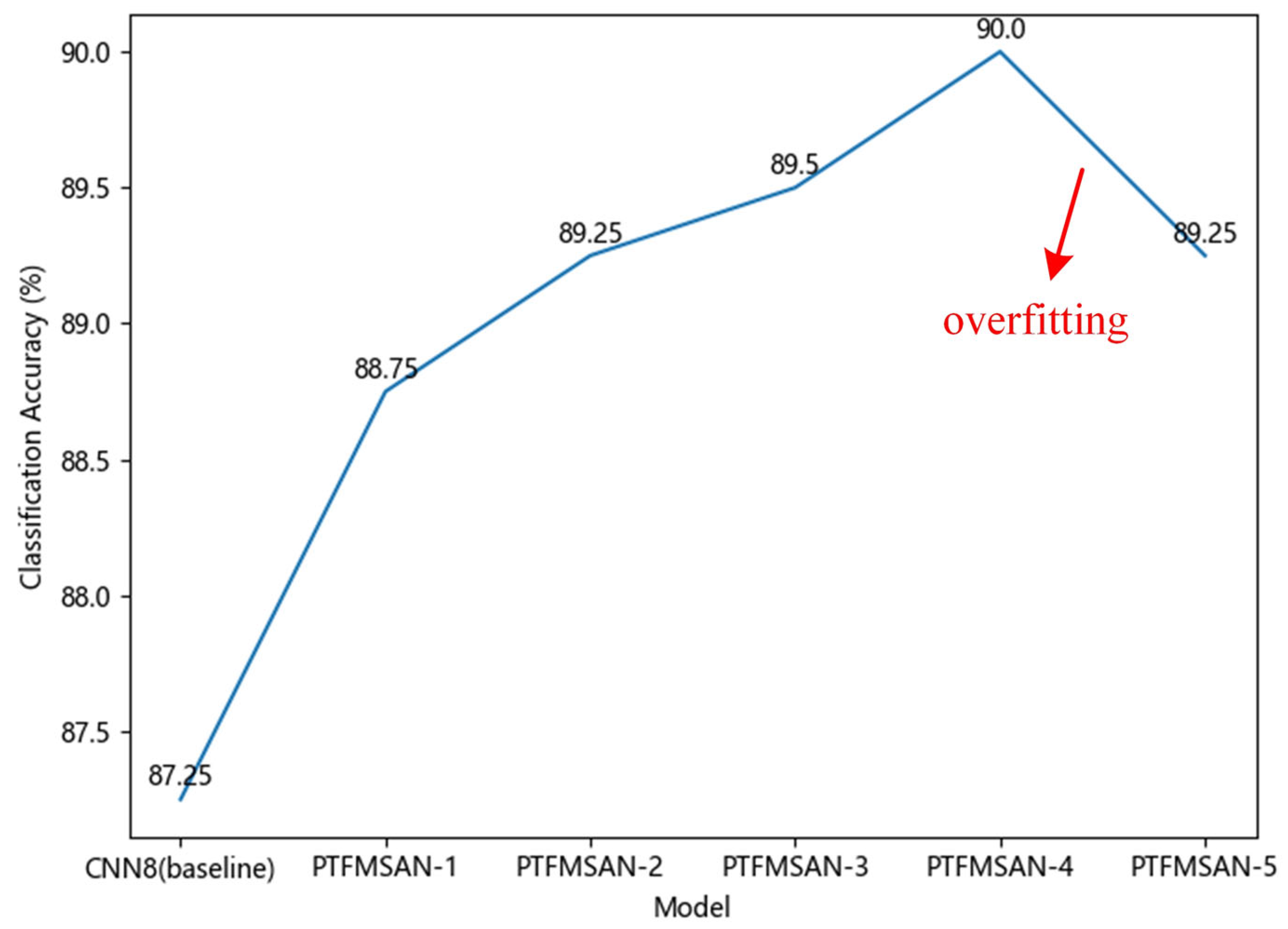

(4) A parallel time-frequency multi-scale attention network (PTFMSAN) for ESC that combines PTFMSA modules with convolution layers and processes raw audio input directly. Experimental results on the ESC-50 dataset show that PTFMSAN outperforms the baseline network and achieves a classification accuracy of 90%, which is higher than other state-of-the-art CNN-based networks.

The remainder of this paper is organized as follows. Section 2 reviews related research and motivates the proposed modules and network. Section 3 describes the proposed methodology. Section 4 presents the experimental work. Section 5 gives the results and discussion. Section 6 presents the conclusions.

2. Related Research

Extensive experiments have shown that convolutional neural networks (CNNs) can deliver state-of-the-art performance compared with other architectures [22,23]. CNNs rose to prominence in the ImageNet [24] competition, where they performed convolution on 2D image features. However, a raw audio signal is one-dimensional. To apply CNNs to audio feature extraction, the signal is therefore converted into a 2D spectrogram, and spectrograms and mel-frequency cepstral coefficients (MFCCs) are commonly used as network inputs. For example, Piczak [25] used two convolutional layers followed by two fully connected layers to evaluate the potential of CNNs for environmental sound classification, and the resulting network achieved better classification accuracy than the other state-of-the-art methods of the time. Behnaz et al. [26] proposed a recurrent neural network (RNN) coupled with a CNN; their experiments show that this CNN-RNN architecture achieves better performance, but it requires a large dataset to perform efficiently.
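For reference, the conventional conversion from a 1D waveform to a 2D spectrogram described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the front end of any cited work: the frame length, hop size, and Hann window are assumed values, and MFCCs would additionally apply a mel filter bank, a logarithm, and a discrete cosine transform, which are not shown.

```python
import numpy as np


def log_spectrogram(wave, n_fft=1024, hop=512, eps=1e-10):
    """Log-magnitude STFT of a 1D signal, shape (n_fft // 2 + 1, n_frames).

    n_fft, hop and the Hann window are illustrative choices.
    """
    window = np.hanning(n_fft)
    n_frames = 1 + (len(wave) - n_fft) // hop
    # Slice the signal into overlapping, windowed frames: (n_frames, n_fft)
    frames = np.stack([wave[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    mag = np.abs(np.fft.rfft(frames, axis=1))   # (n_frames, n_fft // 2 + 1)
    return np.log(mag + eps).T                  # frequency on axis 0, time on axis 1


sr = 44100                                      # ESC-50 clips are 5 s at 44.1 kHz
t = np.arange(5 * sr) / sr
wave = np.sin(2 * np.pi * 1000 * t)             # 1 kHz test tone
spec = log_spectrogram(wave)
print(spec.shape)                               # (513, 429)
```

The 1 kHz tone appears as a horizontal line near bin 1000 × 1024 / 44100 ≈ 23, which is the kind of 2D pattern a CNN then processes.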

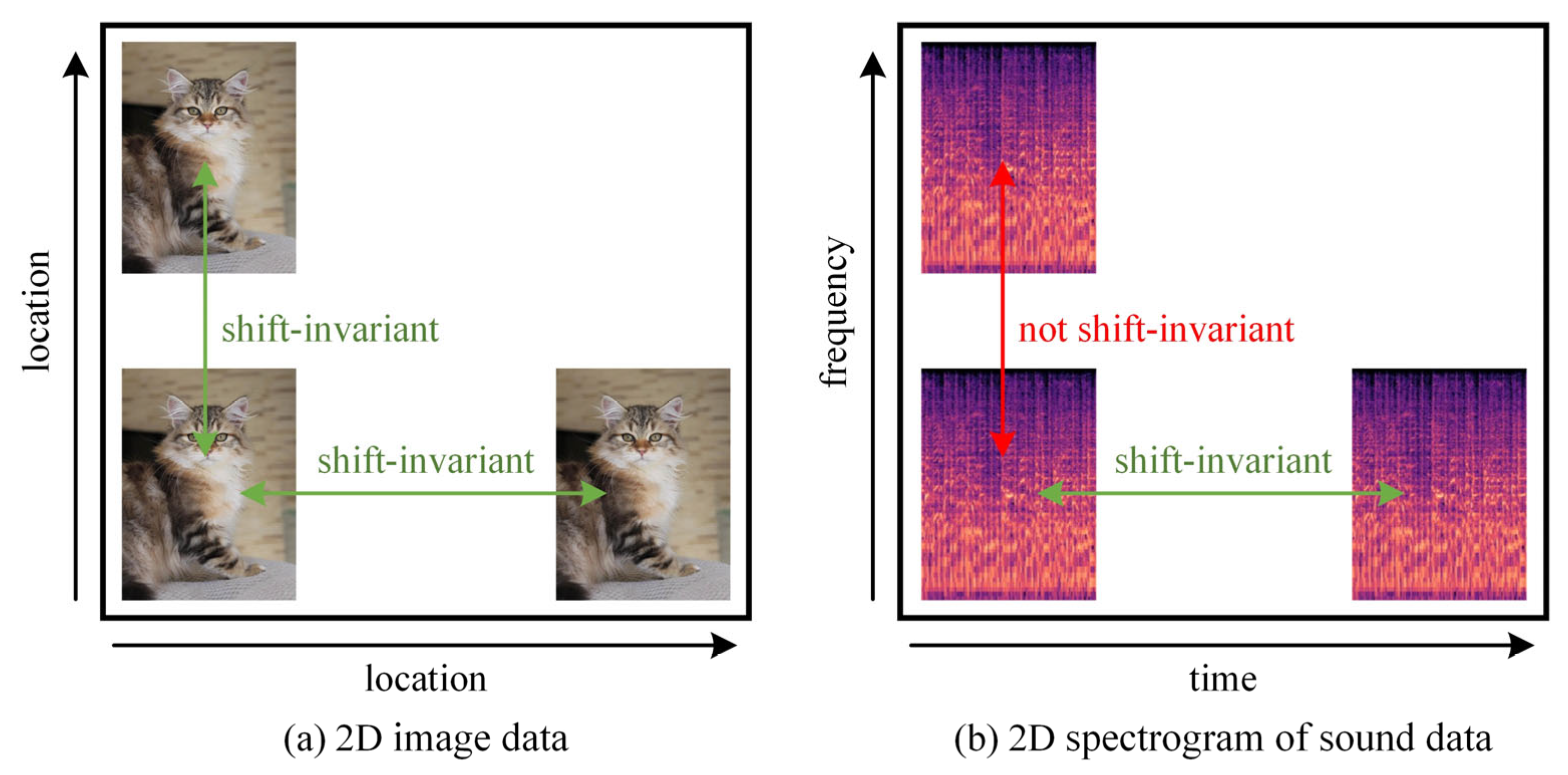

Two-dimensional convolution has been widely used to extract features from spectrograms of sound signals and has achieved some success. However, 2D convolution was originally designed to recognize 2D image data and is therefore not fully compatible with audio data.

Figure 1 illustrates how shift-invariance differs between 2D image data and 2D audio data. Shifting an image along either dimension changes only the position of its content, not the information it carries, so images are shift-invariant in both dimensions and convolution along either dimension is physically consistent. In contrast, a spectrogram sounds different when it is shifted along the frequency axis, because the shift changes the pitch of its components. The spectrogram of an audio signal is therefore shift-invariant only along the time axis, not along the frequency axis. To address this problem, Nam et al. [27] proposed frequency dynamic convolution (FDC), which applies kernels that adapt to the frequency components of the input. However, FDC extracts its attention with convolution rather than a fully connected layer, which loses global associations. Motivated by this, we propose an improved FDC that combines the two structures. In addition, the single scale of FDC limits its receptive field, so the extracted local features are not sufficiently rich. Based on these considerations, this paper proposes two modules: (a) a frequency multi-scale attention (FMSA) module and (b) a temporal multi-scale attention (TMSA) module. These modules apply the improved dynamic convolution for multi-scale feature extraction in the frequency and time dimensions, respectively, which enriches the features beyond the limited receptive field of single-scale convolution while preserving physical consistency.
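The frequency-axis argument can be checked numerically. The sketch below is an illustration only, not the FDC implementation of Nam et al. [27]; it uses circular padding so that shifts wrap around exactly, and the helper names are hypothetical. A single shared 3 × 3 kernel responds to a pattern identically whatever band it occupies (shifting the input along frequency just shifts the output), whereas choosing a different kernel per frequency bin, the idea behind frequency-dependent convolution, breaks this along frequency while keeping it along time.

```python
import numpy as np


def conv2d_wrap(x, kernels):
    """Cross-correlate x (F, T) using circular padding.

    kernels: (F, k, k), one kernel per frequency row. Passing identical
    kernels gives an ordinary shared-weight 2D convolution.
    """
    k = kernels.shape[-1]
    p = k // 2
    xp = np.pad(x, p, mode="wrap")
    out = np.empty_like(x, dtype=float)
    for f in range(x.shape[0]):
        for t in range(x.shape[1]):
            out[f, t] = np.sum(xp[f:f + k, t:t + k] * kernels[f])
    return out


rng = np.random.default_rng(0)
spec = rng.random((16, 32))                            # toy spectrogram: 16 bins x 32 frames
shared = np.repeat(rng.random((1, 3, 3)), 16, axis=0)  # same kernel in every bin
per_bin = rng.random((16, 3, 3))                       # a different kernel per bin


def equivariant(kernels, shift=4, axis=0):
    """True if shifting the input along `axis` only shifts the output."""
    conv_of_shifted = conv2d_wrap(np.roll(spec, shift, axis=axis), kernels)
    shifted_conv = np.roll(conv2d_wrap(spec, kernels), shift, axis=axis)
    return np.allclose(conv_of_shifted, shifted_conv)


print(equivariant(shared, axis=0))   # True: the shared kernel ignores which band it is in
print(equivariant(per_bin, axis=0))  # False: the response now depends on the band
print(equivariant(per_bin, axis=1))  # True: kernels are still shared along time
```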

To obtain rich sound information, features are extracted from both the time and frequency domains. The traditional way of acquiring time-frequency features is to cascade two modules directly [28,29]. However, in a cascade, temporal and frequency features may interfere with each other, which reduces the effectiveness of the network. We therefore propose the PTFMSA module, which uses parallel branches along the time and frequency dimensions to avoid interference between features of different dimensions. Because the branches differ in importance, fixing their weights would make the network less effective. We therefore use learnable parameters that dynamically adjust the weights of the branches during training to obtain optimal coefficients.

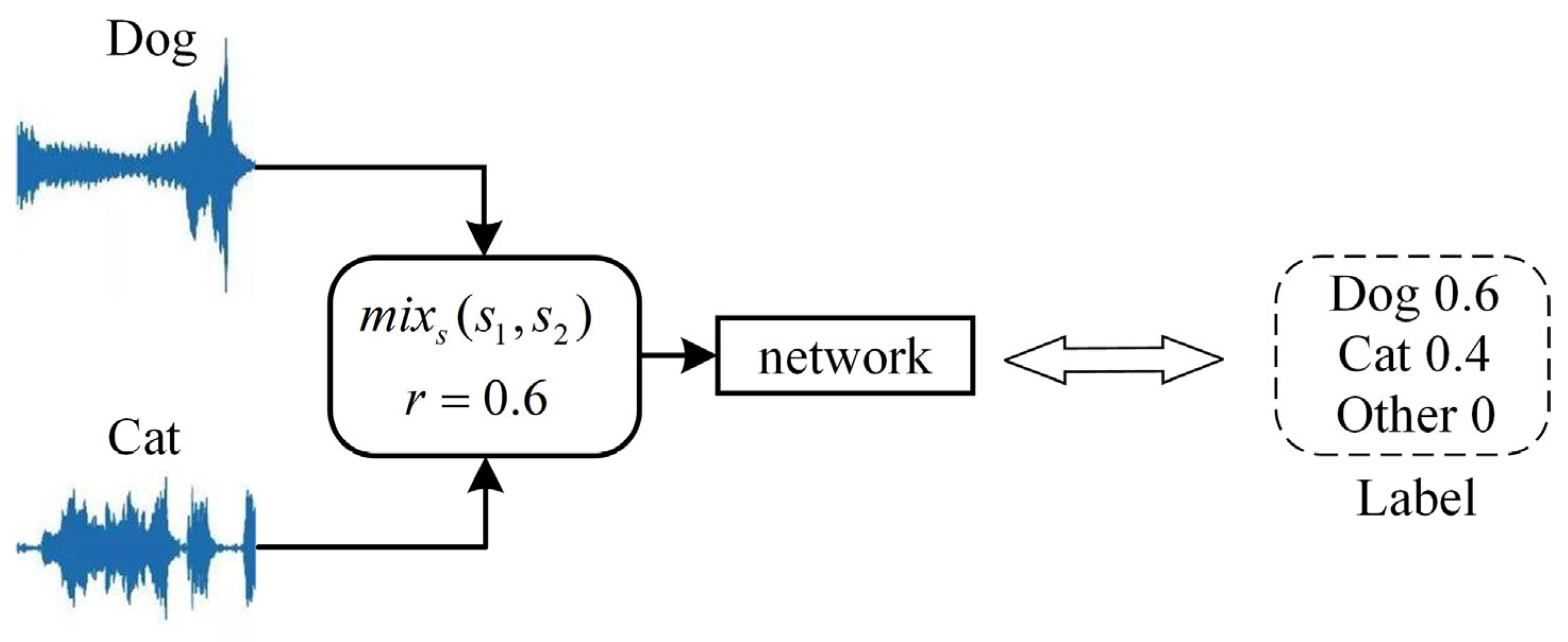

Building on the PTFMSA module, we propose a parallel time-frequency multi-scale attention network (PTFMSAN) for ESC tasks. This network uses 1D convolution in place of a fixed conversion from raw audio to spectrograms, which not only allows raw audio to be used directly as the network input but also allows the network to adjust the features dynamically. In addition, Tokozume et al. [30] proposed a mixing method called between-class (BC) learning, which increases the number of training examples during the training phase of deep learning models. BC learning generates between-class sounds by mixing two sounds belonging to different classes at a random ratio, and it takes the sound pressure levels of the original signals into account to balance the mixture. Their study shows that BC learning improves the performance of sound recognition networks and is an effective form of data augmentation. We therefore apply BC learning to the training data to improve the model. PTFMSAN is evaluated on a benchmark dataset [31] and achieves a classification accuracy of 90%, which is state-of-the-art among CNN-based networks. We also performed ablation experiments to verify the effectiveness of each module. Because of its small parameter count and high classification accuracy, PTFMSAN can be quickly trained and deployed on real-world devices for practical classification tasks.
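The BC mixing step can be sketched as follows. This follows the mixing rule described by Tokozume et al. [30], with one stated simplification: the sound pressure level is computed here as plain RMS in dB, whereas the original method uses an A-weighted, frame-based level. The function names are hypothetical, and this is not the training code used for PTFMSAN.

```python
import numpy as np


def gain_db(x, eps=1e-12):
    """Simplified sound pressure level in dB (plain RMS, not A-weighted)."""
    return 20.0 * np.log10(np.sqrt(np.mean(x ** 2)) + eps)


def bc_mix(x1, x2, t1, t2, r):
    """Mix sounds x1, x2 (one-hot labels t1, t2) with ratio r in (0, 1].

    Returns the mixed waveform and the soft label r * t1 + (1 - r) * t2.
    """
    g1, g2 = gain_db(x1), gain_db(x2)
    # p compensates for the level difference so that the perceived
    # proportion of x1 in the mixture follows r.
    p = 1.0 / (1.0 + 10 ** ((g1 - g2) / 20.0) * (1.0 - r) / r)
    # Dividing by sqrt(p^2 + (1 - p)^2) keeps the mixture's power stable.
    mix = (p * x1 + (1.0 - p) * x2) / np.sqrt(p ** 2 + (1.0 - p) ** 2)
    label = r * t1 + (1.0 - r) * t2
    return mix, label


rng = np.random.default_rng(0)
x1 = rng.standard_normal(44100)           # stand-ins for two 1 s clips
x2 = 0.1 * rng.standard_normal(44100)     # a quieter sound from another class
t1, t2 = np.eye(50)[3], np.eye(50)[7]     # one-hot labels for 50 classes
mix, label = bc_mix(x1, x2, t1, t2, r=rng.uniform())  # r drawn from U(0, 1)
```

The network is then trained to predict the mixing ratio through the soft label (BC learning uses a KL-divergence loss for this), rather than a single hard class.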

Recent Transformer-based ESC models: In addition to CNNs, Transformer-based techniques have recently achieved exceptionally high accuracy on ESC-50. With AudioSet pre-training, the Audio Spectrogram Transformer (AST) achieves 95.6% accuracy, HTS-AT reaches 97.0%, and the Efficient Audio Transformer (EAT) achieves approximately 96%. These models typically require tens of millions of parameters and rely on deep architectures and extensive pre-training. In contrast, we focus on a compact CNN-based architecture (about 3.7 M parameters) that can be trained directly on ESC-50. Therefore, while we acknowledge the higher absolute accuracy of current Transformer-based models, we mainly compare with CNN baselines.

3. The Proposed Method

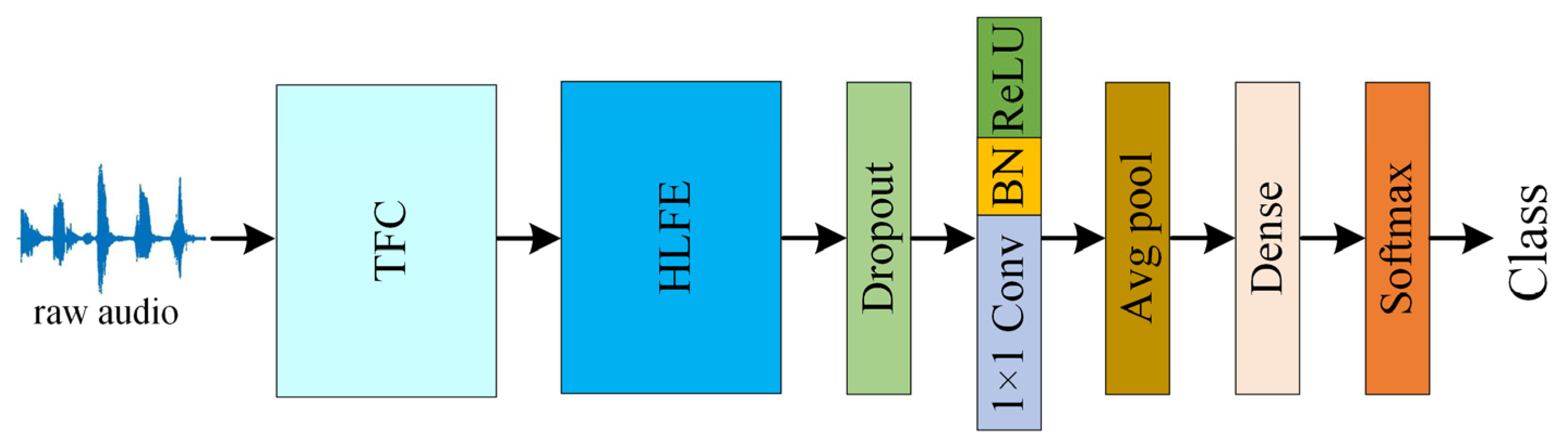

This section presents the parallel time-frequency multi-scale attention network (PTFMSAN) for ESC tasks, shown in Figure 2. First, the raw audio is converted into 2D time-frequency features by the time-frequency conversion (TFC) module. The high-level feature extraction (HLFE) module then extracts time-frequency features in depth. After that, a 2D convolutional layer with a kernel size of 1 and an average pooling (avgpool) layer are used for post-processing. Finally, the features are flattened and fed into the softmax output layer for classification. The following subsections first introduce the TFC and HLFE modules. Then, the improved dynamic convolution, which is the core of the PTFMSA module, is described in detail. Finally, the PTFMSA module is presented.
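The post-processing stage (1 × 1 convolution, average pooling, flattening, softmax) can be sketched with NumPy as below. The shapes are assumptions not stated in the text: the 1 × 1 convolution is taken to map the HLFE channels directly to one channel per class, and the average pooling is taken to be global over the remaining frequency and time positions. The function name is hypothetical.

```python
import numpy as np


def classification_head(features, w, b):
    """Post-processing after HLFE, under the assumptions stated above.

    features: (C, F, T) output of the HLFE module
    w: (n_classes, C) weights of the 1 x 1 convolution (hypothetical shape)
    b: (n_classes,) bias
    Returns class probabilities of shape (n_classes,).
    """
    # A 1 x 1 convolution is a per-position linear map across channels.
    logits_map = np.einsum("kc,cft->kft", w, features) + b[:, None, None]
    logits = logits_map.mean(axis=(1, 2))   # global average pooling, then flatten
    z = logits - logits.max()               # subtract max for numerical stability
    return np.exp(z) / np.exp(z).sum()      # softmax over classes


rng = np.random.default_rng(0)
feats = rng.standard_normal((64, 4, 6))     # toy HLFE output: 64 channels, 4 x 6 map
probs = classification_head(feats, rng.standard_normal((50, 64)), np.zeros(50))
print(probs.shape)                          # (50,)
```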

3.1. TFC and HLFE Module

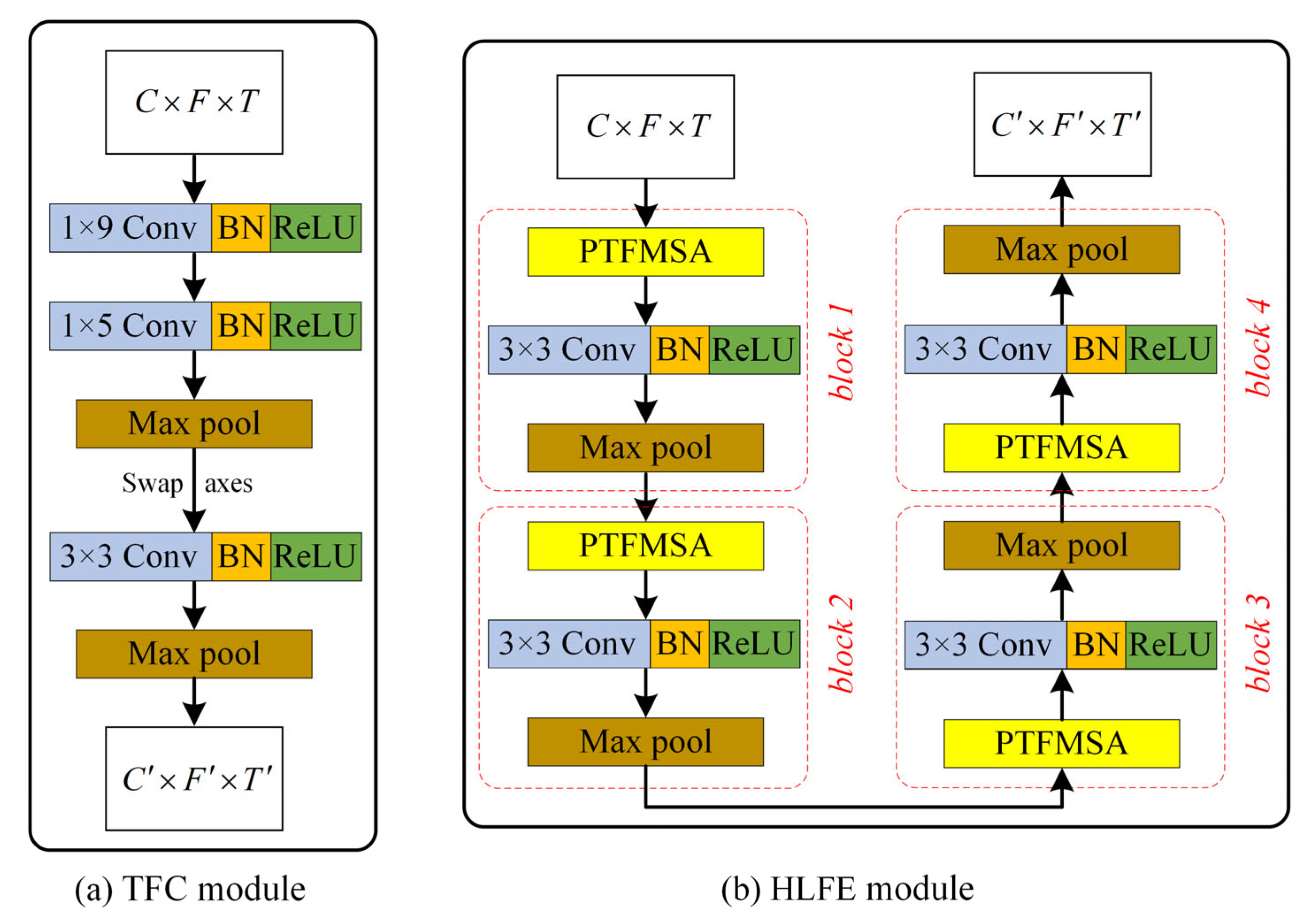

To apply 2D convolution to audio data, the raw audio signal usually has to be converted into a 2D spectrogram that serves as the network input. However, selecting appropriate frequency-domain features and their dimensions is time-consuming and relies on empirical tuning. The proposed TFC module performs the time-frequency conversion dynamically inside the network using convolution, so that raw audio can be used directly as the network input. As illustrated in Figure 2, the overall architecture begins with a raw waveform of size 1 × T. Figure 3a details the TFC block: the input waveform (1 × T) passes through two 1D convolution layers (kernel sizes 9 and 5) with BN and ReLU, followed by max-pooling. The resulting features are then reshaped and their axes swapped into a C × F × T representation, which serves as a learned, filter-bank-like input for the subsequent 2D operations. After this step, a 2D convolution layer with a kernel size of 3 performs preprocessing, followed by a max-pooling layer.
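The 1D part of the TFC block can be sketched as follows to show how a 1 × T waveform becomes a 2D map. This is a forward-pass illustration with several assumptions: batch normalization is omitted, the stride of the first convolution (2), the pooling size (4), and the filter counts (8 and 64) are hypothetical values, and the "reshape and swap" step is interpreted as treating the filters of the second convolution as frequency-like bands under a new channel axis. The weights are random placeholders.

```python
import numpy as np


def conv1d(x, w, stride=1):
    """Valid 1D cross-correlation. x: (C_in, T), w: (C_out, C_in, k) -> (C_out, T_out)."""
    c_out, c_in, k = w.shape
    t_out = (x.shape[1] - k) // stride + 1
    idx = stride * np.arange(t_out)[:, None] + np.arange(k)[None, :]  # (T_out, k)
    windows = x[:, idx]                                               # (C_in, T_out, k)
    return np.einsum("ctk,ock->ot", windows, w)


def maxpool1d(x, size):
    """Non-overlapping max pooling along time: (C, T) -> (C, T // size)."""
    c, t = x.shape
    t_out = t // size
    return x[:, :t_out * size].reshape(c, t_out, size).max(axis=2)


def tfc_frontend(wave, w1, w2, stride1=2, pool=4):
    """Hypothetical TFC front end: conv(9) -> ReLU -> conv(5) -> ReLU -> maxpool -> reshape."""
    h = np.maximum(conv1d(wave[None, :], w1, stride=stride1), 0.0)  # (8, T1)
    h = np.maximum(conv1d(h, w2), 0.0)                              # (64, T2)
    h = maxpool1d(h, pool)                                          # (F, T') with F = 64
    return h[None, :, :]                                            # add channel axis -> (1, F, T')


rng = np.random.default_rng(0)
wave = rng.standard_normal(1000)                  # short toy waveform, T = 1000
w1 = 0.1 * rng.standard_normal((8, 1, 9))         # kernel size 9, as in the text
w2 = 0.1 * rng.standard_normal((64, 8, 5))        # kernel size 5, as in the text
out = tfc_frontend(wave, w1, w2)
print(out.shape)                                  # (1, 64, 123)
```

The resulting 1 × F × T′ map would then go through the 2D convolution (kernel size 3) and max-pooling described above.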

Effective receptive field: The kernels of the two initial 1D convolutions in the TFC block are relatively small (9 and 5 samples, i.e., about 0.20 ms and 0.11 ms at 44.1 kHz), but stacking convolution and pooling layers makes the effective receptive field grow quickly. Consequently, the network can capture transients and other short-term events as well as the longer-term context required to model low-frequency content. Larger or dilated kernels at the input stage might further improve low-frequency modeling, which we note as an interesting direction for future research.
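The growth of the receptive field can be made concrete with the standard recurrence: each layer with kernel size k widens the field by (k − 1) times the current cumulative stride, and each stride s multiplies that cumulative stride. The layer stack below is hypothetical; only the kernel sizes 9 and 5 of the first two layers, the kernel size 3 of the 2D convolutions, and the largest PTFMSA scale of 7 come from the text, while all pooling sizes are assumptions.

```python
def receptive_field(layers):
    """Receptive field (in input samples) of a stack of conv/pool layers.

    layers: sequence of (kernel_size, stride) pairs along the time axis.
    Returns (receptive_field, cumulative_stride).
    """
    rf, jump = 1, 1
    for k, s in layers:
        rf += (k - 1) * jump   # widen by (k - 1) steps of the current input spacing
        jump *= s              # spacing between adjacent outputs, in input samples
    return rf, jump


tfc = [(9, 1), (5, 1), (4, 4)]            # two 1D convs + assumed max-pool of 4
pre = [(3, 1), (2, 2)]                    # 2D conv (k = 3) + assumed 2x pooling
block = [(7, 1), (3, 1), (2, 2)]          # PTFMSA (largest k = 7), conv, pool
rf, jump = receptive_field(tfc + pre + 4 * block)
print(rf, round(1000 * rf / 44100, 1))    # 1108 samples, about 25.1 ms at 44.1 kHz
```

Under these assumed pooling sizes, the field grows from 13 samples after the two 1D convolutions to 1108 samples at the end of the stack; the actual figure for PTFMSAN depends on its real strides and pooling sizes.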

After the 2D features are obtained, higher-level time-frequency features need to be extracted. As shown in Figure 3b, the HLFE module is formed by stacking 4 blocks. Each block consists of a PTFMSA layer and a 2D convolutional layer with a kernel size of 3, followed by a max-pooling layer. By default, every convolution layer is followed by a BN and a ReLU layer. Within each block, the PTFMSA module extracts multi-scale time-frequency features, the 2D convolutional layer refines them into higher-level features, and the max-pooling layer compresses them.

3.2. Improved Dynamic Convolution

As shown in Figure 4, the improved FDC and the improved temporal dynamic convolution (TDC) implement the improved dynamic convolution in the frequency and time domains, respectively. Taking the improved FDC as an example, it contains two branches: an attention branch and a convolutional branch. In the attention branch, the input feature map $\mathbf{X} \in \mathbb{R}^{C \times F \times T}$ is first compressed along the time dimension by average pooling. A 1 × k convolution followed by BN and ReLU is then applied to extract the frequency local correlation features $\mathbf{X}_L$, which ensures the shift-invariance of the 2D convolution along the frequency dimension. The channel count is reduced from C by an additional 1 × 1 pointwise convolution applied after the 1 × k operation, which serves as a channel projection for efficiency:

$$\mathbf{X}_L = \mathrm{ReLU}\Big(\mathrm{BN}\big(\mathrm{Conv}(\mathrm{avg}(\mathbf{X}, T))\big)\Big)$$

where avg(•, T), Conv(•), BN(•), and ReLU(•) denote average pooling along time, the convolution block, batch normalization, and ReLU activation, respectively.

Next, the frequency local correlation features $\mathbf{X}_L$ are compressed along the frequency dimension by average pooling, and a fully connected layer extracts the global attention $\mathbf{A}$. Using only convolution to extract the attention weights loses global features, while using only a fully connected layer makes the 2D convolution violate shift-invariance along the frequency dimension; this is why we combine convolutional and fully connected layers. Softmax is then applied along the channel dimension so that each attention weight lies between 0 and 1 and the weights of the different basis kernels sum to 1:

$$\mathbf{A} = S\big(\mathrm{FC}(\mathrm{avg}(\mathbf{X}_L, F))\big)$$

where avg(•, F), FC(•), and S(•) denote average pooling along frequency, the fully connected block, and softmax, respectively. After that, the convolutional branch applies k × k convolutions with the n basis kernels to generate n features $\mathbf{Y}_1, \dots, \mathbf{Y}_n$, which are multiplied by the corresponding weights from the attention branch and summed to obtain the final output $\mathbf{Y}$:

$$\mathbf{Y} = \sum_{i=1}^{n} \mathbf{A}_i \odot \mathbf{Y}_i$$

where n and ⊙ denote the number of basis kernels and element-wise multiplication, respectively. The improved TDC is analogous to the improved FDC; only the dimension of the average pooling differs.
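The two branches of the improved FDC can be sketched as a NumPy forward pass. This is a minimal illustration rather than the authors' implementation, and it rests on assumptions the text does not fix: batch normalization is omitted; the fully connected layer is assumed to output one weight per basis kernel and frequency bin (reshaped to n × F, which ties the FC size to a fixed F); and all weights are random placeholders. The names `improved_fdc` and `init_params` and the parameter keys are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv1d_same(a, w):
    """Cross-correlation along the last axis with zero 'same' padding.
    a: (C_in, F), w: (C_out, C_in, k) with odd k -> (C_out, F)."""
    p = w.shape[-1] // 2
    ap = np.pad(a, ((0, 0), (p, p)))
    win = sliding_window_view(ap, w.shape[-1], axis=1)    # (C_in, F, k)
    return np.einsum("cfk,dck->df", win, w)


def conv2d_same(x, w):
    """2D cross-correlation with zero 'same' padding.
    x: (C_in, F, T), w: (C_out, C_in, k, k) with odd k -> (C_out, F, T)."""
    k = w.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    win = sliding_window_view(xp, (k, k), axis=(1, 2))    # (C_in, F, T, k, k)
    return np.einsum("cftij,ocij->oft", win, w)


def softmax(z, axis):
    e = np.exp(z - z.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def init_params(rng, c_in, c_out, n_freq, n=4, k_att=3, k=3, c_red=4):
    """Random placeholder weights with hypothetical shapes (not trained values)."""
    return {
        "w_local": 0.1 * rng.standard_normal((c_in, c_in, k_att)),     # 1 x k conv
        "w_point": 0.1 * rng.standard_normal((c_red, c_in)),           # 1 x 1 projection
        "w_fc": 0.1 * rng.standard_normal((n * n_freq, c_red)),        # FC layer
        "w_basis": 0.1 * rng.standard_normal((n, c_out, c_in, k, k)),  # n basis kernels
    }


def improved_fdc(x, params):
    """Forward pass of an improved-FDC-style layer. x: (C, F, T)."""
    n, f = params["w_basis"].shape[0], x.shape[1]
    # Attention branch: average over time, 1 x k conv along frequency,
    # 1 x 1 channel projection, ReLU (BN omitted).
    a = conv1d_same(x.mean(axis=2), params["w_local"])          # (C, F)
    a = np.maximum(params["w_point"] @ a, 0.0)                  # (C_red, F)
    # Average over frequency; the FC layer then produces global attention.
    logits = (params["w_fc"] @ a.mean(axis=1)).reshape(n, f)    # (n, F)
    att = softmax(logits, axis=0)                               # sums to 1 over the n kernels
    # Convolutional branch: one k x k convolution per basis kernel.
    ys = np.stack([conv2d_same(x, w) for w in params["w_basis"]])  # (n, C_out, F, T)
    # Weight each basis output per frequency bin and sum over kernels.
    return np.einsum("nf,noft->oft", att, ys), att


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16, 20))        # C = 8 channels, F = 16 bins, T = 20 frames
params = init_params(rng, c_in=8, c_out=12, n_freq=16)
y, att = improved_fdc(x, params)
print(y.shape, att.shape)                   # (12, 16, 20) (4, 16)
```

Because the attention weights sum to 1 over the n basis kernels, making all basis kernels identical reduces the layer to an ordinary convolution, which is a convenient sanity check. An improved TDC sketch would follow the same pattern with the roles of F and T swapped.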

3.3. Parallel Time-Frequency Multi-Scale Attention (PTFMSA) Module

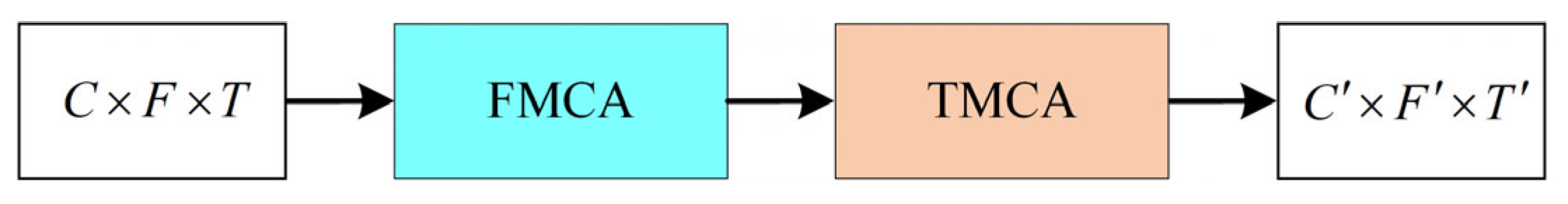

The traditional way of acquiring time-frequency features is to cascade two modules directly [28,29], as shown in Figure 5. However, in a cascade, temporal and frequency features may interfere with each other. When temporal features are extracted, the network focuses on the correlation between time frames and suppresses the importance of different frequency bands; when frequency features are extracted, it focuses on the importance of different frequency bands and ignores the correlation between time frames. We therefore propose the parallel time-frequency multi-scale attention (PTFMSA) module, shown in Figure 6, which uses parallel branches along the time and frequency dimensions to avoid interference between features of different dimensions. The 1 × 1 Conv branch adjusts the number of channels of the input features so that the branch outputs can be added together. The importance coefficients of the branches are also learned during training so that they reach optimal values. The module output can be expressed as

$$\mathbf{Z} = \alpha \, \mathbf{Z}_{F} + \beta \, \mathbf{Z}_{T} + \gamma \, \mathbf{Z}_{C}$$

where $\alpha$, $\beta$, and $\gamma$ are learnable dynamic parameters, all initialized to 1/3; $\mathbf{Z}_{F}$, $\mathbf{Z}_{T}$, and $\mathbf{Z}_{C}$ are the outputs of the FMSA, TMSA, and 1 × 1 Conv branches, respectively; and $\mathbf{Z}$ is the sum of the three weighted branch features.

Experimental results show that learnable parameters that dynamically adjust the branch weights during training perform better than fixing all three weights at 1/3. After the BN layer, we use a GLU layer in place of ReLU, which helps the network capture long-term dependencies and contextual information.
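The weighted fusion and the GLU activation can be sketched as follows. In this sketch, `alpha`, `beta`, and `gamma` are plain floats; in training they would be learnable scalars initialized to 1/3. Batch normalization is omitted, and the GLU follows its common definition (split the channels in half and gate one half with the sigmoid of the other), which halves the channel count; how PTFMSA accounts for this is not stated in the text, so the sketch simply assumes an even number of branch channels. The function names are hypothetical.

```python
import numpy as np


def glu(z, axis=0):
    """Gated linear unit: first half of the channels gated by sigmoid of the second half."""
    a, b = np.split(z, 2, axis=axis)
    return a / (1.0 + np.exp(-b))     # equals a * sigmoid(b)


def ptfmsa_fuse(z_f, z_t, z_c, alpha, beta, gamma):
    """Weighted sum of the FMSA, TMSA and 1 x 1 Conv branch outputs, then GLU.

    All inputs have shape (C, F, T) with even C; BN is omitted.
    """
    z = alpha * z_f + beta * z_t + gamma * z_c
    return glu(z, axis=0)


rng = np.random.default_rng(0)
z_f, z_t, z_c = (rng.standard_normal((8, 16, 20)) for _ in range(3))
out = ptfmsa_fuse(z_f, z_t, z_c, 1 / 3, 1 / 3, 1 / 3)   # initial weights of 1/3
print(out.shape)                                         # (4, 16, 20)
```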

As shown in Figure 7a,b, we propose a frequency multi-scale attention (FMSA) module and a temporal multi-scale attention (TMSA) module, which apply the improved dynamic convolution for multi-scale feature extraction in the frequency and time dimensions, respectively. This enriches the features beyond the limited receptive field of single-scale convolution while ensuring physical consistency. For the improved FDC, the number of basis kernels affects both the performance of the module and its convergence speed; we determined the optimal number of basis kernels by experimentally comparing different settings. For multi-scale extraction, we set k to 3, 5, and 7, corresponding to improved FDCs at three different scales, which are applied to the input features to obtain features with different receptive fields. For the scale with k = 1, a convolution with kernel size 1 replaces the improved FDC, which reduces computation and helps avoid vanishing or exploding gradients. To prevent features of different scales from interfering with each other, we concatenate these four scale features along the channel dimension rather than adding them. With this design, the FMSA module both enriches the receptive fields of the features and enforces frequency dependency on the 2D convolution. The TMSA module, shown in Figure 7b, implements the multi-scale attention module along the time dimension. It follows the same design as the FMSA module, except that the dimension is changed from frequency to time, which strengthens the modeling of the correlation and importance of time frames at different scales.
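The multi-scale structure of FMSA (kernel sizes 1, 3, 5, and 7, concatenated along the channel axis) can be sketched as below. To keep the example short and self-contained, plain k × k convolutions stand in for the improved FDCs at k = 3, 5, and 7; each branch is assumed to produce the same number of channels; and the weights are random placeholders. The function name `multi_scale_concat` is hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv2d_same(x, w):
    """2D cross-correlation with zero 'same' padding.
    x: (C_in, F, T), w: (C_out, C_in, k, k) with odd k -> (C_out, F, T)."""
    k = w.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    win = sliding_window_view(xp, (k, k), axis=(1, 2))   # (C_in, F, T, k, k)
    return np.einsum("cftij,ocij->oft", win, w)


def multi_scale_concat(x, branch_weights):
    """Apply one branch per kernel size and concatenate the outputs along channels.

    branch_weights: list of (C_branch, C_in, k, k) arrays, e.g. k = 1, 3, 5, 7.
    Plain convolutions stand in for the improved FDCs here.
    """
    outs = [conv2d_same(x, w) for w in branch_weights]
    return np.concatenate(outs, axis=0)   # concatenate (not add) to keep scales separate


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16, 20))                       # (C, F, T)
ws = [0.1 * rng.standard_normal((6, 8, k, k)) for k in (1, 3, 5, 7)]
out = multi_scale_concat(x, ws)
print(out.shape)                                           # (24, 16, 20)
```

Concatenation keeps each scale in its own channel slice (here channels 0 to 5 hold the k = 1 output), so later layers can still weigh the scales separately, whereas addition would mix them irreversibly.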

6. Conclusions

In this paper, we improved dynamic convolution by combining convolution and fully connected layers to extract both global and local features while ensuring the shift-invariance of 2D convolution in the frequency dimension. The proposed PTFMSA module applies the improved dynamic convolution for multi-scale feature extraction, and its parallel branches avoid mutual interference between time and frequency features. Based on the PTFMSA module, we proposed PTFMSAN for ESC tasks. PTFMSAN achieves a classification accuracy of 90% on ESC-50, which is competitive among CNN-based networks. Although recent Transformer-based models achieve higher absolute accuracy on ESC-50 with large-scale pre-training, our results demonstrate that compact CNN-based architectures remain effective and competitive. In addition, ablation experiments confirm the effectiveness of the proposed modules. The proposed structure provides an efficient time-frequency feature extraction method for the ESC task, and its high performance and lightweight design allow PTFMSAN to be trained efficiently and deployed in real-world applications on resource-limited hardware. However, its generalization ability remains to be verified. In future work, we will apply it to other fields to assess its generalization ability, and we will explore further attention mechanisms to improve classification accuracy.