1. Introduction

The identification (ID) scheme suggested by Ahlswede and Dueck [1] in 1989 is conceptually different from the classical message transmission scheme proposed by Shannon [2]. In classical message transmission, the encoder transmits a message over a noisy channel; at the receiver side, the aim of the decoder is to output an estimate of this message based on the channel observation. In the ID paradigm, however, the encoder sends an ID message (also called the identity) over a noisy channel, and the decoder aims to check whether or not a specific ID message of special interest to the receiver has been sent. The sender has no prior knowledge of the specific ID message that the receiver is interested in. Ahlswede and Dueck demonstrated in [1] that if randomized encoding is used, then the size of ID codes for discrete memoryless channels (DMCs) grows doubly exponentially fast with the blocklength. If only deterministic encoding is allowed, then the number of identities that can be identified over a DMC scales exponentially with the blocklength. Nevertheless, the resulting rate on the exponential scale is still higher than the transmission rate, as shown in [3,4].
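To give a feeling for the two scaling regimes, the following minimal sketch compares the number of messages in the exponential regime, $2^{nR}$, with the number of identities in the doubly exponential regime, $2^{2^{nR}}$. Both quantities are reported through their base-2 logarithm, since the second one quickly exceeds any machine range. The function names and the rate value 0.5 are illustrative assumptions and do not come from the paper.

```python
def log2_num_messages(n: int, rate: float) -> float:
    """log2 of 2^(n*rate): exponential growth in the blocklength n."""
    return n * rate


def log2_num_identities(n: int, rate: float) -> float:
    """log2 of 2^(2^(n*rate)): doubly exponential growth in n."""
    return 2.0 ** (n * rate)


# Illustrative rate of 0.5 bits per channel use (an assumption).
for n in (10, 20, 40):
    print(n, log2_num_messages(n, 0.5), log2_num_identities(n, 0.5))
```

Even at moderate blocklengths, the logarithm of the number of identities is itself exponential in $n$, which is why ID rates are measured as $\frac{1}{n}\log\log N$ rather than $\frac{1}{n}\log N$.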

New applications in modern communications impose stringent reliability and latency requirements; examples include machine-to-machine and human-to-machine systems, digital watermarking [5,6,7], industry 4.0 [8], and 6G communication systems [9,10]. The aforementioned requirements are crucial for achieving trustworthiness [11]. For this purpose, the necessary latency resilience and data security requirements must be embedded in the physical domain. In this situation, the classical Shannon message transmission is limited and an ID scheme can achieve a better scaling behavior in terms of necessary energy and needed hardware components. It has been proved that information-theoretic security can be integrated into the ID scheme without paying an extra price for secrecy [12,13]. Further gains within the ID paradigm can be achieved by taking advantage of additional resources such as quantum entanglement, common randomness (CR), and feedback. In contrast to the classical Shannon message transmission, feedback can increase the ID capacity of a DMC [14]. Furthermore, it has been shown in [15] that the ID capacity of Gaussian channels with noiseless feedback is infinite. This holds for both rate definitions $\frac{1}{n}\log M$ (as defined by Shannon for classical transmission) and $\frac{1}{n}\log\log M$ (as defined by Ahlswede and Dueck for ID over DMCs). Interestingly, the authors of [15] showed that the ID capacity with noiseless feedback remains infinite regardless of the scaling used for the rate, e.g., double exponential, triple exponential, etc. In addition, the resource CR allows for a considerable increase in the ID capacity of channels [6,16,17]. The aforementioned communication scenarios emphasize that the ID capacity behaves completely differently from Shannon’s capacity.

A key technology within 6G communication systems is the joint design of radio communication and sensor technology [11]. This enables the realization of revolutionary end-user applications [18]. Joint communication and radar/radio sensing (JCAS) means that sensing and communication are jointly designed based on sharing the same bandwidth. Sensing and communication systems are usually designed separately, meaning that resources are dedicated to either sensing or data communications. The joint sensing and communication approach is a solution that can overcome the limitations of separation-based approaches. Recent works [19,20,21,22] have explored JCAS and showed that this approach can improve spectrum efficiency while minimizing hardware costs. For instance, the fundamental limits of joint sensing and communication for a point-to-point channel were studied in [23]. In this case, the transmitter wishes to simultaneously send a message to the receiver and sense its channel state through a strictly causal feedback link. Motivated by the drastic effects of feedback on the ID capacity [15], this work investigates joint ID and sensing. To the best of our knowledge, the problem of joint ID and sensing has not been treated in the literature yet. We study the problem of joint ID and channel state estimation over a DMC with i.i.d. state sequences. The sender simultaneously sends an ID message over the DMC with a random state and estimates the channel state via a strictly causal channel output. The random channel state is available to neither the sender nor the receiver. We consider the ID capacity–distortion tradeoff as a performance metric. This metric is analogous to the one studied in [24], and is defined as the supremum of all achievable ID rates such that some distortion constraint on state sensing is fulfilled. This model was motivated by the problem of adaptive and sequential optimization of the beamforming vectors during the initial access phase of communication [25]. We establish a lower bound on the ID capacity–distortion tradeoff. In addition, we show that in our communication setup, sensing can be viewed as an additional resource that increases the ID capacity.

Outline: The remainder of this paper is organized as follows. Section 2 introduces the system models, reviews key definitions related to identification (ID), and presents the main results, including a complete characterization of the ID capacity–distortion function. Section 3 provides detailed proofs of these main results. In Section 4, we explore an alternative, more flexible distortion constraint, namely, the average distortion, and establish a lower bound on the corresponding ID capacity–distortion function. Finally, Section 5 concludes the paper with a discussion of the results and potential directions for future research.

Notation: The distribution of an RV $X$ is denoted by $P_X$; for a finite set $\mathcal{X}$, we denote the set of probability distributions on $\mathcal{X}$ by $\mathcal{P}(\mathcal{X})$ and the cardinality of $\mathcal{X}$ by $|\mathcal{X}|$. If $X$ is an RV with distribution $P_X$, we denote the Shannon entropy of $X$ by $H(P_X)$, the expectation of $X$ by $\mathbb{E}(X)$, and the variance of $X$ by $\mathrm{Var}[X]$. If $X$ and $Y$ are two RVs with probability distributions $P_X$ and $P_Y$, then the mutual information between $X$ and $Y$ is denoted by $I(X;Y)$. Finally, $\mathcal{X}^c$ denotes the complement of $\mathcal{X}$, $\mathcal{X} \setminus \mathcal{Y}$ denotes the difference set, and all logarithms and information quantities are taken to base 2.

2. System Models and Main Results

Consider a discrete memoryless channel with random state

consisting of a finite input alphabet

, finite output alphabet

, finite state set

, and pmf

on

. The channel is memoryless, i.e., if the input sequence

is sent and the sequence state is

, then the probability of a sequence

being received is provided by

The state sequence

is i.i.d. according to the distribution

. We assume that the input

and state

are statistically independent for all

. In our settup depicted in

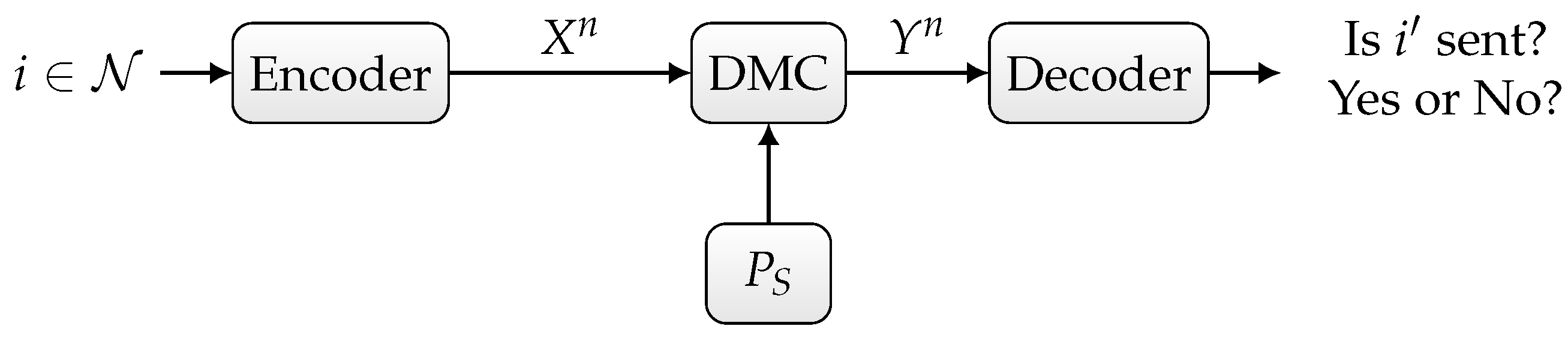

Figure 1, we assume that the channel state is known to neither the sender nor the receiver.
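The channel model can be made concrete with a small numerical sketch. It assumes that the channel is stored as a nested list `W[s][x][y]` holding $W_S(y|x,s)$ and that, because the state is i.i.d., independent of the input, and unknown to both terminals, the effective single-letter channel is the state-averaged channel $\sum_s P_S(s) W_S(y|x,s)$. The function names and the binary example values are hypothetical.

```python
def averaged_channel(W, P_S):
    """Return W_bar[x][y] = sum_s P_S(s) * W[s][x][y]."""
    num_x, num_y = len(W[0]), len(W[0][0])
    return [
        [sum(P_S[s] * W[s][x][y] for s in range(len(P_S))) for y in range(num_y)]
        for x in range(num_x)
    ]


def sequence_probability(W, x_seq, s_seq, y_seq):
    """Memoryless product prod_i W_S(y_i | x_i, s_i)."""
    prob = 1.0
    for x, s, y in zip(x_seq, s_seq, y_seq):
        prob *= W[s][x][y]
    return prob


# Hypothetical example: state 0 is a BSC(0.1), state 1 is a BSC(0.3).
W = [
    [[0.9, 0.1], [0.1, 0.9]],
    [[0.7, 0.3], [0.3, 0.7]],
]
P_S = [0.5, 0.5]

W_bar = averaged_channel(W, P_S)
print(W_bar)
print(sequence_probability(W, x_seq=[0, 1], s_seq=[0, 1], y_seq=[0, 1]))
```

In this example the averaged channel is a BSC with crossover probability 0.2, and the sequence probability is the product of the two per-letter transition probabilities 0.9 and 0.7.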

In the sequel, we distinguish three scenarios:

1. Randomized ID over the state-dependent channel $W_S$, as depicted in Figure 1.
2. Deterministic or randomized ID over the state-dependent channel $W_S$ in the presence of noiseless feedback between the sender and the receiver, as depicted in Figure 2.
3. Joint deterministic or randomized ID and sensing, in which the sender wishes to simultaneously send an identity to the receiver and sense the channel state sequence based on the output of the noiseless feedback link, as depicted in Figure 3.

First, we define randomized ID codes for the state-dependent channel defined above.

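Definition 1. An $(n, N, \lambda_1, \lambda_2)$ randomized ID code with $\lambda_1 + \lambda_2 < 1$ for the channel $W_S$ is a family of pairs $\{(Q(\cdot|i), \mathcal{D}_i)\}_{i=1,\dots,N}$ with $Q(\cdot|i) \in \mathcal{P}(\mathcal{X}^n)$ and $\mathcal{D}_i \subset \mathcal{Y}^n$ for all $i \in \{1, \dots, N\}$, such that the errors of the first kind and the second kind are bounded as follows:

$$\sum_{x^n \in \mathcal{X}^n} Q(x^n|i) \sum_{s^n \in \mathcal{S}^n} P_S^n(s^n)\, W_S^n(\mathcal{D}_i^c | x^n, s^n) \le \lambda_1, \quad \forall i,$$

$$\sum_{x^n \in \mathcal{X}^n} Q(x^n|i) \sum_{s^n \in \mathcal{S}^n} P_S^n(s^n)\, W_S^n(\mathcal{D}_j | x^n, s^n) \le \lambda_2, \quad \forall i \neq j.$$

In the following, we define the achievable ID rate and ID capacity for our system model.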

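Definition 2.

1. The rate $R$ of a randomized $(n, N, \lambda_1, \lambda_2)$ ID code for the channel $W_S$ is $R = \frac{\log\log N}{n}$ bits.
2. The ID rate $R$ for $W_S$ is said to be achievable if for $\lambda \in (0, \frac{1}{2})$ there exists an $n_0(\lambda)$ such that for all $n \ge n_0(\lambda)$ there exists an $(n, 2^{2^{nR}}, \lambda, \lambda)$ randomized ID code for $W_S$.
3. The randomized ID capacity $C_{ID}(W_S)$ of the channel $W_S$ is the supremum of all achievable rates.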
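The two error probabilities of a randomized ID code can be evaluated by brute force for very small blocklengths. The sketch below is only an illustration under stated assumptions: the channel is represented by a single-letter matrix `W_bar[x][y]` (for instance, a state-averaged channel), each encoding distribution `Q[i]` is a dictionary from input tuples to probabilities, and each decoding set `D[i]` is a set of output tuples. All names and the toy code are hypothetical.

```python
def sequence_probability(W_bar, x_seq, y_seq):
    """Memoryless product prod_t W_bar[x_t][y_t]."""
    prob = 1.0
    for x, y in zip(x_seq, y_seq):
        prob *= W_bar[x][y]
    return prob


def id_error_probabilities(W_bar, Q, D):
    """Return (max error of the first kind, max error of the second kind)."""
    num_ids = len(Q)

    def prob_output_in(i, region):
        # Probability that the output falls in `region` when identity i is sent.
        return sum(
            q * sequence_probability(W_bar, x_seq, y_seq)
            for x_seq, q in Q[i].items()
            for y_seq in region
        )

    first_kind = max(1.0 - prob_output_in(i, D[i]) for i in range(num_ids))
    second_kind = max(
        prob_output_in(i, D[j])
        for i in range(num_ids)
        for j in range(num_ids)
        if i != j
    )
    return first_kind, second_kind


# Toy code over a noiseless binary channel with blocklength 2 (assumption).
W_bar = [[1.0, 0.0], [0.0, 1.0]]
a, b, c, d = (0, 0), (0, 1), (1, 0), (1, 1)
Q = [{a: 0.5, b: 0.5}, {b: 0.5, c: 0.5}, {c: 0.5, d: 0.5}]
D = [{a, b}, {b, c}, {c, d}]

lam1, lam2 = id_error_probabilities(W_bar, Q, D)
print(lam1, lam2)
```

The decoding sets in the toy code overlap, which is allowed in ID and is the reason an error of the second kind appears even over a noiseless channel.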

The following theorem characterizes the randomized ID capacity of the state-dependent channel when the state information is known to neither the sender nor the receiver.

Theorem 1. The randomized ID capacity of the channel $W_S$ is given by

$$C_{ID}(W_S) = C(W_S),$$

where $C(W_S)$ denotes the Shannon transmission capacity of $W_S$.

Proof. The proof of Theorem 1 follows from Theorem 6.6.4 of [26] and Equation (7.2) of [27]. Because the channel $W_S$ satisfies the strong converse property [26], the randomized ID capacity of $W_S$ coincides with its Shannon transmission capacity determined in [27]. □
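Since Theorem 1 reduces the randomized ID capacity to a Shannon capacity, its value can be approximated numerically. The following minimal sketch uses the Blahut–Arimoto iteration, a standard numerical method that is not part of this paper, and assumes that the relevant single-letter channel is a state-averaged matrix `W_bar[x][y]`. The function name and the example matrix are illustrative.

```python
import math


def blahut_arimoto(W_bar, iterations=500):
    """Approximate max_P I(X;Y) in bits for a DMC given as W_bar[x][y]."""
    num_x, num_y = len(W_bar), len(W_bar[0])
    p = [1.0 / num_x] * num_x
    for _ in range(iterations):
        # Output distribution induced by the current input distribution.
        q = [sum(p[x] * W_bar[x][y] for x in range(num_x)) for y in range(num_y)]
        # Divergence D(W_bar(.|x) || q) in nats for every input symbol.
        div = [
            sum(
                W_bar[x][y] * math.log(W_bar[x][y] / q[y])
                for y in range(num_y)
                if W_bar[x][y] > 0 and q[y] > 0
            )
            for x in range(num_x)
        ]
        weights = [p[x] * math.exp(div[x]) for x in range(num_x)]
        total = sum(weights)
        p = [w / total for w in weights]
    q = [sum(p[x] * W_bar[x][y] for x in range(num_x)) for y in range(num_y)]
    capacity = sum(
        p[x] * W_bar[x][y] * math.log2(W_bar[x][y] / q[y])
        for x in range(num_x)
        for y in range(num_y)
        if p[x] > 0 and W_bar[x][y] > 0
    )
    return capacity, p


# Hypothetical state-averaged channel: a BSC with crossover probability 0.2.
W_bar = [[0.8, 0.2], [0.2, 0.8]]
capacity, p_opt = blahut_arimoto(W_bar)
print(capacity, p_opt)
```

For this symmetric example the iteration stays at the uniform input distribution, and the result agrees with the closed form $1 - h_2(0.2)$, where $h_2$ is the binary entropy function.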

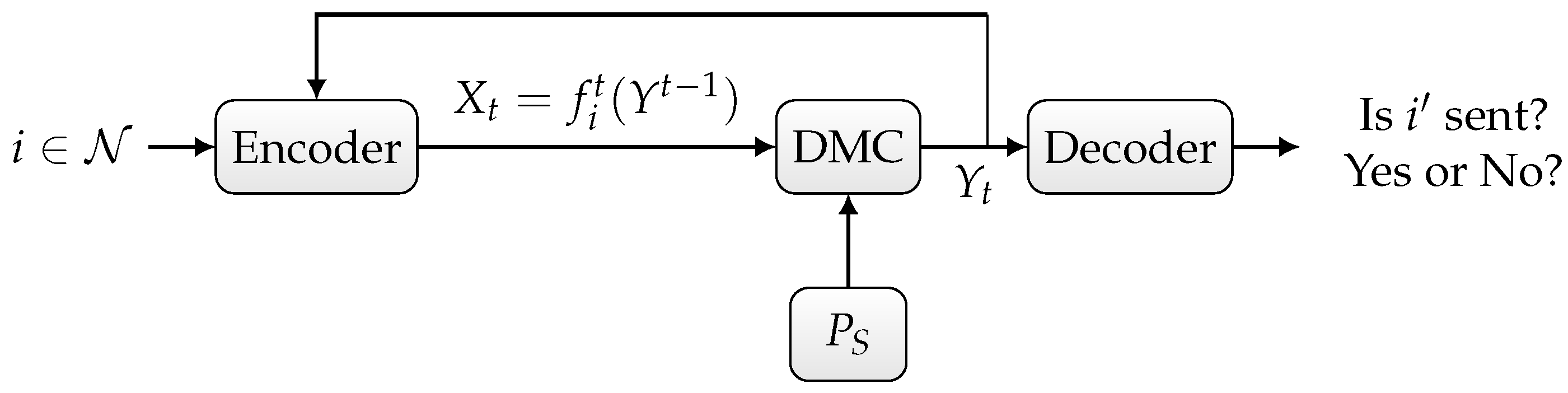

Now, we consider the second scenario depicted in Figure 2. Let further $Z_t$ denote the output of the noiseless backward (feedback) channel at time $t$:

$$Z_t = Y_t, \quad t \in \{1, \dots, n\}.$$

In the following, we define a deterministic and randomized ID feedback code for the state-dependent channel $W_S$.

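Definition 3. An $(n, N, \lambda_1, \lambda_2)$ deterministic ID feedback code $\{(\boldsymbol{f}_i, \mathcal{D}_i),\ i = 1, \dots, N\}$ with $\lambda_1 + \lambda_2 < 1$ for the channel $W_S$ is characterized as follows. The sender wants to send an ID message $i \in \{1, \dots, N\}$ that is encoded by the vector-valued function

$$\boldsymbol{f}_i = [f_i^1, f_i^2, \dots, f_i^n],$$

where $f_i^1 \in \mathcal{X}$ and $f_i^t : \mathcal{Y}^{t-1} \to \mathcal{X}$ for $t \in \{2, \dots, n\}$. At $t = 1$, the sender sends $f_i^1$. At $t \in \{2, \dots, n\}$, the sender sends $f_i^t(Y_1, \dots, Y_{t-1})$. The decoding sets $\mathcal{D}_i \subset \mathcal{Y}^n$, $i \in \{1, \dots, N\}$, should satisfy the following inequalities:

$$\sum_{s^n \in \mathcal{S}^n} P_S^n(s^n)\, W_S^n(\mathcal{D}_i^c | \boldsymbol{f}_i, s^n) \le \lambda_1, \quad \forall i,$$

$$\sum_{s^n \in \mathcal{S}^n} P_S^n(s^n)\, W_S^n(\mathcal{D}_j | \boldsymbol{f}_i, s^n) \le \lambda_2, \quad \forall i \neq j,$$

where $W_S^n(y^n | \boldsymbol{f}_i, s^n) = \prod_{t=1}^{n} W_S(y_t | f_i^t(y^{t-1}), s_t)$.

Definition 4. An $(n, N, \lambda_1, \lambda_2)$ randomized ID feedback code $\{(Q_F(\cdot|i), \mathcal{D}_i),\ i = 1, \dots, N\}$ with $\lambda_1 + \lambda_2 < 1$ for the channel $W_S$ is characterized as follows. The sender wants to send an ID message $i \in \{1, \dots, N\}$ that is encoded by the probability distribution

$$Q_F(\cdot|i) \in \mathcal{P}(\mathcal{F}_n),$$

where $\mathcal{F}_n$ denotes the set of all $n$-length functions $\boldsymbol{f}$ as in Definition 3. The decoding sets $\mathcal{D}_i \subset \mathcal{Y}^n$, $i \in \{1, \dots, N\}$, should satisfy the following inequalities:

$$\sum_{\boldsymbol{f} \in \mathcal{F}_n} Q_F(\boldsymbol{f}|i) \sum_{s^n \in \mathcal{S}^n} P_S^n(s^n)\, W_S^n(\mathcal{D}_i^c | \boldsymbol{f}, s^n) \le \lambda_1, \quad \forall i,$$

$$\sum_{\boldsymbol{f} \in \mathcal{F}_n} Q_F(\boldsymbol{f}|i) \sum_{s^n \in \mathcal{S}^n} P_S^n(s^n)\, W_S^n(\mathcal{D}_j | \boldsymbol{f}, s^n) \le \lambda_2, \quad \forall i \neq j.$$

Definition 5.

1. The rate $R$ of a (deterministic/randomized) $(n, N, \lambda_1, \lambda_2)$ ID feedback code for the channel $W_S$ is $R = \frac{\log\log N}{n}$ bits.
2. The (deterministic/randomized) ID feedback rate $R$ for $W_S$ is said to be achievable if for $\lambda \in (0, \frac{1}{2})$ there exists an $n_0(\lambda)$ such that for all $n \ge n_0(\lambda)$ there exists a (deterministic/randomized) $(n, 2^{2^{nR}}, \lambda, \lambda)$ ID feedback code for $W_S$.
3. The (deterministic/randomized) ID feedback capacity $C_{ID}^{d}(W_S)$ / $C_{ID}^{r}(W_S)$ of the channel $W_S$ is the supremum of all achievable rates.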

It has been demonstrated in [26] that noise increases the ID capacity of the DMC in the case of feedback. Intuitively, noise is considered a source of randomness, i.e., a random experiment whose outcome is provided to the sender and receiver via the feedback channel. Thus, adding a perfect feedback link enables the realization of a correlated random experiment between the sender and the receiver. The size of this random experiment can be used to compute the growth of the ID rate. This result has been further emphasized in [15,28], where it has been shown that the ID capacity of the Gaussian channel with noiseless feedback is infinite. This is because the authors of [15,28] provided a coding scheme that generates infinite common randomness between the sender and the receiver. Here, we want to investigate the effect of feedback on the ID capacity of the system model depicted in Figure 2. Theorem 2 characterizes the ID feedback capacity of the state-dependent channel $W_S$ with noiseless feedback. The proof of Theorem 2 is provided in Section 3.
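The common-randomness intuition above can be illustrated numerically: the randomness that feedback makes available per channel use is measured by the entropy of the channel output. The sketch below assumes a state-averaged channel `W_bar[x][y]` and, in analogy with the feedback results for DMCs in [14], evaluates the output entropy for the best fixed input symbol and for the best input distribution. The grid search is restricted to binary inputs for simplicity; the function names and example values are hypothetical.

```python
import math


def entropy_bits(dist):
    """Shannon entropy in bits of a probability vector."""
    return -sum(p * math.log2(p) for p in dist if p > 0)


def max_row_entropy(W_bar):
    """max over input symbols x of H(W_bar(.|x))."""
    return max(entropy_bits(row) for row in W_bar)


def max_output_entropy_binary(W_bar, grid=1000):
    """max over P = (1 - a, a) of H(sum_x P(x) W_bar(.|x)), binary input only."""
    best = 0.0
    for k in range(grid + 1):
        a = k / grid
        output = [
            (1 - a) * W_bar[0][y] + a * W_bar[1][y] for y in range(len(W_bar[0]))
        ]
        best = max(best, entropy_bits(output))
    return best


# Hypothetical state-averaged channel: a BSC with crossover probability 0.2.
W_bar = [[0.8, 0.2], [0.2, 0.8]]
print(max_row_entropy(W_bar))
print(max_output_entropy_binary(W_bar))
```

In this example a fixed input symbol yields the binary entropy of 0.2, whereas mixing the two inputs uniformly yields a uniform output and hence one full bit of randomness per channel use.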

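Theorem 2. If $C(W_S) > 0$, then the deterministic ID feedback capacity of $W_S$ is given by

$$C_{ID}^{d}(W_S) = \max_{x \in \mathcal{X}} H\big(\mathbb{E}[W_S(\cdot|x,S)]\big).$$

Theorem 3. If $C(W_S) > 0$, then the randomized ID feedback capacity of $W_S$ is given by

$$C_{ID}^{r}(W_S) = \max_{P \in \mathcal{P}(\mathcal{X})} H\Big(\sum_{x \in \mathcal{X}} P(x)\, \mathbb{E}[W_S(\cdot|x,S)]\Big).$$

Remark 1. It can be shown that the same ID feedback capacity formula holds if the channel state is known to either the sender or the receiver. This is because we achieve the same amount of common randomness as in the scenario depicted in Figure 2. Intuitively, the channel state is an additional source of randomness that we can take advantage of.

Now, we consider the third scenario depicted in Figure 3, where we want to jointly identify and sense the channel state. The sender comprises an encoder that sends a symbol $x_t = f_i^t(y^{t-1})$ for each identity $i \in \{1, \dots, N\}$ and delayed feedback output $y^{t-1} \in \mathcal{Y}^{t-1}$, along with a state estimator that outputs an estimation sequence $\hat{s}^n = (\hat{s}_1, \dots, \hat{s}_n) \in \mathcal{S}^n$ based on the feedback output and input sequence. We define the per-symbol distortion as follows:

$$d_t = \mathbb{E}\big[d(S_t, \hat{S}_t)\big],$$

where $d : \mathcal{S} \times \mathcal{S} \to [0, +\infty)$ is a distortion function and the expectation is over the joint distribution of $(S_t, \hat{S}_t)$ conditioned on the ID message $i \in \{1, \dots, N\}$.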

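Definition 6.

1. An ID rate–distortion pair $(R, D)$ for $W_S$ is said to be achievable if for every $\lambda \in (0, \frac{1}{2})$ there exists an $n_0(\lambda)$ such that for all $n \ge n_0(\lambda)$ there exists an $(n, 2^{2^{nR}}, \lambda, \lambda)$ (deterministic/randomized) ID code for $W_S$ and if $d_t \le D$ for all $t \in \{1, \dots, n\}$.
2. The deterministic ID capacity–distortion function $C_{ID}^{d}(D)$ is defined as the supremum of $R$ such that $(R, D)$ is achievable.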

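Without loss of generality, we choose the following deterministic estimation function $\hat{s}^*$:

$$\hat{s}^*(x, y) = \operatorname*{arg\,min}_{s' \in \mathcal{S}} \sum_{s \in \mathcal{S}} P_{S|XY}(s | x, y)\, d(s, s'),$$

where $\hat{s}^* : \mathcal{X} \times \mathcal{Y} \to \mathcal{S}$ is an estimator that maps a channel input–feedback output pair to a channel state. If there exist several functions $\hat{s}^*(\cdot, \cdot)$, we choose one randomly. We define the minimal distortion function for each input symbol $x \in \mathcal{X}$ as in [29]:

$$d^*(x) = \mathbb{E}_{SY}\big[d(S, \hat{s}^*(X, Y)) \,\big|\, X = x\big],$$

and the minimal distortion function for each input distribution $P \in \mathcal{P}(\mathcal{X})$ as

$$d^*(P) = \sum_{x \in \mathcal{X}} P(x)\, d^*(x).$$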

In the following, we establish the ID capacity–distortion function defined above.
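Before the main result, the estimator and the minimal distortion functions can be sketched for small alphabets. The code below is a hedged illustration only: it assumes that the estimator minimizes the posterior expected distortion given an input–output pair, that the state is independent of the input, and that the channel is stored as `W[s][x][y]`. Ties are broken by the lowest index here rather than randomly. All names and the example values are hypothetical.

```python
def best_estimator_and_distortion(W, P_S, dist):
    """Return (s_hat, d_star) with s_hat[x][y] the chosen state estimate and
    d_star[x] the resulting expected distortion for input symbol x."""
    num_s, num_x, num_y = len(W), len(W[0]), len(W[0][0])
    s_hat = [[0] * num_y for _ in range(num_x)]
    d_star = [0.0] * num_x
    for x in range(num_x):
        for y in range(num_y):
            # Unnormalized posterior P_S(s) * W_S(y|x,s); the normalization
            # does not change the minimizing estimate.
            joint = [P_S[s] * W[s][x][y] for s in range(num_s)]
            costs = [
                sum(joint[s] * dist[s][g] for s in range(num_s))
                for g in range(num_s)
            ]
            g_best = min(range(num_s), key=costs.__getitem__)
            s_hat[x][y] = g_best
            # Summing the minimal joint cost over y gives E[d(S, s_hat) | X = x].
            d_star[x] += costs[g_best]
    return s_hat, d_star


def distortion_of_distribution(P, d_star):
    """d*(P) = sum_x P(x) d*(x)."""
    return sum(p * dx for p, dx in zip(P, d_star))


# Hypothetical example: input 0 reveals the state through a BSC(0.1),
# input 1 produces a uniform output that carries no state information.
W = [
    [[0.9, 0.1], [0.5, 0.5]],  # state 0
    [[0.1, 0.9], [0.5, 0.5]],  # state 1
]
P_S = [0.8, 0.2]
hamming = [[0, 1], [1, 0]]

s_hat, d_star = best_estimator_and_distortion(W, P_S, hamming)
print(s_hat, d_star, distortion_of_distribution([0.5, 0.5], d_star))
```

In this example the informative input 0 leads to a smaller minimal distortion than input 1, for which the estimator can only guess the more likely state.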

Theorem 4. The deterministic ID capacity–distortion function of the state-dependent channel $W_S$ depicted in Figure 3 is given by

$$C_{ID}^{d}(D) = \max_{x \in \mathcal{X}_D} H\big(\mathbb{E}[W_S(\cdot|x,S)]\big),$$

where the set $\mathcal{X}_D$ is given by

$$\mathcal{X}_D = \{x \in \mathcal{X} : d^*(x) \le D\}.$$

We now turn our attention to a randomized encoder. In the following, we derive the ID capacity–distortion function of the state-dependent channel under the assumption of randomized encoding.

Theorem 5. The randomized ID capacity–distortion function of the state-dependent channel $W_S$ is given by

$$C_{ID}^{r}(D) = \max_{P \in \mathcal{P}_D} H\Big(\sum_{x \in \mathcal{X}} P(x)\, \mathbb{E}[W_S(\cdot|x,S)]\Big),$$

where the set $\mathcal{P}_D$ is given by

$$\mathcal{P}_D = \{P \in \mathcal{P}(\mathcal{X}) : d^*(P) \le D\}.$$

Remark 2. Randomized encoding achieves higher rates than deterministic encoding. This is because we are combining two sources of randomness: local randomness used for the encoding, and shared randomness generated via the noiseless feedback link. The result is analogous to randomized ID over DMCs in the presence of noiseless feedback, as studied in [14].

4. Average Distortion

In addition to the per-symbol distortion constraint, an alternative and more flexible distortion constraint is the average distortion. This approach is valuable because it relaxes the per-symbol fidelity requirement, allowing for minor variations in individual symbol quality as long as the overall average distortion remains below a specified threshold. As defined in [32], the average distortion for a sequence of symbols is given by

$$\bar{d} = \frac{1}{n} \sum_{t=1}^{n} \mathbb{E}\big[d(S_t, \hat{S}_t)\big].$$

This metric captures the average quality of the reconstructed sequence, making it suitable for applications where consistent strict fidelity for each symbol is not essential but where the overall fidelity of the transmission needs to remain within acceptable limits.
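The difference between the two constraints can be seen in a short sketch. It takes a list of per-symbol expected distortions and a threshold and checks both constraints; the function names and the numbers are illustrative assumptions.

```python
def satisfies_per_symbol(distortions, threshold):
    """Per-symbol constraint: every expected distortion is at most the threshold."""
    return all(d_t <= threshold for d_t in distortions)


def satisfies_average(distortions, threshold):
    """Average constraint: the mean expected distortion is at most the threshold."""
    return sum(distortions) / len(distortions) <= threshold


# Hypothetical per-symbol expected distortions over a block of length 4.
distortions = [0.05, 0.40, 0.05, 0.10]
threshold = 0.2

print(satisfies_per_symbol(distortions, threshold))
print(satisfies_average(distortions, threshold))
```

The second symbol violates the per-symbol constraint, while the block as a whole still meets the average constraint, so every scheme that is admissible under the per-symbol constraint is also admissible under the average one, but not conversely.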

In the case of a deterministic ID code, the average distortion can be expressed in a more detailed form as

Using the code construction method from Section 3.5 along with the minimum distortion condition defined in (17), we propose the following theorem, which provides a lower bound on the deterministic ID capacity–distortion function for a state-dependent channel under an average distortion constraint.

Theorem 6. The deterministic ID capacity–distortion function with average distortion constraint of the state-dependent channel is lower-bounded as follows: where the set is provided by

Despite the practical implications of this result, proving a converse theorem for this bound remains an open problem.

5. Conclusions and Discussion

In this work, we have studied the problem of joint ID and channel state estimation over a DMC with i.i.d. state sequences where the sender simultaneously sends an identity and senses the state via a strictly causal channel output. Having characterized the corresponding ID capacity–distortion function, we find that sensing can increase the ID capacity. The proofs of our theorems show that the generation of common randomness is a key tool for achieving a high ID rate. Feedback facilitates this common randomness generation. The ID rate can be further increased by adding local randomness at the sender.

Our framework closely mirrors the one described in [23], with the key distinction being that we utilize an identification scheme instead of the classical transmission scheme. We want to simultaneously identify the sent message and estimate the channel’s state. As noted in the results of [23], the capacity–distortion function is consistently smaller than the transmission capacity of the state-dependent DMC except when the distortion is infinite. This observation aligns with expectations for the message transmission scheme, as the optimization is performed over a constrained input set defined by the distortion function. However, this does not directly apply to the ID scheme. An interesting aspect is that in the deterministic encoding case with sensing, the number of identities scales doubly exponentially with the blocklength, as highlighted in Theorem 4. In contrast, the number of identities for the state-dependent DMC with deterministic encoding and without feedback scales only exponentially with the blocklength. This is because feedback significantly enhances the ID capacity, enabling double-exponential growth for the state-dependent DMC, as established in Theorem 2. This contrasts sharply with the message transmission scheme, where feedback does not increase the capacity of a DMC. Introducing an estimator into our framework naturally reduces the ID capacity compared to the scenario with feedback but without an estimator. This reduction occurs because the optimization is performed over a constrained input set defined by the distortion function. Nevertheless, the capacity–distortion function remains higher than in the case without feedback and without an estimator. This difference underscores a unique characteristic of the ID scheme, highlighting its distinct scaling behavior and potential advantages in certain scenarios.
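The effect of restricting the input set can be illustrated with a small numerical sketch. It assumes an entropy-type objective evaluated on a state-averaged channel `W_bar[x][y]` and takes the per-input minimal distortions `d_star[x]` as given. The numbers are hypothetical and chosen only to show how the admissible set shrinks as the distortion threshold decreases.

```python
import math


def entropy_bits(dist):
    """Shannon entropy in bits of a probability vector."""
    return -sum(p * math.log2(p) for p in dist if p > 0)


def constrained_max_entropy(W_bar, d_star, threshold):
    """max of H(W_bar(.|x)) over inputs x with d_star[x] <= threshold.
    Returns None when no input symbol is admissible."""
    admissible = [x for x in range(len(W_bar)) if d_star[x] <= threshold]
    if not admissible:
        return None
    return max(entropy_bits(W_bar[x]) for x in admissible)


# Hypothetical values: input 0 senses well but has a less random output,
# input 1 senses poorly but produces a uniform output.
W_bar = [[0.74, 0.26], [0.5, 0.5]]
d_star = [0.10, 0.20]

for threshold in (0.05, 0.15, 0.25):
    print(threshold, constrained_max_entropy(W_bar, d_star, threshold))
```

With the loosest threshold both inputs are admissible and the unconstrained maximum of one bit is attained; with the intermediate threshold only input 0 remains and the value drops; with the tightest threshold no input is admissible.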

We consider two cases, namely, deterministic and randomized identification. For a transmission system without sensing, it was shown in [1,3] that the number of messages grows exponentially, i.e., $N \sim 2^{nR}$.

Remarkably, Theorem 4 demonstrates that by incorporating sensing, the growth rate of the number of messages becomes double exponential ($N \sim 2^{2^{nR}}$); this result is notable, and closely parallels the findings on identification with feedback in [14].

In the case of randomized identification, Theorem 5 shows that the capacity is also improved by incorporating sensing. However, in both the deterministic and randomized settings, the scaling remains double exponential.

One application of message identification is in control and alarm systems [10,33]. For instance, it has been shown that identification codes can be used for status monitoring in digital twins [34]. In this context, our results demonstrate that incorporating a sensing process can significantly enhance the capacity.

Another potential application of our framework is molecular communication, where nanomachines use identification codes to determine when to perform specific actions such as drug delivery [35]. In this context, sensing the position of the nanomachines can enhance the communication rate. For such scenarios, it is also essential to explore alternative channel models such as the Poisson channel.

Furthermore, it is clear that in other applications it would be necessary to consider different distortion functions.

In the future, it would be interesting to apply the method proposed in this paper to other distortion functions. Furthermore, in practical scenarios, there are models where the sensing is performed either additionally or exclusively by the receiver. This suggests the need to study a wider variety of system models. For wireless communications, the Gaussian channel is more practical and widely applicable. Therefore, it would be valuable to extend our results to the Gaussian case (JIDAS scheme with a Gaussian channel as the forward channel). It has been shown in [15,28] that the ID capacity of a Gaussian channel with noiseless feedback is infinite. Interestingly, the ID capacity of a Gaussian channel with noiseless feedback remains infinite regardless of the scaling used for the rate, e.g., double exponential, triple exponential, etc. By introducing an estimator, we conjecture that the same results will hold, leading to an infinite capacity–distortion function. Thus, considering scenarios with noisy feedback is more practical for future research.
