1. Introduction

Part of Karl Friston’s massive and highly influential body of work is concerned with understanding human behavior by expressing theories in mathematical form. In his work, the theoretical framework is mainly predictive coding theory, mathematically framed in terms of the Free Energy principle [1,2,3,4,5]. Here, we also take a computational approach to understanding human behavior and focus on decision making in a noisy visual environment (e.g., in which direction an object is moving or what the name of an object is). The mathematical framework in this paper is the drift-diffusion model (DDM). The model assumes that decisions are made by sequentially sampling evidence from a stimulus and accumulating that evidence over time until a criterial amount is reached. The assumed stochasticity in the accumulation of evidence allows the DDM to capture the typical variation in both the speed (response time distribution) and accuracy of many decision processes. Consequently, the model has been applied to various aspects of cognition, including the processing of multiple object scenes in visual search tasks [6], word recognition in lexical decision tasks [7], and the discrimination of motion direction in fields of moving dots (random-dot kinematograms, RDKs [8,9,10,11,12,13,14]).

Not only is the DDM behaviorally valid, but its assumptions are also supported by neurophysiological studies [15,16] examining neural firing rates in the lateral intraparietal area (LIP) when subjects view RDK stimuli, a frequently used stimulus in perceptual decision-making experiments (e.g., [12]; see Figure 1 for an illustration). However, a potential shortcoming of the DDM is that it assumes the evidence accumulation process operates on a fully formed representation of perceptual information, available immediately after a brief encoding stage. In other words, it presumes evidence accumulation begins only once stimuli are fully encoded, thereby oversimplifying the integration of perceptual information. This assumption overlooks the possibility that integration may be stimulus-dependent and could simultaneously influence the decision-making process.

For example, forming a representation of luminous disks is very quick (around 100 ms), whereas forming a representation of motion direction in RDKs takes considerably longer, around 400 ms [17,18]. Given this long formation period, it seems important for a model to acknowledge that a meaningful proportion of the evidence accumulation begins before the representation is fully formed. Recently, Smith and colleagues proposed an extension of the DDM to rectify this shortcoming [19]. Their model, which we refer to here as the time-varying DDM (TV-DDM), introduces a mechanism of perceptual integration by which the representation of perceptual information is formed over time during the decision process and modulates the speed (drift rate) of evidence accumulation. Indeed, a series of studies by Smith and colleagues has shown that a model incorporating time-varying drift rates accounts better than the standard DDM for responses to stimuli (letters, bars, and grating patches) embedded in dynamic noise [20,21]. It is also worth noting that Heinke and colleagues have introduced a similar type of model for reaching movements, in which movements are assumed to be executed in parallel with the selection of the movement target (see [22,23] for details). Consequently, the movement control of the reaches is also affected by the target selection process, analogous to how the formation of the perceptual representation affects the decision process in TV-DDM. Not only do the assumptions of the TV-DDM incorporate the effect of perceptual integration on the decision process, but Smith and Lilburn [19] also demonstrated that the DDM’s disregard for this integration can be particularly problematic. Specifically, when applied to the RDK task, in which encoding is extended over time, the DDM’s assumption of an abrupt onset of perceptual evidence can lead to clear violations of “selective influence” and, in turn, false inferences [19,24].

The concept of “selective influence” goes back to Sternberg’s [25] additive factor method (see also [26] for a summary) and is set within a classical analysis of variance (ANOVA) framework of reaction time analysis. The assumption is that if an experimental factor is processed only by a specific corresponding cognitive process, then manipulation of this factor should affect only the processing duration within this cognitive process, while other cognitive processes are unaffected. Importantly, if two or more factors are varied in an experiment and each factor taps into a separate process, ANOVA should show only additive effects of these factors and no significant interaction. However, if factors share cognitive processes, significant interactions should be found. In model-based approaches, the concept of selective influence applies to parameters, which are assumed to represent cognitive constructs. Indeed, a key benefit of computational models is their ability to decompose overt behavior into latent cognitive constructs via their parameters. Hence, experimental manipulations designed to influence a given cognitive process should selectively influence parameter values that represent said process, while leaving other parameters unchanged (see [27,28] for a more formal treatment). Importantly, if a model is unable to accurately discriminate between different cognitive constructs through its parameters, then this benefit is undermined. It is worth mentioning that this framework is very different from the currently popular approach using deep neural networks, where no such link with theoretical constructs exists, leading to a black box (see [29] for a detailed discussion).
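The additive-factor logic described above can be illustrated with a small numerical sketch. The stage durations, the base time, and the function names below are hypothetical values chosen for illustration only; they are not taken from any study cited here. The sketch assumes strictly serial stages and shows that the 2 × 2 interaction contrast is zero when each factor affects its own stage, and non-zero when both factors act on a shared stage.

```python
from itertools import product

# Hypothetical stage durations in ms (illustrative values only).
stage_a = {"easy": 100.0, "hard": 180.0}   # factor A is assumed to affect only stage a
stage_b = {"fast": 150.0, "slow": 210.0}   # factor B is assumed to affect only stage b
BASE_MS = 200.0                            # time taken by all other processes

def interaction_contrast(cells):
    """2 x 2 interaction: (hard,slow) - (hard,fast) - (easy,slow) + (easy,fast)."""
    return (cells[("hard", "slow")] - cells[("hard", "fast")]
            - cells[("easy", "slow")] + cells[("easy", "fast")])

# Separate serial stages: mean RT is the sum of the stage durations.
additive_cells = {(a, b): BASE_MS + stage_a[a] + stage_b[b]
                  for a, b in product(stage_a, stage_b)}

# Shared stage: both factors scale the duration of one common process.
shared_cells = {(a, b): BASE_MS + stage_a[a] * stage_b[b] / 100.0
                for a, b in product(stage_a, stage_b)}

print("additive stages:", interaction_contrast(additive_cells))
print("shared stage:   ", interaction_contrast(shared_cells))
```

In a real data set the contrast would of course be tested statistically (e.g., via the ANOVA interaction term) rather than read off noise-free cell means.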

The DDM possesses three parameters with clear predictions for experimental manipulations of selective influence: speed of accumulation of evidence (drift rate); the amount of evidence needed to generate a response (response boundary); and the necessary time for encoding perceptual information plus execution time for the motor response (non-decision time). Under a strict selective influence assumption, the discriminability of stimuli should only affect the drift rate, instructing participants to respond either quickly or accurately should affect only the response boundary (less (more) evidence required for fast (accurate) decisions) and response handicapping should only affect non-decision time. However, there is now a good amount of evidence for violations of selective influence.

Studies exploring selective influence violations have focused heavily on the effect of speed–accuracy instructions and have reported that these instructions affect not only response boundary but also estimates of drift rate [30,31,32] and non-decision time [33,34,35,36,37]. For example, Voss et al. [37] used a color discrimination task in which speed–accuracy instructions were found to influence both response boundary and non-decision time but not drift rate. Starns et al. [38] reported effects of speed instructions on all three parameters in an item recognition task. Rae et al. [32] also reported that emphasizing speed results in decreased estimates of drift rate, and a reanalysis of these data by Ratcliff and McKoon [39] found additional decreases in non-decision time. A similar finding was reported by Dambacher and Hübner [40] using a DDM-based model of the flanker task, where increased time pressure led to decreased estimates of response boundaries, non-decision time and drift rates for both early response selection and stimulus selection. The strongest evidence for violation of selective influence through manipulations of response caution comes from an RDK study by Dutilh et al. [24]. The authors manipulated difficulty (two noise levels), speed–accuracy trade-off (speeded response vs. accurate response) and response bias (proportion of left/right stimuli). A total of 17 modelling teams were invited to fit a model of their choice to a subset of the data and were asked to infer from these fits which experimental manipulations had been made, with most researchers using some variant of the diffusion model. Overall, the modelling results indicate that DDMs tend to conflate manipulations of response caution with changes in non-decision time, with estimates of non-decision time being higher when participants are instructed to respond more cautiously. Importantly, Smith and Lilburn [19] replicated these findings but also demonstrated that this violation of selective influence is largely reduced by TV-DDM, underlining the importance of a perceptual integration stage.

It is important to note that not only have these speed–accuracy instructions been shown to influence estimates of response boundary, but there is also evidence that this manipulation leads participants to adopt response boundaries that “collapse” over time (e.g., [41]). Here, the assumption is that participants utilize time-dependent response boundaries to optimize their performance (see [41,42,43] for examples of formal models). Typically, it is assumed that these within-trial changes in response boundaries occur when costs are involved or deadlines are employed (see [41] for an example). However, a theoretical study by Malhotra et al. [44] showed that a mix of easy and difficult decisions can also lead to the same effect. Similarly, it has been proposed that adopting a collapsing boundary is an optimal strategy when decision evidence is unreliable [45], or when decisions are difficult (i.e., in difficult trials, subjects may reduce the criterial amount of evidence needed near the end of the trial in order to conserve cognitive resources, so that the next trial can be initiated [46]). On the other hand, a theoretical study by Boehm et al. [47] demonstrated that static response boundaries are robust for most levels of difficulty and that collapsing boundaries are more optimal only at extreme levels. Empirical support for collapsing boundaries comes from a study by Lin et al. [6] using the EZ2 variant of a DDM with data from a visual search task where participants are tasked with finding a designated target among non-targets. They found that the response boundary increased with increasing number of objects on the screen (i.e., the difficulty of the search task). Together, these findings imply a relationship between task difficulty and boundary, an effect which may manifest itself in apparent violations of selective influence in fits of the DDM.

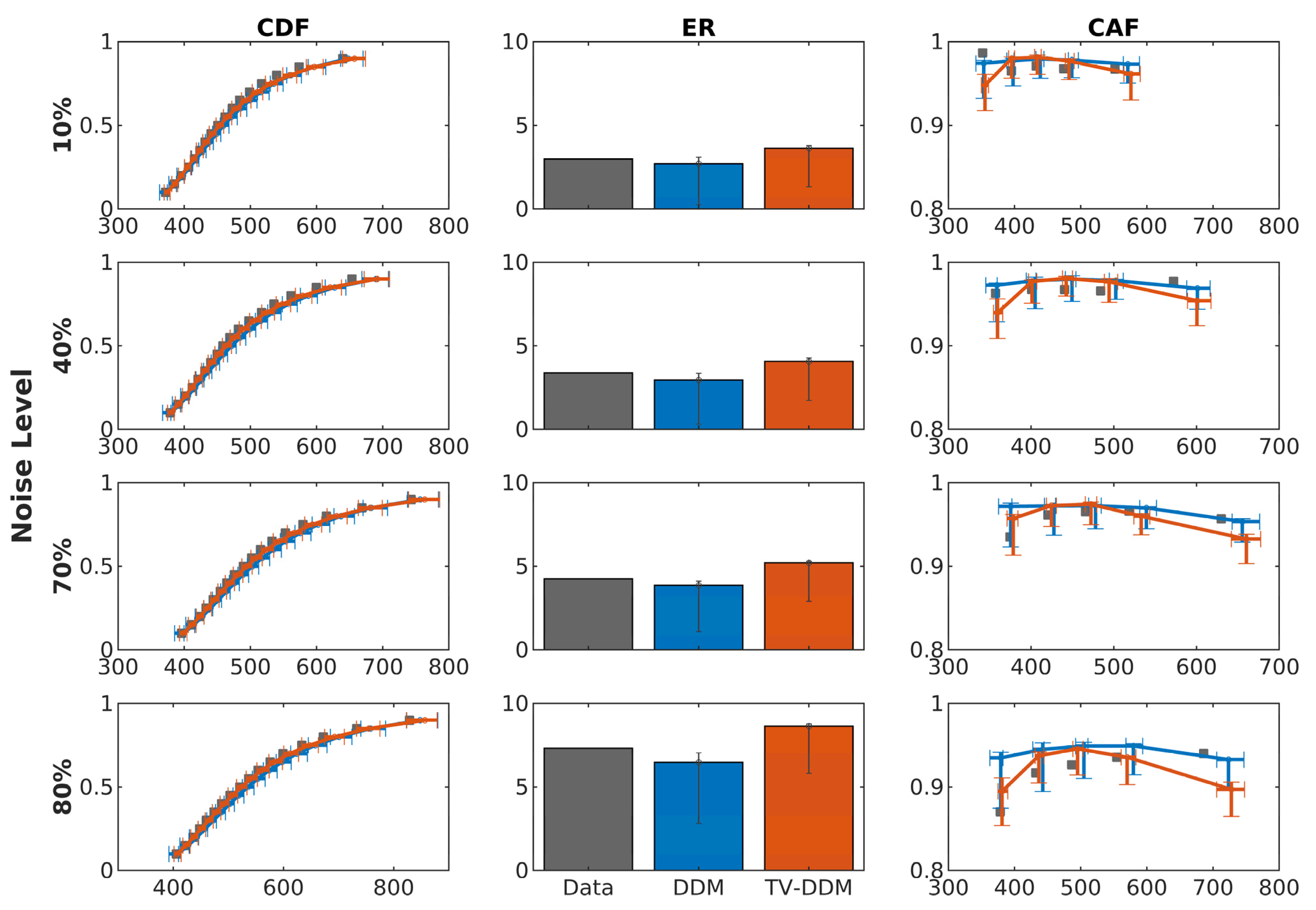

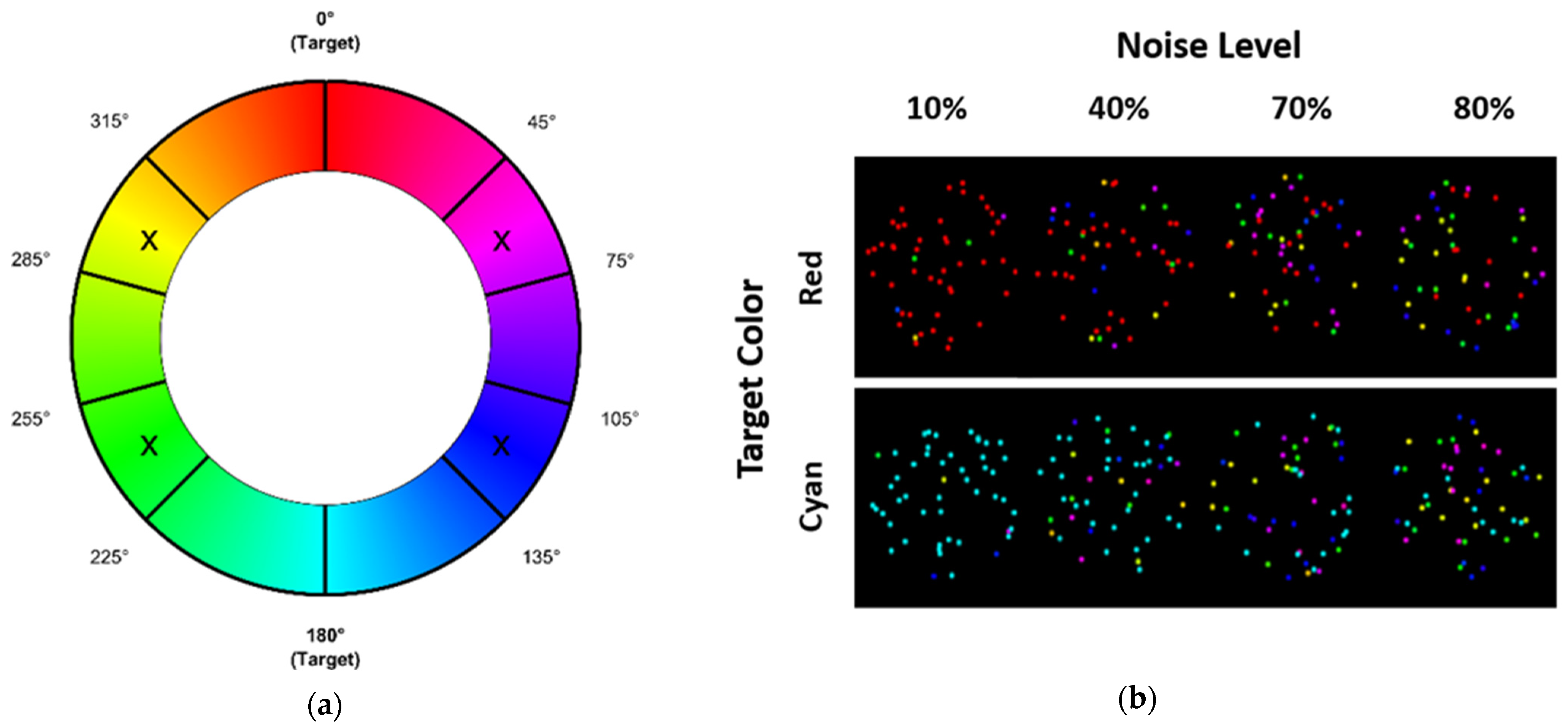

The present study aims to follow up these few studies and examine violations of selective influence by manipulating task difficulty with a standard RDK stimulus (Study 1). To realize the most complete test, we let all main parameters vary freely across all noise levels in both models, DDM and TV-DDM. From a strict viewpoint, both models should be able to explain the data with changes in drift rate only. However, the lack of integration of perceptual information in a standard DDM suggests that non-decision time may not be constant across the noise levels, as pointed out by Smith and colleagues. In contrast, TV-DDM should be able to mitigate this problem. Notably, Dutilh et al. [24] did not report a violation of selective influence for this experimental manipulation. However, this may have been due to the limited number of noise levels. Here, we test a wider range of noise levels (10%, 40%, 70%, and 80%). Finally, Malhotra et al.’s [44] study predicts that we could find within-trial changes in decision boundaries (i.e., different boundaries for different noise levels), as our study mixes easy and hard conditions.

In a second study, we introduced a novel type of noise manipulation in which we maintained the spatial structure of the RDK (i.e., randomly placed dots in an aperture). However, rather than randomizing the motion direction, we randomized the hue of the noise dots, used a certain hue (cyan or red) for signal dots (rather than left or right moving dots), and varied the proportion of signal dots analogous to the noise manipulation in RDKs of coherently moving dots. Hence, participants were asked to perform a color discrimination task while static noise was manipulated. We termed these novel stimuli random dot patterns (RDPs). These stimuli allowed us to test a potential confounding factor in Smith et al. [21], which showed that perceptual integration is pertinent in dynamic noise (see above). However, their stimuli with static noise manipulation require only little integration of spatial information, while RDKs require a good amount of such integration. Hence, it is conceivable that the support for TV-DDM may also have been due to this time-consuming spatial integration and not only the dynamic noise. If this conjecture is correct, we expect TV-DDM to also be superior for the RDPs, as they, like RDKs, require spatial integration.

Finally, it is important to note that we fit the models using Bayesian parameter estimation to test the selective influence assumption, and for the model comparison we used the ratio between marginal likelihoods (Bayes factor). This Bayesian methodology allowed us to consider prior knowledge about parameter estimates from Smith and Lilburn’s [19] maximum likelihood estimates for both models.
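As a minimal sketch of the model comparison mentioned above, the Bayes factor can be computed from the log marginal likelihoods of two models. The function name and the numerical values below are hypothetical placeholders, not results from this study, and the sketch assumes the log marginal likelihoods have already been estimated by some other procedure.

```python
import math

def bayes_factor(log_ml_a, log_ml_b):
    """Bayes factor BF_ab = p(data | model a) / p(data | model b).

    Works on log marginal likelihoods to avoid numerical underflow.
    """
    return math.exp(log_ml_a - log_ml_b)

# Hypothetical log marginal likelihoods (placeholders, NOT values from this study).
log_ml_tvddm = -1510.2
log_ml_ddm = -1515.0

bf = bayes_factor(log_ml_tvddm, log_ml_ddm)
print(f"Bayes factor (TV-DDM over DDM): {bf:.1f}")
```

A value above 1 favors the first model; because the marginal likelihood integrates over the prior, a model with more free parameters is not automatically favored.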

1.1. Model Descriptions

1.1.1. DDM

DDM’s evidence accumulation process is described by a Wiener process with drift rate v, which determines the speed and accuracy of the evidence accumulation process. The change in evidence (x) at any time point is described by:

$$dx = v\,dt + \sigma\,dW$$

where dt is a small timestep, σ is the diffusion coefficient which controls the noise in the process, and W represents the Wiener process, which adds normally distributed noise to the accumulation.

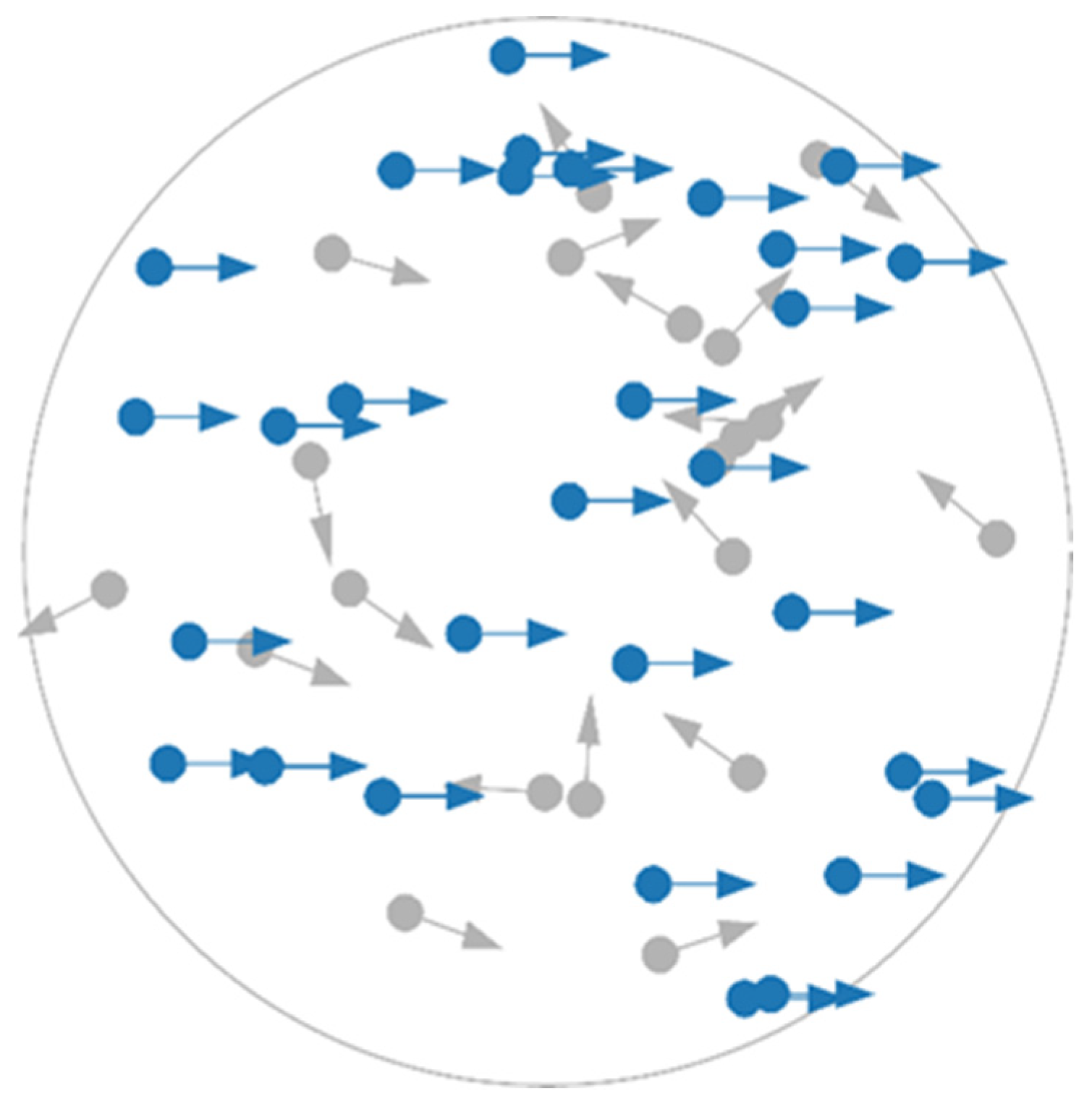

Figure 2 shows the resulting noisy accumulation of decision evidence. The diffusion process begins at start point z (usually A/2 assuming no bias) and accumulates evidence towards one of two response boundaries. If the diffusion process hits the upper boundary A, the correct response is given, whereas if the lower boundary is hit, an error response is given. The DDM assumes total RT is the sum of decision time (the time taken for the process to hit a boundary) and non-decision time Ter. Non-decision time encapsulates both the time required for stimulus encoding (Terenc in Figure 2) and the time taken to execute the motor response following a decision. The DDM also assumes some between-trial variability in start point (sz), non-decision time (ster), and drift rate (sv), which allows the model to account for a wider range of data patterns, particularly those regarding the relationship between RT and accuracy [48,49]. Both start point and non-decision time are assumed to be drawn from uniform distributions (with variability sz and ster, respectively), while drift rate is drawn from a normal distribution (with variability sv). Here, we fit the DDM with 6 free parameters, namely response boundary (A), non-decision time (Ter), drift rate (v), and the three between-trial variability parameters (variability in start point (sz), drift rate (sv), and non-decision time (ster)).
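The accumulation process described above can be sketched as a simple Euler-Maruyama simulation. This is an illustrative sketch, not the fitting procedure used in this paper: the function name, the step size, the time limit, and all parameter values are assumptions, and the between-trial variability parameters (sz, sv, ster) are deliberately omitted to keep the example short.

```python
import math
import random

def simulate_ddm_trial(v, A, ter, sigma=1.0, dt=0.001, max_t=5.0, rng=random):
    """Simulate one DDM trial with boundaries at 0 and A.

    Each step adds v*dt plus Gaussian noise with standard deviation
    sigma*sqrt(dt). Returns (rt_in_seconds, correct), or (None, None)
    if no boundary is reached within max_t seconds.
    """
    x = A / 2.0                      # unbiased start point z = A/2
    t = 0.0
    noise_sd = sigma * math.sqrt(dt)
    while t < max_t:
        x += v * dt + noise_sd * rng.gauss(0.0, 1.0)
        t += dt
        if x >= A:                   # upper boundary: correct response
            return ter + t, True
        if x <= 0.0:                 # lower boundary: error response
            return ter + t, False
    return None, None

# Hypothetical parameter values, chosen only for illustration.
rng = random.Random(1)
trials = [simulate_ddm_trial(v=1.5, A=1.2, ter=0.3, rng=rng) for _ in range(500)]
finished = [(rt, ok) for rt, ok in trials if rt is not None]
accuracy = sum(ok for _, ok in finished) / len(finished)
mean_rt = sum(rt for rt, _ in finished) / len(finished)
print(f"accuracy = {accuracy:.2f}, mean RT = {mean_rt:.3f} s")
```

Raising A in this sketch trades speed for accuracy, while lowering v makes responses both slower and less accurate, which is the pattern the selective influence assumptions rely on.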

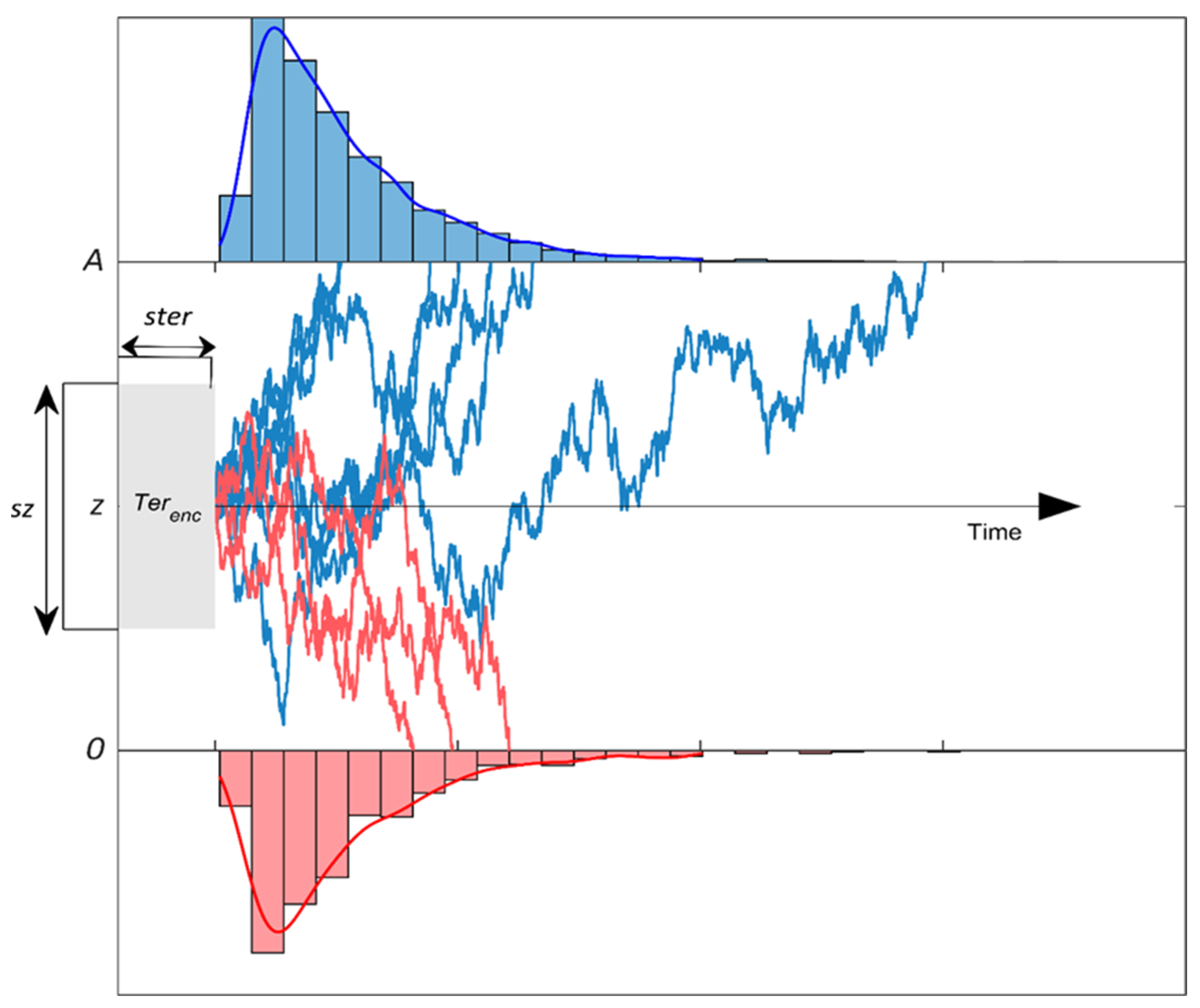

1.1.2. TV-DDM

For TV-DDM, Smith and Lilburn [

19] extended the Wiener process equation:

by adding an additional variance, σ

2, and θ(t), a “growth-rate function”. σ

2 aims to capture premature sampling prior to stimulus onset. The evidence growth term aims to reflect integration of perceptual information with dynamic noise described through an incomplete gamma function (see

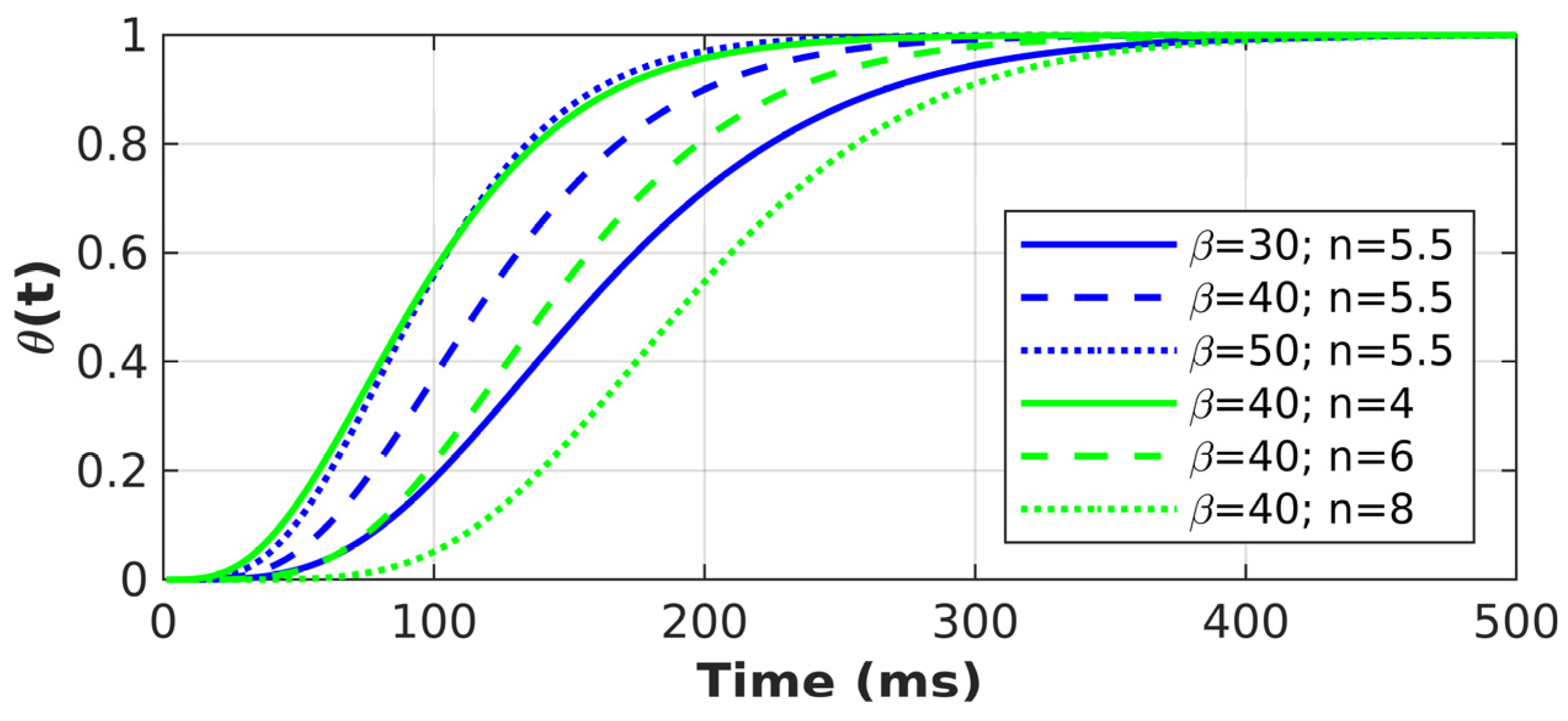

Figure 3 for an illustration):

with

β (evidence growth rate) and

n (evidence growth shape) as parameters and

Γ(n) as the gamma function. The gamma function can be interpreted as describing n-stages of linear filters, creating a representation of the perceptual information starting at no representation (0%) and leading to full representation (100%).
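The build-up of the perceptual representation described above can be sketched numerically. The sketch below assumes that the growth-rate function is the regularised lower incomplete gamma function evaluated at βt, which rises from 0 to 1; the function name `growth_rate`, the series-based implementation, and the example values of β and n are illustrative assumptions rather than the authors' code.

```python
import math

def growth_rate(t, beta, n, tol=1e-12, max_terms=10_000):
    """Regularised lower incomplete gamma P(n, beta*t), between 0 and 1.

    Uses the power series
        P(n, x) = exp(-x) * x**n * sum_k x**k / Gamma(n + k + 1),
    evaluated in log space so that non-integer n is also supported.
    """
    x = beta * t
    if x <= 0.0:
        return 0.0
    log_prefactor = -x + n * math.log(x)
    total = 0.0
    for k in range(max_terms):
        term = math.exp(log_prefactor + k * math.log(x) - math.lgamma(n + k + 1))
        total += term
        # Terms peak near k = x, so only stop once past the peak.
        if k > x and term < tol * total:
            break
    return min(total, 1.0)

# Hypothetical parameter values: beta in 1/s, n as the number of filter stages.
for t_ms in (50, 100, 200, 400):
    print(t_ms, "ms:", round(growth_rate(t_ms / 1000.0, beta=15.0, n=3), 3))
```

For n = 1 the function reduces to a simple exponential approach, 1 − exp(−βt); larger n delays the onset of the build-up, in line with the interpretation of n as a number of filtering stages.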

Figure 3 illustrates the influence of the parameters on this build-up. Note that the chosen values are in the range of the model-fitting results. Intuitively, both parameters influence the speed of the build-up, but n has a particularly strong influence on the onset of this build-up, consistent with its representing the number of filtering stages. In summary, TV-DDM has the following parameters: response boundary (A), non-decision time (Ter), drift rate (v), the evidence growth rate (β) and shape (n) parameters, premature sampling noise (σ2), and the between-trial variability in drift rate parameter (sv). Hence, TV-DDM has one more free parameter than DDM.
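To make the role of these parameters concrete, the following sketch simulates single TV-DDM trials in which the drift is scaled by the growth-rate function. Several points are assumptions of this sketch and should be checked against Smith and Lilburn [19]: the way the premature sampling noise enters the diffusion term (here, a constant variance `sigma2**2` plus a component `sigma1**2` that grows with θ(t)), the restriction to integer n (which permits a closed-form growth function), the omission of drift variability sv, and all names and parameter values.

```python
import math
import random

def erlang_growth(t, beta, n):
    """theta(t) for integer n: 1 - exp(-beta*t) * sum_{k<n} (beta*t)**k / k!."""
    x = beta * t
    if x <= 0.0:
        return 0.0
    partial = sum(x**k / math.factorial(k) for k in range(n))
    return max(0.0, 1.0 - math.exp(-x) * partial)

def simulate_tvddm_trial(v, A, ter, beta, n, sigma1=1.0, sigma2=0.3,
                         dt=0.001, max_t=5.0, rng=random):
    """Simulate one trial with time-varying drift v*theta(t), boundaries 0 and A."""
    x, t = A / 2.0, 0.0
    while t < max_t:
        theta = erlang_growth(t, beta, n)
        # ASSUMPTION: variance = sigma2^2 (premature sampling) + sigma1^2 * theta(t).
        noise_sd = math.sqrt((sigma2**2 + sigma1**2 * theta) * dt)
        x += v * theta * dt + noise_sd * rng.gauss(0.0, 1.0)
        t += dt
        if x >= A:
            return ter + t, True     # correct response
        if x <= 0.0:
            return ter + t, False    # error response
    return None, None                # no decision within max_t

# Hypothetical parameter values, chosen only for illustration.
rng = random.Random(2)
trials = [simulate_tvddm_trial(v=2.0, A=1.2, ter=0.2, beta=15.0, n=3, rng=rng)
          for _ in range(300)]
finished = [(rt, ok) for rt, ok in trials if rt is not None]
accuracy = sum(ok for _, ok in finished) / len(finished)
print(f"accuracy = {accuracy:.2f}")
```

Because the drift is close to zero while θ(t) is still small, very fast responses in this sketch are driven mostly by noise, which is one way to picture the rise in accuracy over short reaction times.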

4. Discussion

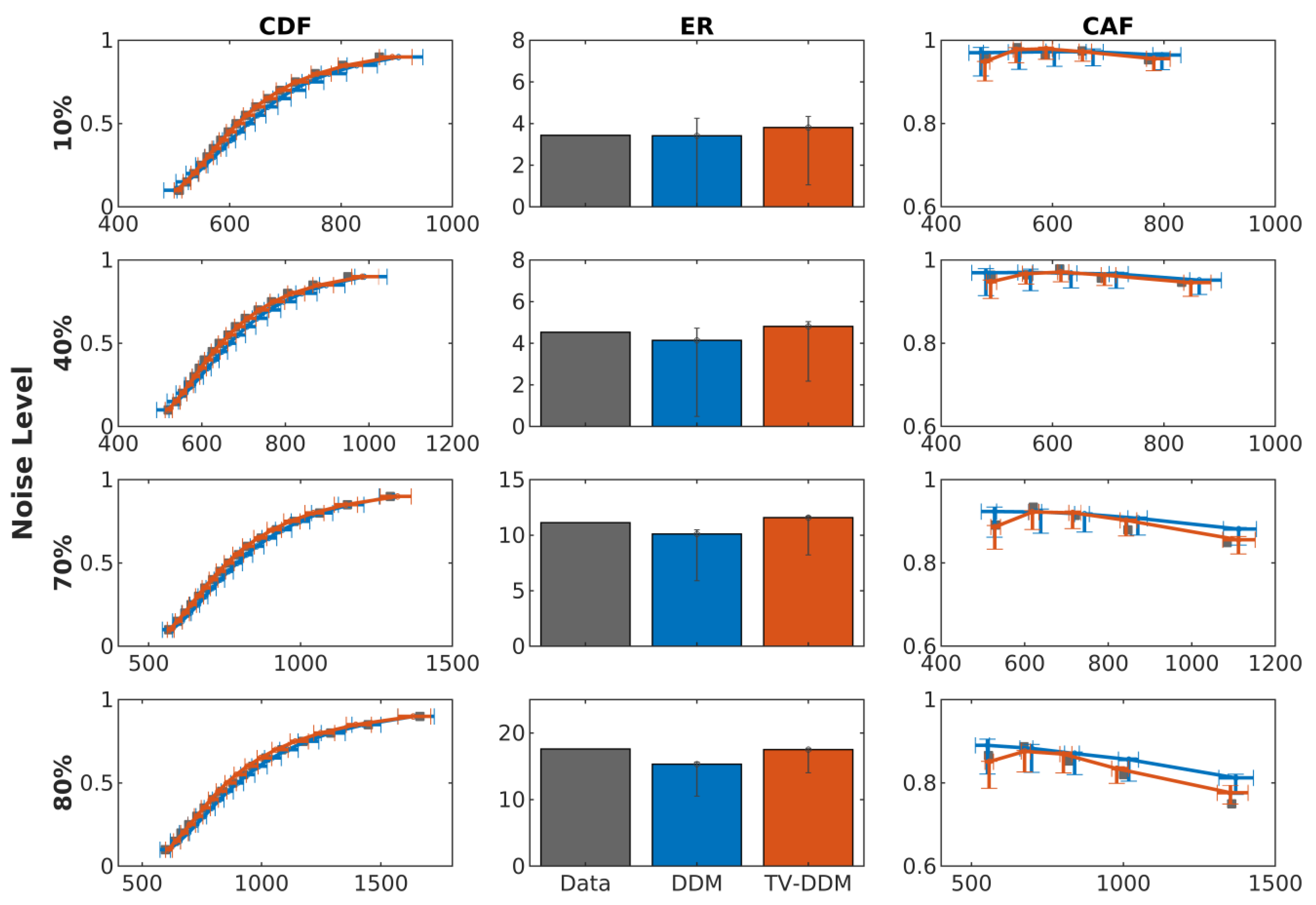

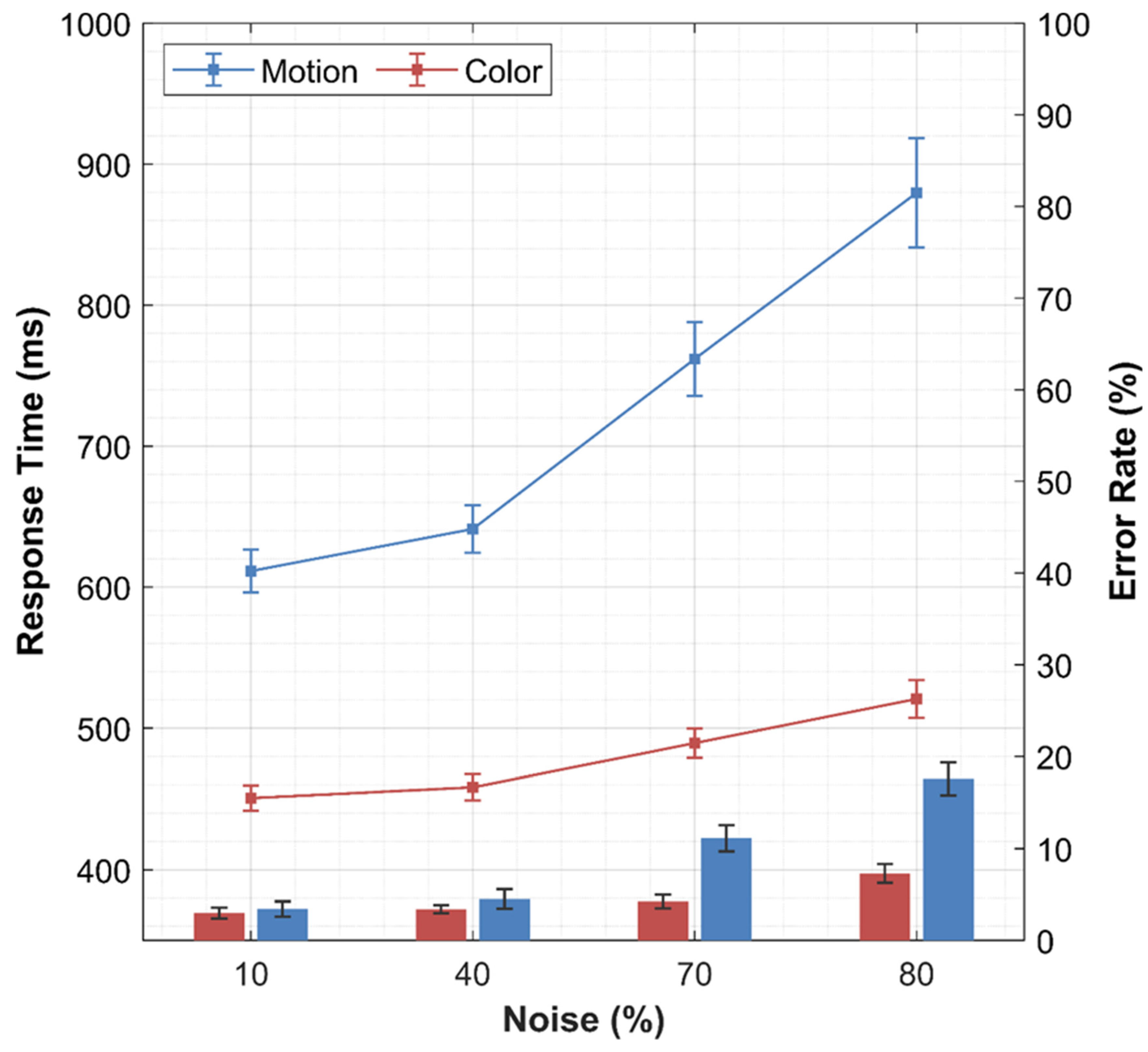

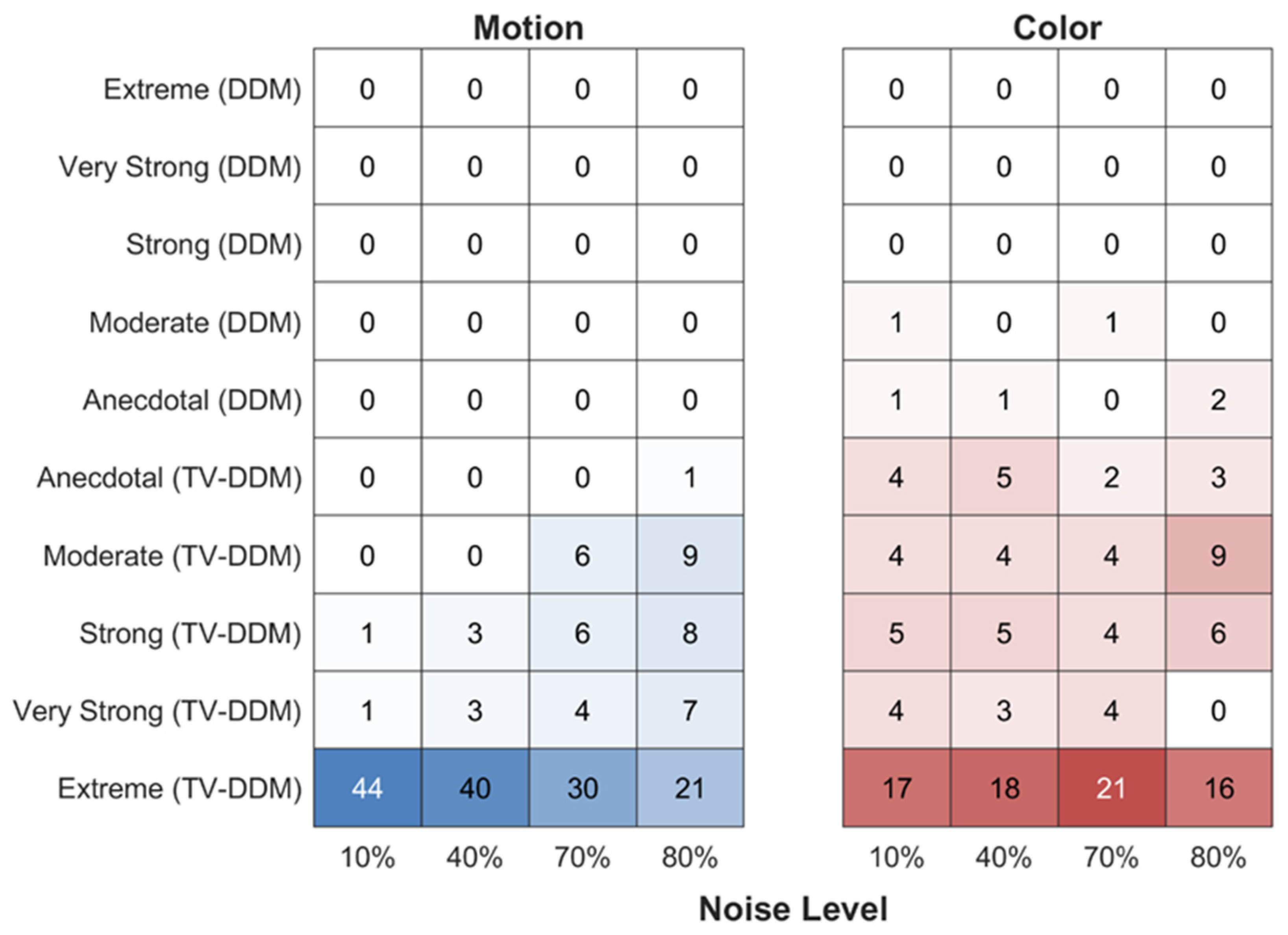

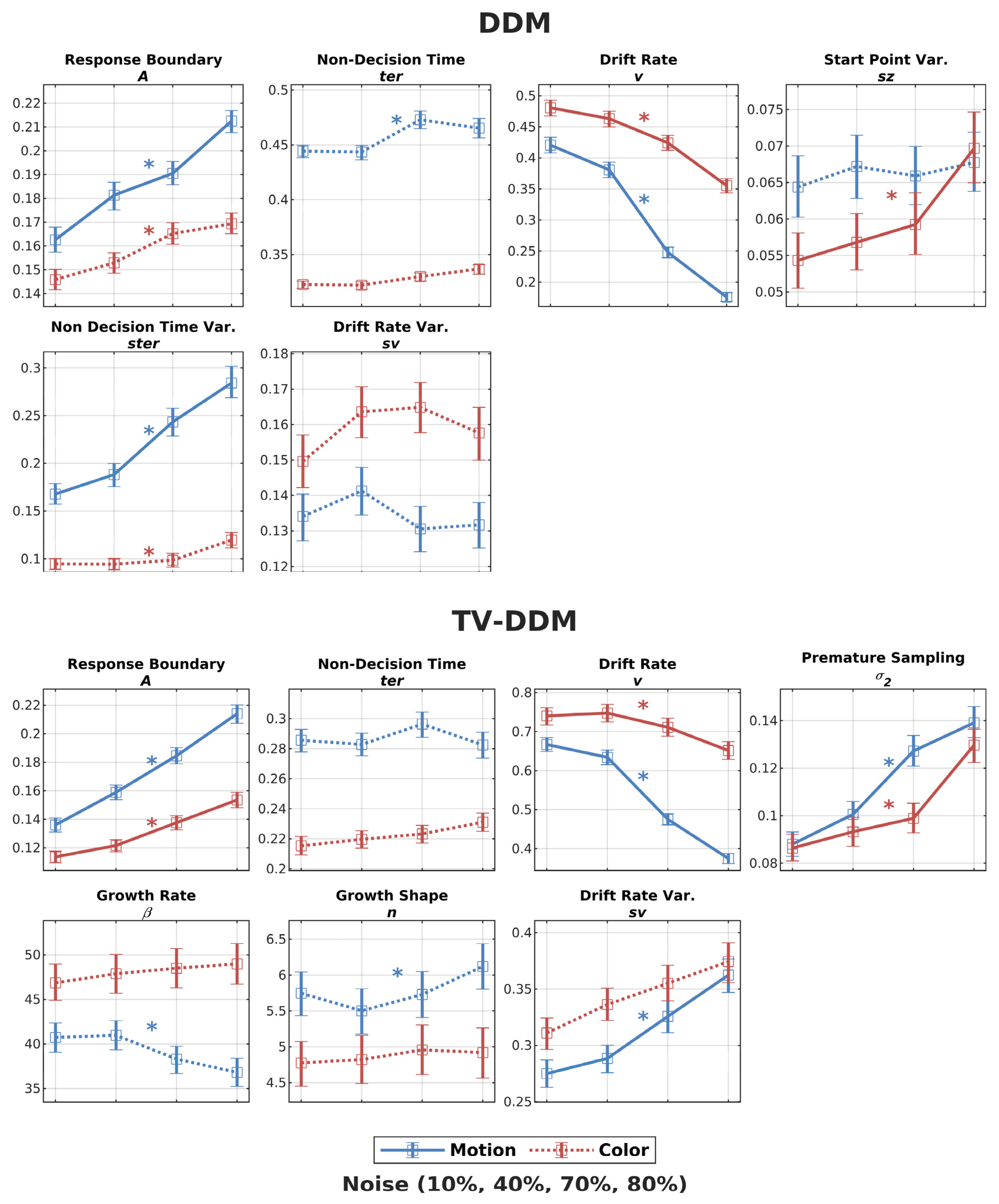

The aims of the paper were threefold. First, we aimed to replicate Smith and colleagues’ finding that their model, TV-DDM, is superior to the traditional DDM for modelling perceptual decisions about RDK stimuli. Second, we aimed to test whether TV-DDM can accurately model the effect of stimulus discriminability (amount of noise in RDKs across four levels: 10%, 40%, 70%, and 80%) without violating selective influence assumptions, and to compare it with DDM in this respect. Third, we tested TV-DDM with a novel type of stimulus (Study 2), a static color version of RDKs, here termed random dot patterns (RDPs); this third aim again explored the superiority of TV-DDM over DDM. Both studies fitted and compared the two models using Bayesian parameter estimation and model comparison. We begin this discussion with the more mundane aspects of our study, the psychophysical characteristics of our novel type of stimulus, and then turn to the more exciting topic of this paper, the modelling.

The behavioral analysis for RDP stimuli revealed that our manipulation of noise (proportion of noise dots) was successful, as reaction time and error rate increased with increasing levels of noise. However, the effects were smaller in the RDP task than in the RDK task, i.e., the overall performance (reaction times and error rate) was better and deteriorated less with increasing noise. Hence, this color discrimination task is an easier task overall, which is also confirmed by the expected a posteriori (EAP) estimates of both models, e.g., response boundary, drift rate, etc. We think this is mainly due to the way the noise dots were chosen, i.e., from intervals of hue fairly distinct from the signal dots. In brief pilot studies, we found it difficult to hit a “sweet spot”; e.g., sampling noise dot hues in a similar way to the RDK (i.e., sampling from all 360 possible directions) made the task too difficult. There is also the possibility that color discrimination is easier than motion discrimination. Future research will need to explore this further. For the purpose of this paper, it is important to note that the noise manipulation was successful, which in turn allows us to contrast static noise with dynamic noise while important properties of both types of noise, especially the need to integrate across space to make the best decision, are maintained.

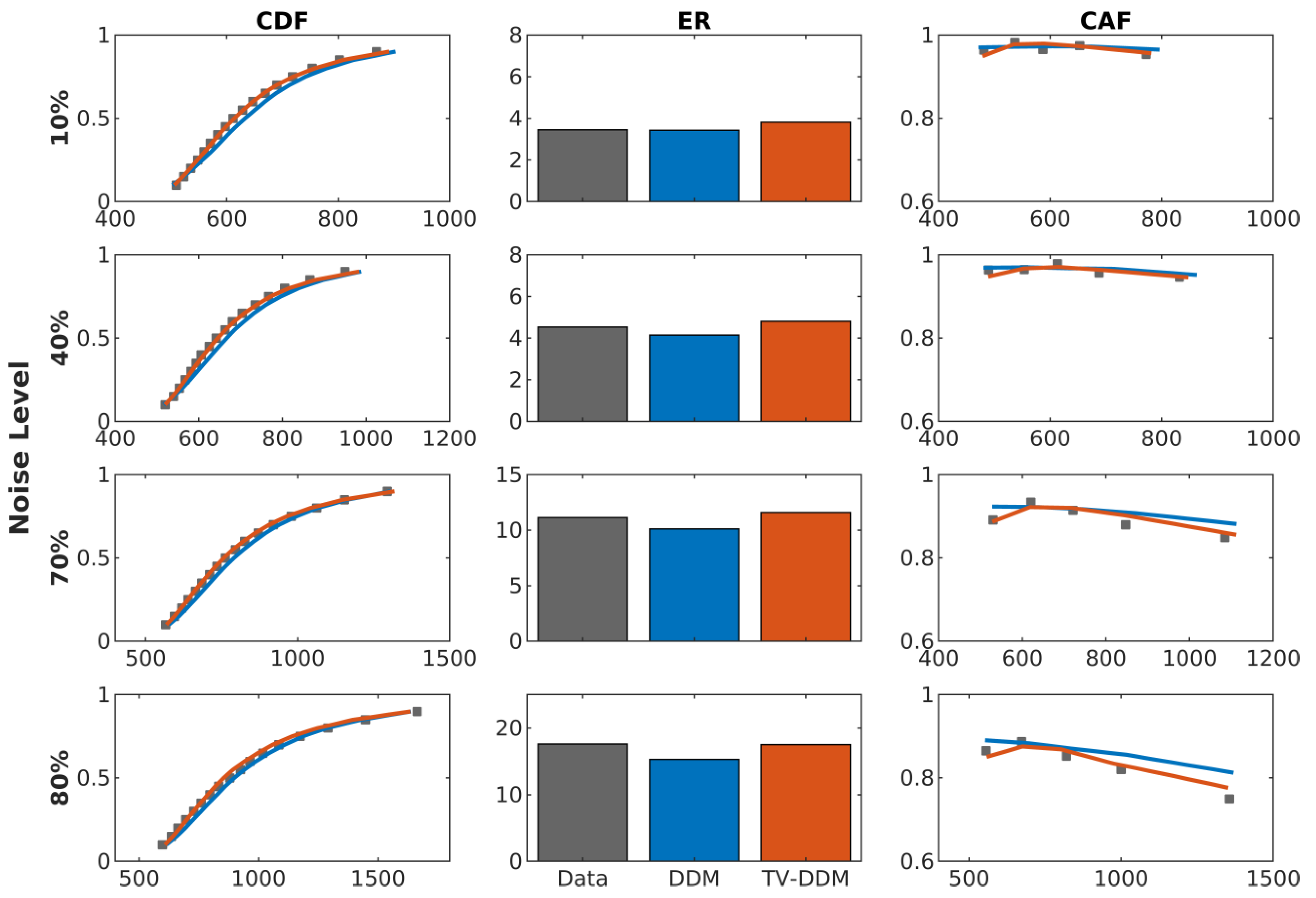

Now we turn to the modelling results of this paper. The model comparison using the Bayes factor revealed a strong win for TV-DDM in both studies (albeit less clear-cut for the RDP stimuli), despite TV-DDM having one parameter more than DDM. The distributional measures for both studies revealed that TV-DDM is better equipped than DDM to predict the time course of accuracy. Specifically, the sharp increase in accuracy at short reaction times is well captured by TV-DDM, reflecting the effect of the growth-rate function. However, it is worth noting that the DDM with between-trial variability in start point (sz), drift rate (sv), and non-decision time (ster) (see [48,49,68,69]) should have been able to predict this pattern of fast and slow errors, but it seems its flexibility in balancing the two types of errors was insufficient for these data. The finding that TV-DDM also wins for the RDP task is perhaps surprising, as Smith and colleagues found support for TV-DDM mainly from stimuli with dynamic noise (e.g., [17]). However, Ratcliff and Smith [70] found that letters with static noise also produce support for TV-DDM, although the effects were small. Hence, a possible way of reconciling our strong support for TV-DDM for static noise with their findings is that both letters and our RDPs require some degree of integrating information across space, but RDPs require the consideration of a “larger” space than letters, explaining the difference in the strength of support. Hence, it is not only dynamic noise that requires the perceptual integration stage, but also the integration of information across space. Of course, there is also the possibility that this is due to methodological differences. Future research will need to explore this hypothesis in more detail.

With respect to the test of selective influence, both studies revealed a mixed picture. To begin with, it is important to note that the drift rate estimates from both tasks revealed the expected noise dependency, with drift rate decreasing with increasing noise. Also, TV-DDM was able to model the data without violating selective influence assumptions regarding non-decision time (non-decision time did not change across noise levels in either task). This was also true for DDM, which does not support our expectation that the lack of perceptual integration may lead to a violation of selective influence. However, our findings are in line with Dutilh et al.’s findings, and here we confirm them for a wider range of difficulties. It is conceivable that the other parameters (e.g., drift rate) are able to absorb the influence of perceptual integration.

Even though TV-DDM wins for both types of stimuli, the parameter estimates revealed quantitative differences between them. The parameters show faster integration (β) and fewer stages (n) in the RDP task than in the RDK task. These findings are plausible, as the processing of color should involve fewer stages and be quicker than the processing of motion because it does not involve the integration of perceptual information across time. In fact, the integration time is around 100 ms faster. This difference is broadly consistent with the well-known effect of color-motion asynchrony, which indicates that humans perceive color earlier than motion by around 80 ms (e.g., [64,65]; see [71] for a DDM-based approach and a comprehensive review). It is also interesting to note that the integration time of around 300 ms for our RDKs is similar to Smith and Lilburn’s [19] finding of 400 ms (which, in turn, is consistent with Watamaniuk and Sekuler’s [18] findings). The shorter duration may be because our RDKs were smaller and contained fewer dots, i.e., less perceptual information to integrate. Moreover, even though the distributional measures indicate a good fit, particularly for short RTs, a closer inspection for intermediate and longer RTs suggests that the exact shape of the gamma function may not precisely describe the perceptual integration. Study 1 suggests that for high noise, RDK integration proceeds slightly more slowly than for low-noise stimuli. For RDPs, the integration may be slightly faster for longer RTs. These mismatches may not be surprising, as the neural processing of perceptual stimuli is highly non-linear (see [9] for evidence pointing in this direction), which is inconsistent with the linear filtering foundations of the gamma function. In fact, Smith and Lilburn [19] did acknowledge such potential problems with the design of the gamma function. Nevertheless, the gamma function may be a good compromise between a highly complex function and the oversimplification of the formation of perceptual representation in the DDM.

Finally, we found a violation of selective influence for the response boundary in both models and both tasks. This finding suggests that the change in drift rate across noise levels is not sufficient to capture the change in the shape of the RT distribution across noise levels (e.g., the leading edge) and therefore mandates additional changes in the response boundary. The fact that this effect is also found in DDM suggests that it is not due to the additional perceptual integration but is an effect on the decision-making stage. Importantly, these findings point to within-trial changes in response boundary, as the estimates indicate that participants adapt the response boundary to each noise level even though this factor was randomized across trials, which prevents them from setting the response boundary for a given noise level at the beginning of a trial. However, in contrast to the common finding of “collapsing” boundaries (e.g., [41,42,43]) being associated with higher costs or response deadlines, in our case, the increase in task difficulty leads to an increase in response boundary rather than a decrease. Interestingly, this is consistent with findings by Lin et al. [6] in a visual search study where participants were tasked with finding a designated target among non-targets. They found that the response boundary increased with an increasing number of objects on the screen (i.e., the difficulty of the search task). Also, the theoretical study by Malhotra et al. [44] showed that a certain mix of easy and difficult decisions can also lead to an increase in the response boundary. This particular effect could be found if blocked easy conditions result in a lower response boundary than blocked difficult conditions. In this scenario, the study showed that initially low boundaries are raised when the criterial amount of evidence for the easy condition cannot be obtained very quickly and it becomes increasingly evident that the stimulus is a difficult one, i.e., one requiring a higher response boundary to produce a correct decision. Future studies will have to examine this prediction and examine the conditions in our design individually. Moreover, future studies should fit formal models of collapsing boundaries to our data (e.g., [41,42,43,72]). Urgency-gating models (e.g., [73,74]) offer another alternative explanation of our findings and could likewise be tested using our Bayesian parameter estimation and model comparison.