Abstract

Salient object detection (SOD) aims to accurately identify salient geographical objects in remote sensing images (RSI), providing reliable support and guidance for a wide range of geographical information analyses and decisions. However, SOD in RSI faces numerous challenges, including shadow interference, inter-class feature confusion, and unclear target edge contours. Therefore, we designed an effective Global Semantic-aware Aggregation Network (GSANet) to aggregate salient information in RSI. GSANet computes the information entropy of different regions and prioritizes areas with high information entropy as potential target regions, thereby achieving precise localization and semantic understanding of salient objects in remote sensing imagery. Specifically, we propose a Semantic Detail Embedding Module (SDEM), which explores the potential connections among multi-level features and adaptively fuses shallow texture details with deep semantic features, efficiently aggregating the information entropy of salient regions and enriching the information content of salient targets. Additionally, we propose a Semantic Perception Fusion Module (SPFM) that analyzes the mapping relationships between contextual information and local details, enhancing the perception of salient objects while suppressing irrelevant information entropy; this addresses the semantic dilution of salient objects during up-sampling. Experimental results on two publicly available datasets, ORSSD and EORSSD, demonstrate the outstanding performance of our method, which achieves 93.91% Sα, 98.36% Eξ, and 89.37% Fβ on EORSSD.

1. Introduction

As an extension of the visual attention mechanism to target segmentation tasks, salient object detection (SOD) aims to accurately identify the most visually distinctive regions within a scene [1]. SOD usually requires extracting distinctive features from images to distinguish targets from the background. Information entropy can be used to measure the complexity and information content of different regions in an image. By calculating the information entropy of each pixel or region, regions with high information entropy can be selected as potential target areas, because targets typically carry more information than the surrounding background. By identifying such high-entropy regions, SOD can effectively discover key geographic features in remote sensing images (RSI), such as buildings, roads, and water bodies, providing reliable support and guidance for urban planning [2], resource management [3], environmental monitoring [4], and other fields. By measuring the information content of different regions, SOD can therefore better understand how information is distributed and structured in the image and focus attention on regions that are likely to contain targets, thereby improving detection efficiency and accuracy.
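The text does not give an explicit formula for the entropy of a region. As a hedged illustration of the idea only (not part of GSANet), the sketch below computes the Shannon entropy $H = -\sum_k p_k \log_2 p_k$ of the gray-level histogram of each non-overlapping patch and picks the highest-entropy patch as a candidate target region. The 16-pixel patch size, the 256-bin histogram, and the function name `patch_entropy` are assumptions made for illustration.

```python
import numpy as np

def patch_entropy(img, patch=16, bins=256):
    """Shannon entropy (bits) of the gray-level histogram of each non-overlapping patch.

    img: 2-D uint8 array; rows/columns that do not fill a whole patch are ignored.
    """
    rows, cols = img.shape[0] // patch, img.shape[1] // patch
    ent = np.zeros((rows, cols))
    for r in range(rows):
        for c in range(cols):
            block = img[r * patch:(r + 1) * patch, c * patch:(c + 1) * patch]
            hist = np.bincount(block.ravel(), minlength=bins).astype(float)
            p = hist[hist > 0] / hist.sum()      # drop empty bins: 0 * log 0 is taken as 0
            ent[r, c] = -np.sum(p * np.log2(p))
    return ent

# Toy image: flat background with one textured 16 x 16 patch in the top-left corner.
img = np.zeros((32, 32), dtype=np.uint8)
img[:16, :16] = np.arange(256, dtype=np.uint8).reshape(16, 16)
ent = patch_entropy(img)
candidate = np.unravel_index(np.argmax(ent), ent.shape)  # index of the highest-entropy patch
```

The textured patch contains 256 equally frequent gray levels, so its entropy is 8 bits, while the flat patches score 0 and are ignored as candidates.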

The application of SOD in RSI holds significant practical value for resource monitoring, urban planning, agricultural development, and other areas [5,6]. For resource monitoring, SOD can help identify and monitor resources such as water bodies, vegetation, and land, providing precise data support for resource management. For example, detecting water bodies enables the monitoring of water resources and the analysis of changes in water bodies, providing important references for the utilization and protection of water resources. In urban planning, SOD helps identify important buildings and transportation routes in cities, providing data support for urban planning and traffic management. In addition, SOD can identify important agricultural targets, such as farmland and crops, support the analysis of cultivation areas and crop types, and help farmers optimize planting schemes, thereby improving crop yield and quality.

Compared with natural scene images [7,8], RSI pose numerous additional challenges for SOD. Firstly, RSI typically exhibit complex backgrounds and diverse object distributions, which make accurate identification of salient objects more difficult. Secondly, RSI are susceptible to disturbances such as atmospheric conditions and cloud cover, which degrade image quality and reduce SOD accuracy. Additionally, RSI contain many small-scale objects, such as trees and vehicles, which are difficult to identify and localize. Addressing these challenges therefore requires exploiting rich local features, capturing global dependencies, and enhancing the semantic representation of salient objects.

Existing salient object detection approaches primarily rely on CNNs for feature extraction. These methods design multiple functional modules to explore the inherent relationships between features at different levels and thereby enhance contextual information. Zhou et al. [9] designed two decoder branches for multitask processing, which simultaneously obtain edge information and semantic features, and used the edge information to enhance the saliency of the features at each level. Zheng et al. [10] enlarged the receptive field to capture more contextual information, improving the adaptability of the model to salient objects in different scenes. Liu et al. [11] explored the connections among foreground, edge, background, and global features, achieving feature complementation on a single-level feature map. However, these methods merely enhanced single-scale local features and ignored the potential connections among multi-scale features, so they did not fully exploit the salient information in each feature level. Therefore, some researchers have explored how to use the contextual information of multi-level features to improve the understanding of salient objects. Gong et al. [12] proposed a strategy based on semantic matching and edge alignment to achieve channel interaction between different features. Li et al. [13] progressively refined features to generate abstract semantic features that guide the localization of low-level features. Furthermore, Zeng et al. [14] aggregated global and local information by coordinating adjacent contextual information, further improving model performance.

However, convolutional networks struggle to capture long-range global information because of their limited receptive fields [15]. In contrast, the transformer [16] is renowned in NLP for its ability to capture global dependencies between word vectors, and researchers have attempted to bring transformers to computer vision. The Vision Transformer [17] split input images into small patches and converted these patches into linear embeddings that serve as transformer inputs, marking the first application of transformers to images. Liu [18] proposed a window-based mechanism that reduces the computational complexity of transformers by restricting attention calculations to local windows. The Pyramid Vision Transformer [19,20] introduced a feature pyramid into transformers, enabling them to serve various downstream dense prediction tasks. Xie [21] redesigned the encoder and decoder, adopting a hierarchical encoder that outputs multi-scale features and fusing these features in the decoder. Building on these advances, Gao [22] proposed an adaptive spatial tokenization transformer for feature extraction that gradually integrates contextual dependencies, addressing the issue of insufficient perception range. Zhang [23] designed a dual-stream encoder that simultaneously uses a CNN and a transformer to extract features.

Undoubtedly, research on these methods has provided valuable insights and inspiration for SOD. However, some issues remain unresolved. Firstly, some methods that rely on ResNet or VGG for feature extraction have limited feature extraction capability and fail to fully comprehend the semantic information of salient objects. Secondly, because features at different levels differ markedly in semantics, simply fusing them may lose salient semantics, so the salient information in multi-scale features is not fully exploited. Lastly, some methods cannot adequately perceive small-scale targets, which leads to omissions. Therefore, we design a Global Semantic-aware Aggregation Network to aggregate multi-scale salient information, achieving global–local semantic interaction and salient semantic feature perception. Specifically, to fully utilize the advantages of both convolution and self-attention, we adopt UniFormer to extract the local features and global dependencies of salient objects. This not only preserves the detailed texture information and edge contours of salient objects but also enables the model to comprehend the overall information of the image, so that salient targets in complex scenes are handled better. Meanwhile, we propose a Semantic Detail Embedding Module (SDEM) that achieves feature fusion and semantic complementation by coordinating information across different scales, fully utilizing the salient information in multi-scale features. Finally, considering that the semantics of salient objects are diluted while the feature maps are restored to the original image resolution, we propose a Semantic Perception Fusion Module (SPFM) in the decoder to compensate for the salient features lost during up-sampling.

Our primary contributions are outlined as follows:

(1) We design a Global Semantic-aware Aggregation Network, named GSANet, that provides an effective solution for the precise localization and semantic understanding of salient objects in remote sensing images. This approach calculates the information entropy of each pixel in the image to measure the complexity and information content of different regions, coordinates global and local semantic information, focuses on high-entropy areas, and thoroughly mines the semantic features of salient objects.

(2) We propose a Semantic Detail Embedding Module (SDEM) to fuse multi-scale features and enhance semantic representation. SDEM explores the potential connections between feature maps of different scales, dynamically fusing shallow texture details and deep semantic features. As a result, SDEM strengthens the ability of the model to integrate salient information across scales, efficiently aggregates the information entropy of salient regions, and improves the model's perception and understanding of salient objects in complex scenes.

(3) We propose a Semantic Perception Fusion Module (SPFM) to address the issue of semantic dilution of deep features during the up-sampling stage. SPFM regards salient semantic information as prior knowledge, enhancing the perception capabilities of each level’s feature map for the salient features.

2. Materials and Methods

2.1. Network Overview

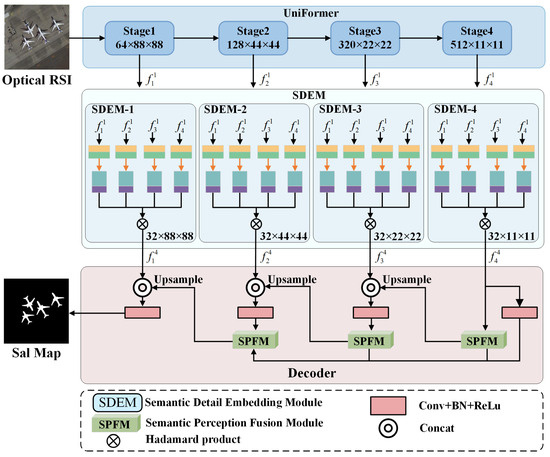

The challenges encountered in SOD for RSI include interference from shadows, blurring between foreground and background information, and the omission of small-scale targets during detection. Therefore, we proposed a novel approach named GSANet that uses multi-level semantic fusion to fully exploit salient information while improving the detection accuracy of salient objects through global semantic perception. The architecture of GSANet is shown in Figure 1. The network comprises three main components: the UniFormer [24] feature extractor, the Semantic Detail Embedding Module (SDEM), and the Semantic Perception Fusion Module (SPFM). UniFormer efficiently encodes the input, extracting abstract salient features while avoiding local redundancy. The SDEM aggregates feature inputs from four different levels, fully utilizing the salient information in multi-scale features. The SPFM makes the decoding process highly sensitive to semantic information, thereby enhancing the ability of the model to perceive salient objects.

Figure 1.

The overall framework of GSANet consists of three parts: the UniFormer, the SDEM, and the decoder with SPFM. The index i (i = 1, 2, 3, 4) runs over the four levels of the multi-scale features extracted by the UniFormer and of the corresponding features processed by the SDEM.

2.2. UniFormer

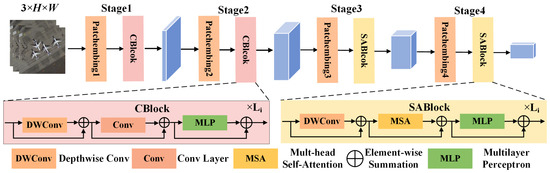

Current methods mainly use two architectures for feature extraction: CNNs and transformers [25]. CNNs, with convolution as the core operation, excel at extracting local details and effectively capture local features and texture information in images. Nevertheless, CNNs are limited in capturing global dependencies, so the model struggles to comprehend the correlations between different regions of the image. The transformer adopts self-attention to extract global dependencies, helping the model better comprehend the global semantic information within images. However, in shallow networks self-attention tends to attend to tokens with high similarity, which introduces information redundancy and reduces the efficiency of the learning process. An effective way is therefore needed to combine the advantages of CNNs and transformers while addressing their individual limitations. We therefore adopted UniFormer [24] to extract salient features from remote sensing images, preserving more details in salient regions while preventing irrelevant information from overshadowing salient features. The UniFormer architecture is illustrated in Figure 2.

Figure 2.

Illustration of the UniFormer structure. UniFormer adopts convolutional modules to extract shallow features and self-attention modules to extract deep features.

In SOD for RSI, preserving local details and capturing global semantics are both crucial. Local details include information such as building outlines, road textures, and other subtle features, which are typically key characteristics of salient targets within the image. Meanwhile, global semantics reflect the interrelations and overall structure among different regions within the image, such as the layout of urban areas and the distribution of vegetation. Therefore, a method is needed that balances the acquisition of local details and global semantics. UniFormer, with its staged design that integrates convolutional blocks and self-attention blocks, provides a solution to this balance issue. In stages 1 and 2, it uses convolutional blocks (CBlock) to extract local details, accurately capturing small targets and subtle features in the image. In stages 3 and 4, it uses self-attention blocks (SABlock) to comprehend the global semantics of the image, better grasping its overall structure and semantic information. Each stage outputs a feature map of size C × H × W, where C, H, and W are the channel, height, and width of the feature map, respectively.

Specifically, convolution relies on local connectivity and weight sharing, which makes it well suited to capturing shallow texture information and local details. Local connectivity means that each neuron is connected to only a small portion of the input, so the network focuses on detecting local features, such as edges and corners. Weight sharing applies the same kernel at every position, which improves generalization and makes it easier to capture features that recur across the image, such as textures and shapes. Self-attention is particularly effective at capturing global dependencies because it considers all positions in the image simultaneously and weights and aggregates them. This global view enables the model to comprehend long-range dependencies and thus better grasp the overall structure and semantics of the image.
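To make the contrast between local connectivity and global aggregation concrete, the NumPy sketch below (an illustration, not UniFormer's actual CBlock or SABlock) perturbs one pixel in the bottom-right corner and checks which operator notices at the top-left corner. A 3 × 3 convolution output there is unchanged, because its window never reaches the perturbed pixel, whereas a single-head self-attention output changes, because every token attends to all others. The sizes, random weights, and function names are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv3x3(x, k):
    """Single-channel 3x3 convolution (cross-correlation) with zero padding.
    The same kernel k is reused at every position (weight sharing), and each
    output only sees a 3x3 neighbourhood (local connectivity)."""
    h, w = x.shape
    xp = np.pad(x, 1)
    out = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(xp[i:i + 3, j:j + 3] * k)
    return out

def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product self-attention over tokens of shape (N, d).
    Every output token is a weighted sum over all N tokens."""
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    scores = q @ k.T / np.sqrt(k.shape[1])
    scores -= scores.max(axis=1, keepdims=True)      # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)
    return attn @ v

H = W = 8
d = 4
feat = rng.standard_normal((H, W, d))                # toy feature map, d channels per pixel
kernel = rng.standard_normal((3, 3))
wq, wk, wv = (rng.standard_normal((d, d)) * 0.5 for _ in range(3))

feat2 = feat.copy()
feat2[H - 1, W - 1] += 1.0                           # perturb the bottom-right pixel only

conv_a = conv3x3(feat[..., 0], kernel)[0, 0]         # top-left output before/after
conv_b = conv3x3(feat2[..., 0], kernel)[0, 0]
attn_a = self_attention(feat.reshape(-1, d), wq, wk, wv)[0]
attn_b = self_attention(feat2.reshape(-1, d), wq, wk, wv)[0]
```

Stacking convolutions grows the receptive field only gradually, which is one motivation for placing self-attention in the deeper stages.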

2.3. Semantic Detail Embedding Module (SDEM)

In SOD, clear edge structures and rich semantic features are considered effective means of enlarging the contrast between foreground and background. Clear edge structures help to capture object contours accurately, providing visual cues for localizing salient objects. Meanwhile, rich semantic features provide valuable contextual information, helping the model comprehensively understand the content and context of salient targets [26]. In shallow layers, feature maps have higher spatial resolution and carry richer spatial positional information, but they are relatively weak in semantics. In contrast, feature maps in deep layers contain richer semantic information but lack spatial texture details. In other words, shallow layers capture fine-grained spatial structures but have limited capacity for expressing abstract semantics, whereas deep layers extract high-level semantic features but may lose some spatial texture detail in the process. Integrating the strengths of shallow and deep features to overcome their respective limitations is therefore a significant research challenge. On the other hand, RSI contain rich information, including not only many small targets but also complex geographical features. Because this information is unevenly distributed across the image and may appear at different scales, features from a single scale often struggle to capture and express it fully. Therefore, to fully utilize the abundant information in the images and enhance the perceptual capability of the model, features at different scales must be considered comprehensively. By integrating multi-scale features, we can gain a more complete understanding of the image content and enable the model to capture the relationship between targets and their environment more effectively, thereby improving detection performance and accuracy.

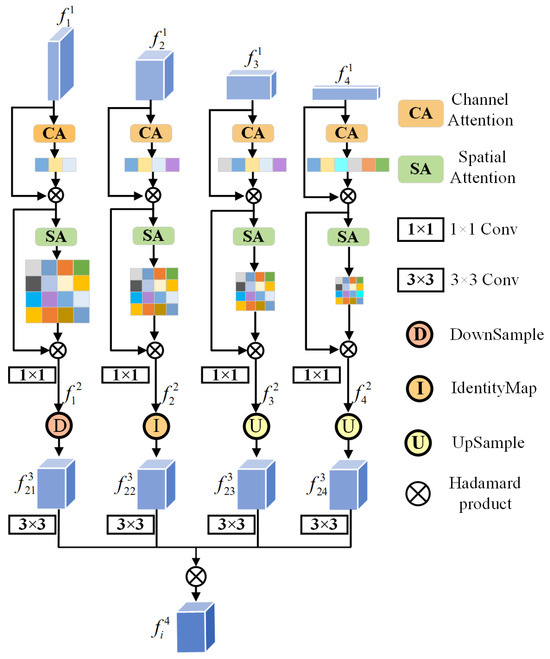

Inspired by [27], we proposed a Semantic Detail Embedding Module (SDEM) that fuses global dependencies, local texture details, spatial positional information, and deep semantic features, enabling the model to comprehensively understand and fully utilize the salient information across multi-scale features. The SDEM first weights each single-level feature with attention, so that the model focuses on and emphasizes the crucial feature information. It then aggregates the multi-scale information onto a single feature map using the Hadamard product, effectively fusing the multi-scale features. This approach preserves the spatial structure and semantic information of the original features and ensures the consistency and integrity of the multi-scale features.

The SDEM plays a crucial role when dealing with complex scenes in RSI. On the one hand, it embeds salient semantics into shallow features, enhancing the semantic representation of salient targets and enabling the model to understand their semantic content more accurately. On the other hand, it injects spatial texture details into high-level features, contributing to the precise localization of object edge contours and improving the model's perception of key areas in complex scenes. By fully leveraging semantic features and edge information, the SDEM therefore supports a more comprehensive analysis and understanding of images. The structure of the SDEM is illustrated in Figure 3.

Figure 3.

Illustration of the SDEM structure. As an example, only the processing of the second-level feature map is shown.

The SDEM fuses shallow spatial texture structures with deep abstract semantic representations to fully exploit the salient information in multi-scale features. Specifically, the i-th SDEM receives all four feature maps produced by the encoder. When processing the i-th feature map, channel attention and spatial attention [28] were first applied to assign more weight to the semantic features and boundary information of salient objects, enhancing both the local spatial details and the global channel features of salient objects. A 1 × 1 convolution was then applied to adjust the number of channels to 32. The process is as follows:

$$F_i^{c} = \mathrm{Conv}_{1\times1}\big(\mathrm{SA}(\mathrm{CA}(F_i))\big)$$

where $F_i$ denotes the i-th feature map, $\mathrm{CA}(\cdot)$ is channel attention, $\mathrm{SA}(\cdot)$ is spatial attention, $\mathrm{Conv}_{1\times1}(\cdot)$ is a 1 × 1 convolution used to adjust the channels, and $F_i^{c}$ is the resulting channel-adjusted map.
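A minimal NumPy sketch of this first step (channel attention, spatial attention, then a 1 × 1 convolution to 32 channels) follows. It assumes CBAM-style channel attention (a shared two-layer MLP on average- and max-pooled channel descriptors, reduction ratio 16) and spatial attention (a 7 × 7 kernel over the channel-wise mean and max maps), applied in that order. These choices, the toy sizes, and the random weights are assumptions rather than details confirmed by the text, and biases are omitted for brevity.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def channel_attention(x, w1, w2):
    """CBAM-style channel attention. x: (C, H, W); w1: (C//r, C); w2: (C, C//r)."""
    mlp = lambda v: w2 @ np.maximum(w1 @ v, 0.0)          # shared two-layer MLP with ReLU
    weights = sigmoid(mlp(x.mean(axis=(1, 2))) + mlp(x.max(axis=(1, 2))))  # (C,)
    return x * weights[:, None, None]

def spatial_attention(x, k):
    """CBAM-style spatial attention. k: (2, K, K) kernel over the [mean; max] channel maps."""
    desc = np.stack([x.mean(axis=0), x.max(axis=0)])      # (2, H, W)
    pad = k.shape[-1] // 2
    dp = np.pad(desc, ((0, 0), (pad, pad), (pad, pad)))
    h, w = x.shape[1:]
    logits = np.zeros((h, w))
    for dy in range(k.shape[1]):                          # 'same' K x K cross-correlation
        for dx in range(k.shape[2]):
            logits += np.einsum('c,chw->hw', k[:, dy, dx], dp[:, dy:dy + h, dx:dx + w])
    return x * sigmoid(logits)[None]

def conv1x1(x, w):
    """1x1 convolution = per-pixel linear map over channels. w: (C_out, C_in)."""
    return np.einsum('oc,chw->ohw', w, x)

C, H, W, r = 64, 22, 22, 16
x = rng.standard_normal((C, H, W))                        # a toy encoder feature map
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
k = rng.standard_normal((2, 7, 7)) * 0.1
w_proj = rng.standard_normal((32, C)) * 0.1               # project to 32 channels

out = conv1x1(spatial_attention(channel_attention(x, w1, w2), k), w_proj)  # (32, 22, 22)
```

Because both attention maps pass through a sigmoid, they only rescale features by factors in (0, 1); the 1 × 1 convolution then brings every level to the common 32-channel width needed for the later element-wise fusion.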

Next, taking the i-th channel-adjusted map $F_i^{c}$ as prior information, each channel-adjusted map $F_j^{c}$ was resized to match its resolution:

$$F_{ij}^{r} = \begin{cases} \mathcal{D}(F_j^{c}), & j < i \\ \mathcal{I}(F_j^{c}), & j = i \\ \mathcal{U}(F_j^{c}), & j > i \end{cases}$$

where $\mathcal{D}$, $\mathcal{I}$, and $\mathcal{U}$ denote the operations that scale the resolution of $F_j^{c}$ to match that of $F_i^{c}$ using adaptive average pooling, identity mapping, and bilinear interpolation, respectively. The final output is denoted as $F_{ij}^{r}$.
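The three resizing operators can be sketched in NumPy as below. The sketch assumes that resolutions halve from level to level (for example 88, 44, 22, and 11 for a 352 × 352 input, i.e. strides 4, 8, 16, and 32), so adaptive average pooling reduces to block averaging; bilinear interpolation follows the half-pixel (`align_corners=False`) convention. The function names are hypothetical.

```python
import numpy as np

def adaptive_avg_pool(x, out_h, out_w):
    """Adaptive average pooling, restricted to sizes that divide evenly (block averaging)."""
    c, h, w = x.shape
    assert h % out_h == 0 and w % out_w == 0, "sketch assumes integer down-sampling factors"
    return x.reshape(c, out_h, h // out_h, out_w, w // out_w).mean(axis=(2, 4))

def bilinear_resize(x, out_h, out_w):
    """Bilinear interpolation with half-pixel centres (align_corners=False convention)."""
    c, h, w = x.shape

    def src_coords(n_out, n_in):
        s = (np.arange(n_out) + 0.5) * n_in / n_out - 0.5
        return np.clip(s, 0, n_in - 1)

    ys, xs = src_coords(out_h, h), src_coords(out_w, w)
    y0 = np.floor(ys).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x0 = np.floor(xs).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = (ys - y0)[None, :, None]      # vertical weights, broadcast over channels and columns
    wx = xs - x0                       # horizontal weights, broadcast over the last axis
    top = x[:, y0][:, :, x0] * (1 - wx) + x[:, y0][:, :, x1] * wx
    bottom = x[:, y1][:, :, x0] * (1 - wx) + x[:, y1][:, :, x1] * wx
    return top * (1 - wy) + bottom * wy

def resample_to(x, ref_hw):
    """Pool if x is larger than the reference, keep it if equal, interpolate if smaller."""
    h, w = ref_hw
    if x.shape[1] > h:
        return adaptive_avg_pool(x, h, w)
    if x.shape[1] == h:
        return x                       # identity mapping
    return bilinear_resize(x, h, w)

# Toy 32-channel maps at the assumed resolutions of a 352 x 352 input.
rng = np.random.default_rng(0)
feats = [rng.standard_normal((32, s, s)) for s in (88, 44, 22, 11)]
aligned = [resample_to(f, (44, 44)) for f in feats]   # reference: the second level
```

In PyTorch the same three cases would typically map onto `F.adaptive_avg_pool2d`, a no-op, and `F.interpolate(..., mode="bilinear")`.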

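Then, a 3 × 3 convolution was applied to each resampled feature map $F_{ij}^{r}$ (the j-th level resized to the resolution of the i-th level), and the results were fused into the i-th feature map by element-wise multiplication. The output thus retains both richer deep features and more detailed shallow information:

$$\hat{F}_i = \theta_{i1}(F_{i1}^{r}) \odot \theta_{i2}(F_{i2}^{r}) \odot \theta_{i3}(F_{i3}^{r}) \odot \theta_{i4}(F_{i4}^{r})$$

where $\odot$ denotes element-wise multiplication, $\theta_{ij}$ denotes the parameters of the 3 × 3 convolution applied to the j-th resampled map, and $\hat{F}_i$ is the final output of the i-th SDEM.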
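The fusion step can be sketched as follows, assuming that the product runs over all four resampled maps (including the i-th map itself) and that each map has its own 3 × 3 convolution. The names `conv3x3` and `hadamard_fuse` are hypothetical, and biases are omitted. With identity kernels, the fused output reduces to the plain element-wise product of the inputs, which gives an easy sanity check.

```python
import numpy as np

def conv3x3(x, w):
    """Multi-channel 3x3 convolution (cross-correlation) with zero padding, 'same' size.
    x: (C_in, H, W); w: (C_out, C_in, 3, 3)."""
    c, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            out += np.einsum('oc,chw->ohw', w[:, :, dy, dx], xp[:, dy:dy + h, dx:dx + wd])
    return out

def hadamard_fuse(aligned, weights):
    """Smooth each aligned map with its own 3x3 conv, then multiply all results element-wise."""
    fused = None
    for f, w in zip(aligned, weights):
        smoothed = conv3x3(f, w)
        fused = smoothed if fused is None else fused * smoothed
    return fused

# Toy example: four aligned 32-channel maps at the second-level resolution.
rng = np.random.default_rng(0)
aligned = [rng.standard_normal((32, 44, 44)) for _ in range(4)]
weights = [rng.standard_normal((32, 32, 3, 3)) * 0.05 for _ in range(4)]
fused = hadamard_fuse(aligned, weights)                     # (32, 44, 44)
```

A multiplicative fusion acts like a gate: a position keeps a large response only if it is supported at every scale, which is one way to read the consistency claim in the text.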

2.4. Semantic Perception Fusion Module (SPFM)

In the classical U-Net structure, the encoder extracts deep features, and the decoder restores high-level feature maps to the original image resolution through up-sampling. However, the decoder faces two common issues during up-sampling. Firstly, the semantic information of deep features is gradually diluted, leading to insufficient expression of semantic features, which causes fragmented edges and internal semantic voids in salient objects. Secondly, multi-scale feature maps differ in semantics, and simply combining them may confuse foreground and background information, interfering with the accurate identification of salient targets.

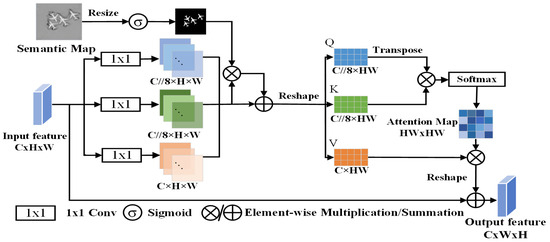

Therefore, we introduced a Semantic Perception Fusion Module (SPFM) into the decoder that uses the most semantically rich high-level feature map as prior knowledge to compensate for the loss of salient semantics during the fusion of multi-scale feature maps. The SPFM explored the mapping relationship between global features and local details through self-attention, embedding global semantic information into local features. This strengthened the ability of the model to perceive salient targets and to capture and retain salient semantic information. During up-sampling, the SPFM guided the decoder to fuse semantic information from feature maps at different levels, allocating more attention weight to the semantic features of salient targets, so that the model prioritized salient targets while disregarding irrelevant factors. The structure of the SPFM is illustrated in Figure 4.

Figure 4.

Illustration of the SPFM structure. The SPFM enhanced contextual awareness of salient objects through salient semantic maps.

Inspired by [29,30,31], the SPFM first utilized the salient semantic map as prior knowledge to weight the input information, and then employed self-attention to achieve context awareness, guiding the model to perceive the spatial distribution of salient objects. Through this process, SPFM was able to allocate weights for different regions of the image, injecting additional semantic information into the region of salient targets on the input feature map, and preserving this crucial information as much as possible.

Firstly, the semantic map was resized to match the size of the input feature map. A sigmoid function then mapped the value of each pixel in the semantic map to the range (0, 1), yielding a semantic weight map $M_s$, in which larger values indicate more important information at the corresponding positions:

$$M_s = \sigma\big(\mathrm{Resize}(S)\big)$$

where $S$ denotes the salient semantic map and $\sigma$ is the sigmoid function.

In parallel, the input feature map $X$ was linearly projected to generate three attention components, which were then combined residually with the semantic weight map $M_s$ to produce the weighted attention components $Q$, $K$, and $V$. Fusing semantic information with the attention components in this way raised the weight of pixels associated with salient targets, so that when the three components interacted to encode the contextual information of the input, the model effectively captured the salient information in the input feature map. The calculation process is as follows:

$$Q = \phi_q(X) \odot M_s + \phi_q(X), \quad K = \phi_k(X) \odot M_s + \phi_k(X), \quad V = \phi_v(X) \odot M_s + \phi_v(X)$$

where $M_s$ denotes the semantic weight map, $X$ denotes the input feature, and $\phi_q$, $\phi_k$, and $\phi_v$ are the query, key, and value convolutions of the linear projections, respectively.

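The weighted components $Q$, $K$, and $V$ were computed according to the following formula, resulting in the final output:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

where $d_k$ denotes the dimensionality of $Q$, $K$, and $V$.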
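The sketch below strings the SPFM steps together in NumPy under several stated assumptions. The semantic map is resized by nearest-neighbour repetition (a simplification, since the text does not name the resize method). The residual weighting multiplies each projection by the sigmoid-weighted semantic map and adds the projection back. Attention is single-head over all H × W positions. The names, sizes, and random weights are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)                # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def spfm_attention(x, sem_logits, wq, wk, wv):
    """Semantic-weighted self-attention (illustrative sketch).

    x: (C, H, W) input features; sem_logits: (h, w) semantic map with H % h == W % w == 0;
    wq, wk, wv: (d, C) weights of the 1x1 query/key/value projections.
    """
    c, h, w = x.shape
    fy, fx = h // sem_logits.shape[0], w // sem_logits.shape[1]
    # Resize by nearest-neighbour repetition (assumed), then squash to (0, 1).
    up = np.repeat(np.repeat(sem_logits, fy, axis=0), fx, axis=1)
    m = sigmoid(up).reshape(-1, 1)                         # (N, 1), N = H * W
    tokens = x.reshape(c, -1).T                            # (N, C)
    q, k, v = tokens @ wq.T, tokens @ wk.T, tokens @ wv.T  # (N, d) each
    # Assumed residual weighting: projection * weight + projection.
    q, k, v = q * m + q, k * m + k, v * m + v
    attn = softmax(q @ k.T / np.sqrt(q.shape[1]))          # (N, N), each row sums to 1
    out = (attn @ v).T.reshape(-1, h, w)                   # (d, H, W)
    return out, attn

rng = np.random.default_rng(0)
x = rng.standard_normal((16, 8, 8))
sem = rng.standard_normal((4, 4))                          # coarse semantic map (logits)
wq, wk, wv = (rng.standard_normal((8, 16)) * 0.1 for _ in range(3))
out, attn = spfm_attention(x, sem, wq, wk, wv)
```

Because the residual term keeps each projection at least at its original value and at most doubles it, background positions are not zeroed out but are relatively down-weighted compared with salient ones.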

2.5. Loss Function

We adopted a hybrid loss function to train the proposed network, comprising an Intersection over Union (IoU) loss and a Binary Cross-Entropy (BCE) loss. The IoU loss evaluates how precisely the model predicts the position and shape of the target, while the BCE loss focuses on pixel-wise binary classification. Combining the two trains the model to perform well in both target localization and target classification. The overall loss is computed as follows:

$$\mathcal{L} = \mathcal{L}_{IoU}(G, S) + \mathcal{L}_{BCE}(G, S)$$

where $\mathcal{L}_{IoU}$ and $\mathcal{L}_{BCE}$ denote the IoU loss and BCE loss, respectively, $G$ is the ground truth label, and $S$ is the salient prediction map.
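A minimal NumPy sketch of the hybrid loss follows. It assumes that the two terms are summed with equal weights and that the IoU loss takes the common soft form $1 - \sum GS / \sum (G + S - GS)$; the released code may use weighted variants, and the function names are hypothetical.

```python
import numpy as np

def bce_loss(pred, gt, eps=1e-7):
    """Pixel-averaged binary cross-entropy; pred holds probabilities in [0, 1]."""
    p = np.clip(pred, eps, 1 - eps)                # avoid log(0)
    return float(-np.mean(gt * np.log(p) + (1 - gt) * np.log(1 - p)))

def iou_loss(pred, gt, eps=1e-7):
    """Soft IoU loss: 1 - sum(G*S) / sum(G + S - G*S)."""
    inter = np.sum(pred * gt)
    union = np.sum(pred + gt - pred * gt)
    return float(1.0 - inter / (union + eps))

def hybrid_loss(pred, gt):
    """Assumed equal-weight sum of the two terms."""
    return iou_loss(pred, gt) + bce_loss(pred, gt)

gt = np.zeros((4, 4))
gt[:2] = 1.0                                        # top half is foreground
perfect = hybrid_loss(gt, gt)                       # close to 0
uninformed = hybrid_loss(np.full((4, 4), 0.5), gt)  # ln 2 + 2/3
```

The IoU term is computed over the whole map and therefore rewards getting the overall shape right, while BCE penalizes each pixel independently, which is why the two are complementary.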

3. Results

3.1. Experimental Setup

(1) Datasets: We conducted experiments on two datasets, ORSSD [32] and EORSSD [33]. The ORSSD dataset comprises 800 images with pixel-level annotations, divided into 600 images for training and 200 for testing. These images are predominantly sourced from Google Earth, incorporating data from multiple satellites and aerial photography, with spatial resolutions ranging from 0.5 to 2 m. EORSSD extends ORSSD with 1200 additional images from Google Earth, giving 2000 images with ground truth (GT), of which 1400 are used for training and the remaining 600 for testing. Compared with ORSSD, EORSSD is more challenging, with more small targets, more complex scenes, and more image interference. Additionally, we augmented the training data by rotating both the images and the GT by 90°, 180°, and 270°, and by flipping them horizontally followed by rotations with the same angles. Each training image thus yields eight variants, so the training sets of ORSSD and EORSSD reached 4800 (600 × 8) and 11,200 (1400 × 8) samples, respectively.
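The eight-fold augmentation described above (four rotations, each with and without a horizontal flip) can be sketched with NumPy as follows. `augment_8` is a hypothetical helper name; image and mask are transformed identically so that the GT stays aligned.

```python
import numpy as np

def augment_8(image, mask):
    """Return 8 (image, mask) pairs: rotations by 0/90/180/270 degrees,
    without and then with a preceding horizontal flip."""
    pairs = []
    for flip in (False, True):
        img = np.fliplr(image) if flip else image
        msk = np.fliplr(mask) if flip else mask
        for k in range(4):                      # k quarter-turns counter-clockwise
            pairs.append((np.rot90(img, k), np.rot90(msk, k)))
    return pairs

img = np.arange(16).reshape(4, 4)               # toy asymmetric "image"
pairs = augment_8(img, img > 7)
orssd_train = 600 * len(pairs)                  # 4800
eorssd_train = 1400 * len(pairs)                # 11200
```

For RGB arrays of shape (H, W, 3), `np.rot90` and `np.fliplr` act on the first two axes by default, so the same helper applies unchanged.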

(2) Network implementation: All experiments were conducted with the PyTorch [34] deep learning framework on an NVIDIA GeForce RTX 4060 Ti GPU with 16 GB of memory. UniFormer [24] was used as the feature extraction network and initialized with pre-trained weights. The input images and GT were resized to 352 × 352. The network was trained with the Adam optimizer, a batch size of 8, and an initial learning rate of 1 × 10−4 for 45 epochs. The learning rate was decayed to 1/10 of its value every 30 epochs, so within the 45 training epochs it dropped once, at epoch 30, to 1 × 10−5. The code is available at https://github.com/OrangeCat12352/GSANet.
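The step decay can be written as a one-line function (hypothetical name `step_lr`, epochs counted from 0):

```python
def step_lr(epoch, base_lr=1e-4, step_size=30, gamma=0.1):
    """Step decay: the learning rate is multiplied by gamma every step_size epochs."""
    return base_lr * gamma ** (epoch // step_size)

schedule = [step_lr(e) for e in range(45)]      # epochs 0-29: 1e-4, epochs 30-44: 1e-5
```

In PyTorch, the same behaviour corresponds to `torch.optim.lr_scheduler.StepLR(optimizer, step_size=30, gamma=0.1)` stepped once per epoch, although the text does not state which scheduler the released code uses.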

3.2. Evaluation Metrics

To evaluate the performance of the model comprehensively, we adopted a series of common metrics for quantitative analysis: the S-measure ($S_\alpha$) [35], mean absolute error ($\mathcal{M}$), E-measure ($E_\xi$) [36], F-measure ($F_\beta$) [37], the PR curve, and the F-measure curve.

The S-measure considers both object-aware and region-aware similarity, evaluating the structural similarity between the detection results and the ground truth. It is defined as:

$$S_\alpha = \alpha \cdot S_o + (1 - \alpha) \cdot S_r$$

where the weight coefficient $\alpha$ is set to 0.5, assigning equal weight to object similarity $S_o$ and region similarity $S_r$.

$\mathcal{M}$ measures the mean absolute error between the prediction (Sal) and the ground truth (GT); a smaller $\mathcal{M}$ indicates that the prediction is closer to the ground truth. It can be expressed as:

$$\mathcal{M} = \frac{1}{W \times H} \sum_{x=1}^{W} \sum_{y=1}^{H} \left| Sal(x, y) - GT(x, y) \right|$$

where $W$ and $H$ represent the width and height of the image, respectively, and $(x, y)$ denotes the pixel coordinates.
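A direct NumPy transcription of the formula, assuming both maps are already scaled to [0, 1] (many evaluation scripts min-max normalize the saliency map first):

```python
import numpy as np

def mae(sal, gt):
    """Mean absolute error over all W x H pixels; both maps scaled to [0, 1]."""
    sal = np.asarray(sal, dtype=float)
    gt = np.asarray(gt, dtype=float)
    return float(np.mean(np.abs(sal - gt)))

score = mae([[1.0, 0.0], [0.5, 0.0]], [[1, 0], [1, 0]])  # (0 + 0 + 0.5 + 0) / 4 = 0.125
```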

The E-measure is an enhanced alignment metric that jointly accounts for local pixel values and the image-level global mean. It is defined as:

$$E_\xi = \frac{1}{W \times H} \sum_{x=1}^{W} \sum_{y=1}^{H} \phi(x, y)$$

where $\phi$ is the enhanced alignment matrix, capturing two properties of the binary map: pixel-level matching and image-level statistics.
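The following NumPy sketch follows the enhanced-alignment construction of the E-measure for a binarized prediction: bias matrices (each map minus its global mean), an alignment matrix, and the quadratic enhancement $(1 + \xi)^2 / 4$. It is simplified: the special cases that reference implementations apply when the GT is entirely foreground or entirely background are omitted, and a small constant avoids division by zero. Mean, max, or adaptive variants come from binarizing the saliency map at different thresholds.

```python
import numpy as np

def e_measure(fm, gt, eps=1e-8):
    """Simplified enhanced-alignment measure for a binary foreground map and binary GT."""
    fm = np.asarray(fm, dtype=float)
    gt = np.asarray(gt, dtype=float)
    phi_fm = fm - fm.mean()                 # bias matrices: deviation from the global mean
    phi_gt = gt - gt.mean()
    align = 2 * phi_fm * phi_gt / (phi_fm ** 2 + phi_gt ** 2 + eps)   # values in [-1, 1]
    enhanced = (align + 1) ** 2 / 4         # enhanced alignment matrix phi(x, y), in [0, 1]
    return float(enhanced.mean())

gt = np.zeros((8, 8))
gt[2:6, 2:6] = 1.0
good = e_measure(gt, gt)                    # close to 1
bad = e_measure(1 - gt, gt)                 # close to 0 (foreground and background swapped)
```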

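The F-measure is the weighted harmonic mean of Precision and Recall and is a commonly used comprehensive evaluation metric. It can be expressed as:

$$F_\beta = \frac{(1 + \beta^2) \times \mathrm{Precision} \times \mathrm{Recall}}{\beta^2 \times \mathrm{Precision} + \mathrm{Recall}}$$

where $\beta^2$ is the weight coefficient balancing Precision and Recall.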
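A NumPy sketch of the F-measure at a given threshold and of the threshold sweep behind an F-measure curve follows. The value $\beta^2 = 0.3$ is the convention common in the SOD literature and is an assumption here, because the text does not state it; the function names are hypothetical.

```python
import numpy as np

def f_measure(sal, gt, threshold, beta2=0.3):
    """F-measure of a saliency map binarized at `threshold` (beta2 = 0.3 assumed)."""
    pred = np.asarray(sal) >= threshold
    gt = np.asarray(gt) > 0.5
    tp = np.sum(pred & gt)
    precision = tp / max(np.sum(pred), 1)
    recall = tp / max(np.sum(gt), 1)
    if precision + recall == 0:
        return 0.0
    return float((1 + beta2) * precision * recall / (beta2 * precision + recall))

def f_curve(sal, gt, num=256):
    """F-measure at `num` evenly spaced thresholds in [0, 1]; its maximum gives max F."""
    return np.array([f_measure(sal, gt, t) for t in np.linspace(0, 1, num)])

sal = np.array([[1.0, 1.0], [0.0, 0.0]])
gt = np.array([[1, 0], [0, 0]])
f_half = f_measure(sal, gt, 0.5)            # precision 0.5, recall 1.0
curve = f_curve(sal, gt)
```

Recording precision and recall at each threshold of the same sweep yields the PR curve.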

3.3. Comparison with State-of-the-Art

To validate the effectiveness of our approach, we selected 15 state-of-the-art SOD models for comparison: the CNN-based methods SAMNet [38], HVPNet [39], DAFNet [33], MSCNet [40], MJRBM [41], PAFR [42], CorrNet [13], EMFINet [43], MCCNet [11], ACCoNet [14], AESINet [44], ERPNet [9], and ADSTNet [45], and the transformer-based methods HFANet [46] and GeleNet [47].

3.3.1. Visual Comparison

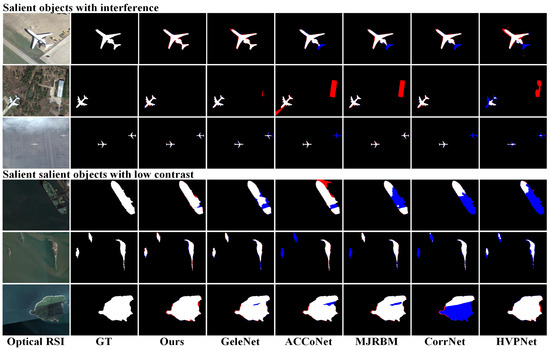

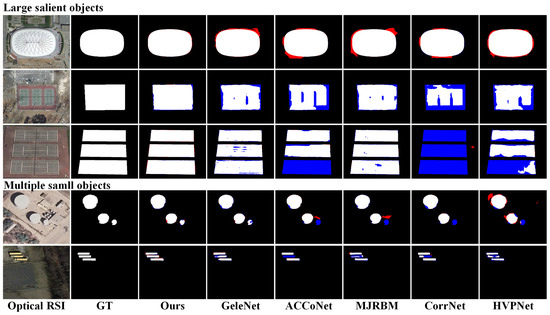

Figure 5 and Figure 6 present visual comparisons between our approach and several of the compared methods in different scenarios. In these figures, red marks false positives, where the model incorrectly identified background as target, and blue marks false negatives, where the model incorrectly identified target as background. These visual results illustrate the performance differences among the methods on the SOD task.
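The red/blue annotation scheme can be reproduced with a short NumPy helper. Rendering true positives in white and true negatives in black is an assumption made for this sketch, and the function name is hypothetical.

```python
import numpy as np

def error_overlay(pred, gt):
    """RGB error map: red = false positive, blue = false negative,
    white = true positive (assumed), black = true negative (assumed)."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    rgb = np.zeros(pred.shape + (3,), dtype=np.uint8)
    rgb[pred & gt] = (255, 255, 255)
    rgb[pred & ~gt] = (255, 0, 0)      # background predicted as target
    rgb[~pred & gt] = (0, 0, 255)      # target predicted as background
    return rgb

rgb = error_overlay([[1, 1], [0, 0]], [[1, 0], [1, 0]])
```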

Figure 5.

Visual comparison of saliency maps under interference and low-contrast scenes. Red indicates false positives, and blue indicates false negatives.

Figure 6.

Visual comparison of saliency maps in scenes with a large target and multiple small targets. Red indicates false positives, and blue indicates false negatives.

In the first scenario, the target was affected by various factors, as depicted in the first three rows of Figure 5. The images were disrupted by factors such as shadows, complex backgrounds, and dense fog. Only our method accurately detected the target in these scenarios, avoiding false positives and false negatives, demonstrating outstanding anti-interference capabilities and robustness. Some CNN-based methods, such as ACCoNet, although enhancing salient regions by coordinating multi-level features, still suffered from the insufficient feature extraction capability of CNN, resulting in misidentifying the background as the target.

The second scenario involved detecting targets under low-contrast conditions, as illustrated in the last three rows of Figure 5. In this scene, the salient object closely resembled the background in color, leading to confusion between the foreground and background, posing a challenge for SOD. Other methods failed to detect salient regions similar in color to the background. For instance, parts of the boat resembled the color of the water surface, and GeleNet confused the features of these parts, resulting in incomplete boat detection. Our approach produced detection results closest to the ground truth, successfully identifying relatively complete salient targets. This can be attributed to the semantic-aware fusion strategy adopted by SPFM, which selectively enhanced the semantic information of salient targets during the multi-scale feature fusion process while effectively suppressing the influence of background features. This strategy enables the SPFM module to more effectively enhance the discrimination between the foreground and background, thereby assisting the model to more accurately capture the key features of salient targets.

The third scenario, shown in the first three rows of Figure 6, involved large objects. In these images, the salient objects occupied a large portion of the frame and were therefore easy to locate. However, their backgrounds contained complex textures that could interfere with detection. The saliency maps generated by other methods exhibited unclear boundaries and semantic voids within the targets. In contrast, our approach used the SDEM to embed salient semantic information, which compensates for missing semantics within large targets. The SDEM fills these voids by integrating deep semantic information with shallow texture information. Deep semantic information captures the overall properties of the targets, while shallow texture information captures their subtle features and boundary details.

The fourth scenario, shown in the last three rows of Figure 6, involved multiple small objects, some of which the other methods missed. Our method uses the SDEM for multi-scale feature fusion and the SPFM to enhance local details, and it accurately segmented all salient objects. This indicates that our approach combines strong global perception with the ability to capture local details when detecting multiple small targets. Both properties improve the completeness and accuracy of SOD.

3.3.2. Quantitative Comparison

We compared our approach quantitatively with 15 methods. All results were either provided by the original authors or obtained by retraining. Specifically, we retrained six recent SOD methods (CorrNet, EMFINet, MCCNet, ACCoNet, AESINet, and GeleNet) using the same software environment, GPU, and default dataset settings. Table 1 and Table 2 report the results of our approach and the compared methods on eight metrics. One of these is an error metric, for which lower values indicate smaller model errors. For the other seven metrics, higher values indicate better performance. The tables show that, overall, our method outperformed the other methods on both the ORSSD and EORSSD datasets, demonstrating strong performance and generalization.

On the ORSSD dataset, our approach surpassed the other methods on five of the eight metrics, achieving values of 0.9491, 0.0070, 0.9815, 0.9864, and 0.9253 on those metrics (Table 1). On the EORSSD dataset, our approach outperformed the second-ranked method on seven metrics (Table 2): 0.9391 vs. 0.9380, 0.0053 vs. 0.0060, 0.9743 vs. 0.9728, 0.9784 vs. 0.9740, 0.8657 vs. 0.8648, 0.8790 vs. 0.8781, and 0.8937 vs. 0.8910.
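For reference, the sketch below computes two widely used SOD metrics on a single image. The first is the mean absolute error (MAE). The second is the F-measure with β² = 0.3, the weighting commonly adopted in the SOD literature following Achanta et al. The sketch also includes the adaptive threshold associated with that work (twice the mean saliency value). It is a minimal pure-Python illustration that assumes maps are lists of rows with values in [0, 1]. It is not the evaluation code behind Tables 1 and 2, and the function names are hypothetical.

```python
def mae(pred, gt):
    """Mean absolute error between a saliency map and its ground truth."""
    total, n = 0.0, 0
    for prow, grow in zip(pred, gt):
        for p, g in zip(prow, grow):
            total += abs(p - g)
            n += 1
    return total / n


def f_measure(pred, gt, threshold, beta2=0.3):
    """F-measure of `pred` binarized at `threshold` (beta^2 = 0.3 by SOD convention)."""
    tp = fp = fn = 0
    for prow, grow in zip(pred, gt):
        for p, g in zip(prow, grow):
            predicted = p >= threshold
            foreground = g >= 0.5
            if predicted and foreground:
                tp += 1
            elif predicted:
                fp += 1
            elif foreground:
                fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return (1 + beta2) * precision * recall / (beta2 * precision + recall)


def adaptive_threshold(pred):
    """Adaptive threshold: twice the mean saliency value, capped at 1."""
    values = [p for row in pred for p in row]
    return min(2 * sum(values) / len(values), 1.0)


pred = [[0.9, 0.8], [0.6, 0.0]]
gt = [[1, 1], [0, 0]]
print(mae(pred, gt), f_measure(pred, gt, 0.5), adaptive_threshold(pred))
```

Sweeping the threshold and taking the maximum or mean of `f_measure` gives the max and mean variants often reported alongside the adaptive one.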

Table 1.

Quantitative comparisons with state-of-the-art SOD methods on ORSSD datasets.

Table 2.

Quantitative comparisons with state-of-the-art SOD methods on EORSSD datasets.

In addition, some CNN-based methods, such as CorrNet and ERPNet, adopt different strategies for SOD. CorrNet detects targets in a coarse-to-fine manner and achieved an Sα of 91.53% on EORSSD. ERPNet adds a branch for target edge perception that provides edge cues for SOD, and it achieved an Sα of 92.10% on EORSSD. However, because CNNs are less sensitive to global information, the overall performance of these methods lagged behind. In contrast, our method enlarged the receptive field through its transformer-based encoder. This yielded more comprehensive semantic information and the highest Sα on EORSSD, 93.91%.

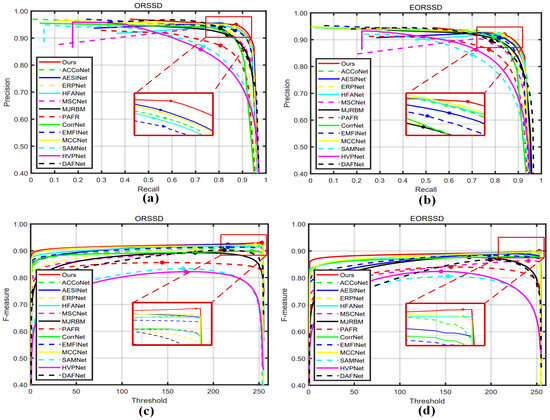

Figure 7 shows the PR curves and F-measure curves of the different approaches, which together assess the overall performance of each model. On both datasets, our method maintained high precision as recall increased in the PR curves. It also maintained relatively high F-measure scores across thresholds in the F-measure curves. This indicates that our approach produced few false positives, which further supports the quantitative results.
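PR curves and F-measure curves of this kind are typically obtained by binarizing each saliency map at a sweep of thresholds and computing precision and recall at each threshold. The sketch below assumes 256 evenly spaced thresholds on maps scaled to [0, 1] and β² = 0.3. Dataset-level curves are usually obtained by averaging the per-image values at each threshold, which is what `mean_curve` does. The function names, and the choice of zero precision when nothing is predicted as salient, are illustrative assumptions rather than details taken from the paper.

```python
def pr_curve(pred, gt, num_thresholds=256):
    """(threshold, precision, recall) for thresholds t / (num_thresholds - 1)."""
    flat_p = [p for row in pred for p in row]
    flat_g = [g >= 0.5 for row in gt for g in row]
    n_pos = sum(flat_g)
    curve = []
    for t in range(num_thresholds):
        thr = t / (num_thresholds - 1)
        tp = fp = 0
        for p, g in zip(flat_p, flat_g):
            if p >= thr:
                if g:
                    tp += 1
                else:
                    fp += 1
        # Convention assumed here: precision is 0 when no pixel is predicted salient.
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / n_pos if n_pos else 0.0
        curve.append((thr, precision, recall))
    return curve


def f_curve(curve, beta2=0.3):
    """F-measure at every threshold of a PR curve."""
    return [
        (thr, (1 + beta2) * p * r / (beta2 * p + r) if p + r else 0.0)
        for thr, p, r in curve
    ]


def mean_curve(curves):
    """Average several per-image PR curves threshold by threshold."""
    n = len(curves)
    return [
        (points[0][0], sum(p for _, p, _ in points) / n, sum(r for _, _, r in points) / n)
        for points in zip(*curves)
    ]


curve = pr_curve([[0.9, 0.2], [0.6, 0.0]], [[1, 0], [1, 0]])
print(max(f for _, f in f_curve(curve)))
```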

Figure 7.

Quantitative comparison of PR curves (a,b) and F-measure curves (c,d) for the two datasets.

3.4. Ablation Studies

To validate the effectiveness of each module in our approach, we conducted model analysis and ablation studies on the EORSSD dataset, evaluating the contributions of the SDEM and SPFM modules. We removed the SDEM and SPFM modules from the model, retaining only the UniFormer encoder and a simple decoder as the baseline. The decoder consisted of three up-sampling modules, each composed of two convolutions and one transposed convolution. Skip connections were used to fuse features between the encoder and decoder. Consequently, we designed four different combinations for ablation experiments: (1) baseline, (2) baseline + SDEM, (3) baseline + SPFM, and (4) baseline + SDEM + SPFM. The results of the ablation are shown in Table 3.
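The baseline decoder described above can be sanity-checked by tracking spatial sizes through it. The sketch below rests on several assumptions, because the text does not specify these hyperparameters:

- The UniFormer encoder emits features at 1/4, 1/8, 1/16, and 1/32 of the input resolution.
- The two convolutions in each up-sampling module are 3 × 3 with padding 1.
- The transposed convolution uses kernel 2 and stride 2.

The size formulas are those given in the PyTorch documentation for `Conv2d` and `ConvTranspose2d`. The helper names are hypothetical.

```python
def conv_out(size, kernel=3, stride=1, padding=1, dilation=1):
    """Output size of Conv2d along one spatial dimension (PyTorch formula)."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def deconv_out(size, kernel=2, stride=2, padding=0, dilation=1, output_padding=0):
    """Output size of ConvTranspose2d along one spatial dimension (PyTorch formula)."""
    return (size - 1) * stride - 2 * padding + dilation * (kernel - 1) + output_padding + 1


def trace_baseline_decoder(input_size=256, strides=(4, 8, 16, 32)):
    """Spatial size after each of three up-sampling modules (assumed layout)."""
    encoder_sizes = [input_size // s for s in strides]  # assumed 1/4 ... 1/32 stages
    x = encoder_sizes[-1]                               # start from the deepest stage
    trace = []
    for skip_size in reversed(encoder_sizes[:-1]):      # 1/16, 1/8, 1/4 skip features
        x = conv_out(conv_out(x))                       # two 3x3 convs keep the size
        x = deconv_out(x)                               # transposed conv doubles it
        if x != skip_size:
            raise ValueError(f"size {x} does not match skip feature {skip_size}")
        # Skip fusion (addition or concatenation) would happen here; only sizes are tracked.
        trace.append(x)
    return trace


print(trace_baseline_decoder(256))
```

Under these assumptions, for a 256 × 256 input the three modules take the 8 × 8 deepest feature map to 16, 32, and 64 pixels per side. This matches the resolution of the skip connection at each step.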

Table 3.

Ablation results of evaluating the individual contribution of each module.

As Table 3 shows, compared with the baseline, the SDEM improved three metrics by 0.45%, 0.21%, and 0.35%, respectively. The SPFM improved them by 0.29%, 0.17%, and 0.17%, respectively. These gains demonstrate the effectiveness of each proposed module. Combining the modules further improved the second and third metrics. With all modules included, the complete model surpassed the baseline by 0.24%, 0.32%, and 0.49% on the three metrics, respectively.

3.5. Computational Efficiency Experiment

We evaluated the computational efficiency of the models using two metrics: the number of parameters (Params) and the number of floating-point operations (FLOPs). Lower Params and FLOPs generally indicate higher computational efficiency. We tested each method on inputs of the same size (1 × 3 × 256 × 256), and the results are shown in Table 4.
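To make the two efficiency metrics concrete, the sketch below counts the parameters and multiply-accumulate operations (MACs) of a single standard convolution (groups = 1). Tools differ in convention. Some profilers report MACs under the name FLOPs, while others count the multiply and the add separately (about 2 × MACs). FLOPs figures are therefore only directly comparable when measured with the same tool. The layer sizes in the example are hypothetical and are not taken from GSANet.

```python
def conv2d_params(c_in, c_out, kernel, bias=True):
    """Learnable parameters of a k x k Conv2d with groups=1."""
    return c_in * c_out * kernel * kernel + (c_out if bias else 0)


def conv2d_macs(c_in, c_out, kernel, h_out, w_out):
    """Multiply-accumulates: one per weight per output position (bias ignored)."""
    return c_in * c_out * kernel * kernel * h_out * w_out


# Hypothetical layer: 3x3 conv, 64 -> 64 channels, 64 x 64 output map.
params = conv2d_params(64, 64, 3)        # 36,928
macs = conv2d_macs(64, 64, 3, 64, 64)    # 150,994,944
flops = 2 * macs                         # if multiply and add are counted separately
print(f"{params / 1e6:.3f} M params, {macs / 1e9:.3f} G MACs, {flops / 1e9:.3f} GFLOPs")
```

Because every convolution weight is reused at each output position, a network's operation count is normally far larger than its parameter count.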

Table 4.

Analysis of the computational efficiency of various methods.

As Table 4 shows, CorrNet is a lightweight network with the fewest parameters. It is highly efficient but has lower detection accuracy. CNN-based methods such as EMFINet, MCCNet, ACCoNet, and ERPNet exhibited poor computational efficiency. Our model has 49.461 M Params and 11.373 G FLOPs, ranking just below GeleNet in computational efficiency. Nevertheless, our model surpassed GeleNet in detection accuracy, which we consider a worthwhile trade-off for the additional computation.

4. Discussion

This paper presented a new solution for the precise localization and semantic understanding of salient objects in RSI. We explored the potential connections among multi-scale features and fused spatial texture information with abstract semantic representations. We also observed that background information may disrupt the abstract semantic information of salient features during up-sampling. We therefore used multi-scale features to enhance the perception of salient targets and to filter out irrelevant information. Our method partially addresses the challenges of SOD in RSI, such as shadow interference and inter-class feature confusion.

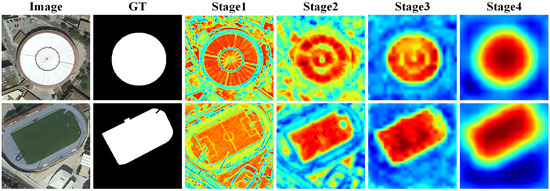

Figure 8 illustrates how GSANet extracts features from the input image and how the salient objects are represented at different stages. The model's attention gradually shifted from the global scene to the salient regions, reflecting effective feature extraction. In stage 1, the spatial texture details of the input image are clearly visible, while in stage 4, the semantic features of the salient objects are prominent. This indicates that the shallow layers tend to capture the fine-grained spatial structure of the image but express abstract semantics poorly. The deep layers, in contrast, focus on high-level semantic features but lose some spatial detail. Making full use of every stage therefore requires an effective fusion of global features and local details. This motivated the SDEM and SPFM, which perform semantic interaction and information fusion across stages. Together, they preserve the completeness of semantic information and strengthen the global effectiveness of semantic features.

Figure 8.

Visual comparison of extracted salient features.

As feature extraction progressed, attention became increasingly concentrated on the salient regions. This behavior supports the effectiveness of the proposed method in accurately capturing the salient features of an image and highlights its potential advantages for SOD.

5. Conclusions

We designed an efficient Global Semantic-aware Aggregation Network (GSANet). By computing the information entropy of different regions, it achieves precise localization and semantic understanding of salient objects in remote sensing images. Information entropy measures the complexity and information content of local image regions. Regions with high entropy carry more information and are more likely to contain salient objects. By calculating the information entropy of each pixel or region in the image, we can prioritize high-entropy regions as potential target areas. This approach focuses attention on areas that are likely to contain salient objects, which improves the efficiency and accuracy of SOD. Information entropy thus helps the algorithm understand image content and provides important clues for identifying potential salient regions, laying the foundation for further analysis and processing.
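The entropy criterion described above can be illustrated with a hand-crafted computation. The procedure has three steps:

1. Split a grayscale image into non-overlapping patches.
2. Compute the Shannon entropy H = −Σ pᵢ log₂ pᵢ of each patch's intensity histogram.
3. Rank the patches from highest to lowest entropy.

This is only a sketch of the concept. It assumes 8-bit gray levels, 16 histogram bins, square patches, and hypothetical function names. GSANet itself is a learned network, and the sketch is not its implementation.

```python
import math
from collections import Counter


def shannon_entropy(values, bins=16, max_value=255):
    """Entropy in bits of the binned histogram of integer gray levels."""
    # Map each gray level in [0, max_value] to one of `bins` equal-width bins.
    counts = Counter(min(v * bins // (max_value + 1), bins - 1) for v in values)
    n = len(values)
    return sum(-(c / n) * math.log2(c / n) for c in counts.values())


def patch_entropies(image, patch=8, bins=16):
    """Entropy of every non-overlapping patch x patch block, keyed by (top, left)."""
    h, w = len(image), len(image[0])
    scores = {}
    for top in range(0, h - patch + 1, patch):
        for left in range(0, w - patch + 1, patch):
            block = [image[r][c]
                     for r in range(top, top + patch)
                     for c in range(left, left + patch)]
            scores[(top, left)] = shannon_entropy(block, bins)
    return scores


def rank_by_entropy(image, patch=8, bins=16):
    """Patch coordinates sorted from highest to lowest entropy (candidates first)."""
    scores = patch_entropies(image, patch, bins)
    return sorted(scores, key=scores.get, reverse=True)


image = [[10, 10, 0, 64],
         [10, 10, 128, 192],
         [0, 0, 200, 200],
         [255, 255, 200, 200]]
print(rank_by_entropy(image, patch=2))
```

For example, with `patch=2`, a block of identical pixels scores 0 bits. A block whose four pixels fall into four different bins scores 2 bits and is ranked first.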

GSANet adopts UniFormer to extract both the local features and the global dependencies of salient objects in remote sensing images. Local details preserve texture and edge contours, helping the model locate salient objects. Global features help the model understand the image context, which sharpens the distinction between salient objects and the background.

To better capture global context and local details, we adopted the Semantic Detail Embedding Module (SDEM) for multi-level feature fusion and semantic interaction. The SDEM searches for high-entropy regions among multi-scale features and aggregates the salient semantic information they contain. By fusing multi-level features, it embeds salient semantic information into low-level features and injects texture details into high-level features. As a result, high-level features carry richer salient information and clearer spatial details, improving the perception and understanding of key areas in complex scenes.

In addition, the SPFM enhances the model's perception of salient objects during decoding. It treats salient semantic features as prior information to compensate for the semantic loss of salient objects during multi-level feature fusion, which enables the model to capture and retain crucial salient information.

Extensive comparative experiments and ablation studies demonstrated the superiority of our approach and the effectiveness of both the SDEM and SPFM.

A limitation of GSANet is that it cannot accurately delineate the edge contours of salient objects in some complex scenes, so the detected edges may be blurred or fragmented. To address this issue, in future research we will consider using graph convolutional networks [48] to capture the spatial relationships within remote sensing images and further enhance edge features.

Author Contributions

Conceptualization, H.L., X.C. (Xuhui Chen), W.Y. and L.M.; methodology, L.M. (Liye Mei); software, X.C.; validation, X.C., K.S., J.H. and A.H.; formal analysis, W.Y.; investigation, L.M.; data curation, Y.W.; writing—original draft preparation, X.C. (Xuhui Chen); writing—review and editing, H.L.; visualization, A.H.; supervision, W.Y., L.M. and H.L.; project administration, H.L.; funding acquisition, H.L. and W.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Open Research Fund Program of LIESMARS (Grant No. 21E02); the Hubei Key Laboratory of Intelligent Robot (Wuhan Institute of Technology) of China (HBIRL 202113); the Hubei Province Young Science and Technology Talent Morning Hight Lift Project (202319); the Natural Science Foundation of Hubei Province (2022CFB501); the University Student Innovation and Entrepreneurship Training Program Project (202210500028); the Doctoral Starting Up Foundation of Hubei University of Technology (XJ2023007301).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Data is contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Sun, L.; Wang, Q.; Chen, Y.; Zheng, Y.; Wu, Z.; Fu, L.; Jeon, B. CRNet: Channel-Enhanced Remodeling-Based Network for Salient Object Detection in Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–14.

- Yan, R.; Yan, L.; Geng, G.; Cao, Y.; Zhou, P.; Meng, Y. ASNet: Adaptive Semantic Network Based on Transformer-CNN for Salient Object Detection in Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–16.

- Gong, A.; Nie, J.; Niu, C.; Yu, Y.; Li, J.; Guo, L. Edge and Skeleton Guidance Network for Salient Object Detection in Optical Remote Sensing Images. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 7109–7120.

- Quan, Y.; Xu, H.; Wang, R.; Guan, Q.; Zheng, J. ORSI Salient Object Detection via Progressive Semantic Flow and Uncertainty-aware Refinement. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–13.

- Wen, D.; Huang, X.; Bovolo, F.; Li, J.; Ke, X.; Zhang, A.; Benediktsson, J.A. Change detection from very-high-spatial-resolution optical remote sensing images: Methods, applications, and future directions. IEEE Geosci. Remote Sens. Mag. 2021, 9, 68–101.

- Wellmann, T.; Lausch, A.; Andersson, E.; Knapp, S.; Cortinovis, C.; Jache, J.; Scheuer, S.; Kremer, P.; Mascarenhas, A.; Kraemer, R. Remote sensing in urban planning: Contributions towards ecologically sound policies? Landsc. Urban Plan. 2020, 204, 103921.

- Cong, R.; Qin, Q.; Zhang, C.; Jiang, Q.; Wang, S.; Zhao, Y.; Kwong, S. A weakly supervised learning framework for salient object detection via hybrid labels. IEEE Trans. Circuits Syst. Video Technol. 2022, 33, 534–548.

- Song, K.; Huang, L.; Gong, A.; Yan, Y. Multiple graph affinity interactive network and a variable illumination dataset for RGBT image salient object detection. IEEE Trans. Circuits Syst. Video Technol. 2022, 33, 3104–3118.

- Zhou, X.; Shen, K.; Weng, L.; Cong, R.; Zheng, B.; Zhang, J.; Yan, C. Edge-guided recurrent positioning network for salient object detection in optical remote sensing images. IEEE Trans. Cybern. 2022, 53, 539–552.

- Zheng, J.; Quan, Y.; Zheng, H.; Wang, Y.; Pan, X. ORSI Salient Object Detection via Cross-Scale Interaction and Enlarged Receptive Field. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5.

- Li, G.; Liu, Z.; Lin, W.; Ling, H. Multi-Content Complementation Network for Salient Object Detection in Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

- Li, G.; Liu, Z.; Zhang, X.; Lin, W. Lightweight Salient Object Detection in Optical Remote-Sensing Images via Semantic Matching and Edge Alignment. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–11.

- Li, G.; Liu, Z.; Bai, Z.; Lin, W.; Ling, H. Lightweight Salient Object Detection in Optical Remote Sensing Images via Feature Correlation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

- Li, G.; Liu, Z.; Zeng, D.; Lin, W.; Ling, H. Adjacent Context Coordination Network for Salient Object Detection in Optical Remote Sensing Images. IEEE Trans. Cybern. 2023, 53, 526–538.

- Xu, C.; Ye, Z.; Mei, L.; Shen, S.; Sun, S.; Wang, Y.; Yang, W. Cross-Attention Guided Group Aggregation Network for Cropland Change Detection. IEEE Sens. J. 2023, 23, 13680–13691.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 1–11.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

- Wang, W.; Xie, E.; Li, X.; Fan, D.-P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 568–578.

- Wang, W.; Xie, E.; Li, X.; Fan, D.-P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. PVT v2: Improved baselines with pyramid vision transformer. Comput. Vis. Media 2022, 8, 415–424.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

- Gao, L.; Liu, B.; Fu, P.; Xu, M. Adaptive Spatial Tokenization Transformer for Salient Object Detection in Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–15.

- Zhang, Y.; Guo, J.; Yue, H.; Yin, X.; Zheng, S. Transformer guidance dual-stream network for salient object detection in optical remote sensing images. Neural Comput. Appl. 2023, 35, 17733–17747.

- Li, K.; Wang, Y.; Zhang, J.; Gao, P.; Song, G.; Liu, Y.; Li, H.; Qiao, Y. UniFormer: Unifying convolution and self-attention for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 12581–12600.

- Xu, C.; Ye, Z.; Mei, L.; Yu, H.; Liu, J.; Yalikun, Y.; Jin, S.; Liu, S.; Yang, W.; Lei, C. Hybrid Attention-Aware Transformer Network Collaborative Multiscale Feature Alignment for Building Change Detection. IEEE Trans. Instrum. Meas. 2024, 73, 1–14.

- Mei, L.; Yu, Y.; Shen, H.; Weng, Y.; Liu, Y.; Wang, D.; Liu, S.; Zhou, F.; Lei, C. Adversarial multiscale feature learning framework for overlapping chromosome segmentation. Entropy 2022, 24, 522.

- Peng, Y.; Sonka, M.; Chen, D.Z. U-Net v2: Rethinking the Skip Connections of U-Net for Medical Image Segmentation. arXiv 2023, arXiv:2311.17791.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Maaz, M.; Shaker, A.; Cholakkal, H.; Khan, S.; Zamir, S.W.; Anwer, R.M.; Shahbaz Khan, F. EdgeNeXt: Efficiently amalgamated CNN-transformer architecture for mobile vision applications. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 3–20.

- Mei, L.; Hu, X.; Ye, Z.; Tang, L.; Wang, Y.; Li, D.; Liu, Y.; Hao, X.; Lei, C.; Xu, C. GTMFuse: Group-Attention Transformer-Driven Multiscale Dense Feature-Enhanced Network for Infrared and Visible Image Fusion. Knowl. Based Syst. 2024, 293, 111658.

- Han, C.; Wu, C.; Guo, H.; Hu, M.; Li, J.; Chen, H. Change guiding network: Incorporating change prior to guide change detection in remote sensing imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 8395–8407.

- Li, C.; Cong, R.; Hou, J.; Zhang, S.; Qian, Y.; Kwong, S. Nested Network With Two-Stream Pyramid for Salient Object Detection in Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9156–9166.

- Zhang, Q.; Cong, R.; Li, C.; Cheng, M.-M.; Fang, Y.; Cao, X.; Zhao, Y.; Kwong, S. Dense attention fluid network for salient object detection in optical remote sensing images. IEEE Trans. Image Process. 2020, 30, 1305–1317.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L. PyTorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019, 32, 8026–8037.

- Fan, D.-P.; Cheng, M.-M.; Liu, Y.; Li, T.; Borji, A. Structure-measure: A new way to evaluate foreground maps. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4548–4557.

- Fan, D.-P.; Gong, C.; Cao, Y.; Ren, B.; Cheng, M.-M.; Borji, A. Enhanced-alignment measure for binary foreground map evaluation. arXiv 2018, arXiv:1805.10421.

- Achanta, R.; Hemami, S.; Estrada, F.; Susstrunk, S. Frequency-tuned salient region detection. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1597–1604.

- Liu, Y.; Zhang, X.-Y.; Bian, J.-W.; Zhang, L.; Cheng, M.-M. SAMNet: Stereoscopically attentive multi-scale network for lightweight salient object detection. IEEE Trans. Image Process. 2021, 30, 3804–3814.

- Liu, Y.; Gu, Y.-C.; Zhang, X.-Y.; Wang, W.; Cheng, M.-M. Lightweight salient object detection via hierarchical visual perception learning. IEEE Trans. Cybern. 2020, 51, 4439–4449.

- Lin, Y.; Sun, H.; Liu, N.; Bian, Y.; Cen, J.; Zhou, H. A lightweight multi-scale context network for salient object detection in optical remote sensing images. In Proceedings of the 2022 26th International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2022; pp. 238–244.

- Tu, Z.; Wang, C.; Li, C.; Fan, M.; Zhao, H.; Luo, B. ORSI Salient Object Detection via Multiscale Joint Region and Boundary Model. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

- Li, X.; Xu, Y.; Ma, L.; Huang, Z.; Yuan, H. Progressive Attention-Based Feature Recovery With Scribble Supervision for Saliency Detection in Optical Remote Sensing Image. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–12.

- Zhou, X.; Shen, K.; Liu, Z.; Gong, C.; Zhang, J.; Yan, C. Edge-Aware Multiscale Feature Integration Network for Salient Object Detection in Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

- Zeng, X.; Xu, M.; Hu, Y.; Tang, H.; Hu, Y.; Nie, L. Adaptive Edge-Aware Semantic Interaction Network for Salient Object Detection in Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–16.

- Zhao, J.; Jia, Y.; Ma, L.; Yu, L. Adaptive Dual-Stream Sparse Transformer Network for Salient Object Detection in Optical Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 5173–5192.

- Wang, Q.; Liu, Y.; Xiong, Z.; Yuan, Y. Hybrid feature aligned network for salient object detection in optical remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

- Li, G.; Bai, Z.; Liu, Z.; Zhang, X.; Ling, H. Salient object detection in optical remote sensing images driven by transformer. IEEE Trans. Image Process. 2023, 32, 5257–5269.

- Khlifi, M.K.; Boulila, W.; Farah, I.R. Graph-based deep learning techniques for remote sensing applications: Techniques, taxonomy, and applications—A comprehensive review. Comput. Sci. Rev. 2023, 50, 100596.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).