Abstract

The causal structure of a system imposes constraints on the joint probability distribution of variables that can be generated by the system. Archetypal constraints consist of conditional independencies between variables. However, particularly in the presence of hidden variables, many causal structures are compatible with the same set of independencies inferred from the marginal distributions of observed variables. Additional constraints allow further testing for the compatibility of data with specific causal structures. An existing family of causally informative inequalities compares the information about a set of target variables contained in a collection of variables with a sum of the information contained in different groups defined as subsets of that collection. While procedures to identify the form of these groups-decomposition inequalities have been previously derived, we substantially enlarge the applicability of the framework. We derive groups-decomposition inequalities subject to weaker independence conditions, with weaker requirements on the configuration of the groups, and additionally allowing for conditioning sets. Furthermore, we show how constraints with higher inferential power may be derived with collections that include hidden variables, and then converted into testable constraints using data processing inequalities. For this purpose, we apply the standard data processing inequality of conditional mutual information and derive an analogous property for a measure of conditional unique information recently introduced to separate redundant, synergistic, and unique contributions to the information that a set of variables has about a target.

Keywords:

causality; directed acyclic graphs; causal discovery; structure learning; causal structures; marginal scenarios; hidden variables; mutual information; unique information; entropic inequalities; data processing inequality

MSC:

62H22; 62D20; 94A15; 94A17

1. Introduction

The inference of the underlying causal structure of a system using observational data is a fundamental question in many scientific domains. The causal structure of a system imposes constraints on the joint probability distribution of variables generated from it [1,2,3,4], and these constraints can be exploited to learn the causal structure. Causal learning algorithms based on conditional independencies [1,2,5] allow the construction of a partially oriented graph [6] that represents the equivalence class of all causal structures compatible with the set of conditional independencies present in the distribution of the observable variables (the so-called Markov equivalence class). However, without restrictions on the potential existence and structure of an unknown number of hidden variables that could account for the observed dependencies, Markov equivalence classes may encompass many causal structures compatible with the data.

Conditional independencies impose equality constraints on a joint probability distribution; namely, an independence results in the equality between conditional and unconditional probability distributions, or equivalently, in a null mutual information between independent variables. In addition to the information from independencies between the observed variables, causal information can also be obtained from other functional equality constraints [7], such as dormant independencies that would occur under active interventions [8]. Further causal inference power can be obtained by incorporating assumptions on the potential form of the causal mechanisms in order to exploit additional independencies associated with hidden substructures within the generative model [9,10], or independencies related to exogenous noise terms [11,12,13]. Other approaches have studied the identifiability of specific parametric families of causal models [3,14]. However, these methods only provide additional inference power if the actual causal mechanisms conform to the required parametric form.

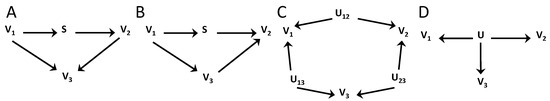

Beyond equality constraints, the causal structure may also impose inequality constraints on the distribution of the data [15,16], which reflect non-verifiable independencies involving hidden variables. Figure 1 illustrates the distinction between pairs of causal structures distinguishable based on independence constraints (Figure 1A,B) and causal structures that may only be discriminated based on inequality constraints (Figure 1C,D). The structures of Figure 1A,B belong to different Markov equivalence classes because in Figure 1A two of the observable variables are independent conditioned on S, while in Figure 1B an independence between them is only obtained by further conditioning on another variable. On the other hand, the structures of Figure 1C,D belong to the same equivalence class because no independencies exist between the observable variables. Nonetheless, if the hidden variables were also observable, these structures would be distinguishable. In Figure 1D, all the dependencies between the observable variables are caused by a single hidden variable U, while in Figure 1C dependencies are created pairwise by different hidden variables. In this case, a testable inequality constraint involving the observable variables reflects the non-verifiable independencies that also involve hidden variables. Intuitively, in Figure 1C, the inequality constraint imposes an upper bound on the overall degree of dependence between the three variables, given that these dependencies arise only in a pairwise manner, while in Figure 1D no such bound exists.

Figure 1.

Examples of causal structures distinguishable from independencies (A,B) and structures that may only be discriminated based on inequality constraints (C,D). In this case, the structure in (C), and not the one in (D), intrinsically imposes a constraint due to dependencies between the observable variables arising only from pairwise dependencies with hidden common causes.

Importantly, unlike equality constraints, inequality constraints provide necessary but not sufficient conditions for the compatibility of data with a certain causal structure. While a certain hypothesized causal structure—like in Figure 1C—may impose the fulfillment of a given inequality intrinsically from its structure, other causal structures—like in Figure 1D—can generate data that, given a particular instantiation of the causal mechanisms, also fulfill the inequality. Accordingly, the causal inference power of inequality constraints lies in the ability to reject hypothesized causal structures that would intrinsically require the fulfillment of an inequality when that inequality is not fulfilled by the data. This means that tighter inequalities have more inferential power, giving the capacity to discard more causal structures.

Two main classes of inequality constraints have been derived. The first class corresponds to inequality constraints in the probability space, which comprise tests of compatibility such as Bell-type inequalities [17,18], instrumental inequalities [19,20], and inequalities that appear on identifiable interventional distributions [21]. The second class corresponds to inequalities involving information-theoretic quantities. The relation between these probabilistic and entropic inequalities has been examined in [22]. One approach to construct entropic inequalities combines the inequalities defining the Shannon entropic cone, i.e., those associated with the non-negativity, monotonicity, and submodularity properties of entropy, with additional independence constraints related to the causal structure [23,24]. Additional causally informative inequalities can be derived by considering the so-called non-Shannon inequalities [25,26]. When the causal structure to be tested involves hidden variables, all non-trivial entropic inequalities in the marginal scenario associated with the set of observable variables can be derived with an algorithmic procedure [23,24] that projects the set of inequalities of all variables into inequalities that only involve the subset of observable variables.

As an alternative approach, information-theoretic inequality constraints can be derived by an explicit analytical formulation [24,27]. In particular, [27] introduced inequalities comparing the information about a target variable contained in a whole collection of variables with a weighted sum of the information contained in groups of variables corresponding to subsets of the collection. Two procedures were introduced to select the composition of these groups. In the first type of inequalities, the composition of the groups is arbitrarily determined, but an inequality only exists under some conditions of independence between the chosen variables, whose fulfillment reflects the underlying causal structure. In the second type, no conditions are required for the existence of an inequality, but the groups must be ancestral sets; that is, each group must contain all variables that have a causal effect on any of its elements. In both cases, [27] showed that the coefficients in the weighted sum of the information contained in groups of variables are determined by the number of intersections between the groups.

In this work, we build upon the results of [27] and generalize their framework of groups-decomposition inequalities in several ways. First, we generalize both types of inequalities to the conditional case, when the inequalities involve conditional mutual information measures instead of unconditional ones. While this extension is trivial for the first type of inequalities, we show that for the second type it requires a definition of augmented ancestral sets. Second, we formulate more flexible conditions of independence for which the first type of inequalities exists. Third, we add flexibility to the construction of the ancestral sets that appear in the second type of inequalities. We show that, given a causal graph and a conditioning set of variables used for the conditional mutual information measures, alternative inequalities exist when determining ancestors in subgraphs that eliminate causal connections from different subsets of the conditioning variables. Furthermore, we determine conditions in which an inequality also holds when removing subsets of ancestors from the whole set of variables, hence relaxing for the second type of inequalities the requirement that the groups correspond to ancestral sets.

Apart from these generalizations, we expand the power of the approach of [27] by considering inequalities whose existence is determined by the partition into groups of a collection of variables that also contains hidden variables. That is, hidden variables can appear not only as hidden common ancestors of the collection but also as part (or even all) of the variables in the collection for which the inequality is defined. To render operational the use of inequalities derived from collections containing hidden variables, we develop procedures that allow mapping those inequalities into testable inequalities that only involve observable variables. While this mapping can be carried out by simply applying the monotonicity of mutual information to remove hidden variables from the groups, this does not work when all variables in the collection are hidden. We show that data processing inequalities [28] can be applied to obtain testable inequalities also in this case, or applied to obtain tighter inequalities than those obtained by simply removing the hidden variables. We illustrate how testable inequalities whose coefficients in the weighted sum depend on intersections among subsets of hidden variables instead of among subsets of observable variables can result in tighter inequalities with higher inferential power.

In order to derive testable groups-decomposition inequalities, we not only apply the standard data processing inequality of conditional mutual information [28], but also derive an additional data processing inequality for the so-called unique information measure introduced in [29]. This measure was introduced in the framework of a decomposition of mutual information into redundant, unique, and synergistic information components [30]. Recently, alternative decompositions of the joint mutual information that a set of predictor variables has about a target variable into redundant, synergistic, and unique components have been proposed [31,32,33,34,35] (among others). These alternative decompositions generally differ in the quantification of each component and in whether the measures fulfill certain properties or axioms. However, in our work, we do not apply the unique information measure of [29] as part of a decomposition of the joint mutual information. Instead, we show that it provides an alternative data processing inequality that holds for different causal configurations than the standard data processing inequality of conditional mutual information. In this way, the unique information data processing inequality increases the capability to eliminate hidden variables in order to obtain testable groups-decomposition inequalities. Accordingly, the groups-decomposition inequalities we derive can contain unique information terms apart from the standard mutual information and entropy measures that appear when considering the constraints of the Shannon entropic cone [23,24].

We envisage the application of the causally informative tests here proposed in the following way. Given a data set, a hypothesized causal structure is selected to test its compatibility with the data. First, the set of inequality constraints enforced by that causal structure is determined. Second, their fulfillment is evaluated from the data and the causal structure is discarded if some inequality does not hold. In the first step, the determination of the set of groups-decomposition inequalities enforced by a causal structure requires at different levels the verification of conditional independencies. This is the case, for example, with the conditional independencies that are necessary conditions for the existence of the first type of inequalities introduced by [27]. If all variables involved were observable, this verification could be conducted directly from the data. However, as mentioned above, we here consider groups-decomposition inequalities that may contain hidden variables as part of the collection of variables, which precludes this direct verification. For this reason, we will work under the assumption that statistical independencies can be assessed from the structure of the causal graph, namely with the graphical criterion of separability between nodes in the graph known as d-separation [36]. That is, we will rely on the assumption that graphical separability is a sufficient condition for statistical independence and hence characterize the set of groups-decomposition inequalities enforced by a causal structure without using the data. Data would only be used in the second step, in which the actual fulfillment of the inequalities is evaluated.

This paper is organized as follows. In Section 2, we review previous work relevant for our contributions. In Section 3.1, we formulate the data processing inequality for the unique information measure. In Section 3.2, we generalize the first type of inequalities of [27], formulating for the conditional case more general conditions of independence for which a groups-decomposition inequality exists. We also apply data processing inequalities to derive testable groups-decomposition inequalities when collections include hidden variables. In Section 3.3, we generalize the second type of inequalities of [27] as outlined above. In Section 4, we discuss the connection of this work with other approaches to causal structure learning and point to future continuations and potential applications. The Appendix contains proofs of the results (Appendix A and Appendix B) and a discussion of the relations between conditional independencies and d-separations required so that the inequalities here derived are applicable to test causal structures (Appendix C).

2. Previous Work on Information-Theoretic Measures and Causal Graphs Relevant for Our Derivations

In this section we review properties of information-theoretic measures and concepts of causal graphs relevant for our work. In Section 2.1, we review basic inequalities of the mutual information and in Section 2.2 the definition and relevant properties of the unique information measure of [29]. We then review in Section 2.3 Directed Acyclic Graphs (DAGs) and their relation to conditional independence through the graphical criterion of d-separation [36,37]. Finally, we review the inequalities introduced by [27] to test causal structures from information decompositions involving sums of groups of variables (Section 2.4). We do not aim to more broadly review other types of information-theoretic inequalities [23,24] also used for causal inference. The relation with these other types will be considered in the Discussion.

2.1. Mutual Information Inequalities Associated with Independencies

We present in Lemma 1 two well-known inequalities that will be used in our derivations. This lemma corresponds to Lemma 1 in [27]. For completeness, we provide the proof of the lemma.

Lemma 1.

The mutual information fulfills the following inequalities in the presence of the corresponding independencies:

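(i) (Conditional mutual information data processing inequality): Let $\mathbf{X}$, $\mathbf{Y}$, $\mathbf{Z}$, and $\mathbf{W}$ be four sets of variables. If $\mathbf{X} \perp \mathbf{Z} \mid \mathbf{W}, \mathbf{Y}$, then it follows that $I(\mathbf{X}; \mathbf{Z} \mid \mathbf{W}) \leq I(\mathbf{X}; \mathbf{Y} \mid \mathbf{W})$.

(ii) (Increase through conditioning on independent sets): Let $\mathbf{X}$, $\mathbf{Y}$, $\mathbf{Z}$, and $\mathbf{W}$ be four sets of variables. If $\mathbf{X} \perp \mathbf{Z} \mid \mathbf{W}$, then $I(\mathbf{X}; \mathbf{Y} \mid \mathbf{W}) \leq I(\mathbf{X}; \mathbf{Y} \mid \mathbf{W}, \mathbf{Z})$.

Proof.

(i) is proven by applying, in two different orders, the chain rule of the mutual information to $I(\mathbf{X}; \mathbf{Y}, \mathbf{Z} \mid \mathbf{W})$:

$$I(\mathbf{X}; \mathbf{Y}, \mathbf{Z} \mid \mathbf{W}) = I(\mathbf{X}; \mathbf{Y} \mid \mathbf{W}) + I(\mathbf{X}; \mathbf{Z} \mid \mathbf{W}, \mathbf{Y}) = I(\mathbf{X}; \mathbf{Z} \mid \mathbf{W}) + I(\mathbf{X}; \mathbf{Y} \mid \mathbf{W}, \mathbf{Z}).$$

Since $I(\mathbf{X}; \mathbf{Z} \mid \mathbf{W}, \mathbf{Y}) = 0$ and the mutual information is non-negative, this implies the inequality. To prove (ii), the chain rule is applied in different orders to the same term:

$$I(\mathbf{X}; \mathbf{Y}, \mathbf{Z} \mid \mathbf{W}) = I(\mathbf{X}; \mathbf{Z} \mid \mathbf{W}) + I(\mathbf{X}; \mathbf{Y} \mid \mathbf{W}, \mathbf{Z}) = I(\mathbf{X}; \mathbf{Y} \mid \mathbf{W}) + I(\mathbf{X}; \mathbf{Z} \mid \mathbf{W}, \mathbf{Y}).$$

Since $I(\mathbf{X}; \mathbf{Z} \mid \mathbf{W}) = 0$ and the mutual information is non-negative, this implies the inequality. □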
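As a numerical sanity check of Lemma 1 (not part of the original derivation), the following minimal sketch computes conditional mutual information in bits from a joint probability table and evaluates both inequalities on two toy binary distributions, taking the conditioning set $\mathbf{W}$ as empty. The distributions (a Markov chain built from noisy bit flips, and an exclusive-OR of two independent bits) and all helper names are illustrative assumptions.

```python
import itertools
from collections import defaultdict
from math import log2


def entropy(p, idx):
    """Joint entropy (bits) of the variables at positions idx; p maps outcome tuples to probabilities."""
    marg = defaultdict(float)
    for outcome, prob in p.items():
        marg[tuple(outcome[i] for i in idx)] += prob
    return -sum(q * log2(q) for q in marg.values() if q > 0)


def cmi(p, a, b, c=()):
    """I(A;B|C) = H(A,C) + H(B,C) - H(A,B,C) - H(C), in bits."""
    a, b, c = tuple(a), tuple(b), tuple(c)
    return entropy(p, a + c) + entropy(p, b + c) - entropy(p, a + b + c) - entropy(p, c)


def noisy_chain(e1, e2):
    """Hypothetical chain: X uniform bit, Y = X flipped w.p. e1, Z = Y flipped w.p. e2 (positions 0, 1, 2)."""
    p = defaultdict(float)
    for x, f1, f2 in itertools.product((0, 1), repeat=3):
        y = x ^ f1
        p[(x, y, y ^ f2)] += 0.5 * (e1 if f1 else 1 - e1) * (e2 if f2 else 1 - e2)
    return dict(p)


def xor_of_independent_bits():
    """Hypothetical gate: X and Z independent uniform bits, Y = X xor Z (positions 0, 1, 2)."""
    return {(x, x ^ z, z): 0.25 for x, z in itertools.product((0, 1), repeat=2)}


# (i) X is independent of Z given Y in the chain, so I(X;Z) <= I(X;Y).
chain = noisy_chain(0.1, 0.2)
print(cmi(chain, [0], [2], [1]), cmi(chain, [0], [2]), cmi(chain, [0], [1]))

# (ii) X is independent of Z in the gate, so I(X;Y) <= I(X;Y|Z).
gate = xor_of_independent_bits()
print(cmi(gate, [0], [1]), cmi(gate, [0], [1], [2]))
```

The exclusive-OR example shows that inequality (ii) can be strict: $Y$ carries no information about $X$ on its own, but one full bit once $Z$ is conditioned on.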

2.2. Definition and Properties of the Unique Information

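The concept of unique information as part of a decomposition of the joint mutual information that a set of predictor variables has about a (possibly multivariate) target variable was introduced in [30]. In the simplest case of two predictors $\mathbf{X}_1$ and $\mathbf{X}_2$, this framework decomposes the joint mutual information $I(\mathbf{Y}; \mathbf{X}_1, \mathbf{X}_2)$ about a target $\mathbf{Y}$ into four terms, namely the redundancy of $\mathbf{X}_1$ and $\mathbf{X}_2$, the unique information of $\mathbf{X}_1$ with respect to $\mathbf{X}_2$, the unique information of $\mathbf{X}_2$ with respect to $\mathbf{X}_1$, and the synergy between $\mathbf{X}_1$ and $\mathbf{X}_2$. The predictors share the redundant component, the synergistic one is only obtained by combining the predictors, and unique components are exclusive to each predictor. Several information measures have been proposed to define this decomposition, aiming to comply with a set of desirable properties that were not all fulfilled by the original proposal [29,31,32,33]. However, in this work we will not study the whole decomposition but specifically apply the bivariate measure of unique information introduced in [29]. In Section 3.1, we derive a data processing inequality for this measure, and in Section 3.2 we show how it can help to obtain testable groups-decomposition inequalities for causal structures for which the standard data processing inequality of the mutual information would not allow the elimination of hidden variables. In this section, we review the definition of the unique information measure of [29], provide a straightforward generalization to a conditional unique information measure, and state a monotonicity property that will be used to derive the data processing inequality of the unique information. The unique information of $\mathbf{X}_1$ with respect to $\mathbf{X}_2$ about $\mathbf{Y}$ was defined as

$$UI(\mathbf{Y}; \mathbf{X}_1 \backslash\backslash \mathbf{X}_2) = \min_{Q \in \Delta_P} I_Q(\mathbf{Y}; \mathbf{X}_1 \mid \mathbf{X}_2), \tag{1}$$

where $\Delta_P$ is defined as the set of distributions on $(\mathbf{Y}, \mathbf{X}_1, \mathbf{X}_2)$ that preserve the marginals $P(\mathbf{Y}, \mathbf{X}_1)$ and $P(\mathbf{Y}, \mathbf{X}_2)$ of the original distribution $P(\mathbf{Y}, \mathbf{X}_1, \mathbf{X}_2)$. The notation $I_Q$ is used to indicate that the mutual information is calculated on the probability distribution Q. We use $UI(\mathbf{Y}; \mathbf{X}_1 \backslash\backslash \mathbf{X}_2)$ to refer to the unique information of $\mathbf{X}_1$ with respect to $\mathbf{X}_2$, compared to $I(\mathbf{Y}; \mathbf{X}_1 \mid \mathbf{X}_2)$, which is the standard conditional information of $\mathbf{X}_1$ given $\mathbf{X}_2$. We use this notation instead of the notation $UI(\mathbf{Y}; \mathbf{X}_1 \setminus \mathbf{X}_2)$ introduced by [29] to differentiate it from the set notation $\mathbf{X}_1 \setminus \mathbf{X}_2$, which indicates the subset of variables in $\mathbf{X}_1$ that is not contained in $\mathbf{X}_2$, since we will also be using this set notation. This unique information measure is a maximum entropy measure, since all distributions within $\Delta_P$ preserve the conditional entropy $H(\mathbf{Y} \mid \mathbf{X}_2)$, and hence the minimization is equivalent to a maximization of the conditional entropy $H_Q(\mathbf{Y} \mid \mathbf{X}_1, \mathbf{X}_2)$. The rationale that supports this definition is that the unique information of $\mathbf{X}_1$ with respect to $\mathbf{X}_2$ about $\mathbf{Y}$ has to be determined by the marginal probabilities $P(\mathbf{Y}, \mathbf{X}_1)$ and $P(\mathbf{Y}, \mathbf{X}_2)$, and cannot depend on any additional structure of the joint distribution beyond these two marginals [29]. This additional contribution is removed by minimizing $I_Q(\mathbf{Y}; \mathbf{X}_1 \mid \mathbf{X}_2)$ within $\Delta_P$.

In a straightforward generalization, we define the conditional unique information given another set of variables $\mathbf{Z}$ as

$$UI(\mathbf{Y}; \mathbf{X}_1 \backslash\backslash \mathbf{X}_2 \mid \mathbf{Z}) = \min_{Q \in \Delta_P} I_Q(\mathbf{Y}; \mathbf{X}_1 \mid \mathbf{X}_2, \mathbf{Z}), \tag{2}$$

where $\Delta_P$ is now the set of distributions on $(\mathbf{Y}, \mathbf{X}_1, \mathbf{X}_2, \mathbf{Z})$ that preserve the marginals $P(\mathbf{Y}, \mathbf{X}_1, \mathbf{Z})$ and $P(\mathbf{Y}, \mathbf{X}_2, \mathbf{Z})$ of the original $P(\mathbf{Y}, \mathbf{X}_1, \mathbf{X}_2, \mathbf{Z})$. By construction [29], the conditional unique information is bounded as

$$0 \leq UI(\mathbf{Y}; \mathbf{X}_1 \backslash\backslash \mathbf{X}_2 \mid \mathbf{Z}) \leq \min\{ I(\mathbf{Y}; \mathbf{X}_1 \mid \mathbf{Z}),\ I(\mathbf{Y}; \mathbf{X}_1 \mid \mathbf{X}_2, \mathbf{Z}) \}. \tag{3}$$

This is consistent with the intuition of the decomposition that the unique information is a component exclusive to $\mathbf{X}_1$. In Lemma 2, we present a type of monotonicity fulfilled by the conditional unique information. This result is a straightforward extension to the conditional case of the one stated in Lemma 3 of [38]. We include the full proof because it will be useful in the Results section to prove a related data processing inequality for the unique information. To better suit our subsequent use of notation, we consider the two predictors to be $\mathbf{X}$ and $\mathbf{W}$, and the conditioning set to be $\mathbf{Z}$.

Lemma 2.

The maximum entropy conditional unique information is monotonic in its second argument, corresponding to the non-conditioning predictor, as follows: for any set of variables $\mathbf{V}$,

$$UI(\mathbf{Y}; \mathbf{X} \backslash\backslash \mathbf{W} \mid \mathbf{Z}) \leq UI(\mathbf{Y}; \mathbf{X}\mathbf{V} \backslash\backslash \mathbf{W} \mid \mathbf{Z}).$$

Proof.

Let $\Delta_P$ be the set of distributions on $(\mathbf{Y}, \mathbf{X}, \mathbf{V}, \mathbf{W}, \mathbf{Z})$ that preserve $P(\mathbf{Y}, \mathbf{X}, \mathbf{V}, \mathbf{Z})$ and $P(\mathbf{Y}, \mathbf{W}, \mathbf{Z})$, and let $\Delta'_P$ be the set of distributions on $(\mathbf{Y}, \mathbf{X}, \mathbf{W}, \mathbf{Z})$ that preserve $P(\mathbf{Y}, \mathbf{X}, \mathbf{Z})$ and $P(\mathbf{Y}, \mathbf{W}, \mathbf{Z})$. Consider the distribution $P(\mathbf{Y}, \mathbf{X}, \mathbf{V}, \mathbf{W}, \mathbf{Z})$ and its marginal $P(\mathbf{Y}, \mathbf{X}, \mathbf{W}, \mathbf{Z})$. Consider any distribution $Q \in \Delta_P$ and its marginal $Q'$ on $(\mathbf{Y}, \mathbf{X}, \mathbf{W}, \mathbf{Z})$. Then $Q' \in \Delta'_P$, because $Q$ preserves $P(\mathbf{Y}, \mathbf{X}, \mathbf{V}, \mathbf{Z})$, and hence its marginal $P(\mathbf{Y}, \mathbf{X}, \mathbf{Z})$, as well as $P(\mathbf{Y}, \mathbf{W}, \mathbf{Z})$. By monotonicity of the mutual information, $I_Q(\mathbf{Y}; \mathbf{X} \mid \mathbf{W}, \mathbf{Z})$ is lower than or equal to $I_Q(\mathbf{Y}; \mathbf{X}\mathbf{V} \mid \mathbf{W}, \mathbf{Z})$. Since $I_Q(\mathbf{Y}; \mathbf{X} \mid \mathbf{W}, \mathbf{Z})$ does not have $\mathbf{V}$ as an argument, it is equal to the information $I_{Q'}(\mathbf{Y}; \mathbf{X} \mid \mathbf{W}, \mathbf{Z})$ calculated on its marginal $Q'$. Since this holds for any distribution in $\Delta_P$, it holds in particular for the distribution $Q^*$ that minimizes $I_Q(\mathbf{Y}; \mathbf{X}\mathbf{V} \mid \mathbf{W}, \mathbf{Z})$ in $\Delta_P$. Since the marginal $Q'^*$ of $Q^*$ belongs to $\Delta'_P$, the minimum of $I_{Q'}(\mathbf{Y}; \mathbf{X} \mid \mathbf{W}, \mathbf{Z})$ in $\Delta'_P$, which is $UI(\mathbf{Y}; \mathbf{X} \backslash\backslash \mathbf{W} \mid \mathbf{Z})$, is equal to or smaller than $I_{Q'^*}(\mathbf{Y}; \mathbf{X} \mid \mathbf{W}, \mathbf{Z})$, and hence equal to or smaller than $I_{Q^*}(\mathbf{Y}; \mathbf{X}\mathbf{V} \mid \mathbf{W}, \mathbf{Z}) = UI(\mathbf{Y}; \mathbf{X}\mathbf{V} \backslash\backslash \mathbf{W} \mid \mathbf{Z})$. □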
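To make the minimization over $\Delta_P$ in the unconditional definition of the unique information of [29] concrete, the following is a minimal brute-force sketch that assumes binary $Y$, $X_1$, $X_2$ and no conditioning set. Once $P(Y, X_1)$ and $P(Y, X_2)$ are fixed, each conditional table $Q(X_1, X_2 \mid y)$ has a single free parameter $t = Q(X_1{=}1, X_2{=}1 \mid y)$, so the sketch scans a grid over the two parameters. This is a didactic substitute for the convex optimization one would use in practice, not the procedure of [29]. Because only grid points are visited, it returns an upper bound on the exact minimum. The function names are hypothetical.

```python
import itertools
from collections import defaultdict
from math import log2


def cond_mi(q, a, b, c):
    """I(A;B|C) in bits; q maps outcome tuples (y, x1, x2) to probabilities."""
    def h(idx):
        marg = defaultdict(float)
        for outcome, prob in q.items():
            marg[tuple(outcome[i] for i in idx)] += prob
        return -sum(v * log2(v) for v in marg.values() if v > 0)
    return h(a + c) + h(b + c) - h(a + b + c) - h(c)


def unique_info_binary(p, steps=100):
    """Grid-search approximation of min over Q in Delta_P of I_Q(Y; X1 | X2)
    for binary Y, X1, X2 (an upper bound on the exact minimum)."""
    p_y, params = {}, {}
    for y in (0, 1):
        p_y[y] = sum(p.get((y, x1, x2), 0.0) for x1 in (0, 1) for x2 in (0, 1))
        if p_y[y] == 0:
            params[y] = (0.0, 0.0, 0.0, 0.0)
            continue
        a = sum(p.get((y, 1, x2), 0.0) for x2 in (0, 1)) / p_y[y]  # P(X1=1 | y)
        b = sum(p.get((y, x1, 1), 0.0) for x1 in (0, 1)) / p_y[y]  # P(X2=1 | y)
        # Feasible range of t = Q(X1=1, X2=1 | y) given both conditional marginals.
        params[y] = (a, b, max(0.0, a + b - 1.0), min(a, b))
    grid = [k / steps for k in range(steps + 1)]
    best = float("inf")
    for s0, s1 in itertools.product(grid, repeat=2):
        q = {}
        for y, s in ((0, s0), (1, s1)):
            a, b, lo, hi = params[y]
            t = lo + s * (hi - lo)
            cells = {(1, 1): t, (1, 0): a - t, (0, 1): b - t, (0, 0): 1.0 - a - b + t}
            for (x1, x2), c in cells.items():
                q[(y, x1, x2)] = p_y[y] * max(c, 0.0)  # clip rounding noise
        best = min(best, cond_mi(q, (0,), (1,), (2,)))  # I_Q(Y; X1 | X2)
    return best


# Purely synergistic case: Y = X1 xor X2 with independent uniform inputs.
xor = {(x1 ^ x2, x1, x2): 0.25 for x1, x2 in itertools.product((0, 1), repeat=2)}
# Purely unique case: Y copies X1, and X2 is an independent uniform bit.
copy = {(x1, x1, x2): 0.25 for x1, x2 in itertools.product((0, 1), repeat=2)}
print(unique_info_binary(xor), unique_info_binary(copy))
```

For the exclusive-OR target, the conditional information $I(Y; X_1 \mid X_2)$ equals one bit, but the unique information is zero: the fully independent distribution lies in $\Delta_P$ and removes the synergistic contribution. For the copy target, the unique information equals one bit, attaining the upper bound in Equation (3).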

2.3. Causal Graphs and Conditional Independencies

We here review basic notions of Directed Acyclic Graphs (DAGs) and the relation between causal structures and dependencies. Consider a set of random variables $\mathbf{V} = \{V_1, \ldots, V_n\}$. A DAG $G = (\mathbf{V}, \mathbf{E})$ consists of nodes $\mathbf{V}$ and directed edges $\mathbf{E}$ between the nodes, without directed cycles. The graph contains one node for each variable $V_i \in \mathbf{V}$. We refer to V as both a variable and its corresponding node. Causal influences can be represented in acyclic graphs given that causal mechanisms are not instantaneous, so that causal loops can be unfolded into separate time-indexed variables. A path in G is a sequence of (at least two) distinct nodes $V_{i_1}, \ldots, V_{i_m}$ such that there is an edge between $V_{i_k}$ and $V_{i_{k+1}}$ for all $k = 1, \ldots, m-1$. If all edges are directed as $V_{i_k} \to V_{i_{k+1}}$, the path is a causal or directed path. A node $V_{i_k}$ is a collider in a path if it has two incoming arrows in the path ($V_{i_{k-1}} \to V_{i_k} \leftarrow V_{i_{k+1}}$), and is a noncollider otherwise. A node $V_i$ is called a parent of $V_j$ if there is an arrow $V_i \to V_j$. The set of parents of $V_j$ is denoted $pa(V_j)$. A node $V_i$ is called an ancestor of $V_j$ if there is a directed path from $V_i$ to $V_j$. Conversely, in this case $V_j$ is a descendant of $V_i$. For convenience, we define the set of ancestors $an(V_j)$ as including $V_j$ itself, and the set of descendants $de(V_j)$ as also containing $V_j$ itself.

The link between generative mechanisms and causal graphs relies on the fact that in the graph a variable $V_i$ is a parent of another variable $V_j$ if and only if it is an argument of an underlying functional equation that captures the mechanisms that generate $V_j$; that is, an argument of $V_j = f_j(pa(V_j), \varepsilon_j)$, where $\varepsilon_j$ captures additional sources of stochasticity exogenous to the system. If a DAG constitutes an accurate representation of the causal mechanisms, a correspondence exists between the conditional independencies that hold between variables in the system and a graphical criterion of separability between the nodes, called d-separation [36]. Two nodes X and Y are d-separated given a set of nodes $\mathbf{Z}$ if and only if no $\mathbf{Z}$-active paths exist between X and Y. A path is active given the conditioning set $\mathbf{Z}$ ($\mathbf{Z}$-active) if no noncollider in the path belongs to $\mathbf{Z}$ and every collider in the path either is in $\mathbf{Z}$ or has a descendant in $\mathbf{Z}$. A causal structure G and a generated probability distribution $P(\mathbf{V})$ are faithful [1,2] to one another when a conditional independence between X and Y given $\mathbf{Z}$, denoted by $X \perp_P Y \mid \mathbf{Z}$, holds if and only if there is no $\mathbf{Z}$-active path between them; that is, if and only if X and Y are d-separated given $\mathbf{Z}$, denoted by $X \perp_G Y \mid \mathbf{Z}$. Accordingly, faithfulness is assumed in the algorithms of causal inference [1,2] that examine conditional independencies to characterize the Markov equivalence class of causal structures that share a common set of independencies. A well-known example of a system that is unfaithful to its causal structure is the exclusive-OR (X-OR) logic gate with independent, uniformly distributed binary inputs, whose output is independent of each input separately but dependent on both inputs jointly.

In contrast to the algorithms that infer Markov equivalence classes, we will show that the applicability of the groups-decomposition inequalities studied here relies on the assumption that d-separability is a sufficient condition for conditional independence. That is, instead of an if-and-only-if relation between d-separability and conditional independence, as required by the faithfulness assumption, it is enough to assume that d-separability implies conditional independence. As we further discuss in Appendix C, this is a substantially weaker assumption, since faithfulness is usually violated due to the presence of independencies that are not implied by the causal structure. This is the case, for example, of the X-OR logic gate, for which faithfulness is violated because the inputs are separately independent of the output despite each having an arrow towards the output in the corresponding causal graph. Conversely, the X-OR gate complies with d-separability being a sufficient condition for independence, since in the graph only the input nodes are d-separated and the corresponding input variables of the X-OR gate are independent. Despite only requiring that d-separability implies independence, to simplify the presentation of our results in the main text we will assume faithfulness and use $\perp$ interchangeably to indicate statistical independence and graphical separability, instead of distinguishing between $\perp_P$ and $\perp_G$. In Appendix C, we will more closely examine how, in the proofs of our results, the sufficient condition of d-separability for conditional independencies is enough.

An important implication of independencies following from d-separability is that, if variables $X_1$ and $X_2$ are separately independent from Y (namely $Y \perp X_1$ and $Y \perp X_2$) because of the lack of any active path between node Y and both nodes $X_1$ and $X_2$, then Y cannot be jointly dependent on $X_1 X_2$; namely, $Y \not\perp X_1 X_2$ cannot occur. This is because d-separability between node Y and the set of nodes $\{X_1, X_2\}$ is determined by separately considering the lack of active paths between Y and each node $X_1$ and $X_2$. Since the set of paths between Y and $\{X_1, X_2\}$ is the union of the paths between Y and each of $X_1$ and $X_2$, considering $X_1 X_2$ jointly does not add new paths that could create a dependence of Y with $X_1 X_2$. A dependence can only be created by conditioning on some other variable, which could activate additional paths by activating a collider.
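The d-separation relations used in this section, including the X-OR graph and the union argument above, can be checked mechanically. The sketch below uses the moralized ancestral graph criterion, a standard equivalent formulation of d-separation, rather than the path-based definition given above: restrict the DAG to the ancestors of all involved nodes, connect co-parents, drop edge directions, and test whether the conditioning set blocks every undirected connection. The graph encoding (a dictionary from each node to its parents) and the function names are assumptions made for illustration.

```python
import itertools
from collections import deque


def ancestral_closure(parents, nodes):
    """The given nodes plus all of their ancestors; parents maps node -> iterable of parents."""
    closure, stack = set(nodes), list(nodes)
    while stack:
        for pa in parents.get(stack.pop(), ()):
            if pa not in closure:
                closure.add(pa)
                stack.append(pa)
    return closure


def d_separated(parents, xs, ys, zs=()):
    """Test whether xs and ys are d-separated given zs (moralized ancestral graph criterion)."""
    xs, ys, zs = set(xs), set(ys), set(zs)
    keep = ancestral_closure(parents, xs | ys | zs)
    adj = {v: set() for v in keep}
    for child in keep:
        pas = list(parents.get(child, ()))
        for pa in pas:  # drop edge directions
            adj[child].add(pa)
            adj[pa].add(child)
        for p1, p2 in itertools.combinations(pas, 2):  # marry co-parents
            adj[p1].add(p2)
            adj[p2].add(p1)
    # Breadth-first search from xs that never enters a conditioning node.
    seen = {v for v in xs if v not in zs}
    frontier = deque(seen)
    while frontier:
        v = frontier.popleft()
        if v in ys:
            return False
        for w in adj[v] - seen - zs:
            seen.add(w)
            frontier.append(w)
    return True


xor_gate = {"Y": ["X1", "X2"]}
print(d_separated(xor_gate, {"X1"}, {"X2"}))         # True: inputs are separated
print(d_separated(xor_gate, {"X1"}, {"X2"}, {"Y"}))  # False: conditioning on the collider
```

In the X-OR graph, only the two inputs are d-separated. The output is never separated from either input, which is why the separate independencies of the X-OR gate violate faithfulness without violating the sufficiency of d-separation.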

2.4. Inequalities for Sums of Information Terms from Groups of Variables

We now review two results in [27] that are at the foundation of our results. The first corresponds to their Proposition 1. We provide a slightly more general formulation that is useful for subsequent extensions.

Proposition 1.

(Decomposition of information from groups with conditionally independent non-shared components): Consider a collection of groups $\mathcal{A} = \{\mathbf{A}_1, \ldots, \mathbf{A}_n\}$, where each group consists of a subset of observable variables $\mathbf{A}_i \subseteq \mathbf{O}$, $\mathbf{O}$ being the set of all observable variables. For every $\mathbf{A}_i$, define $d_i$ as the maximal value such that $\mathbf{A}_i$ has a non-empty intersection when it intersects jointly with $d_i - 1$ other distinct groups out of $\mathcal{A}$. Consider a conditioning set $\mathbf{Z}$ and target variables $\mathbf{Y}$. If each group is conditionally independent given $\mathbf{Z}$ from the non-shared variables in each other group (i.e., $\mathbf{A}_i \perp \mathbf{A}_j \setminus \mathbf{A}_i \mid \mathbf{Z}$ for all $i \neq j$), then the conditional information that $\mathbf{A} = \bigcup_{i=1}^{n} \mathbf{A}_i$ has about the target variables $\mathbf{Y}$ given $\mathbf{Z}$ is bounded from below by

$$I(\mathbf{Y}; \mathbf{A} \mid \mathbf{Z}) \geq \sum_{i=1}^{n} \frac{1}{d_i} I(\mathbf{Y}; \mathbf{A}_i \mid \mathbf{Z}).$$

Proof.

The proof is presented in Appendix A. It is a generalization to the conditional case of the proof of Proposition 1 in [27] and a slight generalization that allows for dependencies to exist between variables shared by two groups as long as dependencies with non-shared variables do not exist. □

An illustration of Proposition 1 for the unconditional case is presented in Figure 3 of [27], together with further discussion. In Section 3.2 we will provide further illustrations for the extensions of Proposition 1 that we introduce. We will use $\mathbf{d}$ to indicate the maximal values $d_i$ for all groups, and we will add a subindex to specify the collection if different collections are compared. A trivial refinement of Proposition 1 would consider the groups $\mathbf{A}_i \setminus \mathbf{Z}$, with their corresponding values $d_i$, for each group. This may lead to a tighter lower bound by decreasing some values in $\mathbf{d}$ if some intersections between groups occur in $\mathbf{Z}$. We do not present this refinement in order to simplify the presentation.
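The coefficient of each group in Proposition 1 (written $d_i$) depends only on how the groups overlap. A joint intersection of $\mathbf{A}_i$ with some other groups is non-empty exactly when a single variable of $\mathbf{A}_i$ lies in all of them. Therefore, $d_i$ equals the largest number of groups, $\mathbf{A}_i$ included, that contain a common variable of $\mathbf{A}_i$. The following sketch computes these coefficients and the weighted sum on the right-hand side of the bound. The function names are hypothetical, and the information values must be supplied by the user.

```python
def intersection_degrees(groups):
    """For each group A_i, the largest number of groups (A_i included) sharing
    one common variable of A_i, i.e., the coefficient d_i of Proposition 1."""
    groups = [frozenset(g) for g in groups]
    return [max((sum(v in other for other in groups) for v in g), default=1) for g in groups]


def groups_lower_bound(infos, degrees):
    """Weighted sum  sum_i I(Y; A_i | Z) / d_i  bounding I(Y; A | Z) from below."""
    if len(infos) != len(degrees):
        raise ValueError("one information value per group is required")
    return sum(info / d for info, d in zip(infos, degrees))


triangle = [{"X1", "X2"}, {"X2", "X3"}, {"X1", "X3"}]
print(intersection_degrees(triangle))                  # [2, 2, 2]
print(groups_lower_bound([2.0, 2.0, 2.0], [2, 2, 2]))  # 3.0
```

As a worked case, assume that $X_1, X_2, X_3$ are independent uniform bits and that $\mathbf{Y} = (X_1, X_2, X_3)$ with $\mathbf{Z}$ empty. Then the independence condition of Proposition 1 holds for the three pairwise groups, each group carries $I(\mathbf{Y}; \mathbf{A}_i) = 2$ bits, and the weighted sum equals 3 bits. This coincides with $I(\mathbf{Y}; \mathbf{A}) = 3$ bits, so the bound is tight in this example.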

The second result from [27] that we will be relying on is their Theorem 1. We present a version that is slightly reduced and modified, which is more convenient in order to relate to our own results.

Theorem 1.

(Decomposition of information in ancestral groups.) Let G be a DAG model that includes nodes corresponding to the variables in a collection of groups , which is a subset of all observable variables . Let be the collection of ancestors of the groups, as determined by G. For every ancestral set of a group, , let be maximal such that there is a non-empty joint intersection of and other distinct ancestral sets out of . Let be a set of target variables. Then the information of about is bounded as

Proof.

The original proof can be found in [27]. □
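The only change in the coefficients with respect to Proposition 1 is that intersections are evaluated between ancestral sets rather than between the groups themselves. The following sketch computes these coefficients for singleton groups in two hypothetical three-variable structures, modelled on the description of Figure 1: one with pairwise hidden common causes (as in Figure 1C) and one with a single hidden common cause (as in Figure 1D). The node names and the parent-dictionary encoding are assumptions made for illustration. Assuming the testable form described below the theorem, with each ancestral term lower bounded by $I(\mathbf{Y}; X_i)$ and $H(\mathbf{Y})$ as the upper bound, the pairwise structure yields weights $1/2$. The single-cause structure only yields weights $1/3$, which can never be violated because each $I(\mathbf{Y}; X_i) \leq H(\mathbf{Y})$.

```python
def ancestral_set(parents, nodes):
    """The given nodes plus all of their ancestors (parents maps node -> iterable of parents)."""
    result, stack = set(nodes), list(nodes)
    while stack:
        for pa in parents.get(stack.pop(), ()):
            if pa not in result:
                result.add(pa)
                stack.append(pa)
    return result


def ancestral_degrees(parents, groups):
    """Coefficients d_i computed from joint intersections among the ancestral sets of the groups."""
    anc = [ancestral_set(parents, g) for g in groups]
    return [max((sum(v in other for other in anc) for v in a), default=1) for a in anc]


# Pairwise hidden common causes, in the spirit of Figure 1C (hypothetical names).
pairwise = {"X1": ["U12", "U13"], "X2": ["U12", "U23"], "X3": ["U13", "U23"]}
# A single hidden common cause, in the spirit of Figure 1D.
single = {"X1": ["U"], "X2": ["U"], "X3": ["U"]}
groups = [{"X1"}, {"X2"}, {"X3"}]
print(ancestral_degrees(pairwise, groups))  # [2, 2, 2]
print(ancestral_degrees(single, groups))    # [3, 3, 3]
```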

In contrast to Proposition 1, a generalization to the conditional mutual information is not trivial and will be developed in Section 3.3. We will also propose additional generalizations regarding which graph to use to construct the ancestral sets and conditions to exclude some ancestors from the groups. In their work, [27] conceptualized as corresponding to leaf nodes in the graph, for example providing some noisy measurement of , with being the case of perfect measurement. While this conceptualization guided their presentation, their results were general, and here we will not assume any concrete causal relation between and . We have slightly modified the presentation of Theorem 1 from [27] to add the upper bound and to remove some additional subcases with extra assumptions presented in their work. The upper bound is the standard upper bound of mutual information by entropy [28]. In the Results, we will also be interested in cases in which contains hidden variables, so that cannot be estimated. Given the monotonicity of mutual information, the terms from each ancestral group can be lower bounded by the information in the observable variables within each group and is used as a testable upper bound.

There are two main differences between Proposition 1 and Theorem 1. First, Theorem 1 does not impose conditions of independence for the inequality to hold. Second, in Proposition 1 the value of each group is determined by the overlap between groups, with no influence of the causal structure relating the variables, whereas in Theorem 1 the value depends on the causal structure, since it is determined by the intersections between ancestral sets. Despite these differences, given the relation between causal structure and independencies reviewed in Section 2.3, both types of inequalities can have causal inference power to test the compatibility of certain causal structures with data.
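To illustrate the second difference, the sketch below computes the same kind of counts on ancestral sets instead of on the groups themselves. It assumes a DAG encoded as a dictionary from each node to its set of parents (a hypothetical encoding) and that the ancestral set of a group contains the group's own variables together with all their ancestors. In the hypothetical graph used here, a hidden common parent U of V1 and V2 makes their ancestral sets intersect, so the counts depend on the causal structure even though the groups themselves are disjoint.

```python
from typing import Dict, List, Set

Graph = Dict[str, Set[str]]  # node -> set of parents (hypothetical encoding)


def ancestral_set(nodes: Set[str], parents: Graph) -> Set[str]:
    """The given nodes together with all of their ancestors in the DAG."""
    result, stack = set(nodes), list(nodes)
    while stack:
        for p in parents.get(stack.pop(), set()):
            if p not in result:
                result.add(p)
                stack.append(p)
    return result


def max_intersection_counts(sets: List[Set[str]]) -> List[int]:
    """Largest number of sets (itself included) sharing a common element."""
    return [max((sum(1 for t in sets if v in t) for v in s), default=1) for s in sets]


# Hypothetical DAG: hidden U is a common parent of V1 and V2; W is a parent of V3.
parents: Graph = {"V1": {"U"}, "V2": {"U"}, "V3": {"W"}}
groups = [{"V1"}, {"V2"}, {"V3"}]
ancestral = [ancestral_set(g, parents) for g in groups]
print(max_intersection_counts(groups))     # [1, 1, 1]
print(max_intersection_counts(ancestral))  # [2, 2, 1]
```

With the groups themselves all values equal 1, whereas with the ancestral sets the two groups sharing the hidden parent U get the value 2.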

3. Results

In Section 3.1, we introduce a data processing inequality for the conditional unique information measure of [29]. In Section 3.2, we develop new information inequalities involving groups of variables and examine how data processing inequalities can help to derive testable inequalities in the presence of hidden variables. In Section 3.3, we develop new information inequalities involving ancestral sets. The application of these inequalities to causal structure learning is also discussed. As justified in the proofs of our results (Appendix A and Appendix B) and further discussed in Appendix C, our derivations of groups-decomposition inequalities rely only on the assumption that d-separability implies conditional independence. No further assumptions are used in our work; in particular, our application of the unique information measures of [29] does not require any assumption regarding the precise distribution of the joint mutual information among redundant, unique, and synergistic components.

3.1. Data Processing Inequality for Conditional Unique Information

Proposition 2.

(Conditional unique information data processing inequality): Let , , , , and be five sets of variables. If , then .

Proof.

Let be the original distribution of the variables and define as the set of distributions on that preserve the two marginals and . Let be the marginal of and be the set of distributions that preserve the marginals and . By the definition of unique information (Equation (2))

Equality follows from the chain rule of mutual information. Equality holds because does not depend on and can be calculated with the marginal , marginalizing on . Note that . Since is null, factorizes as . For any distribution , which preserves and , a distribution can be constructed as , such that , since continues to preserve and is preserved by construction. Also by construction, for any created from any . In particular, this holds for the distribution constructed from that minimizes , which determines . The distribution minimizes the first term in the r.h.s. of Equation (4) and, given the non-negativity of mutual information, it also minimizes the second term, hence providing the minimum in . Accordingly, . The monotonicity of unique information on the non-conditioning predictor (Lemma 2) leads to . □

A related data processing inequality has previously been derived for the unconditional unique information in the case of , with [39]. Proposition 2 instead formulates a data processing inequality for the case . When , Proposition 2 states a weaker requirement for the existence of an inequality, given the decomposition axiom of the mutual information [27]. As we will see in Section 3.2, Proposition 2 will allow us to apply the unique information data processing inequality in cases in which . In particular, allows us to obtain a lower bound when contains hidden variables that we want to eliminate in order to have a testable groups-decomposition inequality. In contrast, the application of the standard data processing inequality of the mutual information requires , and hence the two types of data processing inequalities may be applicable in different cases to eliminate . This will become fully apparent in Propositions 5 and 6. Note that this application of the unique information measure of Equation (2) to eliminate hidden variables is not constrained by the role of the measure in the mutual information decomposition or by considerations about which alternative decompositions optimally quantify the different components [30,35].

3.2. Inequalities Involving Sums of Information Terms from Groups

In this section, we extend Proposition 1 in several ways. Propositions 3–6 present successive generalizations, all subsumed by Proposition 6. We present these generalizations progressively to better convey the new elements. Examples for these Propositions are displayed in Figure 2 and Figure 3 and explained in the text after the statement of each Proposition. The figure captions and the main text indicate which Proposition each example illustrates. The objective of these generalizations is twofold: first, to derive new testable inequalities for causal structures for which Proposition 1 does not produce a testable inequality; second, to find inequalities with higher inferential power, even when some inequalities already exist. These objectives are achieved by introducing inequalities with less stringent requirements of conditional independence and by using data processing inequalities to substitute certain variables from , so that the conditions of independence are fulfilled or the number of intersections is reduced and lower values in are obtained. The first extension relaxes the conditions required in Proposition 1:

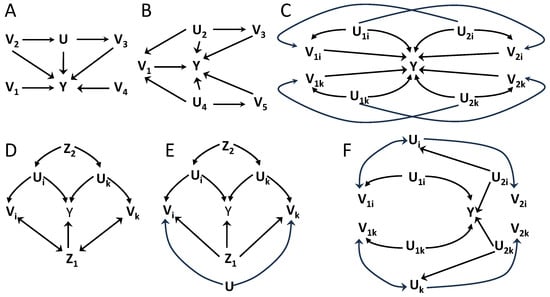

Figure 2.

Examples of applications of Proposition 3 (A–C) and Proposition 4 (D–F) to obtain testable inequalities. The causal graphs allow verifying whether the required conditional independence conditions are fulfilled by using d-separation. Variable Y is the target variable, observable variables are denoted by V, hidden variables by U, and conditioning variables by Z. For all examples, the composition of groups is described in the main text. In graphs using subindexes i, k to display two concrete groups, these are representative of the causal structure shared by all groups that compose the system. In those graphs, variables with no subindex have the same connectivity with all groups. Bidirectional arrows indicate common hidden parents not included in any group.

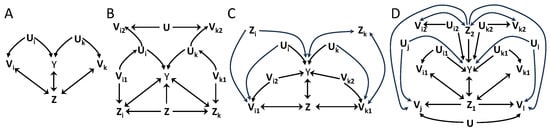

Figure 3.

Examples of the application of Proposition 5 (A–C) and Proposition 6 (D) to obtain testable inequalities. Notation is analogous to Figure 2. The composition of groups is described in the main text.

Proposition 3.

(Weaker conditions of independence through group augmentation for a decomposition of information from groups with conditionally independent non-shared components): Consider a collection of groups , a conditioning set , and target variables as in Proposition 1. Consider that for each group a group exists, such that and can be partitioned into two disjoint subsets such that fulfills the conditions of independence and the conditions , and such that and are disjoint. Define the maximal values as in Proposition 1 but for the augmented groups . Then, the conditional information that has about the target variables given is bounded from below by:

Proof.

The proof is provided in Appendix A. □

The contribution of Proposition 3 is to relax the conditional independence requirements . Analogous conditions remain for , but needs to fulfill the conditions . This means that the variables in are used to separate the variables in from other groups. If is empty for all i, Proposition 3 reduces to Proposition 1.

Another difference between Propositions 1 and 3 regards the role of hidden variables. Assume that each is formed by , where are hidden variables and observable variables. In Proposition 1, the requirement that the variables are observable is not fundamental and could be removed. However, to obtain a testable inequality, monotonicity of mutual information would need to be applied to reduce each term to its estimable lower bound that does not contain the hidden variables . On the other hand, the fulfillment of implies , and reducing to can only decrease the number of intersections, and hence values are equal to or smaller than . Therefore, with Proposition 1, there is no advantage in including hidden variables. When testing Proposition 1 for a hypothesis of the underlying causal structure (and related independencies), it is equally or more powerful to use than .

This changes in Proposition 3, since appears in the conditioning side of the independencies that constrain . If hidden variables within are necessary to create the independencies for , it is not possible to reduce each group to its subset of observable variables. Note that, for a hypothesized causal structure, whether the independence conditions required by Proposition 3 are fulfilled can be verified without observing the hidden variables by using the d-separation criterion on the causal graph, assuming d-separation implies independence. The actual estimation of mutual information values is only needed when testing an inequality from the data.
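Checking the independence conditions on a hypothesized graph requires only a d-separation test, which never touches data, so hidden variables pose no difficulty at this stage. A minimal sketch, assuming the DAG is encoded as a dictionary from each node to its set of parents (a hypothetical encoding): it uses the classical criterion that two sets are d-separated by a third exactly when they are disconnected in the moral graph of the ancestral set of all three after the conditioning nodes are deleted. Reading the result as a conditional independence rests on the assumption that d-separation implies independence.

```python
from itertools import combinations
from typing import Dict, Set

Graph = Dict[str, Set[str]]  # node -> set of parents (hypothetical encoding)


def ancestors_of(nodes: Set[str], parents: Graph) -> Set[str]:
    """Return the given nodes together with all of their ancestors."""
    result, stack = set(nodes), list(nodes)
    while stack:
        for p in parents.get(stack.pop(), set()):
            if p not in result:
                result.add(p)
                stack.append(p)
    return result


def d_separated(xs: Set[str], ys: Set[str], zs: Set[str], parents: Graph) -> bool:
    """True if xs and ys are d-separated given zs (moralized ancestral graph test)."""
    relevant = ancestors_of(xs | ys | zs, parents)
    adj: Dict[str, Set[str]] = {v: set() for v in relevant}
    for child in relevant:
        pa = parents.get(child, set())
        for p in pa:                      # drop edge directions
            adj[child].add(p)
            adj[p].add(child)
        for a, b in combinations(pa, 2):  # "marry" parents of a common child
            adj[a].add(b)
            adj[b].add(a)
    # Delete the conditioning nodes and look for any remaining path from xs to ys.
    seen = set(xs - zs)
    stack = list(seen)
    while stack:
        v = stack.pop()
        if v in ys:
            return False
        for w in adj[v]:
            if w not in zs and w not in seen:
                seen.add(w)
                stack.append(w)
    return True


# Hypothetical graph: hidden U is a common parent of V1 and V2; Y is a child of V1.
graph: Graph = {"V1": {"U"}, "V2": {"U"}, "Y": {"V1"}}
print(d_separated({"Y"}, {"V2"}, set(), graph))  # False: path Y <- V1 <- U -> V2
print(d_separated({"Y"}, {"V2"}, {"U"}, graph))  # True: conditioning on hidden U blocks it
```

The second query conditions on a hidden variable, which is exactly the kind of condition that can be verified on the graph but not estimated from data.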

If includes hidden variables, in general cannot be estimated and is used as an upper bound. For the r.h.s. of the inequality, a lower bound is obtained by monotonicity of the mutual information, removing the hidden variables. In general, a testable inequality has the form

with being the observable variables within each group. In the case that , that is, if the hidden variables do not add information, then a tighter testable upper bound is available using . Importantly, the values are determined using the groups in . Since , group augmentation comes at the price that are equal to or higher than , but the conditional independence requirements may not be fulfilled without it. Note also that the partition is not known a priori but is determined in the process of finding suitable augmented groups that fulfill the conditions.
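The two properties used to make the inequality testable, the monotonicity of conditional mutual information and its upper bound by conditional entropy, can be checked numerically. The sketch below assumes a small hypothetical binary model (names U, V, Y, Z chosen for illustration, not taken from any figure) in which a hidden U is copied by the target and observed through a noisy V. It computes the non-estimable term that includes U, its observable lower bound obtained by dropping U, and the conditional entropy of the target given the conditioning variable, which is assumed here to play the role of the upper bound. It does not implement Equation (5) itself.

```python
import math
from collections import defaultdict
from typing import Dict, Sequence, Tuple

Pmf = Dict[Tuple[int, ...], float]


def entropy(pmf: Pmf, names: Sequence[str], keep: Sequence[str]) -> float:
    """Entropy (bits) of the marginal of pmf over the variables listed in keep."""
    idx = [names.index(k) for k in keep]
    marg: Dict[Tuple[int, ...], float] = defaultdict(float)
    for outcome, p in pmf.items():
        marg[tuple(outcome[i] for i in idx)] += p
    return -sum(p * math.log2(p) for p in marg.values() if p > 0)


def cond_mi(pmf: Pmf, names: Sequence[str], a: Sequence[str],
            b: Sequence[str], c: Sequence[str] = ()) -> float:
    """I(A; B | C) = H(A,C) + H(B,C) - H(A,B,C) - H(C), in bits."""
    av, bv, cv = list(a), list(b), list(c)
    return (entropy(pmf, names, av + cv) + entropy(pmf, names, bv + cv)
            - entropy(pmf, names, av + bv + cv) - entropy(pmf, names, cv))


# Hypothetical model: hidden U is a fair bit, Y copies U, the observed V is U
# flipped with probability 0.1, and Z is an independent fair bit.
names = ("U", "V", "Y", "Z")
pmf: Pmf = {}
for u in (0, 1):
    for flip, p_flip in ((0, 0.9), (1, 0.1)):
        for z in (0, 1):
            pmf[(u, u ^ flip, u, z)] = 0.5 * p_flip * 0.5

full = cond_mi(pmf, names, ["Y"], ["V", "U"], ["Z"])   # not estimable if U is hidden
observable = cond_mi(pmf, names, ["Y"], ["V"], ["Z"])  # testable lower bound
bound = entropy(pmf, names, ["Y", "Z"]) - entropy(pmf, names, ["Z"])  # H(Y | Z)
print(round(observable, 3), round(full, 3), round(bound, 3))  # 0.531 1.0 1.0
```

Dropping the hidden U loses about 0.47 bits here, which is why the testable inequality is weaker than the one involving the full groups.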

We examine some examples before further generalizations. Throughout all figures, we will read independencies from the causal structures using d-separation, assuming faithfulness. In Figure 2A, consider groups and , and . Proposition 1 is not applicable due to  . Augmenting the groups to , , and the conditions of Proposition 3 are fulfilled, as can be verified by d-separation. Coefficients are determined by due to the intersection of the groups in U. Note that hidden variables are not restricted to be hidden common ancestors, and here U is a mediator between and . In Figure 2B, consider groups , , , which do not fulfill the conditions of Proposition 1. Augmenting the groups to , , , , , and the conditions are fulfilled. Maximal intersection values are . In both examples the upper bound is since cannot be estimated due to hidden variables.

We also consider scenarios with more groups. Figure 2C represents groups organized in pairs, with subindexes indicating two particular pairs. The groups are defined in pairs, with and , . The causal structure is the same across pairs, but the mechanisms generating the variables beyond the causal structure may differ. The conditions of Proposition 1 are not fulfilled since  . Groups can be augmented to , , for . Proposition 3 then holds with for all groups. The pairs of groups contribute to the sum as , which in the testable inequality of the form of Equation (5) reduces to . The upper bound to the sum of terms is . This inequality provides causal inference power because for all j is not directly testable. As previously indicated, the inference power of an inequality stems from the possibility of discarding causal structures that do not fulfill it. Note that for this system an alternative is to define N groups instead of groups, each as . In this case Proposition 1 is already applicable with the coefficients being all 1, since for all . For this inequality, each of the N groups contributes with , and since there are no hidden variables the l.h.s. is . However, this latter inequality holds for any causal structure that fulfills for all . Given that these independencies do not involve hidden variables, they are directly testable from data, so that the latter inequality does not provide additional inference power, in contrast to the former one.

We now continue with further generalizations. Group augmentation in Proposition 3 cannot decrease the maximal numbers of intersections. We next describe how the data processing inequalities in Lemma 1 and Proposition 2 can be used to substitute variables within the groups, potentially reducing the number of intersections. We start with the data processing inequality for the conditional mutual information.

Proposition 4.

(Decomposition of information from groups modified with the conditional mutual information data processing inequality): Consider a collection of groups , a conditioning set , and target variables as in Proposition 1. Consider that for some group a group exists such that , with . Define as the collection of groups that replaces by for those following the previous independence condition. If fulfills the conditions of Proposition 3, the inequality derived for also provides an upper bound for the sum of the information provided by the groups in :

Proof.

The proof applies Proposition 3 to followed by the data processing inequality of Lemma 1 to each term within the sum in which and are different. Given that implies , the sum of these terms is also smaller than or equal to the corresponding sum. □
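The substitution step rests on a standard consequence of the chain rule: if the target Y is conditionally independent of a group A given a substitute group B and the conditioning set Z, then I(Y; A | Z) ≤ I(Y; A, B | Z) = I(Y; B | Z). Assuming Lemma 1 takes this standard form, the sketch below checks it numerically on a hypothetical binary model with Y → B → A, where Z modulates the noise of the Y → B channel. The variable names and noise levels are illustrative assumptions only.

```python
import math
from typing import Dict, Sequence, Tuple

Pmf = Dict[Tuple[int, ...], float]


def entropy(pmf: Pmf, names: Sequence[str], keep: Sequence[str]) -> float:
    """Entropy (bits) of the marginal of pmf over the variables in keep."""
    idx = [names.index(k) for k in keep]
    marg: Dict[Tuple[int, ...], float] = {}
    for outcome, p in pmf.items():
        key = tuple(outcome[i] for i in idx)
        marg[key] = marg.get(key, 0.0) + p
    return -sum(p * math.log2(p) for p in marg.values() if p > 0)


def cond_mi(pmf: Pmf, names: Sequence[str], a: Sequence[str],
            b: Sequence[str], c: Sequence[str] = ()) -> float:
    """I(A; B | C) in bits via H(A,C) + H(B,C) - H(A,B,C) - H(C)."""
    av, bv, cv = list(a), list(b), list(c)
    return (entropy(pmf, names, av + cv) + entropy(pmf, names, bv + cv)
            - entropy(pmf, names, av + bv + cv) - entropy(pmf, names, cv))


# Hypothetical model: fair bits Y and Z; B is a noisy copy of Y whose noise level
# depends on Z; A is a noisy copy of B, so Y is independent of A given (B, Z).
names = ("Y", "B", "A", "Z")
pmf: Pmf = {}
for z in (0, 1):
    eps_b = 0.2 if z == 0 else 0.05
    for y in (0, 1):
        for fb in (0, 1):
            for fa in (0, 1):
                b = y ^ fb
                a = b ^ fa
                pmf[(y, b, a, z)] = 0.25 * (eps_b if fb else 1 - eps_b) * (0.1 if fa else 0.9)

i_ya_z = cond_mi(pmf, names, ["Y"], ["A"], ["Z"])         # term for the original group
i_yb_z = cond_mi(pmf, names, ["Y"], ["B"], ["Z"])         # term for the substitute group
i_ya_bz = cond_mi(pmf, names, ["Y"], ["A"], ["B", "Z"])   # ~0 by construction
print(i_ya_z <= i_yb_z, abs(i_ya_bz) < 1e-9)  # True True
```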

Proposition 3 envisaged cases in which the conditions of independence of Proposition 1 were not fulfilled for a collection and augmentation allowed fulfilling weaker conditions, even if with higher values compared to . Proposition 4 is useful not only when the conditions of independence are not fulfilled for , but more generally if some values in are lower than in , hence providing a tighter inequality. Including hidden variables in is beneficial when replacing observed by hidden variables leads to fewer intersections. The procedures of Propositions 3 and 4 can be combined, that is, starting with that contains only observable variables, a new collection can be constructed by adding new variables and removing others from , ending with that contains both observable and hidden variables. The collection fulfilling the conditions of Proposition 3 may even contain only hidden variables, and a testable inequality is obtained as long as the data processing inequality allows calculating observable lower bounds for all terms in the sum.

Figure 2D–F are examples of Proposition 4. Again we consider cases with N groups with equal causal structure and use indexes to represent two concrete groups. In Figure 2D, with , Proposition 3 does not apply for conditioning on because  , for all . However, given that , each can be replaced to build , and since , for all Proposition 3 applies after using Proposition 4 to create . A testable inequality is derived with upper bound and a sum of terms , each being a lower bound of given the data processing inequality that follows from . The coefficients are . Therefore, in this case Proposition 4 results in an inequality when no inequality held for . In Figure 2E, the same procedure relies on and to use to create a testable inequality with l.h.s. and the sum of terms in the r.h.s. with . Note that by U, which has no subindex, we represent in Figure 2E a hidden common driver of all N groups, not only the displayed . In this example Proposition 3 could have been directly applied without using Proposition 4 if augmenting to , with and , since . However, , since all groups intersect in U. Therefore, in this case an inequality already exists without applying Proposition 4, but its use allows replacing by , hence creating a tighter inequality with higher causal inference power.

In Figure 2F, we again consider groups, consisting of N pairs with the same causal structure across pairs and indices representing two of these pairs. For groups , with and , Proposition 3 is directly applicable for and , with . The data processing inequalities associated with allow applying Proposition 4 to obtain an inequality for the groups , which .

Proposition 4 relies on the data processing inequality of the conditional mutual information. The data processing inequality of unique information can also be used for the same purpose, and both data processing inequalities can be combined applying them to different groups.

Proposition 5.

(Decomposition of information from groups modified using across different groups the conditional or unique information data processing inequality): Consider a collection of groups , a conditioning set , and target variables as in Proposition 1. Consider a subset of groups such that for a group exists such that, for some , , with . Define as the collection of groups that replaces by for those following the previous independence conditions. Define for the unaltered groups and for all groups. If fulfills the conditions of Proposition 3, the inequality derived for also provides an upper bound for a sum combining conditional and unique information terms for different groups in :

Proof.

The proof applies Proposition 3 to and then both types of data processing inequalities depending on which one holds for different groups:

Inequality follows from the unique information always being equal to or smaller than the conditional mutual information (Equation (3)). Inequality applies the conditional mutual information data processing inequality to those groups with different than but , and the unique information data processing inequality to those groups with . □
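The first inequality in this proof uses that the unique information never exceeds the corresponding conditional mutual information (Equation (3)). For intuition only, the sketch below approximates, by brute force over binary variables, a unique information of the form min over Q of I_Q(Y; X1 | X2), where Q ranges over distributions preserving the target-predictor marginals P(Y, X1) and P(Y, X2). This form is an assumption suggested by the construction in the proof of Proposition 2; the sketch treats only the unconditional case, without a conditioning set, and uses a grid search instead of a proper convex solver, so it returns an upper approximation of the minimum. Because P itself is always feasible, the returned value cannot exceed I(Y; X1 | X2) evaluated under P.

```python
import math
from itertools import product
from typing import Dict, Iterable, Tuple

Pmf = Dict[Tuple[int, int, int], float]  # keys are binary (y, x1, x2)


def _h(probs: Iterable[float]) -> float:
    """Shannon entropy in bits, ignoring (numerically) zero cells."""
    return -sum(p * math.log2(p) for p in probs if p > 1e-15)


def _marg(q: Pmf, keep: Tuple[int, ...]) -> Dict[Tuple[int, ...], float]:
    m: Dict[Tuple[int, ...], float] = {}
    for k, p in q.items():
        key = tuple(k[i] for i in keep)
        m[key] = m.get(key, 0.0) + p
    return m


def cmi_y_x1_given_x2(q: Pmf) -> float:
    """I(Y; X1 | X2) = H(Y,X2) + H(X1,X2) - H(Y,X1,X2) - H(X2), in bits."""
    return (_h(_marg(q, (0, 2)).values()) + _h(_marg(q, (1, 2)).values())
            - _h(q.values()) - _h(_marg(q, (2,)).values()))


def unique_info_grid(p: Pmf, steps: int = 100) -> float:
    """Grid approximation of min_Q I_Q(Y; X1 | X2) over binary Q that
    preserve P(Y, X1) and P(Y, X2)."""
    margins, grids = {}, {}
    for y in (0, 1):
        py = sum(p.get((y, a, b), 0.0) for a in (0, 1) for b in (0, 1))
        if py == 0.0:
            margins[y], grids[y] = (0.0, 0.0, 0.0), [0.0]
            continue
        m1 = (p.get((y, 1, 0), 0.0) + p.get((y, 1, 1), 0.0)) / py  # P(X1=1 | y)
        m2 = (p.get((y, 0, 1), 0.0) + p.get((y, 1, 1), 0.0)) / py  # P(X2=1 | y)
        # Q(x1, x2 | y) is a 2x2 table with fixed margins: one free cell t = Q(1, 1 | y).
        lo, hi = max(0.0, m1 + m2 - 1.0), min(m1, m2)
        margins[y] = (py, m1, m2)
        grids[y] = [lo + (hi - lo) * i / steps for i in range(steps + 1)]
        grids[y].append(p.get((y, 1, 1), 0.0) / py)  # P itself is always feasible
    best = math.inf
    for t0, t1 in product(grids[0], grids[1]):
        q: Pmf = {}
        for y, t in ((0, t0), (1, t1)):
            py, m1, m2 = margins[y]
            cells = {(1, 1): t, (1, 0): m1 - t, (0, 1): m2 - t, (0, 0): 1.0 - m1 - m2 + t}
            for (a, b), c in cells.items():
                q[(y, a, b)] = py * max(c, 0.0)  # clip tiny negative rounding errors
        best = min(best, cmi_y_x1_given_x2(q))
    return best


copy = {(y, y, x2): 0.25 for y in (0, 1) for x2 in (0, 1)}        # Y copies X1
xor = {(x1 ^ x2, x1, x2): 0.25 for x1 in (0, 1) for x2 in (0, 1)}  # Y = X1 xor X2
for name, p in (("copy", copy), ("xor", xor)):
    print(name, unique_info_grid(p), cmi_y_x1_given_x2(p))
```

When Y copies X1 and X2 is independent, the feasible set contains only P and both quantities equal 1 bit; for the XOR example, the fully independent distribution is feasible, so the unique information drops to 0 while the conditional mutual information is 1 bit.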

Proposition 5 is useful when the conditions of independence required to apply Proposition 3 do not hold for . It can also be useful to obtain inequalities with higher causal inferential power if are smaller than , even if Proposition 3 is directly applicable. By definition, the terms are equal to or smaller than , which can only decrease the lower bound, but the data processing inequality may hold only for the unique information and not the conditional information term. Note that the partition can be group-specific and selected such that data processing inequalities can be applied.

Figure 3A shows an example of the application of the data processing inequality of unique information. For , Proposition 3 does not apply to because  . The data processing inequality of conditional mutual information does not hold with

. The data processing inequality of conditional mutual information does not hold with  . This data processing inequality could be used adding to the latent common parent in , but this variable would be shared by all augmented groups , leading to an intersection of all N groups. Alternatively, the data processing inequality holds for the unique information with , and for all . Proposition 5 is applied with , , and , . This leads to an inequality with as upper bound and the sum of terms at the r.h.s. with coefficients determined by . In Figure 3B, taking and defining the conditioning set , we have

. This data processing inequality could be used adding to the latent common parent in , but this variable would be shared by all augmented groups , leading to an intersection of all N groups. Alternatively, the data processing inequality holds for the unique information with , and for all . Proposition 5 is applied with , , and , . This leads to an inequality with as upper bound and the sum of terms at the r.h.s. with coefficients determined by . In Figure 3B, taking and defining the conditioning set , we have  and

and  . On the other hand, , so that the data processing can be applied with the unique information and Proposition 5 is applied with , and . An inequality exists given that , and the testable inequality has an upper bound and at the r.h.s. the sum of terms , with .

. On the other hand, , so that the data processing can be applied with the unique information and Proposition 5 is applied with , and . An inequality exists given that , and the testable inequality has an upper bound and at the r.h.s. the sum of terms , with .

. The data processing inequality of conditional mutual information does not hold with

. The data processing inequality of conditional mutual information does not hold with  . This data processing inequality could be used adding to the latent common parent in , but this variable would be shared by all augmented groups , leading to an intersection of all N groups. Alternatively, the data processing inequality holds for the unique information with , and for all . Proposition 5 is applied with , , and , . This leads to an inequality with as upper bound and the sum of terms at the r.h.s. with coefficients determined by . In Figure 3B, taking and defining the conditioning set , we have

. This data processing inequality could be used adding to the latent common parent in , but this variable would be shared by all augmented groups , leading to an intersection of all N groups. Alternatively, the data processing inequality holds for the unique information with , and for all . Proposition 5 is applied with , , and , . This leads to an inequality with as upper bound and the sum of terms at the r.h.s. with coefficients determined by . In Figure 3B, taking and defining the conditioning set , we have  and

and  . On the other hand, , so that the data processing can be applied with the unique information and Proposition 5 is applied with , and . An inequality exists given that , and the testable inequality has an upper bound and at the r.h.s. the sum of terms , with .

. On the other hand, , so that the data processing can be applied with the unique information and Proposition 5 is applied with , and . An inequality exists given that , and the testable inequality has an upper bound and at the r.h.s. the sum of terms , with .In Figure 3C, we examine an example in which groups differ in the causal structure of the conditioning variable : For the groups of the type of group i, is a common parent of Y and . For the groups of the type of k, is a collider in a path between Y and . Consider M groups of the former type and of the latter. We examine the existence of an inequality for groups defined as , with . Proposition 3 cannot be applied to because  for all . The mutual information data processing inequality is not applicable to substitute because

for all . The mutual information data processing inequality is not applicable to substitute because  . However, for the M groups like i, the independence leads to the data processing inequality . For these groups, and . For the groups like k, the independence leads to . For these groups and . In all cases the modified groups are , which fulfill the requirement for all needed to apply Proposition 3. The testable inequality that follows from Proposition 5 has upper bound and in the sum at the r.h.s. has M terms of the form and terms of the form . The coefficients are determined by .

. However, for the M groups like i, the independence leads to the data processing inequality . For these groups, and . For the groups like k, the independence leads to . For these groups and . In all cases the modified groups are , which fulfill the requirement for all needed to apply Proposition 3. The testable inequality that follows from Proposition 5 has upper bound and in the sum at the r.h.s. has M terms of the form and terms of the form . The coefficients are determined by .

Proposition 5 combines both types of data processing inequalities, but only across different groups. Our last extension of Proposition 1 combines both types across and within groups. For each group, we introduce a disjoint partition into subgroups and define . Subgroups are analogously defined for , also with . For any ordered set of vectors, we use to refer to all elements up to k, where can in general be nonempty.

Proposition 6.

(Decomposition of information from groups modified with the conditional or unique information data processing inequality across and within groups): Consider a collection of groups , a conditioning set , and a target variable as in Proposition 1. Consider that for each group there are disjoint partitions and , and a collection of sets of additional variables , such that for . Define the collection with the modified groups . If fulfills the conditions of Proposition 3, the inequality derived for also provides an upper bound for sums combining conditional and unique information terms for different groups in :

for .

Proof.

The proof is provided in Appendix A. □

If for all i, then , , , and Proposition 6 reduces to Proposition 3. If and for all i, we recover Proposition 4, with . If for all i and for some i, we recover Proposition 5, with and . As for the previous propositions, some groups may be unmodified, such that .

The tightest inequality results from maximizing across each term in the sum. In the proof of Proposition 6 in Appendix A we show that, when increasing , the terms are monotonically increasing. However, in general can contain hidden variables, which means that, to obtain a testable inequality, for each each term needs to be substituted by its lower bound that quantifies the information in the subset of observable variables. For each group, the optimal leading to the tightest inequality will depend on the subset of observable variables and the corresponding values of .

Figure 3D shows an example of the application of Proposition 6. As in Figure 3C, there are two types of groups with different causal structures. M groups have the structure of the variables with indexes , and . The other groups have the structure of the variables with indexes , and . The conditioning set selected is . Proposition 3 cannot be applied directly because  for all within the M groups, and

for all within the groups. Proposition 6 applies as follows. For the groups, with , , , and . The independencies for correspond in this case to , for . For the other M groups, with , , , , , , , and . The independencies involved are , for , and , for .

Proposition 6 applies because, with defined as for the groups and for the M groups, the independence requirements of Proposition 3 are fulfilled, in particular for all . The terms for the groups are and are substituted by lower bounds in the testable inequality. For the M groups, we have the following chain of inequalities: ≥≥≥. The first inequality follows from the independence for , the second from the unique information being equal to or smaller than the conditional information, and the third from the independence for . Because a testable inequality can only contain observable variables, for the M groups the terms in the sum can be either or , whichever is higher. The coefficients are determined by , and the resulting testable inequality has upper bound .

Overall, Propositions 4–6 further extend the cases in which groups-decomposition inequalities of the type of Proposition 1 can be derived. Our Proposition 1 extends Proposition 1 of [27] to allow conditioning sets. Proposition 3 further weakens the independence conditions required in Proposition 1. Propositions 4–6 use data processing inequalities to obtain testable inequalities from groups-decompositions derived for collections that include hidden variables, and these can be more powerful than inequalities derived directly from collections without hidden variables. In Figure 2 and Figure 3, we have provided examples of causal structures for which these new groups-decomposition inequalities exist. In all these cases, our groups-decomposition inequalities enlarge the set of inequality tests available to reject hypothesized causal structures underlying the data.

3.3. Inequalities Involving Sums of Information Terms from Ancestral Sets

We now examine inequalities involving ancestral sets as in Theorem 1 of Steudel and Ay [27], which we reviewed in our Theorem 1 (Section 2.4). We extend this theorem in three ways: we allow for a conditioning set, we add flexibility in how ancestral sets are constructed, and we allow the selection of reduced ancestral sets that exclude some variables. As in Theorem 1, we will use to indicate the collection of all ancestral sets in graph G from the collection of groups .

The extension of Theorem 1 to allow for a conditioning set requires an extension of the notion of ancestral set that will be used to determine the coefficients in the inequalities. The intuition for this extension is that conditioning on can introduce new dependencies between groups, in particular when a variable is a common descendant of several ancestral groups, and hence conditioning on it activates paths in which it is a collider. The coefficients need to take into account that common information contributions across ancestral groups can originate from these new dependencies. At the same time, conditioning can also block paths that created dependencies between the ancestral groups. To also account for this, we will not only consider ancestral sets in the original graph G, but in any graph , with . The graph is constructed by removing from G all the outgoing arrows from nodes in . This has an effect equivalent to conditioning on with regard to eliminating dependencies enabled by paths through in which the variables in are noncolliders, since removing those arrows deactivates the paths. To account for these effects of conditioning on , for each we define an augmented ancestral set of the groups as follows:

We then define the set , that is, the set of groups that have some ancestor not independent of some ancestor of that is also an ancestor of , given .

For each , let be the maximal number such that a non-empty intersection exists between and other distinct augmented ancestral sets of . Furthermore, we define as the maximum for all :

We will use to refer to the whole set of maximal values for all groups. If required, we will use to specify that the collection is .

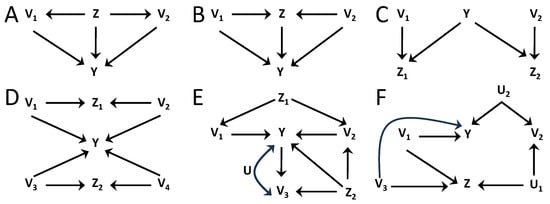

In Figure 4A–D, we consider examples that illustrate how is determined in inequalities with a conditioning set . In Figure 4A, for groups and , the augmented ancestral sets on graph G are and , which intersect on Z, and for . However, Z is a noncollider in the path creating a dependence between and , and conditioning on Z renders them independent, so that overestimates the amount of information the groups may share after conditioning. Alternatively, selecting , the ancestral sets are and , which do not intersect, and for when calculated following Equation (7). A priori, we do not know which graph , , results in a tighter inequality. In the example of Figure 4A, however, leads to an inequality with more causal inference power than G. In Figure 4B, Z is a collider between and , so that conditioning on Z creates a dependence between the groups. If the values were determined from the standard ancestral sets, in this case , for , which do not intersect, this would lead to unit coefficients. However, the augmented ancestral sets following Equation (7) are for , so that . This illustrates that the augmented ancestral sets are necessary to properly determine the coefficients in inequalities with conditioning sets . In this case, they reflect that and can have redundant information.
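
The construction of a graph such as G_Z, which removes every arrow leaving the nodes in the selected conditioning subset, can be sketched in a few lines of Python together with plain (non-augmented) ancestral sets. The DAG below is a hypothetical stand-in for Figure 4A: Z is a common parent, and hence a noncollider, of two single-variable groups S1 and S2. The dictionary representation and function names are assumptions made for illustration. The augmented ancestral sets of Equation (7) are not implemented.

```python
def remove_outgoing(parents, zset):
    """Return G_{Z'}: the DAG `parents` (node -> set of parents) with every
    arrow whose tail lies in zset removed. The input is not modified."""
    return {v: {u for u in pa if u not in zset} for v, pa in parents.items()}


def ancestral_set(parents, group):
    """Return an(S): the nodes of `group` together with all their ancestors."""
    result, stack = set(group), list(group)
    while stack:
        v = stack.pop()
        for u in parents.get(v, ()):
            if u not in result:
                result.add(u)
                stack.append(u)
    return result


# Hypothetical DAG: Z -> S1 and Z -> S2, so Z is a noncollider on S1 <- Z -> S2.
G = {"Z": set(), "S1": {"Z"}, "S2": {"Z"}}
groups = {"S1": {"S1"}, "S2": {"S2"}}

for label, graph in (("G", G), ("G_Z", remove_outgoing(G, {"Z"}))):
    an = {name: ancestral_set(graph, members) for name, members in groups.items()}
    shared = an["S1"] & an["S2"]
    print(label, "shared ancestors:", sorted(shared))
```

In G the two ancestral sets share Z, while in G_Z they are disjoint. This mirrors why selecting G_Z avoids overestimating the shared information in the Figure 4A discussion.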

Figure 4C shows a scenario in which conditioning creates dependencies of Y with and , which were previously independent. The standard ancestral sets and would not intersect in any , with , and would therefore lead to unit values for . On the other hand, the augmented ancestral sets are for and for , for all , with . This results in in all cases, which appropriately captures that the two groups can have common information about Y when conditioning on . The example of Figure 4D illustrates why each value is first determined separately (Equation (7)) and only afterwards is the maximum calculated (Equation (8)). Four groups are defined as for . If were determined directly from Equation (7) but using , instead of using separately and , then for all the ancestral sets the augmented ancestral set would include all variables, since is equal to . This would lead to . However, that determination would overestimate how many groups become dependent when conditioning on , since creates a dependence between and and between and , but no dependencies across these pairs are created. Determining from Equations (7) and (8) therefore leads to a tighter inequality than the one obtained when both conditioning variables are considered jointly.
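
The collider effect behind Figure 4B and Figure 4C is that conditioning on a common descendant can make previously independent variables dependent. This effect can be checked numerically. In the hypothetical Python sketch below, S1 and S2 are independent fair bits and Z = S1 XOR S2 is their common child. The unconditional mutual information between S1 and S2 is zero, while their mutual information conditional on Z is one bit. The distribution is an illustrative assumption, not taken from the figures.

```python
import itertools
import math
from collections import defaultdict


def entropy(p, idx):
    """Shannon entropy in bits of the marginal of pmf p on coordinates idx."""
    marg = defaultdict(float)
    for outcome, prob in p.items():
        marg[tuple(outcome[i] for i in idx)] += prob
    return -sum(q * math.log2(q) for q in marg.values() if q > 0)


def cond_mi(p, a, b, c):
    """I(A; B | C) in bits; an empty c gives the unconditional I(A; B)."""
    return (entropy(p, a + c) + entropy(p, b + c)
            - entropy(p, a + b + c) - entropy(p, c))


# Hypothetical pmf over (S1, S2, Z): independent fair bits and their XOR.
S1, S2, Z = 0, 1, 2
joint = {(s1, s2, s1 ^ s2): 0.25 for s1, s2 in itertools.product((0, 1), repeat=2)}

print("I(S1;S2)   =", cond_mi(joint, [S1], [S2], []))
print("I(S1;S2|Z) =", cond_mi(joint, [S1], [S2], [Z]))
```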

Equipped with this extended definition of , we now present our generalization of Theorem 1:

Theorem 2.

Let G be a DAG model containing nodes corresponding to a set of (possibly hidden) variables . Let be a set of observable target variables, and a conditioning set of observable variables, with . Let be a collection of (possibly overlapping) groups of (possibly hidden) variables . Consider a DAG selected as with , constructed by removing from graph G all the outgoing arrows from nodes in . Following Equation (7), define an augmented ancestral set in for each group and for each variable in the conditioning set, . Following Equation (8), determine for each group, given the intersections of the augmented ancestral sets . Select a variable and a group of variables , possibly . Define the reduced ancestral sets for each , and the reduced collection . The information about in this reduced collection when conditioning on is bounded from below by

Proof.

The proof is provided in Appendix B. □

Theorem 2 provides several extensions of Theorem 1. First, it allows for a conditioning set . Second, given a hypothesis of the generative causal graph G underlying the data, Theorem 2 can be applied to any with , and hence offers a set of inequalities that can potentially add causal inference power. As we have discussed in relation to Figure 4A–D, in some cases the selection of that leads to the tightest inequality is determined by the causal structure alone, but in general it also depends on the exact probability distribution of the variables. Third, Theorem 2 allows excluding some variables from the ancestral sets, subject to constraints on the causal structure of . The role of these constraints becomes clear in the proof in Appendix B. The case of Theorem 1 corresponds to , , and .

Excluding some variables can be advantageous. For example, if is univariate and overlaps with some ancestral sets, as is the case when some groups include descendants of Y, then the upper bound is equal to and also is equal to for all ancestral sets that include Y. Excluding provides a tighter upper bound and may provide more causal inferential power. Another scenario in which a reduced collection can be useful is when excluding removes all hidden variables from , such that is observable, giving as a testable upper bound instead of . When comparing inequalities with different sets , in some cases the form of the causal structure and the specification of which variables are hidden or observable will determine a priori an order of causal inference power among the inequalities. However, as for the comparison across with , in general the power of the different inequalities depends on the details of the generated probability distributions. Formulating general criteria to rank inequalities with different , , and in terms of their inferential power is beyond the scope of this work.
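
The saturation effect described above can be illustrated numerically. Whenever a set contains the target Y, its conditional information about Y equals H(Y | Z), so the corresponding term reaches the bound. A reduced set that excludes Y and keeps only a noisy descendant of it stays strictly below that value. The Python sketch below uses a hypothetical binary distribution in which Y depends on Z and X is a noisy descendant of Y. It shows only this single-term effect, not the full inequality of Theorem 2.

```python
import itertools
import math
from collections import defaultdict


def entropy(p, idx):
    """Shannon entropy in bits of the marginal of pmf p on coordinates idx."""
    marg = defaultdict(float)
    for outcome, prob in p.items():
        marg[tuple(outcome[i] for i in idx)] += prob
    return -sum(q * math.log2(q) for q in marg.values() if q > 0)


def cond_mi(p, a, b, c):
    """I(A; B | C) in bits; coordinate lists may overlap (repeats are harmless)."""
    return (entropy(p, a + c) + entropy(p, b + c)
            - entropy(p, a + b + c) - entropy(p, c))


# Hypothetical pmf over (Y, X, Z): Z is a fair bit, Y depends on Z,
# and X is a noisy descendant of Y.
Y, X, Z = 0, 1, 2
joint = {}
for y, x, z in itertools.product((0, 1), repeat=3):
    p_y = 0.7 if y == z else 0.3
    p_x = 0.9 if x == y else 0.1
    joint[(y, x, z)] = 0.5 * p_y * p_x

h_y_given_z = entropy(joint, [Y, Z]) - entropy(joint, [Z])
with_y = cond_mi(joint, [Y], [Y, X], [Z])   # set containing Y: saturates at H(Y|Z)
without_y = cond_mi(joint, [Y], [X], [Z])   # reduced set with Y excluded
print(f"H(Y|Z)={h_y_given_z:.4f}  with Y={with_y:.4f}  without Y={without_y:.4f}")
```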