Abstract

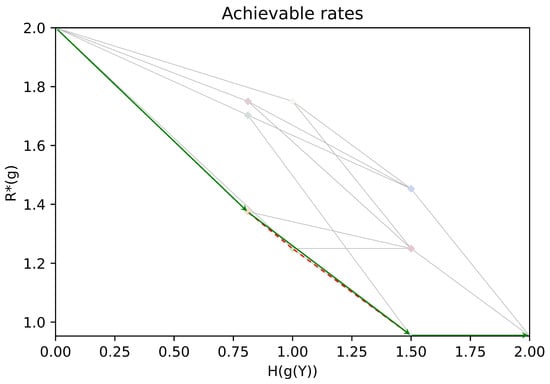

We investigate the zero-error coding for computing problem with encoder side information. An encoder has access to a source $X$ and is furnished with side information $g(Y)$. It communicates with a decoder that possesses side information $Y$ and aims to retrieve $f(X,Y)$ with zero probability of error, where $f$ and $g$ are assumed to be deterministic functions. In previous work, we determined a condition that yields an analytic expression for the optimal rate $R^*(g)$; in particular, it covers the case where $P_{X,Y}$ is full support. In this article, we review this result and study the side information design problem, which consists of finding the best trade-offs between the quality of the encoder's side information and $R^*(g)$. We construct two greedy algorithms, based on partition refining and coarsening, that give an achievable set of points in the side information design problem. One of them runs in polynomial time.

1. Introduction

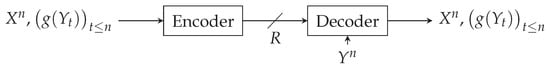

1.1. Zero-Error Coding for Computing

The problem of Figure 1 is a zero-error setting that relates to Orlitsky and Roche's coding for computing problem []. This coding problem appears in video compression [,], where $X^n$ models a set of images known at the encoder. The decoder does not always want to retrieve each whole image. Instead, the decoder receives, for each image $X_t$, a request $Y_t$ to retrieve information $f(X_t, Y_t)$. This information can, for instance, be a detection (cat, dog, car, bike) or a scene recognition (street, city, mountain, etc.). The encoder does not know the decoder's exact request but has prior information about it (e.g., the type of request), which is modeled by $g(Y_t)$. This problem also relates to the open zero-error Slepian–Wolf problem, which corresponds to the special case where $g$ is constant and $f(X,Y) = X$.

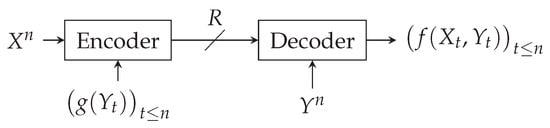

Figure 1.

Zero-error coding for computing with side information at the encoder.

Schemes similar to the one depicted in Figure 1 have already been studied, but they differ from ours in two ways. First, they assume that no side information is available to the encoder. Second, and more importantly, they consider different coding constraints: the lossless case is studied by Orlitsky and Roche in [], the lossy case by Yamamoto in [], and the zero-error "unrestricted inputs" case by Shayevitz in []. These results can be used as bounds for our problem depicted in Figure 1, but they do not exactly characterize its optimal rate.

Numerous extensions of the problem depicted in Figure 1 have been studied recently. The distributed context, for instance, has an additional encoder that encodes Y before transmitting it to the decoder. Achievability schemes have been proposed for this setting by Krithivasan and Pradhan in [] using abelian groups; by Basu et al. in [] using hypergraphs for the case with maximum distortion criterion; and by Malak and Médard in [] using hyperplane separations for the continuous lossless case.

Another related context is the network setting, where a function of source random variables from source nodes has to be retrieved at the sink node of a given network. For tree networks, the feasible rate region is characterized by Feizi and Médard in [] for networks of depth one, and by Sefidgaran and Tchamkerten in [] under a Markov source distribution hypothesis. In [], Ravi and Dey consider a bidirectional relay with zero-error "unrestricted inputs" and characterize the rate region for a specific class of functions. In [], Guang et al. study zero-error function computation on acyclic networks with limited capacities and give an inner bound based on network cut-sets. For both the distributed and the network settings, the zero-error coding for computing problem with encoder side information remains open.

In a previous work [], we determined a condition, which we called "pairwise shared side information", under which the optimal rate has a single-letter expression. This covers many cases of interest, in particular the case where $P_{X,Y}$ is full support, for any functions $f$ and $g$. For the sake of completeness, we review this result. Moreover, we propose an alternative and more interpretable characterization of the "pairwise shared side information" condition. More precisely, we show that the instances where this condition is satisfied correspond to the worst possible optimal rates in an auxiliary zero-error Slepian–Wolf problem.

1.2. Encoder’s Side Information Design

In the zero-error coding for computing problem with encoder side information, it can be observed that a "coarse" encoder side information (e.g., if $g$ is constant) yields a high optimal rate $R^*(g)$, whereas a "fine" encoder side information (e.g., $g = \mathrm{Id}$) yields a low optimal rate $R^*(g)$. The side information design problem consists of determining the best trade-offs between the optimal rate $R^*(g)$ and the quality of the encoder's side information, which is measured by its entropy $H(g(Y))$. This entropy is the optimal rate of a zero-error code that transmits $g(Y)$, i.e., the version of $Y$ quantized by the function $g$. The best trade-offs correspond to the Pareto front of the achievable set, whose corner points cannot be obtained by time sharing between other coding strategies. In short, we aim at determining the Pareto front of the convex hull of the achievable pairs $(H(g(Y)), R^*(g))$.

In this article, we propose two greedy algorithms that give an achievable set of points in the side information design problem when $P_{X,Y}$ is full support. Studying our problem under this hypothesis is interesting because, unlike in the Slepian–Wolf problem, it does not necessarily correspond to a worst-case scenario. Indeed, recall that when $P_{X,Y}$ is full support, the Slepian–Wolf encoder does not benefit from the side information available at the decoder and needs to send $X$. In our problem, instead, if the retrieval function is $f(X,Y) = Y$, no information needs to be sent by the encoder, since the decoder already has access to $Y$, and the optimal rate is 0. The proposed algorithms rely on our results on "pairwise shared side information", which give the optimal rate for all functions $g$, and perform a greedy partition coarsening (resp. refining) when choosing the next achievable point. The coarsening algorithm runs in polynomial time.

This paper is organized as follows. In Section 2, we formally present the zero-error coding for computing problem and the encoder's side information design problem. In Section 3, we give our theoretical results on the zero-error coding for computing problem, including the "pairwise shared side information" condition. In Section 4, we present our greedy algorithms for the encoder's side information design problem.

2. Formal Presentation of the Problem

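We denote sequences by $x^n = (x_1, \dots, x_n)$. The set of probability distributions over $\mathcal{X}$ is denoted by $\Delta(\mathcal{X})$. The distribution of $X$ is denoted by $P_X$, and its support is denoted by $\mathrm{supp}\, P_X$. Given the sequence length $n \in \mathbb{N}^\star$, we denote by $\Delta_n(\mathcal{X})$ the set of empirical distributions of sequences from $\mathcal{X}^n$. We denote by $\{0,1\}^*$ the set of binary words. The collection of subsets of a set $\mathcal{Y}$ is denoted by $\mathcal{P}(\mathcal{Y})$.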

Definition 1.

The zero-error source-coding problem of Figure 1 is described by the following:

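- Four finite sets $\mathcal{U}$, $\mathcal{X}$, $\mathcal{Y}$, $\mathcal{Z}$ and a source distribution $P_{X,Y} \in \Delta(\mathcal{X} \times \mathcal{Y})$.

- For all $n \in \mathbb{N}^\star$, $(X^n, Y^n)$ is the random sequence of $n$ copies of $(X, Y)$, drawn in an i.i.d. fashion using $P_{X,Y}$.

- Two deterministic functions
$$f : \mathcal{X} \times \mathcal{Y} \to \mathcal{U}, \qquad g : \mathcal{Y} \to \mathcal{Z}.$$

- An encoder that knows $(X^n, g(Y)^n)$ and sends binary strings over a noiseless channel to a decoder that knows $Y^n$ and that wants to retrieve $f(X,Y)^n = (f(X_t, Y_t))_{t \leq n}$ without error.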

A coding scheme in this setting is described by:

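- A time horizon $n \in \mathbb{N}^\star$ and an encoding function $\phi_e : \mathcal{X}^n \times \mathcal{Z}^n \to \{0,1\}^*$ such that $\phi_e(\mathcal{X}^n \times \mathcal{Z}^n)$ is prefix-free.

- A decoding function $\phi_d : \{0,1\}^* \times \mathcal{Y}^n \to \mathcal{U}^n$.

- The rate is the average length of the codeword per source symbol, i.e., $R = \frac{1}{n}\mathbb{E}\big[\ell\big(\phi_e(X^n, g(Y)^n)\big)\big]$, where $\ell$ denotes the codeword length function.

- $n$, $\phi_e$, $\phi_d$ must satisfy the zero-error property:
$$\mathbb{P}\Big(\phi_d\big(\phi_e(X^n, g(Y)^n), Y^n\big) \neq f(X,Y)^n\Big) = 0.$$

The minimal rate under the zero-error constraint is defined by
$$R^*(g) = \inf_{\substack{n, \phi_e, \phi_d \\ \text{zero-error}}} \frac{1}{n}\mathbb{E}\big[\ell\big(\phi_e(X^n, g(Y)^n)\big)\big].$$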

The definition of the Pareto front that we give below is adapted to the encoder’s side information design problem and allows us to describe the best trade-off between the quality of the encoder side information and the rate to compute the function at the decoder. In other works, the definition of a Pareto front may differ depending on the minimization/maximization problem considered and on the number of variables to be optimized.

Definition 2

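(Pareto front). Let $\mathcal{K} \subset \mathbb{R}^2$ be a set; the Pareto front of $\mathcal{K}$ is defined by
$$\mathrm{Pareto}(\mathcal{K}) = \big\{(a, b) \in \mathcal{K} : \nexists (a', b') \in \mathcal{K} \setminus \{(a, b)\},\ a' \leq a \text{ and } b' \leq b\big\}.$$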

Definition 3.

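The side information design problem in Figure 1 consists of determining the Pareto front of the convex hull of the achievable pairs $(H(g(Y)), R^*(g))$:
$$\mathrm{Conv}\Big\{\big(H(g(Y)),\, R^*(g)\big) : g : \mathcal{Y} \to \mathcal{Z}\Big\}, \tag{6}$$
where Conv denotes the convex hull.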

In our zero-error setup, all alphabets are finite. Therefore, the Pareto front of the convex hull in (6) is computed on a finite set of points, which correspond to the best trade-offs for the encoder’s side information.
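Because the set in (6) is generated by finitely many points, its Pareto front can be computed with elementary geometry. The following Python sketch is an illustration, not the authors' implementation. It assumes that both coordinates, the entropy $H(g(Y))$ and the rate $R^*(g)$, are to be minimized. It computes the lower convex hull with Andrew's monotone chain and keeps the part where the rate strictly decreases. The function name `pareto_front_of_hull` is hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (entropy H(g(Y)), rate R*(g))

def _cross(o: Point, a: Point, b: Point) -> float:
    """2D cross product of o->a and o->b (positive for a counter-clockwise turn)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def pareto_front_of_hull(points: List[Point]) -> List[Point]:
    """Corner points of the Pareto front (both coordinates minimized)
    of the convex hull of a finite set of points."""
    pts = sorted(set(points))
    # Lower convex hull (Andrew's monotone chain), scanning by increasing entropy.
    lower: List[Point] = []
    for p in pts:
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    # Past the minimum rate, spending more entropy no longer lowers the rate.
    front = [lower[0]]
    for p in lower[1:]:
        if p[1] < front[-1][1]:
            front.append(p)
        else:
            break
    return front
```

For example, among the pairs $(0,2)$, $(1,1.2)$ and $(2,0)$, the middle point lies above the segment joining the other two. It is therefore not a corner point, and only $(0,2)$ and $(2,0)$ are returned.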

3. Theoretic Results

Determining the optimal rate in the zero-error coding for computing problems, with or without encoder side information, is an open problem. In a previous contribution [], we determined a condition that, when satisfied, yields an analytic expression for the optimal rate. Interestingly, this condition is general as it does not depend on the function f to be retrieved at the decoder.

3.1. General Case

We first build the characteristic graph $G_n$, which is a probabilistic graph that captures the zero-error encoding constraints on a given number $n$ of source uses. It differs from the graphs used in [], as we do not need a Cartesian representation of these graphs to study the optimal rates. Furthermore, it has a vertex for each possible realization of $(X^n, g(Y)^n)$ known at the encoder, instead of $X^n$ as in the zero-error Slepian–Wolf problem [].

Definition 4

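(Characteristic graph $G_n$). The characteristic graph $G_n$ is defined by the following:

- $\mathcal{X}^n \times \mathcal{Z}^n$ as a set of vertices with distribution $P^n_{X, g(Y)}$.

- $(x^n, z^n)$ and $(x'^n, z'^n)$ are adjacent if $z^n = z'^n$ and there exists $y^n \in g^{-1}(z^n)$ such that
$$P^n_{X,Y}(x^n, y^n)\, P^n_{X,Y}(x'^n, y^n) > 0, \tag{7}$$
$$\exists t \leq n,\quad f(x_t, y_t) \neq f(x'_t, y_t), \tag{8}$$
where $g^{-1}(z^n) = \{y^n \in \mathcal{Y}^n : g(y_t) = z_t \ \forall t \leq n\}$.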

The characteristic graph $G_n$ is designed with the same core idea as in []: $(x^n, z^n)$ and $(x'^n, z'^n)$ are adjacent if there exists a side information sequence $y^n$ compatible with the observation of the encoder (i.e., $y^n \in g^{-1}(z^n)$ and $P^n_{X,Y}(x^n, y^n)\, P^n_{X,Y}(x'^n, y^n) > 0$) such that $f(x_t, y_t) \neq f(x'_t, y_t)$ for some $t$. To prevent erroneous decodings, the encoder must map adjacent pairs of sequences to different codewords; hence the use of graph colorings, defined below.

Definition 5

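(Coloring, independent subset). Let $G = (\mathcal{V}, \mathcal{E}, P_V)$ be a probabilistic graph. A subset $\mathcal{S} \subseteq \mathcal{V}$ is independent if $vv' \notin \mathcal{E}$ for all $v, v' \in \mathcal{S}$. Let $\mathcal{C}$ be a finite set (the set of colors); a mapping $c : \mathcal{V} \to \mathcal{C}$ is a coloring if $c^{-1}(i)$ is an independent subset for all $i \in \mathcal{C}$.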

The chromatic entropy of gives the best rate of n-shot zero-error encoding functions, as in [].

Definition 6

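(Chromatic entropy $H_\chi$). The chromatic entropy of a probabilistic graph $G = (\mathcal{V}, \mathcal{E}, P_V)$ is defined by
$$H_\chi(G) = \inf\big\{H(c(V)) : c \text{ is a coloring of } G\big\}.$$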
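For very small graphs, the chromatic entropy can be computed by exhaustive search, because the color classes of any coloring form a partition of the vertices into independent subsets. The sketch below represents a graph as a dictionary of vertex probabilities plus a set of two-element `frozenset` edges; this representation is chosen here only for illustration. It enumerates all set partitions, so it is usable only for a handful of vertices. It also computes the one-shot quantity $H_\chi(G)$, not the normalized limit that appears in Theorem 1.

```python
import math
from itertools import combinations
from typing import Dict, FrozenSet, Hashable, Iterator, List, Set

Vertex = Hashable

def set_partitions(items: List[Vertex]) -> Iterator[List[List[Vertex]]]:
    """Yield every partition of `items` into non-empty blocks."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for sub in set_partitions(rest):
        yield [[first]] + sub  # `first` in its own block
        for i in range(len(sub)):  # or `first` added to an existing block
            yield sub[:i] + [[first] + sub[i]] + sub[i + 1:]

def chromatic_entropy(prob: Dict[Vertex, float], edges: Set[FrozenSet[Vertex]]) -> float:
    """Minimum of H(c(V)) over colorings c, by exhaustive search (exponential)."""
    best = math.inf
    for blocks in set_partitions(list(prob)):
        # A valid coloring partitions the vertices into independent color classes.
        if any(frozenset(pair) in edges for b in blocks for pair in combinations(b, 2)):
            continue
        masses = [sum(prob[v] for v in b) for b in blocks]
        best = min(best, -sum(m * math.log2(m) for m in masses if m > 0))
    return best
```

With four equiprobable vertices, the 4-cycle gives $H_\chi = 1$ through the two color classes $\{0,2\}$ and $\{1,3\}$. The complete graph $K_4$ gives $H_\chi = 2$.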

Theorem 1

(Optimal rate). The optimal rate is written as follows:
$$R^*(g) = \lim_{n \to \infty} \frac{1}{n} H_\chi(G_n).$$

Proof.

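By construction, the following holds: for all encoding functions $\phi_e$, $\phi_e$ is a coloring of $G_n$ if and only if there exists a decoding function $\phi_d$ such that $(n, \phi_e, \phi_d)$ satisfies the zero-error property. Thus, the best achievable rate is written as follows:
$$R^*(g) = \inf_{n \in \mathbb{N}^\star} \frac{1}{n} \inf_{\substack{\phi_e \text{ prefix-free} \\ \text{coloring of } G_n}} \mathbb{E}\big[\ell\big(\phi_e(X^n, g(Y)^n)\big)\big]$$
$$= \lim_{n \to \infty} \frac{1}{n} H_\chi(G_n), \tag{12}$$

where (12) comes from three facts. The first is Fekete's Lemma, applied to the subadditive sequence $(H_\chi(G_n))_n$. The second is the definition of $H_\chi$. The third is that the best prefix-free encoding of a coloring $c$ has an expected length between $H(c(X^n, g(Y)^n))$ and $H(c(X^n, g(Y)^n)) + 1$. □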

A general single-letter expression for $R^*(g)$ is missing because $G_n$ lacks intrinsic structure. In Section 3.2, we introduce a hypothesis that gives structure to $G_n$ and allows us to derive a single-letter expression for $R^*(g)$.

3.2. Pairwise Shared Side Information

Definition 7.

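The distribution $P_{X,Y}$ and the function $g$ satisfy the "pairwise shared side information" condition if
$$\forall z \in g(\mathcal{Y}),\ \forall x, x' \in \mathcal{X},\ \exists y \in g^{-1}(z),\quad P_{X,Y}(x, y)\, P_{X,Y}(x', y) > 0, \tag{13}$$

where $g(\mathcal{Y})$ is the image of the function $g$. This means that, for every output $z$ of $g$, every pair $(x, x') \in \mathcal{X}^2$ "shares" at least one side information symbol $y \in g^{-1}(z)$.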
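Condition (13) is a finite check, which the following sketch performs by brute force. It reads (13) as quantifying over all pairs $(x, x') \in \mathcal{X}^2$, including $x = x'$. It assumes the joint distribution is given as a dictionary mapping $(x, y)$ to $P_{X,Y}(x, y)$, with missing keys meaning probability zero. The function name is hypothetical.

```python
from itertools import product
from typing import Callable, Dict, Hashable, Sequence, Tuple

def pairwise_shared_side_info(
    p_xy: Dict[Tuple[Hashable, Hashable], float],
    xs: Sequence[Hashable],
    ys: Sequence[Hashable],
    g: Callable[[Hashable], Hashable],
) -> bool:
    """True if, for every output z of g and every pair (x, x'), some y with
    g(y) = z satisfies P(x, y) > 0 and P(x', y) > 0."""
    for z in {g(y) for y in ys}:
        cell = [y for y in ys if g(y) == z]  # g^{-1}(z)
        for x, x2 in product(xs, repeat=2):
            shared = any(p_xy.get((x, y), 0.0) > 0 and p_xy.get((x2, y), 0.0) > 0
                         for y in cell)
            if not shared:
                return False
    return True
```

Any full-support distribution passes this check, whatever $g$ is. A distribution where $X = Y$ fails it, even for a constant $g$, because $x = 0$ and $x' = 1$ never share a side information symbol.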

Note that any full-support distribution $P_{X,Y}$ satisfies the "pairwise shared side information" hypothesis, for any function $g$. In Theorem 2, we interpret the "pairwise shared side information" condition in terms of the optimal rate in an auxiliary zero-error Slepian–Wolf problem.

Theorem 2.

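The tuple $(P_{X,Y}, g)$ satisfies the condition "pairwise shared side information" (13)

$\iff$ $R^*(g) = H(X \mid g(Y))$ in the case $f(X,Y) = X$, and, for all $z \in g(\mathcal{Y})$, $P_{X \mid g(Y) = z}$ is full support.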

The proof of Theorem 2 is given in Appendix A.1.

Definition 8

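(AND, OR product). Let $G_1 = (\mathcal{V}_1, \mathcal{E}_1, P_{V_1})$ and $G_2 = (\mathcal{V}_2, \mathcal{E}_2, P_{V_2})$ be two probabilistic graphs. Their AND (resp. OR) product, denoted by $G_1 \wedge G_2$ (resp. $G_1 \vee G_2$), is defined as follows. Its set of vertices is $\mathcal{V}_1 \times \mathcal{V}_2$, its probability distribution on the vertices is $P_{V_1} P_{V_2}$, and two distinct vertices $(v_1, v_2)$ and $(v'_1, v'_2)$ are adjacent if
$$v_1 v'_1 \in \mathcal{E}_1 \ \text{AND}\ v_2 v'_2 \in \mathcal{E}_2 \qquad \big(\text{resp. } v_1 v'_1 \in \mathcal{E}_1 \ \text{OR}\ v_2 v'_2 \in \mathcal{E}_2\big),$$

with the convention that, in the AND condition, every vertex is self-adjacent. We denote by $G^{\wedge n}$ (resp. $G^{\vee n}$) the $n$-th AND (resp. OR) power.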
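For small graphs, the OR product can be built explicitly. The sketch below uses an illustrative representation of a probabilistic graph as a pair made of a vertex-to-probability dictionary and a set of two-element `frozenset` edges. It applies the OR rule: two distinct vertex pairs are adjacent as soon as one coordinate pair is an edge. The function name is hypothetical.

```python
from itertools import combinations
from typing import Dict, FrozenSet, Hashable, Set, Tuple

# A probabilistic graph: (vertex -> probability, set of 2-element edges).
Graph = Tuple[Dict[Hashable, float], Set[FrozenSet[Hashable]]]

def or_product(g1: Graph, g2: Graph) -> Graph:
    """OR product G1 v G2: product distribution on V1 x V2; two distinct pairs
    are adjacent if v1w1 is an edge of G1 OR v2w2 is an edge of G2."""
    p1, e1 = g1
    p2, e2 = g2
    prob = {(v1, v2): p1[v1] * p2[v2] for v1 in p1 for v2 in p2}
    edges: Set[FrozenSet[Hashable]] = set()
    for (v1, v2), (w1, w2) in combinations(prob, 2):
        # frozenset((v, v)) has a single element, so it is never an edge
        if frozenset((v1, w1)) in e1 or frozenset((v2, w2)) in e2:
            edges.add(frozenset(((v1, v2), (w1, w2))))
    return prob, edges
```

For instance, $K_2 \vee K_2$ is the complete graph on four vertices. The OR product of $K_2$ with an edgeless graph on two vertices only links pairs whose first coordinates differ.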

AND and OR powers differ significantly with respect to the existence of a single-letter expression for the associated asymptotic chromatic entropy. Indeed, in the zero-error Slepian–Wolf problem in [], the optimal rate $\lim_{n \to \infty} \frac{1}{n} H_\chi(G^{\wedge n})$, which relies on an AND power, does not have a single-letter expression. In contrast, closed-form expressions exist for OR powers of graphs. More precisely, as recalled in Proposition 1, $\lim_{n \to \infty} \frac{1}{n} H_\chi(G^{\vee n})$ admits a single-letter expression called the Körner graph entropy, introduced in [] and defined below. This observation is key to deriving a single-letter expression for our problem: by using a convex combination of Körner graph entropies, we provide in Theorem 3 a single-letter expression for the optimal rate $R^*(g)$.

Definition 9

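(Körner graph entropy $H_\kappa$). For all $G = (\mathcal{V}, \mathcal{E}, P_V)$, let $\Gamma(G)$ be the collection of independent sets of vertices in $G$. The Körner graph entropy of $G$ is defined by
$$H_\kappa(G) = \min_{V \in W \in \Gamma(G)} I(W; V),$$

where the minimum is taken over all conditional distributions $P_{W|V} \in \Delta(\Gamma(G))^{\mathcal{V}}$, with $V \sim P_V$ and the constraint that the random vertex $V$ belongs to the random set $W$ with probability one.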
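As a worked example of Definition 9, consider a complete multipartite graph $G$ whose vertex set is partitioned into parts $\mathcal{V}_1, \dots, \mathcal{V}_k$, two vertices being adjacent if and only if they lie in different parts. Let $\pi(v)$ denote the index of the part containing $v$; this notation is introduced only for the example. The derivation below shows that $H_\kappa(G) = H(\pi(V))$. The complete graph (singleton parts, $H_\kappa(G) = H(V)$) and the edgeless graph (a single part, $H_\kappa(G) = 0$) are special cases.

```latex
\begin{align*}
&\text{Every } W \in \Gamma(G) \text{ lies inside a single part, so } V \in W \text{ implies } \pi(W) = \pi(V).\\
&\text{Lower bound: } I(W;V) \ge I(\pi(W);V) = I(\pi(V);V) = H(\pi(V))
  \quad (\text{data processing; } \pi(V) \text{ is a function of } V).\\
&\text{Achievability: } W = \mathcal{V}_{\pi(V)} \text{ is independent, contains } V, \text{ and }
  I(W;V) = H(W) - H(W \mid V) = H(\pi(V)) - 0.\\
&\text{Hence } H_\kappa(G) = H(\pi(V)).
\end{align*}
```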

Below, we recall that the limit of the normalized chromatic entropy of the OR product of graphs admits a closed-form expression given by the Körner graph entropy . Moreover, the Körner graph entropy of OR products of graphs is simply the sum of the individual Körner graph entropies.

Proposition 1

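(Properties of $H_\kappa$; Theorem 5 in []). For all probabilistic graphs $G$ and $G'$,
$$\lim_{n \to \infty} \frac{1}{n} H_\chi(G^{\vee n}) = H_\kappa(G), \tag{17}$$
$$H_\kappa(G \vee G') = H_\kappa(G) + H_\kappa(G').$$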

Definition 10

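(Auxiliary graph $G_z$). For all $z \in g(\mathcal{Y})$, we define the auxiliary graph $G_z$ by

- $\mathcal{X}$ as set of vertices with distribution $P_{X \mid g(Y) = z}$;

- $x, x' \in \mathcal{X}$ are adjacent if $P_{X,Y}(x, y)\, P_{X,Y}(x', y) > 0$ and $f(x, y) \neq f(x', y)$ for some $y \in g^{-1}(z)$.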
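The auxiliary graphs can be built directly from $P_{X,Y}$, $f$ and $g$. The following sketch shows one possible construction. It uses the adjacency rule in which $x$ and $x'$ are adjacent when some $y \in g^{-1}(z)$ has positive probability with both of them and $f(x, y) \neq f(x', y)$. It also uses a dictionary representation of $P_{X,Y}$, and its names are hypothetical. It returns the vertex distribution $P_{X \mid g(Y) = z}$ and the edge set.

```python
from itertools import combinations
from typing import Callable, Dict, FrozenSet, Hashable, Sequence, Set, Tuple

def auxiliary_graph(
    p_xy: Dict[Tuple[Hashable, Hashable], float],
    xs: Sequence[Hashable],
    ys: Sequence[Hashable],
    f: Callable[[Hashable, Hashable], Hashable],
    g: Callable[[Hashable], Hashable],
    z: Hashable,
) -> Tuple[Dict[Hashable, float], Set[FrozenSet[Hashable]]]:
    cell = [y for y in ys if g(y) == z]  # g^{-1}(z)
    p_z = sum(p_xy.get((x, y), 0.0) for x in xs for y in cell)
    # vertex distribution P_{X | g(Y) = z}
    prob = {x: sum(p_xy.get((x, y), 0.0) for y in cell) / p_z for x in xs}
    edges: Set[FrozenSet[Hashable]] = set()
    for x, x2 in combinations(xs, 2):
        # adjacent if some shared symbol y in g^{-1}(z) makes f differ
        if any(p_xy.get((x, y), 0.0) > 0 and p_xy.get((x2, y), 0.0) > 0
               and f(x, y) != f(x2, y) for y in cell):
            edges.add(frozenset((x, x2)))
    return prob, edges
```

With a uniform $P_{X,Y}$ on $\{0,1,2,3\} \times \{0,1\}$, a constant $g$ and $f(x, y) = x \bmod 2$, the result is the complete bipartite graph that links the even and odd values of $x$, which is the 4-cycle.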

Theorem 3

(Pairwise shared side information). If $P_{X,Y}$ and $g$ satisfy (13), the optimal rate is written as follows:
$$R^*(g) = \sum_{z \in g(\mathcal{Y})} P_{g(Y)}(z)\, H_\kappa(G_z).$$

The proof is given in Appendix A.2; the key point is the particular structure of $G_n$, which is a disjoint union of OR products.

Remark 1.

Now, consider the case where $P_{X,Y}$ is full support. This is a sufficient condition for (13). The optimal rate in this setting is derived from Theorem 3, which leads to the analytic expression in Theorem 4.

Theorem 4

(Optimal rate when $P_{X,Y}$ is full support). When $P_{X,Y}$ is full support, the optimal rate is written as follows:
$$R^*(g) = H\big(j(X, g(Y)) \mid g(Y)\big),$$

where the function $j$ returns a word in $\mathcal{U}^{|g^{-1}(z)|}$, defined by
$$j(x, z) = \big(f(x, y)\big)_{y \in g^{-1}(z)}.$$

Proof.

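By Theorem 3, $R^*(g) = \sum_{z} P_{g(Y)}(z)\, H_\kappa(G_z)$. Since $P_{X,Y}$ is full support, $x$ and $x'$ are adjacent in $G_z$ if and only if $j(x, z) \neq j(x', z)$, so $G_z$ is complete multipartite for all $z$. It therefore satisfies $H_\kappa(G_z) = H\big(j(X, z) \mid g(Y) = z\big)$. □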
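Theorem 4 reduces the optimal rate to a conditional entropy that can be evaluated directly, namely $H(j(X, g(Y)) \mid g(Y))$ with $j(x, z) = (f(x, y))_{y \in g^{-1}(z)}$. The sketch below evaluates this quantity from a dictionary representation of $P_{X,Y}$. It is only meaningful when $P_{X,Y}$ is full support, and the function name is hypothetical.

```python
import math
from collections import defaultdict
from typing import Callable, Dict, Hashable, Sequence, Tuple

def optimal_rate_full_support(
    p_xy: Dict[Tuple[Hashable, Hashable], float],
    xs: Sequence[Hashable],
    ys: Sequence[Hashable],
    f: Callable[[Hashable, Hashable], Hashable],
    g: Callable[[Hashable], Hashable],
) -> float:
    """H(j(X, g(Y)) | g(Y)) in bits, with j(x, z) = (f(x, y))_{y in g^{-1}(z)}.
    Assumes P_{X,Y} is full support (Theorem 4)."""
    rate = 0.0
    for z in {g(y) for y in ys}:
        cell = [y for y in ys if g(y) == z]  # g^{-1}(z), in a fixed order
        mass: Dict[Tuple, float] = defaultdict(float)  # P(g(Y) = z, j(X, z) = w)
        for x in xs:
            word = tuple(f(x, y) for y in cell)
            mass[word] += sum(p_xy[(x, y)] for y in cell)
        p_z = sum(mass.values())
        rate -= sum(m * math.log2(m / p_z) for m in mass.values() if m > 0)
    return rate
```

For instance, take $X$ and $Y$ uniform on $\{0,1,2,3\}$ and let $f(x, y)$ be the bit of $x$ selected by $y \bmod 2$. A constant $g$ gives rate 2, whereas $g(y) = y \bmod 2$ gives rate 1.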

3.3. Example

In this example, the "pairwise shared side information" assumption is satisfied, and $R^*(g)$ is strictly less than the rate of a conditional Huffman coding of $X$ knowing $g(Y)$. It is also strictly less than the optimal rate obtained without exploiting $g(Y)$ at the encoder.

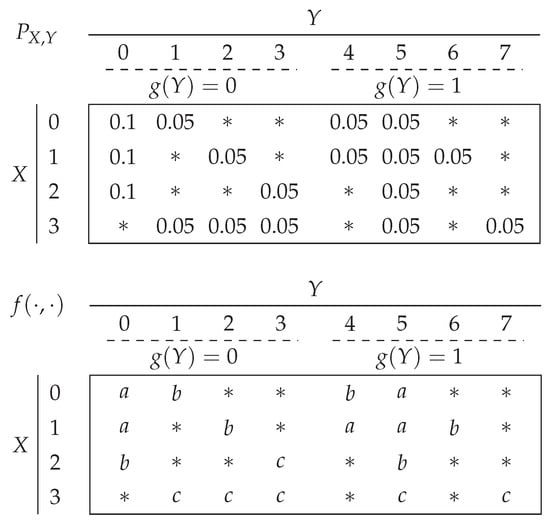

Consider the probability distribution and function outcomes depicted in Figure 2, with , , , and . Let us show that the “pairwise shared side information” assumption is satisfied. The source symbols share the side information symbol 0 (resp. 5) when (resp. ). The source symbol shares the side information symbols with the source symbols , respectively, when , and the source symbol 3 shares the side information symbol 5 with all other source symbols when .

Figure 2.

An example of and g that satisfies (13), along with the outcomes . The elements outside are denoted by *.

Since the “pairwise shared side information” assumption is satisfied, we can use Theorem 3; the optimal rate is written as follows:

First, we need to determine the probabilistic graphs and . In , the vertex 0 is adjacent to 2 and 3, as and . The vertex 1 is also adjacent to 2 and 3 as and . Furthermore is uniform, hence where is the cycle graph with 4 vertices.

In , the vertices 1, 2, 3 are pairwise adjacent as , and are pairwise different; and 0 is adjacent to 1, 2, and 3 because of the different function outputs generated by and . Thus, with , and is the complete graph with 4 vertices.

Now, let us determine and . On the one hand,

with ; and where in (22) is maximized by taking when , and otherwise.

The rate that we would obtain by transmitting X knowing at both encoder and decoder with a conditional Huffman algorithm is written as .

The rate that we would obtain without exploiting at the encoder is because of the different function outputs generated by and .

Finally, .

In this example, we have

This illustrates the impact of the side information at the encoder in this setting, as we can observe a large gap between the optimal rate and .

4. Optimization of the Encoder Side Information

4.1. Preliminary Results on Partitions

To optimize the function $g$ in the encoder side information, we propose a new, equivalent characterization of $g$ in the form of a partition of the set $\mathcal{Y}$. The equivalence is shown in Proposition 2 below.

Proposition 2.

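For all $g : \mathcal{Y} \to \mathcal{Z}$, the collection of subsets $\{g^{-1}(z)\}_{z \in g(\mathcal{Y})}$ is a partition of $\mathcal{Y}$.

Conversely, if $\{\mathcal{S}_1, \dots, \mathcal{S}_k\}$ is a partition of $\mathcal{Y}$, then there exists a mapping $g : \mathcal{Y} \to \{1, \dots, k\}$ such that $\{g^{-1}(i)\}_{i \leq k} = \{\mathcal{S}_1, \dots, \mathcal{S}_k\}$.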

Proof.

The direct part follows from the fact that $g$ is a function. For the converse part, we take $\mathcal{Z} = \{1, \dots, k\}$ and define $g$ by $g(y) = i$, where $i$ is the unique index such that $y \in \mathcal{S}_i$. The property $g^{-1}(i) = \mathcal{S}_i$ is therefore satisfied. □
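Proposition 2 translates directly into code. In the sketch below, a partition is represented as a `frozenset` of `frozenset` cells, and the helper names are hypothetical. `partition_of` collects the preimages $g^{-1}(z)$, and `function_of` implements the converse construction of the proof by mapping each $y$ to the index of its cell.

```python
from typing import Callable, Dict, FrozenSet, Hashable, Iterable

Partition = FrozenSet[FrozenSet[Hashable]]

def partition_of(g: Callable[[Hashable], Hashable], ys: Iterable[Hashable]) -> Partition:
    """Direct part: the non-empty preimages g^{-1}(z) partition Y."""
    cells: Dict[Hashable, set] = {}
    for y in ys:
        cells.setdefault(g(y), set()).add(y)
    return frozenset(frozenset(c) for c in cells.values())

def function_of(partition: Partition) -> Callable[[Hashable], int]:
    """Converse part: g(y) = i, where i indexes the unique cell containing y."""
    index = {y: i for i, cell in enumerate(partition) for y in cell}
    return lambda y: index[y]
```

The round trip `partition_of(function_of(P), ys)` returns the partition `P` unchanged. Only the labels $z$ are lost, and the labels do not affect $H(g(Y))$ or $R^*(g)$.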

Now, let us define coarser and finer partitions, with the corresponding notions of merging and splitting. These operations on partitions are the core idea of our greedy algorithms. As shown in Proposition 2, the partitions of $\mathcal{Y}$ correspond to functions $g$ for the encoder's side information, so obtaining a partition from another one amounts to finding another function for the encoder's side information.

Definition 11

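(Coarser, Finer). Let $\mathcal{A}, \mathcal{B}$ be two partitions of the finite set $\mathcal{Y}$. We say that $\mathcal{A}$ is coarser than $\mathcal{B}$ if
$$\forall \mathcal{S} \in \mathcal{B},\ \exists \mathcal{S}' \in \mathcal{A},\quad \mathcal{S} \subseteq \mathcal{S}'.$$

If so, we also say that $\mathcal{B}$ is finer than $\mathcal{A}$.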

Example 1.

Let , the partition is coarser than .

Definition 12

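(Merging, Splitting). A merging is an operation that maps a partition $\{\mathcal{A}_1, \dots, \mathcal{A}_k\}$ to the partition $\{\mathcal{A}_i \cup \mathcal{A}_j\} \cup \{\mathcal{A}_l\}_{l \neq i, j}$ for some $i \neq j$. A splitting is an operation that maps a partition $\{\mathcal{A}_1, \dots, \mathcal{A}_k\}$ to the partition $\{\mathcal{B}_1, \mathcal{B}_2\} \cup \{\mathcal{A}_l\}_{l \neq i}$, where $\mathcal{B}_1, \mathcal{B}_2$ form a partition of the subset $\mathcal{A}_i$.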

For a partition $\mathcal{A}$, we also consider the set of all partitions that can be obtained with a single merging (resp. splitting) of $\mathcal{A}$.
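Both sets can be enumerated directly. The sketch below generates every merging of two cells and every splitting of one cell into two non-empty cells. Partitions are represented as `frozenset`s of `frozenset` cells, and the names are hypothetical. A partition with $k$ cells has $k(k-1)/2$ mergings, whereas a single cell of size $s$ admits $2^{s-1}-1$ splittings. This difference is the source of the complexity gap between the two greedy algorithms.

```python
from itertools import combinations
from typing import FrozenSet, Hashable, Iterator

Cell = FrozenSet[Hashable]
Partition = FrozenSet[Cell]

def mergings(part: Partition) -> Iterator[Partition]:
    """Partitions obtained by merging two cells of `part`."""
    for a, b in combinations(part, 2):
        yield (part - {a, b}) | {a | b}

def splittings(part: Partition) -> Iterator[Partition]:
    """Partitions obtained by splitting one cell of `part` into two non-empty cells."""
    for cell in part:
        items = sorted(cell, key=repr)
        first, rest = items[0], items[1:]
        # `first` always goes to `left`, so each unordered split is produced once;
        # r < len(rest) keeps the other cell non-empty.
        for r in range(len(rest)):
            for chosen in combinations(rest, r):
                left = frozenset((first,) + chosen)
                yield (part - {cell}) | {left, cell - left}
```

On $\mathcal{Y} = \{1,2,3,4\}$, the finest partition has $6$ mergings and the coarsest partition $\{\mathcal{Y}\}$ has $7$ splittings.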

Proposition 3.

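Let $\mathcal{A}, \mathcal{B}$ be two partitions of $\mathcal{Y}$. If $\mathcal{A}$ is coarser (resp. finer) than $\mathcal{B}$, then $\mathcal{A}$ can be obtained from $\mathcal{B}$ by performing a finite number of mergings (resp. splittings).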

4.2. Greedy Algorithms Based on Partition Coarsening and Refining

In this section, we assume $P_{X,Y}$ to be full support.

By Proposition 2, determining the Pareto front by a brute-force approach would require at least enumerating the partitions of $\mathcal{Y}$. The complexity of this approach is therefore exponential in $|\mathcal{Y}|$. In the following, we describe the greedy Algorithms 1 and 2, which give an achievable set for the encoder's side information design problem; one of them has polynomial complexity. We then give an example where the Pareto front coincides with the boundary of the convex hull of the achievable rate region obtained by both greedy algorithms.

Algorithm 1. Greedy coarsening algorithm.

Algorithm 2. Greedy refining algorithm.

In these algorithms, argmin (resp. argmax) denotes any minimizer (resp. maximizer) of the specified quantity. The function $g_{\mathcal{A}}$ is a function for the encoder's side information that corresponds to the partition $\mathcal{A}$; its existence is given by Proposition 2.

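The coarsening (resp. refining) algorithm starts by computing its first achievable point with $\mathcal{A}$ being the finest (resp. coarsest) partition. It evaluates $R^*(g_{\mathcal{A}})$, with $g_{\mathcal{A}} = \mathrm{Id}$ (resp. $g_{\mathcal{A}}$ constant), and $H(g_{\mathcal{A}}(Y)) = H(Y)$ (resp. $H(g_{\mathcal{A}}(Y)) = 0$). Then, at each iteration, the next achievable point is computed by using a merging (resp. splitting) of the current partition $\mathcal{A}$. Following a greedy approach, the next partition $\mathcal{A}'$ is chosen among the coarser (resp. finer) partitions obtained by one merging (resp. splitting) of $\mathcal{A}$. Since we want good trade-offs between $H(g(Y))$ and $R^*(g)$, we want to decrease $H(g(Y))$ (resp. $R^*(g)$) as much as possible while increasing the other quantity as little as possible. We do so by maximizing over $\mathcal{A}'$ the negative ratio

$$\frac{R^*(g_{\mathcal{A}'}) - R^*(g_{\mathcal{A}})}{H(g_{\mathcal{A}'}(Y)) - H(g_{\mathcal{A}}(Y))},$$

resp. minimizing the same negative ratio over $\mathcal{A}'$. This ratio is the slope of the segment that joins the current achievable point to the candidate point in the $(H(g(Y)), R^*(g))$ plane, which explains why the algorithm maximizes (resp. minimizes) a slope. At the end, the algorithm returns the set of achievable points it has computed.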
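The coarsening procedure described above can be sketched as follows. This is an illustrative reimplementation, not the authors' Algorithm 1. It assumes $P_{X,Y}$ is full support, so that $R^*(g)$ can be evaluated with the formula of Theorem 4. It represents partitions as `frozenset`s of cells and breaks ties by keeping the first best candidate. All names are hypothetical.

```python
import math
from collections import defaultdict
from itertools import combinations
from typing import Callable, Dict, FrozenSet, Hashable, List, Sequence, Tuple

Partition = FrozenSet[FrozenSet[Hashable]]
Dist = Dict[Tuple[Hashable, Hashable], float]

def point_of(p_xy: Dist, xs: Sequence[Hashable], part: Partition,
             f: Callable[[Hashable, Hashable], Hashable]) -> Tuple[float, float]:
    """(H(g(Y)), R*(g)) for the g induced by `part`, via Theorem 4 (full support)."""
    h_g, rate = 0.0, 0.0
    for cell in part:
        cell_list = sorted(cell, key=repr)  # fixed order of g^{-1}(z)
        mass: Dict[Tuple, float] = defaultdict(float)
        for x in xs:
            mass[tuple(f(x, y) for y in cell_list)] += sum(p_xy[(x, y)] for y in cell_list)
        p_z = sum(mass.values())
        h_g -= p_z * math.log2(p_z)
        rate -= sum(m * math.log2(m / p_z) for m in mass.values() if m > 0)
    return h_g, rate

def greedy_coarsening(p_xy: Dist, xs: Sequence[Hashable], ys: Sequence[Hashable],
                      f: Callable[[Hashable, Hashable], Hashable]) -> List[Tuple[float, float]]:
    part: Partition = frozenset(frozenset([y]) for y in ys)  # finest partition: g = Id
    point = point_of(p_xy, xs, part, f)
    points = [point]
    while len(part) > 1:
        best = None
        for a, b in combinations(part, 2):  # every merging of the current partition
            cand = (part - {a, b}) | {a | b}
            h, r = point_of(p_xy, xs, cand, f)
            # Slope in the (H, R) plane. With full support, merging two cells of
            # positive mass strictly lowers H, so the denominator is negative.
            slope = (r - point[1]) / (h - point[0])
            if best is None or slope > best[0]:
                best = (slope, cand, (h, r))
        _, part, point = best
        points.append(point)
    return points
```

Take $X$ and $Y$ uniform on $\{0,1,2,3\}$, and let $f(x, y)$ be the bit of $x$ selected by $y \bmod 2$. The trajectory is then $(2, 1)$, $(1.5, 1)$, $(1, 1)$, $(0, 2)$: the algorithm first merges side information symbols of equal parity, which lowers $H(g(Y))$ without increasing the rate.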

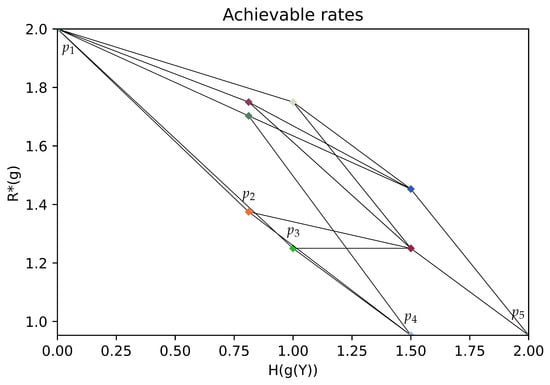

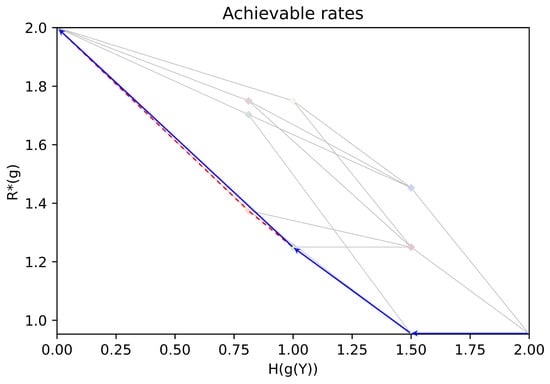

In Figure 3, we show the rate pairs $(H(g(Y)), R^*(g))$ associated with all possible partitions of $\mathcal{Y}$. Each point corresponds to a partition of $\mathcal{Y}$, and its position gives the associated rates for a function $g$ that corresponds to that partition through Proposition 2. Two points are linked if one of the corresponding partitions is obtained from the other by a single merging. The resulting graph is the Hasse diagram for the partial order "coarser than". Note that, due to symmetries in the chosen example, several points associated with different partitions may overlap. Figure 4 (resp. Figure 5) illustrates the trajectory of the greedy coarsening (resp. refining) algorithm.

Figure 3.

An illustration of the rate pairs associated with all partitions of . The Pareto front is the broken line corresponding to the partitions ––––; with , , , , .

Figure 4.

An illustration of the trajectory of the coarsening greedy algorithm (blue), with the Pareto front of the achievable rates (dashed red).

Figure 5.

An illustration of the trajectory of the refining greedy algorithm (green), with the Pareto front of the achievable rates (dashed red).

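As stated in Theorem 5, the coarsening greedy algorithm has polynomial complexity for two reasons. First, the number of partitions obtained by one merging of a partition is at most quadratic in $|\mathcal{Y}|$. Second, $R^*(g)$ can be evaluated in polynomial time. The refining greedy algorithm does not have polynomial complexity, as the number of partitions obtained by one splitting can be exponential in $|\mathcal{Y}|$.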

Theorem 5.

The coarsening greedy algorithm runs in polynomial time in $|\mathcal{X}|$ and $|\mathcal{Y}|$. The refining greedy algorithm runs in exponential time in $|\mathcal{Y}|$.

Proof.

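The number of points evaluated by the coarsening (resp. refining) greedy algorithm is $O(|\mathcal{Y}|^3)$ (resp. $O(|\mathcal{Y}|\, 2^{|\mathcal{Y}|})$). Indeed, $|\mathcal{Y}| - 1$ mergings (resp. splittings) are made, and for each of them, every partition obtained by one merging (resp. splitting) of the current partition is evaluated. There are at most $|\mathcal{Y}|(|\mathcal{Y}|-1)/2$ such partitions (resp. $2^{|\mathcal{Y}|-1} - 1$, in the worst case where the current partition is $\{\mathcal{Y}\}$). Since the expression from Theorem 4 allows $R^*(g)$ to be evaluated in polynomial time, the coarsening (resp. refining) greedy algorithm has polynomial (resp. exponential) time complexity. □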

Author Contributions

Formal analysis, N.C.; validation, N.C., M.L.T. and A.R.; writing—original draft preparation, N.C.; writing—review and editing, M.L.T. and A.R.; supervision, M.L.T. and A.R. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the Cominlabs excellence laboratory with the French National Research Agency’s funding (ANR-10-LABX-07-01).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Appendix A.1. Proof of Theorem 2

Consider the particular case $f(X,Y) = X$ of Figure 1. The optimal rate in this particular case equals the optimal rate in the auxiliary problem depicted in Figure A1. In that problem, the source $(X, g(Y))$ is available at the encoder and must be retrieved by the decoder, which knows $Y$. Since $g(Y)$ is a function of $Y$, requiring the decoder to retrieve $g(Y)$ in addition to $X$ does not change the optimal rate.

Figure A1.

An auxiliary zero-error Slepian–Wolf problem.

Definition A1

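(Characteristic graph for the zero-error Slepian–Wolf problem). Let $\mathcal{A}, \mathcal{B}$ be two finite sets and $P_{B|A}$ be a conditional distribution from $\mathcal{A}$ to $\mathcal{B}$. The characteristic graph $G$ associated with $P_{B|A}$ is defined by the following:

- $\mathcal{A}$ as set of vertices;

- $a, a' \in \mathcal{A}$ are adjacent if $P_{B|A}(b \mid a)\, P_{B|A}(b \mid a') > 0$ for some $b \in \mathcal{B}$.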

This auxiliary problem is a particular instance of the zero-error Slepian–Wolf problem; its optimal rate is written as , where is the complementary graph entropy [] and G is the characteristic graph in the Slepian–Wolf problem, defined in Definition A1, for the pair . The graph G has as a set of vertices, and is adjacent to if there exists a side information symbol such that . It can be observed that the vertices and such that are not adjacent in G. The graph G is therefore a disjoint union indexed by :

where for all , is the characteristic graph defined in Definition A1 for the pair .

Assume that g and satisfy the “pairwise shared side information” condition. It directly follows that is full support for all . Let , and let be any two vertices of . By construction, there exists such that ; hence, , and are adjacent in . Each graph is therefore complete and perfect; the graph is a disjoint union of perfect graphs and is therefore also perfect. We have the following:

where (A3) comes from (A2); (A4) and (A5) follow from Corollary 12 in [] used on the perfect graph ; and (A6) holds as the independent subsets of the complete graph are singletons containing one of its vertices.

Conversely, assume that is full support for all , and .

Assume, ad absurdum, that at least one of the is not complete; then, there exists a coloring of that graph that maps two different vertices to the same color. Thus, there exists such that

as is full support. We have

where (A10) comes from (A2), (A11) results from Theorem 2 in [], and (A12) follows from (A8). We arrive at a contradiction, and hence all the graphs are complete: for all and , there exists a side information symbol such that ; hence, and satisfies . The condition “pairwise shared side information” is satisfied by .

Appendix A.2. Proof of Theorem 3

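Let us specify the adjacency condition in $G_n$ under assumption (13). Two vertices $(x^n, z^n)$ and $(x'^n, z'^n)$ are adjacent if they satisfy (7) and (8); however, (7) is always satisfied under (13). Thus, $(x^n, z^n)$ and $(x'^n, z'^n)$ are adjacent if $z^n = z'^n$ and
$$\exists t \leq n,\ \exists y \in g^{-1}(z_t),\quad P_{X,Y}(x_t, y)\, P_{X,Y}(x'_t, y) > 0 \ \text{ and } \ f(x_t, y) \neq f(x'_t, y). \tag{A13}$$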

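It can be observed that condition (A13) is the adjacency condition of an OR product of adequate graphs; more precisely,
$$G_n = \bigsqcup_{z^n \in g(\mathcal{Y})^n} G_{z_1} \vee \dots \vee G_{z_n},$$
where the component indexed by $z^n$ carries the probability mass $P^n_{g(Y)}(z^n)$.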

Although $G_n$ cannot be expressed as an $n$-th OR power, we will show that its chromatic entropy asymptotically coincides with that of an appropriate OR power. We now search for an asymptotic equivalent of $H_\chi(G_n)$.

Definition A2.

is the set of colorings of that can be written as for some mapping ; where denotes the type of .

In the following, we define . Now, we need several Lemmas. Lemma A1 states that the optimal coloring of has the type of as a prefix at a negligible rate cost. Lemma A3 gives an asymptotic formula for the minimal entropy of the colorings from .

Lemma A1.

The following asymptotic comparison holds as follows:

Definition A3

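(Isomorphic probabilistic graphs). Let $G_1 = (\mathcal{V}_1, \mathcal{E}_1, P_{V_1})$ and $G_2 = (\mathcal{V}_2, \mathcal{E}_2, P_{V_2})$ be two probabilistic graphs. We say that $G_1$ is isomorphic to $G_2$ (denoted by $G_1 \simeq G_2$) if there exists an isomorphism between them, i.e., a bijection $\psi : \mathcal{V}_1 \to \mathcal{V}_2$ such that

- For all $v \in \mathcal{V}_1$, $P_{V_2}(\psi(v)) = P_{V_1}(v)$;

- For all $v, v' \in \mathcal{V}_1$, $vv' \in \mathcal{E}_1 \iff \psi(v)\psi(v') \in \mathcal{E}_2$.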

Lemma A2.

Let be a finite set, let and let be a family of isomorphic probabilistic graphs, then for all .

Lemma A3.

The following asymptotic comparison holds as follows:

The proof of Lemma A1 is given in Appendix A.3, and its key point is the asymptotically negligible entropy of the prefix of the colorings of . The proof of Lemma A2 is given in Appendix A.5. The proof of Lemma A3 is given in Appendix A.4 and relies on the decomposition , where is the subgraph induced by the vertices such that the type of is . We show that is a disjoint union of isomorphic graphs whose chromatic entropy is given by Lemma A2 and (17): . Finally, uniform convergence arguments allow us to conclude.

Appendix A.3. Proof of Lemma A1

Appendix A.4. Proof of Lemma A3

For all , let

with the probability distribution induced by . This graph is formed of the connected components of whose corresponding has type . We need to find an equivalent for . Since is a disjoint union of isomorphic graphs, we can use Lemma A2 as follows:

On one hand,

where (A27) comes from Lemma 14 in [], (A28) comes from (17). On the other hand,

where is a quantity that does not depend on and satisfies ; (A29) comes from the subadditivity of . Combining Equations (A26), (A28), and (A30) yields

Appendix A.5. Proof of Lemma A2

Let be isomorphic probabilistic graphs and G such that . Let be the coloring of with minimal entropy, and let be the coloring of G defined by

where is the unique integer such that and is an isomorphism between and . In other words, applies the same coloring pattern on each connected component of G. We have

where h denotes the entropy of a distribution; (A44) comes from the definition of ; and (A46) comes from the definition of .

Now, let us prove the upper bound on . Let c be a coloring of G, and let (i.e., is the index of the connected component for which the entropy of the coloring induced by c is minimal). We have

where (A48) follows from the concavity of h; (A49) follows from the definition of ; (A50) comes from the fact that c induces a coloring of ; and (A51) comes from the fact that and are isomorphic. Now, we can combine the bounds (A46) and (A51): for every coloring c of G, we have

which yields the desired equality when taking the infimum over c.

References

- Orlitsky, A.; Roche, J.R. Coding for computing. In Proceedings of the IEEE 36th Annual Foundations of Computer Science, Milwaukee, WI, USA, 23–25 October 1995; IEEE: Piscataway, NJ, USA, 1995; pp. 502–511. [Google Scholar]

- Duan, L.; Liu, J.; Yang, W.; Huang, T.; Gao, W. Video coding for machines: A paradigm of collaborative compression and intelligent analytics. IEEE Trans. Image Process. 2020, 29, 8680–8695. [Google Scholar] [CrossRef] [PubMed]

- Gao, W.; Liu, S.; Xu, X.; Rafie, M.; Zhang, Y.; Curcio, I. Recent standard development activities on video coding for machines. arXiv 2021, arXiv:2105.12653. [Google Scholar]

- Yamamoto, H. Wyner-ziv theory for a general function of the correlated sources (corresp.). IEEE Trans. Inf. Theory 1982, 28, 803–807. [Google Scholar] [CrossRef]

- Shayevitz, O. Distributed computing and the graph entropy region. IEEE Trans. Inf. Theory 2014, 60, 3435–3449. [Google Scholar] [CrossRef]

- Krithivasan, D.; Pradhan, S.S. Distributed source coding using abelian group codes: A new achievable rate-distortion region. IEEE Trans. Inf. Theory 2011, 57, 1495–1519. [Google Scholar] [CrossRef]

- Basu, S.; Seo, D.; Varshney, L.R. Hypergraph-based Coding Schemes for Two Source Coding Problems under Maximal Distortion. In Proceedings of the IEEE International Symposium on Information Theory (ISIT), Los Angeles, CA, USA, 21–26 June 2020. [Google Scholar]

- Malak, D.; Médard, M. Hyper Binning for Distributed Function Coding. In Proceedings of the 2020 IEEE 21st International Workshop on Signal Processing Advances in Wireless Communications (SPAWC), Atlanta, GA, USA, 26–29 May 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–5. [Google Scholar]

- Feizi, S.; Médard, M. On network functional compression. IEEE Trans. Inf. Theory 2014, 60, 5387–5401. [Google Scholar] [CrossRef]

- Sefidgaran, M.; Tchamkerten, A. Distributed function computation over a rooted directed tree. IEEE Trans. Inf. Theory 2016, 62, 7135–7152. [Google Scholar] [CrossRef]

- Ravi, J.; Dey, B.K. Function Computation Through a Bidirectional Relay. IEEE Trans. Inf. Theory 2018, 65, 902–916. [Google Scholar] [CrossRef]

- Guang, X.; Yeung, R.W.; Yang, S.; Li, C. Improved upper bound on the network function computing capacity. IEEE Trans. Inf. Theory 2019, 65, 3790–3811. [Google Scholar] [CrossRef]

- Charpenay, N.; Le Treust, M.; Roumy, A. Optimal Zero-Error Coding for Computing under Pairwise Shared Side Information. In Proceedings of the 2023 IEEE Information Theory Workshop (ITW), Saint-Malo, France, 23–28 April 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 97–101. [Google Scholar]

- Alon, N.; Orlitsky, A. Source coding and graph entropies. IEEE Trans. Inf. Theory 1996, 42, 1329–1339. [Google Scholar] [CrossRef]

- Witsenhausen, H. The zero-error side information problem and chromatic numbers (corresp.). IEEE Trans. Inf. Theory 1976, 22, 592–593. [Google Scholar] [CrossRef]

- Körner, J. Coding of an information source having ambiguous alphabet and the entropy of graphs. In Proceedings of the 6th Prague Conference on Information Theory, Prague, Czech Republic, 19–25 September 1973; pp. 411–425. [Google Scholar]

- Tuncel, E.; Nayak, J.; Koulgi, P.; Rose, K. On complementary graph entropy. IEEE Trans. Inf. Theory 2009, 55, 2537–2546. [Google Scholar] [CrossRef]

- Csiszár, I.; Körner, J.; Lovász, L.; Marton, K.; Simonyi, G. Entropy splitting for antiblocking corners and perfect graphs. Combinatorica 1990, 10, 27–40. [Google Scholar] [CrossRef]

- Csiszár, I.; Körner, J. Information Theory: Coding Theorems for Discrete Memoryless Systems; Cambridge University Press: Cambridge, UK, 2011. [Google Scholar]

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).