Abstract

It has been over 100 years since the discovery of one of the most fundamental statistical tests: the Student’s t test. However, reliable conventional and objective Bayesian procedures are still essential for routine practice. In this work, we propose an objective and robust Bayesian approach for hypothesis testing for one-sample and two-sample mean comparisons when the assumption of equal variances holds. The newly proposed Bayes factors are based on the intrinsic and Berger robust priors. Additionally, we introduce a corrected version of the Bayesian Information Criterion (BIC), denoted BIC-TESS, which is based on the effective sample size (TESS), for comparing two population means. We studied our developed Bayes factors in several simulation experiments for hypothesis testing. Our methodologies consistently provided strong evidence in favor of the null hypothesis in the case of equal means and variances. Finally, we applied the methodology to the original Gosset sleep data and found strong evidence favoring the hypothesis that the average sleep hours differed between the two treatments. These methodologies exhibit finite sample consistency and demonstrate consistent qualitative behavior, proving reasonably close to each other in practice, particularly for moderate to large sample sizes.

1. Introduction

One of the fundamental topics in statistics revolves around the one-sample population mean and the comparison of two-sample means. The go-to method for addressing this question is typically the Student’s t test [1]. Conducting a hypothesis test for the population mean holds significant importance in the scientific research community and various fields where making inferences about population parameters is pivotal. Frequentists heavily rely on p-values to determine whether or not to reject the null hypothesis [2]. However, p-values, along with significance testing based on fixed $\alpha$-levels, tend to exaggerate evidence against null hypotheses for large sample sizes and lack the operational meaning of a probability [3,4,5]. While the Bayesian approach has gained attention in hypothesis testing and model selection [6,7], its application in essential statistics topics remains somewhat limited [8]. This raises the question: Why is a Bayesian Student’s t test necessary? We argue for two main reasons. Firstly, Bayesian tests provide evidence for a hypothesis of interest that naturally adapts to any sample size. Secondly, the Bayes factor can be easily converted to posterior model probabilities that support one of the hypotheses being tested. Another crucial consideration is that scientific questions often have a Bayesian nature, such as, “What is the probability that these two treatments differ?”.

Bayesian hypothesis testing and model selection have been undergoing extensive development because of recent advances in the creation of “default” Bayes factors that can be used in the absence of substantial subjective prior information [5,9,10,11]. The study in [12] proposed some arguments for the choice of the prior, such as (i) the fact that it is located around zero, (ii) the scale parameter , (iii) the fact that it is symmetric, and (iv) that it should have no moments. Bayes factors are attractive in terms of interpretation as odds, and the direct probability of the posterior model is readily understandable by general users of statistics [13]. Methods based on conjugate priors for the Student’s t test have a long history. Perhaps the most transparent approach for the two-sample Student’s t is in [14]. However, natural conjugate priors do not lead to robust procedures; they have tails that are typically of the same form as the likelihood function and will hence remain influential when the likelihood function is concentrated in the prior tails, which can lead to inconsistency [15]. In particular, the conjugate Bayes factor for comparing two samples based on the Student’s t is finite sample-inconsistent, i.e., it does not go to zero when the estimates go to infinity.

In this work, we propose an objective and robust Bayes factor for testing the hypothesis of one-sample and two-sample means based on the t-statistic. Our Bayes factors can be easily implemented, allowing researchers to determine support for a particular hypothesis. This manuscript proceeds as follows. In Section 2, we derive these objective and robust Bayes factors for one-sample and two-sample scenarios and demonstrate their finite sample consistency. In Section 3, we compare our Bayes factors with existing methodologies under several experimental frameworks. In Section 4, we apply our methodologies to real-life datasets such as the original Gosset sleep data and to comparisons of changes in blood pressure in mice according to their assigned diet. We conclude this work with a discussion in Section 5.

2. Methodology

Statistical inference for the mean (one or two samples) plays an important role in statistics and in many applied fields. For instance, it is very common to test hypotheses about an average or population mean. Suppose that we are comparing two hypotheses, $H_0$ and $H_1$. Suppose that we have available prior densities $\pi_i(\theta_i)$ for each hypothesis, and let $f_i(\mathbf{x} \mid \theta_i)$ be the probability density function under the ith hypothesis. Define the marginal or predictive densities for each hypothesis of interest (or model),

$$m_i(\mathbf{x}) = \int f_i(\mathbf{x} \mid \theta_i)\, \pi_i(\theta_i)\, d\theta_i, \quad i = 0, 1, \tag{1}$$

which are sometimes called the evidence of the ith hypothesis or model. The Bayes factor for comparing $H_0$ to $H_1$ is then given by

$$B_{01} = \frac{m_0(\mathbf{x})}{m_1(\mathbf{x})}. \tag{2}$$

The interpretation of the Bayes factor proceeds as follows. If $B_{01} > 1$, then the evidence is in favor of the null hypothesis, while $B_{01} < 1$ gives evidence in favor of the alternative hypothesis. If prior probabilities of the hypotheses are available, then one can compute their posterior probabilities from the Bayes factor. The posterior probability of $H_0$, given the data $\mathbf{x}$, is

$$P(H_0 \mid \mathbf{x}) = \left[ 1 + \frac{P(H_1)}{P(H_0)}\, B_{10} \right]^{-1}, \tag{3}$$

where $B_{10} = 1/B_{01}$.
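The conversion between a Bayes factor and a posterior probability described above can be sketched in a few lines. This is a minimal illustration, not code from the paper: the function names are hypothetical, and the Bayes factor is assumed to be expressed as the ratio of the marginal under the null to the marginal under the alternative.

```python
def posterior_prob_h0(bf01, prior_h0=0.5):
    """Posterior probability of H0 given the Bayes factor of H0 to H1.

    bf01     -- Bayes factor m0(x) / m1(x); must be non-negative
    prior_h0 -- prior probability of H0 (0.5 means equal prior odds)
    """
    if bf01 < 0 or not 0.0 < prior_h0 < 1.0:
        raise ValueError("bf01 must be >= 0 and prior_h0 must lie in (0, 1)")
    if bf01 == 0:
        return 0.0
    prior_odds_10 = (1.0 - prior_h0) / prior_h0
    # P(H0 | x) = [1 + (P(H1) / P(H0)) * (1 / B01)]^(-1)
    return 1.0 / (1.0 + prior_odds_10 / bf01)


def bf01_from_posterior(post_h0, prior_h0=0.5):
    """Invert the formula: recover B01 from a posterior probability of H0."""
    if not 0.0 < post_h0 < 1.0 or not 0.0 < prior_h0 < 1.0:
        raise ValueError("probabilities must lie strictly between 0 and 1")
    prior_odds_10 = (1.0 - prior_h0) / prior_h0
    return prior_odds_10 * post_h0 / (1.0 - post_h0)


if __name__ == "__main__":
    # With equal prior odds, a Bayes factor of 3 in favor of H0
    # corresponds to a posterior probability of 0.75 for H0.
    print(posterior_prob_h0(3.0))
```

With equal prior probabilities the posterior odds equal the Bayes factor, which is why reporting either quantity carries the same information in that case.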

2.1. One-Sample Mean Hypothesis Testing

A one-sample hypothesis test for the population mean is one of the most fundamental statistics topics, either as an introductory topic or to address research questions. Suppose we have a random sample from a normal distribution, i.e., $X_1, \dots, X_n \sim N(\mu, \sigma^2)$, with an unknown standard deviation $\sigma$. We are interested in testing, for the population mean $\mu$,

$$H_0: \mu = \mu_0 \quad \text{versus} \quad H_1: \mu \neq \mu_0. \tag{4}$$

A Bayesian approach to test this hypothesis is based on the theory of intrinsic priors [16,17]. The authors begin with the noninformative priors for the null and alternative hypotheses, and . After some calculations, the authors showed that the conditional proper intrinsic prior under the alternative hypothesis is given by

One can express . The resulting intrinsic prior under is defined as

The approximate Bayes factors based on the intrinsic prior for a one-sample population mean are

Here, and are the Maximum Likelihood Estimators (MLEs) under . The resulting Bayes factor for the hypothesis in (4) is

where $t = \sqrt{n}\,(\bar{x} - \mu_0)/s$, $\bar{x}$ is the sample mean, and s is the sample standard deviation. Large values of give evidence in favor of the null hypothesis. Also, we can transform these Bayes factors using the natural logarithm scale (), and values above 3 give some evidence in favor of the null hypothesis, while values above 10 give stronger evidence in favor of the null hypothesis; see [13].

This Bayes factor satisfies the finite sample consistency principle. Suppose that we are comparing the alternative hypothesis with the null hypothesis, . As the least squares estimate (and the noncentrality parameter) goes to infinity, so that one becomes sure that is wrong, the Bayes factor of to goes to zero.

Theorem 1.

For a fixed sample size , the Bayes factor based on the intrinsic prior () for the one-sample mean μ is finite sample-consistent.

Proof.

For a fixed sample, , and letting or equivalently , the Bayes factor based on the intrinsic prior goes to 0, i.e.,

The Bayes factor based on the intrinsic prior is finite sample-consistent. □

Robust Bayes Factor for the One-Sample Test for the Mean

Even though the Bayes factor constructed using the intrinsic prior is finite sample-consistent, it is only an approximation. Evidence has been found that priors with flatter tails than those of the likelihood function tend to be fairly robust [18,19]. The robust prior used here was developed in [20]; we call it the Berger robust prior. This prior is hierarchical; by such a choice, we can obtain robustness while keeping the calculations relatively simple, and the computations are exact. This robust prior, denoted , is defined as follows:

- , where

- has a density on .

where p is the rank of the design matrix. Recall that we are interested in testing (4); therefore, under the null hypothesis, the likelihood is in the form

The noninformative prior under the null hypothesis is . The marginal density under the null hypothesis is given by

where , the sum of squares under , and is the gamma function. Similarly, we can obtain the likelihood under the alternative hypothesis :

Here, is the sample mean and is the sum of squares under the alternative. The Berger robust prior will be considered under the alternative hypothesis . The marginal density under is

Computing the ratio of the marginals from (6) and (7), the Bayes factor based on the Berger robust prior is given by

Here, $t = \sqrt{n}\,(\bar{x} - \mu_0)/s$ is the usual t-statistic with $n - 1$ degrees of freedom, where $\bar{x}$ and s are the sample mean and sample standard deviation, respectively.

Theorem 2.

For a fixed sample size , the Bayes factor based on the Berger robust prior () for the one-sample mean μ is finite sample-consistent.

Proof.

For a fixed sample, , and letting , or equivalently , the Bayes factor based on the Berger robust prior goes to 0, i.e.,

The Bayes factor based on the Berger robust prior is finite sample-consistent. □

Unlike the Bayes factor derived with the intrinsic prior, this robust Bayes factor has a closed form. This concludes the derivation of the one-sample Bayes factors based on the intrinsic and robust priors, both of which are finite sample-consistent. We now extend the objective and robust Bayesian approach to the two-sample scenario.

2.2. Two-Sample Mean Hypothesis Test

Another fundamental research question of interest is whether or not two groups are similar. This problem is usually addressed with the two-sample Student’s t test, which compares whether the groups differ in means. Let and let independent of X with unknown. Note that we assume that these two samples arise from normal distributions with possibly different means but equal variances. It is of common interest to determine whether these two samples are equal, or at least that they do not differ in location. To answer this, a hypothesis test for comparing two-sample means is performed, i.e., . For this problem, Ref. [14] proposed the conjugate Bayes factor. This Bayes factor is based on the conjugate prior: Centering the prior assessment on the null hypothesis, i.e., making , is usually a very reasonable choice. Then, the conjugate Bayes factor is simplified as However, this Bayes factor is not finite sample-consistent: as , or ; the does not go to zero, or equivalently, the posterior probability of the null hypothesis does not go to zero. In fact, as , then , where and are the degrees of freedom.
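The lack of finite sample consistency can be checked numerically. The sketch below assumes that the conjugate Bayes factor, with the prior centered on the null, takes the familiar ratio-of-t-densities form in which a normal prior with variance `prior_var` is placed on the standardized mean difference. That form, the effective size `n_delta`, and all names are assumptions made for illustration, since the formula of [14] is not reproduced in this text.

```python
import math


def conjugate_bf01(t, n1, n2, prior_var):
    """Assumed conjugate Bayes factor of H0 to H1 for a two-sample t-statistic.

    Under H0 the statistic follows a central t with nu = n1 + n2 - 2 degrees
    of freedom; under H1 (prior centered at zero) it follows the same t
    distribution inflated by the scale sqrt(1 + n_delta * prior_var).
    """
    nu = n1 + n2 - 2
    n_delta = 1.0 / (1.0 / n1 + 1.0 / n2)
    c2 = 1.0 + n_delta * prior_var  # squared scale inflation under H1
    log_bf = 0.5 * math.log(c2) - 0.5 * (nu + 1) * (
        math.log1p(t * t / nu) - math.log1p(t * t / (nu * c2))
    )
    return math.exp(log_bf)


def conjugate_bf01_limit(n1, n2, prior_var):
    """Limit of the assumed Bayes factor as |t| grows without bound."""
    nu = n1 + n2 - 2
    n_delta = 1.0 / (1.0 / n1 + 1.0 / n2)
    return (1.0 + n_delta * prior_var) ** (-nu / 2.0)


if __name__ == "__main__":
    # Hypothetical sample sizes and prior variance, chosen only for illustration.
    for t in (0.0, 2.0, 10.0, 1e3, 1e6):
        print(t, conjugate_bf01(t, 10, 10, 1.0 / 3.0))
    print("limit:", conjugate_bf01_limit(10, 10, 1.0 / 3.0))
```

Under these assumptions the Bayes factor levels off at a strictly positive value that depends on the prior variance and the degrees of freedom, rather than decaying to zero as the t-statistic grows.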

2.2.1. Intrinsic Bayes Factor for Two-Sample Means

To address the limitation of the conjugate prior, our first approach is based on the theory of intrinsic priors introduced in [16,17]. Similar to the one-sample case, the method is to find a prior that yields, for moderate to large sample sizes, results equivalent to an established method for scaling the intrinsic Bayes factors. The resulting set of equations typically has solutions, at least in the nested hypothesis scenario, which is our case, and has been successfully applied coupled with the intrinsic Bayes factor method. Consider the hypothesis test for the comparison of two population means with unknown and equal variance , . Let and , then and . This transformation leads us to the following design matrix based on the training samples:

where and are vectors of 1’s and 0’s of length k. The parameter of non-centrality can be computed as

which becomes, in the comparison of two means, . Following the general theory of the intrinsic Bayes factor for linear models [16,17], we have that an intrinsic prior is of the following form:

Substitution from using the non-centrality parameter of (9), then the transformation to the conditional of the parameter under the simple test, is . The conditional intrinsic prior for the hypothesis test is

This conditional prior is proper, i.e., , and it satisfies the conditions discussed in [12]. The intrinsic prior, under the alternative hypothesis , is of the form , where , i.e.,

With this framework in place, we can derive the intrinsic Bayes factor to compare two-sample means. We first obtain the marginal density under the null hypothesis . Consider the joint likelihood function of the two samples under the null hypothesis :

Here, is the sample mean of the first group, is the sample mean of the second group, and , are the sums of squares under the null hypothesis. The marginal density using the non-informative prior is computed as

where , where , is the pooled standard deviation, i.e., and . Similarly, the joint likelihood function of the two samples under the alternative hypothesis is given by

The marginal density using the intrinsic prior defined in (11) is given by

As in the one-sample framework, this Bayes factor can be approximated using the non-informative prior in the asymptotic result as

Using (12) and (14), we can compute :

where , is the pooled estimate of the variance, and . Let and be the corresponding maximum likelihood estimators (MLEs). Let and ; where is the pooled variance estimate and . We can express the in terms of the t-statistic. Then, the approximate intrinsic Bayes factor can be obtained by

Here, and is the hyperbolic cotangent function defined as .

Theorem 3.

For a fixed sample size , the Bayes factor based on the intrinsic prior () for the comparison of two population means is finite sample-consistent.

Proof.

For a fixed sample, , and letting , or equivalently , the Bayes factor based on the intrinsic prior goes to 0, i.e.,

The Bayes factor based on the intrinsic prior is finite sample-consistent. □

2.2.2. Robust Bayes Factor for the Comparison of Two-Sample Means

Consider observations of a random sample from group 1 and group 2 of size and , respectively. We assume these groups have a common variance . The model of interest in this case is , with , for and . We want to compare against . Further, consider the constraint that ; then, the design matrix can be written as

This leads us to consider the following hypothesis, . The reference priors under the null hypothesis and the alternative hypothesis are and . First, we proceed to find the marginal density under . Consider the joint likelihood function under the null hypothesis :

Here, and , is the sample mean of the ith group, and is the sum of squares under the null hypothesis. The marginal density under the null hypothesis is given by

where . Here, is the pooled sample estimate of the variance, , and . For the alternative hypothesis , we first consider the joint likelihood of group 1 and group 2:

The marginal density is given by

Here, . The robust Bayes factor is obtained by computing the ratio of the marginal densities of (17) and (18):

To finish the calculation of the robust Bayes factor , the term has to be defined. We propose using the effective sample size (TESS) of [21]. The first factor , and the second factor b is ; then, . The derivation of TESS is given in Appendix A.1.

Theorem 4.

For a fixed sample size n ≥ 4, the Bayes factor based on the robust prior () for the comparison of two population means is finite sample-consistent.

Proof.

For a fixed sample, , and letting , or equivalently , the Bayes factor based on the Berger robust prior goes to 0, i.e.,

The Bayes factor based on the Berger robust prior is finite sample-consistent. □

The Berger robust prior yields the following (exact) expression for the correction of the main term (for group i):

Making the change of variables and then taking the Jacobian,

the conditional intrinsic prior of Equation (10) is exactly recovered; . This establishes a correspondence between the intrinsic and Berger robust priors for the Student’s t test.

2.2.3. The Effective Sample Size Bayesian Information Criterion (BIC-TESS)

Our final Bayes factor for comparing two-sample means is a variation of the Bayesian Information Criterion (BIC) of [22]. The BIC is a popular method to determine the best model in a set of competing models. However, in comparing two-sample means, the BIC uses only the total sample size and does not account for how the information is split between the two groups. Here, we propose replacing the sample size n with TESS. This yields what we call the corrected BIC, or BIC-TESS. It can be shown that the BIC with TESS is

where is defined by [11]. Derivation of TESS is in Appendix A.1. If we have a balanced situation, where , the BIC-TESS is similar to the regular BIC. If the situation is unbalanced, the BIC-TESS is stabilized, since as , the .
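To make the role of the sample size in the penalty concrete, the following sketch computes the usual BIC approximation to twice the log Bayes factor for the equal-variance two-sample comparison, written in terms of the pooled t-statistic, with the sample size in the penalty term left as an argument. It rests on two assumptions: that the residual sums of squares satisfy SSE0/SSE1 = 1 + t²/ν with ν = n1 + n2 − 2, which holds for the pooled two-sample model, and that TESS enters only through the log penalty. The exact BIC-TESS expression and the value of TESS are not reproduced here, so `penalty_size` must be supplied from Appendix A.1, and the function name is hypothetical.

```python
import math


def two_log_bf01_bic(t, n1, n2, penalty_size=None):
    """BIC approximation to 2 * log(B01) for the pooled two-sample comparison.

    BIC_k = n * log(SSE_k / n) + p_k * log(penalty_size), and H1 has one more
    mean parameter than H0, so
        2 * log(B01) ~ BIC_1 - BIC_0 = log(penalty_size) - n * log(1 + t^2 / nu).

    penalty_size -- sample size used in the penalty; defaults to n = n1 + n2
                    (classical BIC). Pass an effective sample size instead to
                    mimic a corrected criterion (an assumption of this sketch).
    """
    n = n1 + n2
    nu = n - 2
    if penalty_size is None:
        penalty_size = n
    return math.log(penalty_size) - n * math.log1p(t * t / nu)


if __name__ == "__main__":
    # Hypothetical inputs: t-statistic 2.5 with 8 and 40 observations per group.
    classical = two_log_bf01_bic(2.5, 8, 40)
    smaller_penalty = two_log_bf01_bic(2.5, 8, 40, penalty_size=10.0)
    print(classical, smaller_penalty)
```

Because the likelihood term is unchanged, any effective sample size smaller than n lowers the penalty and therefore lowers the evidence for the null by the same amount on the 2 log scale, while the criterion still goes to minus infinity as |t| grows.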

Theorem 5.

For a fixed sample size , the corrected BIC () for the two-sample mean μ is finite sample-consistent.

Proof.

For a fixed sample, , and letting , or equivalently , the Bayesian Information Criterion constructed with TESS goes to 0, i.e.,

The corrected Bayesian Information Criterion is finite sample-consistent. □

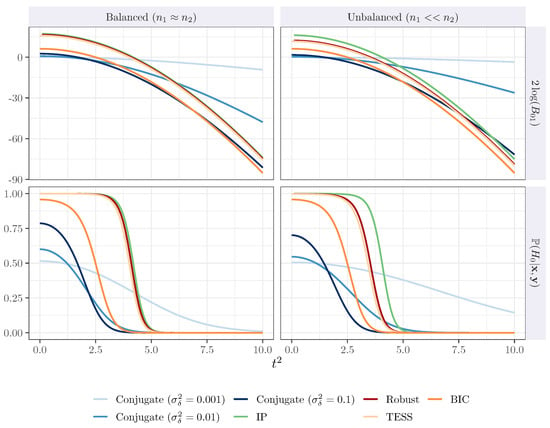

In Figure 1, we compare the asymptotic behavior of the Bayes factors and the posterior probability of the null hypothesis when the samples are balanced () and unbalanced (). The Bayes factor based on the Berger robust prior (dark red) is very close in the range of evidence to the intrinsic Bayes factor (green) and the BIC-TESS (light orange). The robust Bayes factor, the intrinsic Bayes factor, and the BIC-TESS are relatively close when the situation is balanced. In the unbalanced scenario, the robust Bayes factor and the BIC-TESS remain relatively close, while the intrinsic Bayes factor slightly increases. The conjugate Bayes factor (blue) is represented with different values of the prior variance ; a darker color means a higher value of the prior variance. Recall that the conjugate Bayes factor is not finite sample-consistent, and its behavior depends on the choice of .

Figure 1.

Results in terms of and posterior probability for the finite sample consistency.

3. Simulation Experiments

Experiments for the One- and Two-Sample Mean Comparisons

We generated 500 datasets from random samples taken from a normal distribution, Student’s t distribution with one degree of freedom, and gamma distribution. For each of these distributions, the mean and standard deviation values were set to and . The second group was created with a combination of several parameters for the location; the mean values were , and for the standard deviation of the second group, . In the case of the Student’s t distribution, both groups were simulated with degrees of freedom. The simulated gamma samples were obtained using the method of moments for the shape parameter, with , and the scale parameter, with , for .
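A data-generation step of this kind can be sketched with the Python standard library. Several choices below are assumptions, because the specific values are not shown in this text: the sample sizes and the mean and standard deviation pairs are placeholders, the Student’s t samples with one degree of freedom are shifted and scaled by the stated location and scale, and the gamma parameters use the standard moment identities shape = mean²/sd² and scale = sd²/mean. All function names are hypothetical.

```python
import math
import random


def gamma_params_from_moments(mean, sd):
    """Method-of-moments shape and scale for a gamma with given mean and sd."""
    if mean <= 0 or sd <= 0:
        raise ValueError("mean and sd must be positive for a gamma distribution")
    return (mean / sd) ** 2, sd ** 2 / mean


def draw_sample(dist, n, mean, sd, rng):
    """Draw n observations from one of the three simulation distributions."""
    if dist == "normal":
        return [rng.gauss(mean, sd) for _ in range(n)]
    if dist == "t1":
        # A Student's t with one degree of freedom is a standard Cauchy,
        # generated here by inverting its distribution function.
        return [mean + sd * math.tan(math.pi * (rng.random() - 0.5)) for _ in range(n)]
    if dist == "gamma":
        shape, scale = gamma_params_from_moments(mean, sd)
        return [rng.gammavariate(shape, scale) for _ in range(n)]
    raise ValueError(f"unknown distribution: {dist}")


def simulate_datasets(dist, n1, n2, group1, group2, n_datasets=500, seed=0):
    """Return a list of (sample1, sample2) pairs; group1/group2 are (mean, sd)."""
    rng = random.Random(seed)
    datasets = []
    for _ in range(n_datasets):
        x = draw_sample(dist, n1, group1[0], group1[1], rng)
        y = draw_sample(dist, n2, group2[0], group2[1], rng)
        datasets.append((x, y))
    return datasets


if __name__ == "__main__":
    # Placeholder settings: equal means and variances, ten observations per group.
    data = simulate_datasets("gamma", 10, 10, (2.0, 1.0), (2.0, 1.0))
    print(len(data), len(data[0][0]), len(data[0][1]))
```

Fixing the seed makes each scenario reproducible, so the same 500 datasets can be passed to every Bayes factor being compared.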

We compared our methodologies with several Bayes factors used when comparing two population means, displayed in Table 1. is the classical Bayesian Information Criterion (BIC) of [22]; () is based on the Zellner and Siow prior [23]; the two-sample Student’s t Bayes factor of [14] is based on the conjugate prior with ; we also include the arithmetic Bayes factor () of [24] and the Bayes factor () of [12] for the comparison of two-sample means with equal variances. One set of these Bayes factors, the BIC of Schwarz and the Zellner and Siow Bayes factor, depends only on the sample size n. The other set, based on the conjugate, intrinsic, Berger (here called robust), and modified Jeffreys priors, depends on the term . In our experiments, we do not consider the constant for , since we believe it satisfies the condition that the samples arise from the same distribution; for more details about the use of the constant , see [12]. We also studied these Bayes factors in unbalanced situations. Heavily unbalanced samples are interesting not only from a theoretical point of view but also because they are often observed in practice in observational studies; the results of these are displayed in Figure A1, Figure A2 and Figure A3.

Table 1.

Bayes factors for the one- and two-sample mean comparisons based on the Student’s t statistic. The third column applies only to the two-sample comparison and is limiting when and .

Performance was compared using twice the natural logarithm of the Bayes factors for comparing the null hypothesis () against the alternative (). This transformation allows the interpretation to be on the same scale as the deviance and likelihood ratio test statistics, as discussed in [13].
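The transformation and the reading of the resulting scale can be sketched as follows. The cut-offs of 2, 6, and 10 and their labels (weak, positive, strong) are the ones used for the reference lines in the figures of this section; the label for values below 2, the symmetric treatment of negative values as evidence for the alternative, and the function names are assumptions of this sketch.

```python
import math


def two_log_bf(bf01):
    """Twice the natural logarithm of a (positive) Bayes factor."""
    if bf01 <= 0:
        raise ValueError("the Bayes factor must be positive")
    return 2.0 * math.log(bf01)


def evidence_label(two_log_bf01):
    """Return (favored hypothesis, strength) for a value on the 2 log scale."""
    strength = abs(two_log_bf01)
    if strength >= 10:
        label = "strong"
    elif strength >= 6:
        label = "positive"
    elif strength >= 2:
        label = "weak"
    else:
        # Below the lowest reference line: treated here as no clear preference.
        return ("neither", "negligible")
    favored = "H0" if two_log_bf01 > 0 else "H1"
    return (favored, label)


if __name__ == "__main__":
    for bf in (0.01, 0.2, 1.0, 5.0, 200.0):
        value = two_log_bf(bf)
        print(bf, round(value, 2), evidence_label(value))
```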

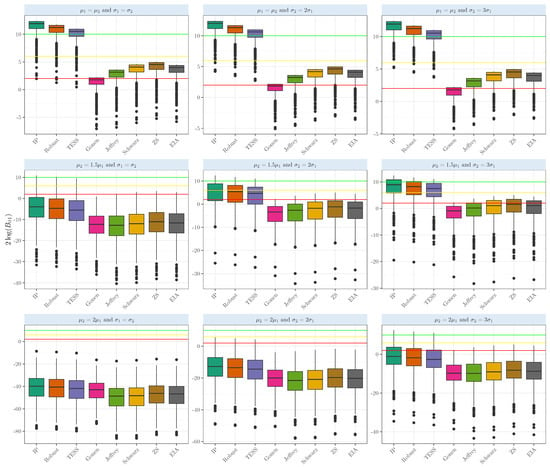

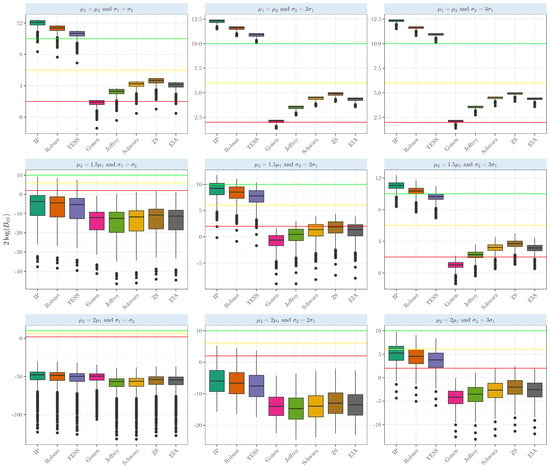

Figure 2 displays the results for the evidence based on the normal distributions when testing whether two-sample means are equal (). The red line represents the cut-off for 10 (strong evidence), the yellow line for 6 (positive evidence), and the green for 2 (weak evidence). In the true case, when the means are equal, the Bayes factors based on the intrinsic prior and robust prior show strong evidence in favor of the null hypothesis. The average based on the intrinsic prior shows strong evidence in favor of the null hypothesis , while the Bayes factor based on the robust prior gives strong evidence in favor of the true case , all above the red line. BIC-TESS also strongly supports the true case . The other Bayes factors provide positive evidence for the true case, with averages ranging from 2.54 to 3.93. Even when the means were equal and the samples had larger variance (), our objective and robust Bayes factors provided strong evidence in favor of the true case, with the average above 10. The intrinsic Bayes factor and the robust prior were above 90%, showing either strong or very strong evidence in favor of the null hypothesis when the means were equal; see Table A2 for a detailed comparison.

Figure 2.

Evidence in the scale when comparing the population means of two samples that arise from a normal distribution with several means and variances with equal sizes .

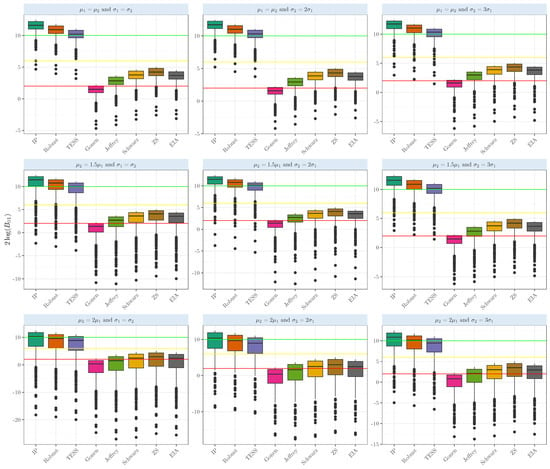

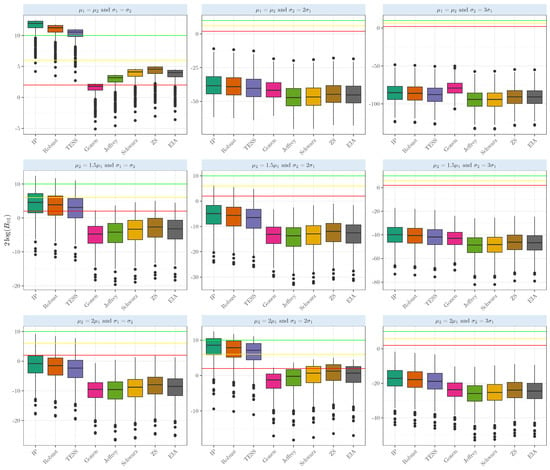

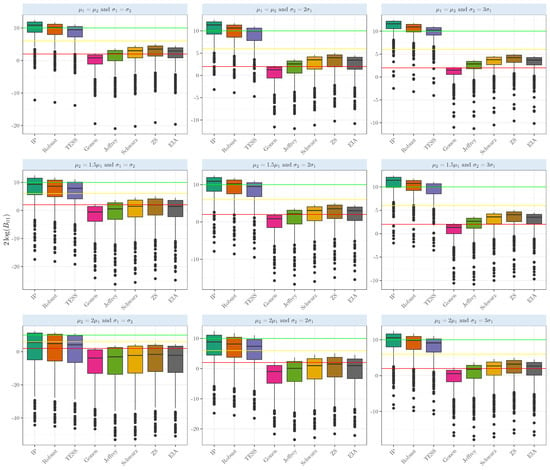

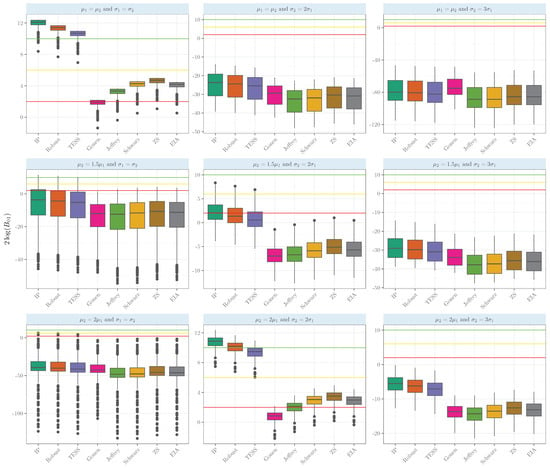

For the Student’s t random samples, Figure 3 shows the results of testing whether two-sample means are equal (). When the means are equal, the Bayes factors based on the intrinsic prior and robust prior show strong evidence in favor of the null hypothesis. The average based on the intrinsic prior, robust prior, and BIC-TESS shows strong evidence in favor of the null hypothesis (averages above 10), with values of , and ; dispersion was relatively low, ranging from 1.01 to 1.03. The competing Bayes factors provide slightly positive evidence for the true case, with averages ranging from 2.65 to 4.04. It is interesting to see that in the case of , our Bayes factor gave positive evidence above the yellow line but was very variable; the sample standard deviation ranged from 4.98 to 5.03. Finally, in the case of gamma samples, our Bayes factors gave strong evidence only when the means and the variances were equal. Departing from any of these conditions gave strong evidence that the means were unequal; see Figure 4. For more details about the numerical performance in the simulations, see Table A1.

Figure 3.

Evidence in the scale when comparing the population means of two samples that arise from a Student’s t with one degree of freedom with several means and variances with equal sizes .

Figure 4.

Evidence in the scale when comparing the population means of two samples that arise from a gamma distribution with several shapes and scales with equal sizes .

4. Application to Real Datasets

In this section, we apply the proposed one- and two-sample Bayes factors based on the intrinsic and Berger robust priors, together with the BIC-TESS, all of which are based on the Student’s t statistic.

4.1. Gosset Original Dataset

We first consider the century-old original Student’s t sleep data from [1,25], which still raises interesting discussion; see [26,27]. In this study, the number of hours of sleep under both drugs (Dextro and Laevo) was recorded for each patient. The difference in hours was recorded to determine effectiveness, and the average number of hours of sleep gained by using each drug (Dextro and Laevo) was measured. The authors concluded that, in usual doses, Laevo was soporific, but Dextro was not. This analysis is treated as a paired sample, since it compares the sleep hours between treatments for the same patients. A paired-sample comparison reduces to the one-sample problem applied to the within-patient differences. The hypothesis of interest is versus ; the test statistic is with a p-value of 0.002. At the 5% significance level, we can conclude that there is a difference in the average sleep hours between Laevo and Dextro.

However, the Gosset original dataset has not previously been analyzed using an objective and robust Bayesian approach. The value of the test statistic is the same as before, with . The () was computed for the intrinsic and robust Bayes factors, along with the associated posterior probabilities (). The and are positive, indicating strong evidence that the average sleep hours are different. Further, the posterior probability based on the intrinsic prior is 0.949, and the posterior probability based on the Berger robust prior is 0.952. Both posterior probabilities are above 90%, suggesting strong evidence favoring a difference in average sleep hours.

This dataset is treated as a paired sample, since the recorded sleep hours under both treatments belong to the same participant. However, the treatments, Dextro and Laevo, might need to be considered independently. If these are considered independently, then a two-sample framework arises. We are interested in comparing the sleep hours when receiving Laevo versus when receiving Dextro. Assuming equal variances between Laevo and Dextro, the hypothesis of interest is versus , where is the average sleep hours when receiving Laevo and is the average sleep hours when receiving Dextro. The two-sample test statistic is with a p-value of 0.079. At the 5% significance level, we fail to reject the null hypothesis of no difference in the average sleep hours when using Laevo versus Dextro. In our Bayesian approach, and , indicating weak evidence that the average number of sleep hours differs between Laevo and Dextro. Both posterior probabilities are above 15%, suggesting weak evidence that the average number of sleep hours differs when using Laevo and Dextro.
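The two t-statistics used in this subsection can be recomputed with a short script. The values below are transcribed from the widely distributed version of the sleep data (the one shipped as the `sleep` dataset in R); treating them as the data of [1,25] is an assumption that should be checked against the original table, and the arrays are deliberately named `drug_a` and `drug_b` rather than asserting which column is Dextro or Laevo. The helper names are hypothetical.

```python
import math
import statistics

# Extra hours of sleep for the same ten patients under two drugs
# (assumed transcription; verify against the original source).
drug_a = [0.7, -1.6, -0.2, -1.2, -0.1, 3.4, 3.7, 0.8, 0.0, 2.0]
drug_b = [1.9, 0.8, 1.1, 0.1, -0.1, 4.4, 5.5, 1.6, 4.6, 3.4]


def one_sample_t(x, mu0=0.0):
    """One-sample t-statistic and its degrees of freedom (n - 1)."""
    n = len(x)
    t = math.sqrt(n) * (statistics.mean(x) - mu0) / statistics.stdev(x)
    return t, n - 1


def pooled_two_sample_t(x, y):
    """Equal-variance two-sample t-statistic and degrees of freedom (n1 + n2 - 2)."""
    n1, n2 = len(x), len(y)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * statistics.variance(x)
                  + (n2 - 1) * statistics.variance(y)) / df
    se = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    return (statistics.mean(x) - statistics.mean(y)) / se, df


# Paired analysis: a one-sample test on the within-patient differences.
differences = [b - a for a, b in zip(drug_a, drug_b)]
t_paired, df_paired = one_sample_t(differences)

# Independent-groups analysis with a pooled variance estimate.
t_two, df_two = pooled_two_sample_t(drug_b, drug_a)

if __name__ == "__main__":
    print(f"paired:     t = {t_paired:.3f} on {df_paired} df")
    print(f"two-sample: t = {t_two:.3f} on {df_two} df")
```

With this transcription, the paired statistic is about 4.06 on 9 degrees of freedom and the pooled two-sample statistic is about 1.86 on 18 degrees of freedom. The same mean difference yields a much smaller statistic once the pairing is ignored, because the between-patient variability then enters the standard error.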

4.2. Induced Hypertension on Mice According to Diet

Our second application uses the data from [28], which were analyzed in a Bayesian framework using intrinsic priors in [24]. In this study, the researchers were interested in how intermittent feeding affected the blood pressure of rats. The treatment group consisted of eight rats fed intermittently for weeks, and at the final period, the rats’ blood pressure measurements were taken. A second group of seven rats fed in the usual way served as the control group, and their blood pressure was also measured. The hypothesis of interest is that the average blood pressure differs between rats fed intermittently and those on their usual diet, i.e., versus . At the 5% significance level, with a p-value , one can conclude that there exists a difference in the mean blood pressure level according to feeding style.

The study in Ref. [24] computed the expected arithmetic Bayes factor that favors the alternative hypothesis with , providing support that the average blood pressure measurements differ based on diet. Notably, the Bayes factors based on the intrinsic priors and robust priors yield negative values, and , respectively, indicating evidence against . However, the corresponding posterior probabilities () are and , suggesting weak evidence for the alternative hypothesis that the means are different. The corrected BIC-TESS suggests weaker evidence against with a and a posterior probability of . In contrast, the conjugate and , indicating very weak evidence in favor of . The associated posterior probability is 0.681.

We then removed the extreme observation in the intermittent group (115) and repeated the analysis. The , suggesting evidence in favor of , while the posterior probability of indicates a moderate level of confidence in this conclusion. The Bayes factor constructed with the Berger robust prior exhibits a higher , along with the posterior probability of , indicating stronger support that the average blood pressure differs by feeding type. TESS models present even higher , respectively, with corresponding posterior probabilities of 0.926, indicating substantial evidence for .

5. Discussion

In this work, we proposed objective and robust Bayes factors for the one-sample and two-sample mean comparisons. These newly proposed Bayes factors are finite sample-consistent. Both the exact and approximate forms of the Bayes factors can be easily implemented using any open-source or commercial software. Another advantage of using Bayes factors is that the posterior probabilities of the hypotheses are easily interpretable. We reanalyzed the original study by [1] and the comparison of blood pressure in rats according to different feeding types. In the sleep data, our objective and robust Bayes factors showed strong evidence that the average number of sleep hours differed between Laevo and Dextro. In the blood pressure application, we found only weak evidence with the complete dataset that the averages differed according to diet, whereas after removing a potential extreme value, we found strong evidence that the means differed. This might occur because the assumption of equal variances might not hold. Even when the samples have equal means, departing from the assumption of equal variances can lead to evidence in favor of the wrong hypothesis. An extension that might alleviate this issue is to derive an objective and robust Bayes factor for the Behrens–Fisher problem, i.e., unequal variances for both groups. Also, the Bayes factor based on the intrinsic prior depends on the maximum likelihood estimate (MLE); perhaps robust estimates can be considered, although a modified test statistic might arise. Another possible extension is to develop an objective Bayes factor for the hypothesis of several equal means; this corresponds to the analysis of variance (ANOVA) in the frequentist setting.

Author Contributions

Conceptualization, I.A.A.-R. and L.R.P.-G.; methodology, I.A.A.-R. and L.R.P.-G.; software, I.A.A.-R.; validation, I.A.A.-R. and L.R.P.-G.; formal analysis, I.A.A.-R. and L.R.P.-G.; writing—original draft preparation, I.A.A.-R. and L.R.P.-G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available in [1,24].

Acknowledgments

The second author thanks Jim Berger for the fundamental discussions about the subject of this article.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| TESS | The effective sample size |
| BIC | Bayesian Information Criterion |
| | Bayes factor based on the intrinsic prior for measuring vs. |
| | Bayes factor based on the Berger robust prior for measuring vs. |
| | Corrected BIC using the effective sample size for measuring vs. |
| | Bayes factor based on Jeffreys’s prior for measuring vs. |
| | Bayes factor based on the conjugate prior for measuring vs. |
| | Bayes factor based on the Zellner and Siow prior for measuring vs. |
| | Bayes factor based on the expected arithmetic intrinsic prior for measuring vs. |

Appendix A

Appendix A.1. Calculation of the Effective Sample Size

We suggest using the effective sample size of [21] to define the robust Bayes factor and BIC-TESS. The effective sample size for the parameter , . Let be the design matrix:

where . Let be the second column of the design matrix , and let . Further, let , where is an identity matrix of size n. It follows from the definition of the effective sample size for the original that

Since , . Then, the final expression of the effective sample size is obtained. The definition of the unit information is and for b,

The factors b and d will be defined as

This last calculation defines the factors b and d for the Robust Bayesian Student’s t test, .

Appendix A.2. Simulation Experiments

In the two-sample framework, we generated 500 datasets in which the first group was a random sample from a normal distribution with parameters ; the second group was created with a combination of several parameters for the location. The mean values were for the standard deviation . In the case of the Student’s t with degrees of freedom, the gamma distribution was simulated using the method of moments for the shape parameter and scale parameter for . We reported twice the natural logarithm of the Bayes factors for comparing the null hypothesis () against the alternative (). This transformation puts the evidence on the same scale as deviance and likelihood ratio test statistics; see Ref. [13] for a deeper discussion.
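
A simulation of this kind can be sketched in a few lines of Python. The sketch below is only illustrative: the sample sizes, means, standard deviation, and seed are placeholder assumptions, and the function names are hypothetical. As the evidence measure it uses the standard Schwarz (BIC) approximation, 2 log BF01 ≈ log n − n log(1 + t²/(n − 2)), which follows from the BIC difference between the common-mean and separate-means normal models; it is not the intrinsic, robust, or BIC-TESS Bayes factor proposed in this work. The gamma parameters use the usual method-of-moments relations, shape = mean²/variance and scale = variance/mean.

```python
import math
import random
import statistics


def gamma_moments(mean, sd):
    """Method-of-moments gamma parameters (shape, scale) for a given mean and sd."""
    var = sd ** 2
    return mean ** 2 / var, var / mean


def pooled_t(x, y):
    """Two-sample Student's t statistic with a pooled variance estimate."""
    n1, n2 = len(x), len(y)
    sp2 = ((n1 - 1) * statistics.variance(x)
           + (n2 - 1) * statistics.variance(y)) / (n1 + n2 - 2)
    return (statistics.fmean(x) - statistics.fmean(y)) / math.sqrt(sp2 * (1 / n1 + 1 / n2))


def two_log_bf01_schwarz(x, y):
    """Schwarz (BIC) approximation to 2*log(BF01) for H0: equal means."""
    n = len(x) + len(y)
    t = pooled_t(x, y)
    return math.log(n) - n * math.log1p(t * t / (n - 2))


def simulate(n_rep=500, n1=30, n2=30, base_mean=5.0, delta=0.0, sd=1.0,
             family="normal", seed=2024):
    """Return n_rep values of 2*log(BF01); every default is a placeholder."""
    rng = random.Random(seed)

    def draw(mean, size):
        if family == "normal":
            return [rng.gauss(mean, sd) for _ in range(size)]
        if family == "gamma":
            shape, scale = gamma_moments(mean, sd)
            return [rng.gammavariate(shape, scale) for _ in range(size)]
        raise ValueError(f"unknown family: {family}")

    return [two_log_bf01_schwarz(draw(base_mean, n1), draw(base_mean + delta, n2))
            for _ in range(n_rep)]


if __name__ == "__main__":
    for delta in (0.0, 0.5, 1.0):
        vals = simulate(delta=delta)
        print(delta, round(statistics.fmean(vals), 2), round(statistics.stdev(vals), 2))
```

Positive values favor the null hypothesis and negative values favor the alternative. Because the logarithmic term is never negative, this approximation can never exceed log n in favor of the null, which bounds the support the Schwarz criterion can give to equal means at a fixed sample size.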

Table A1.

Summary statistics (mean ± standard deviation) for the balanced sample ( of the simulation experiments for the intrinsic priors (IP), Berger robust prior (Robust), BIC based on the effective sample size (TESS), conjugate, Jeffreys’s, Schwarz, Zellner and Siow (ZS), and the expected arithmetic intrinsic prior (EIA) of [24].

| Normal | IP | |||||||||

| Robust | ||||||||||

| TESS | ||||||||||

| Conjugate | ||||||||||

| Jeffrey’s | ||||||||||

| Schwarz’s | ||||||||||

| ZS | ||||||||||

| EIA | ||||||||||

| Student-t(1) | IP | |||||||||

| Robust | ||||||||||

| TESS | ||||||||||

| Conjugate | ||||||||||

| Jeffrey’s | ||||||||||

| Schwarz’s | ||||||||||

| ZS | ||||||||||

| EIA | ||||||||||

| Gamma | IP | |||||||||

| Robust | ||||||||||

| TESS | ||||||||||

| Conjugate | ||||||||||

| Jeffrey’s | ||||||||||

| Schwarz | ||||||||||

| ZS | ||||||||||

| EIA |

Table A2.

Frequency distribution for the balanced framework ( of twice the natural logarithm of the Bayes factor for normal random variables, classified on the scale proposed by [13]. Intrinsic priors (IP), Berger robust prior (Robust), BIC based on the effective sample size (TESS), conjugate, Jeffreys’s, Schwarz, Zellner and Siow (ZS), and the expected arithmetic intrinsic prior (EIA) of [24].

| | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| IP | 434 (86.8%) | 56 (11.2%) | 9 (1.8%) | 1 (0.2%) | 0 (0%) | 439 (87.8%) | 57 (11.4%) | 4 (0.8%) | 0 (0%) | 0 (0%) | 438 (87.6%) | 60 (12%) | 2 (0.4%) | 0 (0%) | 0 (0%) |

| Robust | 400 (80%) | 85 (17%) | 13 (2.6%) | 2 (0.4%) | 0 (0%) | 407 (81.4%) | 85 (17%) | 8 (1.6%) | 0 (0%) | 0 (0%) | 402 (80.4%) | 93 (18.6%) | 5 (1%) | 0 (0%) | 0 (0%) |

| TESS | 339 (67.8%) | 141 (28.2%) | 17 (3.4%) | 3 (0.6%) | 0 (0%) | 344 (68.8%) | 144 (28.8%) | 12 (2.4%) | 0 (0%) | 0 (0%) | 329 (65.8%) | 164 (32.8%) | 7 (1.4%) | 0 (0%) | 0 (0%) |

| Conjugate | 0 (0%) | 0 (0%) | 171 (34.2%) | 264 (52.8%) | 65 (13%) | 0 (0%) | 0 (0%) | 200 (40%) | 241 (48.2%) | 59 (11.8%) | 0 (0%) | 0 (0%) | 182 (36.4%) | 261 (52.2%) | 57 (11.4%) |

| Jeffrey | 0 (0%) | 0 (0%) | 392 (78.4%) | 73 (14.6%) | 35 (7%) | 0 (0%) | 0 (0%) | 402 (80.4%) | 68 (13.6%) | 30 (6%) | 0 (0%) | 0 (0%) | 396 (79.2%) | 78 (15.6%) | 26 (5.2%) |

| Schwarz | 0 (0%) | 0 (0%) | 437 (87.4%) | 40 (8%) | 23 (4.6%) | 0 (0%) | 0 (0%) | 444 (88.8%) | 39 (7.8%) | 17 (3.4%) | 0 (0%) | 0 (0%) | 445 (89%) | 45 (9%) | 10 (2%) |

| ZS | 0 (0%) | 0 (0%) | 452 (90.4%) | 30 (6%) | 18 (3.6%) | 0 (0%) | 0 (0%) | 458 (91.6%) | 31 (6.2%) | 11 (2.2%) | 0 (0%) | 0 (0%) | 459 (91.8%) | 34 (6.8%) | 7 (1.4%) |

| EIA | 0 (0%) | 0 (0%) | 435 (87%) | 42 (8.4%) | 23 (4.6%) | 0 (0%) | 0 (0%) | 444 (88.8%) | 39 (7.8%) | 17 (3.4%) | 0 (0%) | 0 (0%) | 444 (88.8%) | 46 (9.2%) | 10 (2%) |

| | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative |

| IP | 2 (0.4%) | 19 (3.8%) | 67 (13.4%) | 48 (9.6%) | 364 (72.8%) | 69 (13.8%) | 184 (36.8%) | 110 (22%) | 54 (10.8%) | 83 (16.6%) | 176 (35.2%) | 195 (39%) | 87 (17.4%) | 21 (4.2%) | 21 (4.2%) |

| Robust | 1 (0.2%) | 12 (2.4%) | 60 (12%) | 45 (9%) | 382 (76.4%) | 50 (10%) | 176 (35.2%) | 122 (24.4%) | 52 (10.4%) | 100 (20%) | 137 (27.4%) | 214 (42.8%) | 97 (19.4%) | 23 (4.6%) | 29 (5.8%) |

| TESS | 0 (0%) | 11 (2.2%) | 45 (9%) | 45 (9%) | 399 (79.8%) | 24 (4.8%) | 167 (33.4%) | 137 (27.4%) | 46 (9.2%) | 126 (25.2%) | 76 (15.2%) | 248 (49.6%) | 116 (23.2%) | 26 (5.2%) | 34 (6.8%) |

| Conjugate | 0 (0%) | 0 (0%) | 0 (0%) | 2 (0.4%) | 498 (99.6%) | 0 (0%) | 0 (0%) | 8 (1.6%) | 63 (12.6%) | 429 (85.8%) | 0 (0%) | 0 (0%) | 36 (7.2%) | 147 (29.4%) | 317 (63.4%) |

| Jeffrey | 0 (0%) | 0 (0%) | 1 (0.2%) | 5 (1%) | 494 (98.8%) | 0 (0%) | 0 (0%) | 50 (10%) | 77 (15.4%) | 373 (74.6%) | 0 (0%) | 0 (0%) | 132 (26.4%) | 128 (25.6%) | 240 (48%) |

| Schwarz | 0 (0%) | 0 (0%) | 2 (0.4%) | 9 (1.8%) | 489 (97.8%) | 0 (0%) | 0 (0%) | 77 (15.4%) | 97 (19.4%) | 326 (65.2%) | 0 (0%) | 0 (0%) | 186 (37.2%) | 117 (23.4%) | 197 (39.4%) |

| ZS | 0 (0%) | 0 (0%) | 3 (0.6%) | 9 (1.8%) | 488 (97.6%) | 0 (0%) | 0 (0%) | 101 (20.2%) | 96 (19.2%) | 303 (60.6%) | 0 (0%) | 0 (0%) | 228 (45.6%) | 99 (19.8%) | 173 (34.6%) |

| EIA | 0 (0%) | 0 (0%) | 2 (0.4%) | 9 (1.8%) | 489 (97.8%) | 0 (0%) | 0 (0%) | 74 (14.8%) | 103 (20.6%) | 323 (64.6%) | 0 (0%) | 0 (0%) | 185 (37%) | 119 (23.8%) | 196 (39.2%) |

| | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative |

| IP | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 11 (2.2%) | 13 (2.6%) | 476 (95.2%) | 12 (2.4%) | 52 (10.4%) | 109 (21.8%) | 53 (10.6%) | 274 (54.8%) |

| Robust | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 10 (2%) | 9 (1.8%) | 481 (96.2%) | 5 (1%) | 46 (9.2%) | 102 (20.4%) | 54 (10.8%) | 293 (58.6%) |

| TESS | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 5 (1%) | 12 (2.4%) | 483 (96.6%) | 5 (1%) | 38 (7.6%) | 90 (18%) | 53 (10.6%) | 314 (62.8%) |

| Conjugate | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 1 (0.2%) | 11 (2.2%) | 488 (97.6%) |

| Jeffrey | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 5 (1%) | 19 (3.8%) | 476 (95.2%) |

| Schwarz | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 13 (2.6%) | 21 (4.2%) | 466 (93.2%) |

| ZS | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 18 (3.6%) | 25 (5%) | 457 (91.4%) |

| EIA | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 13 (2.6%) | 21 (4.2%) | 466 (93.2%) |

Table A3.

Frequency distribution for the balanced framework ( of twice the natural logarithm of the Bayes factor for Student’s t random variables, classified on the scale proposed by [13]. Intrinsic priors (IP), Berger robust prior (Robust), BIC based on the effective sample size (TESS), conjugate, Jeffreys’s, Schwarz, Zellner and Siow (ZS), and the expected arithmetic intrinsic prior (EIA) of [24].

| | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| IP | 456 (91.2%) | 41 (8.2%) | 3 (0.6%) | 0 (0%) | 0 (0%) | 455 (91%) | 44 (8.8%) | 1 (0.2%) | 0 (0%) | 0 (0%) | 448 (89.6%) | 49 (9.8%) | 3 (0.6%) | 0 (0%) | 0 (0%) |

| Robust | 410 (82%) | 87 (17.4%) | 3 (0.6%) | 0 (0%) | 0 (0%) | 416 (83.2%) | 82 (16.4%) | 2 (0.4%) | 0 (0%) | 0 (0%) | 410 (82%) | 83 (16.6%) | 7 (1.4%) | 0 (0%) | 0 (0%) |

| TESS | 310 (62%) | 187 (37.4%) | 3 (0.6%) | 0 (0%) | 0 (0%) | 320 (64%) | 178 (35.6%) | 2 (0.4%) | 0 (0%) | 0 (0%) | 310 (62%) | 181 (36.2%) | 8 (1.6%) | 1 (0.2%) | 0 (0%) |

| Conjugate | 0 (0%) | 0 (0%) | 131 (26.2%) | 326 (65.2%) | 43 (8.6%) | 0 (0%) | 0 (0%) | 156 (31.2%) | 301 (60.2%) | 43 (8.6%) | 0 (0%) | 0 (0%) | 155 (31%) | 297 (59.4%) | 48 (9.6%) |

| Jeffrey | 0 (0%) | 0 (0%) | 404 (80.8%) | 85 (17%) | 11 (2.2%) | 0 (0%) | 0 (0%) | 412 (82.4%) | 74 (14.8%) | 14 (2.8%) | 0 (0%) | 0 (0%) | 396 (79.2%) | 86 (17.2%) | 18 (3.6%) |

| Schwarz | 0 (0%) | 0 (0%) | 459 (91.8%) | 37 (7.4%) | 4 (0.8%) | 0 (0%) | 0 (0%) | 457 (91.4%) | 39 (7.8%) | 4 (0.8%) | 0 (0%) | 0 (0%) | 454 (90.8%) | 36 (7.2%) | 10 (2%) |

| ZS | 0 (0%) | 0 (0%) | 479 (95.8%) | 18 (3.6%) | 3 (0.6%) | 0 (0%) | 0 (0%) | 474 (94.8%) | 24 (4.8%) | 2 (0.4%) | 0 (0%) | 0 (0%) | 474 (94.8%) | 17 (3.4%) | 9 (1.8%) |

| EIA | 0 (0%) | 0 (0%) | 457 (91.4%) | 39 (7.8%) | 4 (0.8%) | 0 (0%) | 0 (0%) | 457 (91.4%) | 39 (7.8%) | 4 (0.8%) | 0 (0%) | 0 (0%) | 454 (90.8%) | 36 (7.2%) | 10 (2%) |

| | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative |

| IP | 378 (75.6%) | 92 (18.4%) | 22 (4.4%) | 5 (1%) | 3 (0.6%) | 395 (79%) | 88 (17.6%) | 14 (2.8%) | 1 (0.2%) | 2 (0.4%) | 421 (84.2%) | 69 (13.8%) | 10 (2%) | 0 (0%) | 0 (0%) |

| Robust | 327 (65.4%) | 132 (26.4%) | 31 (6.2%) | 5 (1%) | 5 (1%) | 347 (69.4%) | 129 (25.8%) | 19 (3.8%) | 3 (0.6%) | 2 (0.4%) | 375 (75%) | 111 (22.2%) | 14 (2.8%) | 0 (0%) | 0 (0%) |

| TESS | 259 (51.8%) | 187 (37.4%) | 39 (7.8%) | 9 (1.8%) | 6 (1.2%) | 271 (54.2%) | 199 (39.8%) | 23 (4.6%) | 4 (0.8%) | 3 (0.6%) | 284 (56.8%) | 191 (38.2%) | 24 (4.8%) | 1 (0.2%) | 0 (0%) |

| Conjugate | 0 (0%) | 0 (0%) | 125 (25%) | 259 (51.8%) | 116 (23.2%) | 0 (0%) | 0 (0%) | 109 (21.8%) | 289 (57.8%) | 102 (20.4%) | 0 (0%) | 0 (0%) | 139 (27.8%) | 283 (56.6%) | 78 (15.6%) |

| Jeffrey | 0 (0%) | 0 (0%) | 321 (64.2%) | 102 (20.4%) | 77 (15.4%) | 0 (0%) | 0 (0%) | 342 (68.4%) | 100 (20%) | 58 (11.6%) | 0 (0%) | 0 (0%) | 366 (73.2%) | 90 (18%) | 44 (8.8%) |

| Schwarz | 0 (0%) | 0 (0%) | 387 (77.4%) | 53 (10.6%) | 60 (12%) | 0 (0%) | 0 (0%) | 403 (80.6%) | 60 (12%) | 37 (7.4%) | 0 (0%) | 0 (0%) | 424 (84.8%) | 47 (9.4%) | 29 (5.8%) |

| ZS | 0 (0%) | 0 (0%) | 403 (80.6%) | 46 (9.2%) | 51 (10.2%) | 0 (0%) | 0 (0%) | 426 (85.2%) | 44 (8.8%) | 30 (6%) | 0 (0%) | 0 (0%) | 443 (88.6%) | 32 (6.4%) | 25 (5%) |

| EIA | 0 (0%) | 0 (0%) | 387 (77.4%) | 53 (10.6%) | 60 (12%) | 0 (0%) | 0 (0%) | 401 (80.2%) | 63 (12.6%) | 36 (7.2%) | 0 (0%) | 0 (0%) | 423 (84.6%) | 49 (9.8%) | 28 (5.6%) |

| | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative |

| IP | 264 (52.8%) | 125 (25%) | 60 (12%) | 12 (2.4%) | 39 (7.8%) | 263 (52.6%) | 144 (28.8%) | 64 (12.8%) | 11 (2.2%) | 18 (3.6%) | 324 (64.8%) | 114 (22.8%) | 48 (9.6%) | 7 (1.4%) | 7 (1.4%) |

| Robust | 225 (45%) | 150 (30%) | 65 (13%) | 18 (3.6%) | 42 (8.4%) | 231 (46.2%) | 166 (33.2%) | 72 (14.4%) | 9 (1.8%) | 22 (4.4%) | 270 (54%) | 157 (31.4%) | 55 (11%) | 8 (1.6%) | 10 (2%) |

| TESS | 162 (32.4%) | 190 (38%) | 79 (15.8%) | 22 (4.4%) | 47 (9.4%) | 183 (36.6%) | 196 (39.2%) | 81 (16.2%) | 16 (3.2%) | 24 (4.8%) | 183 (36.6%) | 229 (45.8%) | 64 (12.8%) | 10 (2%) | 14 (2.8%) |

| Conjugate | 0 (0%) | 0 (0%) | 73 (14.6%) | 194 (38.8%) | 233 (46.6%) | 0 (0%) | 0 (0%) | 73 (14.6%) | 193 (38.6%) | 234 (46.8%) | 0 (0%) | 0 (0%) | 82 (16.4%) | 245 (49%) | 173 (34.6%) |

| Jeffrey | 0 (0%) | 0 (0%) | 224 (44.8%) | 87 (17.4%) | 189 (37.8%) | 0 (0%) | 0 (0%) | 229 (45.8%) | 102 (20.4%) | 169 (33.8%) | 0 (0%) | 0 (0%) | 261 (52.2%) | 110 (22%) | 129 (25.8%) |

| Schwarz | 0 (0%) | 0 (0%) | 268 (53.6%) | 77 (15.4%) | 155 (31%) | 0 (0%) | 0 (0%) | 269 (53.8%) | 98 (19.6%) | 133 (26.6%) | 0 (0%) | 0 (0%) | 330 (66%) | 75 (15%) | 95 (19%) |

| ZS | 0 (0%) | 0 (0%) | 287 (57.4%) | 72 (14.4%) | 141 (28.2%) | 0 (0%) | 0 (0%) | 298 (59.6%) | 82 (16.4%) | 120 (24%) | 0 (0%) | 0 (0%) | 349 (69.8%) | 64 (12.8%) | 87 (17.4%) |

| EIA | 0 (0%) | 0 (0%) | 268 (53.6%) | 78 (15.6%) | 154 (30.8%) | 0 (0%) | 0 (0%) | 268 (53.6%) | 100 (20%) | 132 (26.4%) | 0 (0%) | 0 (0%) | 330 (66%) | 77 (15.4%) | 93 (18.6%) |

Table A4.

Frequency distribution for the balanced framework ( of twice the natural logarithm of the Bayes factor for gamma random variables, classified on the scale proposed by [13]. Intrinsic priors (IP), Berger robust prior (Robust), BIC based on the effective sample size (TESS), conjugate, Jeffreys’s, Schwarz, Zellner and Siow (ZS), and the expected arithmetic intrinsic prior (EIA) of [24].

| | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| IP | 449 (89.8%) | 49 (9.8%) | 2 (0.4%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| Robust | 417 (83.4%) | 78 (15.6%) | 5 (1%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| TESS | 348 (69.6%) | 139 (27.8%) | 13 (2.6%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| Conjugate | 0 (0%) | 0 (0%) | 183 (36.6%) | 268 (53.6%) | 49 (9.8%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| Jeffrey | 0 (0%) | 0 (0%) | 412 (82.4%) | 62 (12.4%) | 26 (5.2%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| Schwarz | 0 (0%) | 0 (0%) | 452 (90.4%) | 31 (6.2%) | 17 (3.4%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| ZS | 0 (0%) | 0 (0%) | 465 (93%) | 25 (5%) | 10 (2%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| EIA | 0 (0%) | 0 (0%) | 451 (90.2%) | 32 (6.4%) | 17 (3.4%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative |

| IP | 30 (6%) | 141 (28.2%) | 186 (37.2%) | 63 (12.6%) | 80 (16%) | 0 (0%) | 3 (0.6%) | 33 (6.6%) | 35 (7%) | 429 (85.8%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| Robust | 21 (4.2%) | 119 (23.8%) | 191 (38.2%) | 68 (13.6%) | 101 (20.2%) | 0 (0%) | 0 (0%) | 22 (4.4%) | 39 (7.8%) | 439 (87.8%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| TESS | 8 (1.6%) | 109 (21.8%) | 186 (37.2%) | 72 (14.4%) | 125 (25%) | 0 (0%) | 0 (0%) | 16 (3.2%) | 33 (6.6%) | 451 (90.2%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| Conjugate | 0 (0%) | 0 (0%) | 2 (0.4%) | 32 (6.4%) | 466 (93.2%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| Jeffrey | 0 (0%) | 0 (0%) | 19 (3.8%) | 49 (9.8%) | 432 (86.4%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| Schwarz | 0 (0%) | 0 (0%) | 36 (7.2%) | 63 (12.6%) | 401 (80.2%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| ZS | 0 (0%) | 0 (0%) | 50 (10%) | 73 (14.6%) | 377 (75.4%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| EIA | 0 (0%) | 0 (0%) | 35 (7%) | 64 (12.8%) | 401 (80.2%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative | Very Strong | Strong | Positive | Weak | Negative |

| IP | 0 (0%) | 19 (3.8%) | 103 (20.6%) | 86 (17.2%) | 292 (58.4%) | 148 (29.6%) | 218 (43.6%) | 103 (20.6%) | 17 (3.4%) | 14 (2.8%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| Robust | 0 (0%) | 13 (2.6%) | 87 (17.4%) | 73 (14.6%) | 327 (65.4%) | 111 (22.2%) | 229 (45.8%) | 122 (24.4%) | 16 (3.2%) | 22 (4.4%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| TESS | 0 (0%) | 6 (1.2%) | 71 (14.2%) | 66 (13.2%) | 357 (71.4%) | 73 (14.6%) | 239 (47.8%) | 140 (28%) | 21 (4.2%) | 27 (5.4%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| Conjugate | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) | 0 (0%) | 0 (0%) | 28 (5.6%) | 124 (24.8%) | 348 (69.6%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| Jeffrey | 0 (0%) | 0 (0%) | 0 (0%) | 2 (0.4%) | 498 (99.6%) | 0 (0%) | 0 (0%) | 107 (21.4%) | 130 (26%) | 263 (52.6%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| Schwarz | 0 (0%) | 0 (0%) | 0 (0%) | 5 (1%) | 495 (99%) | 0 (0%) | 0 (0%) | 157 (31.4%) | 139 (27.8%) | 204 (40.8%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| ZS | 0 (0%) | 0 (0%) | 0 (0%) | 7 (1.4%) | 493 (98.6%) | 0 (0%) | 0 (0%) | 200 (40%) | 115 (23%) | 185 (37%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |

| EIA | 0 (0%) | 0 (0%) | 0 (0%) | 5 (1%) | 495 (99%) | 0 (0%) | 0 (0%) | 154 (30.8%) | 143 (28.6%) | 203 (40.6%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 500 (100%) |
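
The frequency rows in Tables A2 to A4 could be produced from simulated values of twice the natural logarithm of the Bayes factor with a classifier like the one sketched below. The cut points 2, 6, and 10 are those of the scale in [13]. Mapping "Weak" to values between 0 and 2, mapping "Negative" to values below 0, and assigning boundary values to the lower category are assumptions made for this illustration, as are the function names.

```python
from collections import Counter

CATEGORIES = ("Very Strong", "Strong", "Positive", "Weak", "Negative")


def evidence_category(two_log_bf):
    """Classify 2*log(BF) using the cut points 2, 6, and 10 of [13].

    Assumption: 'Weak' covers [0, 2] and 'Negative' covers values below 0,
    i.e., evidence pointing toward the competing hypothesis.
    """
    if two_log_bf > 10:
        return "Very Strong"
    if two_log_bf > 6:
        return "Strong"
    if two_log_bf > 2:
        return "Positive"
    if two_log_bf >= 0:
        return "Weak"
    return "Negative"


def frequency_row(values):
    """Counts and percentages per category, formatted like the table cells."""
    counts = Counter(evidence_category(v) for v in values)
    n = len(values)
    return {c: f"{counts[c]} ({100 * counts[c] / n:g}%)" for c in CATEGORIES}


if __name__ == "__main__":
    # Five made-up values, one per category.
    print(frequency_row([12.0, 7.0, 3.0, 1.0, -1.0]))
```

Each simulated dataset contributes one value, so the five counts in a block always sum to the number of replications.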

Figure A1.

Evidence in the scale when comparing the population means of two samples that arise from normal distributions with several means and variances with equal sizes and . Intrinsic priors (IP), Berger robust prior (Robust), BIC based on the effective sample size (TESS), conjugate, Jeffreys’s, Schwarz, Zellner and Siow (ZS), and the expected arithmetic intrinsic prior of [24].

Figure A2.

Evidence in the scale when comparing the population means of two samples that arise from Student’s t distributions with several means and variances with equal sizes and . Intrinsic priors (IP), Berger robust prior (Robust), BIC based on the effective sample size (TESS), conjugate, Jeffreys’s, Schwarz, Zellner and Siow (ZS), and the expected arithmetic intrinsic prior of [24].

Figure A3.

Evidence in the scale when comparing the population means of two samples that arise from gamma random samples with several means and variances with equal sizes and . Intrinsic priors (IP), Berger robust prior (Robust), BIC based on the effective sample size (TESS), conjugate, Jeffreys’s, Schwarz, Zellner and Siow (ZS), and the expected arithmetic intrinsic prior of [24].

References

1. Student. The probable error of a mean. Biometrika 1908, 6, 1–25.

2. Greenland, S.; Senn, S.J.; Rothman, K.J.; Carlin, J.B.; Poole, C.; Goodman, S.N.; Altman, D.G. Statistical tests, p values, confidence intervals, and power: A guide to misinterpretations. Eur. J. Epidemiol. 2016, 31, 337–350.

3. Wasserstein, R.L.; Lazar, N.A. The ASA statement on p-values: Context, process, and purpose. Am. Stat. 2016, 70, 129–133.

4. Vidgen, B.; Yasseri, T. p-values: Misunderstood and misused. Front. Physics 2016, 4, 6.

5. Held, L.; Ott, M. On p-values and Bayes factors. Annu. Rev. Stat. Its Appl. 2018, 5, 393–419.

6. Dienes, Z. How Bayes factors change scientific practice. J. Math. Psychol. 2016, 72, 78–89.

7. Marden, J.I. Hypothesis testing: From p values to Bayes factors. J. Am. Stat. Assoc. 2000, 95, 1316–1320.

8. Page, R.; Satake, E. Beyond p Values and Hypothesis Testing: Using the Minimum Bayes Factor to Teach Statistical Inference in Undergraduate Introductory Statistics Courses. J. Educ. Learn. 2017, 6, 254–266.

9. Lavine, M.; Schervish, M.J. Bayes factors: What they are and what they are not. Am. Stat. 1999, 53, 119–122.

10. Berger, J.O.; Mortera, J. Default Bayes factors for nonnested hypothesis testing. J. Am. Stat. Assoc. 1999, 94, 542–554.

11. Berger, J.; Pericchi, L. Bayes factors. In Wiley StatsRef: Statistics Reference Online; John Wiley & Sons: Hoboken, NJ, USA, 2014; pp. 1–14.

12. Jeffreys, H. The Theory of Probability; OUP Oxford: Oxford, UK, 1998.

13. Kass, R.E.; Raftery, A.E. Bayes factors. J. Am. Stat. Assoc. 1995, 90, 773–795.

14. Gönen, M.; Johnson, W.O.; Lu, Y.; Westfall, P.H. The Bayesian two-sample t test. Am. Stat. 2005, 59, 252–257.

15. Berger, J.O.; Pericchi, L.R.; Ghosh, J.; Samanta, T.; De Santis, F.; Berger, J.; Pericchi, L. Objective Bayesian Methods for Model Selection: Introduction and Comparison; Lecture Notes-Monograph Series; Institute of Mathematical Statistics: Beachwood, OH, USA, 2001; pp. 135–207.

16. Berger, J.O.; Pericchi, L.R. The intrinsic Bayes factor for linear models. Bayesian Stat. 1996, 5, 25–44.

17. Berger, J.O.; Pericchi, L.R. The intrinsic Bayes factor for model selection and prediction. J. Am. Stat. Assoc. 1996, 91, 109–122.

18. Berger, J.O. Robust Bayesian analysis: Sensitivity to the prior. J. Stat. Plan. Inference 1990, 25, 303–328.

19. Moreno, E. Bayes Factors for Intrinsic and Fractional Priors in Nested Models. Bayesian Robustness; Lecture Notes-Monograph Series; Institute of Mathematical Statistics: Beachwood, OH, USA, 1997; pp. 257–270.

20. Berger, J.O.; Berger, J. Bayesian Analysis; Springer: Berlin/Heidelberg, Germany, 1985.

21. Berger, J.; Bayarri, M.; Pericchi, L. The effective sample size. Econom. Rev. 2014, 33, 197–217.

22. Schwarz, G. Estimating the dimension of a model. Ann. Stat. 1978, 6, 461–464.

23. Zellner, A.; Siow, A. Posterior odds ratios for selected regression hypotheses. Trab. Estad. Investig. Oper. 1980, 31, 585–603.

24. Kim, D.H.; Kang, S.G.; Lee, W.D. Intrinsic priors for testing two normal means with intrinsic bayes factors. Commun. Stat. Methods 2006, 35, 63–81.

25. Cushny, A.R.; Peebles, A.R. The action of optical isomers: II. Hyoscines. J. Physiol. 1905, 32, 501.

26. Senn, S.; Richardson, W. The first t-test. Stat. Med. 1994, 13, 785–803.

27. Senn, S. A century of t-tests. Significance 2008, 5, 37–39.

28. Falk, J.L.; Tang, M.; Forman, S. Schedule-induced chronic hypertension. Psychosom. Med. 1977, 39, 252–263.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).