Abstract

The information bottleneck (IB) framework formalises the essential requirement for efficient information processing systems to achieve an optimal balance between the complexity of their representation and the amount of information extracted about relevant features. However, since the representation complexity affordable by real-world systems may vary in time, the processing cost of updating the representations should also be taken into account. A crucial question is thus the extent to which adaptive systems can leverage the information content of already existing IB-optimal representations for producing new ones, which target the same relevant features but at a different granularity. We investigate the information-theoretic optimal limits of this process by studying and extending, within the IB framework, the notion of successive refinement, which describes the ideal situation where no information needs to be discarded for adapting an IB-optimal representation’s granularity. Thanks in particular to a new geometric characterisation, we analytically derive the successive refinability of some specific IB problems (for binary variables, for jointly Gaussian variables, and for the relevancy variable being a deterministic function of the source variable), and provide a linear-programming-based tool to numerically investigate, in the discrete case, the successive refinement of the IB. We then soften this notion into a quantification of the loss of information optimality induced by several-stage processing through an existing measure of unique information. Simple numerical experiments suggest that this quantity is typically low, though not entirely negligible. These results could have important implications for the structure and efficiency of incremental learning in biological and artificial agents, the comparison of IB-optimal observation channels in statistical decision problems, and the IB theory of deep neural networks.

1. Introduction

1.1. Conceptualisation and Organisation Outline

Consider the problem, for an information-processing system, of extracting relevant information about a target variable Y within a correlated source variable X, under constraints on the cost of the information processing needed to do so—yielding a compressed representation T. This situation can be formalised in an information-theoretic language, where the information-processing cost is measured with the mutual information between the source X and the representation T of it, while the relevancy about Y of the information extracted by T is measured by . The problem thus becomes that of maximising the relevant information under bounded information-processing cost , i.e., we are interested in the information bottleneck (IB) problem [1,2], which, in primal form, can be formulated as

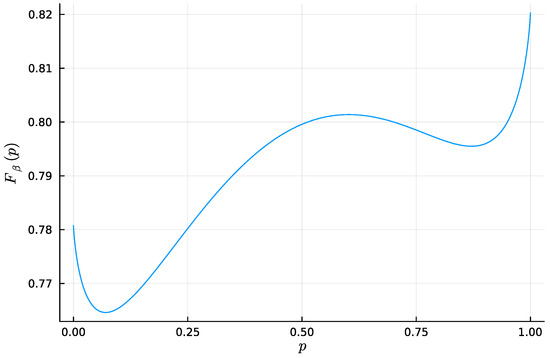

Here, the trade-off parameter controls the bound on the permitted information-processing cost and thus, intuitively, the resulting representation’s granularity. The Markov chain condition ensures that any information that the bottleneck T extracts about the relevancy variable Y can only come from the source X. The solutions to (1) for varying trace the so-called information curve, i.e., the -parameterised curve

where and are defined by a bottleneck T of parameter (see the black curve in the first figure in Section 2 below). This curve indicates the informationally optimal bounds on the feasible trade-offs between relevancy and complexity of the representation T. In this sense, the IB method provides a fundamental understanding of the informationally optimal limits of information-processing systems.

These limits are crucial for both understanding and building adaptive behaviour. For instance, choosing X to be an agent’s past and Y to be its future leads it to extract the most relevant features of its environment [3,4,5,6]. More generally, the IB point of view on modelling embodied agents’ representations has been leveraged for unifying efficient and predictive coding principles in theoretical neuroscience—at the level of single neurons [3,7,8,9] and neuronal populations [9,10,11,12,13]—but also for studying sensor evolution [14,15,16], the emergence of common concepts [17] and of spatial categories [18], the evolution of human language [19,20,21], or for implementing informationally efficient control in artificial agents [22,23,24]. This line of research brings increasing support to the hypothesis that, particularly for evolutionary reasons, biological agents are often poised close to optimality in the IB sense. It also provides a framework for both measuring and improving artificial agents’ performance.

However, one aspect of the IB framework conflicts with a crucial feature of real-world systems: the informationally optimal limits that it describes only consider a given representation T taken in isolation from any other one in the system. This point of view a priori disregards the relationship between representations, which is crucial in real-world information-processing systems. Thus, it is crucial to consider the following question: does the relationship between a set of internal representations impact their individual information optimality? In this paper, we are mostly interested in a specific kind of relationship: when are successively produced in this order, and each new builds on both the previous representation and new information from the fixed source X to extract information about the fixed relevancy Y. This scenario formalises the incorporation of information into already learned representations—as is the case in developmental learning, or, more generally, any kind of learning process that goes through identifiable successive steps.

More precisely, consider an informationally bounded agent that extracts information about a relevant variable Y within an environment X. If the agent is informationally optimal, given an affordable complexity cost , it must maximise the relevant information that it extracts from the environment—resulting in a bottleneck representation , i.e., a solution to (1) with parameter . Then, assume that at, a later stage, the complexity cost that the agent can afford increases to , while the goal is still to extract information about the same relevant feature Y within the same environment X. To keep being informationally optimal, the agent should thus update its representation so it becomes a bottleneck of parameter . Given this setting, the question we ask is: to which extent can the content learned into be leveraged for the production of ? It is indeed not intuitively clear that should keep all the information from . An informal example is the fact that most pedagogical curricula teach knowledge via successive approximations, where, at a more advanced level, the content learned at the beginner level must sometimes be unlearned to successively proceed further, even though it was perfectly reasonable—in our language, informationally optimal—to deliver the first beginner sketch to students that would never progress to learn the expert level.

This question has been formalised, in the rate-distortion literature, with the notion of successive refinement (SR) [25,26,27,28,29], which, in short, refers to the situation where several-stage processing does not incur any loss of information optimality. More precisely, in the context outlined above, there is successive refinement if the processing cost of first producing a coarse bottleneck of parameter and then refining it to a finer bottleneck of parameter is no larger than the processing cost of directly producing a bottleneck of parameter without any intermediary bottleneck (see Section 2.1 and Appendix B.2 for formal definitions). The aim of this work is to push the understanding of successive refinement in the IB framework [30,31,32] further, as well as to expand the analysis to a quantification of the lack of SR, in cases where the latter does not hold exactly. We start by leveraging general results in existing IB literature [33,34] to prove that successive refinement always holds for jointly Gaussian , and when Y is a deterministic function of X. However, it is seems crucial, for further progress on more general scenarios, to design specifically tailored mathematical and numerical tools. In this regard, we provide two main contributions.

First, we present a simple geometric characterisation of SR, in terms of convex hulls of the decoder symbol-wise conditional probabilities , for t varying in the bottleneck alphabet . This characterisation is proven in the discrete case under an additional but mild assumption of injectivity of the decoder . This new point of view fits well with an ongoing convexity approach to the IB problem [35,36,37,38,39] and might thus help develop a new geometric perspective on the successive refinement of the IB. As an example, we use this geometric characterisation to prove that SR always holds for binary source X and binary relevancy Y. Moreover, this characterisation makes it straightforward to numerically assess, with a linear program checking convex hull inclusions, whether or not two discrete bottlenecks and achieve successive refinement. As we demonstrate with minimal numerical examples, this can help in investigating the SR structure of any given IB problem, i.e., how successive refinement depends on the particular combination of trade-off parameters and .

Second, we soften [18] the traditional notion of successive refinement and study the extent to which several-stage processing incurs a loss of information optimality. More precisely, we propose to measure soft successive refinement with the unique information [40] (UI) that the coarser bottleneck holds about the source X, as compared to the finer one . Explicitly, this UI is defined as the minimal value of over all distributions whose marginals and coincide with the corresponding bottleneck distributions (see Section 3.1 for details). As a first exploration of soft SR’s qualitative features, we investigate the landscapes of unique information over trade-off parameters, for again some simple example distributions . These landscapes seem to unveil a rich structure, which was largely hidden by the traditional notion of SR, that only distinguished between SR being present or absent. Among the general features suggested by these experiments, the most significant are that soft SR seems strongly influenced by the trajectories of the decoders over ; the UI often goes through sharp variations at the bifurcations [41,42,43,44] undergone by the bottlenecks (in a fashion compatible with the presence of discontinuities of either the UI itself, or its differential, with regard to trade-off parameters); and the loss of information optimality seems always small—more precisely, the global bound on the UI was observed to be typically one or two orders of magnitude lower than the system’s globally processed information (see Section 3.2 for formal statements). These three conclusions are phenomenological and limited to our minimal examples, but they shed light on the kind of structure that can be investigated by further research. They also suggest the relevance that developing this theoretical framework might have for the scientific question that motivates it. In particular, the link with IB bifurcations and the overall small loss of information optimality would, if generalisable, have interesting consequences for the structure and efficiency of incremental learning.

As a side contribution, we draw along the paper formal equivalences between our framework and other notions proposed in the literature, thus making the formal framework also relevant to decision problems [40,45] and to the information-theoretic approach to deep learning [46]. This flexibility of interpretation stems from the fact that even though our formal framework crucially depends on the order of the bottleneck representations’ trade-off parameters, it does not depend on the order in which these representations are produced. Thus, a sequence of bottlenecks can be equally well interpreted as produced from coarsest to finest—as is the case for the information incorporation interpretation outlined above—or from finest to coarsest—as is the case in feed-forward processing. This conceptual unity sheds light on the common formal structure shared by these diverse phenomena.

In the next Section 1.2, we review related work. After having established notations and recalled some general notions in Section 1.3, we formally introduce the notion of the successive refinement of the IB in Section 2.1, where we also prove successive refinability in the case of Gaussian vectors and deterministic channel . We then present the convex hull characterisation in Section 2.2, before using it to prove successive refinement for the case of binary source and relevancy variables. The following Section 2.3 leverages the convex hull characterisation to gather some first insights from minimal experiments. These experiments suggest an intuition for defining soft successive refinement, which we formalise in Section 3.1 through a measure of unique information [40], where we provide theoretical motivations for our choice. This new measure is explored in Section 3.2 with additional numerical experiments that highlight the general features described above. The alternative interpretations of both exact and soft SR, in terms of decision problems and feed-forward deep neural networks, are developed in Section 4.1 and Section 4.2, respectively. We then describe the limitations and potential future work in Section 5, and conclude in Section 6.

1.2. Related Work

The notion of successive refinement has been long studied in the rate-distortion literature [25,26,27,28,29]. However, classic rate-distortion theory [47] usually considers distortion functions defined on the random variables’ alphabets, whereas the IB framework can be regarded as a rate-distortion problem only if one allows the distortion to be defined on the space of probability distributions [48]. Successive refinement thus needed to be adapted to the IB framework, which was achieved starting from various perspectives.

In [30,31], successive refinement is formulated within the IB framework. Then, Ref. [32] goes further by considering the informationally optimal limits of several-stage processing in general, without comparing it to single-stage processing. In both these works, the problem is initially defined in asymptotic coding terms, and only then given a single-letter characterisation. On the contrary, we will directly define successive refinement from a single-letter perspective. It turns out that our single-letter definition and the operational multi-letter definition from [30,31] are equivalent. The two latter works—as well as [32]—thus provide our single-letter definition with an operational interpretation that also formalises the intuition of an informationally optimal incorporation of information (see Proposition 1 and Appendix B.2).

Another notion named “successive refinement” as well can be found in [46]. This work, instead of modelling information incorporation, rather considers the successive processing of data along a feed-forward pipeline—which encompasses the example of deep neural networks. Fortunately, the “successive refinement” defined in [46] happens to encompass the notion we develop here; more precisely, in [46], the relevancy variable is allowed to vary across processing stages, but if we choose it to be always the same, then “successive refinement” as defined in [46] and “successive refinement” as defined here are formally equivalent (see Section 4.2). In other words, the situation considered in this paper is a particular case of [46], so our results, methods, and phenomenological insights are directly relevant to [46]. For instance, our proof of SR for binary X and Y (see Proposition 5) is a generalisation of Lemma 1 in [46], which proves SR when X is a Bernoulli variable of parameter and is a binary symmetric channel.

More generally speaking, the link between successive refinement and the IB theory of deep learning [49,50,51,52,53,54,55,56] has been noted since the inception of the latter research agenda [49], and, besides in [46], it was also further developed in [57]. Section 4.2 makes clear in which sense our results are relevant to this line of research. In particular, our minimal experiments suggest (if they are scalable to the much richer deep learning setting) that trained deep neural networks should lie close to IB bifurcations: i.e., if X is the network’s input, Y the feature to be learnt and the network’s successive layers, the points should lie close to points of the information curve corresponding to IB bifurcations. This feature was already suggested in [49,50], but for reasons not explicitly related to successive refinement. Note that while the phenomenon of IB bifurcations has been studied from a variety of perspectives (see, e.g., [41,42,43,44]), here, we adopt that of [43], which frames IB bifurcations as parameter values where the minimal number of symbols required to represent a bottleneck increases.

In [58], successive refinability is proved for discrete source X and relevancy . Our Proposition 3 generalises this result to either discrete or continuous source X, with relevancy Y being an arbitrary function of X, with a similar argument as that in [58].

In [33], links between the IB framework and renormalisation group theory are exhibited. Even though the questions addressed in the latter work are thus distinct from those addressed here, the Gaussian IB’s semigroup structure defined and proven in [33] implies the successive refinability of Gaussian vectors (see Proposition 2, and see Appendix 2 for more details on the semigroup structure). This generalises Lemma 3 in [46], which proves SR when X and Y are jointly Gaussian, but each one-dimensional (see Section 4.2 for the relevance of [46] to our framework).

The geometric approach in which we propose to study the successive refinement of the IB is closely related to the convexity approach to the IB [35,36,37,38,39], which frames the IB problem as that of finding the lower convex hull of a well-chosen function. This formulation happens to fit neatly with our convex hull characterisation of successive refinement; we use it to apply the characterisation to proving successive refinability in the case of a binary source and relevancy. Moreover, it is worth noting that our convex hull characterisation makes successive refinement tightly related to the notion of input-degradedness [59], through which additional operational interpretations can be given to successive refinement, particularly in terms of randomised games.

The loss of information optimality induced by several-stage processing has already been studied in [60] (see next paragraph), but a quantification of it based on soft Markovianity was, to the best of our knowledge, only considered in [18]. Here, we take inspiration in the latter work to quantify soft successive refinement, but we explicitly address the problem that joint distributions over distinct bottlenecks are not uniquely defined. This leads us to use the unique information defined in [40] within the context of partial information decomposition [61,62,63,64] as our measure of soft SR. This unique information has tight links with the Blackwell order [45,65], which allows us in Section 4.1 to provide a second alternative interpretation of (exact and soft) successive refinement in terms of decision problems.

Ref. [60] proves the near-successive refinability of rate-distortion problems when the distortion measure is the squared error. However, the latter work’s approach is different from ours in two respects. First, the distortion measures are different: in particular, as mentioned above, the IB distortion is defined over the space of probability distributions on symbols, unlike the squared error, which is defined on the space of symbols itself. Second, Ref. [60] quantifies the lack of SR as the respective differences between sequences of optimal rates (for given distortion sequences) of a several-stage processing system and the corresponding optimal rates (for the same distortions) of a single-stage processing system. Here, we quantify the lack of SR with a single quantity: the unique information defined by bottlenecks with different granularities. We are, at this stage, not aware of a link between this value of unique information and differences in one-stage and several-stage optimal rates.

1.3. Technical Preliminaries

In this section, we fix the notations and conventions that we will use along the paper and recall some general notions that we will need.

1.3.1. Notations and Conventions

The random variables are denoted by capital letters, e.g., X, their alphabets by calligraphic ones, e.g., , and their symbols by lower-case letters, e.g., x. Sometimes, we will mix upper- and lower-case notations to denote a family where some symbols vary, while others are fixed, e.g., , or . Throughout the whole paper, X is the fixed source and Y the fixed relevancy of the IB problem. The variable T defined by the solution to the primal IB problem (1) is called a primal bottleneck. We use the same symbol T for Lagrangian bottlenecks, i.e., variables defined by solutions to the Lagrangian bottleneck problem (see Equation (3) below). By “bottleneck” without further specification, we refer to either a primal or Lagrangian bottleneck. The fixed source-relevancy distribution is denoted , and any distribution involving at least one bottleneck is denoted with the letter q, e.g., . When it is necessary to make the trade-off parameter explicit, we index the corresponding objects by , e.g., or . Unless explicitly stated otherwise, the source X, relevancy Y, and any considered bottleneck T are defined as either all discrete or all continuous. Probability simplices, and sometimes some of their subsets are written using the generic symbol ; for instance, the source simplex is denoted by .

Without loss of generality, we always restrict X, Y, and the bottleneck T to their respective supports so that, in particular, all the conditional distributions are unambiguously well-defined, both in the discrete and the continuous case.

1.3.2. General Facts and Notions

The following properties of the IB framework will be useful [35,37]:

- A bottleneck must saturate the information constraint, i.e., solutions T to (1) must satisfy . In other words, the primal trade-off parameter is the complexity cost of the corresponding bottleneck.

- The function is constant for . We will thus always assume, without loss of generality, that .

- In the discrete case, choosing a bottleneck cardinality is enough to obtain optimal solutions. Thus, we always assume, without loss of generality, that , where might occur if needed to make T full support.

To compute bottleneck solutions, instead of directly solving the primal problem (1), following common practice, we will solve its Lagrangian relaxation [66]:

where the complexity-relevancy trade-off is now parameterised by , which corresponds to the inverse of the information curve’s slope [41]. As the information curve is known to be concave, the Lagrangian parameter is an increasing function of the primal parameter . Moreover, we can, without loss of generality, assume that [43]. (Note that when the information curve is not strictly concave, the Lagrangian formulation does not allow one to obtain all the solutions to the primal problem [39,67]. However, in our simple numerical experiments, we always obtained strictly concave information curves.)

We will also need the following concepts [43]:

Definition 1.

Let T be a (primal or Lagrangian) discrete bottleneck. The effective cardinality is the number of distinct pointwise conditional probabilities for varying t.

Definition 2.

A discrete (primal or Lagrangian) bottleneck T is a canonical bottleneck, or is in canonical form, if all the pointwise conditional probabilities are distinct, i.e., equivalently, if , where is the effective cardinality of T.

Our definition of effective cardinality, even though slightly different from the original one in [43], is equivalent to the latter for Lagrangian bottlenecks. And, importantly, every (primal or Lagrangian) bottleneck can be reduced to its canonical form by merging the symbols with identical (see Appendix A.1 for more details). We will be particularly interested in the change of effective cardinality, which has been identified in [43] as characterising the bottleneck phase-transitions, or bifurcations.

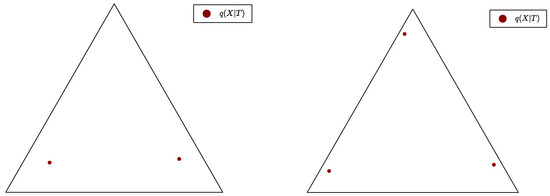

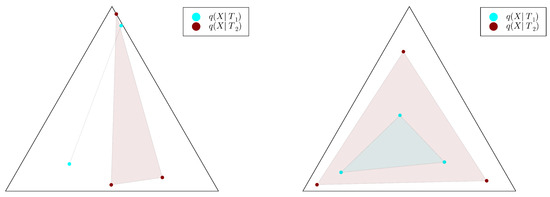

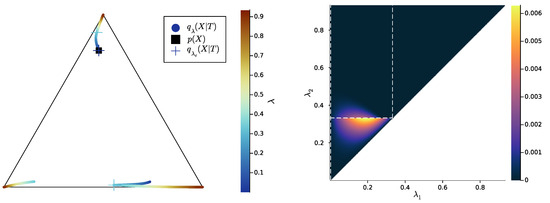

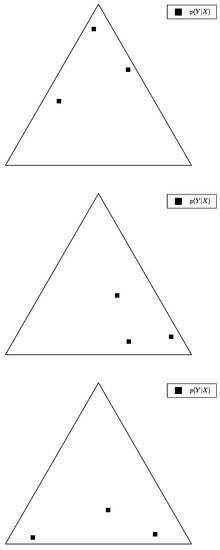

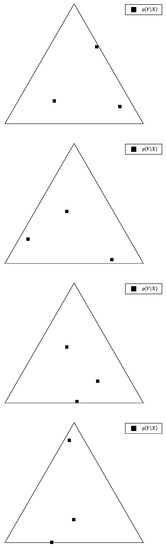

In Figure 1, we present examples of bottleneck conditional distributions , visualised as the family of points on the source simplex , where, here, , and the bottleneck is computed with in both examples. However, in Figure 1 (left), there are only two distinct , so there must be two equal pointwise probabilities and ; thus, and the canonical form of T is obtained by merging and . On the contrary, in Figure 1 (right), there are three distinct , so, here, and the bottleneck is already in canonical form.

Figure 1.

Examples of distributions , visualised as families of points on the source simplex , where, here, . Each of the triangle’s vertices represents the Dirac probability of some . The bottleneck’s effective cardinality is on the left and on the right.

Eventually, the notions of consistency and extension will be crucial to us.

Definition 3.

Let be a Cartesian product of (continuous or discrete) alphabets. For a subset of coordinates, we write

For each , we consider a subset of coordinates and a probability distribution over . The distributions are said to be consistent if, for every , the respective marginals of and on their common coordinates are equal.

For instance, if and are two bottlenecks, they define consistent distributions and because, by definition, their respective marginals on their common coordinates are .

Definition 4.

Let be a Cartesian product of (continuous or discrete) alphabets, and be consistent probability distributions over distinct but potentially overlapping coordinates of . A distribution q over the whole is called an extension of the family of distributions if it is consistent with each .

Consider bottlenecks of same source X and relevancy Y for resp. parameters . They define a consistent family of distributions . One of the central mathematical objects of this work is the set of their extensions into joint distributions :

Notation 1.

For given bottlenecks of respective parameters , we denote by the set of extensions of the family of distributions .

In general, for a fixed family of bottlenecks, there is a multitude of possible ways to extend them into a joint distribution; indeed, traces a polytope on the simplex of joint distributions (see Appendix A in [40]). This feature is the formal version of our previous statement that the IB framework does not entirely specify the relationship between representations : it only constrains it through the set . Questions about possible relationships between IB representations are thus questions about properties of the set .

2. Exact Successive Refinement of the IB

2.1. Formal Framework and First Results

Here, we formally describe, within the IB framework, the rate-distortion-theoretic notion of successive refinement (SR) [25,26,27,29]. We propose a purely single-letter definition (i.e., we only consider single source, relevancy, and bottleneck variables), which makes the presentation simpler but still conveys the intuition of information incorporation. After having presented the notion of SR in the IB framework, we describe its Markov chain characterisation (see Proposition 1), which mirrors the characterisation of SR for classic rate-distortion problems [26], and makes our formulation equivalent to previous multi-letter operational definitions, which also formalise the intuition of information incorporation [30,31,32]. We then leverage this characterisation to prove SR in the case of Gaussian vectors and deterministic channel .

Intuitively, there is successive refinement when a finer bottleneck does not discard any of the information extracted by a coarser bottleneck . This can be imposed by requiring that for some variable , which encodes the “supplement” of information that “refines” into . In the general case:

Definition 5.

Let , and a discrete or continuous be given. There is successive refinement (SR) for parameters if there exist variables such that

- is a bottleneck with parameter ;

- For every , the variable is a bottleneck with parameter .

Note that even though it does not appear explicitly in this definition, the relevancy variable Y is indeed crucial to it, as it defines what a bottleneck is (see Equation (1)). If the conditions of Definition 5 hold, we will also say that the IB problem defined by is -refinable. If bottlenecks satisfy the definition’s conditions, we will say that they achieve successive refinement, or, simply, that there is successive refinement between these bottlenecks. If there is successive refinement for all combinations of trade-off parameters, we will say that the corresponding IB problem is successively refinable. Eventually, when it will be needed in later sections to contrast this notion with that of soft successive refinement, we will refer to it as exact successive refinement.

For instance, let and . We consider , where ⊕ denotes the modulo-2 addition, and X and Z are Bernouilli variables with parameters and a, respectively, for an arbitrary . In this case, it is proven in Lemma 1 of [46] that, for well-chosen binary variables and , we have that X, , and are mutually independent, and the variables and are bottlenecks of resp. parameters and . Moreover, using the independence of with and the assumed Markov chain , a straightforward computation shows that to get a bottleneck of parameter , the variable can be replaced by . Thus, here, the IB problem is -refinable, where successive refinement is achieved by and .

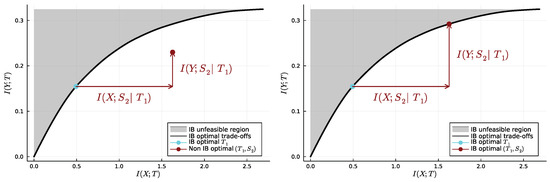

It is helpful to visualise SR on the information plane, i.e., that on which lies the information curve. Indeed, successive refinement can be understood in terms of specific translations on the information plane: those resulting from concatenating an already existing variable with a new variable —let us call them “accumulative translations” because they result from a processing that does not discard any of the information already collected. Let us focus on the case and first note that, whether or not is a bottleneck, we have

and, similarly,

In other words, the measure of both the complexity cost and relevance for can be decomposed into the same measures first for and then for the “supplement” of information , conditionally on the “already collected” information . In Figure 2 (left and right), we first fix a coarse bottleneck , understood here as a point on the information curve. Once is known, we supplement it with a new variable , which incurs both an additional complexity cost and an additional relevant information gain . The question of successive refinement is that of whether the additional complexity cost can be leveraged enough for the resulting relevant information gain to take “up to the information curve”, i.e., to be such that is on the information curve. This is the case in Figure 2, right, and not the case in Figure 2, left. In short, there is successive refinement between two points on the information curve if and only if there exists an “accumulative translation” from the coarser one to the finer one.

Figure 2.

Successive refinement visualised on the information plane. On the left, adding the information from the variable (the supplement variable) is not efficient enough to achieve successive refinement. On the right, it is. See main text for details (the values of and have been chosen arbitrarily to illustrate each case).

Let us now describe a more formal characterisation, where point will mirror the characterisation of SR for classic rate-distortion problems [26].

Proposition 1.

Let . The following are equivalent:

- (i)

- There is successive refinement for parameters ;

- (ii)

- There exist bottlenecks , of common source X and relevancy Y, with respective parameters , and an extension of the , such that, under q, we have the Markov chain

- (iii)

- There exist bottlenecks , of common source X and relevancy Y, with respective parameters , and an extension of the , such that, under q, we have the Markov chain

Proof.

See Appendix B.1. It is relatively straightforward because we started directly from a single-letter definition. □

Proposition 1 was already known to be a characterisation of SR of the IB [30,31,32]. However, as the latter references start from an operational problem in terms of asymptotic rates and distortions for multi-letter systems, here, Proposition 1 shows that our single-letter Definition 5 is equivalent to the operational definitions in [30,31,32]. See Appendix B.2 for more details.

Remark 1.

Crucially, the order of the indexing in (4) and (5) depends only on the order of the trade-off parameters , and not on the order in which the bottlenecks are produced, which is just the interpretation we started from. In particular, Proposition 1 makes equally legitimate the interpretation of bottlenecks produced from the finest one to the coarsest one, each new bottleneck thus implementing a further coarsening of the source X. This alternative interpretation renders successive refinement relevant to feed-forward processing, including in particular the Blackwell order (see Section 4.1) and deep neural networks (see Section 4.2). For ease of presentation, though, we will stick to the information incorporation interpretation along most of the paper.

Moreover, from Proposition 1, we can leverage existing IB literature to prove the successive refinability of two specific settings. (For an explicit definition of what we mean, in Proposition 2, by successive refinement in the case of the Lagrangian IB problem, see Appendix B.3.)

Proposition 2.

If are jointly Gaussian vectors, then the Lagrangian IB problem defined by is -refinable for all .

This result is a direct consequence of a property named a semigroup structure, and is proven for the Gaussian IB framework in [33], which relates the latter framework with renormalisation group theory. The semigroup structure denotes, in short, the situation where iterating the operation of coarse graining a variable by computing a bottleneck—where, at each iteration, the previous bottleneck becomes the source of the next IB problem—still outputs a bottleneck for the original problem. This semigroup structure is a stronger property than successive refinement and, as it is satisfied in the Gaussian case, this implies the successive refinability of Gaussian vectors (see Appendix B.3 for more details). Beyond Proposition 2, this relationship between successive refinement and the semigroup structure hints at potentially interesting links between the composition of coarse-graining operators and successive refinement. In this respect, note that our numerical results below (see Section 2.3 and Section 3.2) suggest that, for non-Gaussian vectors, successive refinement does not always hold and thus, a fortiori, that the semigroup structure might not always be satisfied in the IB framework—or at least not perfectly.

Eventually, in the case of deterministic channel , an explicit solution to the IB problem (1) is known [34]: with probability , and with probability , for e a dummy symbol, and some well-chosen . This specific solution allows one to address successive refinement for the deterministic case:

Proposition 3.

Let X be a discrete or continuous variable, and Y be a deterministic function of X. Then, the IB problem defined by is successively refinable for all trade-off parameters .

Proof.

See Appendix B.4. A proof was already proposed, from an asymptotic coding perspective, for discrete X and , in [58]. We use a similar argument here. □

Note, though, that the solution used here to prove successive refinement is, as noted in [34], not very interesting: it is nothing more than an increasingly noisy version of Y. It is not clear whether or not there exists more interesting bottleneck solutions in the deterministic case, and if so, whether these other solutions are successively refinable. Proposition 3 will in any case be useful for our own purposes: we will use it to set aside the deterministic case in the proof of SR for binary X and Y (Proposition 5 below).

Until now, we used existing results from the IB literature that, even though not originally aimed at it, happen to yield interesting consequences for the problem of the successive refinement of the IB. However, it seems crucial, for further progress on the latter topic, to design specifically tailored mathematical and numerical tools. This is the purpose of the following sections of this paper; in particular, in the next section, we present a simple geometric characterisation of the IB’s successive refinability.

2.2. The Convex Hull Characterisation and the Case

In this section, we present our convex hull characterisation of successive refinement. We then show its relevance both to numerical computations—thanks to a linear program for checking the condition—and to proving new mathematical results—which we exemplify by proving, thanks to this new characterisation, the successive refinability of binary variables. Here, as in our subsequent numerical experiments in Section 2.3, we will focus on discrete variables and processing stages, even though our results are thought of as a first step towards a generalisation to continuous variables and an arbitrary number of processing stages.

The convexity approach that we propose hinges upon changing the perspective on the IB problem (1) from an optimisation over the encoder channels to an optimisation over the decoder channels ; indeed, (1) can be equivalently presented as the “reversed” optimisation problem

Formulations (1) and (6) yield the same solutions because, through the Markov chain , the joint distribution is equivalently determined by specifying some or specifying some pair that satisfies the consistency condition . This condition says that the source distribution must be retrievable as a convex combination of the , where the weights are given by the .

Moreover, this formulation leads to a crucial intuition concerning the relationship between successive refinement and the set , where, for a set , we denote by the convex hull of E, i.e., the set of points obtained as convex combinations of points in E. First, note that, for a bottleneck T, the set is reduced to a single point if and only if T is independent from the source X. Conversely, coincides with the whole source simplex if and only if T captures all the information from the source, i.e., if . Generalising these extreme cases suggests the intuition that describes the information content held by the bottleneck T about the source X. Now, let us recall that successive refinement from a coarse bottleneck to a finer bottleneck means intuitively that can be obtained without discarding any of the information extracted by about the source X; in other words, that the information content of about the source Xis included in that of . Combining this latter intuition with the one about being the information content of a bottleneck T suggests the following characterisation of successive refinement:

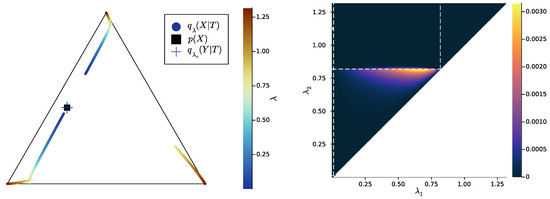

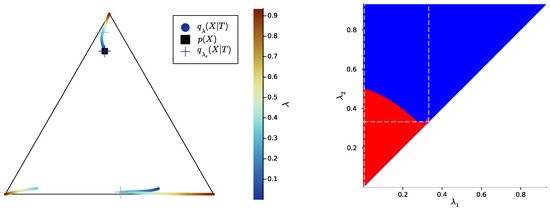

where and are bottlenecks of parameters , respectively. This condition is visualised in Figure 3. The characterisation indeed holds, at least for the discrete case and under a mild assumption of injectivity of the finer bottleneck’s decoder:

Figure 3.

Illustration of the convex hull condition. The black triangle represents the source simplex with, here, , and the pointwise bottleneck decoder probabilities are represented on it (in cyan for the coarser bottleneck and in red for the finer one ). The convex hull of the respective families of points are shaded with the corresponding color. On the left, the condition is not satisfied; on the right, it is.

Proposition 4.

Let , and assume that is discrete.

If there is successive refinement for parameters , then there exist bottlenecks of parameters , respectively, such that the convex hull condition (7) is satisfied.

Conversely, if there exist bottlenecks of parameters , respectively, such that the convex hull condition (7) holds and such that the decoder , seen as a probability transition matrix, is injective, then there is successive refinement for parameters . Moreover in this latter case, if , are bottlenecks that achieve successive refinement, the extension of and such that holds is uniquely defined.

Proof.

See Appendix B.5. The idea consists in translating the Markov chain characterisation into the convex hull condition (7). The direct sense is straightforward. For the converse direction, observe that, even though as soon as (7) is satisfied it provides a joint distribution that satisfies the Markov chain , it is not clear whether this distribution is consistent with . The potential problem stems from the fact that must be such that the channel maps the marginal to the marginal . The injectivity assumption, however, provides a sufficient condition for it to be the case. This assumption happens to also imply the uniqueness of the extension, among all those that satisfy the Markov chain . □

Even though the injectivity assumption might seem restrictive, in practice, in our numerical experiments below (see Section 2.3 and Section 3.2), we always found that the decoder channel could be chosen as injective by reducing it to its effective cardinality (see Section 1.3)—a process that leaves the convex hull condition (7) unchanged because it leaves the points unchanged. See also Appendix D for a conjecture that, if true, would simplify our convex hull characterisation in the case of a strictly concave information curve.

Remark 2.

The convex hull condition happens to be equivalent to the input-degradedness pre-order on channels (see Proposition 1 in [59]). Even though we will not develop this point further when considering alternative interpretations of SR (Section 4), it is worth noting that, through input-degradedness, SR can be given additional operational interpretations, particularly in terms of randomised games (see Section IV-C in [59]).

Our new characterisation provides a simple way of checking whether or not two bottlenecks and achieve SR. Recall that the Markov chain characterisation (Proposition 1, point ) shows that SR is a feature of the space of all extensions of individual bottleneck distributions and . While this set might, a priori, be difficult to study directly, our characterisation (7) reduces the problem to a simple geometric property relating only two explicitly given conditional distributions: and . Moreover, note that (7) is equivalent to

and that checking whether a point is in the convex hull of a finite set of other points can be cast as a linear programming problem [68]. As a consequence, one can bound the time complexity of checking condition (7) as , where K is the time complexity bound of a linear program with variables and constraints. As a consequence, using the bound on K proved in [69], the time complexity of checking (7) is no worse that , where corresponds to the complexity of matrix multiplication, is the relative accuracy, and the notation hides polylogarithmic factors (see Appendix B.6 for details).

We deem this convex hull characterisation to be important for theory as well. Indeed, it reduces the question of successive refinement to a question about the structure of the trajectories, on the source probability simplex , of the points for varying . Thus, any theoretical progress on the description of these bottleneck trajectories might lead to theoretical progress on the side of successive refinement. As a first step in this direction, we show that this geometric point of view helps to solve the question of SR in the case of a binary source and relevancy (This result generalises the already known fact that there is always successive refinement when X is a Bernoulli variable of parameter and is a binary symmetric channel (see Lemma 1 in [46] and see Section 4.2 for explanations on why the latter work’s framework encompasses ours). Moreover, a potential generalisation of our result to an arbitrary number of processing stages is left to future work).

Proposition 5.

If , then, for any discrete distribution and any trade-off parameters , the IB problem defined by is -successively refinable.

Proof.

Let us here outline the proof presented in Appendix B.7. The case of deterministic was already dealt with in Proposition 3, so we can assume that is not deterministic. In this case, the IB problem with and has been extensively studied in [35]. In short, the latter approach leverages the fact that a pair is a solution to the IB problem (6) if the convex combination of the points , with weights given by , achieves the lower convex envelope of the function , where is a well-chosen function on the source simplex and is the information curve’s inverse slope. This work, along with considerations from [37], which uses the same convexity approach, yields in particular that the points are the extreme points of a non-empty open segment uniquely defined by , and this latter segments grows as a function of the inverse slope and thus, by concavity, as a function of . This implies that the convex hull condition is always satisfied for . As point also implies that, here, must be injective, Theorem 4 allows us to conclude the successive refinability for processing stages. □

The proof of Proposition 5 exemplifies how our convex hull characterisation interlocks well with the convexity approach to the IB [35,36,37,38,39]. In this sense, our characterisation brings a new theoretical tool to the study of the successive refinement of the IB.

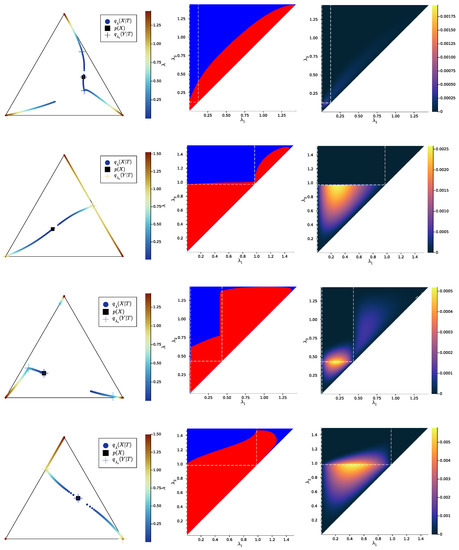

2.3. Numerical Results on Minimal Examples

In this section, we leverage our new convex hull characterisation to investigate successive refinement on minimal numerical examples, i.e., with discrete and low-cardinality distributions . Our experiments suggest that, in general, successive refinement does not always hold exactly. However, they also highlight two other features: first, it seems that successive refinement is often shaped by IB bifurcations [41,42,43,44]. Second, even though successive refinement is often not satisfied exactly, visualisations suggest that it is often “close” to being satisfied. The formalisation of this latter intuition will be the topic of the next section.

We consider the Lagrangian form (3) of the IB problem (see Section 1.3). We compute solutions to it with the Blahut–Arimoto (BA) algorithm [1], combined with reverse deterministic annealing [19,70], starting from (i.e., in practice, ) at the IB solution (we noticed that regular deterministic annealing sometimes yielded sub-optimal solutions because they followed sub-optimal branches at IB bifurcations [1,71], which was not the case for reverse annealing). We always obtained that was a strictly increasing function of the Lagrangian parameter , so it makes sense to index the solutions by rather than ; for instance, in this section and Section 3.2, we will write for our algorithm’s output for a such that .

In all our numerical experiments, after reducing a bottleneck T to its canonical form (see Section 1.3), the decoder channel was injective. Therefore, thanks to Theorem 4, the convex hull condition (7) being satisfied here does imply successive refinement. In the remainder of the paper, we will thus use the convex hull condition as a proxy for numerically assessing successive refinement (see Appendix D for more details on what we mean by “proxy”). This condition can be investigated in two ways. First, for two distinct trade-off parameters , we can compute whether the convex hull condition (7) holds or not with the linear program described in Appendix B.6. Second, for , we can visualise the whole trajectories, for varying , of the points on the source simplex . As we will see, this yields interesting qualitative insights.

As a sanity check for our algorithm, we compute bottleneck solutions for binary X and Y, which we proved in Proposition 5 to be successively refinable for all trade-off parameters. We used the linear program to check the convex hull condition numerically for all pairs and for distributions uniformly sampled on the joint probability simplex . We find that the convex hull condition is indeed always numerically satisfied.

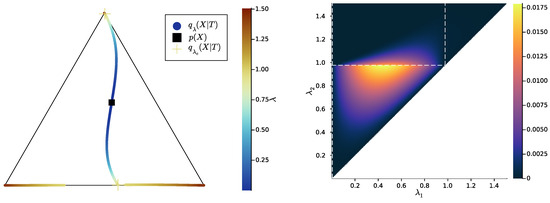

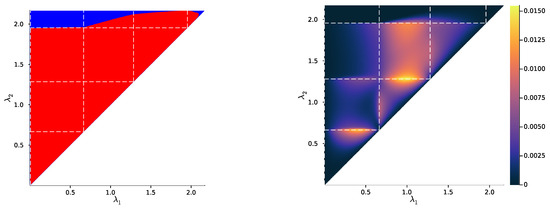

Then, we study the case , once again uniformly sampling example distributions on . Figure 4, Figure 5 and Figure 6 show, for representative examples, visualisations of the trajectories over of the (left)—which we will refer to as the bottleneck trajectories—along with the corresponding computations of the convex hull condition as a function of and (right)—which we will refer to as the SR patterns (The correspodning are plotted in Appendix E, and is shown in Figure 4, Figure 5 and Figure 6 (left). The explicit corresponding to each of these paper’s figures can be found at: https://gitlab.com/uh-adapsys/successive-refinement-ib/(accessed on 12 September 2023).

Figure 4.

Left: bottleneck trajectories for an example distribution such that , i.e., trajectory of , represented as the family of points on the source simplex , as a function of (crosses: value of just before a symbol split at a critical parameter , where the crosses’ color corresponds to the value of ). The conditional distribution is defined by the single point when (dark blue cross on the black square), or by two distinct points between the first and second symbol splits (dark blue to cyan), or by three distinct points after the second symbol split (cyan to red). Note the discontinuity of at each symbol split (without the discontinuity, the trajectory around a symbol split would look like a branching). Right: corresponding SR pattern, i.e., corresponding output for the convex hull condition (blue: satisfied; red: not satisfied; dashed white lines: critical values of either or ). For instance, the critical value corresponds, on the bottleneck trajectories (left), to the symbol split from two to three symbols (cyan crosses). Note that . The respective corresponding to this figure and to Figure 5 and Figure 6 are plotted in Appendix E.

Figure 5.

Same as Figure 4, with a different example distribution such that .

Figure 6.

Same as Figure 4, with a different example distribution such that .

Let us first give a general description of the bottleneck trajectories. For , the all coincide with the source distribution . This should be the case, as, for , the bottleneck T is independent of X. Then, when increases, the trajectories seem piecewise continuous, where each discontinuity corresponds to a symbol split, i.e., a change in effective cardinality (see Section 1.3). We mark with a cross, for each , the located just before such a change in effective cardinality.

In the examples of Figure 4, Figure 5 and Figure 6, as , there are two symbol splits, corresponding to that from one to two and two to three symbols, respectively. Eventually, for large , the last continuous segment of bottleneck trajectories corresponds to effective cardinality , and, for the maximal , each corner of the source simplex is reached by for some . This means that for maximum , there is a deterministic bijective relationship between T and X. The latter is expected: for maximum , bottlenecks are minimal sufficient statistics of X for Y [72]; where for sampled uniformly on the simplex, these minimal sufficient statistics are, with probability 1, just permutations of X.

Definition 6.

In the following, we refer to the piece of trajectory where the bottleneck’s effective cardinality is equal to the integer i as the“segment ”, i.e., it is the segment where corresponds to exactly i distinct points on the source simplex ; for instance, in Figure 4, the segment corresponds to the first piece of trajectory spanning colors from dark blue to cyan.

Notation 2.

We denote by the trade-off parameter’s critical value corresponding to the i-th change in effective cardinality, i.e., the symbol split from i to symbols. Here, we will only need to consider the critical values and , corresponding to the splits from one to two and two to three symbols, respectively.

Let us now come back to the question of successive refinement: for which parameters is the convex hull condition satisfied? The right-hand sides of Figure 4, Figure 5 and Figure 6 provide the answers corresponding to trajectories on the respective left-hand sides—where blue and red mean that the condition is and is not satisfied, respectively. Moreover, we highlight with dashed white vertical and horizontal lines the critical parameter values and , respectively, at which the symbol split occurs (see Appendix B.8 for details on the computation of these symbols splits). Note that we always have , which is expected, as a bottleneck T corresponding to some must necessarily define at least two distinct .

First, in these examples as in most non-reported examples, the convex hull condition (right) breaks as long as , i.e., as long as the finer bottleneck’s effective cardinality is at most . This can also be read from the bottleneck trajectories (left): if the condition was satisfied for all , for instance, then the segment would be a line segment. This is clearly not the case in Figure 4 and Figure 6, and even though visually it virtually seems to be the case in Figure 5, the segment happens to be very slightly curved, which is enough to break the convex hull condition. In other words, for , several-stage processing seems to induce, in these examples, a nonzero loss of information optimality.

Then, for , even though there is no single general pattern, the trajectory’s structure at the bifurcation seems to impact successive refinement. Indeed, at the bifurcation at , the set opens up along a new, third dimension, and keeps widening when increases. This allows it to (gradually in Figure 4 and Figure 6, or virtually straight away in Figure 5) encompass the segment because it “overcomes” the curvature of this piece of trajectory. For instance, in Figure 4, because the segment is strongly curved, the convex hull condition gets satisfied for all only if is significantly larger than . On the contrary, because in Figure 5, the segment is virtually not curved, it is almost as soon as that the convex hull condition is satisfied for all .

In Figure 6, the lack of successive refinement for does not seem to be due to the same phenomenon as the one just described. Generally speaking, we observed a whole variety of SR patterns (see Appendix F for more examples), and our aim here is not to try to interpret all of them. However, despite this diversity, the SR patterns that we studied typically shared a common qualitative feature: the bifurcation structure of the bottleneck trajectories seemingly participates in shaping these SR patterns. Mostly, it seems typically necessary, for SR to hold, that the larger parameter has crossed the bifurcation value , because the non-zero curvature of the segment can only be “overcome” by opening the set along a new dimension, through the symbol split at . This phenomenon will be explored in more details in Section 3.2.

Besides this relationship between SR and the structure of bottleneck bifurcations, this numerical study suggests a generalisation of the notion of successive refinement. Indeed, in Figure 5 for instance, even though the right-hand side asserts that successive refinement does not hold for , the virtually linear piece of trajectory on the left-hand side suggests that this is “almost” the case. In the next section, we formalise this intuition.

3. Soft Successive Refinement of the IB

The minimal experiments from Section 2.3 suggest the intuition that even though successive refinement might not always hold exactly, when broken, it might still be “close” to being satisfied. More generally speaking, let us recall that we are trying here to understand the informationally optimal limits of several-stage information processing. As our numerical experiments suggest that the IB problem is not always successively refinable, it is desirable to quantify the lack of successive refinement—i.e., the lack of informational optimality induced by several-stage processing. These considerations lead to the notion of soft successive refinement [18], which we define and motivate in this section. As we will see, this generalisation of exact SR does not depend on the specific structure of the IB setting; rather, it can also be used as a generalisation of exact SR for any rate-distortion scenario.

3.1. Formalism

Let us first focus on the case : we thus want to quantify the amount of information captured by a coarse bottleneck and then discarded by a finer bottleneck . Let us recall that, from Proposition 1, bottlenecks and achieve successive refinement if there exists an extension of and such that, under q, we have the Markov chain , which is equivalent to . It thus seems natural to quantify soft successive refinement with the conditional mutual information . However, the IB method does not entirely define the relationship between distinct bottlenecks; formally, there is a whole polytope of possible extensions of and (see Section 1.3). Among these possible extensions, it seems natural to search for those that minimise the violation of the SR condition . This leads us to use the unique information [40]

This quantity was already defined in [40] in the context of partial information decomposition [61,62,63,64], and it happens to be relevant to us for several reasons.

First of all, it depends only on the distributions and , which are indeed the only distributions provided by the IB framework. Second, from Proposition 1, there is successive refinement if and only if there are two bottlenecks and such that . Third, it is thoroughly argued in [40] that (8) is a good measure of the information that only , and not , has about X, which is an interpretation that coincides neatly with the intuition that we want to operationalise here. Eventually, Proposition 6 below, which first requires some definitions, provides an information-geometric justification.

Definition 7.

For Δ a probability simplex and , we define

where is the Kullback–Leibler divergence: , if the probability distributions and are defined on the discrete alphabet .

Definition 8.

The successive refinement set is the set of distributions r on such that, under r, the Markov chain holds.

Note that does not require its elements to be extensions of any fixed bottleneck distributions but imposes the Markov chain that characterises SR (see Proposition 1). SR is achieved for bottlenecks if and only if the successive refinement set and the extension set share a common distribution . In general (for ), the following proposition can easily be derived:

Proposition 6.

For fixed distributions , , we have

Proof.

See Appendix C.1. □

In this sense, quantifies “how far” the joint distributions extending the bottlenecks and are from making the successive refinement condition hold true, where the “distance” is understood as a minimised Kullback–Leibler divergence.

Our new measure of soft SR is continuous:

Proposition 7

([73], Property P.7). The unique information is a continuous function of the probabilities and .

Remark 3.

In particular, if has a discontinuity as a function of the parameter or , which define the bottleneck distribution or , respectively, then this can only be a consequence of a discontinuity of the probability as a function of or as a function of , itself, respectively. This consideration will be useful for analysing our numerical experiments in Section 3.2.

Moreover, the formulation (9) of unique information suggests a natural generalisation to an arbitrary number of processing stages:

Definition 9.

Let be bottlenecks with respective parameters , and their respective individual distributions. One can quantify soft successive refinement, or, equivalently, the lack of successive refinement, through the divergence .

While [74] proposes a provably convergent algorithm to compute , to the best of our knowledge, there currently exists no provably convergent algorithm to compute for . Our numerical investigations (see Section 3.2) will stick to the case , but this generalisation makes soft SR in particular, at least conceptually for now, more relevant to deep learning (see Section 4.2).

For the sake of completeness, let us point out that for each , there is a whole set of solutions —or, equivalently, —to the IB problem (1). Thus, the unique information, which is defined as a function of specific bottleneck distributions and , could a priori not be uniquely defined by the corresponding trade-off parameters and . This subtlety is further explained in Appendix D, where we also formulate a conjecture that would prove that, at least in the case of a strictly concave information curve, the trade-off parameters do uniquely define the unique information.

3.2. Numerical Results on Minimal Examples

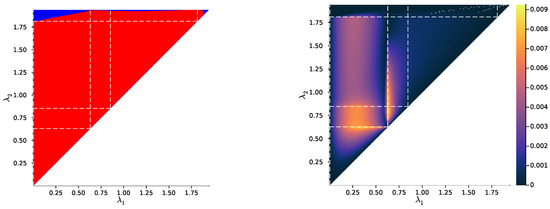

A provably convergent algorithm that computes, in the discrete case, the unique information (8), was provided in [74]. In this section, we use the authors’ implementation of this algorithm (https://github.com/infodeco/computeUI, accessed on 12 September 2023) to qualitatively investigate, on minimal examples, the landscapes of unique information (UI) and their relationship to the bottleneck trajectories on the simplex.

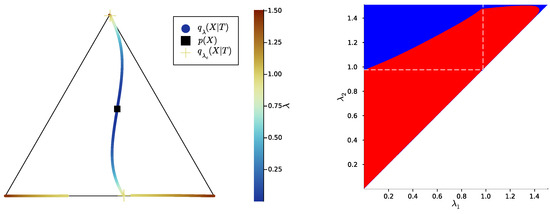

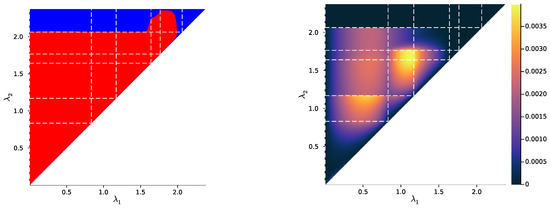

In Figure 7, Figure 8 and Figure 9 (left), we plot again the same bottlenecks trajectories as in Figure 4, Figure 5 and Figure 6 (left), but compare them this time with the unique information , plotted as a function of and (right). We also plot, in Figure 10, Figure 11 and Figure 12, some representative examples of the exact SR patterns (left) and UI landscapes (right) for slightly larger source and relevancy cardinalities, where is, as above, uniformly sampled — the explicit distributions corresponding to Figure 10, Figure 11 and Figure 12 can be found at https://gitlab.com/uh-adapsys/successive-refinement-ib/. (see Appendix F for additional examples of comparison of the UI landscapes with bottleneck trajectories, and with the exact SR patterns.) Once again, we highlight with dashed white vertical and horizontal lines the critical parameter values and , respectively, where, as expected, . We will first describe, for a fixed , the relative variations in unique information as a function of and . Then, we will compare the absolute values of unique information to the information globally processed by the system.

Figure 7.

Left: example trajectory of as a function of (crosses: value of just before a symbol split at a critical parameter ). Right: corresponding unique information, in bits (color), expressed as a function of the pair of trade-off parameters (white dashed lines indicate critical values of either or .). For instance, the critical value (right) corresponds, on the bottleneck trajectories (left), to the symbol split from two to three symbols (cyan crosses). The respective corresponding to this figure and to Figure 8 and Figure 9 are plotted in Appendix E.

Figure 10.

New example of an exact SR pattern and the corresponding UI landscape over trade-off parameters , where, here, and . Left: exact SR pattern, i.e., output for the convex hull condition (blue: satisfied, red: not satisfied). Right: corresponding UI landscape, in bits (color). White dashed lines indicate critical values of either or . Note that the binary notion of exact SR (left) filters out most of the structure unveiled by UI (right), the UI landscape seems highly impacted by IB bifurcations, and the UI is in any case always small, even though not entirely negligible. See main text for more details.

Figure 11.

Same as Figure 10, with a new example distribution , where, here, and . Besides the white orthogonal dashed lines, other white dots correspond to values of for which the algorithm did not converge (see main text for a comment on this lack of convergence).

Figure 12.

Same as Figure 10, with a new example distribution , where, here, and .

For all Figures from Figure 7 to Figure 9, the UI landscape partly mirrors the respective exact SR pattern of Figure 4, Figure 5 and Figure 6 (right). However, within the region where these latter figures answered a binary “no” to the question of exact SR, Figure 7, Figure 8 and Figure 9 reveal a sharply uneven variation in the violation of SR, where, for important ranges of trade-off parameters, the unique information is negligible comparative to others. For instance, even though Figure 5 (right) seems to indicate that SR does not hold for , the corresponding UI in Figure 8 (right) is virtually zero on a large part of this set of parameters, while still peaking for close to . This richer structure of the unique information landscape is further evidenced by Figure 10, Figure 11 and Figure 12.

Moreover, the unique information landscapes seem shaped by the bottleneck trajectories. Most importantly, the influence of IB bifurcations on SR can be seen even more clearly with soft than with exact SR. In particular, in Figure 10, Figure 11 and Figure 12, it seems that along the lines where one of the trade-off parameters crosses a critical value, the UI often goes through discontinuities, or at least sharp variations in either , , or both directions. In particular, even though patterns widely vary across different example distributions , unique information can significantly drop when crosses a critical value from below—a feature observed in both shown and non-shown examples. As we know that the unique information is continuous, the apparent discontinuity should be one of the bottleneck probability itself (see Proposition 7 and Remark 3). This is consistent with the observation from Section 2.3 that, at symbol splits, the trajectory of often seems to go through a discontinuity. Further, the fact that the sharp variation in UI is a decrease in this quantity (in increasing order of ) is intuitively consistent with the fact that the bottleneck trajectory’s discontinuity often induces a sudden “widening” (in increasing order of ) of

Indeed, for fixed , when crosses a critical value from below, the corresponding symbol split means that “widens” by opening up a new dimension, so it “more easily” encompasses , yielding as a consequence a drop in unique information. Recalling our intuition (see Section 2.2) that describes the information content that a bottleneck T contains about the source X, the feature just described can be interpreted in the following way: the IB bifurcations seem to induce a sudden “expansion” (in increasing order of ) of the information content carried by the bottleneck about the source, which makes the latter’s content more easily contain the information content of coarser bottlenecks.

Note, however, that these simple numerical results do not allow one to discriminate between the interpretation of the UI’s sharp variations at bifurcations as a discontinuity with regard to trade-off parameters, or a discontinuity of the UI’s differential. For instance, if the derivative with regard to discontinuously takes a value close to for slightly larger than some , then the UI graph can seem discontinuous at finite numerical resolution, even if, formally, only the UI’s differential is so. On the other hand, as an example, bifurcations can be characterised precisely as points of discontinuities of the derivatives, with regard to the trade-off parameter, of and [43,75], even though the functions themselves are continuous [2,75]. A more involved analysis distinguishing discontinuities of UI from those of its differential is left to future work. In any case, the interpretation as a discontinuity of the differential rests on a weaker assumption, which is still sufficient for explaining the numerical results.

More generally, these results suggest that for a several-stage processing that is IB-optimal at each stage, to minimise the information discarded along stages, the trade-off parameters should rather lie close to well-chosen IB bifurcations. If this happens to be a general feature of the IB framework, it would have implications for incremental learning. Indeed, coming back to the modelling of embodied agents (see Section 1), for instance, it would mean that organisms that are poised close to information optimality by evolution should have a very specific structure of developmental learning, where the stages of learning should be discrete and determined by the right trade-off parameters.

Eventually, a last crucial feature was also satisfied on these minimal examples: whatever the structure of bottleneck trajectories, the maximal UI was significantly lower than the mutual information between the external source X and the system’s internal representations . More precisely, for an extension of and that achieves the minimum in (8), let us define

Note that decomposing , where , with the chain rule for mutual information shows that this quantity only depends on and : thus here, is indeed well-defined by and . The maximum ratio over all trade-off parameters was typically of the order of in our minimal experiments; for instance, it was , , , , , and for the IB problems corresponding to Figure 7, Figure 8, Figure 9, Figure 10, Figure 11 and Figure 12, respectively. Among all the (shown and non-shown) studied examples, it never exceeded , and we did not notice an increase in this maximum ratio when the source or relevancy cardinalities were increased (the largest cardinalities that we experimented with were , ). In short, even though several-stage processing might incur a non-negligible loss of information optimality in the IB sense, these results suggest that this loss could often be significantly limited. Of course, here as in Section 2.3, on the one hand, the numerical results are purely phenomenological, and, on the other, it is at this stage far from being clear that the qualitative insights brought by these minimal experiments generalise well to more complex situations. However, they exhibit the potentially crucial qualitative features of exact and soft successive refinement in the IB framework, which can be targeted by further theoretical research.

4. Alternative Interpretations: Decision Problems and Deep Learning

The notion of successive refinement presented in this work builds on the intuition of the optimal incorporation of information. However, alternative interpretations can be given to the very same mathematical notion. First, thanks to the Sherman–Stein–Blackwell theorem [45,65], the rate-distortion-theoretic notion of SR can be shown to be equivalent to a specific order relation between the encoder of the finer bottleneck and that of the coarser one , namely the Blackwell order. This point of view turns SR into an operational decision-theoretic statement; in short, there is SR when, for any task and any source distribution , the optimal performance is better (or at least as good) when decisions are based on the output of than when they are based on the output of . Second, the Markov chain (4) characterising successive refinement makes it directly relevant [46] to the IB analysis of deep neural networks [49,50,51,52,53,54,55,56]. In the next two sections, we make these connections explicit and relate them to this paper’s investigations.

4.1. Successive Refinement, Decision Problems, and Orders on Encoder Channels

Here, we show that exact and soft successive refinement can be, in the discrete case at least, understood in terms of optimally solving decision problems on arbitrary tasks, through orders on the encoder channels (or more precisely, pre-orders: i.e., we will consider binary relations that are reflexive and transitive). We will rely on [45], where these orders were considered.

Let us first make clear what we mean here by a decision problem. Consider a state variable X over a finite set , another finite set of possible actions, and a reward function that depends on both the value x of the state X, and the chosen action . The agent’s observation is not the state X itself, but only the output T of X through some stochastic channel (where we assume here that the observation space is finite). To each observation-dependent policy corresponds an expected reward

where is determined from , through the Bayes rule, and denotes the product measure of and . Solving the decision problem for the observation channel means choosing a policy that yields an optimal expected reward

For instance, any Markov decision process can be seen as a decision problem as defined above (for discrete time and finite state-space, number of possible actions at each state, and horizon). In this case, and are the spaces of state trajectories and observation trajectories, respectively, that an agent can go through along one episode; is the space of action sequences that can be chosen along the episode; and u is the cumulative reward, i.e., the (potentially discounted) sum of rewards obtained at each time-step in the episode. (See, e.g., [76] for more details on the terminology used in this example.)

We can now define the following order [45]:

Definition 10.

For two channels κ and μ, we write , if, for any decision problem , we have

In short, means that, for any conceivable task based on any data distribution over the fixed data space , the observation channel can yield a performance at least as good as that of the observation channel —if combined with a well-chosen policy. The second order is the Blackwell order [65]:

Definition 11.

For two channels κ and μ, we write if there exists a channel η such that , where “∘” denotes the composition of channels, i.e., such that , where , , and are the column transition matrices corresponding to μ, η, and κ.

It turns out that successive refinement can be characterised by either of these two orders, thanks to the Sherman–Stein–Blackwell theorem [45,65]. In other words, SR, which is a priori not a decision-theoretic statement, turns into one through its equivalence with the Blackwell order:

Proposition 8.

Let . The following are equivalent:

- (i)

- There is successive refinement for parameters .

- (ii)

- There are bottlenecks of respective parameters such that

- (iii)

- There are bottlenecks of respective parameters such that

Proof.

Using the Markov chain characterisation (point in Proposition 1), the result is nothing more than a reformulation of Theorem 4 in [45] in the language of the present paper. Note that, to use this theorem, we need to assume that the source X is fully supported, but this is indeed an assumption that we are using along the whole paper because it does not incur any loss of generality (see Section 1.3). □

Let us highlight the intuitive meaning of Proposition 8. Point means that there is SR when the coarse representation can be retrieved by post-processing the finer representation —which has implications in terms of feed-forward processing (see Section 4.2).

Now, the equivalence of SR with point relies on the mathematically deep part of the Sherman–Stein–Blackwell theorem [45], and provides a new operational meaning to SR. Namely, there is SR when, for any distribution on the source, and any reward function, the optimal performance is at least as good when the decisions are based on the output of , seen as an observation channel, than when they are based on the output of . Let us stress that the fact that defines a finer bottleneck than crucially depends on , i.e., on the specific source distribution , and on how the latter relates to the specific relevancy variable through . Proposition 8 shows that SR describes a much more “universal” relation between the channels and .

For example, assume that evolution poises the sensors of a given biological organism at optimality in the IB sense [10,16], i.e., if X is the environment, S some sensor’s output (e.g., a retina’s ganglion cells activation), and Y a behaviourally relevant feature (e.g., the edibility of food), then S is a bottleneck for . Successive refinement here means that if the sensor is finer than as a bottleneck for the fixed feature Y relevant to a particular task, then will afford to the organism—if combined with the right decision making—better performances than on any other task, for any other input distribution . In other words, is then “universally better” than , which is a very strong (and somewhat unexpected) generalisation.

Eventually, the unique information that we chose as our measure of soft SR has initially been thought precisely as measuring the deviation from the order “” (see arguments in [45]). Unique information can thus, for instance, be understood as quantifying the deviation from a finer IB-optimal sensor to be “universally better” than a coarser one.

4.2. Successive Refinement and Deep Learning

As suggested by Remark 1 and Proposition 8-, successive refinement can be equally well understood in terms of feed-forward processing, an interpretation which is particularly relevant to deep neural networks. Indeed, while the information bottleneck theory of deep learning [49,50,51] is still under debate [52,53,54,55,56], our results can be connected to some of this theory’s specific claims concerning the benefits of hidden and output layers’ IB-optimality.

Let denote the successive layers of a feed-forward deep neural network (DNN), which is fed with an input X and attempts to extract, within it, information about a target variable Y,thus satisfying the Markov chain [49]