Abstract

Network epidemiology plays a fundamental role in understanding the relationship between network structure and epidemic dynamics, and identifying influential spreaders is an especially important problem in this field. Most previous studies propose a centrality measure based on network topology to reflect the influence of spreaders, but such measures show limited universality. Machine learning improves the identification of influential spreaders by combining multiple centralities. However, several centrality measures used in machine learning methods, such as closeness centrality, have high computational complexity on large networks. Here, we propose a two-phase feature selection method that identifies influential spreaders with a reduced feature dimension. Depending on the definition of influential spreaders, we obtain the optimal feature combination for different synthetic networks. Our results show that when the data sets are mildly or moderately imbalanced, combinations of centralities that include the two-hop neighborhood are fundamental for Barabási–Albert (BA) scale-free networks, and combinations that include degree centrality are essential for Erdős–Rényi (ER) random graphs. Meanwhile, feature selection is unnecessary for Watts–Strogatz (WS) small-world networks. We also conduct experiments on real-world networks, and the selected features are highly similar to those selected for synthetic networks. Our method provides a new path for identifying superspreaders to control epidemics.

1. Introduction

Epidemics threaten human health and social stability, as the recent COVID-19 pandemic has shown. Because pandemics severely disrupt daily life, research on mitigating their impact is attracting increasing attention. Moreover, given the promising performance of machine learning, studies applying machine learning to pandemics have grown in the past few years. These studies explore different types of data, including text data such as patients' blood test reports [1]; audio data such as cough sounds [2]; image data such as X-ray, CT, and ultrasound scans [3]; and multimodal data [4]. Beyond such research on clinical diagnosis, identifying influential individuals during an epidemic's spread is also an effective instrument for controlling its propagation.

Theoretical epidemiology [5] describes the spreading of an epidemic with quantitative mathematical models. Later, complex network theory opened a new direction for spreading dynamics: the transmission of infectious diseases in real systems can be abstracted as a dynamical process on a complex network, that is, on graph data. In network epidemiology, the influence of a node is given by the expected outbreak size when the disease starts from that node.

However, directly estimating the influence of each individual spreader is challenging. In previous studies, traditional centrality methods [6,7] focus on a network's structural properties and treat the top-ranked nodes under a given centrality as influential spreaders. In general, centralities can be classified into local and global measures [8]. Local measures focus on information about a node's neighborhood, such as degree centrality [9]. Global measures, on the other hand, mainly describe a node's position in the whole network. For instance, closeness centrality [9] evaluates a spreader by its average shortest-path distance to all other nodes, while betweenness centrality [9] counts how often the shortest paths between node pairs pass through the spreader. K-shell decomposition [10] and its extensions decompose the network into hierarchical layers. Moreover, eigenvector centrality [11] is computed iteratively; its variants include Katz centrality [12] and PageRank [13].

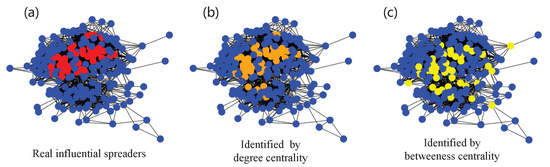

At present, however, different centrality methods describe structural properties from different perspectives, so there is no uniform standard for defining centrality. In addition, a single centrality usually captures limited topological information and therefore cannot fully reflect the real spreading potential of each spreader. For instance, as shown in Figure 1, neither degree centrality (Figure 1b) nor betweenness centrality (Figure 1c) recovers the influential spreaders found by simulation (Figure 1a).

Figure 1.

Identifying the top 20% influential spreaders in the human social network Jazz. (a) Real influential spreaders (red nodes) ranked by simulation results using Susceptible–Infectious–Recovered (SIR) dynamics. (b) Influential spreaders (orange nodes) ranked by degree centrality. (c) Influential spreaders (yellow nodes) ranked by betweenness centrality.

To overcome the deficiencies of traditional centrality methods, machine learning has been introduced as a new tool that learns hidden patterns from existing data and makes predictions automatically [14]. In network epidemiology, the coupling between the underlying network topology and the dynamical outcome is hard to express explicitly, and machine learning offers a way to approximate it. Recently, several interdisciplinary works integrating network epidemiology and machine learning have opened new ways to tackle problems such as epidemic threshold identification [15,16], basic reproduction number prediction [17], source tracing [18], state transition probability estimation [19], and inference of individuals' health states [20].

For identifying influential spreaders, machine learning methods take structural attributes as input, and the tasks fall broadly into regression and imbalanced classification. Regression tasks predict the size of the epidemic outbreak. For example, Rodrigues et al. used an artificial neural network (ANN) and random forest (RF) to predict disease dynamic variables and to assess the importance of the centrality-based attributes of patient zero [21]. Bucur et al. used a support vector machine (SVM) and RF to estimate the exact expected outbreak size computed over all possible infection paths, and found that a spectral-based centrality plus a property based on edge density suffices for the task [22]. For the imbalanced classification problem, Zhao et al. defined a machine learning process to distinguish the infection capability of spreaders and, by comparing seven classifiers, demonstrated their effectiveness and scalability [23]. Bucur found that, with an SVM, the combination of a local centrality and a global centrality can draw a decision boundary that separates superspreaders from other nodes [24]. Deep learning has recently become an active research direction in machine learning. Yu et al. generated a feature matrix from the adjacency matrix and node degrees and solved the regression problem with a convolutional neural network (CNN) [25]. Zhao et al. proposed InfGCN, a model based on a graph convolutional network (GCN), to separate the minority of influential spreaders from the majority of less influential ones [26].

Machine learning methods that integrate multiple centralities are more predictive than any single centrality. However, previous authors chose their centrality features subjectively. For instance, Zhao et al. used the combination of degree [9], betweenness centrality [9], closeness centrality [9], and clustering coefficient [27] as features [26], chosen without a specific criterion. Moreover, as the network size grows, some of the chosen centralities, such as betweenness and closeness centrality [9], become computationally expensive, and they have been reported to be of little use for the identification task [22]. Therefore, to improve efficiency, it is crucial to find the optimal combination of centralities for identifying influential spreaders.

Previous studies addressed this issue with exhaustive search [22,24], which itself has a high computational cost and ignores the relationship between local and global centralities. Instead of searching over pairs of features, feature selection methods reduce feature redundancy and optimize the feature combination. Broadly, feature selection methods fall into three classes: filter, wrapper, and embedded methods [28]. A filter method is independent of the subsequent machine learning model, as it scores features only by their own properties or by the correlation between features and labels [29]. In contrast, wrapper and embedded methods involve a machine learning model [29]. A wrapper method uses the performance of a classifier, such as an SVM, to evaluate feature subsets. To improve efficiency, some wrapper methods use intelligent algorithms such as particle swarm optimization (PSO) as their search strategy [30,31]. An embedded method, such as RF, obtains feature importance while performing the classification task. Building on these methods, hybrid and ensemble feature selection methods have developed significantly [28]: hybrid methods combine different types of feature selection to improve performance, while ensemble methods address the instability of feature selection. However, these methods are not well suited to imbalanced data: the feature subsets they select are biased toward the majority class, which undermines the reliability of classification on the minority class [32].

In summary, a single heuristic centrality lacks predictive power, and machine learning methods choose centralities as features manually without a consistent standard, which leads to redundant, computationally expensive features. To select important centralities adaptively, we propose a two-phase feature selection method for identifying influential spreaders. Because the data are imbalanced, the initial selection, which seeks the optimal combination of centralities, is an ensemble feature selection that uses sampling to balance the data and a wrapper technique to select features. To obtain representative centralities, the secondary selection uses hierarchical clustering [33] and a filter technique. Applying our method to three types of synthetic networks, we find that combinations of the two-hop neighborhood with PageRank or degree are more effective for identifying influential spreaders in BA networks, while the combination of degree with one-hop or two-hop neighborhood centralities is key for ER networks. Classifiers using the features selected by our method achieve competitive performance at a remarkably reduced computational cost. For WS networks, the identification task requires incorporating as many features as possible, so feature selection is unnecessary. We also validate our method on real-world networks, which helps us identify the most important feature for each network.

The rest of the paper is organized as follows. Section 2 presents our method, including the generation of features and labels and the feature selection method. Section 3 reports the performance of our method in comparison with other baselines. We conclude in Section 4 and discuss future work in Section 5.

2. Methods

In this section, we introduce our machine learning scheme, including the proposed feature selection method for identifying influential spreaders in disease dynamics, as shown in Figure 2. The scheme has two components: the description of the data (the original network and, to its right in Figure 2, the contents of the data set) and the training and testing process. First, we construct the data sets from which the machine learning models learn. Given a network, we choose centralities that reflect its structural characteristics as features and label each node as a superspreader or not according to the results of disease dynamics on the network. Then, during training, because some centralities such as betweenness centrality have high computational complexity, we apply a two-phase feature selection method that reduces the feature dimension while maintaining high classifier performance. Finally, we train a classifier on the training set using the selected features and evaluate it on the corresponding testing set.

Figure 2.

The machine learning scheme for identifying influential spreaders for disease dynamics. In the original network, the color of the node represents its spreading capability. Red nodes are influential spreaders while blue nodes are less influential spreaders.

2.1. Data Set Generation

2.1.1. Features Based on Centralities

For disease dynamics, the topological attributes of the infection source affect the spreading outcome. We associate the infection capability of a spreader with classical centrality measures, which characterize the network structure from different perspectives. For a network G with N nodes, the feature vector of node i is $\mathbf{x}_i = (x_{i1}, x_{i2}, \ldots, x_{id})$, $i = 1, 2, \ldots, N$, where d is the number of original features obtained from different centrality methods. Following previous work, we select nine typical centrality measures, listed in Table 1: (1) neighborhood-based centralities, including degree (K) [9], one-hop neighborhood [34], two-hop neighborhood [34], K-shell (KS) [10], and clustering coefficient (C) [27]; (2) path-based centralities, including betweenness centrality (B) [9] and closeness centrality [9]; and (3) spectral-based centralities, including eigenvector centrality [11] and PageRank [13]. Among these, the neighborhood-based centralities other than KS are local measures, while the path-based and spectral-based centralities are global measures.

Table 1.

Selected centralities. In the formulas, A is the adjacency matrix, with $A_{ij} = 1$ if node i is connected to node j and $A_{ij} = 0$ otherwise; $\Gamma_1(i)$ is the one-hop neighbor set of node i; $\Gamma_2(i)$ is the two-hop neighbor set of node i; $E_i$ is the number of edges among the elements of $\Gamma_1(i)$; $\sigma_{st}$ is the number of shortest paths between nodes s and t; $\sigma_{st}(i)$ is the number of those shortest paths that pass through node i; $d_{ij}$ is the distance between nodes i and j; $\lambda_{\max}$ is the leading eigenvalue of A; and the two remaining parameters are hyperparameters.
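To make the neighborhood- and path-based definitions concrete, here is a minimal pure-Python sketch of four of the centralities in Table 1: degree, clustering coefficient, closeness, and K-shell. It assumes an undirected, unweighted graph stored as a dict that maps integer node ids to sets of neighbors (a hypothetical representation chosen for illustration). Closeness is computed within each node's connected component, which for a connected graph equals $(N-1)/\sum_j d_{ij}$. This is an illustrative sketch, not the authors' implementation; in practice a graph library would normally be used.

```python
from collections import deque

def degree(adj):
    """Degree K: number of neighbours of each node."""
    return {v: len(nbrs) for v, nbrs in adj.items()}

def clustering(adj):
    """Local clustering coefficient C_i = 2 E_i / (k_i (k_i - 1)); 0 when k_i < 2."""
    coeff = {}
    for v, nbrs in adj.items():
        k = len(nbrs)
        if k < 2:
            coeff[v] = 0.0
            continue
        # E_i: edges among the neighbours of v (each unordered pair counted once)
        e = sum(1 for a in nbrs for b in nbrs if a < b and b in adj[a])
        coeff[v] = 2.0 * e / (k * (k - 1))
    return coeff

def closeness(adj):
    """Closeness from BFS distances: (reachable nodes - 1) / sum of distances to them."""
    result = {}
    for s in adj:
        dist = {s: 0}
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for w in adj[u]:
                if w not in dist:
                    dist[w] = dist[u] + 1
                    queue.append(w)
        total = sum(dist.values())
        result[s] = (len(dist) - 1) / total if total else 0.0
    return result

def k_shell(adj):
    """K-shell index: repeatedly peel nodes whose remaining degree is <= k."""
    deg = {v: len(nbrs) for v, nbrs in adj.items()}
    remaining, shell, k = set(adj), {}, 0
    while remaining:
        peel = [v for v in remaining if deg[v] <= k]
        if not peel:
            k += 1
            continue
        for v in peel:
            shell[v] = k
            remaining.discard(v)
            for u in adj[v]:
                if u in remaining:
                    deg[u] -= 1
    return shell
```

For example, on a triangle 0-1-2 with a pendant node 3 attached to node 2, nodes 0, 1, and 2 fall in the 2-shell and node 3 in the 1-shell.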

2.1.2. Labels Based on the SIR Model

In network epidemiology, it is commonly assumed that the network evolves on a much slower time scale than the epidemic dynamics; thus, the underlying network is treated as static. During the spreading process, the influence of each spreader is measured by the outbreak size when it is taken as the seed. To obtain the outbreak size caused by each seed spreader, we adopt the SIR compartmental model because it reaches an absorbing state, i.e., the number of infected individuals drops to zero within a finite time. Beyond disease dynamics, the SIR model is also used for rumor spreading, information spreading, and related processes [6]. The SIR model defines three states: susceptible, infectious, and recovered. A node changes its state according to transition probabilities until the system reaches a steady state. At every time step t, each infected node transmits the disease to each of its susceptible neighbors with infection rate $\beta$ and recovers with recovery rate $\mu$. The effective infection rate of the disease dynamics is defined as $\lambda = \beta/\mu$. For simplicity, we keep $\mu$ fixed. The epidemic threshold $\lambda_c$ is the critical value that separates the regime in which a disease outbreak occurs from the one in which it does not. Here, $\lambda_c$ is estimated numerically with the variability measure [35]:

$$\Delta = \frac{\sqrt{\langle \rho^2 \rangle - \langle \rho \rangle^2}}{\langle \rho \rangle},$$

where $\rho$ is the fraction of infected nodes in an SIR process started from a random seed node and $\langle \cdot \rangle$ denotes the average over realizations. The observable $\rho$ remains constant once the system reaches its stationary state and is given by

$$\rho = \frac{1}{N}\sum_{i=1}^{N} \sigma_i,$$

where $\sigma_i$ denotes the binary dynamical state of node i: $\sigma_i = 1$ means that node i has been infected, and $\sigma_i = 0$ means that node i is still susceptible. Over a finite range of $\lambda$, the variability measure reaches its maximum at the epidemic threshold $\lambda_c$.
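The threshold estimation can be sketched as follows: a discrete-time SIR run from a single seed, the variability measure $\Delta$ over runs with random seeds, and a scan over candidate effective infection rates that returns the one maximizing $\Delta$. This is a minimal standard-library sketch under stated assumptions: the graph is a dict of neighbor sets, in each step every infected node infects each susceptible neighbor with probability $\beta = \lambda\mu$ and then recovers with probability $\mu$, $\mu = 1$ by default, and the function names are hypothetical.

```python
import math
import random

def sir_outbreak(adj, seed, beta, mu, rng):
    """Run one discrete-time SIR process from `seed`; return the fraction of nodes ever infected."""
    assert mu > 0, "mu must be positive so that the process reaches an absorbing state"
    infected, recovered = {seed}, set()
    while infected:
        newly_infected = set()
        for u in infected:
            for v in adj[u]:
                susceptible = v not in infected and v not in recovered and v not in newly_infected
                if susceptible and rng.random() < beta:
                    newly_infected.add(v)
        # Each currently infected node recovers with probability mu.
        still_infected = {u for u in infected if rng.random() >= mu}
        recovered |= infected - still_infected
        infected = still_infected | newly_infected
    return len(recovered) / len(adj)

def variability(adj, beta, mu, runs, rng):
    """Delta = sqrt(<rho^2> - <rho>^2) / <rho>, with rho from runs seeded at random nodes."""
    nodes = list(adj)
    rhos = [sir_outbreak(adj, rng.choice(nodes), beta, mu, rng) for _ in range(runs)]
    mean = sum(rhos) / runs
    mean_sq = sum(r * r for r in rhos) / runs
    return math.sqrt(max(mean_sq - mean * mean, 0.0)) / mean

def estimate_threshold(adj, lambdas, mu=1.0, runs=200, seed=0):
    """Return the candidate lambda = beta / mu that maximises Delta (beta capped at 1)."""
    rng = random.Random(seed)
    return max(lambdas, key=lambda lam: variability(adj, min(1.0, lam * mu), mu, runs, rng))
```

In practice the candidate grid of $\lambda$ values would be fine-grained around the expected threshold, and the number of runs large enough for $\langle\rho\rangle$ and $\langle\rho^2\rangle$ to stabilize.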

We treat the identification of influential spreaders in a network as a classification problem. Each node i is labeled according to the result of the SIR model for a given effective infection rate $\lambda$. Specifically, to quantify the infection ability of each node, we take each node $i = 1, 2, \ldots, N$ in turn as the single seed while all other nodes are susceptible, and simulate the SIR process on the network with the Monte Carlo method. We repeat the simulation multiple times and record the average outbreak size $\rho_i(\lambda)$ of node i. Since these results are fine-grained, we sort $\rho_i(\lambda)$ in descending order and define the top f of nodes, where f is the percentage of influential spreaders, as influential spreaders and the remaining nodes as less influential spreaders: a node in the top f is labeled as influential (the positive class), and every other node as less influential (the negative class). The task is to classify each node as influential or not. Table 2 gives a sample of the data set.
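The labeling rule can be illustrated with a short sketch, assuming the averaged Monte Carlo outbreak sizes $\rho_i(\lambda)$ are already available as a dict from node to value. The 1/0 encoding of influential and less influential spreaders, the function name, and the round-half-up cutoff for the top f are assumptions made for illustration.

```python
def label_top_f(avg_outbreak, f):
    """Label the top fraction f of nodes (by mean outbreak size) as 1 and all others as 0."""
    ranked = sorted(avg_outbreak, key=avg_outbreak.get, reverse=True)
    n_top = max(1, int(f * len(ranked) + 0.5))  # round half up, keep at least one node
    top = set(ranked[:n_top])
    return {node: int(node in top) for node in avg_outbreak}

scores = {"a": 0.9, "b": 0.1, "c": 0.5, "d": 0.3, "e": 0.7}
print(label_top_f(scores, 0.4))  # {'a': 1, 'b': 0, 'c': 0, 'd': 0, 'e': 1}
```

A smaller f yields a more imbalanced data set, since fewer nodes fall into the positive class.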

Table 2.

A sample of the data set in a BA network with , .

2.2. A Two-Phase Feature Selection Method

Because some of the centrality methods fed into the classifier have high time complexity, it is necessary to optimize the feature combination. Unlike previous works that used exhaustive search, our study focuses on how to apply feature selection to the task of identifying influential spreaders. Specifically, for imbalanced data sets, we propose a two-phase feature selection method in the training process that seeks the best combination of features from spreading data. The initial selection deals with the imbalanced data sets, and the secondary selection chooses more representative features from the result of the initial selection.

2.2.1. Initial Selection of the Features

Given the imbalanced data distribution produced by the spreading model, we design the initial selection to perform feature selection on imbalanced data sets, as shown in Figure 3. The initial selection is an ensemble feature selection that combines sampling with the wrapper technique [28]. We apply bootstrap sampling to balance the data sets: we randomly sample instances from the majority class until their number equals the number of minority-class instances. Each new balanced training set therefore contains the sampled less influential spreaders together with all of the (unsampled) influential spreaders.
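The balancing step can be sketched as follows, assuming binary labels with 1 for influential spreaders. Majority-class instances are drawn with replacement (bootstrap) until they match the minority-class count, and every minority instance is kept; repeating this with one random generator yields the k balanced training sets used in the ensemble. The function names are hypothetical.

```python
import random

def balanced_bootstrap(X, y, rng):
    """Keep every minority-class row and draw an equal number of majority rows with replacement."""
    pos = [i for i, label in enumerate(y) if label == 1]
    neg = [i for i, label in enumerate(y) if label == 0]
    minority, majority = (pos, neg) if len(pos) <= len(neg) else (neg, pos)
    sampled = [rng.choice(majority) for _ in range(len(minority))]
    idx = minority + sampled
    rng.shuffle(idx)
    return [X[i] for i in idx], [y[i] for i in idx]

def make_balanced_sets(X, y, k, seed=0):
    """Build k balanced training sets from one imbalanced training set."""
    rng = random.Random(seed)
    return [balanced_bootstrap(X, y, rng) for _ in range(k)]
```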

Figure 3.

The initial selection phase of the feature selection process. Red-colored words represent influential spreaders, while blue-colored words represent less influential spreaders.

Next, we select features on the balanced data sets. Given the versatility and scalability of the SVM, we adopt the wrapper technique in the form of SVM-based recursive feature elimination with cross-validation (SVM-RFE-CV) [36], a feature selection method that uses classifier performance as its evaluation criterion and eliminates the lowest-weight features one at a time. It proceeds in four steps:

- Step 1: Train SVM classifiers on the training data set with 10-fold cross-validation;

- Step 2: Sum the 10 importance scores of each feature across the folds, and accumulate the classifier performance over the folds;

- Step 3: Remove the least important feature;

- Step 4: Return to Step 1 until all features are eliminated.
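The four steps can be sketched in pure Python as below. To stay self-contained, the sketch swaps in a minimal linear SVM trained by stochastic subgradient descent on the L2-regularized hinge loss (not necessarily the solver used in the paper), uses squared weights as importance scores as in Guyon et al.'s SVM-RFE, measures performance by accuracy, and breaks ties in favor of the smaller subset. All of these choices, the function names, and the default hyperparameters are assumptions.

```python
import random

def train_linear_svm(X, y, lam=0.01, eta=0.01, epochs=100, seed=0):
    """Linear SVM via stochastic subgradient descent on the L2-regularised hinge loss.
    Labels are 0/1 and are mapped to -1/+1 internally."""
    rng = random.Random(seed)
    w, b = [0.0] * len(X[0]), 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            s = 1.0 if y[i] == 1 else -1.0
            margin = s * (sum(wj * xj for wj, xj in zip(w, X[i])) + b)
            if margin < 1:  # hinge loss active: regularisation step plus data step
                w = [wj - eta * (lam * wj - s * xj) for wj, xj in zip(w, X[i])]
                b += eta * s
            else:  # only the regularisation term contributes
                w = [wj * (1 - eta * lam) for wj in w]
    return w, b

def svm_rfe_cv(X, y, n_folds=10, seed=0):
    """Steps 1-4: cross-validate, score features by summed squared weights, drop the weakest."""
    rng = random.Random(seed)
    idx = list(range(len(X)))
    rng.shuffle(idx)
    folds = [idx[k::n_folds] for k in range(n_folds)]
    remaining = list(range(len(X[0])))
    best_subset, best_acc = list(remaining), -1.0
    while remaining:
        importance = {f: 0.0 for f in remaining}
        correct = 0
        for k, test in enumerate(folds):  # Step 1: k-fold cross-validation
            held_out = set(test)
            train = [i for i in idx if i not in held_out]
            X_train = [[X[i][f] for f in remaining] for i in train]
            w, b = train_linear_svm(X_train, [y[i] for i in train], seed=seed + k)
            for f, wf in zip(remaining, w):
                importance[f] += wf * wf  # Step 2: sum importance over folds
            for i in test:  # Step 2: accumulate fold performance (accuracy here)
                score = sum(wf * X[i][f] for f, wf in zip(remaining, w)) + b
                correct += int((score >= 0) == (y[i] == 1))
        accuracy = correct / len(X)
        if accuracy >= best_acc:  # ties favour the smaller subset (assumption)
            best_subset, best_acc = list(remaining), accuracy
        remaining.remove(min(remaining, key=importance.get))  # Step 3
    return best_subset, best_acc  # Step 4 ends when no feature is left
```

On a toy data set where only feature 0 separates the classes and features 1 and 2 are uniform noise, the elimination removes the noise features first and returns `[0]`.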

The best feature subset is the one with the highest classifier performance. We use a linear SVM with a fixed regularization parameter as the classifier and take the feature weights as the importance scores. Inspired by ensemble learning, we run SVM-RFE-CV on k different balanced training data sets and obtain k feature subsets. We then count how often each feature appears across these subsets, which amounts to a vote over the subsets; if a feature's frequency exceeds a given threshold, the feature is added to the subset produced by the initial selection. Overall, the initial selection makes full use of the imbalanced spreading data and, by drawing on diverse feature subsets, improves the stability of the feature selection.
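The vote over the k subsets can be sketched as below. It assumes features are identified by sortable ids and reads "goes beyond the threshold" as strictly greater than; the threshold is a fraction of k, and the function name is hypothetical.

```python
from collections import Counter

def vote_features(subsets, threshold):
    """Keep the features selected in more than `threshold` (a fraction) of the k subsets."""
    k = len(subsets)
    counts = Counter(f for subset in subsets for f in set(subset))
    return sorted(f for f, c in counts.items() if c / k > threshold)

print(vote_features([[0, 1], [0, 2], [0, 1, 3], [1, 0]], threshold=0.5))  # [0, 1]
```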

2.2.2. Secondary Selection of the Features

However, the initial selection cannot remove redundant features. To obtain representative features that capture structural information at different levels, we further perform the secondary selection using hierarchical clustering and the filter technique. Figure 4 illustrates the whole process.

Figure 4.

The secondary selection of the feature selection process. The colors represent the different clusters to which the features belong.

Starting from the feature subset produced by the initial selection, we first measure feature similarity with a correlation matrix: for each pair of features i and j, we compute the Pearson correlation coefficient $r_{ij}$, which measures their linear association:

$$r_{ij} = \frac{\sum_{n}(x_{i,n} - \bar{x}_i)(x_{j,n} - \bar{x}_j)}{\sqrt{\sum_{n}(x_{i,n} - \bar{x}_i)^2}\,\sqrt{\sum_{n}(x_{j,n} - \bar{x}_j)^2}},$$

where $x_{i,n}$ is the value of feature i for node n, the sums run over the nodes in the training set, and $\bar{x}_i$ is the mean of feature i. The coefficient $r_{ij}$ lies in $[-1, 1]$, and a larger $r_{ij}$ indicates higher redundancy between features i and j.

We then cluster the features hierarchically. Initially, every feature forms its own cluster; then, guided by the correlation matrix, the two clusters with the largest correlation coefficient are merged at each step. Since previous work [22,24] divided features into two categories and found that at least two features are needed to identify top spreaders, we set the number of final feature clusters to two.
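A minimal sketch of the correlation matrix and the agglomerative clustering into two clusters follows. The text does not specify how the correlation between two multi-feature clusters is measured, so the sketch assumes average linkage over the signed coefficients $r_{ij}$. It also assumes that no feature is constant: a constant column, such as K-shell in BA networks, makes $r_{ij}$ undefined and should be dropped first. The function names are hypothetical.

```python
import math

def pearson(a, b):
    """Pearson correlation r between two equally long feature columns."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (z - mb) for x, z in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((z - mb) ** 2 for z in b))
    return cov / (sa * sb)

def correlation_matrix(columns):
    """columns[i] holds the values of feature i over the training nodes."""
    return [[pearson(ci, cj) for cj in columns] for ci in columns]

def cluster_features(r, n_clusters=2):
    """Agglomerative clustering: repeatedly merge the pair of clusters with the largest
    average r (average linkage is an assumption) until n_clusters remain."""
    clusters = [[i] for i in range(len(r))]

    def similarity(c1, c2):
        return sum(r[i][j] for i in c1 for j in c2) / (len(c1) * len(c2))

    while len(clusters) > n_clusters:
        pairs = [(p, q) for p in range(len(clusters)) for q in range(p + 1, len(clusters))]
        p, q = max(pairs, key=lambda pq: similarity(clusters[pq[0]], clusters[pq[1]]))
        clusters[p] = clusters[p] + clusters[q]
        del clusters[q]  # q > p, so index p is unaffected
    return clusters
```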

Finally, for each cluster, we generate the final feature subset using a filter technique, namely the ReliefF algorithm [37], which measures how well each feature discriminates between the classes. Another important choice is the critical number of selected features per cluster: if a cluster contains more features than this critical number, we select a main feature and a supplementary feature; otherwise, we select only a main feature. The main feature of a cluster is the one with the largest ReliefF weight, and the supplementary feature is the one with the weakest linear association with the main feature according to the correlation matrix. Algorithm 1 shows the pseudocode of the proposed method.
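The per-cluster choice can be sketched as follows. The ReliefF part is a basic binary-class version (range-normalized Manhattan distance, k nearest hits and misses, each instance used once). The supplementary feature is taken as the one with the smallest $|r_{ij}|$ to the main feature, and the critical number of features is left as a parameter. These choices and the function names are assumptions made for illustration.

```python
def relieff(X, y, k=3):
    """Binary-class ReliefF: reward features that differ on nearest misses, penalise on nearest hits."""
    n, d = len(X), len(X[0])
    span = []
    for f in range(d):
        col = [row[f] for row in X]
        span.append((max(col) - min(col)) or 1.0)  # range normalisation; constant column -> 1

    def diff(f, a, b):
        return abs(X[a][f] - X[b][f]) / span[f]

    def dist(a, b):
        return sum(diff(f, a, b) for f in range(d))

    weights = [0.0] * d
    for i in range(n):  # every instance is used once
        others = sorted((j for j in range(n) if j != i), key=lambda j: dist(i, j))
        hits = [j for j in others if y[j] == y[i]][:k]
        misses = [j for j in others if y[j] != y[i]][:k]
        for f in range(d):
            if hits:
                weights[f] -= sum(diff(f, i, j) for j in hits) / (len(hits) * n)
            if misses:
                weights[f] += sum(diff(f, i, j) for j in misses) / (len(misses) * n)
    return weights

def pick_from_cluster(cluster, weights, r, critical):
    """Main feature: largest ReliefF weight. If the cluster has more than `critical`
    features, add the supplementary feature with the weakest |r| to the main one."""
    main = max(cluster, key=lambda f: weights[f])
    chosen = [main]
    if len(cluster) > critical:
        rest = [f for f in cluster if f != main]
        chosen.append(min(rest, key=lambda f: abs(r[main][f])))
    return chosen
```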

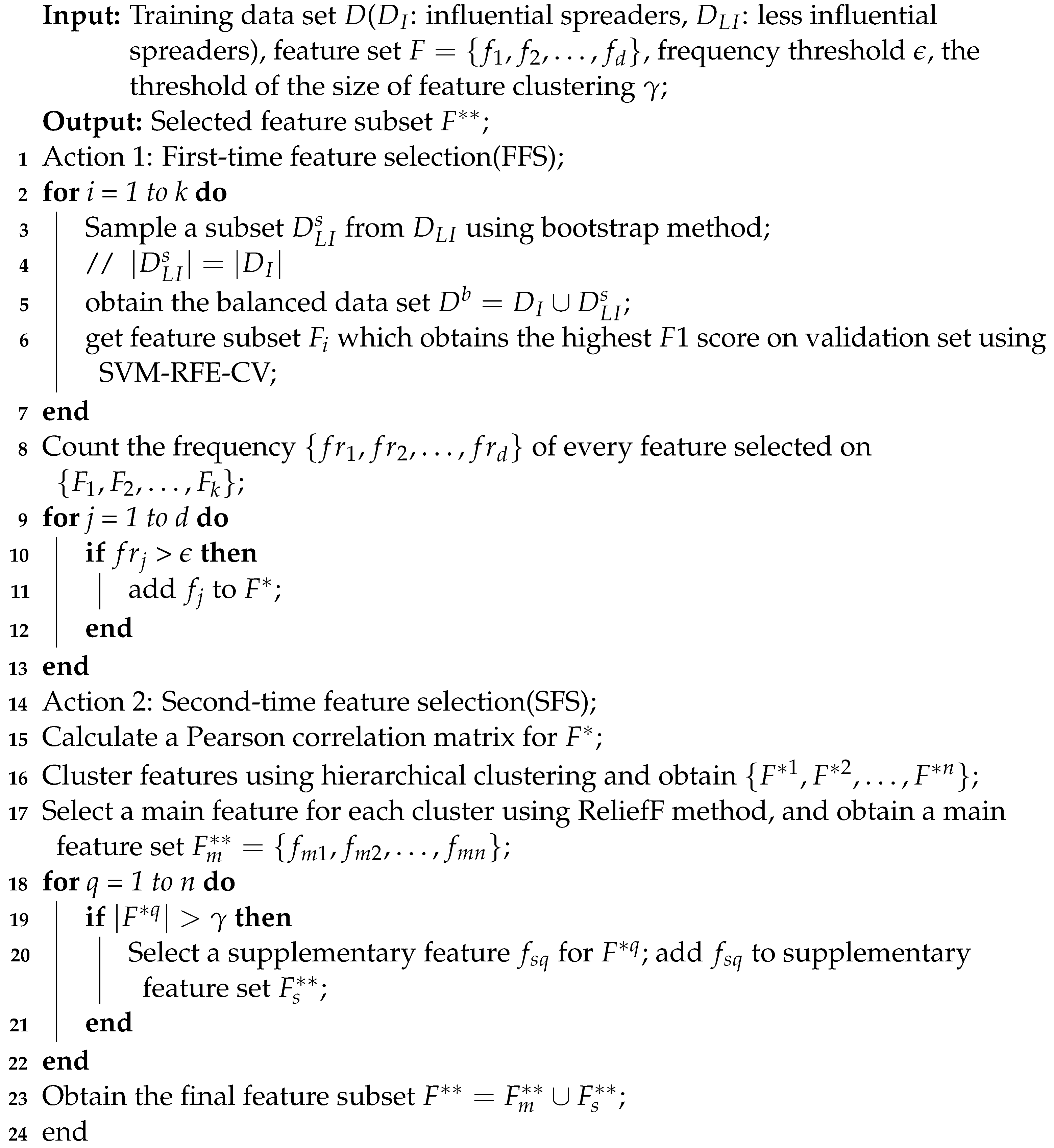

Algorithm 1: The proposed two-phase feature selection method FFS-SFS.

3. Results

Given the coupling between network structure and disease dynamics, we mainly use the proposed two-phase feature selection method to explore the optimal combination of centralities for different network models. We study three representative types of synthetic networks: BA scale-free networks [38], ER random graphs [39], and WS small-world networks [40]. The network size is N = 1000, and the average degree is about 6 (the BA networks in Section 3.1, for example, have 2991 edges). For each network model, we generate 10 networks. Finally, we also verify our method on real-world networks.

Since the networks are assumed not to evolve over time, the topological properties of each node remain invariant once the underlying network is fixed. We simulate the disease dynamics on each network and label each node i according to its average epidemic prevalence for a given effective infection rate and a given percentage f of influential spreaders. To explore epidemic behavior at different infection rates, we select three representative values of the effective infection rate. Similarly, to explore the impact of data imbalance, we choose four values of f.

Each data set is randomly split into two parts: 70% for training and 30% for testing. We repeat this process 30 times per data set, obtaining 30 selected feature subsets, and take the most frequent one as the final feature subset of that network. We then aggregate the results of all networks generated from the same network model and vote to select the optimal feature subset for that model.
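The repeated 70/30 split and the vote over the resulting feature subsets can be sketched as below. The helper names are hypothetical, and ties between equally frequent subsets are broken by first occurrence, which is how `collections.Counter.most_common` orders equal counts.

```python
import random
from collections import Counter

def split_indices(n, rng, train_frac=0.7):
    """Randomly split range(n) into training (70% by default) and testing indices."""
    idx = list(range(n))
    rng.shuffle(idx)
    cut = round(train_frac * n)
    return idx[:cut], idx[cut:]

def most_frequent_subset(subsets):
    """Return the feature subset that occurs most often (order inside a subset is ignored)."""
    counts = Counter(frozenset(s) for s in subsets)
    best, _ = counts.most_common(1)[0]
    return sorted(best)

print(most_frequent_subset([[0, 1], [1, 0], [2], [0, 1, 2]]))  # [0, 1]
```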

We systematically investigate how the network model, the effective infection rate, and the percentage of influential spreaders f affect the performance of the proposed feature selection method. We evaluate performance by the number of selected features and by the precision, recall, and F1-measure obtained on the testing sets. These metrics are based on the confusion matrix. Precision measures the proportion of true positives (TP) among the positive predictions:

$$\mathrm{Precision} = \frac{TP}{TP + FP},$$

where FP is the number of false positives. Recall measures the proportion of positive samples that are correctly identified:

$$\mathrm{Recall} = \frac{TP}{TP + FN},$$

where FN is the number of false negatives. The F1-measure balances precision and recall:

$$F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}.$$
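These definitions translate directly into code. The sketch below counts TP, FP, and FN for the positive (influential) class, assumed to be labeled 1, and returns 0 when a denominator is zero, a convention assumed here; the function name is hypothetical.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision = TP/(TP+FP), Recall = TP/(TP+FN), F1 = 2PR/(P+R); 0 when undefined."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

For example, with true labels `[1, 1, 0, 0, 1, 0]` and predictions `[1, 0, 0, 1, 1, 0]`, there are two true positives, one false positive, and one false negative, so all three metrics equal 2/3.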

To verify the effectiveness of the proposed method, First-time Feature Selection–Second-time Feature Selection (FFS-SFS), we compare it with 13 baselines: nine single centralities (degree, one-hop neighborhood, two-hop neighborhood, K-shell, clustering coefficient, betweenness, closeness, eigenvector centrality, and PageRank) and four feature selection methods:

- (1) Full feature set: no feature selection; the original feature set is used;

- (2) SVM-RFE-CV: SVM-RFE-CV is applied directly to the raw imbalanced training set;

- (3) First-time Feature Selection–ReliefF (FFS-ReliefF): features are filtered with ReliefF based on the result of the initial selection;

- (4) First-time Feature Selection–Weight (FFS-Weight): features are chosen by their frequency based on the result of the initial selection.

Neither FFS-ReliefF nor FFS-Weight includes feature clustering. Both select the same number of features as our method and differ only in the selection criterion used in the second phase.

We also conduct an ablation experiment to verify the effectiveness of each phase of our feature selection method: without (w/o) SFS, which uses only the initial selection of our method, and w/o FFS, which uses only the secondary selection.

3.1. Results on BA Networks

First, we focus on the results of our feature selection method on BA networks [38], whose degree distribution is heterogeneous, i.e., the network contains only a small number of hub nodes. We conduct the experiment on BA networks with 1000 nodes and 2991 edges, giving a network density of 0.005988.

3.1.1. Contrasting Experiment

Table 3 shows the sizes of the feature subsets selected by the five feature selection methods in 12 configurations (combinations of the effective infection rate and f), together with the features selected by our method. The original full set F contains nine centralities: degree, one-hop neighborhood, two-hop neighborhood, K-shell, clustering coefficient, betweenness, closeness, eigenvector centrality, and PageRank. Because every node in a BA network has the same K-shell value, which therefore carries no information about spreading potential, the full set in Table 3 contains eight centralities.

Table 3.

Contrasting experiment. Size of feature subsets on BA networks based on different feature selection methods.

Regarding the number of selected features (Table 3), SVM-RFE-CV applied to the imbalanced data selects almost all features, which shows that it is poorly suited to reducing feature dimensions on imbalanced data sets. In contrast, our method FFS-SFS substantially reduces the number of features in most scenarios with different epidemic parameters; both the initial selection and the clustering module help to filter features. Although FFS-ReliefF and FFS-Weight also reduce the number of features, the number of features they select has to be set manually.

To verify the effectiveness of our method, we compare it with eight single centralities and four feature selection methods. Figure 5 illustrates two performance metrics of classifiers fed with the different optimal feature subsets. As shown in Figure 5a,b, when the effective infection rate is , a single centrality (the first eight bars), , performs better than the other centralities across the whole range of f, while feature combinations (the other bars) outperform every single centrality because additional centralities bring more topological knowledge. In detail, the full feature set always performs the best. SVM-RFE-CV selects the full feature set for every f we tested, so its performance is quite close to that of the full feature set, indicating that it is insensitive to the imbalanced data distribution. FFS-ReliefF, FFS-Weight, and FFS-SFS all rely on the initial selection and differ only in the criterion used in the second phase. Although their performance declines only slightly, the feature dimensions are dramatically reduced. Compared with FFS-ReliefF and FFS-Weight, our method FFS-SFS generally performs better, which suggests that feature clustering is beneficial for selecting features in imbalanced classification. The results of FFS-Weight also show that choosing the features with the highest classifier weights is not necessarily optimal.

Figure 5.

Contrasting experiments on BA networks. (left column) and (right column) of classifiers based on different feature subsets obtained from 13 methods on data sets for different combinations of f and : (a,b) ; (c,d) ; (e,f) .

As for our feature selection method, FFS-SFS, we observe that it effectively reduces the feature dimensions while maintaining strong performance. Its is superior to that of the other methods over the whole range of f. Notably, when the data set is moderately imbalanced, i.e., f = or , of our method is even higher than that of the full feature set. As for , although there is a narrow margin between the full feature set and the one obtained with our method, the size of the feature set selected by our method decreases significantly. Specifically, when f is , of our method declines by only compared with the baseline. However, when the data set is extremely imbalanced, i.e., , of our method is worse. The reason is that undersampling in the initial selection works better on less imbalanced data sets; under extreme imbalance, the initial selection becomes volatile and introduces redundant features, which leads to errors in feature clustering. Moreover, of performs similarly to the full feature set.

Overall, when the effective infection rate is fixed, both performance metrics of the proposed feature selection methods gradually decrease as f increases, as shown in Figure 5a,b. This may be caused by noise in the hand-crafted labels: as f grows, nodes that are actually less influential are labeled as influential spreaders.

When , the feature combination methods still perform better than any single centrality, as shown in Figure 5c,d. Among the eight single centralities, - discriminates spreaders most effectively. Our feature combination outperforms the other combination methods measured by across the whole range of f and is close to the full feature set measured by , similar to the results for . In addition, compared with , scores show that our method has a slight advantage over -, improving by at least for and for . At , owing to fluctuations in the data and the greater knowledge of network topology, the performance gap becomes smaller.

When , the results are similar to those for , as shown in Figure 5e,f. is the best classifier among all the single centralities, especially when the data set is extremely imbalanced, i.e., , and it even outperforms some feature combination methods. The feature subset selected by our method still classifies spreaders steadily and improves precision.

3.1.2. Ablation Experiment

Table 4 shows the sizes of the feature subsets selected by w/o SFS, w/o FFS, and our method, FFS-SFS. We find that w/o SFS (our method without SFS), which executes only the initial selection, already removes a substantial share of the features in most scenarios across different combinations of the effective infection rate and f.

Table 4.

Ablation experiment. Size of feature subsets on BA networks based on different feature selection methods.

When , the performance of is similar to that of , while the feature subset it selects has fewer dimensions, as shown in Figure 6a,b. This shows that (our method without ), which performs only the secondary selection, performs poorly. The feature subset selected by includes -, , and for different f. The weakness of is that it tends to select when clustering features on the full set, whereas the results show that combinations with are less useful for identifying influential spreaders; in fact, has a low correlation with the spreading process. In contrast, our method selects fewer features than the variant without while maintaining similar performance on most spreading data sets across f. The results for (Figure 6c,d) and (Figure 6e,f) are very similar.
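The ablation results hinge on how features are clustered in the secondary selection. That clustering procedure is not specified in this section, so the sketch below swaps in a much simpler technique: greedy grouping of features by absolute Pearson correlation, keeping the first member of each cluster as its representative. The feature names, values, the 0.9 threshold and both helper names are hypothetical. The toy data also illustrate why strongly linearly correlated centralities are interchangeable: they collapse into a single cluster.

```python
import math


def pearson(a, b):
    """Pearson correlation of two equal-length lists (0.0 if either is constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb) if sa and sb else 0.0


def cluster_features(columns, threshold=0.9):
    """Greedy correlation clustering (a stand-in, not the paper's procedure).

    columns: dict mapping feature name -> list of values over nodes.
    A feature joins the first cluster whose first member it correlates with
    at |r| > threshold; otherwise it starts a new cluster.
    """
    clusters = []
    for name, values in columns.items():
        for cluster in clusters:
            if abs(pearson(values, columns[cluster[0]])) > threshold:
                cluster.append(name)
                break
        else:
            clusters.append([name])
    return clusters


# Hypothetical centrality values for five nodes.
cols = {
    "degree": [1, 2, 3, 4, 5],
    "two_hop": [2, 4, 6, 8, 11],  # nearly linear in degree
    "clustering": [0.5, 0.1, 0.4, 0.0, 0.3],
}
clusters = cluster_features(cols)
print(clusters)                  # [['degree', 'two_hop'], ['clustering']]
print([c[0] for c in clusters])  # one representative per cluster
```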

Figure 6.

Ablation experiments on BA networks. (left column) and (right column) of classifiers based on different feature subsets obtained by different model components on data sets for different combinations of f and : (a,b) ; (c,d) ; (e,f) .

Therefore, we obtain the optimal combination of centralities in BA networks using our feature selection method. When the data set is not extremely imbalanced (, , or ), either the combination of - and () or the combination of - and () can identify the top influencers in BA networks. Note that this is a natural result, since and are strongly linearly correlated. When , our feature selection method selects the combination of -, -, and to classify spreaders, which indicates that for , more knowledge of node centralities helps to identify influential spreaders. When the data set is extremely imbalanced (), centrality alone can classify spreaders (), and - becomes more important ().

3.2. Results on ER Networks and WS Networks

Unlike BA networks, ER networks [39] and WS networks [40] are homogeneous networks, whose degree distributions are narrower than those of BA networks. WS networks interpolate between nearest-neighbor-coupled networks (edge rewiring probability ) and ER networks (). We generate the WS networks using .
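The rewiring construction described above can be sketched in plain Python following the standard Watts–Strogatz recipe: build a ring lattice, then rewire each lattice edge with probability p. The function name, the sizes and the seeds are illustrative, and no particular p from this study is assumed. In practice one would typically call `networkx.watts_strogatz_graph(n, k, p)` instead. Rewiring moves edges without creating or deleting any, so the edge count stays at nk/2 for every p.

```python
import random


def watts_strogatz(n, k, p, seed=None):
    """Watts-Strogatz small-world graph as a set of undirected edges (u, v), u < v.

    Start from a ring lattice in which every node links to its k/2 nearest
    neighbours on each side; then, with probability p, move the far endpoint
    of each lattice edge to a uniformly chosen node, avoiding self-loops and
    duplicate edges. p = 0 keeps the lattice; p = 1 attempts to rewire every edge.
    """
    if k % 2 or k >= n:
        raise ValueError("k must be even and smaller than n")
    rng = random.Random(seed)
    edges = {tuple(sorted((u, (u + j) % n))) for u in range(n) for j in range(1, k // 2 + 1)}
    for j in range(1, k // 2 + 1):
        for u in range(n):
            e = tuple(sorted((u, (u + j) % n)))  # lattice edge, still present here
            if rng.random() < p:
                candidates = [w for w in range(n)
                              if w != u and tuple(sorted((u, w))) not in edges]
                if candidates:
                    w = rng.choice(candidates)
                    edges.remove(e)
                    edges.add(tuple(sorted((u, w))))
    return edges


lattice = watts_strogatz(20, 4, 0.0, seed=1)
small_world = watts_strogatz(20, 4, 0.3, seed=1)
print(len(lattice), len(small_world))  # 40 40
```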

3.2.1. Contrasting Experiment on ER Networks

Table 5 lists the sizes of the feature subsets obtained on ER networks. , -, -, and - all reduce the number of features to some extent. However, is unstable and fails to select features in most scenarios.

Table 5.

Contrasting experiment. Size of feature subsets in ER networks based on different feature selection methods.

Figure 7 shows the and scores of the feature subsets obtained by the baseline methods and by our method. As shown in Figure 7a, when , feature combinations achieve a better than single centralities, and our method improves for most values of f. In Figure 7b, however, when (), the score of - surpasses that of -. This is because the feature subset selected by - is the combination of and ; since these two centralities are strongly linearly correlated, combining them brings little advantage. Moreover, - performs better than both of them. We also find that the largest gap in score between our method and the baseline shrinks to , compared with in BA networks. This indicates that for identifying top spreaders in ER networks, supplementary knowledge plays only a minor role, even though the baseline feature set is four times larger than the feature combination selected by our method. Overall, when is fixed, and generally increase with f. This is because the degree distribution of ER networks follows a Poisson distribution: as f increases, the differences in topological information between the two classes become more evident, and the training process draws on more, and broader, structural knowledge, resulting in higher performance.
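The Poisson argument can be checked numerically. The sketch below samples a G(n, p) Erdős–Rényi graph (the size, edge probability and seed are arbitrary choices, not the settings used in this study) and compares the mean degree with the degree variance. For a Poisson-like distribution the two are nearly equal, so degrees stay tightly concentrated around the mean, unlike the heavy-tailed degrees of BA networks. The helper name is hypothetical.

```python
import random
import statistics


def er_degrees(n, p, seed=0):
    """Degrees of a G(n, p) Erdos-Renyi graph: each node pair is linked with probability p."""
    rng = random.Random(seed)
    deg = [0] * n
    for u in range(n):
        for v in range(u + 1, n):
            if rng.random() < p:
                deg[u] += 1
                deg[v] += 1
    return deg


deg = er_degrees(1000, 0.01)
mean, var = statistics.fmean(deg), statistics.pvariance(deg)
# Expected mean is p(n - 1) = 9.99; for a Poisson-like distribution, variance is close to the mean.
print(round(mean, 2), round(var, 2), max(deg))
```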

Figure 7.

Contrasting experiments on ER networks. (left column) and (right column) of classifiers based on different feature subsets obtained by 13 methods on data sets for different combinations of f and : (a,b) ; (c,d) ; (e,f) .

When , we find that although the feature subsets selected by the five feature combination methods are distinct, their performances are remarkably similar, as shown in Figure 7c,d. This indicates that adding more features does not noticeably improve the score and that the feature spaces of the methods are similar. Moreover, the score of is higher than that of most feature combinations across the range of f, so plays a crucial role when .

When , we observe the same pattern as for ; that is, - performs better than -, as shown in Figure 7e,f. Classifiers based on our feature selection method still achieve high and competitive with the full feature set. As in BA networks, Figure 7 shows that across the whole range of , when , the benefit of our method is limited because it is hindered by undersampling.

3.2.2. Ablation Experiment on ER Networks

Table 6 shows the results of the ablation experiment on ER networks. adapts to different combinations of and f and reduces the number of features, and the mechanism of yields a smaller feature space. We also find that selects fewer features for ER networks than for BA networks, especially when , which indicates that some features, such as , are more dominant than others in ER networks.

Table 6.

Ablation experiment. Size of feature subsets in ER networks based on different feature selection methods.

When , as shown in Figure 8a,b, all methods except perform comparably when (). Among these methods, only also introduces in ER networks, which drags down its performance. When , tends to retain the full feature set in most experiments, so the subsequent stage of our method inevitably chooses representative but poorly performing features, for example, , which has a weak linear relationship with the other centralities.

Figure 8.

Ablation experiments on ER networks. (left column) and (right column) of classifiers based on different feature subsets obtained by different model components on data sets for different combinations of f and : (a,b) ; (c,d) ; (e,f) .

When , as shown in Figure 8c,d, the score is lower than when for all the values of f we tested, and all methods perform similarly to the full feature set. The reason is that is included in each of their feature combinations and plays a fundamental role in identifying influencers. As shown in Figure 8e,f, when , the results are very close to those for .

In summary, in ER networks, when and , our feature selection method selects the combination of and - as the key factors for identifying influential spreaders. When , combinations with are also helpful. At , where the influence of spreaders is very difficult to measure, the performance of is, surprisingly, better than that of all the feature combinations we tested.

3.2.3. Results on WS Networks

Figure 9 shows the results of the ablation experiments and Figure 10 the results of the contrasting experiments on WS networks. Both figures show that even with the full feature set, classifier performance is unsatisfactory and much lower than for BA or ER networks. Performance is especially poor for , where noise arises in the disease dynamics. Therefore, feature selection is not applicable to WS networks, where the identification task requires more structural knowledge.

Figure 9.

Ablation experiments on WS networks. (left column) and (right column) of classifiers based on different feature subsets obtained by different model components on data sets for different combinations of f and : (a,b) ; (c,d) ; (e,f) .

Figure 10.

Contrasting experiments on WS networks. (left column) and (right column) of classifiers based on different feature subsets obtained by 13 methods on data sets for different combinations of f and : (a,b) ; (c,d) ; (e,f) .

3.3. Results on Real-World Networks

To explore the similarity between the optimal centralities of real-world and synthetic networks, we apply our feature selection method to four real-world networks: the musical collaboration network Jazz [41]; a university email interchange network, Email [42]; the airline network USairport [43]; and the online network of secure information interchange, Pretty Good Privacy [44]. Table 7 lists the statistical properties of these networks. To simplify the analysis, we use Venn diagrams to find the most frequently selected features for each effective infection rate.

Table 7.

Attributes of the real-world networks. N is the number of nodes and m is the number of edges. For each network, is the average degree, is the maximum degree, c is the average clustering coefficient, and d is the density. All the networks are connected.
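For reference, the statistics listed in Table 7 can be computed from an edge list using the standard definitions: average degree 2m/N, density 2m/(N(N−1)), and c as the mean of the local clustering coefficients. The sketch counts nodes of degree below 2 as contributing 0 to c. This matches the NetworkX `average_clustering` default, but whether the authors used the same convention is an assumption. `network_stats` is a hypothetical helper.

```python
from itertools import combinations


def network_stats(edges):
    """N, m, average degree, max degree, average clustering c and density d
    of a simple undirected graph given as an edge list (self-loops ignored)."""
    adj = {}
    for u, v in edges:
        if u != v:
            adj.setdefault(u, set()).add(v)
            adj.setdefault(v, set()).add(u)
    n = len(adj)
    m = sum(len(nb) for nb in adj.values()) // 2
    local_c = []
    for v, nb in adj.items():
        k = len(nb)
        if k < 2:
            local_c.append(0.0)  # assumed convention: degree < 2 contributes 0
            continue
        links = sum(1 for a, b in combinations(nb, 2) if b in adj[a])
        local_c.append(2 * links / (k * (k - 1)))
    return {
        "N": n,
        "m": m,
        "avg_degree": 2 * m / n,
        "max_degree": max(len(nb) for nb in adj.values()),
        "c": sum(local_c) / n,
        "d": 2 * m / (n * (n - 1)),
    }


# Triangle 0-1-2 with a pendant node 3 attached to node 2.
print(network_stats([(0, 1), (1, 2), (0, 2), (2, 3)]))
```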

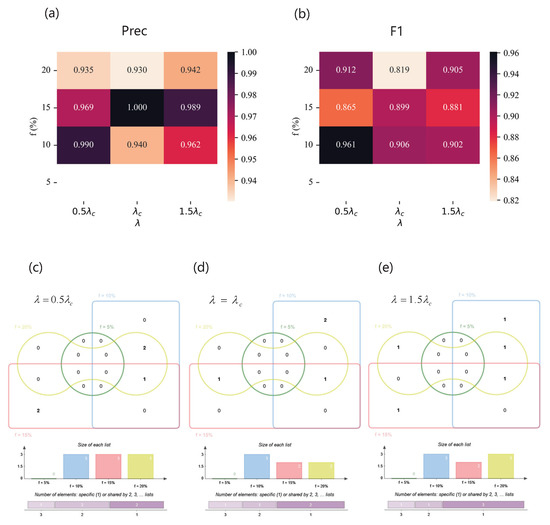

For the Jazz network, Figure 11 shows the performance of the SVM based on the feature subsets selected by our method, -, together with Venn diagrams of the feature subsets for different spreading parameter combinations. Figure 11a,b show the and of the classifiers. When the imbalance ratio is very high, i.e., , our method cannot work because the Jazz data set contains too few samples. Nevertheless, even at the epidemic threshold, the high performance shows that our model is able to select helpful feature subsets. By grouping the feature combinations selected by our method by effective infection rate and intersecting them across f, we obtain the relatively important features for a specific propagation scenario. As shown in Figure 11c, when , is a key element, appearing in the feature subsets of three spreading parameter combinations, while - and appear in two. As shown in Figure 11d,e, for and , also plays an important role in identification, followed by - for and for .
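The grouping-and-intersection step behind the Venn diagrams amounts to counting, for a fixed effective infection rate, how many values of f selected each feature. A minimal sketch follows; the feature names and f values are hypothetical and are not the study's actual selections, and `shared_features` is a hypothetical helper.

```python
from collections import Counter


def shared_features(subsets_by_f, min_count=2):
    """Count how many label proportions f selected each feature and return the
    (feature, count) pairs selected for at least `min_count` values of f,
    most frequent first."""
    counts = Counter(feat for subset in subsets_by_f.values() for feat in set(subset))
    return [(feat, c) for feat, c in counts.most_common() if c >= min_count]


# Hypothetical feature subsets selected at one effective infection rate.
subsets = {
    0.05: {"degree", "two_hop"},
    0.10: {"degree", "k_core"},
    0.20: {"degree", "two_hop", "pagerank"},
}
print(shared_features(subsets))  # [('degree', 3), ('two_hop', 2)]
```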

Figure 11.

The results of the Jazz network. (a,b) (upper left) and (upper right) of classifiers based on different feature subsets under different combinations of and f. (c–e) Venn diagrams of feature subsets versus . The top of each Venn diagram: four feature subsets corresponding to different label proportions f for each , that is, (green), (blue), (red), and (yellow), where the number in the box represents the number of features in each area. The middle of each Venn diagram: the size of each feature subset. The bottom of each Venn diagram: the number of features that are specific to one f or shared by multiple f. (c) When , the feature subsets are (), (), and (); (d) when , the feature subsets are (), (), and (); (e) when , the feature subsets are (), (), and ().

For the Email network, the performance of the classifier is shown in Figure 12. Influenced by the handmade labels, the results fluctuate, but all the scores exceed 0.91 with our method. When and , reaches its maximum, and the best is achieved when and . When , and - span three spreading parameter combinations. When , appears in three spreading parameter combinations. When , extends over three spreading parameter combinations.

For the USairport network, the results are shown in Figure 13. Overall, the performance of the classifier roughly increases with and f; in particular, when and , and both reach their maximum. covers most spreading parameter combinations.

For the Pretty Good Privacy network, the results are shown in Figure 14. The optimal is achieved when and , and the highest is attained when and . - and play crucial roles in the majority of spreading parameter combinations.

Figure 12.

The results of the Email network. (a,b) (upper left) and (upper right) of classifiers based on different feature subsets under different combinations of and f. (c–e) Venn diagrams of feature subsets versus . The top of each Venn diagram: four feature subsets corresponding to different label proportions f for each , that is, (green), (blue), (red), and (yellow), where the number in the box represents the number of features in each area. The middle of each Venn diagram: the size of each feature subset. The bottom of each Venn diagram: the number of features that are specific to one f or shared by multiple f. (c) When , the feature subsets are (), (), (), and (); (d) when , the feature subsets are (), (), (), and (); (e) when , the feature subsets are (), (), (), and ().

Figure 13.

The results of the USairport network. (a,b) (upper left) and (upper right) of classifiers based on different feature subsets under different combinations of and f. (c–e) Venn diagrams of feature subsets versus . The top of each Venn diagram: four feature subsets corresponding to different label proportions f for each , that is, (green), (blue), (red), and (yellow), where the number in the box represents the number of features in each area. The middle of each Venn diagram: the size of each feature subset. The bottom of each Venn diagram: the number of features that are specific to one f or shared by multiple f. (c) When , the feature subsets are (), (), (), and (); (d) when , the feature subsets are (), (), (), and (); (e) when , the feature subsets are (), (), (), and ().

Figure 14.

The results of the Pretty Good Privacy network. (a,b) (upper left) and (upper right) of classifiers based on different feature subsets under different combinations of and f. (c–e) Venn diagrams of feature subsets versus . The top of each Venn diagram: four feature subsets corresponding to different label proportions f for each , that is, (green), (blue), (red), and (yellow), where the number in the box represents the number of features in each area. The middle of each Venn diagram: the size of each feature subset. The bottom of each Venn diagram: the number of features that are specific to one f or shared by multiple f. (c) When , the feature subsets are (), (), (), and (); (d) when , the feature subsets are (), (), (), and (); (e) when , the feature subsets are (), (), (), and ().

The results on real-world networks show that the features selected by our method overlap substantially with those obtained on synthetic networks, indicating that synthetic networks capture fundamental properties of real-world networks. Moreover, neighborhood-based centralities, including , -, -, and , are consistently selected, because the outcome of disease spreading depends on the neighborhood of the initially infected spreader. We also find that the size of the training data set affects the performance of the classifier: the SVM performs better on the Email and USairport networks than on Jazz (a small-scale network) or Pretty Good Privacy (a large-scale network).

4. Conclusions

When machine learning methods are used to identify influential spreaders, how to select the centrality metrics remains an open question. Treating identification as the classification of imbalanced data, we propose a two-phase feature selection method named FFS-SFS to obtain the optimal combination of centralities for this task. Experimental results on three representative synthetic networks and four real-world networks show that our method effectively reduces the feature dimension and that the selected features achieve better classification performance than most baseline methods. For synthetic networks, our supervised method finds that combinations of centralities that include the two-hop neighborhood are optimal for BA networks, while combinations that include degree suit ER networks. For WS networks, however, the higher homogeneity among nodes means that the identification task requires more network topology information. We also apply our feature selection method to various real-world networks and find that the selected features overlap with those obtained on synthetic networks. Our results reveal that neighborhood-based centralities, such as degree and the two-hop neighborhood, play crucial roles in identifying influential spreaders across a wide range of networks.

5. Discussion

There are still some limitations to our work. Our supervised method focuses only on the topological properties of the underlying network in the spreading process. In the real world, however, non-topological attributes such as age also affect the influence of each spreader; for example, elderly people and young children are more susceptible to infection. In future work, we will improve the generalizability of our method by incorporating more non-topological attributes from real-world data [45]. Regarding the underlying network, generating the data set for large real-world networks is computationally expensive, for example when calculating betweenness centrality; we may explore subgraph sampling techniques to address this in the future. Beyond the underlying network, in terms of network dynamics, we only apply the SIR model to simulate the influence of each node on the spread of the epidemic. However, disease propagation in real life is complex. For example, most diseases undergo an incubation period before symptoms become apparent, so SIR-like models such as SEIR [5] will be considered in the future, or we will further explore model-free simulation [46]. Moreover, although the SIR model also applies to rumor spreading and similar processes, it cannot fully capture the properties of some spreading dynamics, such as behavior spreading [47]. In the future, we will explore a more general method to effectively uncover the characteristics of different spreading processes.
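The node influence that the labels rest on comes from SIR simulations. The sketch below shows one common discrete-time formulation: each infected node infects each susceptible neighbour with probability beta per step, then recovers with probability mu (default 1). The exact update rule, number of runs and parameter values used in this study are not restated here, so all of them are assumptions, as are the helper names. Extending it to SEIR would add an exposed state between susceptible and infected.

```python
import random


def sir_outbreak_size(adj, seed_node, beta, mu=1.0, rng=None):
    """Final outbreak size of a discrete-time SIR process started from one node.

    adj: dict node -> iterable of neighbours. Each step, every infected node
    infects each susceptible neighbour with probability beta, then recovers
    with probability mu. Returns the number of nodes ever infected.
    """
    rng = rng or random.Random()
    infected = {seed_node}
    recovered = set()
    while infected:
        newly = set()
        for u in infected:
            for v in adj[u]:
                susceptible = v not in infected and v not in recovered and v not in newly
                if susceptible and rng.random() < beta:
                    newly.add(v)
        recovering = {u for u in infected if rng.random() < mu}
        recovered |= recovering
        infected = (infected - recovering) | newly
    return len(recovered)


def node_influence(adj, node, beta, runs=100, seed=0):
    """Average outbreak size over independent runs: a simulated influence score."""
    rng = random.Random(seed)
    return sum(sir_outbreak_size(adj, node, beta, rng=rng) for _ in range(runs)) / runs


path = {0: [1], 1: [0, 2], 2: [1]}
print(node_influence(path, 1, beta=0.5, runs=1000))
```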

Author Contributions

Conceptualization, B.W. and Y.H.; formal analysis, X.W. and B.W.; writing—original draft preparation, X.W. and B.W.; writing—review and editing, B.W. and Y.H.; funding acquisition, B.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China under Grant No. 52273228, the Natural Science Foundation of Shanghai under Grant No. 20ZR1419000, and the Key Research Project of Zhejiang Laboratory No. 2021PE0AC02.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Soares, F.; Villavicencio, A.; Fogliatto, F.S.; Pitombeira Rigatto, M.H.; José Anzanello, M.; Idiart, M.A.; Stevenson, M. A novel specific artificial intelligence-based method to identify COVID-19 cases using simple blood exams. medRxiv 2020.

- Belkacem, A.N.; Ouhbi, S.; Lakas, A.; Benkhelifa, E.; Chen, C. End-to-end AI-based point-of-care diagnosis system for classifying respiratory illnesses and early detection of COVID-19: A theoretical framework. Front. Med. 2021, 8, 585578.

- Bhosale, Y.H.; Patnaik, K.S. Application of deep learning techniques in diagnosis of COVID-19 (coronavirus): A systematic review. Neural Process. Lett. 2022, 55, 3551–3603.

- Chen, H.J.; Mao, L.; Chen, Y.; Yuan, L.; Wang, F.; Li, X.; Cai, Q.; Qiu, J.; Chen, F. Machine learning-based CT radiomics model distinguishes COVID-19 from non-COVID-19 pneumonia. BMC Infect. Dis. 2021, 21, 931.

- Pastor-Satorras, R.; Castellano, C.; Van Mieghem, P.; Vespignani, A. Epidemic processes in complex networks. Rev. Mod. Phys. 2015, 87, 925.

- Borge-Holthoefer, J.; Moreno, Y. Absence of influential spreaders in rumor dynamics. Phys. Rev. E 2012, 85, 026116.

- De Arruda, G.F.; Barbieri, A.L.; Rodriguez, P.M.; Rodrigues, F.A.; Moreno, Y.; da Fontoura Costa, L. Role of centrality for the identification of influential spreaders in complex networks. Phys. Rev. E 2014, 90, 032812.

- Lü, L.Y.; Chen, D.B.; Ren, X.L.; Zhang, Q.M.; Zhang, Y.C.; Zhou, T. Vital nodes identification in complex networks. Phys. Rep. 2016, 650, 1–63.

- Freeman, L.C. Centrality in social networks conceptual clarification. Soc. Netw. 1978, 1, 215–239.

- Kitsak, M.; Gallos, L.K.; Havlin, S.; Liljeros, F.; Muchnik, L.; Stanley, H.E.; Makse, H.A. Identification of influential spreaders in complex networks. Nat. Phys. 2010, 6, 888–893.

- Bonacich, P. Factoring and weighting approaches to status scores and clique identification. J. Math. Sociol. 1972, 2, 113–120.

- Katz, L.; Moustaki, I. A new status index derived from sociometric analysis. Psychometrika 1953, 18, 39–43.

- Page, L. The pagerank citation ranking: Bringing order to the Web. In Stanford Digital Library Technologies Project; Technical report; Stanford University: Stanford, CA, USA, 1998.

- Mehta, P.; Bukov, M.; Wang, C.H.; Day, A.G.; Richardson, C.; Fisher, C.K.; Schwab, D.J. A high-bias, low-variance introduction to machine learning for physicists. Phys. Rep. 2019, 810, 1–124.

- Ni, Q.; Tang, M.; Liu, Y.; Lai, Y.C. Machine learning dynamical phase transitions in complex networks. Phys. Rev. E 2019, 100, 052312.

- Ni, Q.; Kang, J.; Tang, M.; Liu, Y.; Zou, Y. Learning epidemic threshold in complex networks by Convolutional Neural Network. Chaos 2019, 29, 113106.

- Tripathi, R.; Reza, A.; Garg, D. Prediction of the disease controllability in a complex network using machine learning algorithms. arXiv 2019, arXiv:1902.10224.

- Shah, C.; Dehmamy, N.; Perra, N.; Chinazzi, M.; Barabási, A.L.; Vespignani, A.; Yu, R. Finding patient zero: Learning contagion source with graph neural networks. arXiv 2020, arXiv:2006.11913.

- Murphy, C.; Laurence, E.; Allard, A. Deep learning of contagion dynamics on complex networks. Nat. Commun. 2021, 12, 4720.

- Tomy, A.; Razzanelli, M.; Di Lauro, F.; Rus, D.; Della Santina, C. Estimating the state of epidemics spreading with graph neural networks. Nonlinear Dyn. 2022, 109, 249–263.

- Rodrigues, F.A.; Peron, T.; Connaughton, C.; Kurths, J.; Moreno, Y. A machine learning approach to predicting dynamical observables from network structure. arXiv 2019, arXiv:1910.00544.

- Bucur, D.; Holme, P. Beyond ranking nodes: Predicting epidemic outbreak sizes by network centralities. PLoS Comput. Biol. 2020, 16, e1008052.

- Zhao, G.; Jia, P.; Huang, C.; Zhou, A.; Fang, Y. A machine learning based framework for identifying influential nodes in complex networks. IEEE Access 2020, 8, 65462–65471.

- Bucur, D. Top influencers can be identified universally by combining classical centralities. Sci. Rep. 2020, 10, 20550.

- Yu, E.Y.; Wang, Y.P.; Fu, Y.; Chen, D.B.; Xie, M. Identifying critical nodes in complex networks via graph convolutional networks. Knowl.-Based Syst. 2020, 198, 105893.

- Zhao, G.; Jia, P.; Zhou, A.; Zhang, B. InfGCN: Identifying influential nodes in complex networks with graph convolutional networks. Neurocomputing 2020, 414, 18–26.

- Wang, Q.; Ren, J.; Wang, Y.; Zhang, B.; Cheng, Y.; Zhao, X. CDA: A clustering degree based influential spreader identification algorithm in weighted complex network. IEEE Access 2018, 6, 19550–19559.

- Anukrishna, P.; Paul, V. A review on feature selection for high dimensional data. In Proceedings of the 2017 International Conference on Inventive Systems and Control (ICISC), Coimbatore, India, 19–20 January 2017; pp. 1–4.

- Azadifar, S.; Rostami, M.; Berahmand, K.; Moradi, P.; Oussalah, M. Graph-based relevancy-redundancy gene selection method for cancer diagnosis. Comput. Biol. Med. 2022, 147, 105766.

- Zhou, Y.; Zhang, W.; Kang, J.; Zhang, X.; Wang, X. A problem-specific non-dominated sorting genetic algorithm for supervised feature selection. Inf. Sci. 2021, 547, 841–859.

- Zhou, Y.; Kang, J.; Kwong, S.; Wang, X.; Zhang, Q. An evolutionary multi-objective optimization framework of discretization-based feature selection for classification. Swarm Evol. Comput. 2021, 60, 100770.

- Viegas, F.; Rocha, L.; Gonçalves, M.; Mourão, F.; Sá, G.; Salles, T.; Andrade, G.; Sandin, I. A genetic programming approach for feature selection in highly dimensional skewed data. Neurocomputing 2018, 273, 554–569.

- Cilibrasi, R.L.; Vitányi, P.M. A fast quartet tree heuristic for hierarchical clustering. Pattern Recogn. 2011, 44, 662–677.

- Pei, S.; Muchnik, L.; Andrade, J.S., Jr.; Zheng, Z.; Makse, H.A. Searching for superspreaders of information in real-world social media. Sci. Rep. 2014, 4, 5547.

- Shu, P.; Wang, W.; Tang, M.; Do, Y. Numerical identification of epidemic thresholds for susceptible-infected-recovered model on finite-size networks. Chaos 2015, 25, 063104.

- Zhang, F.; Kaufman, H.L.; Deng, Y.; Drabier, R. Recursive SVM biomarker selection for early detection of breast cancer in peripheral blood. BMC Med. Genom. 2013, 6, S4.

- Zhao, Z.; Wang, L.; Liu, H.; Ye, J. On similarity preserving feature selection. IEEE Trans. Knowl. Data Eng. 2011, 25, 619–632.

- Barabási, A.L.; Albert, R. Emergence of scaling in random networks. Science 1999, 286, 509–512.

- Erdös, P.; Rényi, A. On the evolution of random graphs. Publ. Math. Inst. Hung. Acad. Sci. 1960, 5, 17–60.

- Watts, D.J.; Strogatz, S.H. Collective dynamics of ‘small-world’ networks. Nature 1998, 393, 440–442.

- Gleiser, P.M.; Danon, L. Community Structure in Jazz. Adv. Complex Syst. 2003, 6, 565–574.

- Yin, H.; Benson, A.R.; Leskovec, J.; Gleich, D.F. Local Higher-Order Graph Clustering. In Proceedings of the International Conference on Knowledge Discovery & Data Mining (KDD), Halifax, NS, Canada, 13–17 August 2017; pp. 555–564.

- Colizza, V.; Pastor-Satorras, R.; Vespignani, A. Reaction-diffusion processes and metapopulation models in heterogeneous networks. Nat. Phys. 2007, 3, 276–282.

- Boguñá, M.; Pastor-Satorras, R.; Díaz-Guilera, A.; Arenas, A. Models of social networks based on social distance attachment. Phys. Rev. E 2004, 70, 056122.

- Sa-ngasoongsong, A.; Bukkapatnam, S.T. Variable Selection for Multivariate Cointegrated Time Series Prediction with PROC VARCLUS in SAS® Enterprise MinerTM 7.1. Available online: https://support.sas.com/resources/papers/proceedings12/340-2012.pdf (accessed on 22 April 2012).

- Szalay, K.Z.; Csermely, P. Perturbation Centrality and Turbine: A Novel Centrality Measure Obtained Using a Versatile Network Dynamics Tool. PLoS ONE 2013, 8, e78059.

- Centola, D. The spread of behavior in an online social network experiment. Science 2010, 329, 1194–1197.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).