AM3F-FlowNet: Attention-Based Multi-Scale Multi-Branch Flow Network

Abstract

1. Introduction

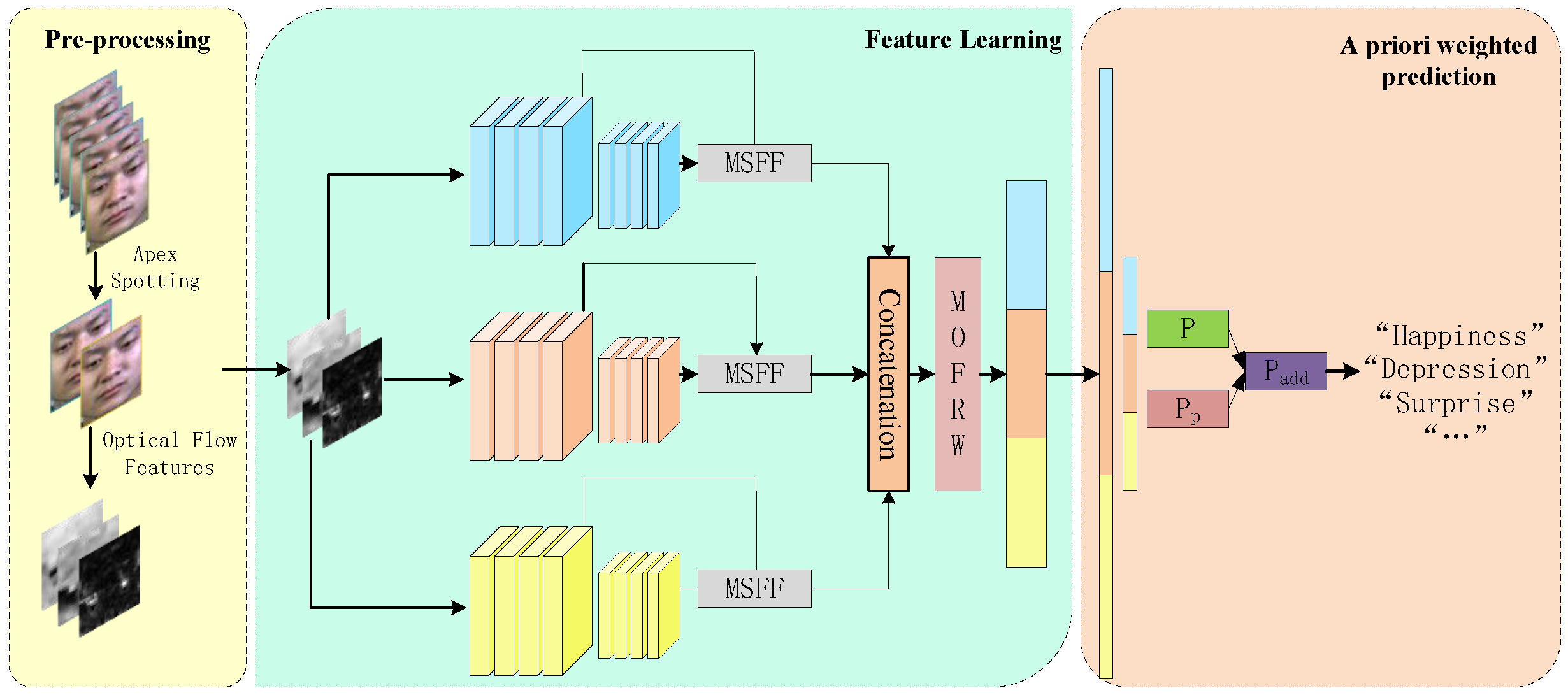

- Within the AM3F-FlowNet framework, we design a module called MOFRW. Using a channel attention mechanism, it first scores and weights the contribution of each optical flow input, then adaptively reweights the channels within each optical flow feature. This double weighting highlights key features and suppresses redundant ones.

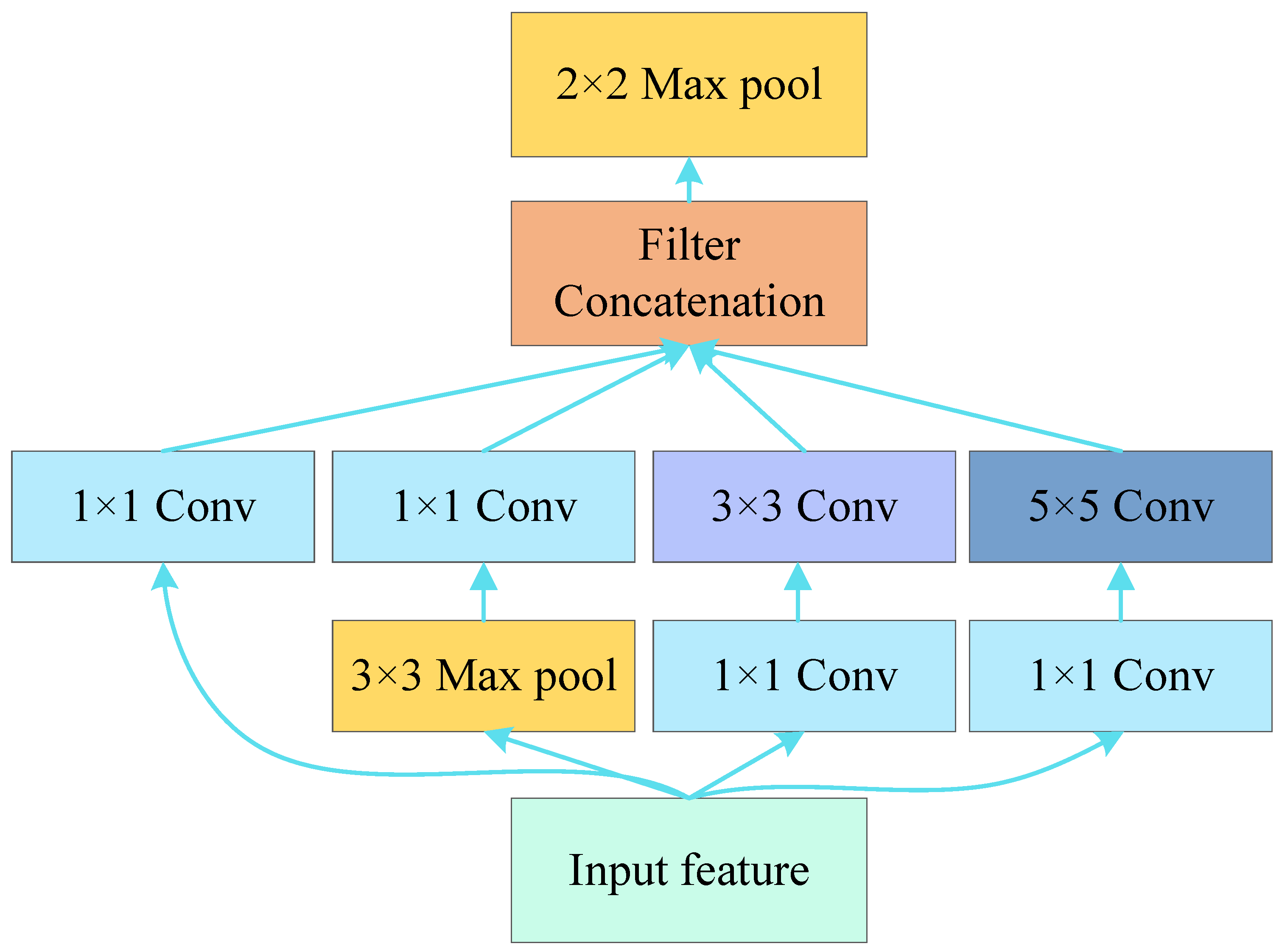

- We propose the MSFF module, which enables AM3F-FlowNet to learn the local details of micro-expression facial movements by fusing information from different scales. The experimental results show that combining scales, so that the information in the lower layers of the stack is fully used, plays a crucial role in recognizing fine local micro-expression movements.

- To address the class imbalance in micro-expression datasets, we introduce a logit adjustment loss function that uses prior knowledge to weight the prediction scores of minority-class and majority-class samples, mitigating the negative impact that class imbalance can have on classification.

- We evaluate the proposed method on multiple micro-expression datasets and on a composite dataset formed by combining them. The experimental results show that our method is highly competitive with state-of-the-art methods.

2. Related Work

2.1. Micro-Expression Recognition

2.1.1. Traditional Machine Learning

2.1.2. Deep Learning

2.2. Attention Mechanism in Micro-Expression Recognition

3. Proposed Method

3.1. Data Preprocessing

3.1.1. Face Cropping and Apex Frame Positioning

3.1.2. Optical Flow Feature Extraction

- u: the horizontal component of the optical flow field;

- v: the vertical component of the optical flow field;

- ε: optical strain.
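
The three inputs listed above can be illustrated with a small numerical sketch. It assumes the common definition of optical strain as the magnitude of the symmetric gradient of the flow field, with finite differences standing in for the derivatives. The function name `optical_strain` and the toy flow field are hypothetical and are not taken from the authors' code.

```python
import numpy as np

def optical_strain(u, v):
    """Strain magnitude from horizontal (u) and vertical (v) flow components.

    Assumed definition: sqrt(e_xx^2 + e_yy^2 + 2 * e_xy^2), where e_xy is the
    symmetric shear term. This is a sketch, not the paper's implementation.
    """
    # np.gradient returns derivatives along axis 0 (rows, y) then axis 1 (columns, x).
    du_dy, du_dx = np.gradient(u)
    dv_dy, dv_dx = np.gradient(v)
    e_xx = du_dx                    # normal strain along x
    e_yy = dv_dy                    # normal strain along y
    e_xy = 0.5 * (du_dy + dv_dx)    # shear strain (e_xy equals e_yx)
    return np.sqrt(e_xx**2 + e_yy**2 + 2.0 * e_xy**2)

# Toy flow field: u grows linearly with x, v is zero everywhere.
ys, xs = np.mgrid[0:4, 0:5].astype(float)
u = 0.5 * xs
v = np.zeros_like(u)
strain = optical_strain(u, v)

# Stack the three maps into one (3, H, W) array.
flow_input = np.stack([u, v, strain], axis=0)
```

For this toy field the only non-zero derivative is ∂u/∂x = 0.5, so the strain map is constant at 0.5.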

3.2. Network Architecture

3.2.1. Backbone Selection

3.2.2. MSFF Module
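
As a rough illustration of fusing information from different scales, the sketch below pools feature maps of different resolutions to a common spatial size and concatenates them along the channel axis. This is only one plausible fusion scheme. The helper names `avg_pool2d` and `fuse_scales`, the feature shapes, and the choice of average pooling are all assumptions made for illustration and may differ from the module used in AM3F-FlowNet.

```python
import numpy as np

def avg_pool2d(x, k):
    """Non-overlapping k x k average pooling on a (C, H, W) array."""
    c, h, w = x.shape
    x = x[:, :h - h % k, :w - w % k]  # drop rows/columns that do not fill a window
    return x.reshape(c, h // k, k, w // k, k).mean(axis=(2, 4))

def fuse_scales(features):
    """Pool every map down to the coarsest spatial size, then concatenate channels.

    Assumes square maps whose sizes are integer multiples of the coarsest one.
    """
    target = min(f.shape[1] for f in features)
    pooled = [avg_pool2d(f, f.shape[1] // target) for f in features]
    return np.concatenate(pooled, axis=0)

# Hypothetical shallow, middle, and deep feature maps.
rng = np.random.default_rng(0)
shallow = rng.standard_normal((8, 28, 28))
middle = rng.standard_normal((16, 14, 14))
deep = rng.standard_normal((32, 7, 7))

fused = fuse_scales([shallow, middle, deep])  # shape (8 + 16 + 32, 7, 7)
```

Concatenation keeps the shallow, high-resolution responses alongside the deep ones, which is the intuition behind using lower-layer information for fine local movements.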

3.2.3. MOFRW Module
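
The double weighting attributed to MOFRW in the contributions (a weight per optical flow input, then a weight per channel inside each input's features) can be sketched with a squeeze-and-excitation style gate. The gate design, the random weights, and the names `channel_gate` and `double_reweight` are assumptions chosen for illustration. This is a minimal sketch, not the authors' implementation.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_gate(x, w1, w2):
    """SE-style gate: global average pool, two-layer MLP, sigmoid weights."""
    squeezed = x.mean(axis=(1, 2))           # (C,)
    hidden = np.maximum(w1 @ squeezed, 0.0)  # ReLU, (C_reduced,)
    return sigmoid(w2 @ hidden)              # (C,) weights in (0, 1)

def double_reweight(branches, branch_mlp, channel_mlps):
    """branches: list of (C, H, W) feature maps, one per optical flow input."""
    # Stage 1: one weight per branch, computed from each branch's mean response.
    summary = np.array([b.mean() for b in branches])[:, None, None]  # (B, 1, 1)
    branch_w = channel_gate(summary, *branch_mlp)                    # (B,)
    out = []
    for b, bw, mlp in zip(branches, branch_w, channel_mlps):
        # Stage 2: per-channel weights inside the branch.
        cw = channel_gate(b, *mlp)
        out.append(bw * cw[:, None, None] * b)
    return out, branch_w

# Hypothetical sizes: 3 branches (u, v, strain), 8 channels, 7 x 7 maps.
rng = np.random.default_rng(0)
B, C, H, W = 3, 8, 7, 7
branches = [rng.standard_normal((C, H, W)) for _ in range(B)]
branch_mlp = (rng.standard_normal((2, B)), rng.standard_normal((B, 2)))
channel_mlps = [(rng.standard_normal((4, C)), rng.standard_normal((C, 4)))
                for _ in range(B)]

weighted, branch_w = double_reweight(branches, branch_mlp, channel_mlps)
```

In a trained network the MLP weights would be learned, so that uninformative branches and channels receive weights near zero.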

3.3. Logit-Adjusted a Priori Weighted Loss
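
A minimal sketch of a logit-adjusted softmax cross-entropy follows, assuming the formulation of Menon et al., in which τ·log(prior) is added to each logit before the softmax. The function name `logit_adjusted_ce` and the parameter `tau` are illustrative. The class counts are the CASMEII positive, negative, and surprise counts from the dataset table and serve only as an example prior. The exact loss used in the paper may differ.

```python
import math

def logit_adjusted_ce(logits, label, priors, tau=1.0):
    """Cross-entropy on logits shifted by tau * log(class prior)."""
    adjusted = [z + tau * math.log(p) for z, p in zip(logits, priors)]
    # Numerically stable log-sum-exp.
    m = max(adjusted)
    log_norm = m + math.log(sum(math.exp(a - m) for a in adjusted))
    return log_norm - adjusted[label]

# Example prior from class frequencies (positive, negative, surprise).
counts = [32, 88, 25]
total = sum(counts)
priors = [c / total for c in counts]

# With uninformative logits, the minority class incurs the larger loss,
# so training must push its logit up further than a majority-class logit.
logits = [0.0, 0.0, 0.0]
loss_minority = logit_adjusted_ce(logits, 2, priors)  # surprise
loss_majority = logit_adjusted_ce(logits, 1, priors)  # negative
```

Setting `tau=0` recovers the ordinary cross-entropy, which makes the effect of the prior easy to ablate.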

4. Experiments

4.1. Datasets

4.2. Evaluation Metrics
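
The composite-dataset results are reported as UF1 and UAR. Assuming the usual definitions (UF1 is the unweighted mean of per-class F1 scores, and UAR is the unweighted mean of per-class recall), both can be computed as in the sketch below. The function name `uf1_uar` and the toy labels are hypothetical.

```python
def uf1_uar(y_true, y_pred, num_classes):
    """Unweighted F1 (macro F1) and unweighted average recall."""
    f1s, recalls = [], []
    for c in range(num_classes):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        f1s.append(2 * tp / denom if denom else 0.0)
        recalls.append(tp / (tp + fn) if tp + fn else 0.0)
    return sum(f1s) / num_classes, sum(recalls) / num_classes

# Toy example with an imbalanced three-class label set.
y_true = [0, 0, 1, 1, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 1, 1, 2, 0]
uf1, uar = uf1_uar(y_true, y_pred, 3)
```

Because every class contributes equally to the average, neither metric is dominated by the majority class, which matters for imbalanced micro-expression data.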

4.3. Experimental Setup

4.4. Results and Analysis

4.5. Ablation Experiment

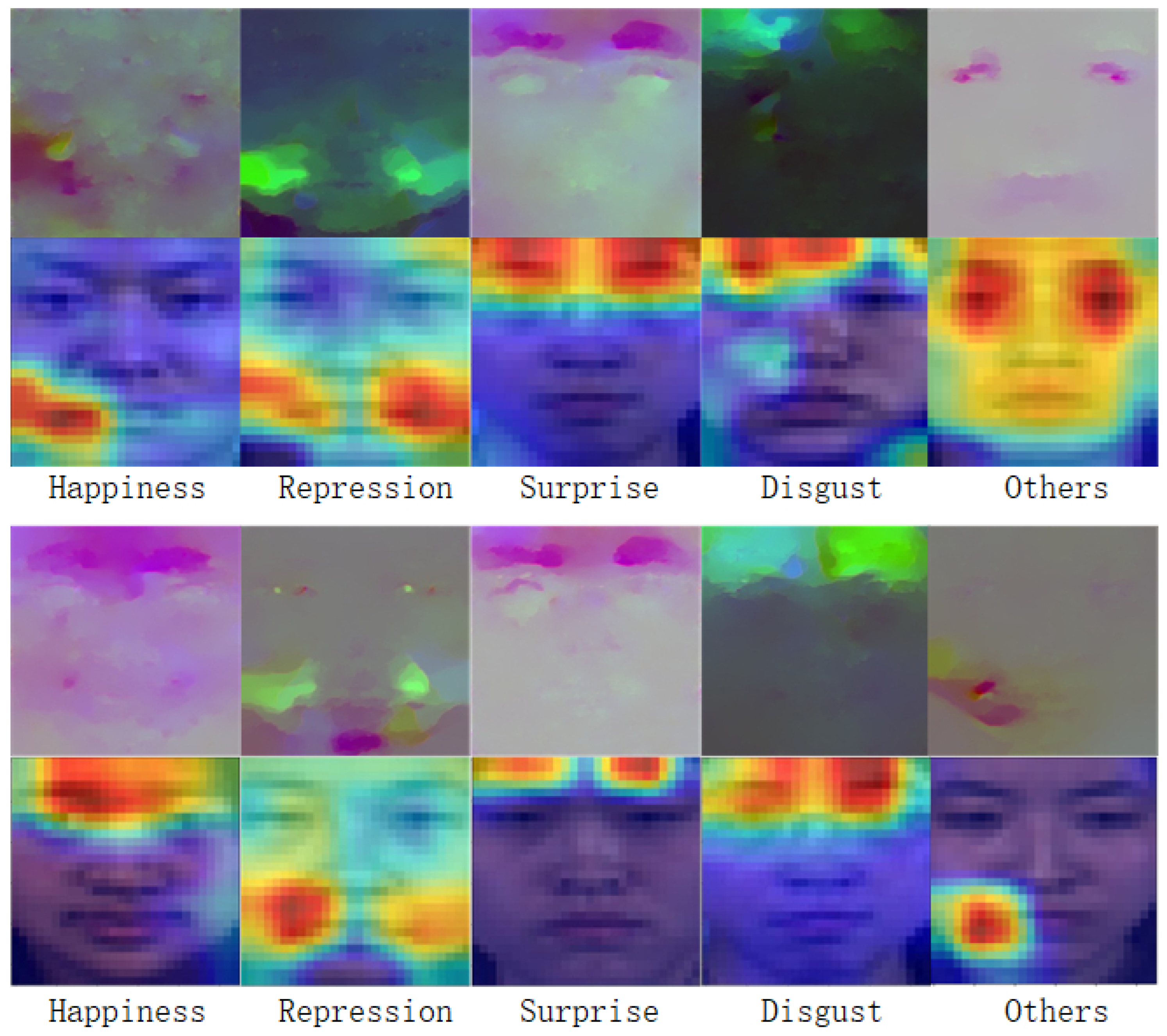

4.6. Feature Visualization

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Shen, X.-B.; Wu, Q.; Fu, X.-L. Effects of the duration of expressions on the recognition of microexpressions. J. Zhejiang Univ. Sci. B 2012, 13, 221–230.

- Li, Y.; Wei, J.; Liu, Y.; Kauttonen, J.; Zhao, G. Deep learning for micro-expression recognition: A survey. IEEE Trans. Affect. Comput. 2022, 13, 2028–2046.

- Thi Thu Nguyen, N.; Thi Thu Nguyen, D.; The Pham, B. Micro-expression recognition based on the fusion between optical flow and dynamic image. In Proceedings of the 2021 5th International Conference on Machine Learning and Soft Computing, Da Nang, Vietnam, 29–31 January 2021; pp. 115–120.

- Liong, S.T.; Phan, R.C.W.; See, J.; Oh, Y.H.; Wong, K. Optical strain based recognition of subtle emotions. In Proceedings of the 2014 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), Kuching, Sarawak, Malaysia, 1–4 December 2014; pp. 180–184.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Menon, A.K.; Jayasumana, S.; Rawat, A.S.; Jain, H.; Veit, A.; Kumar, S. Long-tail learning via logit adjustment. arXiv 2020, arXiv:2007.07314.

- Yan, W.J.; Li, X.; Wang, S.J.; Zhao, G.; Liu, Y.J.; Chen, Y.H.; Fu, X. CASME II: An improved spontaneous micro-expression database and the baseline evaluation. PLoS ONE 2014, 9, e86041.

- Wang, Y.; See, J.; Phan, R.C.W.; Oh, Y.H. LBP with six intersection points: Reducing redundant information in LBP-TOP for micro-expression recognition. In Proceedings of the Computer Vision–ACCV 2014: 12th Asian Conference on Computer Vision, Singapore, Singapore, 1–5 November 2014; Revised Selected Papers, Part I 12. Springer: Berlin/Heidelberg, Germany, 2015; pp. 525–537.

- Li, X.; Hong, X.; Moilanen, A.; Huang, X.; Pfister, T.; Zhao, G.; Pietikäinen, M. Towards reading hidden emotions: A comparative study of spontaneous micro-expression spotting and recognition methods. IEEE Trans. Affect. Comput. 2017, 9, 563–577.

- Shreve, M.; Godavarthy, S.; Goldgof, D.; Sarkar, S. Macro- and micro-expression spotting in long videos using spatio-temporal strain. In Proceedings of the 2011 IEEE International Conference on Automatic Face & Gesture Recognition (FG), Santa Barbara, CA, USA, 21–25 March 2011; pp. 51–56.

- Liu, Y.J.; Zhang, J.K.; Yan, W.J.; Wang, S.J.; Zhao, G.; Fu, X. A main directional mean optical flow feature for spontaneous micro-expression recognition. IEEE Trans. Affect. Comput. 2015, 7, 299–310.

- Liong, S.T.; See, J.; Wong, K.; Phan, R.C.W. Less is more: Micro-expression recognition from video using apex frame. Signal Process. Image Commun. 2018, 62, 82–92.

- Patel, D.; Hong, X.; Zhao, G. Selective deep features for micro-expression recognition. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 2258–2263.

- Van Quang, N.; Chun, J.; Tokuyama, T. CapsuleNet for micro-expression recognition. In Proceedings of the 2019 14th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2019), Lille, France, 14–18 May 2019; pp. 1–7.

- Gan, Y.S.; Liong, S.T.; Yau, W.C.; Huang, Y.C.; Tan, L.K. OFF-ApexNet on micro-expression recognition system. Signal Process. Image Commun. 2019, 74, 129–139.

- Liong, S.T.; Gan, Y.S.; See, J.; Khor, H.Q.; Huang, Y.C. Shallow triple stream three-dimensional CNN (STSTNet) for micro-expression recognition. In Proceedings of the 2019 14th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2019), Lille, France, 14–18 May 2019; pp. 1–5.

- Zhou, L.; Mao, Q.; Xue, L. Dual-inception network for cross-database micro-expression recognition. In Proceedings of the 2019 14th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2019), Lille, France, 14–18 May 2019; pp. 1–5.

- Itti, L.; Koch, C.; Niebur, E. A model of saliency-based visual attention for rapid scene analysis. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 1254–1259.

- Zhao, S.; Tang, H.; Liu, S.; Zhang, Y.; Wang, H.; Xu, T.; Chen, E.; Guan, C. ME-PLAN: A deep prototypical learning with local attention network for dynamic micro-expression recognition. Neural Netw. 2022, 153, 427–443.

- Wang, C.; Peng, M.; Bi, T.; Chen, T. Micro-attention for micro-expression recognition. Neurocomputing 2020, 410, 354–362.

- Yang, B.; Cheng, J.; Yang, Y.; Zhang, B.; Li, J. MERTA: Micro-expression recognition with ternary attentions. Multimed. Tools Appl. 2021, 80, 1–16.

- Li, H.; Sui, M.; Zhu, Z.; Zhao, F. MMNet: Muscle motion-guided network for micro-expression recognition. arXiv 2022, arXiv:2201.05297.

- Su, Y.; Zhang, J.; Liu, J.; Zhai, G. Key facial components guided micro-expression recognition based on first & second-order motion. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo (ICME), Shenzhen, China, 5–9 July 2021; pp. 1–6.

- Zhang, J.; Liu, F.; Zhou, A. Off-TANet: A Lightweight Neural Micro-expression Recognizer with Optical Flow Features and Integrated Attention Mechanism. In Proceedings of the PRICAI 2021: Trends in Artificial Intelligence: 18th Pacific Rim International Conference on Artificial Intelligence, PRICAI 2021, Hanoi, Vietnam, 8–12 November 2021; Proceedings, Part I. Springer: Berlin/Heidelberg, Germany, 2021; pp. 266–279.

- Davison, A.K.; Lansley, C.; Costen, N.; Tan, K.; Yap, M.H. SAMM: A spontaneous micro-facial movement dataset. IEEE Trans. Affect. Comput. 2016, 9, 116–129.

- Li, X.; Pfister, T.; Huang, X.; Zhao, G.; Pietikäinen, M. A spontaneous micro-expression database: Inducement, collection and baseline. In Proceedings of the 2013 10th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Shanghai, China, 22–26 April 2013; pp. 1–6.

- Li, Q.; Yu, J.; Kurihara, T.; Zhang, H.; Zhan, S. Deep convolutional neural network with optical flow for facial micro-expression recognition. J. Circ. Syst. Comput. 2020, 29, 2050006.

- Ben, X.; Ren, Y.; Zhang, J.; Wang, S.J.; Kpalma, K.; Meng, W.; Liu, Y.J. Video-based facial micro-expression analysis: A survey of datasets, features and algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 5826–5846.

- Khor, H.Q.; See, J.; Phan, R.C.W.; Lin, W. Enriched long-term recurrent convolutional network for facial micro-expression recognition. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 667–674.

- Khor, H.Q.; See, J.; Liong, S.T.; Phan, R.C.; Lin, W. Dual-stream shallow networks for facial micro-expression recognition. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 36–40.

- Xia, Z.; Hong, X.; Gao, X.; Feng, X.; Zhao, G. Spatiotemporal recurrent convolutional networks for recognizing spontaneous micro-expressions. IEEE Trans. Multimed. 2019, 22, 626–640.

- Zhang, L.; Hong, X.; Arandjelović, O.; Zhao, G. Short and long range relation based spatio-temporal transformer for micro-expression recognition. IEEE Trans. Affect. Comput. 2022, 13, 1973–1985.

- Nie, X.; Takalkar, M.A.; Duan, M.; Zhang, H.; Xu, M. GEME: Dual-stream multi-task GEnder-based micro-expression recognition. Neurocomputing 2021, 427, 13–28.

- Hong, J.; Lee, C.; Jung, H. Late fusion-based video transformer for facial micro-expression recognition. Appl. Sci. 2022, 12, 1169.

- Tang, J.; Li, L.; Tang, M.; Xie, J. A novel micro-expression recognition algorithm using dual-stream combining optical flow and dynamic image convolutional neural networks. Signal Image Video Process. 2022, 17, 769–776.

- Wei, J.; Lu, G.; Yan, J.; Zong, Y. Learning two groups of discriminative features for micro-expression recognition. Neurocomputing 2022, 479, 22–36.

- Zhao, X.; Ma, H.; Wang, R. STA-GCN: Spatio-Temporal AU Graph Convolution Network for Facial Micro-expression Recognition. In Proceedings of the Pattern Recognition and Computer Vision: 4th Chinese Conference, PRCV 2021, Beijing, China, 29 October–1 November 2021; Proceedings, Part I 4. Springer: Berlin/Heidelberg, Germany, 2021; pp. 80–91.

- Lei, L.; Chen, T.; Li, S.; Li, J. Micro-expression recognition based on facial graph representation learning and facial action unit fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 1571–1580.

- Chen, B.; Liu, K.H.; Xu, Y.; Wu, Q.Q.; Yao, J.F. Block division convolutional network with implicit deep features augmentation for micro-expression recognition. IEEE Trans. Multimed. 2022, 25, 1345–1358.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

| Dataset | SAMM | SMIC-HS | CASMEII | 3DB-Combined |
|---|---|---|---|---|
| Number of subjects | 28 | 16 | 24 | 68 |
| Number of samples | 133 | 164 | 145 | 442 |
| Expression: Positive | 26 | 51 | 32 | 109 |
| Expression: Negative | 92 | 70 | 88 | 250 |
| Expression: Surprise | 15 | 43 | 25 | 83 |

| Method | SAMM (5 Classes) Acc (%) | SAMM (5 Classes) F1 | SMIC-HS (3 Classes) Acc (%) | SMIC-HS (3 Classes) F1 | CASMEII (5 Classes) Acc (%) | CASMEII (5 Classes) F1 |
|---|---|---|---|---|---|---|
| LBP-TOP [8] | - | - | 53.66 | 0.5384 | 46.46 | 0.4241 |
| MDMO [12] (2016) | - | - | 61.5 | 0.406 | 51.0 | 0.418 |
| Bi-WOOF [13] (2018) | 59.8 | 0.591 | 59.3 | 0.620 | 58.9 | 0.610 |
| DSSN [31] (2019) | 57.35 | 0.4644 | 63.41 | 0.6462 | 70.78 | 0.7297 |
| STRCN [32] (2019) | 54.5 | 0.492 | 53.1 | 0.514 | 56.0 | 0.542 |
| SLSTT [33] (2021) | 72.388 | 0.640 | 73.17 | 0.724 | 75.806 | 0.753 |
| GEME [34] (2021) | 55.88 | 0.4538 | 64.63 | 0.6158 | 75.2 | 0.7354 |
| Later [35] (2022) | - | - | 73.17 | 0.7447 | 70.68 | 0.7106 |
| FDCN [36] (2022) | 58.07 | 0.57 | - | - | 73.09 | 0.72 |
| KTGSL [37] (2022) | - | - | 72.58 | 0.6820 | 75.64 | 0.6917 |
| AM3F-FlowNet (Ours) | 66.18 | 0.5410 | 74.25 | 0.7254 | 84.52 | 0.8288 |

| Method | Full UF1 | Full UAR | SAMM UF1 | SAMM UAR | SMIC UF1 | SMIC UAR | CASMEII UF1 | CASMEII UAR |
|---|---|---|---|---|---|---|---|---|
| LBP-TOP [8] | 0.5882 | 0.5280 | 0.3954 | 0.4102 | 0.2000 | 0.5280 | 0.7026 | 0.7429 |
| Bi-WOOF [13] (2018) | 0.6296 | 0.6227 | 0.5211 | 0.5139 | 0.5727 | 0.5829 | 0.7805 | 0.8026 |
| OFF-ApexNet [16] (2019) | 0.7196 | 0.7096 | 0.5409 | 0.5392 | 0.6817 | 0.6695 | 0.8764 | 0.8681 |
| Dual-Inception [18] (2019) | 0.7322 | 0.7278 | 0.5868 | 0.5663 | 0.6645 | 0.6726 | 0.8621 | 0.8560 |
| CapsuleNet [15] (2019) | 0.6520 | 0.6506 | 0.6209 | 0.5989 | 0.5820 | 0.5877 | 0.7068 | 0.7018 |
| STSTNet [17] (2019) | 0.7353 | 0.7605 | 0.6588 | 0.6810 | 0.6801 | 0.7013 | 0.8382 | 0.8686 |
| EMR [30] (2019) | 0.7885 | 0.7824 | 0.7754 | 0.7152 | 0.7461 | 0.7530 | 0.8293 | 0.8209 |
| STA-GCN [38] (2021) | - | - | - | - | - | - | 0.7608 | 0.7096 |
| SLSTT [33] (2021) | 0.8160 | 0.7900 | 0.7150 | 0.6420 | 0.7240 | 0.7070 | 0.9010 | 0.8850 |
| AUGCN [39] (2021) | 0.7914 | 0.7933 | 0.7392 | 0.7163 | 0.7651 | 0.7780 | 0.9071 | 0.8878 |
| BDCNN [40] (2022) | 0.8509 | 0.8500 | 0.8538 | 0.8507 | 0.7859 | 0.7869 | 0.9501 | 0.9516 |
| AM3F-FlowNet (Ours) | 0.8536 | 0.8594 | 0.7643 | 0.7452 | 0.7946 | 0.7941 | 0.9591 | 0.9567 |

| Method | CASMEII (5 Classes) Acc (%) | CASMEII (5 Classes) F1 |
|---|---|---|
| AM3F-FlowNet without multi-scale | 81.17 | 0.7905 |
| AM3F-FlowNet without MSFF | 83.26 | 0.8231 |
| AM3F-FlowNet without MOFRW | 81.59 | 0.8092 |
| AM3F-FlowNet | 84.52 | 0.8288 |

| Loss | CASMEII (5 Classes) Acc (%) | CASMEII (5 Classes) F1 |
|---|---|---|
| CE | 82.38 | 0.8050 |
| Focal | 79.92 | 0.7799 |
| LA-Focal | 82.43 | 0.8048 |
| LASCE | 84.52 | 0.8288 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fu, C.; Yang, W.; Chen, D.; Wei, F. AM3F-FlowNet: Attention-Based Multi-Scale Multi-Branch Flow Network. Entropy 2023, 25, 1064. https://doi.org/10.3390/e25071064
