Kernel-Free Quadratic Surface Support Vector Regression with Non-Negative Constraints

Abstract

1. Introduction

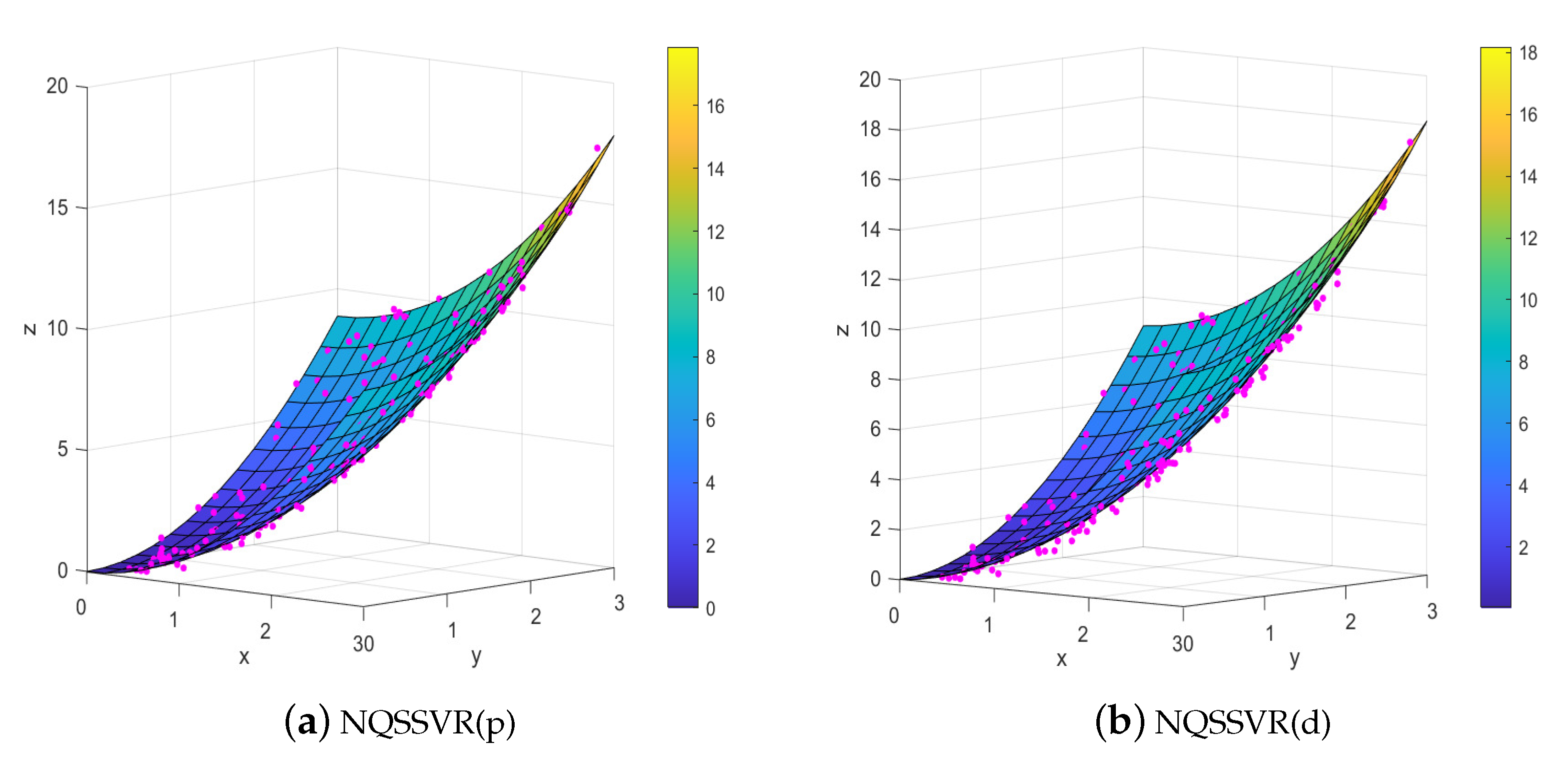

- NQSSVR uses the kernel-free technique, which avoids the difficulty of choosing a kernel function and tuning its parameters, and keeps the model partly interpretable. Because NQSSVR fits the data with a quadratic regression function, it can achieve better generalization ability than linear regression methods.

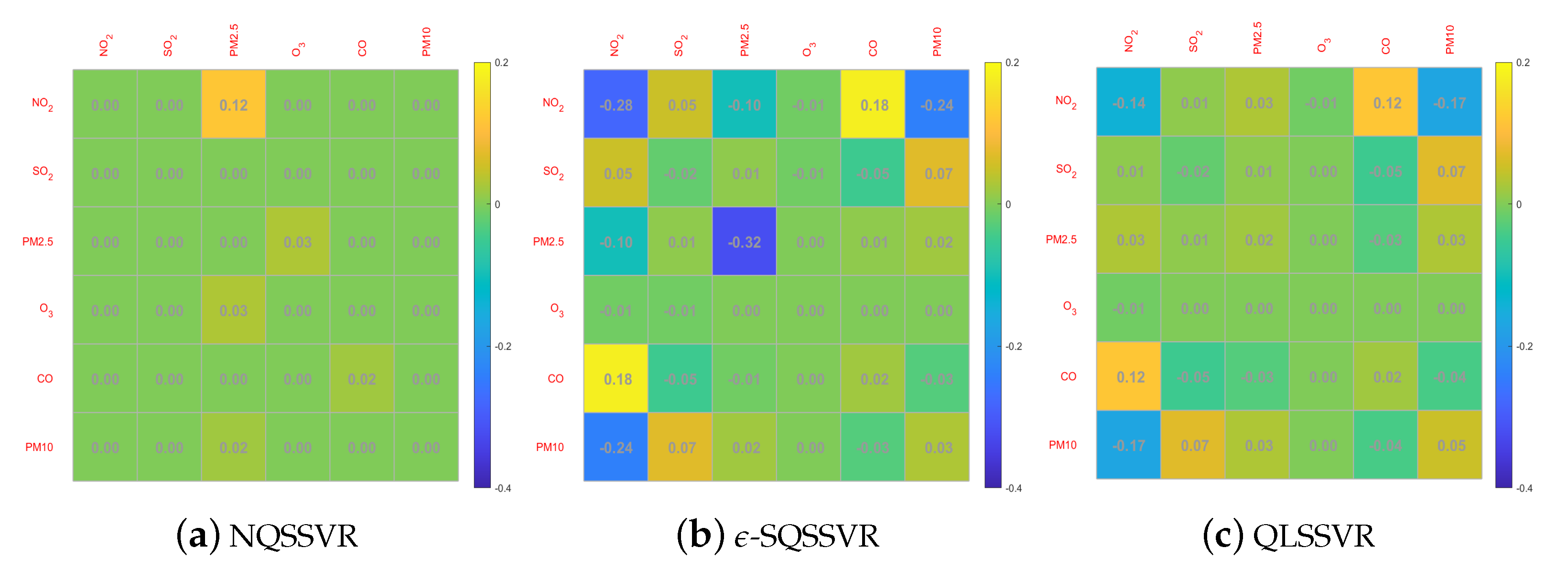

- Non-negative constraints on the regression coefficients are added to the optimization problem of NQSSVR, so that the resulting regression function is monotonically increasing in the explanatory variables on a non-negative interval. This suits cases where the response variable grows as the explanatory variables grow. For example, in the air quality application, the air quality index increases as the concentration of gases in the air increases.

- Because our method does not involve kernel functions, both the primal and dual problems can be solved directly. The theoretical analysis covers the existence and uniqueness of solutions to the primal and dual problems and the relationship between them. In addition, properties of the regression function on its domain of definition are given.

- Numerical experiments on artificial datasets visualize the regression function obtained by NQSSVR. Results on benchmark datasets show that the overall performance of the method is better than that of linear-SVR and NNSVR. More importantly, the practical air quality application shows that our method is more applicable than QLSSVR and ϵ-SQSSVR.
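
The monotonicity claim in the second contribution can be illustrated with a minimal sketch. It assumes the quadratic surface takes the common form f(x) = ½ xᵀWx + bᵀx + c with a symmetric matrix W; this parameterization, the helper names, and the coefficient values below are illustrative assumptions, not the paper's fitted model. When every entry of W and b is non-negative, the gradient Wx + b is non-negative for all x ≥ 0, so f cannot decrease as any explanatory variable grows.

```python
# Illustrative sketch only: the form f(x) = 0.5 * x^T W x + b^T x + c and all
# names and numbers here are assumptions, not taken from the paper.

def quadratic_surface(W, b, c, x):
    """Evaluate f(x) = 0.5 * x^T W x + b^T x + c for a symmetric matrix W."""
    n = len(x)
    quad = sum(W[i][j] * x[i] * x[j] for i in range(n) for j in range(n))
    lin = sum(b[i] * x[i] for i in range(n))
    return 0.5 * quad + lin + c


def gradient(W, b, x):
    """Gradient of f at x, which equals W x + b because W is symmetric."""
    n = len(x)
    return [sum(W[i][j] * x[j] for j in range(n)) + b[i] for i in range(n)]


# Hypothetical non-negative coefficients (W symmetric); c is unconstrained.
W = [[0.4, 0.1],
     [0.1, 0.0]]
b = [0.5, 0.2]
c = -1.0

# Check the gradient sign on a grid of non-negative inputs.
grid = [0.0, 0.5, 1.0, 1.5, 2.0]
grads_nonneg = all(
    g >= 0.0
    for x1 in grid
    for x2 in grid
    for g in gradient(W, b, [x1, x2])
)

# Function values along the first variable, with the second held at 1.0.
values_along_x1 = [quadratic_surface(W, b, c, [x1, 1.0]) for x1 in grid]

print(grads_nonneg)
print(values_along_x1)
```

The sign check only holds on the non-negative orthant: for negative inputs the term Wx can outweigh b, which is why the contribution restricts the statement to a non-negative interval.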

2. Background

2.1. Definition and Notations

2.2. ϵ-SQSSVR

3. Kernel-Free QSSVR with Non-Negative Constraints (NQSSVR)

3.1. Primal Problem

3.2. Dual Model

3.3. Some Theoretical Analysis

4. Numerical Experiments

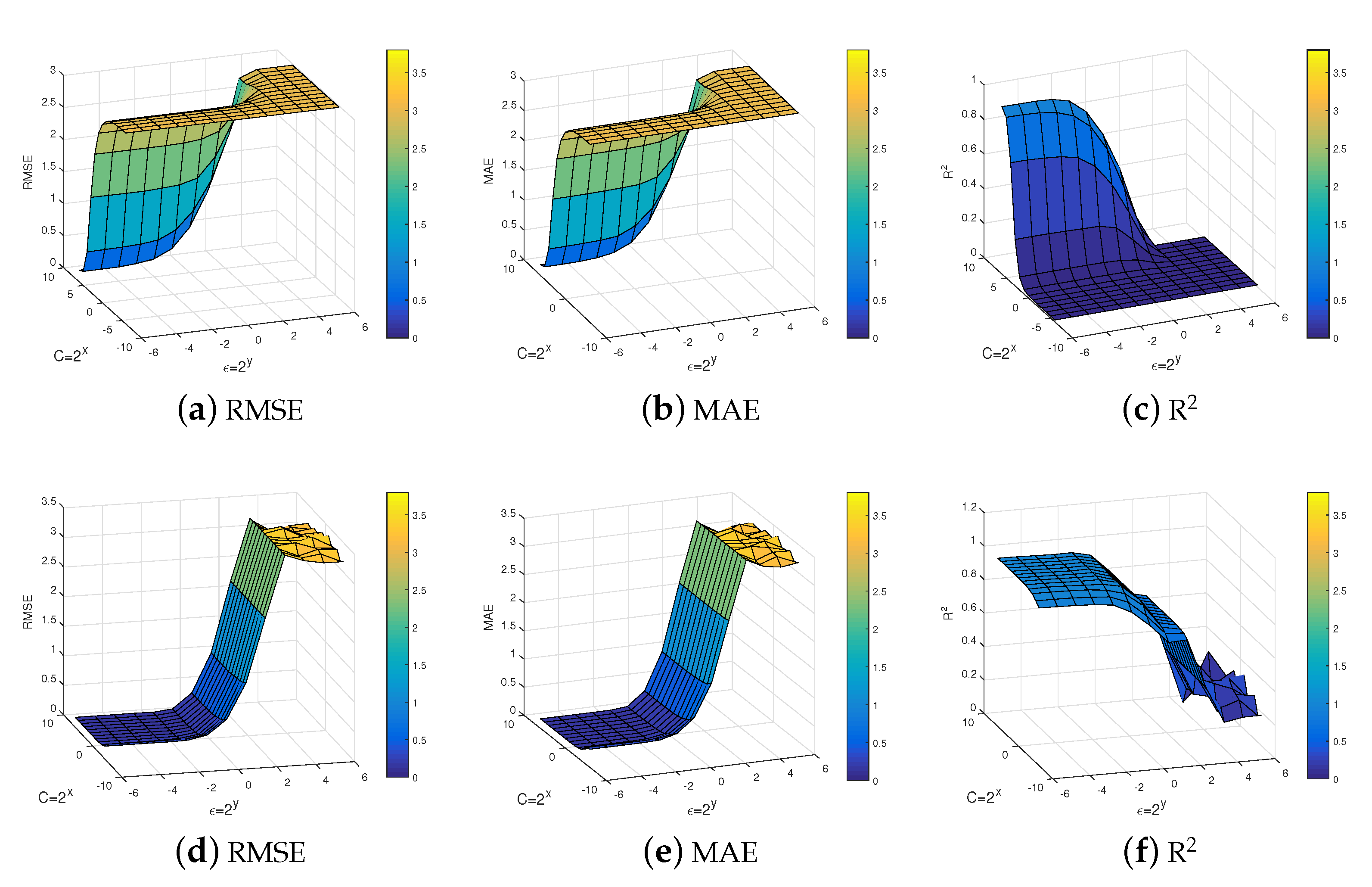

4.1. Artificial Datasets

4.2. Benchmark Datasets

4.3. Air Quality Composite Index Dataset (AQCI)

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


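| Evaluation Criteria | Formulas |
|---|---|
| Mean Absolute Error (MAE) | $\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n} \lvert y_i - \hat{y}_i \rvert$ |
| Root Mean Squared Error (RMSE) | $\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}$ |
| T1 | Average test time |
| T2 | Time to select parameters |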
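
As a small worked example, the two error criteria can be computed with their standard definitions, as sketched below. The function names and the sample values `y_true` and `y_pred` are hypothetical and only serve to show the arithmetic; they are not data from the experiments.

```python
import math


def mean_absolute_error(y_true, y_pred):
    """Average of the absolute differences between targets and predictions."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def root_mean_squared_error(y_true, y_pred):
    """Square root of the average squared difference."""
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mse)


# Hypothetical targets and predictions, for illustration only.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.5, 2.0, 2.0, 4.5]

mae = mean_absolute_error(y_true, y_pred)        # (0.5 + 0 + 1 + 0.5) / 4
rmse = root_mean_squared_error(y_true, y_pred)   # sqrt((0.25 + 0 + 1 + 0.25) / 4)

print(mae, rmse)
```

RMSE is never smaller than MAE on the same residuals, because squaring weights the larger errors more heavily.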

| Datasets | Algorithms | RMSE | MAE | | T1 | T2 |
|---|---|---|---|---|---|---|
| Example 1 | lin-SVR | 0.0901 ± 0.0010 | 0.0716 ± 0.0011 | 0.9159 ± 0.0118 | 0.2085 ± 0.0188 | 31.2781 |
| | poly-SVR | 0.0894 ± 0.0015 | 0.0716 ± 0.0012 | 0.9502 ± 0.0134 | 0.1412 ± 0.0096 | 36.9959 |
| | rbf-SVR | 0.0865 ± 0.0010 | 0.0658 ± 0.0014 | 0.9680 ± 0.0153 | 0.1554 ± 0.0055 | 62.9346 |
| | NQSSVR(p) | 0.0885 ± 0.0012 | 0.0714 ± 0.0018 | 0.9066 ± 0.0260 | 0.0612 ± 0.0094 | 16.2336 |
| | NQSSVR(d) | 0.0889 ± 0.0007 | 0.0716 ± 0.0004 | 0.8829 ± 0.0022 | 0.1278 ± 0.0019 | 24.2926 |
| Example 2 | lin-SVR | 1.4762 ± 0.0085 | 1.2420 ± 0.0074 | 0.5141 ± 0.0021 | 0.4143 ± 0.0204 | 133.6888 |
| | poly-SVR | 0.1983 ± 0.0015 | 0.1567 ± 0.0018 | 0.9941 ± 0.0017 | 0.3464 ± 0.0413 | 130.0461 |
| | rbf-SVR | 0.2057 ± 0.0066 | 0.1615 ± 0.0047 | 0.9899 ± 0.0014 | 0.4600 ± 0.0282 | 573.8611 |
| | NQSSVR(p) | 0.1969 ± 0.0016 | 0.1544 ± 0.0014 | 0.9930 ± 0.0014 | 0.1850 ± 0.0139 | 28.3872 |
| | NQSSVR(d) | 0.1982 ± 0.0017 | 0.1552 ± 0.0017 | 0.9896 ± 0.0012 | 0.2056 ± 0.0234 | 32.7823 |

| Data Points / Dimensions | Methods | m = 2 | m = 4 | m = 8 | m = 16 |
|---|---|---|---|---|---|
| n = 200 | poly-SVR | 3.9670 ± 0.1183 | 3.3426 ± 0.0813 | 3.6770 ± 0.0596 | 3.8512 ± 0.0430 |
| | rbf-SVR | 1.1682 ± 0.0318 | 1.0756 ± 0.0575 | 1.0694 ± 0.0437 | 1.1634 ± 0.0173 |
| | NQSSVR(p) | 0.1592 ± 0.0146 | 0.2542 ± 0.0066 | 0.4346 ± 0.0193 | 1.0058 ± 0.0608 |
| | NQSSVR(d) | 0.7322 ± 0.0557 | 0.8390 ± 0.0219 | 0.9502 ± 0.0449 | 1.1458 ± 0.0404 |
| n = 400 | poly-SVR | 22.1766 ± 0.6565 | 21.0828 ± 0.6835 | 20.7110 ± 0.4407 | 23.2322 ± 0.7712 |
| | rbf-SVR | 4.1252 ± 0.1725 | 4.2152 ± 0.1546 | 4.2804 ± 0.1735 | 4.4358 ± 0.1441 |
| | NQSSVR(p) | 0.3942 ± 0.0199 | 0.5334 ± 0.0219 | 0.9442 ± 0.0281 | 3.2328 ± 0.1464 |
| | NQSSVR(d) | 3.2784 ± 0.1339 | 3.8628 ± 0.0954 | 4.2040 ± 0.3310 | 4.1320 ± 0.1463 |
| n = 600 | poly-SVR | 64.8220 ± 1.0185 | 64.9902 ± 1.6431 | 63.5246 ± 1.3031 | 69.9104 ± 2.0957 |
| | rbf-SVR | 9.6822 ± 0.4247 | 9.5922 ± 0.4015 | 10.0280 ± 0.6436 | 10.7400 ± 0.5887 |
| | NQSSVR(p) | 0.6522 ± 0.0114 | 0.8818 ± 0.0064 | 1.4464 ± 0.0016 | 4.4658 ± 0.0995 |
| | NQSSVR(d) | 8.8504 ± 0.4567 | 9.4208 ± 0.3310 | 10.9936 ± 0.4588 | 12.3712 ± 1.3552 |
| n = 800 | poly-SVR | 157.6060 ± 5.8607 | 139.2794 ± 7.3092 | 161.7490 ± 2.2436 | 163.0944 ± 4.0222 |
| | rbf-SVR | 19.5872 ± 1.2377 | 17.9632 ± 1.2597 | 18.5810 ± 0.3514 | 19.8404 ± 1.4977 |
| | NQSSVR(p) | 0.9408 ± 0.0407 | 1.2254 ± 0.0537 | 1.9072 ± 0.0832 | 6.2810 ± 0.2554 |
| | NQSSVR(d) | 16.5370 ± 0.5350 | 19.1804 ± 0.8508 | 23.1990 ± 1.2128 | 26.5350 ± 0.9734 |
| n = 1000 | poly-SVR | 284.1451 ± 14.6313 | 288.7770 ± 11.1508 | 272.6956 ± 10.7412 | 141.2644 ± 8.5463 |
| | rbf-SVR | 30.3622 ± 2.0704 | 32.5590 ± 2.4960 | 29.5694 ± 2.4395 | 30.9340 ± 9.9135 |
| | NQSSVR(p) | 1.4494 ± 0.1954 | 1.7290 ± 0.0310 | 2.2676 ± 0.0452 | 5.3260 ± 0.01937 |
| | NQSSVR(d) | 24.7908 ± 1.2614 | 28.3166 ± 1.4892 | 33.8146 ± 2.2722 | 44.1248 ± 1.5144 |

| Datasets | Abbreviations | Sample Points | Attributes |
|---|---|---|---|
| Concrete Slump Test | Concrete | 103 | 7 |
| Computer Hardware | Computer | 209 | 9 |
| Yacht Hydrodynamics | Yacht | 308 | 7 |
| Forest Fires | Forest | 517 | 13 |
| Energy efficiency (Heating) | Energy(H) | 768 | 8 |
| Energy efficiency (Cooling) | Energy(C) | 768 | 8 |
| Air quality | Air | 1067 | 6 |

| Datasets | Algorithms | RMSE | MAE | T1 | T2 |
|---|---|---|---|---|---|
| Concrete | lin-SVR | 0.0703 ± 0.0032 | 0.0499 ± 0.0027 | 0.1618 ± 0.0122 | 24.2926 |
| | poly-SVR | 0.0476 ± 0.0016 | 0.0301 ± 0.0011 | 0.1708 ± 0.0130 | 49.2486 |
| | rbf-SVR | 0.0406 ± 0.0023 | 0.0275 ± 0.0015 | 0.1544 ± 0.0116 | 170.5862 |
| | NNSVR | 0.0752 ± 0.0021 | 0.0597 ± 0.0020 | 0.0572 ± 0.0057 | 7.6194 |
| | QLSSVR | 0.0571 ± 0.0022 | 0.0453 ± 0.0017 | 0.0300 ± 0.0020 | 5.0718 |
| | ϵ-SQSSVR | 0.0378 ± 0.0011 | 0.0242 ± 0.0014 | 0.3366 ± 0.0184 | 44.0096 |
| | NQSSVR(p) | 0.0381 ± 0.0016 | 0.0296 ± 0.0011 | 0.1608 ± 0.0051 | 24.0741 |
| | NQSSVR(d) | 0.0317 ± 0.0300 | 0.0267 ± 0.0016 | 0.1432 ± 0.0020 | 18.5869 |
| Computer | lin-SVR | 0.0393 ± 0.0014 | 0.0199 ± 0.0003 | 0.5438 ± 0.0303 | 64.1097 |
| | poly-SVR | 0.0216 ± 0.0006 | 0.0084 ± 0.0002 | 0.5014 ± 0.0272 | 161.7245 |
| | rbf-SVR | 0.0179 ± 0.0011 | 0.0093 ± 0.0005 | 0.4554 ± 0.0193 | 507.4994 |
| | NNSVR | 0.0376 ± 0.0026 | 0.0217 ± 0.0009 | 0.0874 ± 0.0063 | 11.1911 |
| | QLSSVR | 0.0194 ± 0.0015 | 0.0099 ± 0.0004 | 0.0324 ± 0.0006 | 5.2495 |
| | ϵ-SQSSVR | 0.0139 ± 0.0007 | 0.0118 ± 0.0003 | 0.6674 ± 0.0230 | 74.2415 |
| | NQSSVR(p) | 0.0119 ± 0.0017 | 0.0077 ± 0.0007 | 0.2132 ± 0.0115 | 41.8217 |
| | NQSSVR(d) | 0.0098 ± 0.0012 | 0.0063 ± 0.0005 | 0.3018 ± 0.0131 | 26.7490 |
| Yacht | lin-SVR | 0.1428 ± 0.0010 | 0.1129 ± 0.0007 | 3.2864 ± 0.0893 | 360.9378 |
| | poly-SVR | 0.0676 ± 0.0204 | 0.0559 ± 0.0105 | 3.4052 ± 0.0965 | 798.5638 |
| | rbf-SVR | 0.0287 ± 0.0036 | 0.0204 ± 0.0011 | 1.6488 ± 0.0835 | 307.3457 |
| | NNSVR | 0.1547 ± 0.0008 | 0.1012 ± 0.0003 | 0.1876 ± 0.0182 | 27.4442 |
| | QLSSVR | 0.1056 ± 0.0005 | 0.0817 ± 0.0006 | 0.0620 ± 0.0110 | 8.7560 |
| | ϵ-SQSSVR | 0.0711 ± 0.0009 | 0.0551 ± 0.0007 | 1.8485 ± 0.1059 | 56.9315 |
| | NQSSVR(p) | 0.0965 ± 0.0006 | 0.0741 ± 0.0002 | 0.5432 ± 0.0105 | 67.1763 |
| | NQSSVR(d) | 0.0708 ± 0.0013 | 0.0533 ± 0.0010 | 1.8038 ± 0.0704 | 167.1142 |
| Forest | lin-SVR | 0.0528 ± 0.0010 | 0.0196 ± 0.0001 | 17.3862 ± 0.7161 | 1561.1308 |
| | poly-SVR | 0.0486 ± 0.0014 | 0.0179 ± 0.0001 | 6.8746 ± 0.0634 | 1603.4728 |
| | rbf-SVR | 0.0465 ± 0.0013 | 0.0170 ± 0.0000 | 3.2826 ± 0.1706 | 812.7531 |
| | NNSVR | 0.0498 ± 0.0010 | 0.0198 ± 0.0001 | 0.2688 ± 0.0151 | 45.1113 |
| | QLSSVR | 0.0500 ± 0.0009 | 0.0186 ± 0.0001 | 0.1756 ± 0.0170 | 29.1384 |
| | ϵ-SQSSVR | 0.0484 ± 0.0011 | 0.0196 ± 0.0001 | 0.7936 ± 0.1830 | 696.7686 |
| | NQSSVR(p) | 0.0475 ± 0.0031 | 0.0183 ± 0.0001 | 0.7360 ± 0.0168 | 120.5714 |
| | NQSSVR(d) | 0.0470 ± 0.0024 | 0.0175 ± 0.0001 | 9.1456 ± 0.4374 | 540.1738 |
| Energy(H) | lin-SVR | 0.0801 ± 0.0001 | 0.0556 ± 0.0001 | 15.0628 ± 0.6460 | 1559.3228 |
| | poly-SVR | 0.0298 ± 0.0001 | 0.0218 ± 0.0001 | 12.9536 ± 0.3113 | 3408.8491 |
| | rbf-SVR | 0.0237 ± 0.0004 | 0.0208 ± 0.0003 | 5.7626 ± 0.3335 | 10338.7745 |
| | NNSVR | 0.0826 ± 0.0006 | 0.0572 ± 0.0001 | 0.3374 ± 0.0115 | 52.2095 |
| | QLSSVR | 0.0704 ± 0.0003 | 0.0499 ± 0.0002 | 0.1482 ± 0.0081 | 24.4898 |
| | ϵ-SQSSVR | 0.0685 ± 0.0010 | 0.0464 ± 0.0004 | 9.8748 ± 0.1596 | 1065.6020 |
| | NQSSVR(p) | 0.0516 ± 0.0002 | 0.0405 ± 0.0002 | 0.8624 ± 0.0274 | 105.5643 |
| | NQSSVR(d) | 0.0587 ± 0.0003 | 0.0423 ± 0.0001 | 16.8058 ± 0.6380 | 679.5034 |
| Energy(C) | lin-SVR | 0.0855 ± 0.0009 | 0.0592 ± 0.0014 | 21.4692 ± 0.5119 | 2708.5711 |
| | poly-SVR | 0.0623 ± 0.0001 | 0.0408 ± 0.0002 | 28.3624 ± 0.8986 | 6579.0492 |
| | rbf-SVR | 0.0398 ± 0.0018 | 0.0276 ± 0.0008 | 9.2944 ± 0.0669 | 13515.7641 |
| | NNSVR | 0.0922 ± 0.0006 | 0.0583 ± 0.0004 | 0.5539 ± 0.0259 | 88.6845 |
| | QLSSVR | 0.0820 ± 0.0004 | 0.0572 ± 0.0003 | 0.2338 ± 0.0409 | 39.7269 |
| | ϵ-SQSSVR | 0.0795 ± 0.0008 | 0.0512 ± 0.0005 | 14.7644 ± 0.1254 | 1782.9128 |
| | NQSSVR(p) | 0.0681 ± 0.0002 | 0.0476 ± 0.0001 | 1.4326 ± 0.0303 | 175.3763 |
| | NQSSVR(d) | 0.0702 ± 0.0005 | 0.0463 ± 0.0002 | 10.3206 ± 0.2744 | 1161.6354 |
| Air | lin-SVR | 0.1965 ± 0.0004 | 0.1637 ± 0.0002 | 18.5452 ± 0.9660 | 3563.6484 |
| | poly-SVR | 0.1197 ± 0.0018 | 0.0884 ± 0.0001 | 32.7722 ± 0.7051 | 7896.3575 |
| | rbf-SVR | 0.1246 ± 0.0003 | 0.0727 ± 0.0002 | 12.2458 ± 0.3911 | 16768.0504 |
| | NNSVR | 0.1963 ± 0.0002 | 0.1038 ± 0.0002 | 0.4592 ± 0.0041 | 77.6678 |
| | QLSSVR | 0.1346 ± 0.0002 | 0.0958 ± 0.0001 | 0.1078 ± 0.0077 | 17.8609 |
| | ϵ-SQSSVR | 0.1265 ± 0.0003 | 0.1033 ± 0.0003 | 20.6978 ± 0.1596 | 2066.3358 |
| | NQSSVR(p) | 0.1385 ± 0.0001 | 0.0936 ± 0.0002 | 0.6869 ± 0.0241 | 122.4225 |
| | NQSSVR(d) | 0.1458 ± 0.0007 | 0.0965 ± 0.0006 | 16.4676 ± 0.7046 | 677.5034 |

| Datasets | Algorithms | RMSE | MAE | | T1 | T2 |
|---|---|---|---|---|---|---|
| monthly | NNSVR | 0.0274 ± 0.0001 | 0.0244 ± 0.0012 | 1.0058 ± 0.0635 | 0.0432 ± 0.0084 | 7.1174 |
| | QLSSVR | 0.0783 ± 0.0011 | 0.0668 ± 0.0008 | 0.1027 ± 0.1052 | 0.0094 ± 0.0015 | 0.1103 |
| | ϵ-SQSSVR | 0.1202 ± 0.0055 | 0.0898 ± 0.0052 | 0.9865 ± 0.1071 | 0.1356 ± 0.0534 | 1.8532 |
| | NQSSVR(p) | 0.0140 ± 0.0012 | 0.0115 ± 0.0011 | 1.0130 ± 0.0457 | 0.1600 ± 0.0156 | 2.3799 |
| | NQSSVR(d) | 0.0185 ± 0.0015 | 0.0156 ± 0.0011 | 1.0241 ± 0.0672 | 0.0482 ± 0.0072 | 0.4796 |
| daily | NNSVR | 0.0072 ± 0.0003 | 0.0060 ± 0.0001 | 1.0000 ± 0.0003 | 2.7365 ± 0.1292 | 37.1562 |
| | QLSSVR | 0.0071 ± 0.0001 | 0.0056 ± 0.0004 | 1.0000 ± 0.0002 | 0.1714 ± 0.0188 | 2.1832 |
| | ϵ-SQSSVR | 0.0071 ± 0.0002 | 0.0057 ± 0.0001 | 1.0001 ± 0.0001 | 32.4848 ± 0.5036 | 405.5820 |
| | NQSSVR(p) | 0.0062 ± 0.0001 | 0.0054 ± 0.0001 | 1.0002 ± 0.0001 | 2.9516 ± 0.0389 | 43.7415 |
| | NQSSVR(d) | 0.0067 ± 0.0001 | 0.0058 ± 0.0001 | 1.0000 ± 0.0001 | 2.3086 ± 0.2897 | 28.2437 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wei, D.; Yang, Z.; Ye, J.; Yang, X. Kernel-Free Quadratic Surface Support Vector Regression with Non-Negative Constraints. Entropy 2023, 25, 1030. https://doi.org/10.3390/e25071030
