Attention-Guided Huber Loss for Head Pose Estimation Based on Improved Capsule Network

Abstract

1. Introduction

- (1)

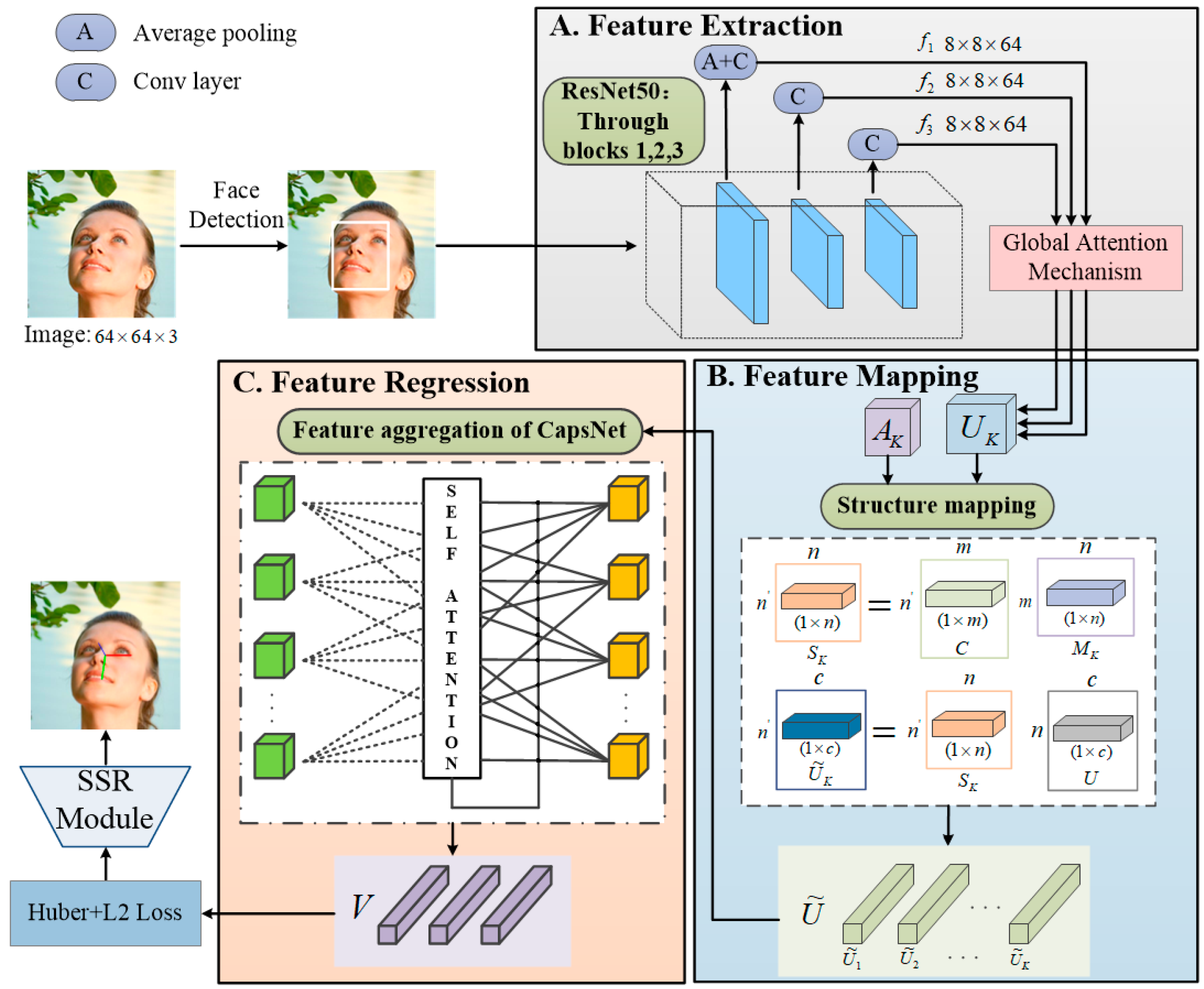

- We propose a detection model that combines CNN and capsule network for head pose estimation. The model includes feature extraction, feature mapping, and feature aggregation modules. A multi-level output structure backbone network is used to extract spatial structure and semantic information at different levels for improved estimation accuracy.

- (2)

- In order to obtain more effective and representative features, we apply an improved feature extraction module to enhance the spatial weight of feature extraction, which can obtain more spatial information and significantly improve the feature extraction performance and network efficiency for capturing the main features.

- (3)

- To address the problem of loss of spatial structure information, we utilize an improved capsule network with reduced number of capsules and parameters to enhance the network’s generalization ability. Moreover, we optimize the regression loss to improve the model’s robustness.

- (4)

- To validate the effectiveness of the proposed method, we conduct tests and ablation experiments on the AFLW2000 and BIWI datasets. The results demonstrated that the performance of our method outperformed previous methods significantly.
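
The title and contribution (3) refer to a Huber-based regression loss; the attention-guided variant itself is not described in this text. For reference, the following is a minimal NumPy sketch of the standard Huber loss [49] on pose-angle residuals e with threshold δ: it equals ½e² when |e| ≤ δ and δ(|e| − ½δ) otherwise. It therefore behaves like squared error for small residuals and like absolute error for large ones, which bounds the gradient contributed by outlier samples. The default δ = 1.0 and the function name `huber_loss` are assumptions made for illustration, not the paper's settings.

```python
import numpy as np


def huber_loss(pred, target, delta=1.0):
    """Standard Huber loss averaged over all residuals (e.g. yaw, pitch, roll in degrees)."""
    err = np.abs(np.asarray(pred, dtype=float) - np.asarray(target, dtype=float))
    quadratic = 0.5 * err ** 2              # used where |e| <= delta (MSE-like)
    linear = delta * (err - 0.5 * delta)    # used where |e| > delta (MAE-like)
    return float(np.mean(np.where(err <= delta, quadratic, linear)))


# A 10-degree outlier contributes 9.5 instead of 50 under 0.5 * e^2.
print(huber_loss([10.0, 0.5], [0.0, 0.0]))  # 4.8125
```

Because the linear branch has slope δ, a single badly annotated or extreme-pose sample cannot dominate the update the way it would under a squared loss.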

2. Related Work

2.1. Landmark-Based Methods

2.2. Landmark-Free Methods

3. Method

3.1. Problem Formulation

3.2. Overview of Proposed Network

3.3. Feature Extraction

3.3.1. Face Detection

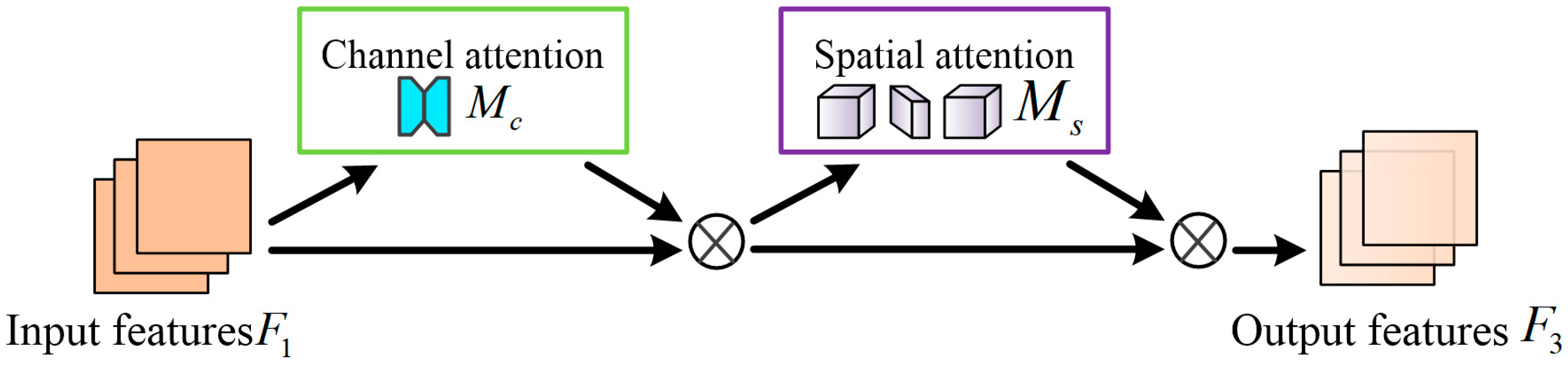

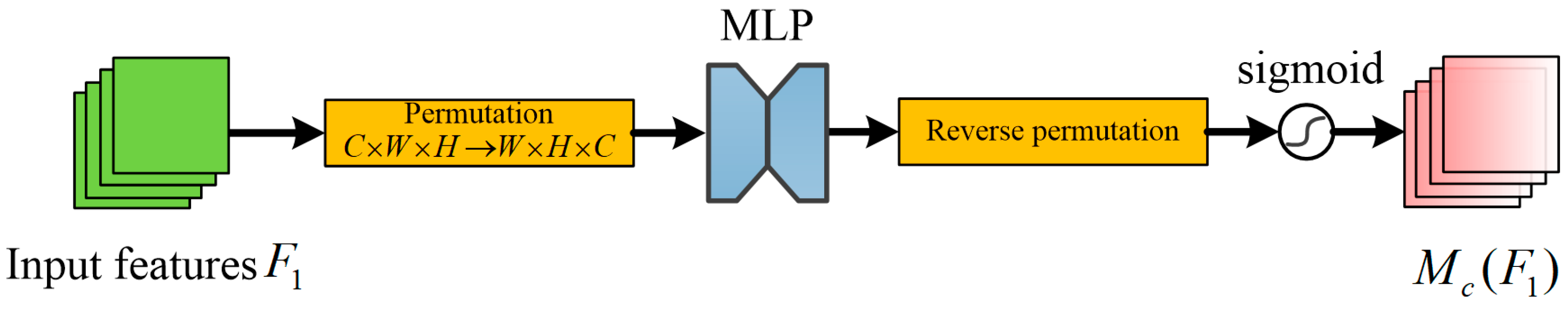

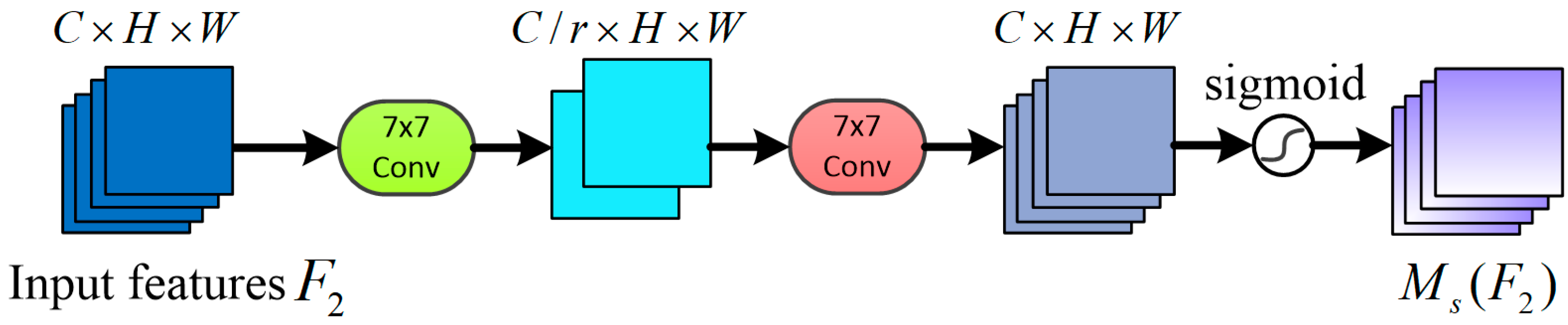

3.3.2. Global Attention Block

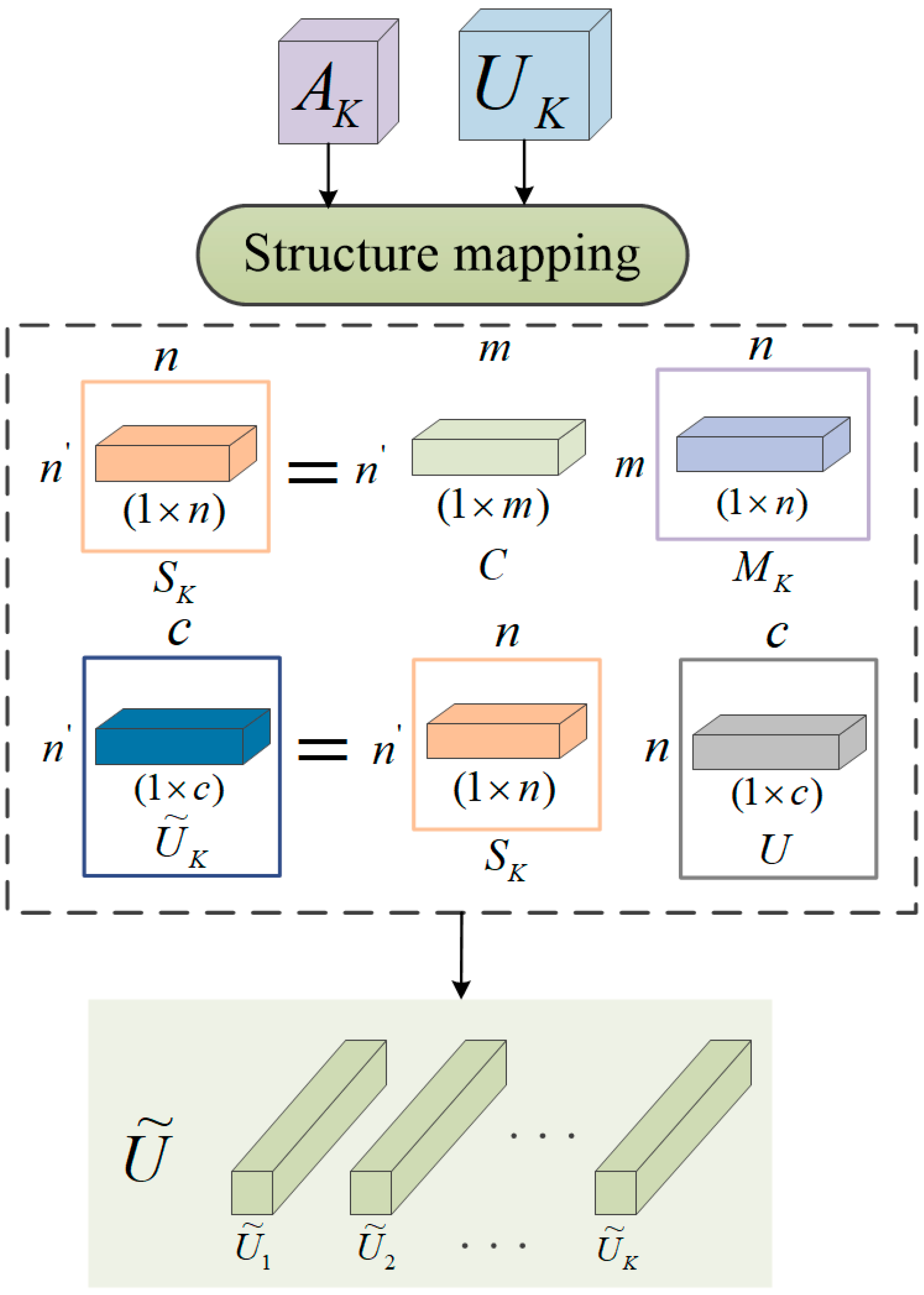
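
The block's name points to the global attention mechanism (GAM) of Liu et al. [46], which the reference list cites, and contribution (2) describes strengthening the spatial weighting of features. The improved design used here is not recoverable from this text, so the following is only a minimal NumPy sketch of the original GAM idea under stated assumptions: a single feature map of shape (C, H, W), a reduction ratio r, random placeholder weights, and no batch normalization. A channel submodule applies a two-layer MLP across channels at every spatial position, and a spatial submodule applies two 7×7 convolutions; each yields sigmoid weights that rescale the features. The names `gam_block`, `conv2d_same`, and the `params` keys are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def conv2d_same(x, w):
    """Naive zero-padded 'same' 2D convolution (cross-correlation, no bias).
    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k."""
    p = w.shape[-1] // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    windows = sliding_window_view(xp, w.shape[-2:], axis=(1, 2))  # (C_in, H, W, k, k)
    return np.einsum("ocij,chwij->ohw", w, windows)


def gam_block(f, params):
    """GAM-style channel-then-spatial attention on one feature map f of shape (C, H, W).
    Batch normalization from the original GAM is omitted."""
    # Channel submodule: two-layer MLP (C -> C/r -> C) applied to the channel
    # vector at every spatial position, followed by a sigmoid.
    hidden = np.maximum(0.0, np.einsum("rc,chw->rhw", params["mlp1"], f))
    f1 = sigmoid(np.einsum("cr,rhw->chw", params["mlp2"], hidden)) * f
    # Spatial submodule: two 7x7 convolutions (C -> C/r -> C), followed by a sigmoid.
    g = np.maximum(0.0, conv2d_same(f1, params["conv1"]))
    return sigmoid(conv2d_same(g, params["conv2"])) * f1


# Hypothetical example: C = 8 channels, reduction ratio r = 4, random weights.
rng = np.random.default_rng(0)
C, r = 8, 4
f = rng.standard_normal((C, 5, 6))
params = {
    "mlp1": rng.standard_normal((C // r, C)),
    "mlp2": rng.standard_normal((C, C // r)),
    "conv1": 0.1 * rng.standard_normal((C // r, C, 7, 7)),
    "conv2": 0.1 * rng.standard_normal((C, C // r, 7, 7)),
}
out = gam_block(f, params)
print(out.shape)  # (8, 5, 6)
```

Since both attention maps lie in (0, 1), the block can only rescale features, never amplify them; the learned weights decide which channels and positions are kept.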

3.4. Feature Mapping

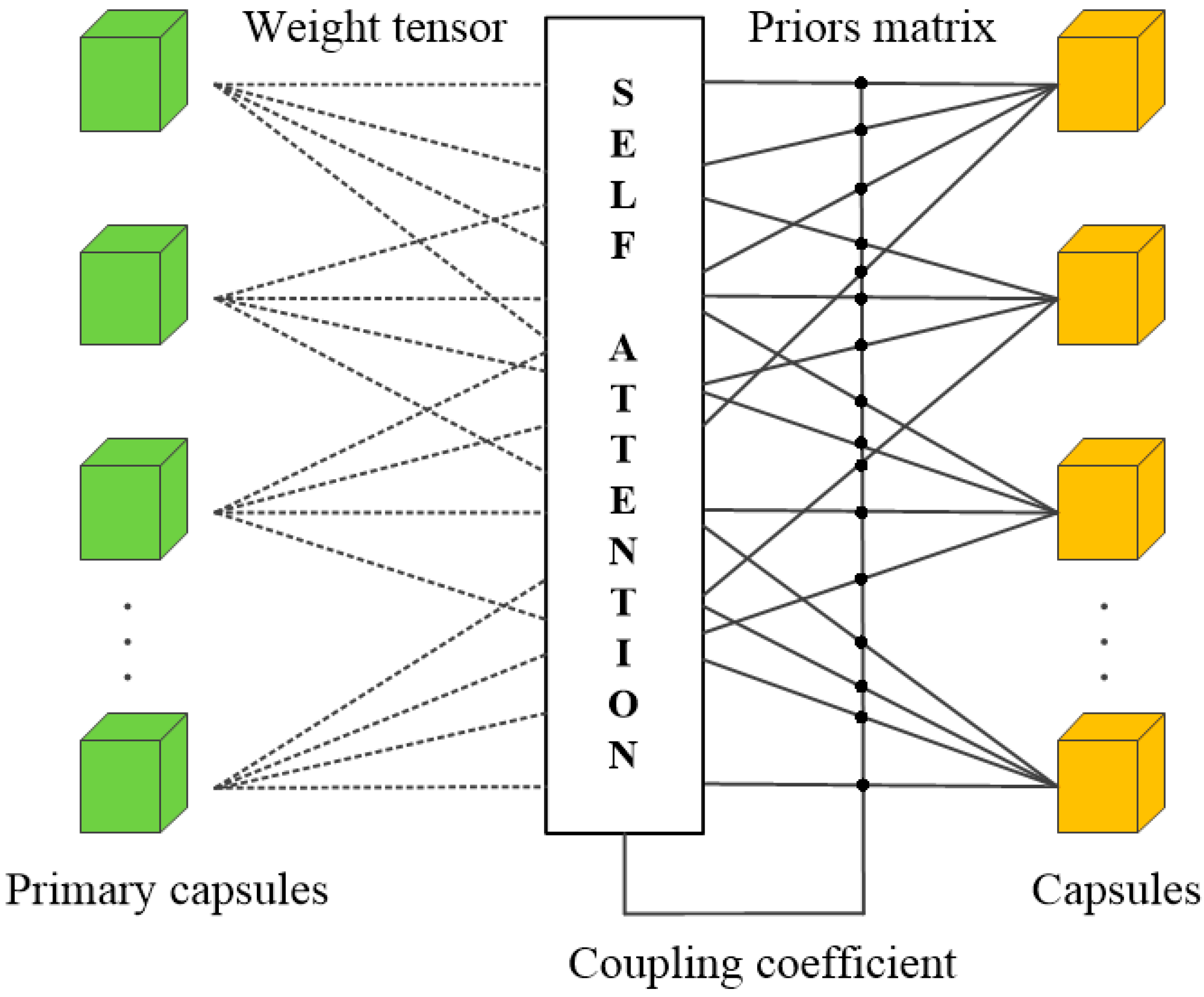

3.5. Feature Aggregation
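
The contributions describe an improved capsule network with fewer capsules and parameters, but its exact design is not given in this text. Assuming the aggregation stage follows FSA-Net [21], which aggregates features with capsules, the following minimal NumPy sketch shows the standard building blocks of Sabour et al. [20]: the squash nonlinearity, which keeps a capsule's direction and maps its length into [0, 1), and routing-by-agreement, which iteratively couples each input capsule to the output capsules whose outputs agree with its predictions. The shapes and the names `squash` and `dynamic_routing` are illustrative; this is not the paper's improved variant.

```python
import numpy as np


def squash(s, axis=-1, eps=1e-9):
    """Squash nonlinearity: keeps the direction of s and maps its length into [0, 1)."""
    sq_norm = np.sum(s ** 2, axis=axis, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)


def dynamic_routing(u_hat, iterations=3):
    """Routing-by-agreement between capsule layers.
    u_hat: predictions of shape (n_in, n_out, dim), u_hat[i, j] = W_ij @ u_i.
    Returns the output capsules v, shape (n_out, dim)."""
    n_in, n_out, _ = u_hat.shape
    b = np.zeros((n_in, n_out))                    # routing logits
    for _ in range(iterations):
        c = np.exp(b - b.max(axis=1, keepdims=True))
        c /= c.sum(axis=1, keepdims=True)          # coupling coefficients: softmax over outputs
        s = np.einsum("ij,ijd->jd", c, u_hat)      # weighted sum of predictions per output
        v = squash(s)
        b = b + np.einsum("ijd,jd->ij", u_hat, v)  # raise logits where predictions agree
    return v


# Hypothetical sizes: 6 input capsules routed to 3 output capsules of dimension 4.
rng = np.random.default_rng(0)
v = dynamic_routing(rng.standard_normal((6, 3, 4)))
print(v.shape, np.linalg.norm(v, axis=-1))
```

Reducing the number of capsules, as contribution (3) describes, shrinks `n_in` and `n_out`, and with them the prediction tensor `u_hat` and the per-iteration routing cost.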

3.6. Optimization of Loss Functions

4. Experiments

4.1. Experimental Implementation

4.2. Datasets and Experimental Protocols

- (1) In the first protocol, we follow convention by training on the 300W-LP dataset and testing on the AFLW2000 and BIWI datasets. When evaluating on BIWI, we do not use tracking; we consider only samples in which MTCNN [45] detects a face and whose rotation angles lie in the range [−99°, +99°], consistent with the strategies of Hopenet [33], FSA-Net [21], and TriNet [42]. We also compare against several state-of-the-art landmark-based pose estimation methods under this protocol.

- (2) In the second protocol, we follow the convention of FSA-Net [21] and randomly split the BIWI dataset 7:3 into training and test sets, which do not overlap. Faces in BIWI are detected by MTCNN [45] with empirical tracking to avoid face detection failures. This protocol is also used by methods that take RGB, depth, or temporal input, whereas our method uses only a single RGB frame.
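
Both protocols involve simple bookkeeping on the annotations. The following is a minimal NumPy sketch, assuming angles are stored as an (N, 3) array of yaw, pitch, and roll in degrees and that each BIWI frame carries the ID of the video sequence it came from. `filter_by_angle` keeps samples whose three angles all lie in [−99°, +99°] (the MTCNN detection step of protocol 1 is not reproduced), and `split_by_sequence` assigns whole sequences to training or testing in a 7:3 ratio so that no sequence appears in both sets. Splitting at the sequence level is an assumption about how non-overlap is enforced, and both function names are hypothetical.

```python
import numpy as np


def filter_by_angle(angles, limit=99.0):
    """Indices of samples whose yaw, pitch and roll all lie in [-limit, +limit] degrees.
    angles: array of shape (N, 3)."""
    angles = np.asarray(angles, dtype=float)
    return np.flatnonzero(np.all(np.abs(angles) <= limit, axis=1))


def split_by_sequence(sequence_ids, train_ratio=0.7, seed=0):
    """Randomly assign whole sequences to train or test (7:3 by default),
    so that no sequence contributes frames to both sets."""
    sequence_ids = np.asarray(sequence_ids)
    seqs = np.unique(sequence_ids)
    np.random.default_rng(seed).shuffle(seqs)
    n_train = int(round(train_ratio * len(seqs)))
    train_mask = np.isin(sequence_ids, seqs[:n_train])
    return np.flatnonzero(train_mask), np.flatnonzero(~train_mask)


# Hypothetical data: 10 sequences of 5 frames each.
seq_ids = np.repeat(np.arange(10), 5)
train_idx, test_idx = split_by_sequence(seq_ids)
print(len(train_idx), len(test_idx))  # 35 15
print(filter_by_angle([[10, 5, 0], [120, 0, 0], [-99, 99, -99]]))  # [0 2]
```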

4.3. Experiment Results

4.3.1. Results with Protocol 1

4.3.2. Results with Protocol 2

4.3.3. Results with Model Size and Computation Time

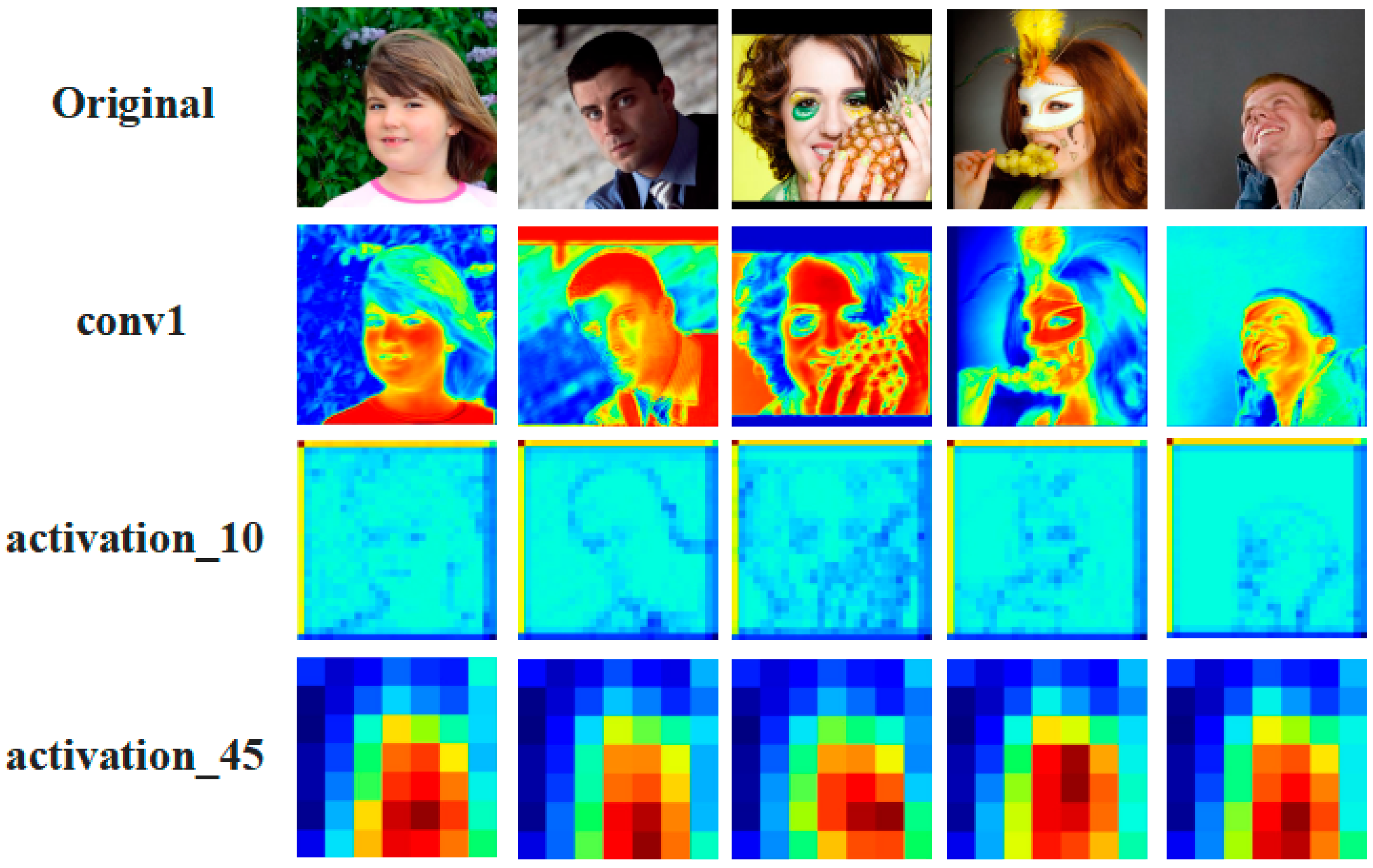

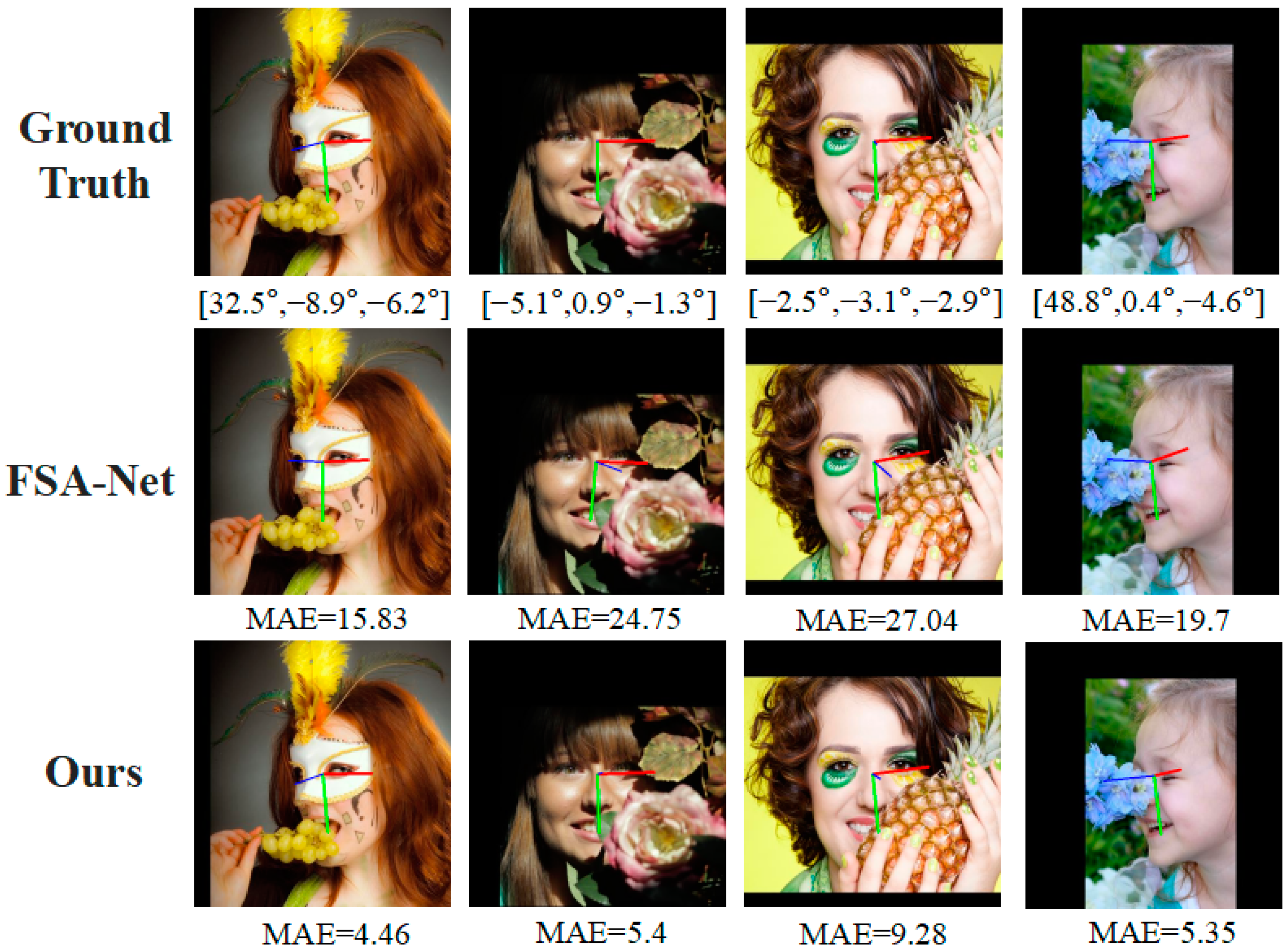

4.4. Visualization

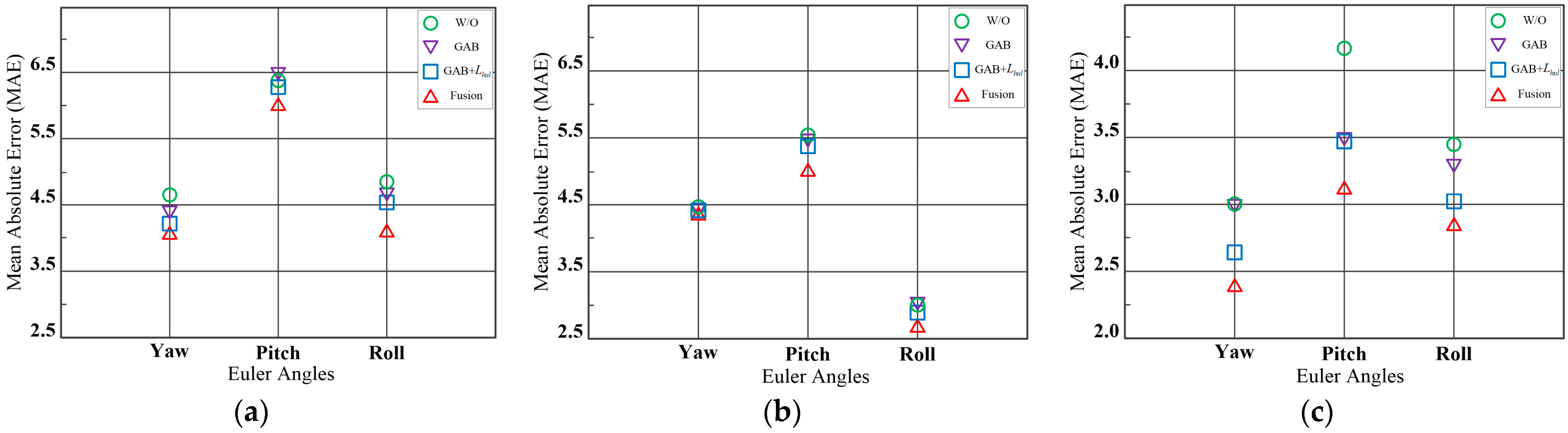

4.5. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Moller, R.; Furnari, A.; Battiato, S. A survey on human-aware robot navigation. Robot. Auton. Syst. 2021, 145, 103837.

- [2] Murphy-Chutorian, E.; Trivedi, M.M. Head pose estimation in computer vision: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 607–626.

- [3] Jie, S.; Lu, S. An improved single shot multibox for video-rate head pose prediction. IEEE Sens. J. 2020, 20, 12326–12333.

- [4] Yining, L.; Liang, W.; Fang, X.; Yibiao, Z.; Lap-Fai, Y. Synthesizing Personalized Training Programs for Improving Driving Habits via Virtual Reality. In Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Tuebingen/Reutlingen, Germany, 18–22 March 2018; pp. 297–304.

- [5] Ye, M.; Zhang, W.; Cao, P. Driver fatigue detection based on residual channel attention network and head pose estimation. Appl. Sci. 2021, 11, 9195.

- [6] Fan, Z.; Li, X.; Li, Y. Multi-Agent Deep Reinforcement Learning for Online 3D Human Poses Estimation. Remote Sens. 2021, 13, 3995.

- [7] Murphy-Chutorian, E.; Trivedi, M.M. Head pose estimation and augmented reality tracking: An integrated system and evaluation for monitoring driver awareness. IEEE Trans. Intell. Transp. Syst. 2010, 11, 300–311.

- [8] Vankayalapati, H.D.; Kuchibhotla, S.; Chadalavada, M.S.K. A Novel Zernike Moment-Based Real-Time Head Pose and Gaze Estimation Framework for Accuracy-Sensitive Applications. Sensors 2022, 22, 8449.

- [9] Qi, S.; Wang, W.; Jia, B. Learning human-object interactions by graph parsing neural networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 401–417.

- [10] Wang, K.; Zhao, R.; Ji, Q. Human computer interaction with head pose, eye gaze and body gestures. In Proceedings of the 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG), Xi’an, China, 15–19 May 2018; p. 789.

- [11] Sankaranarayanan, K.; Chang, M.C.; Krahnstoever, N. Tracking gaze direction from far-field surveillance cameras. In Proceedings of the IEEE Workshop on Applications of Computer Vision (WACV), Kona, HI, USA, 5–7 January 2011; pp. 519–526.

- [12] Chen, C.W.; Aghajan, H. Multiview social behavior analysis in work environments. In Proceedings of the 5th ACM/IEEE International Conference on Distributed Smart Cameras, Ghent, Belgium, 22–25 August 2011; pp. 1–6.

- [13] Yunjuan, H.; Li, F. Isospectral Manifold Learning Algorithm. J. Softw. 2013, 24, 2656–2666.

- [14] Wu, J.; Shang, Z.; Wang, K. Partially Occluded Head Posture Estimation for 2D Images using Pyramid HoG Features. In Proceedings of the 2019 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Shanghai, China, 8–12 July 2019; pp. 507–512.

- [15] Yujia, W.; Wei, L.; Jianbing, S.; Yunde, J. A deep Coarse-to-Fine network for head pose estimation from synthetic data. Pattern Recognit. 2019, 94, 196–206.

- [16] Junliang, X.; Zhiheng, N.; Junshi, H. Towards robust and accurate multi-view and partially-occluded face alignment. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 987–1001.

- [17] Bisogni, C.; Nappi, M.; Pero, C.; Ricciardi, S. FASHE: A FrActal Based Strategy for Head Pose Estimation. IEEE Trans. Image Process. 2021, 30, 3192–3203.

- [18] Mazzia, V.; Salvetti, F.; Chiaberge, M. Efficient-capsnet: Capsule network with self-attention routing. Sci. Rep. 2021, 11, 14634.

- [19] Hinton, G.E.; Krizhevsky, A.; Wang, S.D. Transforming auto-encoders. In Artificial Neural Networks and Machine Learning–ICANN, Proceedings of the 21st International Conference on Artificial Neural Networks, Espoo, Finland, 14–17 June 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 44–51.

- [20] Sabour, S.; Frosst, N.; Hinton, G.E. Dynamic routing between capsules. Adv. Neural Inf. Process. Syst. 2017, 30, 3856–3866.

- [21] Yang, T.; Chen, Y.; Lin, Y.; Chuang, Y. FSA-Net: Learning fine-grained structure aggregation for head pose estimation from a single image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1087–1096.

- [22] Chang, F.J.; Tran, A.T.; Hassner, T. Expnet: Landmark-free, deep, 3d facial expressions. In Proceedings of the 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG), Xi’an, China, 15–19 May 2018; pp. 122–129.

- [23] Liu, L.; Ke, Z.; Huo, J. Head pose estimation through keypoints matching between reconstructed 3D face model and 2D image. Sensors 2021, 21, 1841.

- [24] Li, D.; Pedrycz, W. A central profile-based 3D face pose estimation. Pattern Recognit. 2014, 47, 525–534.

- [25] Kazemi, V.; Sullivan, J. One millisecond face alignment with an ensemble of regression trees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 1867–1874.

- [26] Zhu, X.; Lei, Z.; Liu, X.; Shi, H.; Li, S.Z. Face alignment across large poses: A 3D solution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 146–155.

- [27] Nikolaidis, A.; Pitas, I. Facial feature extraction and pose determination. Pattern Recognit. 2000, 33, 1783–1791.

- [28] Illingworth, J.; Kittler, J. The adaptive Hough transform. IEEE Trans. Pattern Anal. Mach. Intell. 1987, 9, 690–698.

- [29] Narayanan, A.; Kaimal, R.M.; Bijlani, K. Estimation of driver head yaw angle using a geometric model. IEEE Trans. Intell. Transp. Syst. 2016, 17, 3446–3460.

- [30] Bulat, A.; Tzimiropoulos, G. How far are we from solving the 2D & 3D face alignment problem? (And a dataset of 230,000 3D facial landmarks). In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1021–1030.

- [31] Kumar, A.; Alavi, A.; Chellappa, R. KEPLER: Keypoint and pose estimation of unconstrained faces by learning efficient h-cnn regressors. In Proceedings of the 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG), Washington, DC, USA, 30 May–3 June 2017; pp. 258–265.

- [32] Wang, Q.; Lei, H.; Qian, W. Siamese PointNet: 3D Head Pose Estimation with Local Feature Descriptor. Electronics 2023, 12, 1194.

- [33] Ruiz, N.; Chong, E.; Rehg, J.M. Fine-grained head pose estimation without keypoints. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 215501–215509.

- [34] He, K.; Zhang, X.; Ren, S. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- [35] Wang, H.; Chen, Z.; Zhou, Y. Hybrid coarse-fine classification for head pose estimation. arXiv 2019, arXiv:1901.06778.

- [36] Yang, T.; Huang, H.; Lin, Y.; Hsiu, P.; Chuang, Y. SSR-Net: A compact soft stagewise regression network for age estimation. In Proceedings of the 27th International Joint Conference on Artificial Intelligence (IJCAI), Stockholm, Sweden, 13–19 July 2018; pp. 1078–1084.

- [37] Zhou, Y.; Gregson, J. WHEnet: Real-time fine-grained estimation for wide range head pose. arXiv 2020, arXiv:2005.10353.

- [38] Zhang, H.; Wang, M.; Liu, Y.; Yuan, Y. FDN: Feature decoupling network for head pose estimation. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), New York, NY, USA, 7–12 February 2020; pp. 12789–12796.

- [39] Zhu, X.; Yang, Q.; Zhao, L. An Improved Tiered Head Pose Estimation Network with Self-Adjust Loss Function. Entropy 2022, 24, 974.

- [40] Dhingra, N. Lwposr: Lightweight efficient fine grained head pose estimation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2022; pp. 1495–1505.

- [41] Dhingra, N. HeadPosr: End-to-end Trainable Head Pose Estimation using Transformer Encoders. In Proceedings of the 16th IEEE International Conference on Automatic Face & Gesture Recognition (FG), Jodhpur, India, 15–18 December 2021; pp. 1–8.

- [42] Cao, Z.; Chu, Z.; Liu, D.; Chen, Y. A vector-based representation to enhance head pose estimation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2021; pp. 1187–1196.

- [43] Jiawei, G.; Xiaodong, Y. Dynamic Facial Analysis: From Bayesian Filtering to Recurrent Neural Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1531–1540.

- [44] Martin, M.; Van De Camp, F.; Stiefelhagen, R. Real time head model creation and head pose estimation on consumer depth cameras. In Proceedings of the 2nd International Conference on 3D Vision (3DV), Tokyo, Japan, 8–11 December 2014; pp. 641–648.

- [45] Zhang, K.; Zhang, Z.; Li, Z. Joint face detection and alignment using multitask cascaded convolutional networks. IEEE Signal Process. Lett. 2016, 23, 1499–1503.

- [46] Liu, Y.; Shao, Z.; Hoffmann, N. Global attention mechanism: Retain information to enhance channel-spatial interactions. arXiv 2021, arXiv:2112.05561.

- [47] Joshi, M.; Pant, D.R.; Karn, R.R. Meta-Learning, Fast Adaptation, and Latent Representation for Head Pose Estimation. In Proceedings of the 31st Conference of Open Innovations Association (FRUCT), Helsinki, Finland, 27–29 April 2022; pp. 71–78.

- [48] Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Lecture Notes in Computer Science, Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 3–19.

- [49] Huber, P.J. Robust estimation of a location parameter. Breakthr. Stat. Methodol. Distrib. 1992, 492–518.

- [50] Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- [51] Zhu, X.; Lei, Z.; Yan, J.; Yi, D.; Li, S.Z. High-fidelity pose and expression normalization for face recognition in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 787–796.

- [52] Fanelli, G.; Dantone, M.; Gall, J. Random forests for real time 3d face analysis. Int. J. Comput. Vis. 2013, 101, 437–458.

| Loss | Improved | AFLW2000 Yaw (°) | AFLW2000 Pitch (°) | AFLW2000 Roll (°) | AFLW2000 MAE (°) | BIWI Yaw (°) | BIWI Pitch (°) | BIWI Roll (°) | BIWI MAE (°) |
|---|---|---|---|---|---|---|---|---|---|
| MAE | | 4.49 | 6.22 | 4.64 | 5.12 | 4.39 | 5.36 | 2.76 | 4.17 |
| MAE | | 4.00 | 6.17 | 4.27 | 4.81 | 4.36 | 4.89 | 2.64 | 4.05 |
| Huber loss | | 4.12 | 6.37 | 4.58 | 5.02 | 4.51 | 5.16 | 2.71 | 4.13 |
| Huber loss | | 3.94 | 6.13 | 4.27 | 4.78 | 4.42 | 4.90 | 2.59 | 3.97 |
| loss | | 4.03 | 6.21 | 4.38 | 4.87 | 4.34 | 5.02 | 2.70 | 4.02 |
| loss | | 3.91 | 5.78 | 4.11 | 4.60 | 4.25 | 4.96 | 2.57 | 3.93 |

| Method | Yaw (°) | Pitch (°) | Roll (°) | MAE (°) |
|---|---|---|---|---|
| Dlib [25] | 23.10 | 13.60 | 10.50 | 15.80 |
| FAN [30] | 6.36 | 12.3 | 8.71 | 9.12 |
| 3DDFA [26] | 5.40 | 8.53 | 8.25 | 7.39 |
| Hopenet [33] | 6.47 | 6.56 | 5.44 | 6.16 |
| SSR-Net-MD [36] | 5.14 | 7.09 | 5.89 | 6.01 |
| FSA-Net [21] | 4.50 | 6.08 | 4.64 | 5.07 |
| WHENet [37] | 5.11 | 6.24 | 4.92 | 5.42 |
| TriNet [42] | 4.20 | 5.77 | 4.04 | 4.67 |
| LwPosr [40] | 4.80 | 6.38 | 4.88 | 5.35 |
| Ours | 3.91 | 5.78 | 4.11 | 4.60 |
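
The MAE column is the average of the yaw, pitch, and roll errors; for example, for Ours on AFLW2000, (3.91 + 5.78 + 4.11) / 3 = 13.80 / 3 = 4.60. A minimal NumPy sketch of that computation follows, assuming predictions and ground truth are (N, 3) arrays ordered as (yaw, pitch, roll) in degrees; the function name `pose_mae` is hypothetical.

```python
import numpy as np


def pose_mae(pred, target):
    """Per-angle mean absolute error and its average.
    pred, target: arrays of shape (N, 3) ordered as (yaw, pitch, roll), in degrees."""
    err = np.abs(np.asarray(pred, dtype=float) - np.asarray(target, dtype=float))
    per_angle = err.mean(axis=0)               # MAE of yaw, pitch, roll
    return per_angle, float(per_angle.mean())  # second value corresponds to the MAE column


per_angle, mae = pose_mae([[1.0, 2.0, 3.0], [3.0, 2.0, 1.0]], [[0.0, 0.0, 0.0]] * 2)
print(per_angle, mae)  # [2. 2. 2.] 2.0
```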

| Method | Yaw (°) | Pitch (°) | Roll (°) | MAE (°) |
|---|---|---|---|---|
| Dlib [25] | 16.80 | 13.80 | 6.19 | 12.2 |
| FAN [30] | 8.53 | 7.48 | 7.63 | 7.89 |
| 3DDFA [26] | 36.20 | 12.30 | 8.78 | 19.10 |
| Hopenet [33] | 4.81 | 6.61 | 3.27 | 4.90 |
| KEPLER [31] | 8.80 | 17.3 | 16.2 | 13.9 |
| SSR-Net-MD [36] | 4.49 | 6.31 | 3.61 | 4.65 |
| FSA-Net [21] | 4.27 | 4.96 | 2.76 | 4.00 |
| TriNet [42] | 3.05 | 4.76 | 4.11 | 3.97 |
| LwPosr [40] | 4.11 | 4.87 | 3.19 | 4.05 |
| Ours | 4.25 | 4.96 | 2.57 | 3.93 |

| Method | Input | Yaw (°) | Pitch (°) | Roll (°) | MAE (°) |
|---|---|---|---|---|---|
| SSR-Net-MD [36] | RGB | 4.24 | 4.35 | 4.19 | 4.26 |
| FSA-Net [21] | RGB | 2.89 | 4.29 | 3.60 | 3.60 |
| TriNet [42] | RGB | 3.18 | 3.57 | 2.85 | 3.20 |
| VGG16+RNN [43] | RGB + Time | 3.14 | 3.48 | 2.60 | 3.07 |
| Martin [44] | RGB + Depth | 3.60 | 2.50 | 2.60 | 2.90 |
| Ours | RGB | 2.37 | 3.14 | 2.83 | 2.78 |

| Method | Image Size | Model Size (MB) | Parameters (M) | FPS |
|---|---|---|---|---|
| Hopenet [33] | | 95.9 | 23.92 | 9 |
| FSA-Net [21] | | 5.1 | 1.17 | 6 |
| TriNet [42] | | 27.96 | 1.95 | 10 |
| Ours | | 21.3 | 1.68 | 12 |

| GAB | SARM | | AFLW2000 Yaw (°) | AFLW2000 Pitch (°) | AFLW2000 Roll (°) | AFLW2000 MAE (°) | BIWI Yaw (°) | BIWI Pitch (°) | BIWI Roll (°) | BIWI MAE (°) |
|---|---|---|---|---|---|---|---|---|---|---|
| | | | 4.55 | 6.24 | 4.72 | 5.17 | 4.37 | 5.46 | 2.89 | 4.24 |
| | | | 4.31 | 6.32 | 4.59 | 5.07 | 4.33 | 5.41 | 2.94 | 4.20 |
| | | | 4.43 | 6.15 | 4.45 | 5.01 | 4.35 | 5.24 | 2.80 | 4.13 |
| | | | 4.47 | 6.28 | 4.52 | 5.09 | 4.40 | 5.39 | 2.69 | 4.16 |
| | | | 4.05 | 5.87 | 4.33 | 4.75 | 4.32 | 5.08 | 2.60 | 4.00 |
| | | | 4.14 | 6.11 | 4.42 | 4.89 | 4.30 | 5.25 | 2.72 | 4.09 |
| | | | 4.20 | 6.06 | 4.23 | 4.83 | 4.36 | 5.21 | 2.61 | 4.06 |
| | | | 3.91 | 5.78 | 4.11 | 4.60 | 4.25 | 4.96 | 2.57 | 3.93 |

| GAB | SARM | | BIWI Yaw (°) | BIWI Pitch (°) | BIWI Roll (°) | BIWI MAE (°) |
|---|---|---|---|---|---|---|
| | | | 2.96 | 4.43 | 3.80 | 3.73 |
| | | | 2.91 | 3.45 | 3.21 | 3.19 |
| | | | 2.56 | 3.48 | 3.19 | 3.07 |
| | | | 2.73 | 3.53 | 3.07 | 3.11 |
| | | | 2.45 | 3.25 | 2.90 | 2.87 |
| | | | 2.60 | 3.41 | 2.91 | 2.97 |
| | | | 2.39 | 3.27 | 2.87 | 2.84 |
| | | | 2.37 | 3.14 | 2.83 | 2.78 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhong, R.; He, L.; Wang, H.; Yuan, L.; Li, K.; Liu, Z. Attention-Guided Huber Loss for Head Pose Estimation Based on Improved Capsule Network. Entropy 2023, 25, 1024. https://doi.org/10.3390/e25071024
