Text Matching in Insurance Question-Answering Community Based on an Integrated BiLSTM-TextCNN Model Fusing Multi-Feature

Abstract

1. Introduction

2. Related Work

2.1. Artificial Intelligence in the Insurance Field

2.2. Text Matching

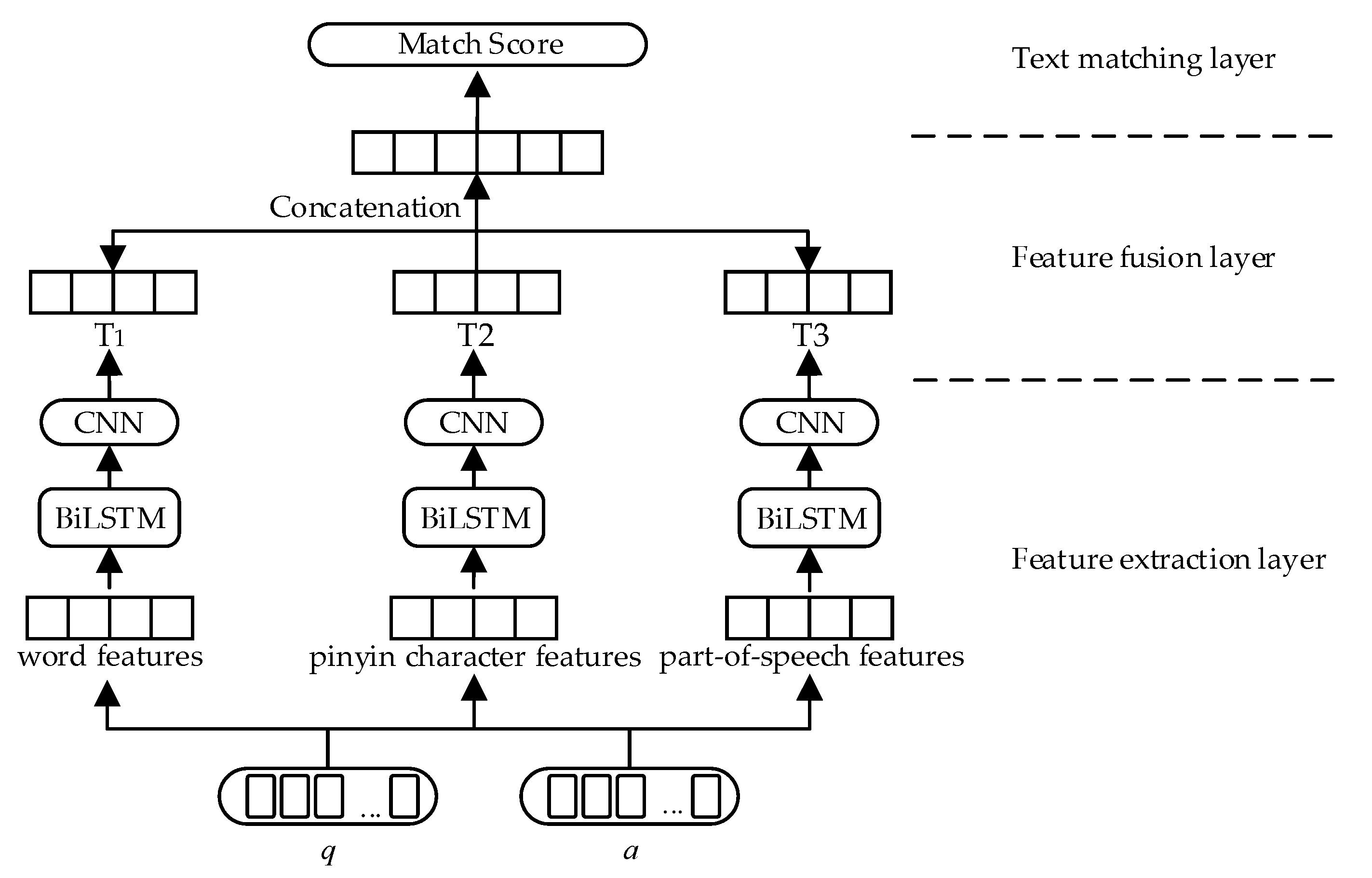

3. Construction of Text Matching Model

3.1. Problem Definition

3.2. Feature Extraction Layer

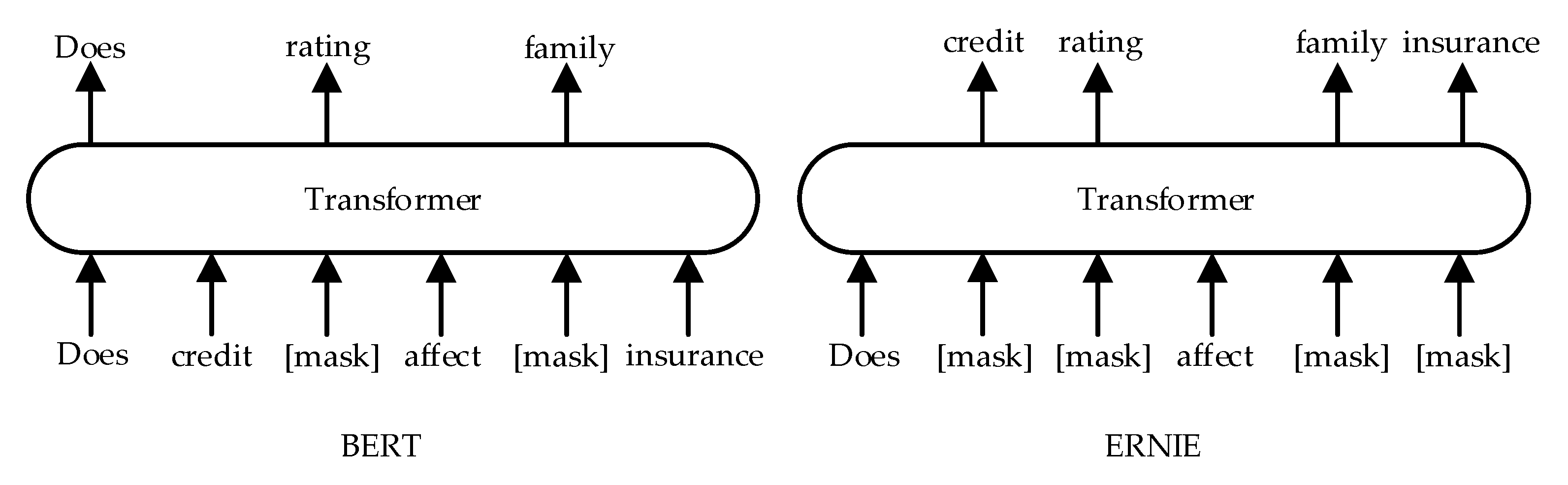

3.2.1. Word Feature Extraction

3.2.2. Pinyin Character Feature Extraction

3.2.3. Part-of-Speech Feature Extraction

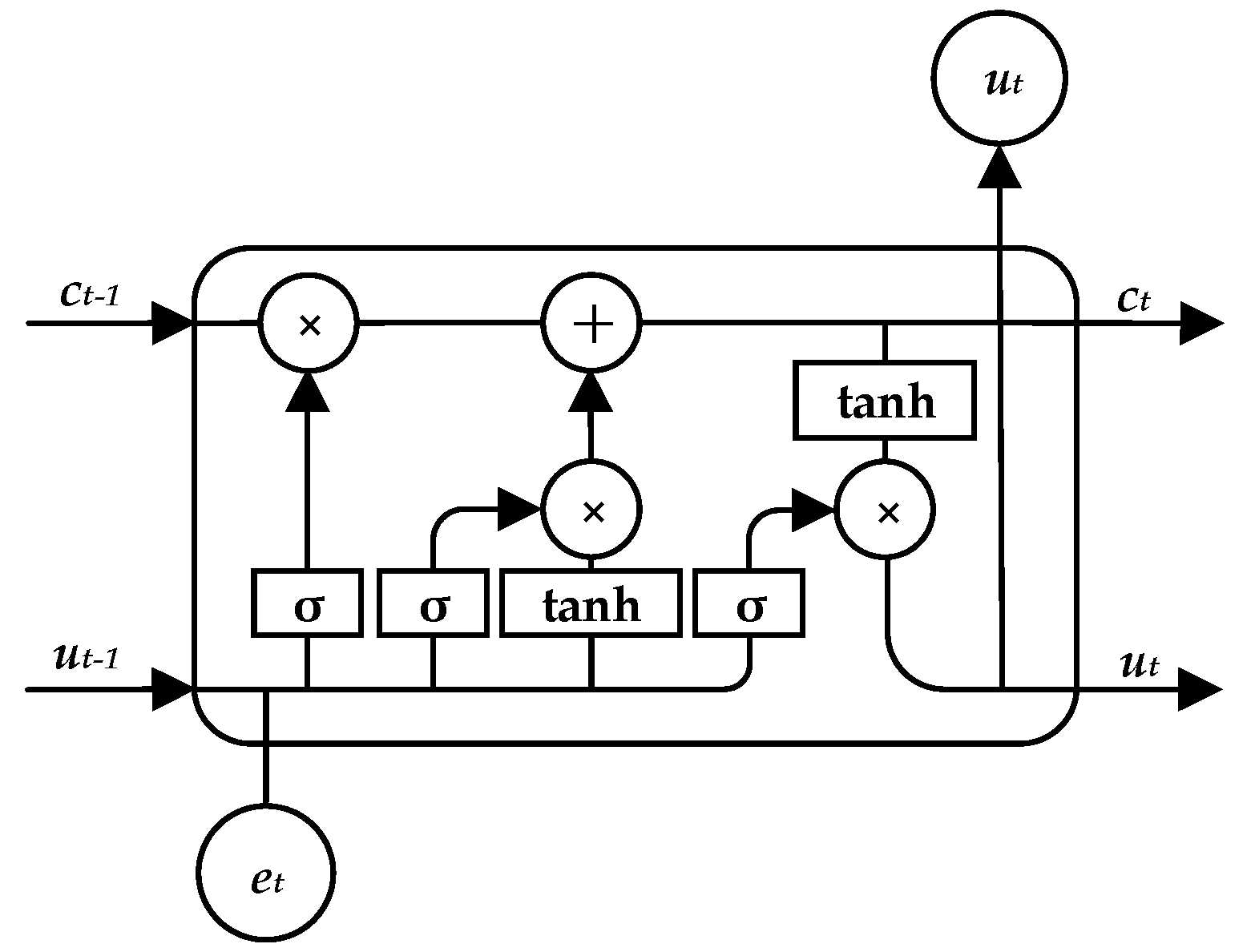

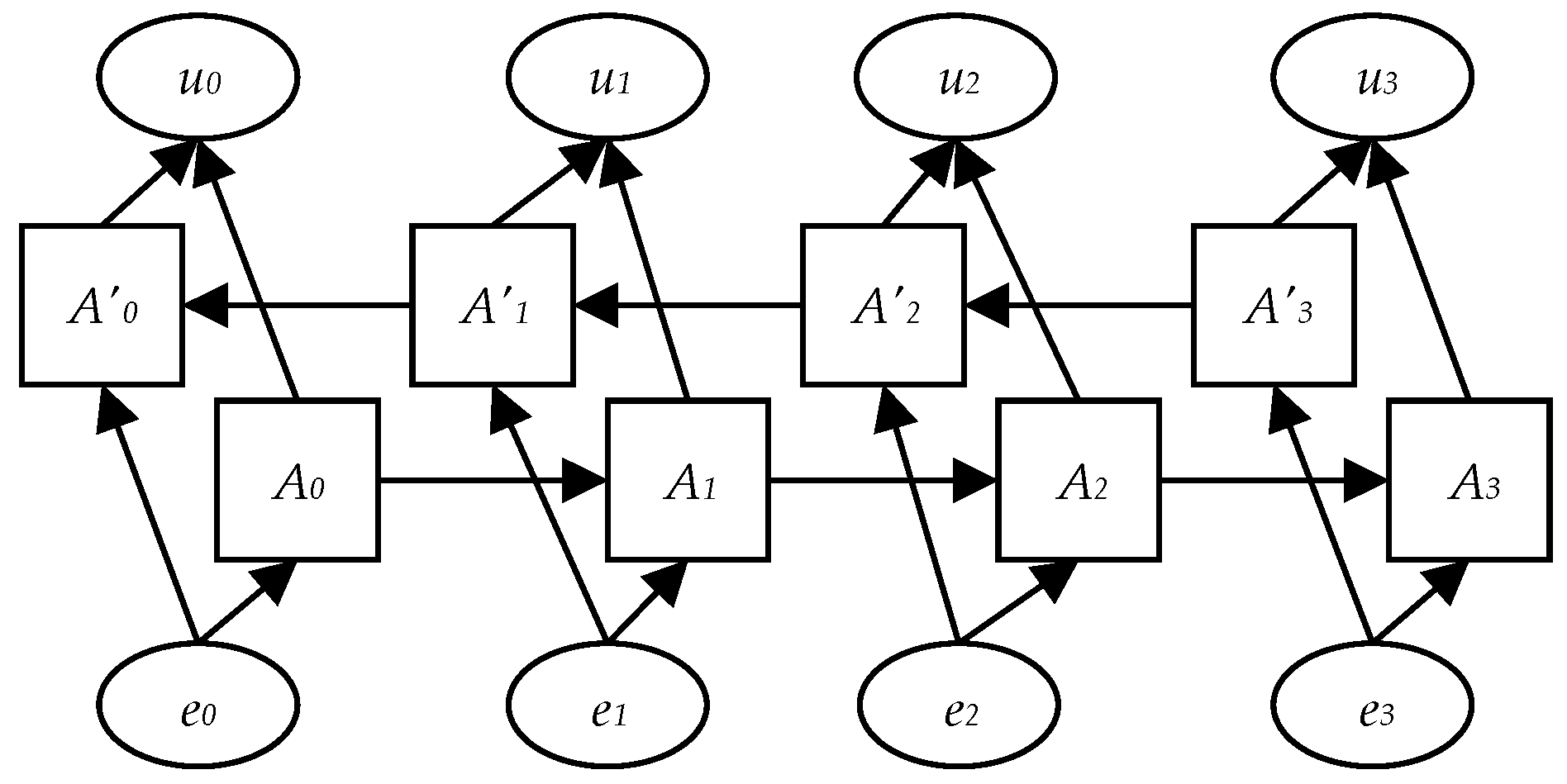

3.2.4. Global Feature Extraction

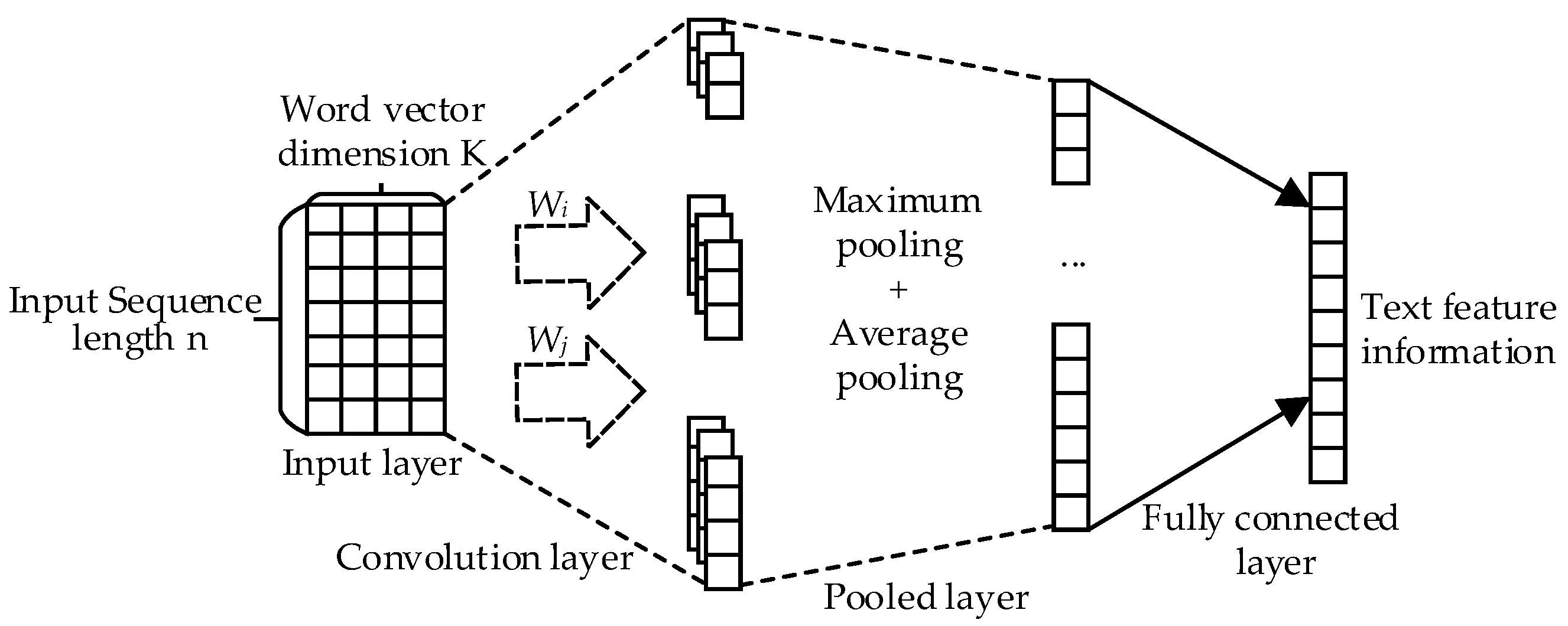

3.2.5. Local Feature Extraction

3.3. Feature Fusion Layer

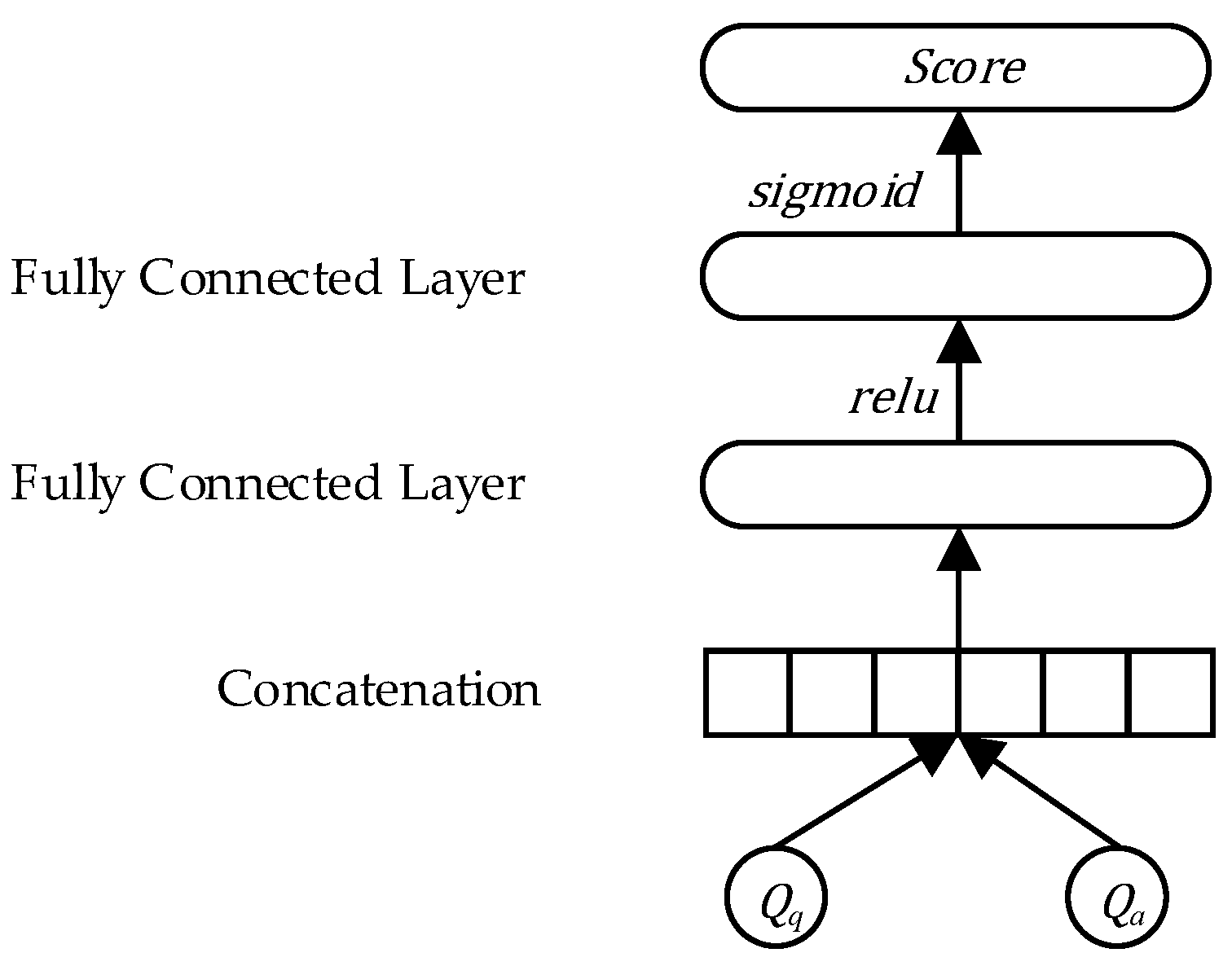

3.4. Text Matching Layer

4. Experiments and Result Analysis

4.1. Experimental Data

4.2. Evaluation Metrics

4.3. Parameter Setting

4.4. Comparative Experiments and Result Analysis

- (1) QACNN [33]: uses a CNN to learn distributed vector representations of questions and answers, and uses cosine similarity to measure whether they match;

- (2) QALSTM [6]: uses a BiLSTM to obtain distributed vector representations of questions and candidate answers, weights these representations by the correlation between question and answer, and uses cosine similarity to measure whether they match;

- (3) BERT [29]: uses the BERT model to generate contextual embedding vectors of the texts and measures whether they match by cosine similarity;

- (4) ERNIE [28]: uses the ERNIE model to obtain contextual embedding vectors of the texts and measures whether they match by cosine similarity;

- (5) DARCNN [12]: combines a BiLSTM, an attention mechanism, and a CNN to model the interaction between questions and candidate answers and to perform multi-dimensional semantic modeling; a multi-layer perceptron then predicts the matching score;

- (6) KAAS [16]: obtains domain-specific professional information through an external knowledge graph, encodes the text with word2vec, obtains the feature matrices of questions and answers with a BiLSTM, and finally computes the similarity score by cosine similarity.
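Most of the baselines above (QACNN, QALSTM, BERT, ERNIE, KAAS) share the same final step: encode the question and each candidate answer as vectors, then score each pair by cosine similarity. The sketch below illustrates only that scoring step, assuming the encodings already exist; the toy vectors and the helper names `cosine_similarity` and `rank_candidates` are hypothetical and stand in for whatever encoder each baseline uses.

```python
import math
from typing import List, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention chosen here: a zero vector matches nothing
    return dot / (norm_u * norm_v)


def rank_candidates(question_vec: Sequence[float],
                    answer_vecs: List[Sequence[float]]) -> List[int]:
    """Indices of candidate answers, ordered from most to least similar to the question."""
    scores = [cosine_similarity(question_vec, a) for a in answer_vecs]
    return sorted(range(len(answer_vecs)), key=lambda i: scores[i], reverse=True)


# Toy 3-dimensional "encodings" (hypothetical); in the baselines these would come
# from a CNN, BiLSTM, BERT, or ERNIE encoder.
question = [1.0, 0.0, 1.0]
answers = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0], [1.0, 1.0, 0.0]]
print(rank_candidates(question, answers))  # [0, 2, 1]
```

Because cosine similarity ignores vector length, two encodings that point in the same direction score 1.0 regardless of magnitude; whether a pair counts as a "match" then depends on a ranking or threshold rule that this section does not specify.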

4.5. Ablation Experiment and Result Analysis

4.6. Question-and-Answer Results Analysis

5. Discussion

6. Conclusions

- (1) Using ERNIE for word feature extraction partly compensates for the lack of prior knowledge in the text representations produced by BERT and similar models, and better preserves the professional terminology of the insurance domain;

- (2) Using pinyin character features as an additional semantic representation of the text helps address the problem of homophones (different characters with the same pronunciation) in the text;

- (3) Part-of-speech features allow the model to take into account the influence of keywords in the insurance corpus, which improves its feature representation ability;

- (4) By combining BiLSTM with TextCNN, our method captures both the contextual information and the local semantic information of the text, yielding better text representations and helping the model understand the semantics.
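Point (2) rests on a simple observation: a mistyped homophone changes the characters of a word but not its pronunciation, so a pinyin view of the text still agrees with the correctly spelled form. The sketch below illustrates that idea with the insurance term 理赔 (lǐpéi, "claim settlement") and the homophone typo 理陪. The three-entry `PINYIN` table and the `to_pinyin` helper are hypothetical stand-ins; a real system would use a complete character-to-pinyin dictionary, and this is not the authors' feature extractor.

```python
from typing import List

# Hypothetical, hand-written character-to-pinyin table (tones omitted).
# It holds just enough entries to illustrate the idea.
PINYIN = {"理": "li", "赔": "pei", "陪": "pei"}


def to_pinyin(text: str) -> List[str]:
    """Map each character to its pinyin syllable; unknown characters pass through unchanged."""
    return [PINYIN.get(ch, ch) for ch in text]


correct = "理赔"  # "claim settlement"
typo = "理陪"     # same pronunciation, wrong second character
print(correct == typo)                        # False: the character features differ
print(to_pinyin(correct) == to_pinyin(typo))  # True: the pinyin features agree
```

The pinyin sequence is used as an extra feature channel alongside the characters rather than a replacement for them, since collapsing homophones also merges words that genuinely differ in meaning.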

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Bian, Y. How the insurance industry can use artificial intelligence. China Informatiz. Wkly. 2021, 29, 15.

- Zhang, R.-G.; Wu, Y.-Y. Research on the development of modern insurance industry under the background of digital economy. Southwest Financ. 2022, 7, 91–102.

- Gomaa, W.H.; Fahmy, A.A. A survey of text similarity approaches. Int. J. Comput. Appl. 2013, 68, 13–18.

- Deng, Y.; Shen, Y.; Yang, M.; Li, Y.; Du, N.; Fan, W.; Lei, K. Knowledge as a bridge: Improving cross-domain answer selection with external knowledge. In Proceedings of the 27th International Conference on Computational Linguistics, Santa Fe, NM, USA, 20–26 August 2018; pp. 3295–3305.

- Han, H.; Choi, S.; Park, H.; Hwang, S.-W. Micron: Multigranular interaction for contextualizing representation in non-factoid question answering. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 5890–5895.

- Tan, M.; Dos Santos, C.; Xiang, B.; Zhou, B. Improved representation learning for question answer matching. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; Volume 1 (Long Papers), pp. 464–473.

- Rücklé, A.; Gurevych, I. Representation learning for answer selection with LSTM-based importance weighting. In Proceedings of the IWCS 2017: 12th International Conference on Computational Semantics, Short Papers, Montpellier, France, 19–22 September 2017.

- Bachrach, Y.; Zukov-Gregoric, A.; Coope, S.; Tovell, E.; Maksak, B.; Rodriguez, J.; Bordbar, M. An attention mechanism for neural answer selection using a combined global and local view. In Proceedings of the 2017 IEEE 29th International Conference on Tools with Artificial Intelligence (ICTAI), Boston, MA, USA, 6–8 November 2017; pp. 425–432.

- Zhao, D.W.; Du, Q. Research on insurance industry under the background of artificial intelligence. Financ. Theory Pract. 2020, 12, 91–100.

- Deng, Y.; Lam, W.; Xie, Y.; Chen, D.; Li, Y.; Yang, M.; Shen, Y. Joint learning of answer selection and answer summary generation in community question answering. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 7651–7658.

- Yuan, Y.; Chen, L. Answer Selection Using Multi-Layer Semantic Representation Learning. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Osaka, Japan, 28–31 March 2020; Volume 768, p. 072009.

- Bao, G.; Wei, Y.; Sun, X.; Zhang, H. Double attention recurrent convolution neural network for answer selection. R. Soc. Open Sci. 2020, 7, 191517.

- Ha, T.T.; Takasu, A.; Nguyen, T.C.; Nguyen, K.H.; Nguyen, V.N.; Nguyen, K.A.; Tran, S.G. Supervised attention for answer selection in community question answering. IAES Int. J. Artif. Intell. 2020, 9, 203–211.

- Mozafari, J.; Nematbakhsh, M.A.; Fatemi, A. Attention-based pairwise multi-perspective convolutional neural network for answer selection in question answering. arXiv 2019, arXiv:1909.01059.

- Zhang, X.; Li, S.; Sha, L.; Wang, H. Attentive interactive neural networks for answer selection in community question answering. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Jing, F.; Ren, H.; Cheng, W.; Wang, X.; Zhang, Q. Knowledge-enhanced attentive learning for answer selection in community question answering systems. Knowl. Based Syst. 2022, 250, 109117.

- Jagvaral, B.; Lee, W.-K.; Roh, J.-S.; Kim, M.-S.; Park, Y.-T. Path-based reasoning approach for knowledge graph completion using CNN-BiLSTM with attention mechanism. Expert Syst. Appl. 2020, 142, 112960.

- Pang, L.; Lan, Y.-Y.; Xu, J. A review of deep text matching. Chin. J. Comput. 2017, 40, 985–1003.

- Kalchbrenner, N.; Grefenstette, E.; Blunsom, P. A convolutional neural network for modelling sentences. arXiv 2014, arXiv:1404.2188.

- Kim, Y. Convolutional neural networks for sentence classification. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014; pp. 1746–1751.

- Li, J.; Jurafsky, D.; Hovy, E. When are tree structures necessary for deep learning of representations? In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics, Beijing, China, 26–31 July 2015; pp. 2304–2314.

- Lai, S.; Xu, L.; Liu, K. Recurrent convolutional neural networks for text classification. In Proceedings of the 29th AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; pp. 2267–2273.

- Bromley, J.; Bentz, J.-W.; Bottou, L. Signature verification using a “Siamese” time delay neural network. Int. J. Pattern Recognit. Artif. Intell. 1993, 7, 669–688.

- Yin, W.; Schütze, H. MultiGranCNN: An architecture for general matching of text chunks on multiple levels of granularity. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics, Beijing, China, 26–31 July 2015; ACL: Stroudsburg, PA, USA, 2015; pp. 63–73.

- Wan, S.; Lan, Y.; Guo, J. A deep architecture for semantic matching with multiple positional sentence representations. In Proceedings of the 30th AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; AAAI: Menlo Park, CA, USA, 2016; pp. 2835–2841.

- Hu, B.; Lu, Z.; Li, H. Convolutional neural network architectures for matching natural language sentences. In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; ACM: New York, NY, USA, 2014; pp. 2042–2050.

- Huang, P.-S.; He, X.; Gao, J. Learning deep structured semantic models for web search using clickthrough data. In Proceedings of the 22nd ACM International Conference on Information and Knowledge Management, San Francisco, CA, USA, 27 October–1 November 2013; ACM: New York, NY, USA, 2013; pp. 2333–2338.

- Sun, Y.; Wang, S.; Li, Y. ERNIE: Enhanced representation through knowledge integration. arXiv 2019, arXiv:1904.09223.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2019, arXiv:1810.04805.

- Yu, S.-W.; Duan, H.-M.; Zhu, X.-F. Basic Processing Specifications of Modern Chinese Corpus of Peking University; Peking University: Beijing, China, 2002; Volume 5, pp. 49–64.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Graves, A.; Schmidhuber, J. Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neural Netw. 2005, 18, 602–610.

- Feng, M.; Xiang, B.; Glass, M.-R. Applying deep learning to answer selection: A study and an open task. In Proceedings of the 2015 IEEE Workshop on Automatic Speech Recognition and Understanding (ASRU), Scottsdale, AZ, USA, 13–17 December 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 813–820.

| | Training Set | Validation Set | Test Set |
|---|---|---|---|
| Questions | 12,889 | 2000 | 2000 |
| Correct answers | 12,889 | 2000 | 2000 |
| Wrong answers | 128,890 | 20,000 | 20,000 |
| Maximum length of questions | 42 | 31 | 33 |
| Mean length of questions | 5 | 5 | 5 |
| Maximum length of answers | 878 | 878 | 878 |

| Question (q) | Answer (a) | Label |
|---|---|---|
| What does car insurance cover | It depends on the coverage you have. If you are comprehensive... | 1 |
| What does car insurance cover | Direct auto insurance is not a kind of auto insurance but buying... | 0 |
| What does car insurance cover | In the use of credit scoring and multivariate rating formulas appear... | 0 |
| What does car insurance cover | Personal car policy PAP usually includes premiums that include... | 0 |
| What does car insurance cover | There was less for a while but in many cases cars... | 0 |
| … | … | … |
| What does car insurance cover | If you asked to cover a stolen car, answer... | 0 |
| What does long-term health insurance cover | Good long-term care policies will cover home health care... | 1 |
| What does long-term health insurance cover | It’s hard to say how many people have... | 0 |
| … | … | … |
| Whether the home insurance covers the apartment fee | Apartment owners have a special form of homeowner’s insurance that mostly... | 0 |
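The statistics table implies that every question is paired with exactly one correct answer and ten wrong answers (for example, 128,890 / 12,889 = 10 in the training set), and the sample table shows the resulting (q, a, Label) rows. The sketch below shows one way such rows could be produced. It assumes, without support from the source, that wrong answers are sampled uniformly at random from a pool of other answers; the helper `build_pairs` and the answer pool are hypothetical.

```python
import random
from typing import List, Tuple


def build_pairs(question: str, correct: str, answer_pool: List[str],
                num_negatives: int = 10, seed: int = 0) -> List[Tuple[str, str, int]]:
    """Return one (q, a, 1) positive row followed by num_negatives (q, a, 0) rows.

    Assumption: wrong answers are drawn uniformly at random from answer_pool;
    the source does not state how its negatives were selected.
    """
    candidates = [a for a in answer_pool if a != correct]
    if len(candidates) < num_negatives:
        raise ValueError("answer pool is too small for the requested number of negatives")
    rng = random.Random(seed)  # seeded so the sampled negatives are reproducible
    negatives = rng.sample(candidates, num_negatives)  # sampling without replacement
    pairs = [(question, correct, 1)]
    pairs.extend((question, neg, 0) for neg in negatives)
    return pairs


pool = ["answer %d" % i for i in range(50)]  # hypothetical pool of candidate answers
pairs = build_pairs("What does car insurance cover", "answer 0", pool)
print(len(pairs))                           # 11
print(sum(label for _, _, label in pairs))  # 1
```

With one positive per ten negatives, the labels are heavily imbalanced, which is worth keeping in mind when reading accuracy-style metrics on this data.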

| Development Environment | Configuration |
|---|---|
| CPU | Intel(R) Core(TM) i7-11700F @ 2.5 GHz |
| Video card | NVIDIA GeForce RTX 3060 Ti |
| Operating system | Ubuntu 20 |
| Development tool | PyCharm |
| Programming language | Python 3.6 |
| Development framework | PyTorch 1.1 |

| Model | Acc | R | F1 |
|---|---|---|---|
| QACNN | 68.76% | 70.24% | 69.48% |
| QALSTM | 70.52% | 71.56% | 71.03% |
| BERT | 73.54% | 73.23% | 72.80% |
| ERNIE | 75.69% | 75.69% | 75.50% |
| DARCNN | 76.21% | 76.43% | 76.42% |
| KAAS | 77.82% | 77.80% | 77.81% |
| MFBT | 78.34% | 78.90% | 78.72% |
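The comparison table reports Acc, R, and F1, but their exact definitions (for example, whether scores are macro-averaged over the two classes) are not given in this section. As a reference point only, the sketch below computes accuracy, precision, recall, and F1 (the harmonic mean of precision and recall) from binary gold and predicted labels on question-answer pairs, assuming label 1 (correct answer) is the positive class. The function name `binary_metrics` is hypothetical, and the sketch is not claimed to reproduce the paper's numbers.

```python
from typing import Dict, Sequence


def binary_metrics(gold: Sequence[int], pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 with label 1 as the positive class."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must have the same length")
    tp = sum(1 for g, p in zip(gold, pred) if g == 1 and p == 1)  # true positives
    fp = sum(1 for g, p in zip(gold, pred) if g == 0 and p == 1)  # false positives
    fn = sum(1 for g, p in zip(gold, pred) if g == 1 and p == 0)  # false negatives
    correct = sum(1 for g, p in zip(gold, pred) if g == p)
    accuracy = correct / len(gold) if gold else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


print(binary_metrics([1, 0, 0, 1, 0], [1, 0, 1, 0, 0]))
# {'accuracy': 0.6, 'precision': 0.5, 'recall': 0.5, 'f1': 0.5}
```

Because the data contain ten wrong answers per correct one, a model that always predicts 0 would already score about 91% accuracy with zero recall, which is one reason recall and F1 are reported alongside accuracy.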

| Model | Acc | R | F1 |
|---|---|---|---|
| Method 1 | 77.27% | 77.27% | 77.27% |
| Method 2 | 77.78% | 77.75% | 77.76% |
| Method 3 | 77.40% | 77.40% | 77.40% |
| Method 4 | 68.04% | 68.12% | 68.08% |
| Method 5 | 70.14% | 70.06% | 70.10% |
| Method 6 | 76.98% | 77.52% | 77.25% |
| MFBT | 78.34% | 78.90% | 78.72% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Z.; Yang, X.; Zhou, L.; Jia, H.; Li, W. Text Matching in Insurance Question-Answering Community Based on an Integrated BiLSTM-TextCNN Model Fusing Multi-Feature. Entropy 2023, 25, 639. https://doi.org/10.3390/e25040639

Li Z, Yang X, Zhou L, Jia H, Li W. Text Matching in Insurance Question-Answering Community Based on an Integrated BiLSTM-TextCNN Model Fusing Multi-Feature. Entropy. 2023; 25(4):639. https://doi.org/10.3390/e25040639

Chicago/Turabian Style: Li, Zhaohui, Xueru Yang, Luli Zhou, Hongyu Jia, and Wenli Li. 2023. "Text Matching in Insurance Question-Answering Community Based on an Integrated BiLSTM-TextCNN Model Fusing Multi-Feature" Entropy 25, no. 4: 639. https://doi.org/10.3390/e25040639

APA Style: Li, Z., Yang, X., Zhou, L., Jia, H., & Li, W. (2023). Text Matching in Insurance Question-Answering Community Based on an Integrated BiLSTM-TextCNN Model Fusing Multi-Feature. Entropy, 25(4), 639. https://doi.org/10.3390/e25040639