Quantum Computing Approaches for Vector Quantization—Current Perspectives and Developments

Abstract

1. Introduction

2. Vector Quantization

2.1. Unsupervised Vector Quantization

2.1.1. Updates Using Vector Shifts

2.1.2. Median Adaptation

2.1.3. Unsupervised Vector Quantization as a Set-Cover Problem Using ε-Balls

2.1.4. Vector Quantization by Means of Associative Memory Networks

2.2. Supervised Vector Quantization for Classification Learning

2.2.1. Updates Using Vector Shifts

2.2.2. Median Adaptation

2.2.3. Supervised Vector Quantization as a Set-Cover Problem Using ε-Balls

2.2.4. Supervised Vector Quantization by Means of Associative Memory Networks

3. Quantum Computing—General Remarks

3.1. Levels of Quantum Computing

3.2. Paradigms of Quantum Computing

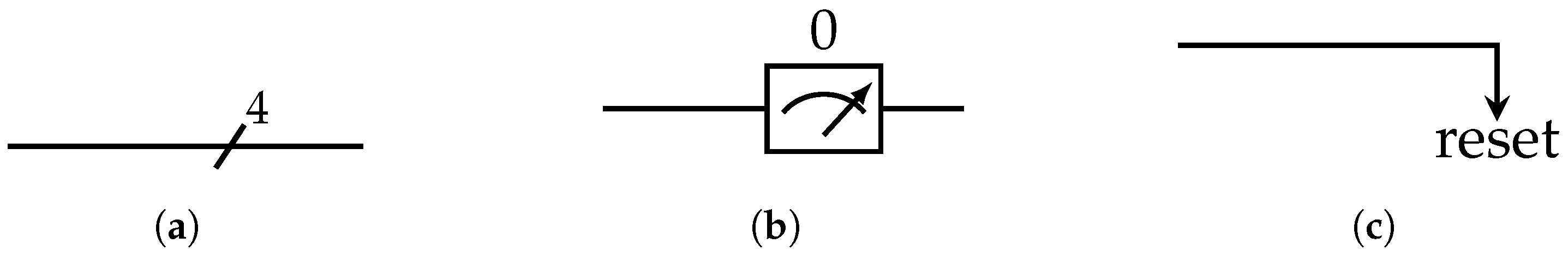

3.2.1. Gate-Based Quantum Computing and Data Encoding

Basis Encoding

Amplitude Encoding

Gate-Based Quantum Paradigm

3.2.2. Adiabatic Quantum Computing and Problem Hamiltonians

3.2.3. QUBO, Ising Model, and Hopfield Network

3.3. State-of-the-Art of Practical Quantum Experiments

4. Quantum Approaches for Vector Quantization

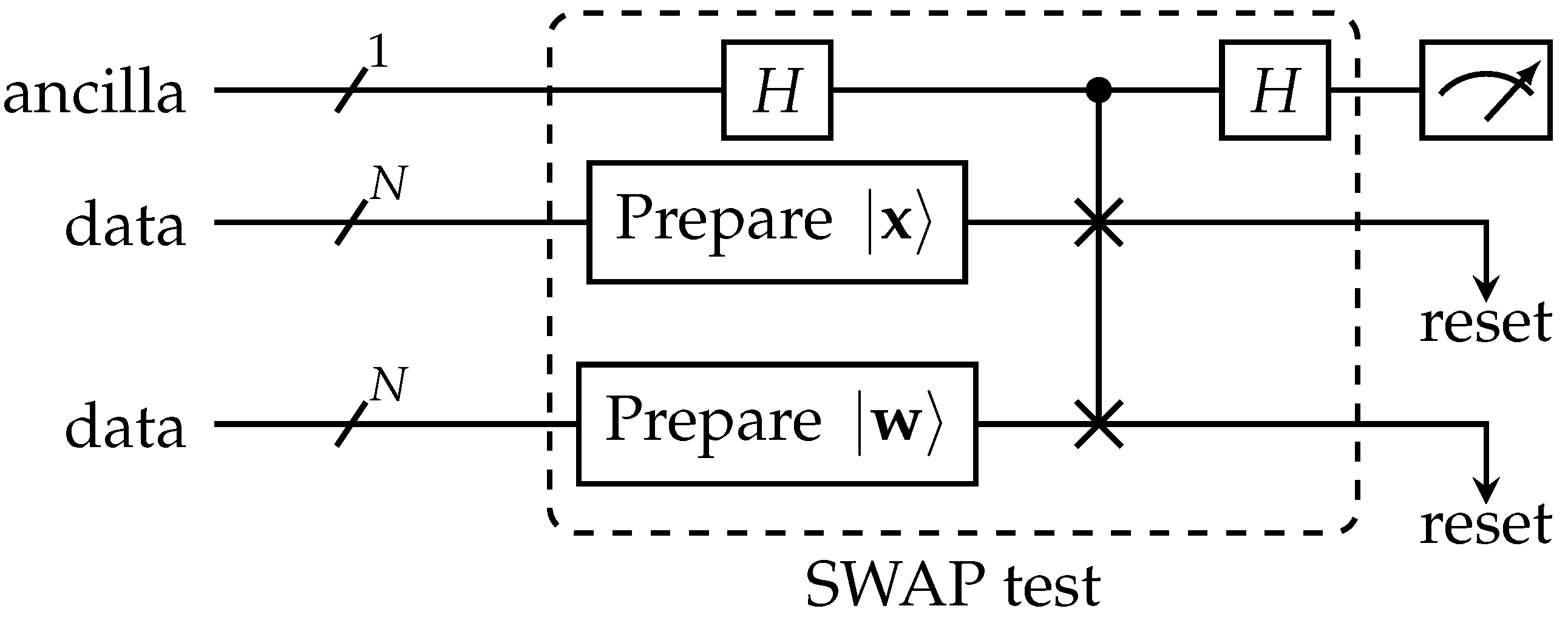

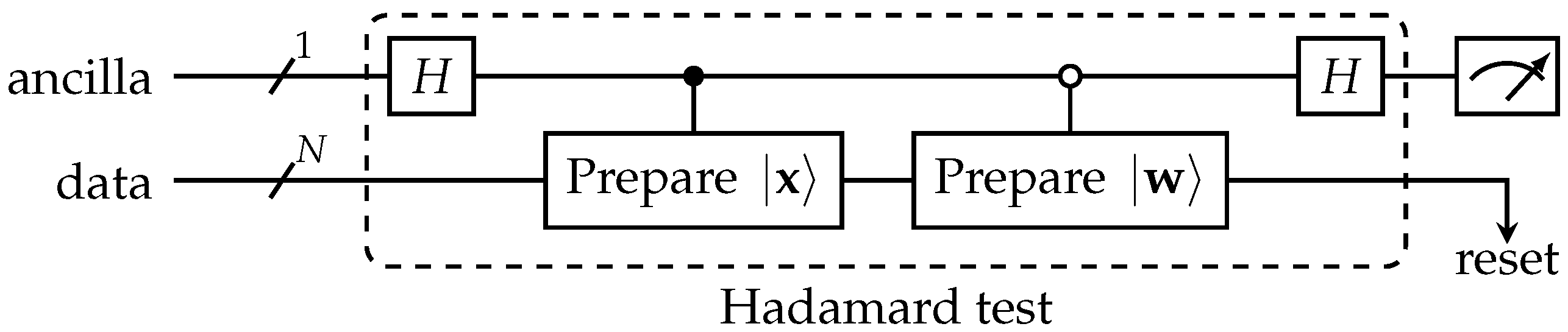

4.1. Dissimilarities

4.2. Winner Determination

4.3. Updates Using Vector Shift

4.4. Median Adaptation

4.5. Vector Quantization as Set-Cover Problem

4.6. Vector Quantization by Means of Associative Memory

4.7. Solving QUBO with Quantum Devices

- Solve QUBO with AQC

- Solve QUBO with Gate-Based Computing

- Solve QUBO with Photonic Devices

4.8. Further Aspects—Practical Limitations

- Impact of Coding

- Impact of Theoretical Approximation Boundaries and Constraints

- Impact of Noisy Circuit Execution

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| AQC | Adiabatic Quantum Computing |
| HN | Hopfield Network |
| VQ | Vector Quantization |
| LVQ | Learning Vector Quantization |
| QUBO | Quadratic Unconstrained Binary Optimization |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Engelsberger, A.; Villmann, T. Quantum Computing Approaches for Vector Quantization—Current Perspectives and Developments. Entropy 2023, 25, 540. https://doi.org/10.3390/e25030540
