Jensen–Inaccuracy Information Measure

Abstract

1. Introduction

2. The Jensen–Inaccuracy Measure
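As a minimal sketch of the quantity this section is named after, the snippet below works with discrete distributions and Kerridge's inaccuracy, K(f, g) = -Σ f(x) log g(x). The form used for the Jensen–inaccuracy measure (the equally weighted average of K(f, f1) and K(f, f2) minus the inaccuracy of f against the arithmetic mixture of f1 and f2) is an assumption made for illustration and may differ from the paper's exact definition. The function names and the example vectors are hypothetical.

```python
import math


def inaccuracy(f, g):
    """Kerridge inaccuracy K(f, g) = -sum_x f(x) * log g(x).

    Assumes f and g are discrete distributions on the same support
    and that g(x) > 0 wherever f(x) > 0.
    """
    return -sum(fx * math.log(gx) for fx, gx in zip(f, g) if fx > 0)


def jensen_inaccuracy(f, f1, f2):
    """ASSUMED form of the Jensen-inaccuracy measure (illustrative only):

    0.5 * K(f, f1) + 0.5 * K(f, f2) - K(f, (f1 + f2) / 2)
    """
    mixture = [(a + b) / 2 for a, b in zip(f1, f2)]
    return (0.5 * inaccuracy(f, f1)
            + 0.5 * inaccuracy(f, f2)
            - inaccuracy(f, mixture))


# Hypothetical example distributions on three points.
f = [0.5, 0.3, 0.2]
f1 = [0.4, 0.4, 0.2]
f2 = [0.2, 0.3, 0.5]

jk = jensen_inaccuracy(f, f1, f2)
print(jk)
```

Under this assumed form the value is non-negative, because log is concave and so the inaccuracy against the mixture cannot exceed the average of the two individual inaccuracies; it is zero when f1 and f2 coincide.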

2.1. The Jensen–Inaccuracy Measure and its Connection to Kullback–Leibler Divergence
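One standard link between inaccuracy and Kullback–Leibler divergence is the decomposition K(f, g) = H(f) + KL(f || g), where H is the Shannon entropy. The sketch below checks this identity numerically for discrete distributions; it illustrates only this well-known relation, not the specific results of this subsection, and the function names and example vectors are hypothetical.

```python
import math


def entropy(f):
    """Shannon entropy H(f) = -sum_x f(x) * log f(x)."""
    return -sum(p * math.log(p) for p in f if p > 0)


def kl_divergence(f, g):
    """Kullback-Leibler divergence KL(f || g) = sum_x f(x) * log(f(x) / g(x)).

    Assumes g(x) > 0 wherever f(x) > 0.
    """
    return sum(p * math.log(p / q) for p, q in zip(f, g) if p > 0)


def inaccuracy(f, g):
    """Kerridge inaccuracy K(f, g) = -sum_x f(x) * log g(x)."""
    return -sum(p * math.log(q) for p, q in zip(f, g) if p > 0)


# Hypothetical example distributions on three points.
f = [0.5, 0.3, 0.2]
g = [0.4, 0.4, 0.2]

lhs = inaccuracy(f, g)
rhs = entropy(f) + kl_divergence(f, g)
print(lhs, rhs)  # the two values agree up to floating-point error
```

Since KL(f || g) is non-negative, the identity also shows that the inaccuracy is never smaller than the entropy of f, with equality when g equals f.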

2.2. Connection between Jensen–Inaccuracy and Arithmetic–Geometric Divergence Measures

2.3. The -Jensen–Inaccuracy Measure

3. Inaccuracy Information Measure of the Escort and Generalized Escort Distributions

- (i)

- (ii)

4. Inaccuracy Measure Based on Average Entropy

5. Optimal Information Model under Inaccuracy Information Measure

6. Application

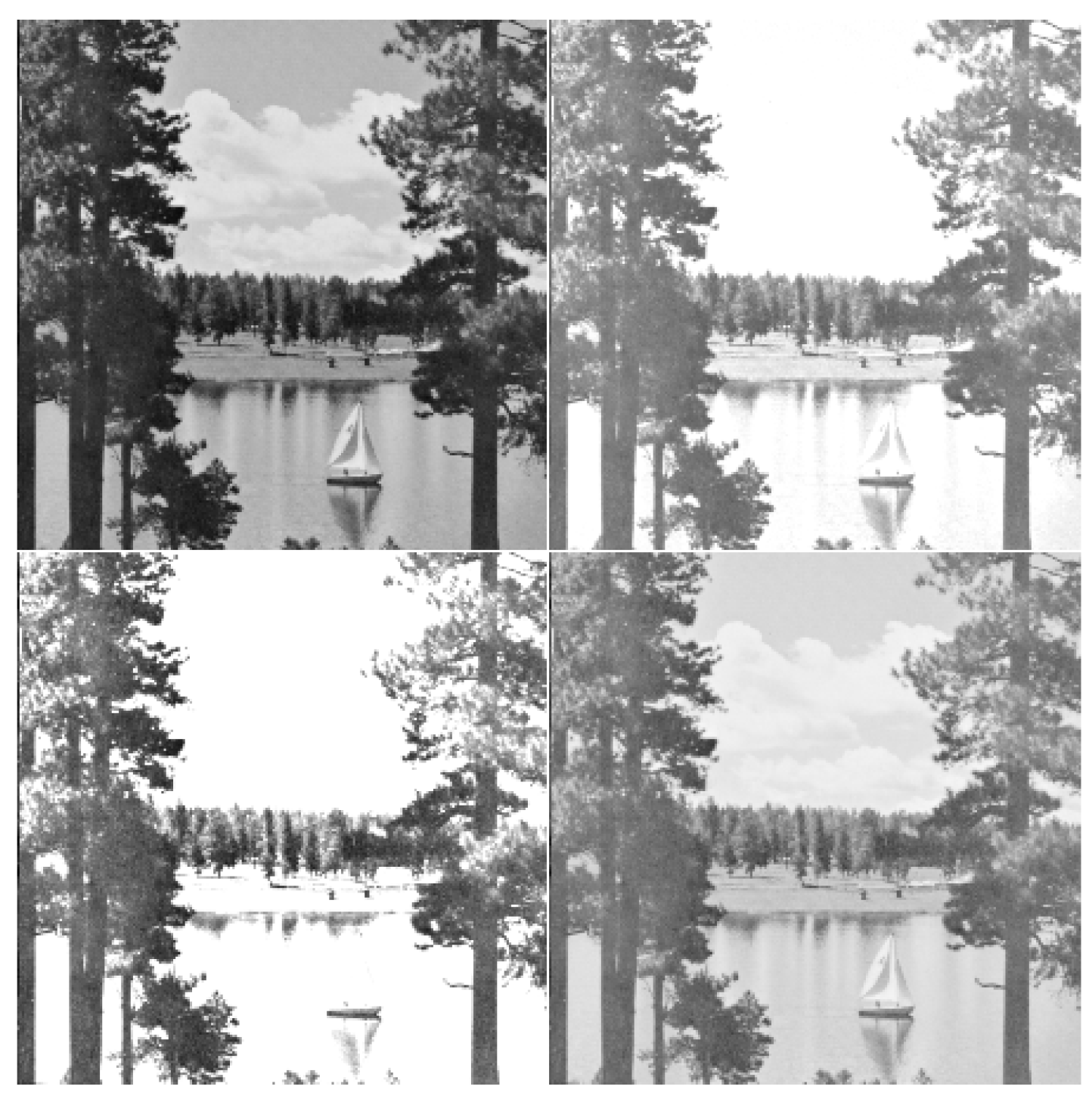

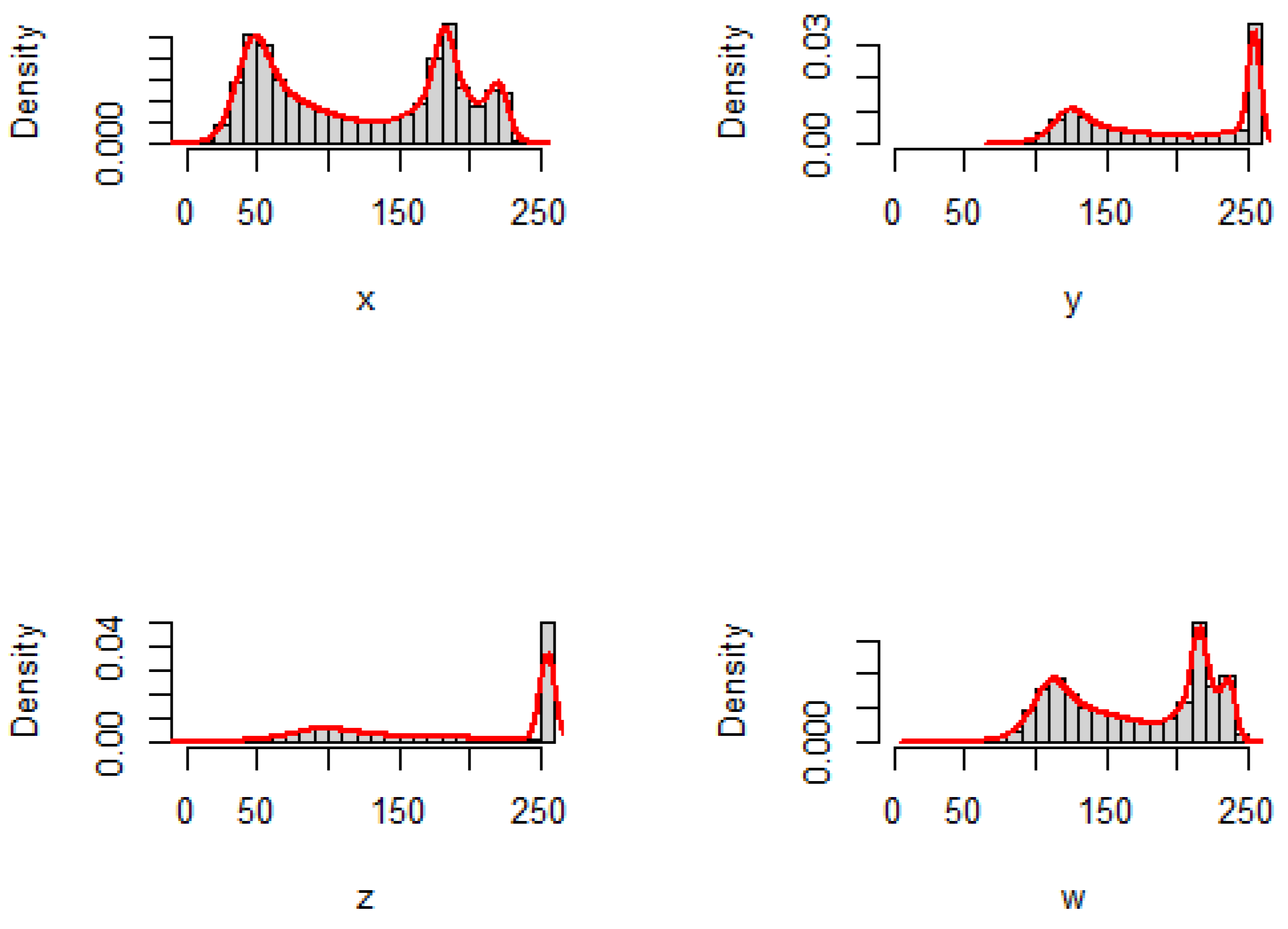

6.1. Image and Histogram

6.2. Non-Parametric Jensen–Inaccuracy Estimation

- Cameraman image

- Lake image

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Symbol | Description |
|---|---|
| | Kullback–Leibler divergence between f and g |
| | inaccuracy measure between f and g |
| | probability density function |
| JII | Jensen–inaccuracy information measure |
| | Jensen–inaccuracy measure between and with respect to f |
| | extended Jensen–inaccuracy measure based on density functions |
| | arithmetic–geometric divergence measure |
| | extended arithmetic–geometric divergence measure |
| | -Jensen–inaccuracy measure between and with respect to f |
| | Rényi entropy of order |
| | relative Rényi entropy of order |
| | average entropy associated with f |
| | average inaccuracy measure |

| Cameraman Image: Inaccuracy | Cameraman Image: Jensen–Inaccuracy | Lake Image: Inaccuracy | Lake Image: Jensen–Inaccuracy |
|---|---|---|---|
| 8.6142 | 0.9674 | 10.7143 | 1.9235 |
| 7.3654 | 0.5707 | 6.5744 | 1.2558 |
| 9.2097 | 1.4315 | 7.4086 | 0.5131 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kharazmi, O.; Shirazinia, F.; Buono, F.; Longobardi, M. Jensen–Inaccuracy Information Measure. Entropy 2023, 25, 483. https://doi.org/10.3390/e25030483
