Abstract

Inference from limited data requires a notion of measure on parameter space, which is most explicit in the Bayesian framework as a prior distribution. Jeffreys prior is the best-known uninformative choice, the invariant volume element from information geometry, but we demonstrate here that this leads to enormous bias in typical high-dimensional models. This is because models found in science typically have an effective dimensionality of accessible behaviors much smaller than the number of microscopic parameters. Any measure which treats all of these parameters equally is far from uniform when projected onto the sub-space of relevant parameters, due to variations in the local co-volume of irrelevant directions. We present results on a principled choice of measure which avoids this issue and leads to unbiased posteriors by focusing on relevant parameters. This optimal prior depends on the quantity of data to be gathered, and approaches Jeffreys prior in the asymptotic limit. However, for typical models, this limit cannot be justified without an impossibly large increase in the quantity of data, exponential in the number of microscopic parameters.

1. Introduction

No experiment fixes a model’s parameters perfectly. Every approach to propagating the resulting uncertainty must, explicitly or implicitly, assume a measure of the space of possible parameter values. A badly chosen measure can introduce bias, and we argue here that avoiding such bias is equivalent to the very natural goal of assigning equal weight to each distinguishable outcome. However, this goal is seldom reached, either because no attempt is made, or because the problem is simplified by prematurely assuming the asymptotic limit of nearly infinite data. We demonstrate here that this assumption can lead to a large bias in what we infer from the parameters, in models with features typical of many-parameter mechanistic models found in science. We propose a score for such bias, and advocate for using a measure that makes this zero. Such a measure allows for unbiased inference without the need to first simplify the model to just the right degree of complexity. Instead, weight is automatically spread according to a lower effective dimensionality, ignoring details irrelevant to visible outcomes.

We consider models which predict a probability distribution $p(x|\theta)$ for observing data x given parameters $\theta$. The degree of overlap between two such distributions indicates how difficult it is to distinguish the two parameter points, which gives a notion of distance on parameter space. The simplifying idea of information geometry is to focus on infinitesimally close parameter points, for which there is a natural Riemannian metric, the Fisher information [1,2]. This may be thought of as having units of standard deviations, so that along a line of integrated length L there are about L distinguishable points, and thus any parameter which can be measured to a few digits of precision has length $L \gg 1$. It is a striking empirical feature of models in science that most have a few such long (or relevant) parameter directions, followed by many more short (or irrelevant) orthogonal directions [3,4,5,6]. The irrelevant lengths, all $L < 1$, show a characteristic spectrum of being roughly evenly spaced on a log scale, often over many decades. As a result, much of the geometry of this Riemannian model manifold consists of features much smaller than 1, far too small to observe. However, the natural intrinsic volume measure, which follows from the Fisher metric, is sensitive to all of these unobservable dimensions, and as we demonstrate here, they cause this measure to introduce enormous bias.

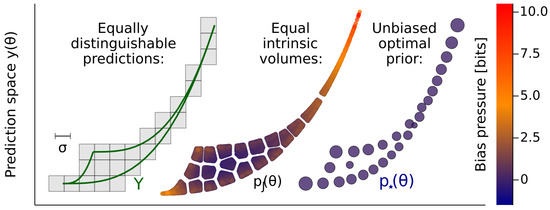

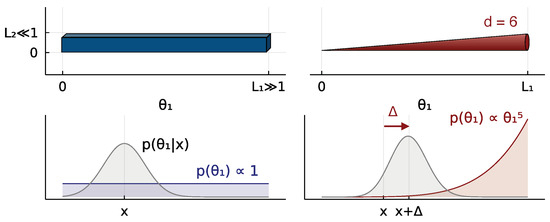

To avoid this problem, we need a measure tied to the Fisher length scale $L \approx 1$, instead of one from the continuum. Locally, this length scale partitions dimensions into relevant and irrelevant, which in turn approximately factorizes the volume element into a relevant part and what we term the irrelevant co-volume. The wild variations of this co-volume are the source of the bias we describe, and it is rational to ignore them. As we illustrate in Figure 1 for a simple two-parameter model, equally distinguishable predictions do not correspond to equal intrinsic volumes, and this failure is detected by a score we call bias pressure. The measure for which this score is everywhere zero, by contrast, captures relevant distinguishability and ignores the very thin irrelevant direction. The same measure is also obtained by maximizing the information learned about parameter $\theta$ from seeing data x [7,8,9], or equivalently from a particular minimax game [10,11,12]. Since $p_\star(\theta)$ is usually discrete [9,13,14,15,16,17,18], it can be seen as implementing a length cutoff, replacing the smooth differential-geometric view of the model manifold with something quantized [19].

Figure 1.

The natural volume is a biased measure for the space of distinguishable outcomes. The left panel outlines the space of possible predictions Y; the observed x is deterministic $y(\theta)$ plus measurement noise. With the scale of the noise as shown, the upper half is effectively one-dimensional. The center panel shows a sample from the volume measure $p_J(\theta)$, divided into blocks of equal weight. These are strongly influenced by the unobservable thickness of the upper portion. Points are colored by bias pressure $b(\theta)$, which we define in Equation (5). The right panel shows the explicitly unbiased optimal measure $p_\star(\theta)$, which gradually adjusts from two- to one-dimensional behavior (The model is Equation (6) with , , and , observed at times each with Gaussian noise ).

In the Bayesian framework, the natural continuous volume measure is known as Jeffreys prior, and is the canonical example of an uninformative prior: a principled, ostensibly neutral choice. It was first derived based on invariance considerations [20], and can also be justified by information- or game-theoretic ideas, provided these are applied in the limit of infinitely many repetitions [7,8,17,21,22]. This asymptotic limit often looks like a technical trick to simplify derivations. However, in realistic models, this limit is very far from being justified, exponentially far in the number of parameters, often requiring an experiment to be repeated for longer than the age of the universe. We demonstrate here that using the prior derived in this limit introduces a large bias in such models. Furthermore, we argue that such bias, and not only computational difficulties, has prevented the wide use of uninformative priors.

The promise of principled ways of tracking uncertainty, Bayesian or otherwise, is to free us from the need to select a model with precisely the right degree of complexity. This idea is often encountered in the context of overfitting, where the maximum likelihood point of an overly complex model gives worse predictions. The bias discussed here is a distinct way for overly complex models to give bad predictions. We begin with toy models in which the number of parameters can be easily adjusted. However, in the real models of interest, we cannot trivially tune the number of parameters. This is why we wish to find principled methods which are not fooled by the presence of many irrelevant parameters.

2. Results

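We consider a model to be characterized by the likelihood $p(x|\theta)$ of observing data $x \in X$ when the parameters are $\theta \in \Theta$. In such a model, the Fisher information metric (FIM) measures the distinguishability of nearby points in parameter space as a distance $ds^2 = \sum_{\mu,\nu=1}^{d} g_{\mu\nu}(\theta)\, d\theta_\mu\, d\theta_\nu$, where

$$g_{\mu\nu}(\theta) = -\int dx\; p(x|\theta)\, \frac{\partial^2 \log p(x|\theta)}{\partial\theta_\mu\, \partial\theta_\nu}.$$

For definiteness, we may take points separated along a geodesic by a distance $L > 1$ to be distinguishable. Intuitively, though incorrectly, the d-dimensional volume implied by the FIM might be thought to correspond to the total number of distinguishable parameter values inferable from an experiment:

$$Z = \int d\theta\, \sqrt{\det g(\theta)}.$$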

However, this counting makes a subtle assumption that all structure in the model has a scale much larger than 1. When many dimensions are smaller than 1, their lengths weigh the effective volume along the larger dimensions, despite having no influence on distinguishability.

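The same effect applies to the normalized measure, Jeffreys prior:

$$p_J(\theta) = \frac{1}{Z} \sqrt{\det g(\theta)}. \tag{2}$$

This measure’s dependence on the irrelevant co-volume is an under-appreciated source of bias in posteriors derived from this prior. The effect is most clearly seen when the FIM is block-diagonal, $g = g_{\text{rel}} \oplus g_{\text{irrel}}$. Then the volume form factorizes exactly, and the relevant effective measure is the $\sqrt{\det g_{\text{rel}}}$ factor times $V_{\text{irrel}}(\theta_{\text{rel}}) = \int d\theta_{\text{irrel}} \sqrt{\det g_{\text{irrel}}}$, an integral over the irrelevant dimensions.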
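As a worked step, the block-diagonal case can be written out explicitly. This is a sketch under the assumption, made here for illustration, that $g_{\text{rel}}$ depends only on the relevant coordinates $\theta_{\text{rel}}$, while $g_{\text{irrel}}$ may depend on all coordinates; $V_{\text{irrel}}$ denotes the irrelevant co-volume.

$$
\begin{aligned}
\sqrt{\det g(\theta)} &= \sqrt{\det g_{\text{rel}}(\theta_{\text{rel}})}\;\sqrt{\det g_{\text{irrel}}(\theta_{\text{rel}}, \theta_{\text{irrel}})}, \\
p_J(\theta_{\text{rel}}) &= \int d\theta_{\text{irrel}}\; p_J(\theta) = \frac{1}{Z}\,\sqrt{\det g_{\text{rel}}(\theta_{\text{rel}})}\;\, V_{\text{irrel}}(\theta_{\text{rel}}), \\
V_{\text{irrel}}(\theta_{\text{rel}}) &= \int d\theta_{\text{irrel}}\, \sqrt{\det g_{\text{irrel}}(\theta_{\text{rel}}, \theta_{\text{irrel}})}.
\end{aligned}
$$

If $V_{\text{irrel}}$ were constant, the marginal would reduce to the volume element of the relevant directions alone. Any variation of $V_{\text{irrel}}$ with $\theta_{\text{rel}}$ reweights the relevant directions, even when every irrelevant length is far below 1.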

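A more principled measure of the (log of the) number of distinguishable outcomes is the mutual information between parameters and data, $I(X;\Theta)$:

$$I(X;\Theta) = \int d\theta\; p(\theta)\, D_{\mathrm{KL}}\big[\, p(x|\theta) \,\big\|\, p(x) \,\big]$$

where $D_{\mathrm{KL}}$ is the Kullback–Leibler divergence between two probability distributions, which are not necessarily close: $p(x) = \int d\theta\; p(\theta)\, p(x|\theta)$ is typically much broader than $p(x|\theta)$. Unlike the volume Z, the mutual information depends on the prior $p(\theta)$. Past work, both by ourselves and others, has advocated for using the prior which maximizes this mutual information, with [8] or without [9] taking the asymptotic limit:

$$p_\star(\theta) = \underset{p(\theta)}{\operatorname{argmax}}\; I(X;\Theta).$$

The same prior arises from a minimax game in which you choose a prior, your opponent chooses the true $\theta$, and you lose the (large) KL divergence [10,11,12]:

$$p_\star(\theta) = \underset{p(\theta)}{\operatorname{argmin}}\; \max_{\theta}\; D_{\mathrm{KL}}\big[\, p(x|\theta) \,\big\|\, p(x) \,\big].$$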

Here we stress a third perspective, defining a quantity we call bias pressure which captures how strongly the prior disfavors predictions from a given point:

$$b(\theta) = \left.\frac{\delta I(X;\Theta)}{\delta p(\theta)}\right|_{\int d\theta\, p(\theta) = 1} = D_{\mathrm{KL}}\big[\, p(x|\theta) \,\big\|\, p(x) \,\big] - I(X;\Theta). \tag{5}$$

The optimal $p_\star(\theta)$ has $b(\theta) = 0$ on its support, and can be found by minimizing $B = \max_\theta b(\theta)$. Other priors have $b(\theta) > 0$ at some points, indicating that $I(X;\Theta)$ can be increased by moving weight there (and away from points where $b(\theta) < 0$). We demonstrate below that $b(\theta)$ deserves to be called a bias, as it relates to large deviations of the posterior center of mass. We do this by presenting a number of toy models, chosen to have information geometry similar to that typically found in mechanistic models from many scientific fields [5].
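To make the score concrete, the following minimal sketch evaluates it for a discrete prior on a coin-flip (binomial) model. It assumes the bias pressure takes the form $b(\theta) = D_{\mathrm{KL}}[p(x|\theta)\|p(x)] - I(X;\Theta)$, that is, the KL divergence at a point minus its prior average. The function names and the binomial example are illustrative choices made here, not the code used for the figures.

```python
import math

def likelihood(theta, n):
    """Binomial p(x|theta) for x = 0..n successes in n Bernoulli trials."""
    return [math.comb(n, x) * theta**x * (1 - theta) ** (n - x) for x in range(n + 1)]

def kl(p, q):
    """D_KL[p || q] in nats for discrete distributions (inf if q lacks support)."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi > 0:
            if qi == 0:
                return math.inf
            total += pi * math.log(pi / qi)
    return total

def prior_scores(atoms, weights, n):
    """Return (I, p_x): mutual information and marginal p(x) for a discrete prior."""
    liks = [likelihood(th, n) for th in atoms]
    p_x = [sum(w * lik[x] for w, lik in zip(weights, liks)) for x in range(n + 1)]
    info = sum(w * kl(lik, p_x) for w, lik in zip(weights, liks))
    return info, p_x

def bias_pressure(theta, info, p_x, n):
    """b(theta) = D_KL[p(x|theta) || p(x)] - I(X;Theta), the form assumed here."""
    return kl(likelihood(theta, n), p_x) - info

# One coin flip: two atoms at the ends of [0, 1] with equal weight.
info, p_x = prior_scores([0.0, 1.0], [0.5, 0.5], n=1)
b_on_support = [bias_pressure(th, info, p_x, 1) for th in (0.0, 1.0)]
b_interior = bias_pressure(0.5, info, p_x, 1)

# A poor prior: all weight on theta = 0.5 learns nothing, and b > 0 at the ends.
info_bad, p_x_bad = prior_scores([0.5], [1.0], n=1)
b_bad_end = bias_pressure(0.0, info_bad, p_x_bad, 1)
```

For a single flip, the two-atom prior captures $\log 2$ nats, has zero bias pressure on both atoms, and negative pressure in between, so no weight can usefully be moved. The single-atom prior has positive pressure at the ends, which is where weight should go.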

2.1. Exponential Decay Models

The first model we study involves inferring rates of exponential decay. This may be motivated, for instance, by the problem of determining the composition of a radioactive source containing elements with different half-lives, using Geiger counter readings taken over some period of time. The mean count rate at time t is

$$y_t(\theta) = \sum_{\mu=1}^{d} \lambda_\mu\, e^{-k_\mu t}, \qquad k_\mu = e^{\theta_\mu} > 0. \tag{6}$$

We take the decay rates as parameters, and fix the proportions $\lambda_\mu$, usually to $\lambda_\mu = 1/d$, thus initial condition $y_0 = 1$. If we make observations at m distinct times t, then the prediction y is an m-vector, restricted to a compact region $Y \subset \mathbb{R}^m$. For radioactivity, we would expect to observe $y_t$ plus Poisson noise, but the qualitative features are the same if we simplify to Gaussian noise with constant width $\sigma$:

$$p(x|\theta) = \frac{1}{(2\pi\sigma^2)^{m/2}}\, \exp\!\left(-\frac{|x - y(\theta)|^2}{2\sigma^2}\right). \tag{7}$$

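The Fisher metric then simplifies to be the Euclidean metric in the space of predictions Y, pulled back to parameter space $\Theta$:

$$g_{\mu\nu}(\theta) = \frac{1}{\sigma^2} \sum_{t} \frac{\partial y_t}{\partial\theta_\mu}\, \frac{\partial y_t}{\partial\theta_\nu};$$

thus, plots of $Y$ in $\mathbb{R}^m$ will show Fisher distances accurately. This model is known to be ill conditioned, with many small manifold widths and many small FIM eigenvalues when d is large [23].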
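The pullback can be sketched in a few lines. The example below assumes the log-rate parametrization $\theta_\mu = \log k_\mu$, equal proportions $1/d$, and Gaussian noise of width $\sigma$, so that the metric is $J^{\mathsf T} J / \sigma^2$ with $J$ the Jacobian of the predictions. The observation times and noise level are illustrative values chosen here, and all function names are hypothetical.

```python
import math

def decay_rates(theta):
    """Assumed parametrization: theta_mu = log k_mu, so every rate is positive."""
    return [math.exp(th) for th in theta]

def predictions(theta, times):
    """y_t = sum_mu (1/d) exp(-k_mu t), with equal proportions 1/d."""
    ks = decay_rates(theta)
    return [sum(math.exp(-k * t) for k in ks) / len(ks) for t in times]

def jacobian(theta, times):
    """J[t][mu] = d y_t / d theta_mu = -(1/d) k_mu t exp(-k_mu t)."""
    ks = decay_rates(theta)
    return [[-k * t * math.exp(-k * t) / len(ks) for k in ks] for t in times]

def fisher_metric(theta, times, sigma):
    """g = J^T J / sigma^2: the Euclidean metric on predictions, pulled back."""
    J = jacobian(theta, times)
    d = len(theta)
    return [[sum(row[mu] * row[nu] for row in J) / sigma**2 for nu in range(d)]
            for mu in range(d)]

def det2(g):
    """Determinant of a 2x2 matrix given as nested lists."""
    return g[0][0] * g[1][1] - g[0][1] * g[1][0]

times, sigma = [1.0, 3.0], 0.1            # illustrative values, not from the text
g_generic = fisher_metric([0.5, -1.0], times, sigma)
g_equal = fisher_metric([0.0, 0.0], times, sigma)   # two identical decay rates
```

When the two rates coincide, the columns of the Jacobian are equal and `det2(g_equal)` vanishes, so the volume element is zero there. At generic points the determinant is positive, and its square root is the unnormalized Jeffreys density in these coordinates.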

With just two dimensions, $d = 2$, Figure 1 shows the region $Y$, Jeffreys prior $p_J(\theta)$, and the optimal prior $p_\star(\theta)$, projected to densities on Y. Jeffreys is uniform (since the metric is constant in y), and hence always weights a two-dimensional area, both where this is appropriate and where it is not. The upper portion of Y in the figure is thin compared to $\sigma$, so the points we can distinguish are those separated vertically: the model is effectively one-dimensional there. Jeffreys does not handle this well, which we illustrate in two ways. First, the prior is drawn divided into 20 segments of equal weight (equal area), which roughly correspond to distinguishable differences where the model is two-dimensional, but not where it becomes one-dimensional. Second, the points are colored by $b(\theta)$, which detects this effect, and gives large values at the top (about 10 bits). The optimal prior avoids these flaws by smoothly adjusting from the one- to the two-dimensional part of the model [9].

The claim that some parts of the model are effectively one-dimensional depends on the amount of data gathered. Independent repetitions of the experiment have overall likelihood $p(x_1, \ldots, x_M|\theta) = \prod_{i=1}^{M} p(x_i|\theta)$, which will always scale the FIM by M, hence all distances by a factor $\sqrt{M}$. This scaling is exactly equivalent to smaller Gaussian noise $\sigma \to \sigma/\sqrt{M}$. Increasing M increases the number of distinguishable points, and large enough M (or small enough $\sigma$) can eventually make any nonzero length larger than 1. Thus, the amount of data gathered affects which parameters are relevant. However, notice that such repetition has no effect at all on $p_J(\theta)$, since the scale of $g_{\mu\nu}$ in Equation (2) is canceled by Z. In this sense, it is already clear that Jeffreys prior belongs to the fixed point of repetition, i.e., to the asymptotic limit $M \to \infty$.
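The cancellation can be written out in one line. As a sketch, assuming Jeffreys prior is the normalized volume element $\sqrt{\det g(\theta)}/Z$ on a d-dimensional parameter space, M repetitions give

$$
g_{\mu\nu} \to M\, g_{\mu\nu}
\quad\Longrightarrow\quad
\sqrt{\det g} \to M^{d/2} \sqrt{\det g},
\qquad
Z \to M^{d/2}\, Z,
\qquad
p_J(\theta) \to \frac{M^{d/2} \sqrt{\det g(\theta)}}{M^{d/2}\, Z} = p_J(\theta).
$$

By contrast, a Fisher length picks up a factor $\sqrt{M}$ with nothing to cancel it, which is why repetition changes which directions exceed length 1 but leaves Jeffreys prior unchanged.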

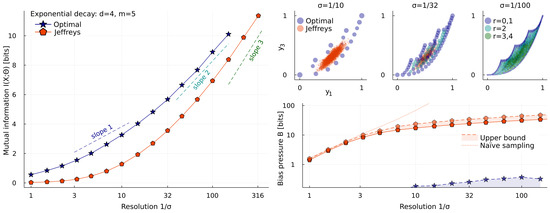

Figure 2 shows a more complicated version of the model (6), with $d = 4$ parameters, and looks at the effect of varying the noise level $\sigma$. Jeffreys prior always fills the 4-dimensional bulk, but at moderate $\sigma$, most of the distinguishable outcomes are located far from this mass. At large $\sigma$, equivalent to few repetitions, all the weight of the optimal prior is on zero- and one-dimensional edges. As more data are gathered, it gradually fills in the bulk, until, in the asymptotic limit $\sigma \to 0$, it approaches Jeffreys prior [12,17,21,24]. However, while $p_\star(\theta)$ approaches a continuum at any interior point [25], it remains discrete at Fisher distances from the boundary. The worst-case bias pressure detects this; hence, the maximum for Jeffreys prior does not approach that for the optimal prior: $B_J \not\to B_\star$. However, since mutual information is dominated by the interior in this limit, we expect the values for $p_J(\theta)$ and $p_\star(\theta)$ to agree in the limit: $I_J \to I_\star$.

Figure 2.

The effect of varying the noise level $\sigma$ on a fixed model. The model is (6) with $d = 4$ parameters, observed at times . Top right, the optimal prior $p_\star(\theta)$ has all of its weight on 0- and 1-dimensional edges at large $\sigma$, but adjusts to fill in the bulk at small $\sigma$ (colors indicate the dimension r of the 4-dimensional shape’s edge on which a point is located, the rank of the FIM there). Jeffreys prior $p_J(\theta)$ is independent of $\sigma$, and has nonzero density everywhere, but a sample of points is largely located near the middle of the shape. Left, the slope of $I(X;\Theta)$ gives a notion of effective dimensionality; in the asymptotic limit $\sigma \to 0$, we expect $d_{\text{eff}} \to d$. Bottom right, the worst-case bias pressure $B$ is always zero for $p_\star(\theta)$, up to the numerical error, but remains nonzero for $p_J(\theta)$ even in the asymptotic limit. Appendix A describes how upper and lower bounds for B are calculated.

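One way to quantify the effective dimensionality is to look at the rate of increase in mutual information under repetition, or decreasing noise $\sigma$. Along a dimension with Fisher length $L \gg 1$, the number of distinguishable points is proportional to L, and thus a cube with $d_{\text{eff}}$ large dimensions will have $L^{d_{\text{eff}}}$ such points. This motivates defining $d_{\text{eff}}$ by

$$d_{\text{eff}} = \frac{\partial I(X;\Theta)}{\partial \log \sqrt{M}} = \frac{\partial I(X;\Theta)}{\partial \log (1/\sigma)}.$$

Figure 2 shows lines for slope , and we expect $d_{\text{eff}} \to d$ in the limit $\sigma \to 0$.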

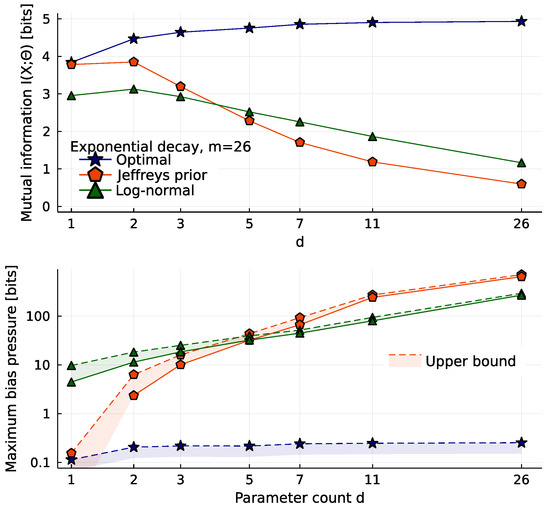

2.2. The Costs of High Dimensionality

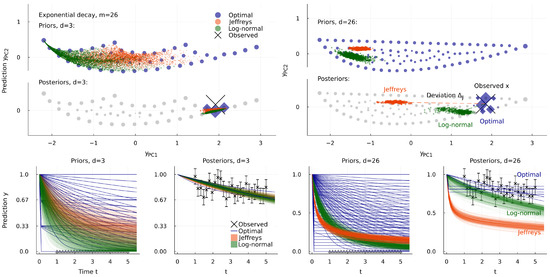

The problems of uneven measure grow more severe with more dimensions. To explore this, Figure 3, Figure 4 and Figure 5 show a sequence of models with 1 to 26 parameters. All describe the same data: observations at the same list of times in with the same noise . While Jeffreys prior is nonzero everywhere, its weight is concentrated where the many irrelevant dimensions are largest. With a Monte Carlo sample of a million points, all are found within the small orange area on the right of Figure 3. For a particular observation x, we plot also the posterior for each prior. The extreme concentration of weight in $p_J(\theta)$ in $d = 26$ pulls this some 20 standard deviations away from the maximum likelihood point $\hat\theta$. We call this distance the posterior deviation $\Delta$; it is the most literal kind of bias in results.

Figure 3.

The effect of changing model dimension for fixed data and noise level. Left half, the exponential decay model of Equation (6) with parameters, observed with noise at times in . Three priors are shown, drawn above by projecting onto the first two principal components of vector y, and below-left as a time course (each point on the upper plot is a line on the lower one). The corresponding posteriors are shown for a particular fixed x, which is the large cross in the upper plot (where the prior is shown again in light gray as a visual guide) and the series of points in the lower plot. In the model, all three posteriors are reasonable fits to the data. Right half, the similar model with parameters, for the same observations with the same noise. Here Jeffreys prior is much more strongly concentrated, favoring the part of the manifold where the irrelevant dimensions are largest. This has the effect of biasing the posterior far from the data, more than 20 standard deviations away. Figure 4 and Figure 5 explore the same setup further, including intermediate dimensions d. The log-normal prior is introduced in Equation (10).

Figure 4.

Posterior bias due to concentration of measure. Above, priors for the case of the model in Figure 3. We calculate the posterior for each at 100 points x (marked), and draw a line connecting the maximum likelihood point $y(\hat\theta)$ to the posterior center of mass $\langle y \rangle$. Inset enlarges to show that there are blue lines too, for the optimal prior, most much shorter than the spacing of its atoms. Below, we compare the length of such lines (divided by $\sigma$) to the bias pressure $b(\hat\theta)$. Notice that $b(\theta)$ is sometimes negative (it has zero expectation value: $\int d\theta\; p(\theta)\, b(\theta) = 0$), although the worst-case $B$ is non-negative. Each pair of darker and lighter points is a lower and an upper bound, explained in Appendix A.

Figure 5.

Information-theoretic scores for priors, as a function of dimensionality d. Like Figure 3, these models all describe the same data, with the same noise. Above, mutual information $I(X;\Theta)/\log 2$ (all plots are scaled thus to have units of bits). The optimal prior ignores the addition of more irrelevant parameters, but Jeffreys prior is badly affected, and ends up capturing less than 1 bit. Below, worst-case bias pressure $B/\log 2$. This should be zero for the optimal prior, but our numerical solution has small errors. For the other priors, we plot lower and upper bounds, calculated using Bennett’s method [26], as described in Appendix A. The bias of Jeffreys prior increases strongly with the increasing concentration of its weight in higher dimensions.

Figure 4 compares the posterior deviation to the bias pressure defined in Equation (5). For each of many observations x, we find the maximum likelihood point $\hat\theta_x = \operatorname{argmax}_\theta\, p(x|\theta)$, and calculate the distance from this point to the posterior expectation value of y:

$$\Delta(x) = \frac{1}{\sigma}\, \Big|\, y(\hat\theta_x) - \int d\theta\; p(\theta|x)\, y(\theta) \,\Big|. \tag{9}$$

Then, using the same prior, we evaluate the corresponding bias pressure, $b(\hat\theta_x)$. The figure shows 100 observations x drawn from , and we believe this justifies the use of the word “bias” to describe $b(\theta)$. The figure is for , but a similar relationship is seen in other dimensionalities.

Instead of looking at particular observations x, Figure 5 shows global criteria $I(X;\Theta)$ and $B$. The optimal prior is largely unaffected by the addition of many irrelevant dimensions. Once , it captures essentially the same information in any higher dimension and has zero bias (or near-zero bias, in our numerical approximation). We may think of this as a new invariance principle, that predictions should be independent of unobservable model details. This replaces one of the invariances of Jeffreys, that repetition of the experiment does not change the prior. Repetition invariance guarantees poor performance when we are far from the asymptotic limit, as we see here from the rapidly declining performance of Jeffreys prior with increasing dimension, capturing less than one bit in $d = 26$. This decline in information is mirrored by a rise in the worst-case bias B.

Figure 3, Figure 4 and Figure 5 also show a third prior, which is log-normal in each decay rate $k_\mu$, that is, normal in terms of $\theta_\mu = \log k_\mu$:

This is not a strongly principled choice, but something like this is commonly used for parameters known to be positive. Here it produces better results than Jeffreys prior in high dimensions. We observe that it also suffers a decline in performance with increasing d, despite making no attempt to deliberately adapt to the high-dimensional geometry. The details of how well it works will, of course, depend on the values chosen for , and more complicated priors of this sort can be invented. With enough free “meta-parameters” such as , we can surely adjust such a prior to approximate the optimal prior, and in practice, such a variational approach might be more useful than solving for the optimal prior directly. We believe that worst-case bias is a good score for this purpose, partly because its zero point is meaningful.

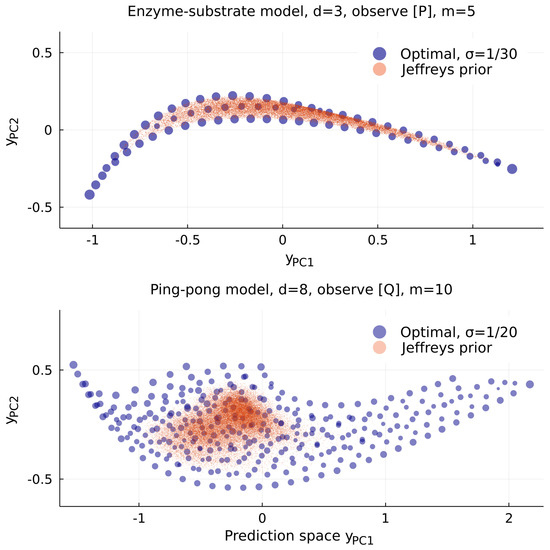

2.3. Inequivalent Parameters

Compared to these toy models, more realistic models often still have many parameter combinations poorly fixed by data, but seldom come in families that allow us to easily tune the number of dimensions. Instead of having many interchangeable parameters, each will often describe a different microscopic effect that we know to exist, even if we are not sure which combination of them will matter in a given regime [27]. To illustrate this, we now examine some models of enzyme kinetics, starting with the famous reaction:

$$E + S \;\underset{k_r}{\overset{k_f}{\rightleftharpoons}}\; ES \;\overset{k_{\text{cat}}}{\longrightarrow}\; E + P. \tag{11}$$

This summarizes differential equations for the concentrations, such as $\partial_t [P] = k_{\text{cat}} [ES]$ for the final product P, and $\partial_t [E] = -k_f [E][S] + (k_r + k_{\text{cat}}) [ES]$ for the enzyme, which combines with the substrate to form a bound complex.

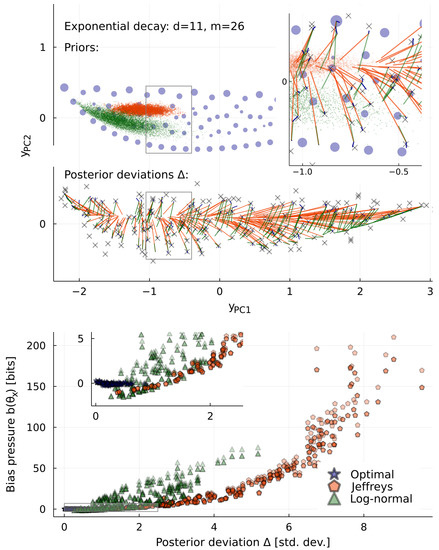

If the concentration of product is observed at some number of times, with some noise, and starting from fixed initial conditions, then this model is not unlike the toy model above. Figure 6 shows the resulting priors for the rate constants appearing in Equation (11). The shape of the model manifold is similar, and the optimal prior again places most of its weight along two one-dimensional edges, while Jeffreys prior places it in the bulk, favoring the region where all three rate constants come closest to having independently visible effects on the data. However, the resulting bias is not extreme in three dimensions.

Figure 6.

Priors for two models of enzyme kinetics. Above, the 3-parameter model from Equation (11), observing only the concentration of product at times . Below, the 8-parameter model from Equation (12), observing only the final product at times . Here Jeffreys prior has worst-case bias bits, comparable to the models in Figure 5 at similar dimension. While the optimal prior for the $d = 3$ model has its weight on well-known 2-parameter approximations, including that of Michaelis and Menten, the edge structure of the $d = 8$ model is much more complicated (for suitable initial conditions, it will include the $d = 3$ model as an edge).

The edges of this model are known approximations, in which certain rate constants become infinite (or equal), which we discuss in Appendix A [28]. These approximations are useful in practice since each spans the full length of the most relevant parameter. However, the more difficult situation is when many different processes of comparable speed are unavoidably involved. The model manifold may still have many short directions, but the simpler description selected by $p_\star(\theta)$ will tend to have weight on many different processes. In other words, the simpler model, according to information theory, is not necessarily one simpler model obtained by taking a limit, but instead, a mixture of many different analytic limits.

To see this, we consider a slightly more complicated enzyme kinetics model, the ping-pong mechanism with 8 rate constants:

Here $E^*$ is a deformed version of the enzyme E, which is produced in the reaction from A to P, and reverted in the reaction from B to final product Q. There are clearly many more possible limits in which some combination of the rate constants become large or small. Figure 6 shows that the optimal prior has weight on at least five different 1-edges, none of which is a good description by itself.

The concentration of weight seen in Jeffreys prior for these enzyme models is comparable to what we had before, with worst-case bias pressure bits in $d = 3$ and 28 bits in $d = 8$. These examples share geometric features with many real models in science [5], and thus we believe the problems described here are generic.

3. Discussion

Before fitting a model to data, there is often a selection step to choose a model which is complex enough to fit the true pattern, but not so complex as to fit the noise. The motivation for this is clear in maximum likelihood estimation, where only one $\hat\theta$ is kept, and there are various criteria for making the trade-off [29,30,31]. The motivation is less clear in Bayesian analysis, where slightly different criteria can be derived by approximating $p(x)$ [32,33]. We might hope that if many different points $\theta$ are consistent with the noisy data x, then the posterior should simply have weight on all of them, encoding our uncertainty about $\theta$.

Why, then, is model selection needed at all in Bayesian inference? Our answer here is that this is performed to avoid measure-induced bias, not overfitting. When using a sub-optimal prior, models with too much complexity do indeed perform badly. This problem is seen in Figure 5, in the rapid decline of scores $I(X;\Theta)$ or B with increasing d, and would also be seen in the more traditional model evidence $p(x)$; all of these scores prefer models with . However, the problem is not overfitting, since the extra parameters being added are irrelevant, i.e., they can have very little effect on the predictions $y(\theta)$. Instead, the problem is concentration of measure. In models with tens of parameters, this effect can be enormous: it leads to posterior expectation values more than 20 standard deviations away from ideal, for the $d = 26$ model with Jeffreys prior, and mutual information of less than 1 bit learned, and bits of bias. This problem is completely avoided by the optimal prior $p_\star(\theta)$, which suffers no decline in performance with increasing parameter count d.

Geometrically, we can view traditional model selection as adjusting d to ensure that the model manifold only has dimensions of length $L \gg 1$. This ensures that most of the posterior weight is in the interior of the manifold; hence, ignoring model edges is justified. By contrast, when there are dimensions of length $L < 1$, the optimal posterior will usually have its weight at their extreme values, on several manifold edges, which are themselves simpler models [9]. Fisher lengths L depend on the quantity of data to be gathered, and repeating an experiment M times enlarges all by a factor $\sqrt{M}$. Large enough M can eventually make any dimension larger than 1, and thus repetition alters what d traditional model selection prefers. Similarly, repetition alters the effective dimensionality of $p_\star(\theta)$. Some earlier work on model geometry studies a series in $1/M$ [22,33,34]; this expansion around $M = \infty$ captures some features beyond the volume but is not suitable for models with dimensions $L < 1$.

Real models in science typically have many irrelevant parameters [5,35,36,37,38]. It is common to have parameter directions times as important as the most relevant one, but impossible to repeat an experiment the times needed to bridge this gap. Sometimes it is possible to remove the irrelevant parameters and derive a simpler effective theory. This is what happens in physics, where a large separation of scales allows great simplicity and high accuracy [39,40]. However, many other systems we would like to model cannot, or cannot yet, be so simplified. For complicated biological reactions, climate models, or neural networks, it is unclear which of the microscopic details can be safely ignored, or what the right effective variable is. Unlike our toy models, we cannot easily adjust d, since every parameter has a different meaning. This is why we seek statistical methods which do not require us to find the right effective theory. In particular, here we study priors almost invariant to complexity.

The optimal prior is discrete, which makes it difficult to find, and this difficulty appears to be why its good properties have been overlooked. It is known analytically only for extremely simple models such as Bernoulli, and previous numerical work only treated slightly more complicated models, with parameters [9]. While our concern here is with the ideal properties, for practical use nearly optimal approximations may be required. One possibility is the adaptive slab-and-spike prior introduced in [6]. Another would be to use some variational family with adjustable meta-parameters [41].

Discreteness is also how the exactly optimal $p_\star(\theta)$ encodes a length scale in the model geometry, which is the divide between relevant and irrelevant parameters, between parameters that are constrained by data and those which are not. Making this distinction in some way is essential for good behavior, and it implies a dependence on the quantity of data. An effective model appropriate for far fewer data than observed will be too simple: the atoms of $p_\star(\theta)$ will be too far apart (much like recording too few significant figures), or else selecting a small d means picking just one edge (fixing some parameters which may, in fact, be relevant). On the other hand, what we have demonstrated here is that a model appropriate for much more data (infinitely more in the case of $p_J(\theta)$) will instead introduce enormous bias into our inference about $\theta$.

Author Contributions

Both authors contributed to developing the theory and writing the manuscript. M.C.A. was responsible for software, algorithms, and graphs. All authors have read and agreed to the published version of the manuscript.

Funding

Our work is supported by a Simons Investigator Award. Parts of this work were performed at the Aspen Center for Physics, supported by NSF grant PHY-1607611. The participation of MCA at Aspen was supported by the Simons Foundation.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The code used to find the priors (and the scores) shown is available at https://github.com/mcabbott/AtomicPriors.jl, using Julia [42].

Acknowledgments

We thank Isabella Graf, Mason Rouches, Jim Sethna, and Mark Transtrum for helpful comments on a draft.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

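For brevity, the main text omits some standard definitions. The KL divergence (or relative entropy) is defined

$$D_{\mathrm{KL}}\big[\, p(x) \,\big\|\, q(x) \,\big] = \int dx\; p(x)\, \log \frac{p(x)}{q(x)}.$$

With a conditional probability, in our notation $D_{\mathrm{KL}}[\, p(x|\theta) \,\|\, q(x) \,]$ integrates x but remains a function of $\theta$. The Fisher information metric is the quadratic term from expanding $D_{\mathrm{KL}}[\, p(x|\theta + d\theta) \,\|\, p(x|\theta) \,] = \tfrac{1}{2} \sum_{\mu,\nu} g_{\mu\nu}(\theta)\, d\theta_\mu\, d\theta_\nu + \ldots$.

The mutual information is

$$I(X;\Theta) = S(X) - S(X|\Theta) = \int d\theta\; p(\theta)\, D_{\mathrm{KL}}\big[\, p(x|\theta) \,\big\|\, p(x) \,\big] = \int dx\; p(x)\, D_{\mathrm{KL}}\big[\, p(\theta|x) \,\big\|\, p(\theta) \,\big]$$

where we use Bayes theorem,

$$p(\theta|x) = \frac{p(x|\theta)\, p(\theta)}{p(x)}$$

with $p(x) = \int d\theta\; p(\theta)\, p(x|\theta)$, and entropy

$$S(X) = -\int dx\; p(x)\, \log p(x).$$

Conditional entropy is $S(X|\theta) = -\int dx\; p(x|\theta)\, \log p(x|\theta)$ for one value $\theta$, or $S(X|\Theta) = \int d\theta\; p(\theta)\, S(X|\theta)$. With a Gaussian likelihood, Equation (7), and $X = \mathbb{R}^m$, this is a constant:

$$S(X|\Theta) = \frac{m}{2}\, \big( 1 + \log 2\pi\sigma^2 \big).$$

Many of these quantities depend on the choice of prior, such as the posterior $p(\theta|x)$ and the mutual information $I(X;\Theta)$. This is also true of our bias pressure $b(\theta)$ and worst-case $B$. When we need to refer to those for a specific prior, such as $p_J(\theta)$, we use the same subscript, writing $b_J(\theta)$ and $B_J$.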

All probability distributions are normalized. In particular, the gradient in (5) is taken with the constraint of normalization—varying the density at each point independently would give a different constant.

Appendix A.1. Square Hypercone

Here we consider an even simpler toy model, in which we can more rigorously define what we mean by co-volume, and analytically calculate the posterior deviation.

Consider a d-dimensional cone consisting of a line of length L thickened to have a square cross-section. This is with scale , and co-ordinate ranges:

Fixing noise , this has one relevant dimension, length L, and irrelevant dimensions whose lengths are always . The FIM is then

Thus the volume element is

Regarding the first factor as $\sqrt{\det g_{\text{rel}}}$, it is trivial to integrate the second factor over $\theta_\mu$ for all $\mu \geq 2$, and this factor is the irrelevant co-volume. The effective Jeffreys prior along the one relevant dimension is thus

$$p_J(\theta_1) \propto \theta_1^{\,d-1}$$

which clearly has much more weight at large $\theta_1$, at the thick end of the cone.

Now observe some x, giving . With a few lines of algebra, we can derive, assuming , that the posterior deviation (9) is

Choosing and to roughly match Figure 3, at the deviation is . This is smaller than what is seen for the exponential decay model, whose geometry is, of course, more complicated. This difference is also detected by bias pressure. The maximum for this cone is about 55 bits, which is close to the model in Figure 5.

While this example takes all irrelevant dimensions to be of equal Fisher length, it would be more realistic to have a series equally spaced on a log scale. This makes no difference to the effective and hence to the posterior deviation . Figure A1 draws instead a cone with a round cross-section, which also makes no difference. It compares this to a shape of constant cross-section, for which ; hence, there is no such bias.

Figure A1.

The simplest geometry in which to see measure-induced posterior bias. We compare two model manifolds, both with relevant, Fisher length , and five irrelevant dimensions (, ) which are either of constant size (left) or taper linearly (right). The distinction between these two situations is, by assumption, unobservable, but using the d-dimensional notion of volume as a prior gives an effective which is either flat or . This can induce substantial bias in the posterior .

Appendix A.2. Estimating I(X;Θ) and Its Gradient

The mutual information can be estimated for a discrete prior

and a Gaussian likelihood (7) by replacing the integral over x with normally distributed samples:

This gives an unbiased estimate, and is what we used for plotting in Figures 2 and 5.
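A minimal Python sketch of such a Monte Carlo estimate is given below, for a one-dimensional Gaussian likelihood and a prior made of weighted atoms. It is an illustration under stated assumptions, not the code used for the figures: the names `weights`, `means` (the model prediction at each atom) and `mc_mutual_information` are hypothetical, all weights are assumed strictly positive, and the result is reported in bits.

```python
import math
import random

def mc_mutual_information(weights, means, sigma, n_samples=20000, seed=0):
    """Monte Carlo estimate of I(X;Theta) in bits.

    weights[a] : prior weight of atom a (positive, summing to 1)
    means[a]   : model prediction for atom a; x ~ Normal(means[a], sigma)
    """
    rng = random.Random(seed)

    def log_lik(x, y):
        # Gaussian log-likelihood up to a constant that cancels in the ratio
        return -0.5 * ((x - y) / sigma) ** 2

    total = 0.0
    for w, y in zip(weights, means):
        acc = 0.0
        for _ in range(n_samples):
            x = rng.gauss(y, sigma)
            # log p(x) = log sum_b w_b p(x | theta_b), via log-sum-exp
            terms = [math.log(wb) + log_lik(x, yb)
                     for wb, yb in zip(weights, means)]
            top = max(terms)
            log_px = top + math.log(sum(math.exp(t - top) for t in terms))
            acc += log_lik(x, y) - log_px
        total += w * acc / n_samples
    return total / math.log(2)

# Two equally weighted atoms, separated by four standard deviations.
print(mc_mutual_information([0.5, 0.5], [0.0, 4.0], sigma=1.0))
```

Because the marginal p(x) is computed exactly for a discrete prior, only the integral over x is replaced by sampling. Two well-separated atoms give close to one bit, and coincident atoms give zero.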

However, for finding , what we need is the very small gradients of I with respect to each : near to the optimum, the function is very close to flat. We find that, instead of Monte Carlo, the following kernel density approximation works well:

Here we Taylor expand the about for each atom [43]. For the purpose of finding we may ignore the constant and the conditional entropy in . Further, this is a better estimate used at .

Before maximizing using L-BFGS [44,45] to adjust all and together, we find it useful to sample initial points using Mitchell’s best-candidate algorithm [46].
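The initialization step can be sketched as follows. This Python version of best-candidate sampling works in the unit hypercube and uses a fixed number of candidates per new point; both choices, and the function name, are simplifying assumptions for illustration (Mitchell's original description lets the number of candidates grow with the number of points already placed).

```python
import random

def best_candidate_points(n_points, dim, n_candidates=20, seed=0):
    """Spread points in [0, 1]^dim: each new point is the random candidate
    whose nearest existing point is farthest away."""
    rng = random.Random(seed)
    points = [[rng.random() for _ in range(dim)]]
    while len(points) < n_points:
        best, best_dist = None, -1.0
        for _ in range(n_candidates):
            cand = [rng.random() for _ in range(dim)]
            # Squared distance from the candidate to its nearest existing point
            nearest = min(sum((c - p) ** 2 for c, p in zip(cand, pt))
                          for pt in points)
            if nearest > best_dist:
                best, best_dist = cand, nearest
        points.append(best)
    return points

initial_atoms = best_candidate_points(n_points=10, dim=2)
print(initial_atoms[:3])
```

Points generated this way avoid the clumps typical of independent uniform samples, which gives the optimizer a well-spread set of starting atoms.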

Appendix A.3. Other Methods

Our focus here is on the properties of the optimal prior , but better ways to find nearly optimal solutions may be needed in order to use these ideas on larger problems. Some ideas have been explored in the literature:

- The famous algorithm for finding is due to Blahut and Arimoto [47,48], but it needs a discrete , which limits d. This was adapted to use MCMC sampling instead by [49], although their work appears to need discrete X instead. Perhaps it can be generalized.

- More recently, the following lower bound for was used by [41] to find approximations to : This bound is too crude to see the features of interest here: For all models in this paper, it favors a prior with just two delta functions, for any noise level .

- We mentioned above that adjusting some “meta-parameters” of some distribution would be one way to handle near-optimal priors. This is the approach of [41], and of many papers maximizing other scores, often described as “variational”.

- Another prior that typically has large was introduced in [6] under the name “adaptive slab-and-spike prior”. It pulls every point x in a distribution back to its maximum likelihood point : The result has weight everywhere in the model manifold, but has extra weight on the edges. Because the amount of weight on edges is controlled by , it adopts an appropriate effective dimensionality (8), and has a low bias (5).
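For reference, the Blahut–Arimoto iteration mentioned in the first item can be sketched in a few lines of Python for a fully discrete problem. This is a minimal illustration under our own assumptions (a small hand-written likelihood table, a fixed iteration count, hypothetical function names), not the method used in this paper.

```python
import math

def kl_rows(likelihood, prior):
    """f[a] = D_KL[p(x|theta_a) || p(x)] in nats, for the given prior."""
    n_x = len(likelihood[0])
    p_x = [sum(w * row[i] for w, row in zip(prior, likelihood))
           for i in range(n_x)]
    return [sum(l * math.log(l / p_x[i]) for i, l in enumerate(row) if l > 0)
            for row in likelihood]

def blahut_arimoto(likelihood, n_iter=200):
    """likelihood[a][i] = p(x_i | theta_a). Returns (prior, capacity in bits)."""
    n_theta = len(likelihood)
    prior = [1.0 / n_theta] * n_theta
    for _ in range(n_iter):
        f = kl_rows(likelihood, prior)
        # Multiplicative update: reweight each atom by exp of its KL divergence
        unnorm = [w * math.exp(fa) for w, fa in zip(prior, f)]
        z = sum(unnorm)
        prior = [u / z for u in unnorm]
    f = kl_rows(likelihood, prior)
    capacity_bits = sum(w * fa for w, fa in zip(prior, f)) / math.log(2)
    return prior, capacity_bits

# Three parameter values; the middle one predicts a mixture of the other two.
likelihood = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
prior, capacity = blahut_arimoto(likelihood)
print(prior, capacity)
```

In this toy table the middle parameter value is indistinguishable from a mixture of the two ends, and the iteration drives its weight towards zero, a small-scale instance of the optimal prior placing weight only on a discrete set of points.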

Appendix A.4. Bias Alla Bennett

The KL divergence integral needed for is somewhat badly behaved when the prior’s weight is far from point . To describe how we handle this, we begin with the naïve Monte Carlo calculation of , which involves sampling from the prior. If x is very far from where the prior has most of its weight, then we will never obtain any samples where is not exponentially small, so we will miss the leading contribution to .

Sampling from the posterior instead, we will obtain points in the right area, but the wrong answer. The following identity due to Bennett [26] lets us walk from the prior to the posterior and obtain the right answer, sampling from distributions for several powers . Defining :

the result is

i.e.,

For the first is trivial, and the second reads .

Next, we want which involves . To plug this in, we would need to average at every x in the integral. Rather than sample from a fresh set of -distributions for each x, we can take one set at some , and correct using importance sampling to write:

All of this can be performed without knowing the normalization of the prior, Z in (2).

The same set of samples from -distributions can be used to calculate either forward or backward. These give upper and lower bounds on the true value. When they are sufficiently far apart to be visible, the plots show both of them. Figure 2 uses, as the -distributions, all larger -values on the plot, and also shows (dotted line) the result of naïve sampling from . Figure 5 uses about 30 steps.

Appendix A.5. Jeffreys and Vandermonde

To find Jeffreys prior, let us parameterize the exponential decay model (6) by which lives in the unit interval:

where . The Fisher information metric (1) reads in terms of a Jacobian which, in these co-ordinates, takes the simple form:

In high dimensionality, is very badly conditioned, and thus it is difficult to find the determinant with sufficient numerical accuracy (or at least, it is slow, as high-precision numbers are required). However, in the case , where the Jacobian is a square matrix, it is of Vandermonde form. Hence, its determinant is known exactly, and we can simply write:

For , more complicated formulae (involving a sum over Schur polynomials) are known, but the points in Figure 5 are chosen not to need them.
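The benefit of a closed form can be seen in a small Python check of the Vandermonde identity itself, using exact rational arithmetic so that ill-conditioning plays no role. This sketch only illustrates the identity det V = ∏_{i<j} (x_j − x_i) for a matrix with rows (1, x, x², …); it does not reproduce the Jacobian of the model, whose co-ordinate-dependent prefactors are omitted, and the function names are illustrative.

```python
from fractions import Fraction
from itertools import combinations

def vandermonde(xs):
    """Square matrix with rows (1, x, x**2, ...), one row per value of x."""
    return [[x ** j for j in range(len(xs))] for x in xs]

def det_exact(matrix):
    """Determinant by Gaussian elimination in exact rational arithmetic."""
    a = [[Fraction(v) for v in row] for row in matrix]
    n, det = len(a), Fraction(1)
    for c in range(n):
        pivot = next((r for r in range(c, n) if a[r][c] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != c:
            a[c], a[pivot] = a[pivot], a[c]
            det = -det  # a row swap flips the sign
        det *= a[c][c]
        for r in range(c + 1, n):
            factor = a[r][c] / a[c][c]
            for k in range(c, n):
                a[r][k] -= factor * a[c][k]
    return det

def vandermonde_det(xs):
    """Closed form: product of (x_j - x_i) over all pairs i < j."""
    prod = Fraction(1)
    for i, j in combinations(range(len(xs)), 2):
        prod *= xs[j] - xs[i]
    return prod

xs = [Fraction(1, 10), Fraction(3, 10), Fraction(1, 2), Fraction(9, 10)]
print(det_exact(vandermonde(xs)) == vandermonde_det(xs))
```

The closed form needs only pairwise differences, so its logarithm can be accumulated in ordinary floating point even when the determinant itself is extremely small.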

To sample from Jeffreys prior, or posterior, we use the affine-invariant “emcee” sampler [50]. This adapts well to badly conditioned geometries. Because the Vandermonde formula lets us work in machine precision, we can sample points in a few minutes, which is sufficient for Figure 3.

Appendix A.6. Michaelis–Menten et al.

The arrows in (11) summarise the following differential equations for the concentrations of the four chemicals involved:

These equations conserve (the enzyme is recycled) and (the substrate is converted to product), leaving two dynamical quantities, and . The plot takes them to have initial values , at , and we fix , .
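The two conservation laws are easy to verify numerically. The Python sketch below assumes the standard mass-action scheme E + S ⇌ ES → E + P; the rate-constant names `k_f`, `k_r`, `k_cat`, the numerical values, and the fixed-step Runge–Kutta integrator are all illustrative assumptions and are not the settings used for the figures.

```python
def mm_rhs(state, k_f, k_r, k_cat):
    """Mass-action rates for E + S <-> ES -> E + P."""
    e, s, es, p = state
    bind, unbind, convert = k_f * e * s, k_r * es, k_cat * es
    return (unbind + convert - bind,   # d[E]/dt
            unbind - bind,             # d[S]/dt
            bind - unbind - convert,   # d[ES]/dt
            convert)                   # d[P]/dt

def integrate(state, rates, dt=1e-3, n_steps=5000):
    """Fixed-step fourth-order Runge-Kutta integration."""
    def shifted(base, slope, h):
        return tuple(b + h * k for b, k in zip(base, slope))
    for _ in range(n_steps):
        k1 = mm_rhs(state, *rates)
        k2 = mm_rhs(shifted(state, k1, dt / 2), *rates)
        k3 = mm_rhs(shifted(state, k2, dt / 2), *rates)
        k4 = mm_rhs(shifted(state, k3, dt), *rates)
        state = tuple(x + dt / 6 * (a + 2 * b + 2 * c + d)
                      for x, a, b, c, d in zip(state, k1, k2, k3, k4))
    return state

initial = (1.0, 5.0, 0.0, 0.0)   # [E], [S], [ES], [P]; illustrative values
final = integrate(initial, rates=(2.0, 1.0, 1.5))
print("enzyme total:   ", final[0] + final[2])
print("substrate total:", final[1] + final[2] + final[3])
```

Both totals stay at their initial values up to rounding, so only two of the four concentrations evolve independently.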

The original analysis of Michaelis and Menten [52] takes the first two reactions to be in equilibrium. This can be viewed as taking the limit holding fixed , which picks a 2-parameter subspace of , an edge of the manifold. Then, becomes constant, leaving their equation

If we do not observe , then this is almost identical to the quasi-static limit of Briggs and Haldane [53], who take , and holding fixed and , which gives

which was much later shown to be analytically tractable [54].

In Figure 6, most of the points of weight in the optimal prior lie on the intersection of these two 2-parameter models, that is, on a pair of one-parameter models. These and other limits were discussed geometrically in [28].

For the ping-pong model (12), we take initial conditions of , , , .

Appendix A.7. Ever since the Big Bang

The claim that the age of the universe constrains us from taking the asymptotic limit in realistic multi-parameter models deserves a brief calculation. The conventional age is $13.8 \times 10^{9}$ years, about $4.4 \times 10^{17}$ s [55].

For the model (6), manifold widths are shown to scale like in [23], and [5,23] shows FIM eigenvalues . Repeating an experiment M times scales lengths by $\sqrt{M}$, so we need roughly repetitions to make the smallest manifold width larger than 1. If the initial experiment took 1 s, then it is impossible to perform enough repetitions to make all dimensions of the model relevant.
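The budget side of this estimate is simple arithmetic, sketched here in Python. It assumes an age of 13.8 billion years, as reported in [55], a Julian year of 365.25 days, and one second per experiment; it uses the fact that M independent repetitions multiply the Fisher information by M and hence Fisher lengths by √M. The variable names are illustrative.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600   # Julian year
AGE_YEARS = 13.8e9                      # assumed age of the universe, from [55]
EXPERIMENT_SECONDS = 1.0                # assumed duration of one experiment

age_seconds = AGE_YEARS * SECONDS_PER_YEAR
max_repetitions = age_seconds / EXPERIMENT_SECONDS
# M repetitions scale the Fisher metric by M, so lengths grow by sqrt(M).
max_length_gain = math.sqrt(max_repetitions)

print(f"age of universe     : {age_seconds:.2e} s")
print(f"maximum repetitions : {max_repetitions:.2e}")
print(f"maximum length gain : {max_length_gain:.2e}")
```

Any manifold width that starts out smaller than the inverse of this length gain therefore cannot be made relevant by repetition alone within the age of the universe.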

Other models studied in [5,23] have different slopes vs. d, so the precise cutoff will vary. However, models with hundreds, or thousands, of parameters are also routine. It seems safe to claim that for most of these, the asymptotic limit is never justified.

Appendix A.8. Terminology

What we call Jeffreys prior, , is (apart from variations in apostrophes and articles) sometimes called “Jeffreys’s nonlocation rule” [56] in order to stress that it is not quite what Jeffreys favored. He argued for separating “location” parameters (such as an unconstrained position ) and excluding them from the determinant. The models we consider here have no such perfect symmetries.

What we call the optimal prior is sometimes called “Shannon optimal” [17], and sometimes called a “reference prior” after Bernardo [8]. The latter is misleading, as definition 1 in [8] explicitly takes the asymptotic limit . This is then Jeffreys prior, until some ways to handle nuisance parameters are appended. The idea of considering in the limit is older, for instance Lindley [7] considers it, and also notes that it leads to Jeffreys prior (which he does not like for multi-parameter models, but not for our reasons). In [15], the discrete prior for k repetitions is called the “k-th reference prior”, and is understood to be discrete, but is of interest only as a tool for showing that the limit exists. We stress that the asymptotic limit removes what are (for this paper) the interesting features of this prior.

The same can also be obtained by an equivalent minimax game, for which Kashyap uses the term “optimal prior” [10]. However, he, too, takes the asymptotic limit.

References

1. Rao, C.R. Information and accuracy attainable in the estimation of statistical parameters. Bull. Calcutta Math. Soc. 1945, 37, 81–91.

2. Amari, S.I. A foundation of information geometry. Electron. Commun. Jpn. 1983, 66, 1–10.

3. Brown, K.S.; Sethna, J.P. Statistical mechanical approaches to models with many poorly known parameters. Phys. Rev. E 2003, 68, 021904.

4. Daniels, B.C.; Chen, Y.J.; Sethna, J.P.; Gutenkunst, R.N.; Myers, C.R. Sloppiness, robustness, and evolvability in systems biology. Curr. Opin. Biotechnol. 2008, 19, 389–395.

5. Machta, B.B.; Chachra, R.; Transtrum, M.K.; Sethna, J.P. Parameter space compression underlies emergent theories and predictive models. Science 2013, 342, 604–607.

6. Quinn, K.N.; Abbott, M.C.; Transtrum, M.K.; Machta, B.B.; Sethna, J.P. Information geometry for multiparameter models: New perspectives on the origin of simplicity. Rep. Prog. Phys. 2023, 86, 035901.

7. Lindley, D.V. The use of prior probability distributions in statistical inference and decisions. In Proceedings of the Fourth Berkeley Symposium on Mathematical Statistics and Probability, Berkeley, CA, USA, 30 June–30 July 1961.

8. Bernardo, J.M. Reference posterior distributions for Bayesian inference. J. Roy. Stat. Soc. B 1979, 41, 113–128.

9. Mattingly, H.H.; Transtrum, M.K.; Abbott, M.C.; Machta, B.B. Maximizing the information learned from finite data selects a simple model. Proc. Natl. Acad. Sci. USA 2018, 115, 1760–1765.

10. Kashyap, R. Prior probability and uncertainty. IEEE Trans. Inform. Theory 1971, 17, 641–650.

11. Haussler, D. A general minimax result for relative entropy. IEEE Trans. Inform. Theory 1997, 43, 1276–1280.

12. Krob, J.; Scholl, H.R. A minimax result for the Kullback Leibler Bayes risk. Econ. Qual. Control 1997, 12, 147–157.

13. Färber, G. Die Kanalkapazität allgemeiner Übertragungskanäle bei begrenztem Signalwertbereich beliebigen Signalübertragungszeiten sowie beliebiger Störung. Arch. Elektr. Übertr. 1967, 21, 565–574.

14. Smith, J.G. The information capacity of amplitude-and variance-constrained scalar gaussian channels. Inf. Control. 1971, 18, 203–219.

15. Berger, J.O.; Bernardo, J.M.; Mendoza, M. On priors that maximize expected information. In Recent Developments in Statistics and Their Applications; Seoul Freedom Academy Publishing: Seoul, Republic of Korea, 1989.

16. Zhang, Z. Discrete Noninformative Priors. Ph.D. Thesis, Yale University, New Haven, CT, USA, 1994.

17. Scholl, H.R. Shannon optimal priors on independent identically distributed statistical experiments converge weakly to Jeffreys’ prior. Test 1998, 7, 75–94.

18. Sims, C.A. Rational inattention: Beyond the linear-quadratic case. Am. Econ. Rev. 2006, 96, 158–163.

19. Connes, A. Noncommutative Geometry; Academic Press: San Diego, CA, USA, 1994.

20. Jeffreys, H. An invariant form for the prior probability in estimation problems. Proc. R. Soc. Lond. A 1946, 186, 453–461.

21. Clarke, B.S.; Barron, A.R. Jeffreys’ prior is asymptotically least favorable under entropy risk. J. Stat. Plan. Inference 1994, 41, 37–60.

22. Balasubramanian, V. Statistical inference, Occam’s razor, and statistical mechanics on the space of probability distributions. Neural Comput. 1997, 9, 349–368.

23. Transtrum, M.K.; Machta, B.B.; Sethna, J.P. Why are nonlinear fits to data so challenging? Phys. Rev. Lett. 2010, 104, 060201.

24. Clarke, B.; Barron, A. Information-theoretic asymptotics of Bayes methods. IEEE Trans. Inform. Theory 1990, 36, 453–471.

25. Abbott, M.C.; Machta, B.B. A scaling law from discrete to continuous solutions of channel capacity problems in the low-noise limit. J. Stat. Phys. 2019, 176, 214–227.

26. Bennett, C.H. Efficient estimation of free energy differences from Monte Carlo data. J. Comput. Phys. 1976, 22, 245–268.

27. Hines, K.E.; Middendorf, T.R.; Aldrich, R.W. Determination of parameter identifiability in nonlinear biophysical models: A Bayesian approach. J. Gen. Physiol. 2014, 143, 401–416.

28. Transtrum, M.K.; Qiu, P. Bridging mechanistic and phenomenological models of complex biological systems. PLoS Comput. Biol. 2016, 12, e1004915.

29. Akaike, H. A new look at the statistical model identification. IEEE Trans. Automat. Contr. 1974, 19, 716–723.

30. Rissanen, J. Modeling by shortest data description. Automatica 1978, 14, 465–471.

31. Grünwald, P.; Roos, T. Minimum description length revisited. Int. J. Math. Ind. 2019, 11, 1930001.

32. Schwarz, G. Estimating the dimension of a model. Ann. Statist. 1978, 6, 461–464.

33. Myung, I.J.; Balasubramanian, V.; Pitt, M.A. Counting probability distributions: Differential geometry and model selection. Proc. Natl. Acad. Sci. USA 2000, 97, 11170–11175.

34. Piasini, E.; Balasubramanian, V.; Gold, J.I. Effect of geometric complexity on intuitive model selection. In Machine Learning, Optimization, and Data Science; Springer: Berlin/Heidelberg, Germany, 2022; pp. 1–24.

35. O’Leary, T.; Williams, A.H.; Caplan, J.S.; Marder, E. Correlations in ion channel expression emerge from homeostatic tuning rules. Proc. Natl. Acad. Sci. USA 2013, 110, E2645–E2654.

36. Wen, M.; Shirodkar, S.N.; Plecháč, P.; Kaxiras, E.; Elliott, R.S.; Tadmor, E.B. A force-matching Stillinger-Weber potential for MoS2: Parameterization and Fisher information theory based sensitivity analysis. J. Appl. Phys. 2017, 122, 244301.

37. Marschmann, G.L.; Pagel, H.; Kügler, P.; Streck, T. Equifinality, sloppiness, and emergent structures of mechanistic soil biogeochemical models. Environ. Model. Softw. 2019, 122, 104518.

38. Karakida, R.; Akaho, S.; Amari, S.i. Pathological spectra of the Fisher information metric and its variants in deep neural networks. Neural Comput. 2021, 33, 2274–2307.

39. Kadanoff, L.P. Scaling laws for Ising models near Tc. Physics 1966, 2, 263–272.

40. Wilson, K.G. Renormalization group and critical phenomena. 1. Renormalization group and the Kadanoff scaling picture. Phys. Rev. 1971, B4, 3174–3183.

41. Nalisnick, E.; Smyth, P. Learning approximately objective priors. arXiv 2017, arXiv:1704.01168.

42. Bezanson, J.; Karpinski, S.; Shah, V.B.; Edelman, A. Julia: A fast dynamic language for technical computing. arXiv 2012, arXiv:1209.5145.

43. Huber, M.F.; Bailey, T.; Durrant-Whyte, H.; Hanebeck, U.D. On entropy approximation for Gaussian mixture random vectors. In Proceedings of the 2008 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems, Seoul, Republic of Korea, 20–22 August 2008; pp. 181–188.

44. Nocedal, J. Updating quasi-Newton matrices with limited storage. Math. Comp. 1980, 35, 773–782.

45. Johnson, S.G. The NLopt Nonlinear-Optimization Package. Available online: http://github.com/stevengj/nlopt (accessed on 6 May 2022).

46. Mitchell, D.P. Spectrally optimal sampling for distribution ray tracing. SIGGRAPH Comput. Graph. 1991, 25, 157–164.

47. Blahut, R. Computation of channel capacity and rate-distortion functions. IEEE Trans. Inform. Theory 1972, 18, 460–473.

48. Arimoto, S. An algorithm for computing the capacity of arbitrary discrete memoryless channels. IEEE Trans. Inform. Theory 1972, 18, 14–20.

49. Lafferty, J.; Wasserman, L.A. Iterative Markov chain Monte Carlo computation of reference priors and minimax risk. Uncertain. AI 2001, 17, 293–300.

50. Goodman, J.; Weare, J. Ensemble samplers with affine invariance. CAMCoS 2010, 5, 65–80.

51. Ma, Y.; Dixit, V.; Innes, M.; Guo, X.; Rackauckas, C. A comparison of automatic differentiation and continuous sensitivity analysis for derivatives of differential equation solutions. arXiv 2021, arXiv:1812.01892.

52. Michaelis, L.; Menten, M.L. Die Kinetik der Invertinwirkung. Biochem. Z. 1913, 49, 333–369.

53. Briggs, G.E.; Haldane, J.B.S. A note on the kinetics of enzyme action. Biochem. J. 1925, 19, 338–339.

54. Schnell, S.; Mendoza, C. Closed Form Solution for Time-dependent Enzyme Kinetics. J. Theor. Biol. 1997, 187, 207–212.

55. Planck Collaboration. Planck 2018 results. VI. Cosmological parameters. Astron. Astrophys. 2021, 641, A6.

56. Kass, R.E.; Wasserman, L. The selection of prior distributions by formal rules. J. Am. Stat. Assoc. 1996, 91, 1343–1370.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).