1. Introduction

Wireless sensor networks (WSNs) combine computing, communication, and sensing technologies to provide monitoring functions. A WSN consists of many sensor nodes that use wireless communication to form a multi-hop, self-organizing network. The nodes collaboratively sense, collect, and process information within their signal coverage area and forward it to an observer, thereby monitoring the target area. Compared with traditional networks, WSNs are easy to deploy and fault-tolerant. Users can deploy a practical WSN quickly even when time and resources are limited, and once deployed, the network integrates and transmits information automatically with little human intervention. WSNs are therefore widely used for monitoring tasks such as target search, battlefield surveillance, and disaster relief.

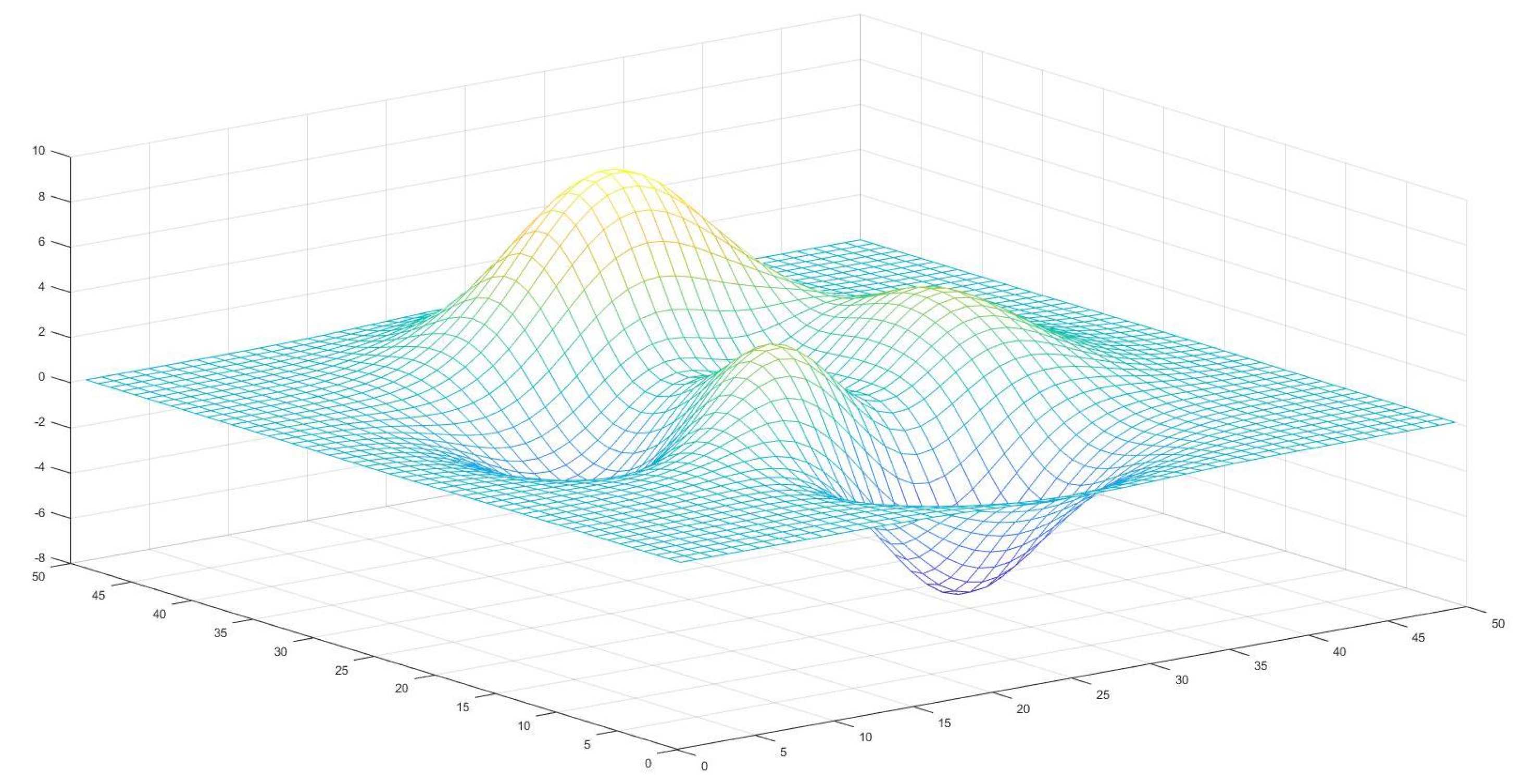

In a WSN, coverage is the ratio of the region covered by the sensing range of the sensor nodes to the whole detection area. Because both the number of sensors and the sensing radius of a single sensor are limited, researchers are interested in maximizing the coverage of the target area with a limited number of sensors [1]. Optimizing the locations of the sensor nodes can improve network coverage and reduce cost, and various meta-heuristic algorithms are now used to solve this node deployment problem. For example, Zhao et al. [2] used a particle swarm optimization algorithm and a variable-domain chaos optimization algorithm to find optimal sensor locations that improve coverage in a two-dimensional environment. As research has progressed, the deployment of 3D WSN nodes, which better reflects real environments, has received increasing attention. However, moving from two-dimensional to three-dimensional problems increases the dimensionality of the solution, and with it the computational difficulty and computation time, so more suitable methods are needed for such problems.
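To make the coverage definition concrete, the following Python sketch estimates the coverage ratio of a 3D deployment by discretizing a cubic target region into grid points and counting the points that lie within sensing range of at least one sensor. It assumes a Boolean (spherical) sensing model and a uniform grid; the function name `coverage_ratio` and its parameters are illustrative, and the coverage model used for the deployment experiments in this paper may differ.

```python
import itertools
import math


def coverage_ratio(sensors, radius, side, step):
    """Fraction of grid points in the cube [0, side]^3 covered by at least one sensor.

    Boolean sensing model (assumption): a point is covered when its Euclidean
    distance to some sensor is at most `radius`.
    """
    ticks = [i * step for i in range(int(round(side / step)) + 1)]
    covered = total = 0
    for point in itertools.product(ticks, repeat=3):
        total += 1
        if any(math.dist(point, s) <= radius for s in sensors):
            covered += 1
    return covered / total


# A single sensor at the centre of a 10 x 10 x 10 cube whose radius (9) exceeds the
# centre-to-corner distance (about 8.66) covers every grid point.
print(coverage_ratio([(5.0, 5.0, 5.0)], radius=9.0, side=10.0, step=1.0))  # 1.0
```

In a deployment optimizer, the sensor coordinates would be the decision variables and this ratio (or its negative, for a minimizer) the fitness; a finer grid gives a more accurate but more expensive estimate, which is exactly the kind of costly fitness function discussed next.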

Meta-heuristic algorithms do not depend on the structure or properties of the problem and have powerful search capabilities for non-convex and non-differentiable problems. They can find near-optimal solutions to practical problems within a reasonable time and are therefore widely used in engineering problems such as speed reducer design [3,4], cantilever beam design [5,6], and wireless sensor network design [7,8,9]. However, a meta-heuristic algorithm must compute the fitness value of every candidate solution, and the time this takes usually grows significantly with the complexity of the problem, as in finite element analysis [10] and 3D WSN node deployment [11]. Solving such problems directly is time-consuming: obtaining a satisfactory result often takes hours or even days, which wastes computational resources. To shorten the solution time for problems with expensive fitness functions, researchers have proposed surrogate-assisted meta-heuristic algorithms, which make meta-heuristic algorithms practical for expensive problems [12].

Surrogate-assisted meta-heuristic algorithms have developed rapidly in recent years; they have been studied by many researchers and are widely used for real-world problems. A surrogate-assisted meta-heuristic algorithm approximates the fitness function with a surrogate model (also called an approximation model or meta-model). Widely used surrogate models include the Gaussian process (GP), or Kriging, model [13,14], the polynomial approximation model [15], the support vector regression model [16], and the radial basis function (RBF) model [17,18], as well as hybrid surrogate models that combine several of these [19,20]. Many papers have evaluated and compared surrogate models [21,22]. As the dimensionality of the optimization problem increases, the RBF surrogate model maintains relatively good performance, so the remainder of this paper uses RBF surrogate models.
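As an illustration of an RBF surrogate, the sketch below fits an interpolant of the form $s(x)=\sum_i w_i\lVert x-x_i\rVert^3 + c_0 + c^{\top}x$ to evaluated samples by solving the usual augmented linear system. The cubic kernel with a linear polynomial tail is an assumption (a common choice in the surrogate-assisted literature, not necessarily the exact model used in this paper), and the names `fit_cubic_rbf` and `_solve` are hypothetical. Plain Python keeps the example self-contained; in practice a library routine such as SciPy's `RBFInterpolator` with `kernel="cubic"` would normally be used instead.

```python
import math


def _solve(a, b):
    """Solve the dense linear system a @ x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("singular system: samples may be duplicated or degenerate")
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_cubic_rbf(xs, ys):
    """Fit s(x) = sum_i w_i * ||x - x_i||^3 + c0 + c . x and return s as a callable."""
    n, d = len(xs), len(xs[0])
    size = n + d + 1
    a = [[0.0] * size for _ in range(size)]
    for i in range(n):
        for j in range(n):
            a[i][j] = math.dist(xs[i], xs[j]) ** 3  # kernel block Phi
        for k, p in enumerate([1.0] + list(xs[i])):
            a[i][n + k] = p  # polynomial block P
            a[n + k][i] = p  # its transpose P^T (lower-right block stays zero)
    coef = _solve(a, list(ys) + [0.0] * (d + 1))
    w, c = coef[:n], coef[n:]

    def predict(x):
        kernel_part = sum(wi * math.dist(x, xi) ** 3 for wi, xi in zip(w, xs))
        return kernel_part + c[0] + sum(ck * xk for ck, xk in zip(c[1:], x))

    return predict
```

The model interpolates the training data exactly, and because of the linear tail it reproduces any linear function exactly, which is a convenient sanity check. The system is solvable as long as the sample points are distinct and not all on one hyperplane.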

Surrogate-assisted meta-heuristic algorithms can be classified by whether the surrogate model is built from all samples or from local samples, which gives three types: global, local, and hybrid surrogate-assisted meta-heuristic algorithms. Yu et al. [23] combined a global surrogate model, a social learning particle swarm optimization algorithm, restart strategies, and generation-based and individual-based model management into a single coherent algorithm. Pan et al. [24] proposed an efficient local surrogate-assisted hybrid meta-heuristic algorithm that finds the nearest neighbors of each individual in the database by Euclidean distance, trains the surrogate model on this local information, and uses a selection criterion based on the best individual and a top set of individuals to choose which individuals receive true fitness evaluations. Wang et al. [25] proposed a hybrid surrogate-assisted particle swarm optimization algorithm whose global model management strategy is inspired by committee-based active learning. Its local surrogate model is built from the best samples and is used when the global model fails to improve the best solution, so that the two models alternate.

The process of generating the samples used to construct a surrogate model is called the infill criterion (sampling). The performance of a surrogate model depends heavily on the quality and quantity of the samples in the database, which determine how well the model fits the true fitness function. Because each sampled point must be evaluated with the true fitness function before it can be added to the database, every added point carries a high computational cost. Infill criteria therefore need careful design so that informative samples are obtained for building the surrogate model. Sampling strategies are broadly classified into static and adaptive (add-point) sampling. In static sampling, all the training points needed to build the surrogate model are drawn at once. Widely used static sampling methods include Latin hypercube sampling (LHS) [26,27], full factorial design [28], orthogonal arrays [29], and central composite design [30]. Because the sample points are chosen independently of the surrogate model, static sampling cannot exploit information learned about an unknown problem; it only aims to cover the whole solution space evenly. LHS is a stratified sampling method that avoids clustering sample points in a small region and reaches a given accuracy with fewer samples and low computational complexity. We use LHS to initialize the surrogate model.
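A basic Latin hypercube sampler can be sketched as follows: each axis is divided into n equal strata, every stratum receives exactly one point placed uniformly at random inside it, and strata are paired across axes by independent random permutations. The function name `latin_hypercube` is hypothetical, and this is plain LHS; MATLAB's `lhsdesign`, which this paper uses, can additionally optimize a space-filling criterion such as maximin distance, which the sketch omits.

```python
import random


def latin_hypercube(n, d, lower, upper, rng=random):
    """Plain Latin hypercube sample of n points in the box [lower, upper]^d."""
    samples = [[0.0] * d for _ in range(n)]
    for j in range(d):
        strata = list(range(n))
        rng.shuffle(strata)  # random pairing of strata across axes
        for i in range(n):
            u = (strata[i] + rng.random()) / n  # uniform point inside stratum strata[i]
            samples[i][j] = lower[j] + u * (upper[j] - lower[j])
    return samples


# Ten points in the unit cube: on every axis, each tenth of [0, 1] holds exactly one point.
points = latin_hypercube(10, 3, [0.0] * 3, [1.0] * 3, random.Random(0))
```

Passing a seeded `random.Random` instance makes the design reproducible, which is useful when comparing surrogate-assisted algorithms from the same initial database.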

Infill criteria combine static sampling with an add-point strategy. Static sampling first establishes an initial surrogate model, and the samples are then updated by a meta-heuristic algorithm guided by the current surrogate model. Next, new samples are selected according to the add-point strategy, and the surrogate model is updated to improve its overall accuracy. This process is repeated until the maximum number of true fitness evaluations is reached. Because the add-point strategy uses earlier results to guide subsequent sampling, adaptive sampling can build a more accurate surrogate model from fewer samples than static sampling, which uses no such prior information. Add-point strategies are an active research topic; proposed strategies include the statistical lower bound strategy [31], the maximizing probability of improvement strategy [32,33], and the maximizing mean squared error strategy [34,35]. Building on this work, this paper proposes a new adaptive sampling strategy based on historical surrogate model information.
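For reference, the three criteria listed above have well-known textbook forms for minimization when the surrogate returns a predicted mean $\hat{y}(x)$ and standard deviation $\hat{s}(x)$, as a Kriging/GP model does: the lower bound $\hat{y}(x) - w\,\hat{s}(x)$, the probability of improvement $\Phi\big((f_{\min} - \hat{y}(x))/\hat{s}(x)\big)$, and the predicted mean squared error $\hat{s}^2(x)$. The sketch below assumes those forms and a weight w = 2; the function names are illustrative, and none of this is the strategy SAGD itself uses.

```python
import math


def lower_confidence_bound(mean, std, w=2.0):
    """Statistical lower bound (minimization): smaller values are more promising."""
    return mean - w * std


def probability_of_improvement(mean, std, f_min):
    """P[Y < f_min] for a Gaussian prediction Y ~ N(mean, std^2)."""
    if std <= 0.0:
        return 1.0 if mean < f_min else 0.0
    z = (f_min - mean) / std
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))  # standard normal CDF at z


def max_mse_choice(candidates):
    """Pick the (x, mean, std) candidate with the largest predicted variance."""
    return max(candidates, key=lambda c: c[2] ** 2)
```

The first two criteria balance a good predicted value against uncertainty, whereas the mean-squared-error criterion samples purely where the model is least certain.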

The performance of a surrogate-assisted meta-heuristic algorithm depends on several choices: the surrogate model, the add-point strategy, and the meta-heuristic algorithm. Of these, the meta-heuristic algorithm largely determines performance, and the same surrogate-assisted framework can behave quite differently with different meta-heuristic algorithms. In recent years, researchers have proposed a growing number of meta-heuristic algorithms inspired by natural phenomena and laws, from the classical genetic algorithm (GA) [36,37], differential evolution (DE) [38], and particle swarm optimization (PSO) [39,40,41] to the recently proposed GOA [42] and phasmatodea population evolution (PPE) [43]. PPE is a population evolution algorithm that imitates the evolution rules of phasmatodea populations, namely convergent evolution, path dependence, population growth, and competition. These algorithms have shown powerful optimization capabilities. However, a single meta-heuristic algorithm applies the same kind of update strategy to its samples throughout the search and may therefore fall into a local optimum when solving complex problems. Hybrid algorithms, which combine the structures of two algorithms or run them in series, have therefore become a research hotspot. Zhang et al. [44] combined the whale optimization algorithm (WOA) with the shuffled frog leaping algorithm (SFLA), pairing the powerful optimization capability of WOA with the inter-population communication of SFLA; the hybrid outperformed either algorithm run alone. However, few hybrid algorithms have been applied within surrogate-assisted meta-heuristic algorithms for expensive problems. In this paper, we combine the recently proposed GOA with the DE algorithm to design a surrogate-assisted meta-heuristic algorithm.

We propose a surrogate-assisted hybrid meta-heuristic algorithm based on a historical surrogate model add-point strategy (SAGD). The main contributions are as follows.

An add-point strategy is proposed in which useful information from historical surrogate models is retained and compared with the predictions of the latest surrogate model to select suitable sample points for true fitness evaluation.

GOA and DE are combined to exploit the strong exploration ability of GOA and the exploitation ability of DE, and an escape-from-local-optimum control strategy is proposed to decide which of the two algorithms runs.

A generation-based optimal restart strategy is incorporated, and information from some of the best samples is used to construct local surrogate models.

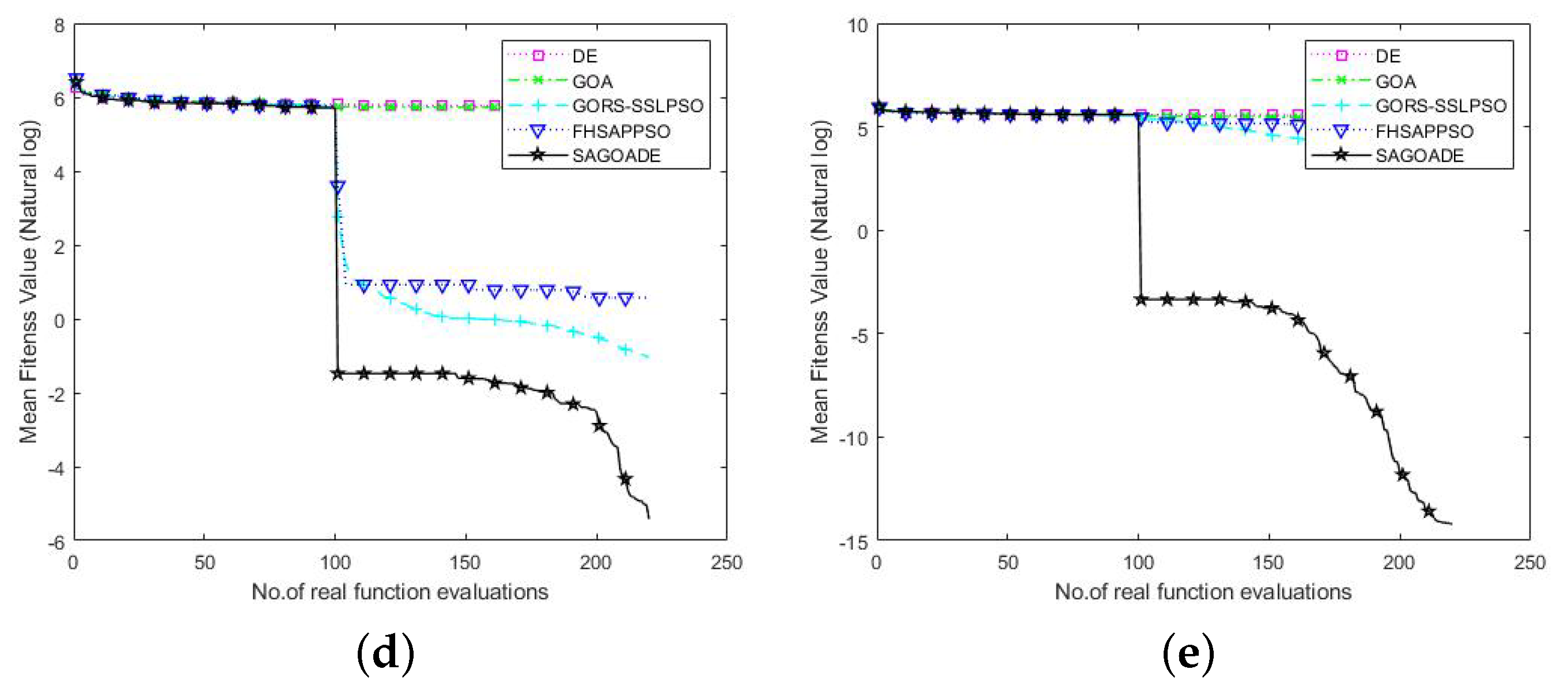

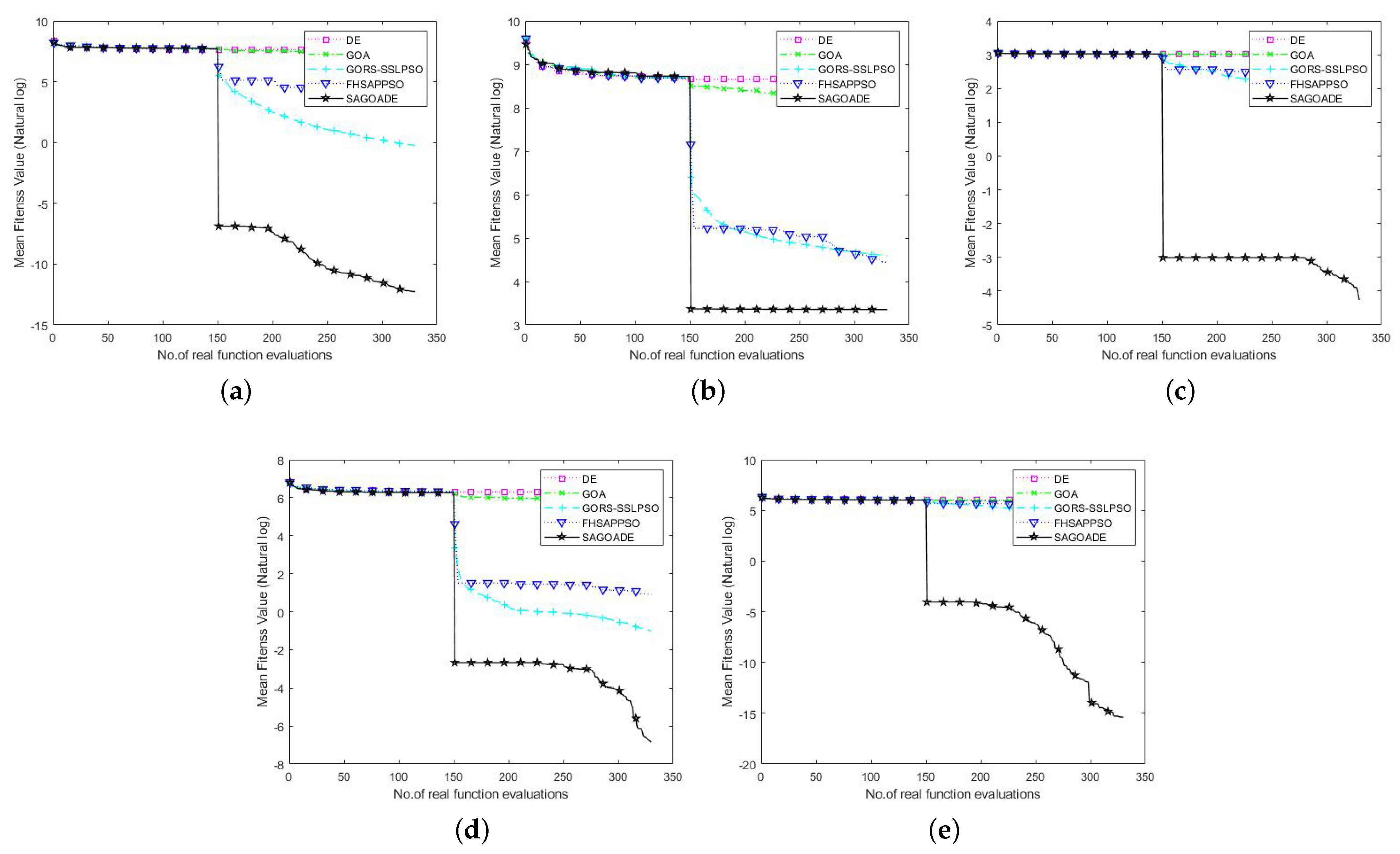

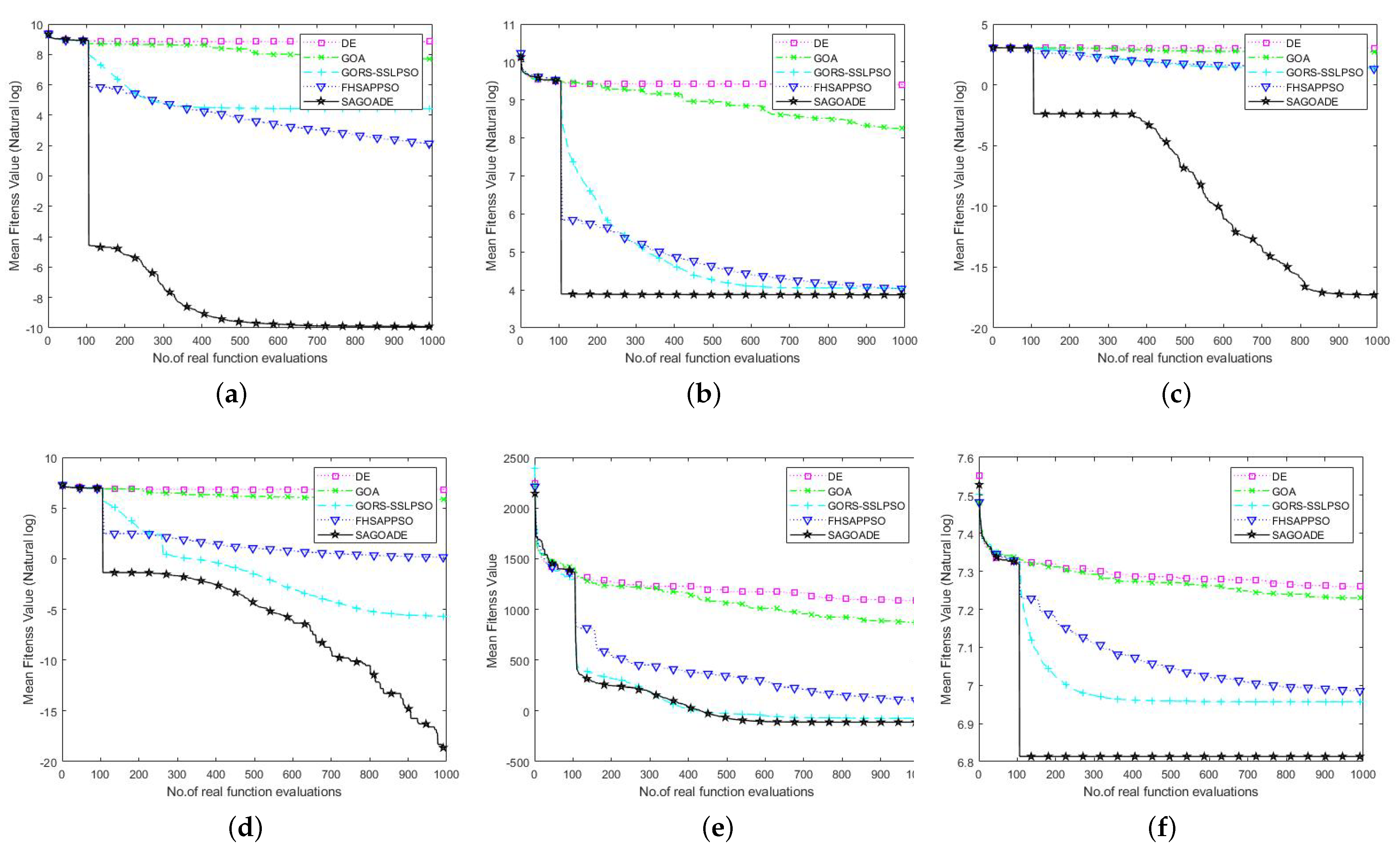

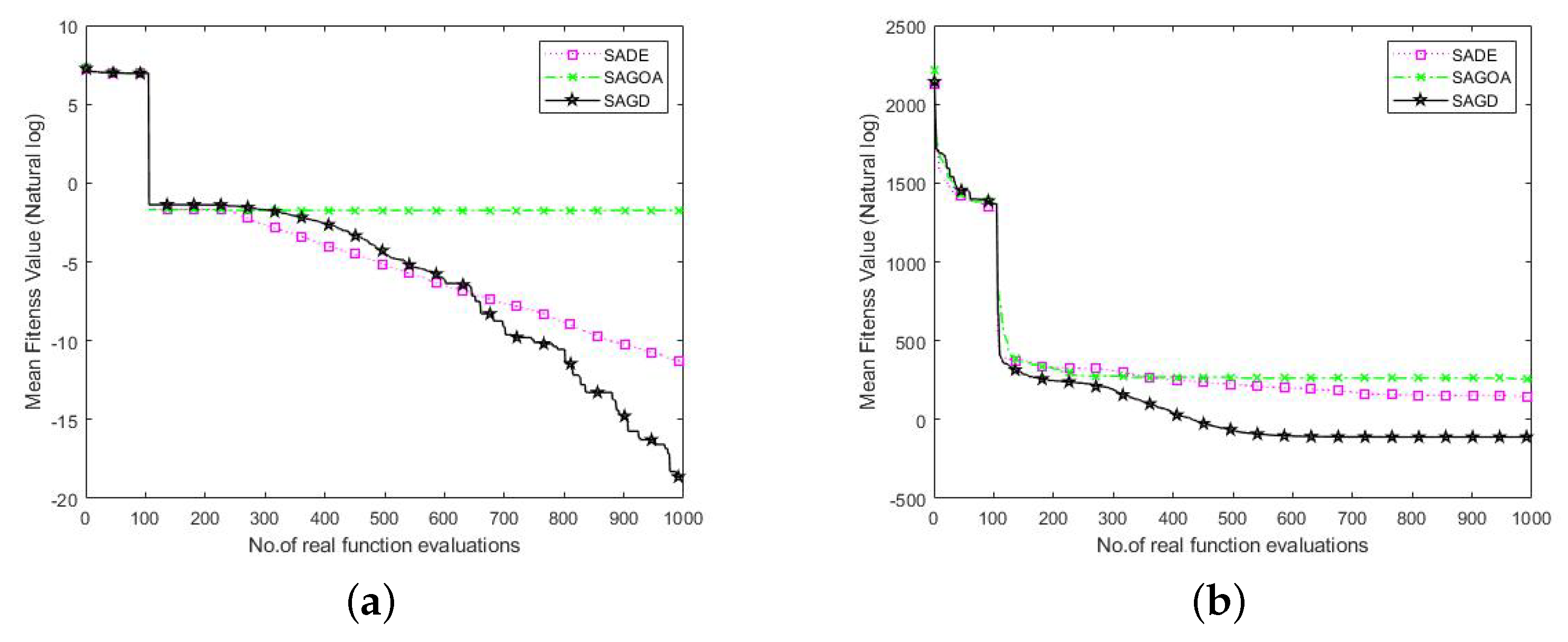

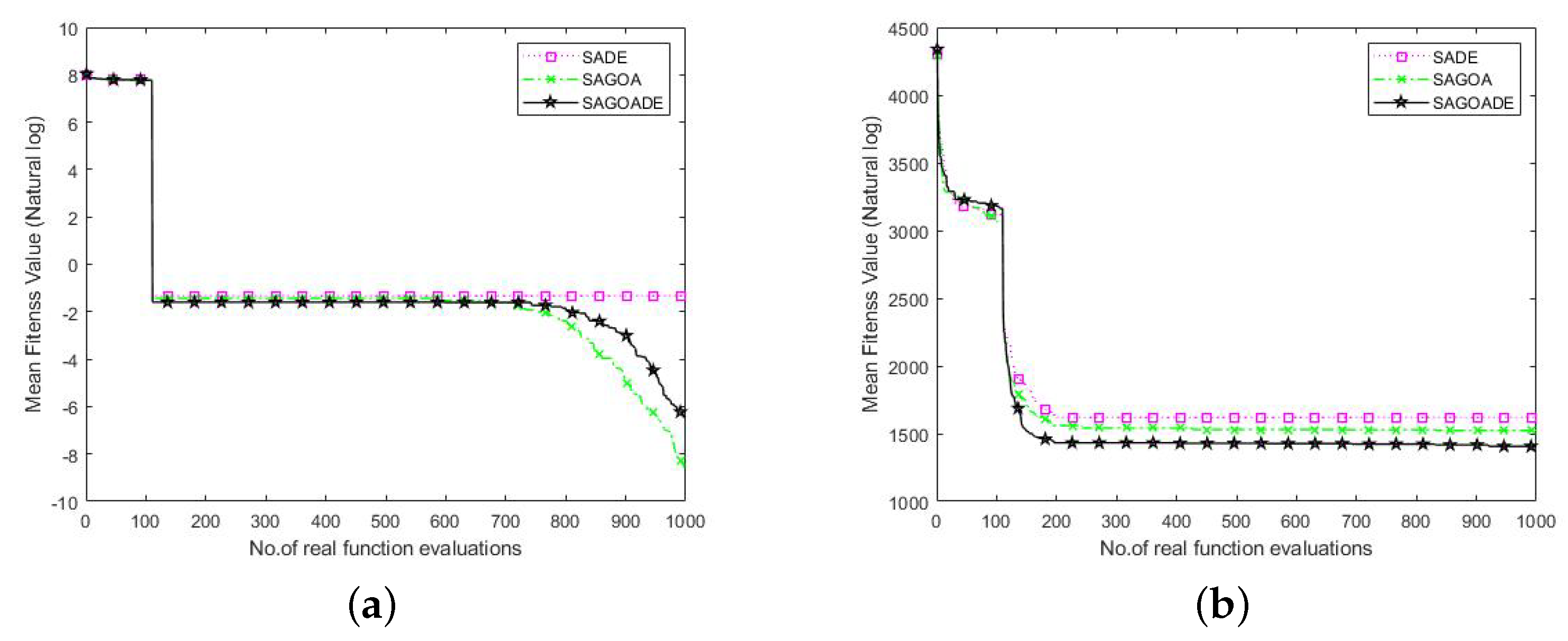

The SAGD algorithm was tested on seven benchmark functions and compared with other surrogate-assisted and meta-heuristic algorithms using statistical analysis and convergence curves. SAGD was also applied to the 3D WSN node deployment problem to improve network signal coverage.

The rest of this paper is organized as follows. Section 2 introduces the RBF surrogate model, GOA, the DE algorithm, and the 3D WSN node deployment problem. Section 3 describes the proposed surrogate-assisted hybrid meta-heuristic algorithm based on the historical surrogate model add-point strategy. Section 4 compares SAGD with other algorithms on seven benchmark functions and applies it to the 3D WSN node deployment problem. Finally, conclusions are given in Section 5.

3. Proposed SAGD Method

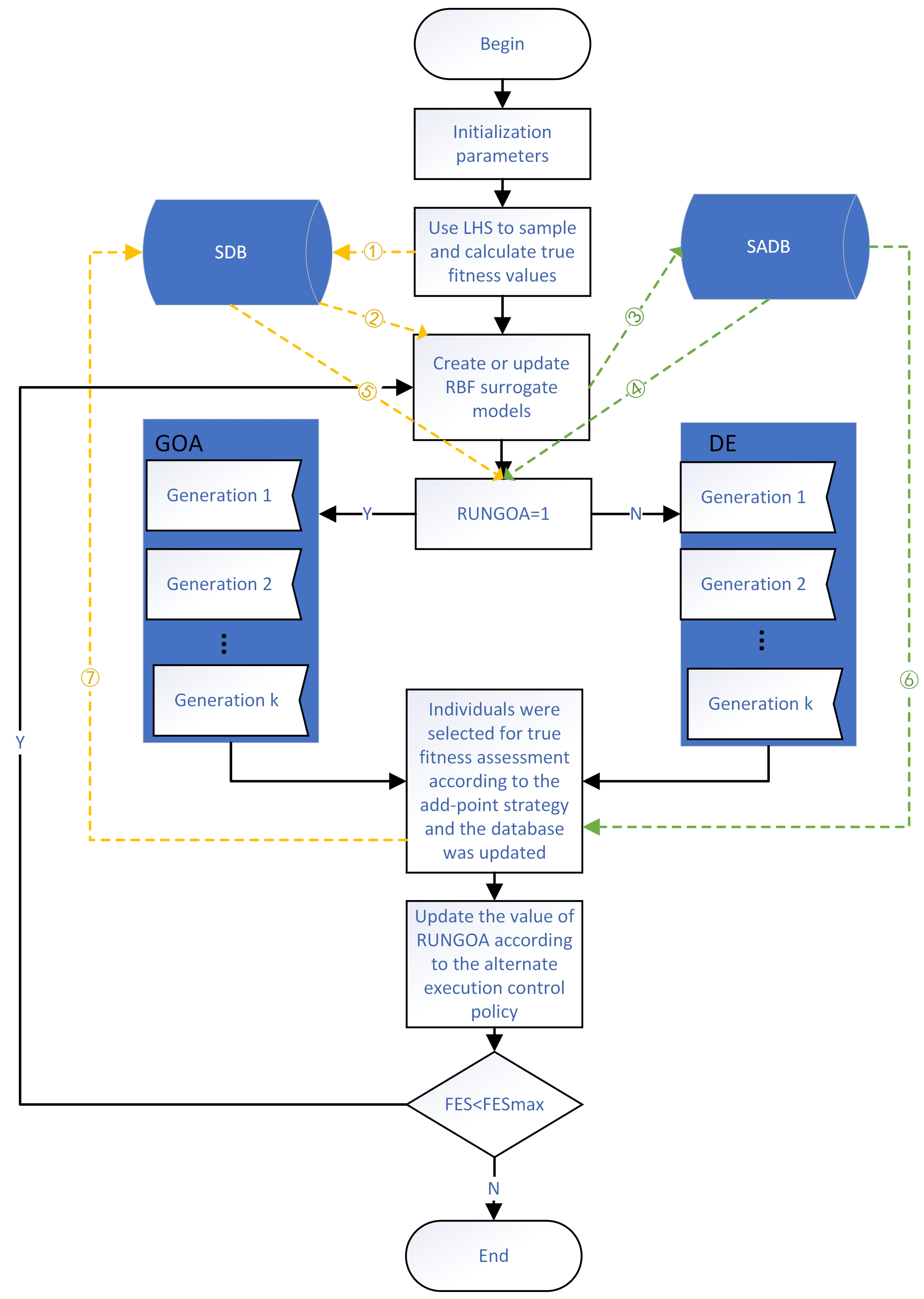

This section introduces the proposed algorithm, which consists of four main parts: population initialization, surrogate model building or updating, execution of the hybrid meta-heuristic algorithm, and execution of the add-point strategy. The general framework of SAGD is shown in Figure 2, where the sample database (SDB) stores the data samples and their true fitness values, and the surrogate model database (SADB) stores the information of all previous surrogate models. The black lines represent the algorithm flow, the green dashed lines the surrogate model data flow, and the yellow dashed lines the sample data flow.

First, in the population initialization phase, the solution space is initialized with LHS using MATLAB's lhsdesign function. The true fitness function is then used to evaluate all individuals and the mean individual (the average of all initial individuals). All individuals, the mean individual, and their fitness values are saved to the SDB (flow ➀ in Figure 2) for subsequent surrogate modeling and meta-heuristic initialization. The number of initial individuals equals the population size of the meta-heuristic algorithm. The population size ps was set to 5 × D, where D is the problem dimension, for the 10-, 20-, and 30-dimensional expensive optimization problems. For the 50- and 100-dimensional expensive optimization problems, the population size ps was set to

.
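The initialization step can be sketched as follows, assuming minimization, a plain LHS sampler (not `lhsdesign`), and an SDB stored as a list of (individual, true fitness) pairs sorted from best to worst. The helper names `lhs` and `initialize_sdb` are hypothetical, and ps = 5 × D follows the setting given above for 10 to 30 dimensions. Initialization costs ps + 1 true evaluations, because the mean individual is evaluated as well.

```python
import random


def lhs(n, d, lower, upper, rng):
    """Plain Latin hypercube sample (one point per stratum on every axis)."""
    pts = [[0.0] * d for _ in range(n)]
    for j in range(d):
        perm = list(range(n))
        rng.shuffle(perm)
        for i in range(n):
            u = (perm[i] + rng.random()) / n
            pts[i][j] = lower[j] + u * (upper[j] - lower[j])
    return pts


def initialize_sdb(true_f, d, lower, upper, rng=random):
    """Evaluate an LHS population plus its mean individual; return the sorted SDB."""
    ps = 5 * d  # population size used for D = 10, 20, 30
    pop = lhs(ps, d, lower, upper, rng)
    mean_ind = [sum(x[j] for x in pop) / ps for j in range(d)]
    sdb = [(x, true_f(x)) for x in pop + [mean_ind]]
    sdb.sort(key=lambda item: item[1])  # best (smallest) true fitness first
    return sdb
```

Keeping the SDB sorted makes "the first ps samples in the SDB", as used in Algorithm 5, simply the ps best samples.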

The second step builds or updates the surrogate model from the data in the SDB (flow ➁ in Figure 2). The predictive power of the surrogate model is critical to the whole algorithm, so the model must be built carefully. A global surrogate model can generally capture the overall contour of the problem but is difficult to apply to high-dimensional problems, so in this study the surrogate model is built from local data. A local surrogate model speeds up the search for promising regions and improves the accuracy of the surrogate model. When selecting data for the local surrogate model, both the sample selection method and the amount of data matter. Here, the neighbors of the current population in the SDB are used to build the local RBF surrogate model; the number of neighbors per individual is set to 5 × D for 10, 20, and 30 dimensions and to D for 50 and 100 dimensions, as shown in Algorithm 3. Before the local surrogate model is updated, the current model is saved to the surrogate model database SADB (flow ➂ in Figure 2); no model is saved when the surrogate model is built for the first time.

Algorithm 3 Pseudo-code for constructing a local radial basis function model.

- 1: if the local surrogate model is being updated then
- 2: keep the information of the current local surrogate model for the add-point strategy;
- 3: end if
- 4: for each individual $x_i$ in the population do
- 5: use Euclidean distance to find the n nearest neighbors of $x_i$ in the database and form the set $S_i$;
- 6: end for
- 7: merge the sets $S_i$ generated by all individuals to form the training set $T$;
- 8: construct a new local surrogate model with the training set $T$;
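Steps 4 to 7 of Algorithm 3 (gathering the local training set) can be sketched as below, assuming the SDB is a list of (point, fitness) pairs. The function name `local_training_set` is hypothetical; the resulting set would then be passed to an RBF fitting routine, with `n_neighbors` set to 5 × D or D as described above.

```python
import math


def local_training_set(population, sdb, n_neighbors):
    """Union of the n nearest SDB samples (Euclidean distance) of every individual."""
    chosen = {}
    for ind in population:
        ranked = sorted(range(len(sdb)), key=lambda k: math.dist(ind, sdb[k][0]))
        for k in ranked[:n_neighbors]:
            chosen[k] = sdb[k]  # keyed by SDB index, so shared neighbors appear once
    return [chosen[k] for k in sorted(chosen)]


sdb = [((float(i),), float(i * i)) for i in range(10)]
print(local_training_set([(0.2,), (8.9,)], sdb, 2))
# [((0.0,), 0.0), ((1.0,), 1.0), ((8.0,), 64.0), ((9.0,), 81.0)]
```

Because neighborhoods of nearby individuals overlap, the merged training set is usually much smaller than ps × n, which keeps the RBF fit cheap.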

The third step evolves the population using GOA and DE. The fitness function used here is the approximation provided by the RBF surrogate model built in the second step (flow ➃ in Figure 2). GOA has strong global search ability and performs well in high dimensions, and its position-update model helps it escape local optima; however, GOA is relatively complex. DE has good local search ability and is fast, but it mostly perturbs the best individual, so it performs poorly on high-dimensional multimodal problems and tends to fall into local optima. We therefore combine GOA and DE and run them alternately, using a control strategy for the alternation: the algorithm switches from GOA to DE (or from DE to GOA) when all individuals in the population updated by the current algorithm are already present in the SDB, or when the current algorithm fails to find a better solution.
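The alternation rule can be written as a small pure function. The sketch below is one reading of the text and of steps 30 to 38 of Algorithm 5: switch optimizer when every updated individual is already in the SDB, or when the true evaluations made by the add-point step do not improve on the best known solution. The names `next_run_goa` and `all_present`, and the tolerance used to decide that a point "is present", are assumptions.

```python
def all_present(population, sdb_points, tol=1e-12):
    """True if every individual coincides (within tol per coordinate) with an SDB point."""
    return all(
        any(max(abs(a - b) for a, b in zip(x, y)) <= tol for y in sdb_points)
        for x in population
    )


def next_run_goa(run_goa, all_in_sdb, improved):
    """Return the RunGOA flag for the next cycle of K generations.

    run_goa    -- True if GOA ran in the cycle that just finished, False if DE ran
    all_in_sdb -- every updated individual is already stored in the SDB
    improved   -- the add-point step found a better true solution
    """
    if all_in_sdb or not improved:
        return not run_goa  # stagnation: hand over to the other optimizer
    return run_goa  # progress: keep the current optimizer
```

Making the rule a pure function of three flags keeps it easy to test separately from the optimizers themselves.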

After every K generations, a generation-based optimal restart strategy is applied. Individuals are selected from the current population by the add-point strategy, and the selected individuals and their true fitness values are added to the SDB. Since the data in the database have now changed, the best ps individuals in the SDB are used as the initial population of the meta-heuristic algorithm to restart the evolution (flow ➄ in Figure 2). This generation-based optimal restart strategy prevents GOA and DE from being misled by errors in the fitted surrogate models, and using the best individuals in the SDB helps the search reach promising regions quickly. Moreover, because the local RBF model is updated only every K generations, both meta-heuristic algorithms can fully explore the approximate fitness landscape.
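The restart itself amounts to merging the newly evaluated samples into the SDB and re-seeding the population with its ps best entries. A minimal sketch, assuming minimization and an SDB of (point, fitness) pairs; `restart_population` is a hypothetical name.

```python
def restart_population(sdb, new_samples, ps):
    """Merge newly evaluated samples into the SDB and re-seed the population.

    sdb, new_samples -- lists of (point, true fitness) pairs
    Returns the updated SDB (best first) and the ps best points as the new population.
    """
    merged = sorted(sdb + new_samples, key=lambda item: item[1])  # minimization
    population = [list(point) for point, _ in merged[:ps]]
    return merged, population


sdb, pop = restart_population([((3.0,), 9.0), ((1.0,), 1.0)], [((0.5,), 0.25)], 2)
print(pop)  # [[0.5], [1.0]]
```

Copying the points into fresh lists keeps later in-place changes to the population from silently altering the stored samples.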

Because fitness evaluation is time-consuming in many complex problems, spending true fitness evaluations on unimportant or unpromising individuals is wasteful, so it is important to select appropriate individuals for evaluation. After K generations of meta-heuristic updates, the proposed add-point strategy selects individuals for true fitness evaluation. Since each surrogate model approximates the true function, historical surrogate models also contain useful information, while a new surrogate model usually predicts better than a historical one. The strategy therefore uses the information in the surrogate model database SADB for sample selection (flow ➅ in Figure 2): if an individual's fitness value on the new surrogate model is better than its value on the historical surrogate model, the individual is considered more promising.

First, the best individual in the population optimized by GOA and DE is selected; then an integer tpc is drawn at random from [1, ps], and the mean of the top tpc individuals is computed. The fitness values of the best individual and the mean individual are predicted with both the historical surrogate model and the new surrogate model. If the new model's prediction for an individual is better than the historical model's, that individual is evaluated with the true fitness function, and the individual and its true fitness value are saved to the SDB. Finally, if neither the best individual nor the mean individual is added to the SDB, that is, if the new surrogate model's predictions for both are no better than the historical model's, one individual is randomly selected from the top third of the population, its true fitness value is calculated, and the individual and its fitness value are saved to the SDB (flow ➆ in Figure 2). The pseudo-code of the add-point strategy based on the historical surrogate model is given in Algorithm 4.

Algorithm 4 Add-point strategy based on historical surrogate model information.

- 1: Historical surrogate model information has been saved in the SADB;
- 2: Obtain the best individual in the current population (best), the mean of the top tpc individuals (mean), and a random individual from the top third of the population (rand);
- 3: Predict the fitness values of best, mean, and rand with the new RBF surrogate model $\hat{f}_{\text{new}}$ and the historical RBF surrogate model $\hat{f}_{\text{his}}$, respectively;
- 4: if $\hat{f}_{\text{new}}(\text{best})$ is better than $\hat{f}_{\text{his}}(\text{best})$ then
- 5: evaluate best with the true fitness function and save it and its true fitness value to the SDB;
- 6: end if
- 7: if $\hat{f}_{\text{new}}(\text{mean})$ is better than $\hat{f}_{\text{his}}(\text{mean})$ then
- 8: evaluate mean with the true fitness function and save it and its true fitness value to the SDB;
- 9: end if
- 10: if neither best nor mean was added to the SDB then
- 11: evaluate rand with the true fitness function and save it and its true fitness value to the SDB;
- 12: end if
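A Python sketch of Algorithm 4 follows. It assumes minimization (so "better" means a smaller predicted value), a population already sorted from best to worst by the new surrogate, and surrogate models passed in as plain callables; `add_points` and its parameter names are hypothetical. It returns the one or two (individual, true fitness) pairs it appended to the SDB.

```python
import random


def add_points(population, f_new, f_hist, true_f, sdb, rng=random):
    """Add-point step based on historical surrogate information (minimization).

    population    -- individuals sorted from best to worst by the new surrogate
    f_new, f_hist -- predictions of the new and the historical surrogate model
    """
    ps, d = len(population), len(population[0])
    best = population[0]
    tpc = rng.randint(1, ps)  # random integer in [1, ps], inclusive
    mean = [sum(x[j] for x in population[:tpc]) / tpc for j in range(d)]
    added = []
    for cand in (best, mean):
        if f_new(cand) < f_hist(cand):  # new model predicts a better value
            added.append((list(cand), true_f(cand)))  # spend one true evaluation
    if not added:  # neither best nor mean qualified: fall back to a random top-third one
        rand_ind = rng.choice(population[: max(1, ps // 3)])
        added.append((list(rand_ind), true_f(rand_ind)))
    sdb.extend(added)
    return added
```

The fallback guarantees that every call spends at least one true evaluation, so the evaluation counter always advances when this step runs.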

Note that this add-point strategy adds one or two sample points per iteration. Of the steps above, population initialization and the first surrogate model construction are run only once; surrogate model updating, hybrid meta-heuristic optimization, and the add-point strategy are then executed iteratively. The process stops when the number of true fitness evaluations reaches the specified maximum. We define that

when

, and

when

. Algorithm 5 gives the pseudo-code of the proposed SAGD algorithm.

Algorithm 5 Pseudo-code for the SAGD algorithm.

- Input: = 1; = ps + 1; = 0; = 30; : random values between 0 and 1;
- Output: Optimal solution and its fitness value;
- 1: Initialize the solution space by Latin hypercube sampling;
- 2: Use the true fitness function to calculate the fitness values of all individuals and the mean individual;
- 3: while do
- 4: Initialize the population with the first ps samples in the SDB;
- 5: if then
- 6: Save the information of the current local surrogate model and construct the local surrogate model using Algorithm 3;
- 7: else
- 8: Put the population, the mean individual, and their true fitness values into the database;
- 9: end if
- 10: while do
- 11: if then
- 12: for do
- 13: if then
- 14: Execute Equation (7);
- 15: else
- 16: Execute Equation (16);
- 17: end if
- 18: Use the local RBF surrogate model to estimate the fitness values of the evolved and original individuals, and retain the individuals with better fitness values;
- 19: end for
- 20: gen = gen + 1;
- 21: else
- 22: for do
- 23: Generate a mutant individual by Equation (22);
- 24: Generate a trial individual by Equation (25);
- 25: Use the local RBF surrogate model to estimate the fitness values of the evolved and original individuals, and retain the individuals with better fitness values;
- 26: end for
- 27: gen = gen + 1;
- 28: end if
- 29: end while
- 30: if all individuals in the population updated by the meta-heuristic algorithm are already present in the SDB then
- 31: Invert the value of RunGOA;
- 32: else select individuals with the add-point strategy of Algorithm 4, calculate their true fitness values, and update the SDB;
- 33: if the meta-heuristic algorithm did not find a better solution then
- 34: Invert the value of RunGOA;
- 35: else
- 36: Retain the value of RunGOA;
- 37: end if
- 38: end if
- 39: end while
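The control flow of Algorithm 5 can be summarized in a compact skeleton. Everything problem-specific is a caller-supplied hook with a hypothetical signature: `build_model` stands in for Algorithm 3, `evolve` for one generation of GOA or DE (Equations (7), (16), (22), and (25) are not reproduced here), and `add_points` for Algorithm 4. The `demo` function plugs in deliberately simple stand-ins (the true function used as a "perfect" surrogate, and Gaussian perturbations instead of GOA and DE) purely to show the loop running; it is not the method of this paper. On the first pass there is no historical model, so the sketch passes the new model in its place, which is an assumption.

```python
import random


def sagd_loop(init_sdb, build_model, evolve, add_points, max_fes, ps, k_gens):
    """Control-flow sketch of Algorithm 5 (minimization); returns the best (x, f)."""
    sdb = sorted(init_sdb, key=lambda item: item[1])
    fes = len(sdb)  # the initial samples were all truly evaluated
    run_goa, old_model = True, None
    while fes < max_fes:
        pop = [list(x) for x, _ in sdb[:ps]]  # optimal restart from the best ps samples
        new_model = build_model(pop, sdb)
        for _ in range(k_gens):  # K generations on the surrogate only
            pop = evolve(pop, new_model, run_goa)
        pop.sort(key=new_model)
        known = {tuple(x) for x, _ in sdb}
        if all(tuple(x) in known for x in pop):
            run_goa = not run_goa  # nothing new to evaluate: switch optimizer
        else:
            best_before = sdb[0][1]
            added = add_points(pop, new_model, old_model or new_model)
            fes += len(added)
            sdb = sorted(sdb + added, key=lambda item: item[1])
            if sdb[0][1] >= best_before:
                run_goa = not run_goa  # no better true solution: switch optimizer
        old_model = new_model
    return sdb[0]


def demo(seed=0, d=2, ps=10, max_fes=60, k_gens=5):
    """Run the skeleton with toy stand-ins on the sphere function."""
    rng = random.Random(seed)

    def sphere(x):
        return sum(v * v for v in x)

    init = []
    for _ in range(ps + 1):
        x = [rng.uniform(-5.0, 5.0) for _ in range(d)]
        init.append((x, sphere(x)))

    def build_model(pop, sdb):
        return sphere  # toy "perfect surrogate"; a real run would fit a local RBF

    def evolve(pop, model, use_goa):
        step = 1.0 if use_goa else 0.1  # toy stand-ins for the GOA / DE moves
        out = []
        for x in pop:
            y = [v + rng.gauss(0.0, step) for v in x]
            out.append(y if model(y) < model(x) else x)  # greedy selection on the model
        return out

    def add_points(pop, new_model, old_model):
        return [(pop[0], sphere(pop[0]))]  # toy: evaluate the surrogate-best only

    start = min(f for _, f in init)
    _, best_f = sagd_loop(init, build_model, evolve, add_points, max_fes, ps, k_gens)
    return start, best_f
```

As in Algorithm 5, a cycle in which every evolved individual is already in the SDB spends no true evaluation and only switches the optimizer; a production version would want a safeguard against cycling without progress. The budget check is made before each add-point step, so a step that adds two points can exceed the maximum by one evaluation.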