Abstract

Inspired by the bamboo growth process, Chu et al. proposed the Bamboo Forest Growth Optimization (BFGO) algorithm, which incorporates bamboo whip extension and bamboo shoot growth into the optimization process and performs well on classical engineering problems. However, the standard BFGO operates on continuous values and is therefore not applicable to binary optimization problems, whose variables can only take 0 or 1. This paper proposes the first binary version of BFGO, called BBFGO. By analyzing the search space of BFGO under binary conditions, new-curvature V-shaped and Taper-shaped transfer functions for converting continuous values into binary values are proposed for the first time. A long-mutation strategy with a new mutation approach is presented to address algorithmic stagnation. Binary BFGO and the long-mutation strategy with the new mutation approach are tested on 23 benchmark test functions. The experimental results show that binary BFGO achieves better results in terms of optimal values and convergence speed, and that the mutation strategy can significantly enhance the algorithm’s performance. In terms of application, 12 data sets from the UCI machine learning repository are selected for feature selection, and the results are compared with the transfer functions used by BGWO-a, BPSO-TVMS and BQUATRE, demonstrating the potential of binary BFGO to explore the attribute space and choose the most significant features for classification problems.

1. Introduction

With the rapid growth of the computer industry, the volume and variety of collected data have increased sharply, as have their dimensionality and sample size, and managing these data is becoming increasingly difficult. In the early stages of computing, data sets were managed manually, but as the number of features increased, this approach became impractical [1,2]. With further developments, data mining and machine learning techniques emerged, along with practical applications such as statistical analysis, neural networks and pattern recognition [3,4,5,6]. However, the collected data are often accompanied by high noise levels, mainly caused by the immaturity of the collection technology and the provenance of the data themselves. Extracting useful content and patterns from such large and noisy data is an extremely challenging task [7].

Feature selection (FS) is an effective method for reducing dimensionality and removing noisy and unreliable data. The aim is to remove unnecessary features from the whole feature set and finally obtain a representative subset [8]. FS is very important and essential for data scientists and machine learning practitioners. A good feature-selection method can simplify models, improve learning accuracy, reduce runtime and help understand the underlying structure of the data, which can significantly influence further improved models and algorithms. A high-quality sample is key to training a classifier. The performance of a classifier is directly influenced by the presence of redundant or irrelevant features in the sample [9].

A realistic data set usually contains many features, not all of which are useful for classification. Redundant and irrelevant features can reduce classification accuracy. As the dimensionality of the data rises and the search space expands, selecting the best subset of features becomes increasingly challenging. In general, exhaustive enumeration is impractical for finding the optimal feature subset, so a search strategy is needed; the popular strategies are global search, heuristic search and random search [10,11,12]. Although existing search techniques have achieved good results in feature selection, there is still a high probability of slipping into a local optimum. Therefore, to solve the feature-selection problem more effectively, a direct and effective search strategy is needed.

Heuristic optimization algorithms are a common way to solve optimization problems. They offer strong search capability and high search speed on NP problems and can obtain a good solution in polynomial time [13,14]. They are applied to feature selection by converting continuous optimization algorithms into binary versions using transfer functions (tfs).

Heuristic algorithms are inspired by nature, social behavior or the behavior of groups of organisms [15]. They put forward feasible solutions to optimization problems by imitating natural phenomena and biological behaviors, but the quality of the solutions varies widely. The original heuristics suffered from the following problems: they rely too heavily on information about the organization of the algorithm, have low applicability and can easily slip into a local optimum. With the development of heuristics, meta-heuristics have emerged, which add the idea of random search and are more general than traditional heuristics. Although meta-heuristic algorithms improve on the original heuristic algorithms, neither is guaranteed to obtain a globally optimal solution; owing to the random search component, however, repeated executions may converge to a globally optimal solution. Meta-heuristic algorithms fall into four main categories according to their source of inspiration: evolution-based, group intelligence-based, human-based, and physics and chemistry-based algorithms [16]. The main inspiration for evolution-based algorithms comes from the evolutionary law of survival of the fittest (Darwin’s law).

Genetic algorithms (GA) [17,18,19], differential evolution (DE) [20,21,22] and quasi-affine transformation evolution (QUATRE) [23,24,25] are the main representatives. Group intelligence-based algorithms simulate the collective intelligence of a population to approach a globally optimal solution. Each group in the algorithm represents a population of organisms that, through the cooperative behaviour of its members, can accomplish tasks that are impossible for individuals. Examples include Particle Swarm Optimization (PSO) [26], Cat Swarm Optimization (CSO) [27], Fish Migration Optimization (FMO) [28], Whale Optimization Algorithm (WOA) [29,30,31], etc. Human behavior, including teaching, social, learning, emotional and managerial behavior, is a major source of inspiration for human-based algorithms. Examples include teaching and learning-based optimization (TLBO) [32], social learning optimization (SLO) [33], social-based algorithms (SBA) [34], etc. Physical rules and chemical reactions in the universe inspire physics and chemistry-based algorithms. Examples include simulated annealing (SA) [35], gravitational local search (GLSA) [36], etc.

Nonetheless, discrete problems such as feature selection and shop floor scheduling are common among optimization problems. Continuous optimization algorithms are not suitable for solving such problems directly, so they need to be converted into discrete versions. So far, scholars have proposed many binary algorithms for feature selection, and many have also improved existing binary algorithms and achieved better results. For example, the classical PSO, Grey Wolf Optimizer (GWO) and pigeon-inspired optimization (PIO) algorithms have been successfully applied to feature selection. Hu et al. improved BGWO by introducing a new transfer function to replace the S-shaped function; a new parametric equation and an improved transfer function were then proposed to improve the quality of the solution [37]. Tian et al. analysed BPIO, introduced four new transfer functions along with an improved velocity update equation, and successfully implemented feature selection with better results [38]. Liu et al. devised an improved multi-swarm PSO (MSPSO) to solve the feature-selection problem while combining SVM with F-score methods to improve generalization [39]. However, many metaheuristics have been redesigned without considering the problem of slipping into local optima.

Bamboo Forest Growth Optimization (BFGO) is a meta-heuristic algorithm inspired by the bamboo growth process, recently proposed by Chu et al. It has been applied to wireless sensor networks (WSNs), where it was effective in reducing energy consumption and improving network performance [40]. This research aims to propose a binary version of BFGO for discrete optimization problems such as FS. The algorithm is converted to a binary version using transfer functions, the better-known ones being the S-type and V-type transfer function families [41]. A novel type of transfer function is also introduced: the Taper-shaped transfer function [42]. The 23 benchmark functions are used to test and compare the performance of the different types of transfer functions. The evaluation of the Binary Bamboo Forest Growth Optimization (BBFGO) algorithm against cutting-edge, sophisticated and efficient algorithms shows that the proposed BBFGO obtains optimal or sub-optimal solutions when searching for optimal values. The main contributions are as follows.

- The first binary bamboo forest growth optimization algorithm (BBFGO) is proposed.

- The search space of binary BFGO is analysed mathematically for the first time. Based on the results of this analysis, the V-shaped transfer function is stretched in two ways, yielding two new-curvature V-shaped transfer functions for binary BFGO, which are verified to perform better on the test functions.

- The long-mutation strategy is introduced to the original BBFGO to avoid solution stagnation, and a new mutation approach is proposed.

- BBFGO and BBFGO with the new mutation method are compared with advanced algorithms on the test functions, confirming that the long-mutation strategy with the new mutation method improves the performance of BBFGO. With the new mutation strategy, BBFGO overtakes the advanced algorithms in cases where it previously trailed them.

- BBFGO is applied to feature selection and compared with cutting-edge algorithms; it achieves good classification accuracy on both low- and high-dimensional data and is particularly competitive on high-dimensional data sets.

The paper is organized as follows. Section 2 introduces bamboo forest growth optimization. Section 3 presents a concrete implementation of binary bamboo forest growth optimization based on mathematical analysis, proposes three classes of transfer functions (BBFGO-S, BBFGO-V and BBFGO-T) and introduces a novel mutation approach to prevent the optimization process from stalling. Section 4 shows the experimental results of the BBFGO transfer function families compared to BPSO-TVMS, BGWO-a and BQUATRE, as well as the performance improvement brought by ABBFGO. In Section 5, the ABBFGO-S, ABBFGO-V and ABBFGO-T algorithms are used for feature selection and compared with the three advanced algorithms from Section 4.

2. Bamboo Forest Growth Optimization

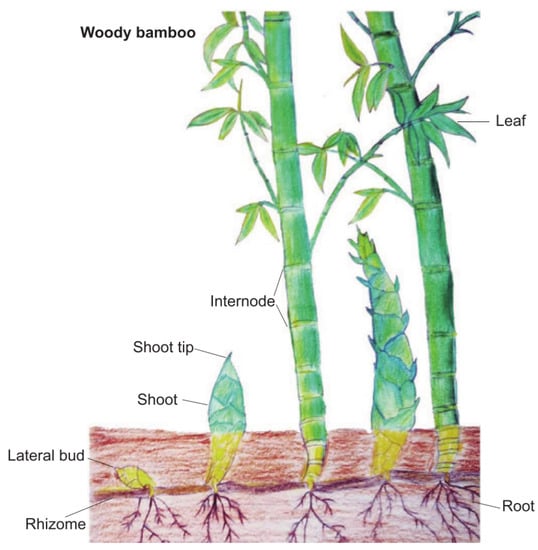

FS is an effective method for dimensionality reduction that is widely used and plays an important role in machine learning and pattern recognition. By reducing the dimensionality of the data set, the computational speed of the model is improved. This section focuses on bamboo forest growth optimization. Bamboo is a herbaceous plant and one of the world’s fastest-growing plants. It has underground rhizomes, also known as bamboo whips, which grow horizontally and produce roots, called whip roots, on the nodes. Each node has a bud that has the opportunity to grow into a new whip or a bamboo shoot. The new bamboo whip continues to spread underground, while the bamboo shoot breaks through the soil and develops into a bamboo pole, and a bamboo forest gradually forms. Figure 1 shows the specific structure of the bamboo. The bamboo whip plays an important role in the overall growth of bamboo forests: it expands the living area of the bamboo and provides nutrients for its growth. According to Guihua Jin [43], bamboo has unique growth characteristics compared to other grasses because its tall stems grow rapidly within 2–3 months with a slow-fast-slow growth rhythm. This trait may help bamboo adapt to the environment and outcompete other plants. The growth of a bamboo forest can be divided into two stages: (a) bamboo whip extension; (b) bamboo shoot growth. In addition, a bamboo forest can contain more than one bamboo whip, but a bamboo whip can belong to only one bamboo forest.

Figure 1.

The structure of bamboo.

Recently, Chu and Feng et al. proposed a novel optimization method inspired by the growth behavior of bamboo forests: bamboo forest growth optimization (BFGO). It views the global extension of the bamboo whip as the development phase of the algorithm and the growth of bamboo shoots as the exploration phase of the algorithm, where the shoots emerge through the soil to become bamboo shoots, and the emerged bamboo shoots have only a small probability of growing into bamboo.

1. Extension of the Bamboo Whip

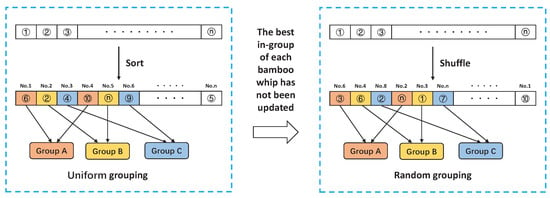

Based on the relationship between bamboo forest and bamboo whip, the concept of clustering is added to the algorithm. During optimization, individuals are divided into meme groups, and the grouping is adjusted dynamically between uniform grouping and random grouping. Uniform grouping is based on individual fitness: all individuals in the initial population are sorted from high to low by fitness function value to form a sequence, and the sequence is then divided evenly into multiple meme groups. Random grouping is used when the best individual of the bamboo whip in every group has stopped improving; the individuals are then broken up and reassigned to groups at random. The idea of meme grouping is shown in Figure 2.
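The two grouping modes can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, and dealing the sorted individuals round-robin into groups is an assumption, since the text only states that the sorted sequence is divided evenly.

```python
import numpy as np

def uniform_grouping(fitness, n_groups):
    """Sort individuals by fitness (high to low) and deal them
    round-robin into n_groups (the dealing scheme is an assumption)."""
    order = np.argsort(-np.asarray(fitness, dtype=float), kind="stable")
    return [order[g::n_groups] for g in range(n_groups)]

def random_grouping(n_individuals, n_groups, rng):
    """Shuffle all individuals and split them into near-equal groups."""
    return np.array_split(rng.permutation(n_individuals), n_groups)

fitness = [0.2, 0.9, 0.5, 0.7, 0.1, 0.4]
groups = uniform_grouping(fitness, n_groups=2)
regrouped = random_grouping(len(fitness), n_groups=2, rng=np.random.default_rng(0))
```

In a full run, `random_grouping` would only be called once every group's best individual has stalled, as described above.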

Figure 2.

The idea of meme grouping.

The direction of the extension of the bamboo whip underground is influenced by three factors: group cognition, bamboo whips memory and bamboo forest centre, which correspond to global optimal, intra-group optimal and bamboo forest centre, respectively. The formula for the centre position is shown in Equation (1). The formula for the extension direction is shown in Equations (2)–(4).

where represents the ith bamboo shoot position on the k bamboo whip, is the current bamboo shoot position, is the global optimal bamboo shoot position, is the optimal bamboo shoot position on the k bamboo whip and is the central position of the bamboo forest. Moreover, , and represent the degree of extension of the current bamboo shoot position to , and , respectively. The formula for the update is shown in Equation (5).

where Q is a crucial parameter impacting the step size of the algorithm development and steadily reduces from 2 to 0 as the number of iterations grows, t is the current iteration, and T indicates the maximum number of iterations; is a random number from 0 to 1. Taking a random number and comparing it with 0.4 and 0.8 to determine the direction of extension of the next generation of solutions ensures the diversity of solutions and enhances the algorithm’s ability to find the best.
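As a rough sketch of the two mechanisms described above, the step factor and the choice of extension direction might look like this. Both functions are hypothetical: the linear decay of Q is an assumption (the text only says that Q decreases steadily from 2 to 0), and the mapping of the three intervals to the global best, the intra-group best and the centre follows the order in which the three factors are listed, which is also an assumption.

```python
def step_factor(t, T):
    """Assumed linear decay of Q from 2 (t = 0) to 0 (t = T)."""
    return 2.0 * (1.0 - t / T)

def choose_guide(r, global_best, group_best, center):
    """Pick the extension target by comparing a random number r
    with the thresholds 0.4 and 0.8 (interval-to-guide mapping assumed)."""
    if r < 0.4:
        return global_best
    if r < 0.8:
        return group_best
    return center
```

With these thresholds, the first two directions are each chosen with probability 0.4 and the third with probability 0.2.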

2. Shoot Growth of the Bamboo

The growth of trees is inevitably affected by many random factors. Throughout the growth process, these factors have larger or smaller effects, both individually and in combination. At present, it is not possible to determine all of them accurately, and even if they could be measured, the relationships between them are also random. Therefore, when describing tree growth, the tree-measuring factors are generally regarded as random variables, and the growth process is described as a random process. Owing to the interference of random factors and differing site conditions, the cumulative growth of different bamboo plants at a specific time t varies randomly, which shows that the growth process of bamboo is random. Based on the characteristics of bamboo shoot growth, Shi et al. constructed a stochastic process model of bamboo shoot growth using stochastic process theory and the Sloboda growth equation [44]. The growth process has two stages: the slow growth stage and the fast growth stage. Based on this model, the growth of the bamboo shoot stage is given by Equation (7).

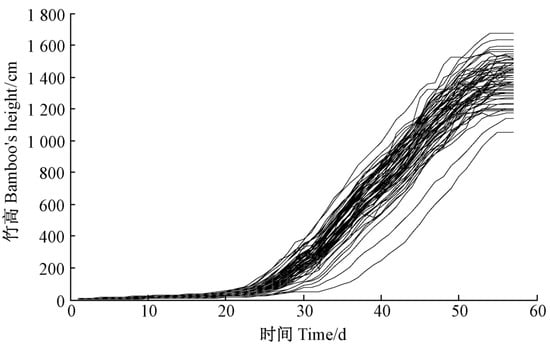

The shape of the bamboo growth increment model is shown in Figure 3. The height growth of the bamboo shoot stage is completed in about 55 days and can be divided into two stages around the 25th day: days 1–25 form the first stage, in which growth is relatively gentle, and days 25–55 form the second stage, in which the bamboo shows explosive growth. Moreover, represents the maximum bamboo height in a particular growth environment, varying with the growth environment; b is the bamboo measurement factor, a random variable; and is the shape parameter of the model, independent of the environmental conditions.

Figure 3.

Growth curve of bamboo shoots.

According to the incremental calculation of changes at different times, the calculation equation is shown in Equation (8). In the multi-dimensional case, the result of Equation (8) is a vector.

where represents the relationship between the increment between the two generations and and , the denominator represents the distance between and , and indicates the cumulative length of the bamboo growth within the t-th generation.

The individual renewal of bamboo shoots at this stage is shown in Equations (9) and (10). In the multi-dimensional case, the result of Equation (10) is a vector.

where represents the ratio relationship between and the distance between and , which varies to a large extent in the early stages of exploration and stabilizes or even remains constant in the later stages of exploration as converges extremely closely to and . This results in a more extensive exploration in the early stages and a slow growth towards convergence in the later stages.

In Equation (9), ‘+’ means the distance increases and ‘−’ means the distance decreases. Expanding the search range in this way increases the capacity to search for the optimal solution and balances global exploration and local exploitation. The pseudocode of BFGO is shown in Algorithm 1.

Algorithm 1. Pseudocode of BFGO

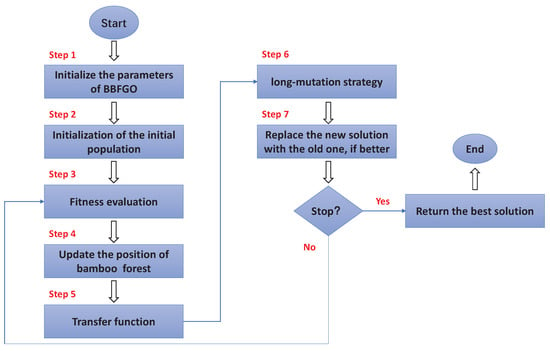

3. Binary Bamboo Forest Growth Optimization Algorithms

In bamboo forest growth optimization, bamboo constantly changes its position in space. In some special problems, such as feature selection, the solution is limited to the binary values 0 and 1, which motivates a binary version of the algorithm, BBFGO. BFGO is a novel population-based algorithm that updates positional information under the guidance of the global optimal direction, the intra-group optimal direction and the central direction. BFGO incorporates the idea of clustering to achieve cooperation and competition between multiple groups and has a stronger optimum-seeking capability than other meta-heuristics. The mechanism for converting continuous BFGO to binary BFGO is explained in Section 3.1. The advanced binary BFGO framework with integrated long-mutations is presented in Section 3.2.

3.1. Binary Bamboo Forest Growth Optimization (BBFGO)

The standard bamboo forest growth optimization algorithm has continuous solutions and can update positions without constraints. For feature selection, however, the search space needs to be set up as a hypercube, which means that each element of a solution must be restricted to 0 or 1. The graphical interpretation of BBFGO is shown in Figure 4.

Figure 4.

The graphical interpretation of BBFGO.

3.1.1. Mathematical Analysis

Under the constraints of the binary condition, the positions of the bamboo whips and shoots cannot be moved arbitrarily in space, so it is necessary to consider the position structures that belong only to the binary BFGO. For the sake of a simple description of the mathematical model, only the one-dimensional case is considered after analyzing the range of values of the individual parameters. From Equation (6), Q ∈ (0, 2), and from Equations (1)–(3), , and are all ∈ (0, 1), and is a random number between 0 and 1, so ∈ (0, 1).

In the bamboo whip extension stage, the globally optimal extension direction in Equation (4) is first analysed. Since and only take 0 or 1, there are four occurrences of calculating the next generation position, and the value of is calculated as follows.

(1) if = 0 and = 0

= + Q × ( × − ) × = 0

(2) if = 0 and = 1

= + Q × ( × − ) × = Q × (−1) ×

since Q ∈ (0, 2), ∈ (0, 1), then = (−Q × ) ∈ (−2, 0).

(3) if = 1 and = 0

= + Q × ( × − ) × = 1 + (Q × × )

since Q ∈ (0, 2), ∈ (0, 1), ∈ (0, 1), then = 1 + (Q × × ) ∈ (1, 3).

(4) if = 1 and = 1

= + Q × ( × − ) × = 1 + (Q × ( − 1) × )

since Q ∈ (0, 2), ∈ (0, 1), ∈ (0, 1), then = 1 + (Q × ( − 1) × ) ∈ (−1, 1).
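For readability, the four cases above can be restated in explicit notation. The symbol names here are assumptions introduced for illustration, since they are not legible in the text: x_t is the current position, x_G the global optimal position, r a random number in (0, 1) and cos α the degree of extension towards x_G. The form of the update is inferred from the right-hand sides of cases (2) to (4).

```latex
% Assumed notation: x_t (current), x_G (global best), r in (0,1), cos(alpha) in (0,1), Q in (0,2)
x_{t+1} = x_G + Q\,(r\,x_G - x_t)\cos\alpha
\begin{cases}
= 0, & x_G = 0,\ x_t = 0,\\
= -Q\cos\alpha \in (-2,\,0), & x_G = 0,\ x_t = 1,\\
= 1 + Q\,r\cos\alpha \in (1,\,3), & x_G = 1,\ x_t = 0,\\
= 1 + Q\,(r-1)\cos\alpha \in (-1,\,1), & x_G = 1,\ x_t = 1.
\end{cases}
```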

From the above analysis, it can be obtained that ∈ (−2, 3). Similarly, when calculating the optimal extension direction within the group, as the constituent elements of the solution are only 0 or 1, then the analysis method is the same as above, and ∈ (−2, 3) is obtained. When calculating the central extension direction, the formula for C(k) is given by Equation (1), where is composed of 0 or 1, then the final result of C(k) ∈ (0, 1), which is brought into the analysis process, the final result is ∈ (−2, 3). In summary, it can be concluded that ∈ (−2, 3).
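The interval (−2, 3) can also be checked numerically. The sketch below is illustrative only: `whip_step` is a hypothetical helper that encodes the one-dimensional update in the form implied by the four cases (guide + Q × (r × guide − x) × cos), with all parameters drawn uniformly from the ranges stated in the analysis.

```python
import numpy as np

def whip_step(guide, x, q, r, cos_term):
    """Assumed one-dimensional whip update: guide + Q * (r * guide - x) * cos."""
    return guide + q * (r * guide - x) * cos_term

rng = np.random.default_rng(0)
n = 200_000
q = rng.uniform(0.0, 2.0, n)         # step factor Q
r = rng.uniform(0.0, 1.0, n)         # random coefficient
cos_term = rng.uniform(0.0, 1.0, n)  # degree of extension
x = rng.integers(0, 2, n)            # current binary position

binary_guide = rng.integers(0, 2, n)     # global or intra-group best (0 or 1)
center_guide = rng.uniform(0.0, 1.0, n)  # centre of binary positions, in [0, 1)

next_binary = whip_step(binary_guide, x, q, r, cos_term)
next_center = whip_step(center_guide, x, q, r, cos_term)
print(next_binary.min(), next_binary.max())
print(next_center.min(), next_center.max())
```

Under these assumptions, every sampled value stays strictly inside (−2, 3) for both binary and centre guides, in line with the conclusion of the analysis.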

To avoid the search agent missing better solutions due to too large a step, the step size of the exploration in the bamboo shoot growth phase is therefore limited. The accumulation at a specific moment is restricted in Equation (7) to the interval [0, 1].

Since takes values only 0 and 1 and ∈ [0, 1] and ∈ (0, 1), in the one-dimensional case there are two occurrences and the value of is calculated as follows:

(1) if = 0

= ± × = 0 ± ×

since ∈ [0, 1], ∈ (0, 1), then ∈ (−1, 1).

(2) if = 1

= ± × = 1 ± ×

since ∈ [0, 1], ∈ (0, 1), then ∈ (−2, 2).

Through the analysis of the two stages, it can be concluded that ∈ (−2, 3) and ∈ (−2, 2). The final results will be used for further discussion of the transfer function.

3.1.2. BBFGO with Transfer Functions

In BFGO, the solutions represented by the bamboo whip and the bamboo shoot are both continuous values. FS, however, is a binary optimization problem, so the continuous solution needs to be converted to a discrete one. The transfer function is one of the dominant methods for solving this type of problem. A transfer function maps a continuous value to a probability in the interval [0, 1], and the element is then updated to the binary value 0 or 1 according to that probability.

In this study, seven tfs are used for this conversion task. Of these seven tfs, two belong to the S-shaped, three to the V-shaped and two to the Taper-shaped classes. The key job of these tfs is to determine the probability of updating the value of an element to 1 or 0. In FS, each element of the solution is either 0 or 1. The pseudocode of BBFGO is shown in Algorithm 2. The three main classes of tfs are described as follows:

S-shaped transfer function (S-tf):

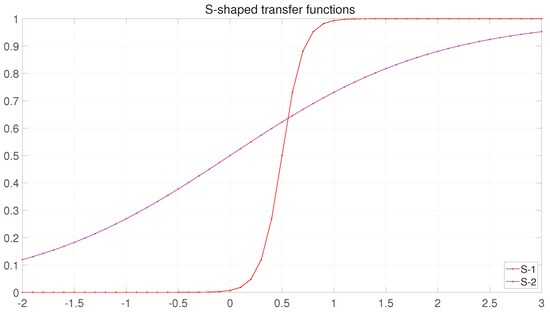

The curves of the original sigmoid function and another variant are shown in Figure 5. The transfer vector (S) is calculated with the S-shaped transfer function using Equations (11)–(13).

Figure 5.

The curves of S-shaped transfer functions.

After obtaining by Equation (5), it is updated through Equations (11)–(13). Similarly, , which is obtained from Equation (9), is updated by Equations (11)–(13).

where is the element of the dth dimension in the ith solution and is the probability value of the S-tf based on the mapping of the element of the dth dimension in the ith solution. Whether the final element takes 0 or 1 is determined by Equation (13), with rand being a random value between 0 and 1.
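A minimal sketch of S-shaped binarization is given below. It assumes the standard logistic sigmoid for the original function and the usual rule that an element becomes 1 when a uniform random number falls below the mapped probability; the variant S-tf is not reproduced here because its formula is not recoverable from the text. The function names are hypothetical.

```python
import numpy as np

def sigmoid_tf(x):
    """Standard logistic sigmoid (assumed form of the original S-tf)."""
    return 1.0 / (1.0 + np.exp(-np.asarray(x, dtype=float)))

def binarize(prob, rng):
    """Set each element to 1 if a uniform random number is below its probability."""
    return (rng.random(prob.shape) < prob).astype(int)

rng = np.random.default_rng(0)
continuous = np.array([-2.0, -0.5, 0.0, 1.0, 3.0])  # values inside [-2, 3]
prob = sigmoid_tf(continuous)
bits = binarize(prob, rng)
```

Because the sigmoid is monotonic, larger continuous values always map to a higher probability of selecting the feature.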

V-shaped transfer function (V-tf):

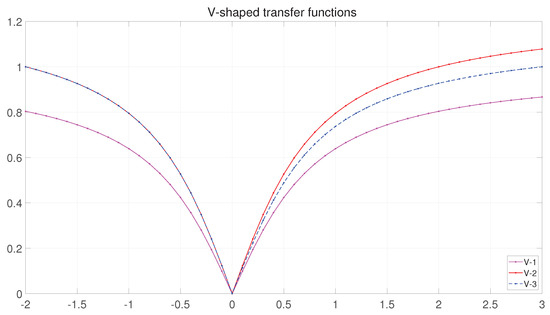

The curves of the original V-tf and the two variants are shown in Figure 6. The transfer vector (V) is calculated with the V-shaped transfer function using Equations (14)–(16):

Figure 6.

The curves of V-shaped transfer functions.

From curve V1 in Figure 6, it can be seen that in the binary BFGO the transfer function takes the values 0.8038 and 0.8669 at the bounds −2 and 3 of the interval [−2, 3], respectively. Even when the search agent reaches a bound of the search space, there is therefore still a probability of 0.1962 or 0.1331, respectively, that 1 is not reached. This contradicts the original aim that a large value of the search agent should yield a large probability of becoming 1. To resolve this, the transfer function is stretched. Since the interval is not symmetric, two approaches to stretching are taken.

where is the probability value of the V-tf based on the mapping of the element of the dth dimension in the ith solution. The elements take the value of 0 or 1 as determined by Equation (17).
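The two boundary values quoted for V1 match the common arctangent V-shaped function |(2/π)·arctan((π/2)·x)|; this identification is inferred from the numbers and is not stated explicitly in the text. The sketch below reproduces the values and adds one hypothetical way of stretching the curve so that both bounds map to 1. The stretch shown is an illustration only and is not claimed to be either of the two stretched functions proposed in the paper.

```python
import math

def v1(x):
    """Arctangent V-shaped transfer function (form inferred from the quoted values)."""
    return abs((2.0 / math.pi) * math.atan((math.pi / 2.0) * x))

def v1_stretched(x, lower=-2.0, upper=3.0):
    """Hypothetical stretch: normalise by V1 at the bound on the same side of 0."""
    bound = lower if x < 0 else upper
    return min(1.0, v1(x) / v1(bound))

print(round(v1(-2.0), 4), round(v1(3.0), 4))           # 0.8038 0.8669
print(round(1 - v1(-2.0), 4), round(1 - v1(3.0), 4))   # 0.1962 0.1331
```

With the normalisation above, `v1_stretched(-2.0)` and `v1_stretched(3.0)` both equal 1, which removes the residual probability at the bounds.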

Taper-shaped transfer function(T-tf):

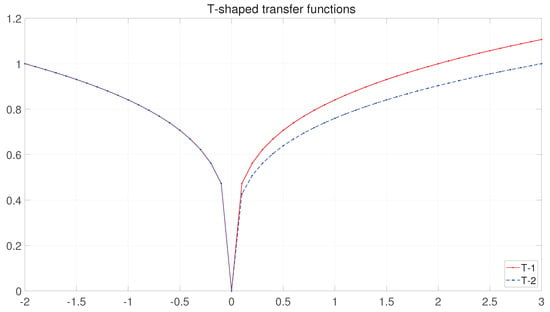

The T-tf is a novel transfer function: an elementary function constructed from a power function. Its general formula is shown in Equation (18).

where A is a positive real number and n can determine the curvature of the function.

Since the function curve resembles the taper’s tip, it is called the Taper-shaped transfer function. The T-tf has a beneficial effect on the execution time of the elemental discretization process compared to the S-tf consisting of an exponential function and the V-tf consisting of a trigonometric or inverse trigonometric function.

The search space of the T-tf is determined by A in Equation (18); however, the analysis in Section 3.1.1 shows that the search space of the binary BFGO is the non-symmetric interval [−2, 3]. Therefore, two Taper-shaped transfer functions (T-tfs) with different curvatures are proposed. The curves are shown in Figure 7. The transfer vector (T) is calculated with the Taper-shaped transfer function using Equations (19) and (20):

where is the probability value of the T-tf based on the mapping of the element of the dth dimension in the ith solution. The elements take the value of 0 or 1 as determined by Equation (21).
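As an illustration of the role of A and n, the sketch below assumes the general taper form |x|^(1/n) / A^(1/n) on [−A, A]. This form is an assumption, since Equation (18) is not legible here, and the sketch does not attempt to reproduce how Equations (19) and (20) handle the non-symmetric interval [−2, 3]. The function name is hypothetical.

```python
def taper_tf(x, A, n):
    """Assumed general taper form: (|x| / A) ** (1 / n), clipped to [0, 1].

    A > 0 sets the half-width of the search interval; n controls the curvature.
    """
    clipped = min(abs(x), A)
    return (clipped / A) ** (1.0 / n)

# Curvature comparison on a symmetric interval with A = 3 (illustrative values).
linear = taper_tf(1.5, A=3.0, n=1)
curved = taper_tf(1.5, A=3.0, n=2)
```

Only a power and a division are needed per element, which is consistent with the remark that the T-tf is cheaper to evaluate than exponential or trigonometric transfer functions.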

Algorithm 2. Pseudocode of BBFGO

Figure 7.

The curves of Taper-shaped transfer functions.

3.2. Advanced Binary Bamboo Forest Growth Optimization (ABBFGO)

When a solution is good enough, it attracts other search agents, which quickly converge towards its position. However, there are many local optima in the optimization process. Once BBFGO is stuck in a local trap, all search agents exploit only a narrow region, the diversity of the group is lost, and the best solution is not updated for some time. To break out of the local trap, advanced binary BFGO (ABBFGO) uses a long-mutation strategy to keep the algorithm from stagnating.

Long-mutations, similar to mutation in genetic algorithms, are added to improve the global search. Mutation is an important component of evolutionary algorithms because it prevents populations from losing diversity and ensures that a wide search space is covered. The long-mutation differs from the short-mutation in that the short-mutation randomly selects one dimension of the solution to mutate, whereas the long-mutation mutates every dimension of the solution.

In this paper, a new mutation strategy is proposed, which uses elites to change the position of the bamboo. The elites are divided into historical elites and contemporary elites. The historical elites consist of the best solutions within the groups over the history of the run: whenever the algorithm updates the global optimum, the best solutions within the groups are saved to the historical elite pool. The contemporary elites consist of the global optimum and the best solutions within the groups in the most recent iteration. When the number of iterations exceeds 10 and the global optimum does not change within three generations, the long-mutation strategy is used to try to escape the trap.
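The trigger condition can be sketched as a small predicate. This is one possible reading of the rule, with hypothetical names: "does not change within three generations" is interpreted as the recorded global best value being identical across the last three generation-to-generation transitions.

```python
def should_long_mutate(iteration, best_history, min_iter=10, patience=3):
    """Return True when the run is past min_iter iterations and the global
    best value has been unchanged for `patience` consecutive generations."""
    if iteration <= min_iter or len(best_history) < patience + 1:
        return False
    recent = best_history[-(patience + 1):]
    return all(value == recent[0] for value in recent)

# best_history holds the global best fitness recorded after each iteration.
stalled = should_long_mutate(11, [5.0, 3.0, 3.0, 3.0, 3.0])
improving = should_long_mutate(20, [5.0, 4.0, 3.0, 3.0, 3.0])
```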

In feature selection, 1 indicates that the feature is used and 0 indicates that the feature is not used. Feature selection aims to achieve high accuracy in classification while selecting as few features as possible. So the solution consists of either 0 or 1. The pseudocode of ABBFGO is shown in Algorithm 3. The long-mutation process considers the influence of contemporary and historical elites on mutation, so each elite type produces one solution, and the following strategy is used when mutating each dimension.

where indicates the element in the d-dimension of the long-mutation generating solution. is the rate at which the feature is not selected in the d-dimension of the historical elite pool.

where indicates the element in the d-dimension of the long-mutation generating solution. , is two randomly selected elements in dimension d of the contemporary elite pool whose values are either 0 or 1.
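For the historical elites, one plausible reading of the rule is sketched below. It is an assumption, since the mutation equation itself is not legible here: each dimension of the generated solution is set to 0 with probability equal to the rate at which that feature is not selected in the historical elite pool, and to 1 otherwise. The contemporary-elite rule is not sketched because the text does not say how the two randomly selected elements are combined. The function name is hypothetical.

```python
import numpy as np

def historical_long_mutation(elite_pool, rng):
    """Generate one binary solution by mutating every dimension (assumed rule):
    dimension d becomes 0 with probability p_d, the fraction of historical
    elites that do not select feature d, and 1 otherwise."""
    pool = np.asarray(elite_pool)
    p_not_selected = 1.0 - pool.mean(axis=0)
    return (rng.random(pool.shape[1]) >= p_not_selected).astype(int)

# Rows are elite solutions, columns are features (illustrative data).
pool = [[0, 1, 1, 0],
        [0, 1, 0, 1],
        [0, 1, 1, 1]]
mutant = historical_long_mutation(pool, np.random.default_rng(0))
```

Under this reading, a feature that no elite selects is always dropped and a feature that every elite selects is always kept, while the remaining dimensions are resampled.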

Algorithm 3. Pseudocode of ABBFGO

4. Experimental Results and Analysis

This section describes the simulation experiments for BBFGO. The main purpose is to reveal the effects of the various transfer functions and stretched transfer functions on the performance of binary BFGO using 23 benchmark test functions. Table 1 gives the basic information on these 23 benchmark functions, which comprise single-peak functions (1–7), multi-peak functions (8–13) and fixed-dimensional functions (14–23). In Table 1, opt is the minimum value that the test function can reach in theory, parameter space is the search space of the search agent and Dim is the dimension of the function.

Table 1.

The details of the benchmark functions.

To validate the results, BBFGO is compared with the original V-tf, the V-tf after two different ways of stretching and the T-tf. Table 2 shows details of the comparison methods. Table 3 lists the recommended parameter settings of the optimization algorithms used in the experiments. Each algorithm has a population size of 30, the maximum number of iterations is 500, and each experiment is run 30 times.

Table 2.

The details of the comparison methods.

Table 3.

Parameter settings of the algorithms used for comparison in the current study.

4.1. Experimental Analysis of the Transfer Functions and ABBFGO

Table 4 shows the results of the algorithms using the different transfer function families and of ABBFGO. The 23 benchmark test functions can reach the minimum values shown in the Opt column of Table 1 in a continuous solution space. However, when testing the BBFGO family of algorithms, the solution space is a hypercube, meaning that all solutions consist of the binary values 0 and 1, so the optimum values achievable under these conditions are not the same. The experimental data in Table 4 are marked in red if an algorithm achieves the minimum under the binary condition or performs best on the test function. Experimental data where ABBFGO is more effective than BBFGO are marked in blue.

Table 4.

The statistical results of the BBFGO* family algorithms.

The single-peak test functions, which have no local traps, are used to test the convergence performance of the different algorithms. An algorithm that performs well on the single-peak test functions has strong convergence and exploitation ability. The first seven functions in Table 4 show that the traditional sigmoid transfer function performs worst in terms of convergence capability. In , , and , all except BBFGO reach the theoretical minimum in the binary condition. For , BBFGO-S and BBFGO-V1 reach the theoretical minimum, and the two stretched functions V2 and V3 do not outperform V1 in terms of optimal values, but this difference is not significant. In , no algorithm reaches the theoretical optimum, and all remain some distance away from it. BBFGO obtains the worst result at 124.4, while V2 and V3 perform better than V1, and T1 is closest to the optimum at 1.9333. In , the best result is obtained by the stretched V2, which shows that stretching the transfer function to the interval [0, 1] helps to improve the convergence and exploitation capability of BBFGO-V.

As the multi-peak functions have many local optima, they can be used to test the ability of different algorithms to jump out of local traps. For these test functions, BBFGO performs the worst, and the other algorithms achieve the theoretical minima in , , and . This indicates that BBFGO* is better at jumping out of local traps. For and , it can be seen that the stretched V2 and V3 outperform V1, which shows that stretching V-tf also decreases the likelihood of the binary BFGO slipping into a local optimum. BBFGO* performs very well in all the fixed-dimensional functions, except for T2, which does not find the theoretical minimum in . This illustrates two things: first, the excellent performance of BBFGO*; second, that there is a limit to how much the transfer function can improve BBFGO performance, especially in functions with fewer local minima or lower dimensionality.

ABBFGO beats BBFGO in 12 out of 23 test functions, which are concentrated among the single-peak and multi-peak test functions. The long-mutation method using elite learning can help change the search space when the algorithm becomes stuck. ABBFGO outperforms BBFGO significantly, demonstrating the effectiveness of the new mutation method.

4.2. Experimental Results for Cutting-Edge Algorithms

Binary QUATRE (BQUATRE) is a novel binary algorithm inspired by matrix iteration; it is a binary version of QUATRE that can be used to solve binary application problems [46]. The Binary Grey Wolf Optimizer (BGWO) extends the GWO algorithm to binary optimization problems. In BGWO-a, a new a parameter is used to control the values of A and D, and thereby the balance between global and local search, and a new transfer function is used to improve the quality of the solution [37]. BPSO-TVMS introduces a new time-varying mirrored S-shaped transfer function to enhance global exploration and local exploitation in the algorithm [45]. In Table 5, BBFGO and ABBFGO are compared with these novel improved algorithms, where red font indicates that both BBFGO and ABBFGO were defeated, green font indicates that BBFGO was defeated but ABBFGO was not, and blue font indicates that both BBFGO and ABBFGO won.

Table 5.

The statistical results of BQUATRE, BGWO-a and BPSO-TVMS.

As can be seen in Table 5, in the single-peak test functions all the entries are green except for , which means that BBFGO, which performed poorly, reverses the outcome under the long-mutation strategy, further demonstrating the effectiveness of the strategy. The effect of the new mutation in the multi-peak test functions is also clear, with only the function failing to beat BQUATRE. BBFGO and ABBFGO outperform BPSO-TVMS in and . The advantage of ABBFGO is that, if the algorithm falls into a local trap during the update iterations, it learns in the direction of the overall elite to jump out of that trap and search for a better value. Although this strategy helps BBFGO improve its capacity to escape from local optima, there is a limit to the improvement. For example, in and , it does not help BBFGO to reach the theoretical minimum. This also illustrates, from another angle, the importance of the transfer function, and it is the reason why this paper focuses on both the transfer function and the strategy at the same time. All of the above algorithms perform well in the fixed-dimensional test functions, with only BGWO-a performing poorly in and .

5. Application to Feature Selection

Dealing with enormous data sets is extremely challenging because of the abundance of noise and unnecessary attributes in data mining. Since it is not known in advance which features are relevant to a learning algorithm, it is essential to pick, from the full set of features, the pertinent ones that the learning algorithm will find useful. As a result, the number of features in the data set must be reduced. The majority of studies focus on techniques that achieve high accuracy with few features. This section uses the wrapper approach to feature selection.

5.1. Datasets Description

The data sets were taken from the UCI machine learning repository [47]. Table 6 lists their main characteristics: the number of features, the number of instances and the number of classes. The selected data sets were categorized by dimensionality as low-dimensional or high-dimensional; they vary in the number of features and instances and can serve as a sample of the many kinds of problems tested. Details of the more novel data sets are as follows. The Turkish Music Emotion data set is designed as a discrete model with four classes: happy, sad, angry and relaxed. The LSVT Voice Rehabilitation data set includes standard perturbation analysis methods, wavelet-based features, fundamental frequency-based features and tools used to mine nonlinear time series. The Musk (Version 1) data set describes a set of 92 molecules, of which 47 are judged by human experts to be musks and the remaining 45 to be non-musks; the goal is to learn to predict whether new molecules will be musks or non-musks. The Dermatology data set contains 34 attributes, 33 of which are linear-valued and one of which is nominal; the diseases in this group are psoriasis, seborrheic dermatitis, lichen planus, pityriasis rosea, chronic dermatitis and pityriasis rubra pilaris. Instances in the Ionosphere data set are described by two attributes per pulse number, corresponding to the complex values returned by the function resulting from the complex electromagnetic signal.

Table 6.

The details of the testing data sets.

5.2. Simulation Results

Several cutting-edge algorithms are chosen for comparison tests with the proposed binary BFGO algorithms to confirm their performance in various dimensions and to examine the application in depth. By examining several outcomes of the feature-selection experiments, such as the accuracy, the number of features selected and the fitness function values for specific evaluation criteria, it is possible to compare the merits of BBFGO* with those of other algorithms.

5.2.1. KNN and K-Fold Validation

The K-Nearest Neighbor (KNN) algorithm is a fundamental and straightforward machine learning method that classifies data by calculating the distance between the feature values of instances. In KNN classification, the class of an input instance is decided from the training instances closest to it: the category of an object’s neighbours determines its classification. The category given to the object is the most common category among its K nearest neighbours (K is a positive integer, typically small). The calculation method is shown in Equation (23).
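
Assuming Equation (23) is the standard Minkowski distance, which is consistent with the description of l that follows, it can be sketched as below. The symbol y for the second instance, the feature index i and the feature count n are notational assumptions and may differ from the authors' notation:

```latex
d_l(x, y) = \left( \sum_{i=1}^{n} \lvert x_i - y_i \rvert^{l} \right)^{1/l}
```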

where l takes one of two values: l = 1 gives the Manhattan distance and l = 2 gives the Euclidean distance. x and its counterpart are the two input instances for which the distance is calculated.

In machine learning modelling, K-fold cross validation is used to reduce the probability of overfitting without adjusting the model parameters on the test data. The original data set is randomly divided into K parts. One of the K parts is utilized as test data, while the remaining K-1 parts are used as training data. The experiment is run K times, so that each part serves once as test data, and in the end the average value of the K experimental outcomes is calculated.
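
The combination of KNN and K-fold cross validation described above can be sketched as follows. This is a minimal standard-library illustration under stated assumptions, not the authors' implementation: the function names, the seeded shuffle and the round-robin fold assignment are choices made for this example, and the data set is assumed to contain at least as many instances as folds.

```python
import random
from collections import Counter

def minkowski(a, b, l=2):
    """Minkowski distance: l = 1 is Manhattan, l = 2 is Euclidean."""
    return sum(abs(p - q) ** l for p, q in zip(a, b)) ** (1.0 / l)

def knn_predict(train_x, train_y, query, k=5, l=2):
    """Return the most common label among the k nearest training instances."""
    order = sorted(range(len(train_x)),
                   key=lambda i: minkowski(train_x[i], query, l))
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]

def k_fold_error(xs, ys, n_folds=10, k=5, seed=0):
    """Average KNN classification error over n_folds cross-validation folds."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)                   # random split of the data
    folds = [idx[f::n_folds] for f in range(n_folds)]  # round-robin assignment
    errors = []
    for test_idx in folds:
        held_out = set(test_idx)
        train_idx = [i for i in idx if i not in held_out]
        train_x = [xs[i] for i in train_idx]
        train_y = [ys[i] for i in train_idx]
        wrong = sum(knn_predict(train_x, train_y, xs[i], k) != ys[i]
                    for i in test_idx)
        errors.append(wrong / len(test_idx))
    return sum(errors) / n_folds

if __name__ == "__main__":
    # Two well-separated synthetic clusters, used only to exercise the code.
    xs = [(i * 0.01, 0.0) for i in range(20)] + [(10 + i * 0.01, 10.0) for i in range(20)]
    ys = [0] * 20 + [1] * 20
    print(k_fold_error(xs, ys, n_folds=10, k=5))
```

In a wrapper setting, the instances passed in would first be restricted to the features selected by a candidate binary solution, and the returned error would feed the evaluation criterion.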

5.2.2. Evaluation Criteria

There are many metrics for judging the merits of an algorithm in feature selection, for example, classification accuracy and the number of selected features. However, if only classification accuracy is chosen as an evaluation metric, there is no guarantee that the number of selected features is small, so neither factor can be considered alone. A suitable evaluation metric needs to be constructed to balance these factors. In the simulations, the following criterion was used for evaluation.
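
Assuming the criterion has the weighted-sum form commonly used in wrapper feature selection, which matches the terms described next, it can be sketched as follows. The symbols Err, α and β are placeholders introduced here and may differ from the authors' notation:

```latex
\mathrm{Fitness} = \alpha \cdot \mathrm{Err} + \beta \cdot \frac{\mathrm{NoSF}}{\mathrm{NoAF}}, \qquad \alpha = 0.99, \quad \beta = 0.01
```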

where the error term is the classification error after completing cross-validation, NoSF is the number of selected features after feature selection and NoAF is the total number of features in the data set. The two weighting coefficients balance the classification accuracy against the number of selected features and are set to 0.99 and 0.01, respectively.
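
As a worked illustration of how the two weights trade accuracy against subset size, the sketch below assumes a weighted sum of the classification error and the ratio NoSF/NoAF with weights 0.99 and 0.01. The function and parameter names are hypothetical, and the exact form of the authors' equation is an assumption:

```python
def fitness(error_rate, n_selected, n_total, alpha=0.99, beta=0.01):
    """Weighted sum of classification error and selected-feature ratio (lower is better)."""
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    return alpha * error_rate + beta * (n_selected / n_total)

def fitness_from_mask(mask, error_rate, alpha=0.99, beta=0.01):
    """Same criterion, with the subset given as a binary mask (1 = feature kept)."""
    return fitness(error_rate, sum(mask), len(mask), alpha, beta)

if __name__ == "__main__":
    # Two candidate subsets with the same error: the smaller subset scores lower.
    print(fitness_from_mask([1, 1, 1, 1, 0, 0, 0, 0, 0, 0], error_rate=0.10))
    print(fitness_from_mask([1, 1, 1, 1, 1, 1, 1, 1, 0, 0], error_rate=0.10))
```

Because the error weight dominates, a subset with a lower error rate is preferred in most cases even if it keeps more features; the feature ratio mainly breaks ties between subsets of similar accuracy.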

5.2.3. Result Analysis

In the analysis of the results of the binary BFGO algorithm, ABBFGO, ABBFGO-S, ABBFGO-V2, ABBFGO-V3, ABBFGO-T1, ABBFGO-T2, BPSO-TVMS, BQUATRE and BGWO-a were compared. Each algorithm was run 15 times on each selected data set, with 100 iterations per run. The population size is 30. The value of K in K-fold cross validation is 10. The K value in KNN is 5, that is, the error rates corresponding to individuals were calculated using the five-nearest-neighbour approach.

As can be seen from Table 7, in terms of classification accuracy, ABBFGO and ABBFGO-V3 each beat all other algorithms once, ABBFGO-V2 beat all other algorithms six times, and ABBFGO-T2 beat all other algorithms four times. ABBFGO-V2 also selects feature subsets of a good size and meets the feature-selection requirements. In Table 8, ABBFGO-V2 has an overall ranking of 20. Collectively, ABBFGO-V2 performs best, with the best classification accuracy and feature subsets. A comparison of the classification accuracy of the advanced binary BFGO algorithm family (ABBFGO*) with that of BPSO-TVMS, BQUATRE and BGWO-a shows that the difference between the two groups is not significant on some low-dimensional data sets, with the former only slightly ahead of the latter; e.g., ABBFGO, the best performer on Cancer, is 0.0017 ahead of BGWO-a. This may be partly because there are only two categories in Cancer, or because the small dimensionality of the data set prevents the transfer function and the mutation strategy from bringing out the better performance of binary BFGO. Glass has the same number of features as Cancer but six categories, resulting in a difference in accuracy of 0.0138 between ABBFGO-T1 and BPSO-TVMS. The difference is more pronounced on the high-dimensional data sets, where ABBFGO* is superior: the best algorithm, ABBFGO-V2, is 0.0516 ahead of BGWO-a on Turkish Music Emotion, and the differences are also more pronounced on the Musk (Version 1), Dnatest, LSVT Voice Rehabilitation and Sonar data sets. For the taper-shaped transfer function, it can be seen in Table 7 that T2, with its lower curvature, has more advantages than T1 on the high-dimensional data sets of Turkish Music Emotion, Musk (Version 1), Dnatest and LSVT Voice Rehabilitation.

From Table 9, the P-value of the Friedman test is greater than 5% in all 12 data sets, so it can be concluded that there is no significant difference between the algorithms, and the data are considered plausible. Based on the above analysis, it is believed that the transfer function and the long-mutation strategy with the new mutation mode give the binary BFGO stronger performance, which makes it more competitive than other advanced algorithms on high-dimensional, multi-class data sets.
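
For readers unfamiliar with the Friedman test, the sketch below computes average ranks and the Friedman chi-square statistic for a score table in which each row is one block (for example, one run or one data set) and each column is one algorithm, with lower scores being better. How the rows were formed for Table 9 is not specified here, so the layout is an assumption; the statistic is given without tie correction, and the P-value, which follows from a chi-square distribution with k - 1 degrees of freedom, is not computed.

```python
def average_ranks(scores):
    """Mean rank of each algorithm over all rows; tied scores share the average rank."""
    n_alg = len(scores[0])
    totals = [0.0] * n_alg
    for row in scores:
        order = sorted(range(n_alg), key=lambda a: row[a])
        i = 0
        while i < n_alg:
            j = i
            # Extend j over the run of entries tied with position i.
            while j + 1 < n_alg and row[order[j + 1]] == row[order[i]]:
                j += 1
            shared_rank = (i + j) / 2.0 + 1.0
            for t in range(i, j + 1):
                totals[order[t]] += shared_rank
            i = j + 1
    return [t / len(scores) for t in totals]

def friedman_statistic(scores):
    """Friedman chi-square statistic (no tie correction) for n rows and k algorithms."""
    n, k = len(scores), len(scores[0])
    mean_ranks = average_ranks(scores)
    return 12.0 * n / (k * (k + 1)) * sum(r * r for r in mean_ranks) - 3.0 * n * (k + 1)

if __name__ == "__main__":
    # Hypothetical fitness values: 4 blocks (rows) by 3 algorithms (columns).
    table = [
        [0.10, 0.12, 0.15],
        [0.08, 0.09, 0.11],
        [0.20, 0.18, 0.25],
        [0.05, 0.07, 0.06],
    ]
    print(average_ranks(table))
    print(friedman_statistic(table))
```

A small statistic, and hence a large P-value, means the rank differences between the algorithms are compatible with chance.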

Table 7.

The accuracy and number of the compared algorithms.

Table 8.

The fitness of the compared algorithms.

Table 9.

The result of Friedman test on feature selection.

6. Conclusions

The bamboo forest growth optimization algorithm, inspired by the growth process of bamboo forests, successfully solves many optimization problems. This paper focuses on the analysis of transfer functions (tfs) and mutation strategies. Based on the analysed search space and the characteristics of the transfer functions, two families with different curvatures, V-tfs and T-tfs, are proposed. To avoid the stagnation of the binary BFGO algorithm, the long-mutation strategy with a novel mutation approach is introduced. The newly constructed tfs and the new mutation strategy are tested on 23 benchmark test functions. The experiments show that the newly constructed transfer functions perform better and that the binary BFGO with the long-mutation strategy and the novel mutation has a significant advantage in solving the optimization problems. Feature selection is an important optimization problem, and ABBFGO, ABBFGO-S, ABBFGO-V2, ABBFGO-V3, ABBFGO-T1 and ABBFGO-T2 are selected to perform feature selection and are compared with three cutting-edge algorithms, BPSO-TVMS, BQUATRE and BGWO-a. The experiments show that the transfer functions combined with the long-mutation strategy and the new mutation method give the binary BFGO stronger performance, and the extent of this improvement is particularly striking on high-dimensional data sets. As this is the first application of BFGO to the discrete domain, much about the capabilities of BFGO has yet to be explored, and it has more room for development.

Author Contributions

Conceptualization, L.Y.; methodology, P.H.; validation, B.Y.; formal analysis, H.Y.; investigation, L.Y.; resources, S.-C.C.; data curation, P.H.; writing—original draft, L.Y.; writing—review and editing, J.-S.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data involved in this study are all public data, which can be found here: http://archive.ics.uci.edu/ml/index.php (accessed on 5 August 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Tang, J.; Alelyani, S.; Liu, H. Feature Selection for Classification: A Review. Data Classification: Algorithms and Applications. 2014, p. 37. Available online: https://www.cvs.edu.in/upload/feature_selection_for_classification.pdf (accessed on 8 September 2022).

- Abualigah, L.; Diabat, A. Chaotic binary group search optimizer for feature selection. Expert Syst. Appl. 2022, 192, 116368.

- Yang, L.; Xu, Z. Feature extraction by PCA and diagnosis of breast tumors using SVM with DE-based parameter tuning. Int. J. Mach. Learn. Cybern. 2019, 10, 591–601.

- Zeng, A.; Li, T.; Liu, D.; Zhang, J.; Chen, H. A fuzzy rough set approach for incremental feature selection on hybrid information systems. Fuzzy Sets Syst. 2015, 258, 39–60.

- Li, G.; Zhao, J.; Murray, V.; Song, C.; Zhang, L. Gap analysis on open data interconnectivity for disaster risk research. Geo-Spat. Inf. Sci. 2019, 22, 45–58.

- Abualigah, L.M.; Khader, A.T.; Hanandeh, E.S. A combination of objective functions and hybrid krill herd algorithm for text document clustering analysis. Eng. Appl. Artif. Intell. 2018, 73, 111–125.

- Arafat, H.; Elawady, R.M.; Barakat, S.; Elrashidy, N.M. Different feature selection for sentiment classification. Int. J. Inf. Sci. Intell. Syst. 2014, 1, 137–150.

- Li, J.; Cheng, K.; Wang, S.; Morstatter, F.; Trevino, R.P.; Tang, J.; Liu, H. Feature selection: A data perspective. ACM Comput. Surv. (CSUR) 2017, 50, 1–45.

- Chandrashekar, G.; Sahin, F. A survey on feature selection methods. Comput. Electr. Eng. 2014, 40, 16–28.

- Dash, M.; Liu, H. Feature selection for classification. Intell. Data Anal. 1997, 1, 131–156.

- Liu, Y.; Tang, F.; Zeng, Z. Feature selection based on dependency margin. IEEE Trans. Cybern. 2014, 45, 1209–1221.

- Liu, H.; Yu, L. Toward integrating feature selection algorithms for classification and clustering. IEEE Trans. Knowl. Data Eng. 2005, 17, 491–502.

- Sharma, M.; Kaur, P. A comprehensive analysis of nature-inspired meta-heuristic techniques for feature selection problem. Arch. Comput. Methods Eng. 2021, 28, 1103–1127.

- SS, V.C.; HS, A. Nature inspired meta heuristic algorithms for optimization problems. Computing 2022, 104, 251–269.

- Beheshti, Z.; Shamsuddin, S.M.H. A review of population-based meta-heuristic algorithms. Int. J. Adv. Soft Comput. Appl 2013, 5, 1–35.

- Osman, I.H.; Kelly, J.P. Meta-heuristics: An overview. In Meta-Heuristics; Springer: Berlin/Heidelberg, Germany, 1996; pp. 1–21.

- Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73.

- Sayed, S.; Nassef, M.; Badr, A.; Farag, I. A nested genetic algorithm for feature selection in high-dimensional cancer microarray datasets. Expert Syst. Appl. 2019, 121, 233–243.

- Mellit, A.; Kalogirou, S.A.; Drif, M. Application of neural networks and genetic algorithms for sizing of photovoltaic systems. Renew. Energy 2010, 35, 2881–2893.

- Ilonen, J.; Kamarainen, J.K.; Lampinen, J. Differential evolution training algorithm for feed-forward neural networks. Neural Process. Lett. 2003, 17, 93–105.

- Hancer, E.; Xue, B.; Zhang, M. Differential evolution for filter feature selection based on information theory and feature ranking. Knowl.-Based Syst. 2018, 140, 103–119.

- Zhang, Y.; Gong, D.W.; Gao, X.Z.; Tian, T.; Sun, X.Y. Binary differential evolution with self-learning for multi-objective feature selection. Inf. Sci. 2020, 507, 67–85.

- Pan, J.S.; Meng, Z.; Xu, H.; Li, X. QUasi-Affine TRansformation Evolution (QUATRE) algorithm: A new simple and accurate structure for global optimization. In Proceedings of the International Conference on Industrial, Engineering and Other Applications of Applied Intelligent Systems, Morioka, Japan, 2–4 August 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 657–667.

- Meng, Z.; Pan, J.S.; Xu, H. QUasi-Affine TRansformation Evolutionary (QUATRE) algorithm: A cooperative swarm based algorithm for global optimization. Knowl.-Based Syst. 2016, 109, 104–121.

- Liu, N.; Pan, J.S.; Nguyen, T.T. A bi-population QUasi-Affine TRansformation Evolution algorithm for global optimization and its application to dynamic deployment in wireless sensor networks. EURASIP J. Wirel. Commun. Netw. 2019, 2019, 175.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Chu, S.C.; Tsai, P.W.; Pan, J.S. Cat swarm optimization. In Proceedings of the Pacific Rim International Conference on Artificial Intelligence, Guilin, China, 7–11 August 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 854–858.

- Pan, J.S.; Tsai, P.W.; Liao, Y.B. Fish migration optimization based on the fishy biology. In Proceedings of the 2010 IEEE Fourth International Conference on Genetic and Evolutionary Computing, Shenzhen, China, 13–15 December 2010; pp. 783–786.

- Xing, J.; Zhao, H.; Chen, H.; Deng, R.; Xiao, L. Boosting Whale Optimizer with Quasi-Oppositional Learning and Gaussian Barebone for Feature Selection and COVID-19 Image Segmentation. J. Bionic Eng. 2022, 1–22.

- Jiang, F.; Wang, L.; Bai, L. An improved whale algorithm and its application in truss optimization. J. Bionic Eng. 2021, 18, 721–732.

- Fang, L.; Liang, X. A Novel Method Based on Nonlinear Binary Grasshopper Whale Optimization Algorithm for Feature Selection. J. Bionic Eng. 2022, 20, 237–252.

- Rao, R.V.; Savsani, V.J.; Vakharia, D. Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput.-Aided Des. 2011, 43, 303–315.

- Liu, Z.Z.; Chu, D.H.; Song, C.; Xue, X.; Lu, B.Y. Social learning optimization (SLO) algorithm paradigm and its application in QoS-aware cloud service composition. Inf. Sci. 2016, 326, 315–333.

- Ramezani, F.; Lotfi, S. Social-based algorithm (SBA). Appl. Soft Comput. 2013, 13, 2837–2856.

- Kirkpatrick, S.; Gelatt, C.D., Jr.; Vecchi, M.P. Optimization by simulated annealing. Science 1983, 220, 671–680.

- Webster, B.; Bernhard, P.J. A Local Search Optimization Algorithm Based on Natural Principles of Gravitation. Technical Report. 2003. Available online: http://hdl.handle.net/11141/117 (accessed on 8 September 2022).

- Hu, P.; Pan, J.S.; Chu, S.C. Improved binary grey wolf optimizer and its application for feature selection. Knowl.-Based Syst. 2020, 195, 105746.

- Pan, J.S.; Tian, A.Q.; Chu, S.C.; Li, J.B. Improved binary pigeon-inspired optimization and its application for feature selection. Appl. Intell. 2021, 51, 8661–8679.

- Liu, Y.; Wang, G.; Chen, H.; Dong, H.; Zhu, X.; Wang, S. An improved particle swarm optimization for feature selection. J. Bionic Eng. 2011, 8, 191–200.

- Feng, Q.; Chu, S.C.; Pan, J.S.; Wu, J.; Pan, T.S. Energy-Efficient Clustering Mechanism of Routing Protocol for Heterogeneous Wireless Sensor Network Based on Bamboo Forest Growth Optimizer. Entropy 2022, 24, 980.

- Mirjalili, S.; Lewis, A. S-shaped versus V-shaped transfer functions for binary particle swarm optimization. Swarm Evol. Comput. 2013, 9, 1–14.

- He, Y.; Zhang, F.; Mirjalili, S.; Zhang, T. Novel binary differential evolution algorithm based on Taper-shaped transfer functions for binary optimization problems. Swarm Evol. Comput. 2022, 69, 101022.

- Jin, G.; Ma, P.F.; Wu, X.; Gu, L.; Long, M.; Zhang, C.; Li, D.Z. New Genes Interacted with Recent Whole-Genome Duplicates in the Fast Stem Growth of Bamboos. Mol. Biol. Evol. 2021, 38, 5752–5768.

- Shi, Y.; Liu, E.; Zhou, G.; Shen, Z.; Yu, S. Bamboo shoot growth model based on the stochastic process and its application. Sci. Silvae Sin. 2013, 49, 89–93.

- Beheshti, Z. A time-varying mirrored S-shaped transfer function for binary particle swarm optimization. Inf. Sci. 2020, 512, 1503–1542.

- Liu, F.F.; Chu, S.C.; Wang, X.; Pan, J.S. A Novel Binary QUasi-Affine TRansformation Evolution (QUATRE) Algorithm and Its Application for Feature Selection. In Proceedings of the Advances in Intelligent Systems and Computing, Hangzhou, China, 29–31 May 2021; Springer Nature: Singapore, 2022; pp. 305–315.

- Asuncion, A.; Newman, D. UCI Machine Learning Repository. 2007. Available online: https://www.semanticscholar.org/paper/%5B7%5D-A.-Asuncion-and-D.-J.-Newman.-UCI-Machine-Aggarwal-Han/ea2be4c9913e781e7930cc2d4a0b2021a6a91a44 (accessed on 8 September 2022).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).