Estimation of Large-Dimensional Covariance Matrices via Second-Order Stein-Type Regularization

Abstract

1. Introduction

- The second-order Stein-type estimator is modeled as a quadratic polynomial in the sample covariance matrix (SCM) and an almost surely (a.s.) positive definite target matrix. For the spherical and diagonal target matrices, the MSE between the second-order Stein-type estimator and the true covariance matrix is estimated unbiasedly under the Gaussian distribution.

- For each of the two target matrices, we formulate the choice of the second-order Stein-type estimator as a convex quadratic programming problem, from which the optimal second-order Stein-type estimator follows directly.

- Numerical simulations and application examples compare the proposed second-order Stein-type estimators with existing linear and nonlinear shrinkage estimators.
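The first two contributions can be illustrated with a minimal sketch. It rests on assumptions that are not taken from the paper: the estimator is written as `a*T + b*S + c*S@S`, the spherical target is `trace(S)/p * I`, and the coefficients come from an oracle that sees the true covariance `Sigma` and solves an unconstrained least-squares problem in the Frobenius norm. The paper instead replaces the unknown MSE with an unbiased estimate and may impose constraints on the coefficients, so the helper names below (`sample_covariance`, `oracle_quadratic_shrinkage`) are hypothetical.

```python
import numpy as np


def sample_covariance(X):
    """SCM for zero-mean data; rows of X are observations (assumed convention)."""
    n = X.shape[0]
    return X.T @ X / n


def oracle_quadratic_shrinkage(S, T, Sigma):
    """Oracle coefficients (a, b, c) minimizing ||a*T + b*S + c*S@S - Sigma||_F^2.

    The loss is a convex quadratic in (a, b, c), so the minimizer solves the
    normal equations G w = r built from Frobenius inner products.
    """
    basis = [T, S, S @ S]
    # Gram matrix <A, B>_F = sum(A * B) for real matrices
    G = np.array([[np.sum(A * B) for B in basis] for A in basis])
    r = np.array([np.sum(A * Sigma) for A in basis])
    # lstsq tolerates an ill-conditioned Gram matrix
    coef = np.linalg.lstsq(G, r, rcond=None)[0]
    est = sum(w * A for w, A in zip(coef, basis))
    return coef, est


# Small Gaussian experiment with more variables than observations (p > n).
rng = np.random.default_rng(0)
p, n = 20, 15
Sigma = np.diag(np.linspace(1.0, 3.0, p))  # illustrative true covariance
X = rng.multivariate_normal(np.zeros(p), Sigma, size=n)

S = sample_covariance(X)
T = np.trace(S) / p * np.eye(p)  # spherical target (assumed definition)

coef, est = oracle_quadratic_shrinkage(S, T, Sigma)
err_scm = np.linalg.norm(S - Sigma, "fro") ** 2
err_quad = np.linalg.norm(est - Sigma, "fro") ** 2
print("coefficients (a, b, c):", coef)
print("squared Frobenius error, SCM vs quadratic:", err_scm, err_quad)
```

Because the SCM itself corresponds to the coefficients `(0, 1, 0)`, the oracle quadratic estimator can never have a larger Frobenius error than the SCM in this setup. A diagonal target could be tried by swapping in `T = np.diag(np.diag(S))`, again as an assumed definition.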

2. Notation, Motivation, and Formulation

3. Optimal Second-Order Stein-Type Estimators

3.1. Target Matrices

3.2. Available Loss Functions

3.3. Optimal Second-Order Stein-Type Estimators

4. Numerical Simulations and Real Data Analysis

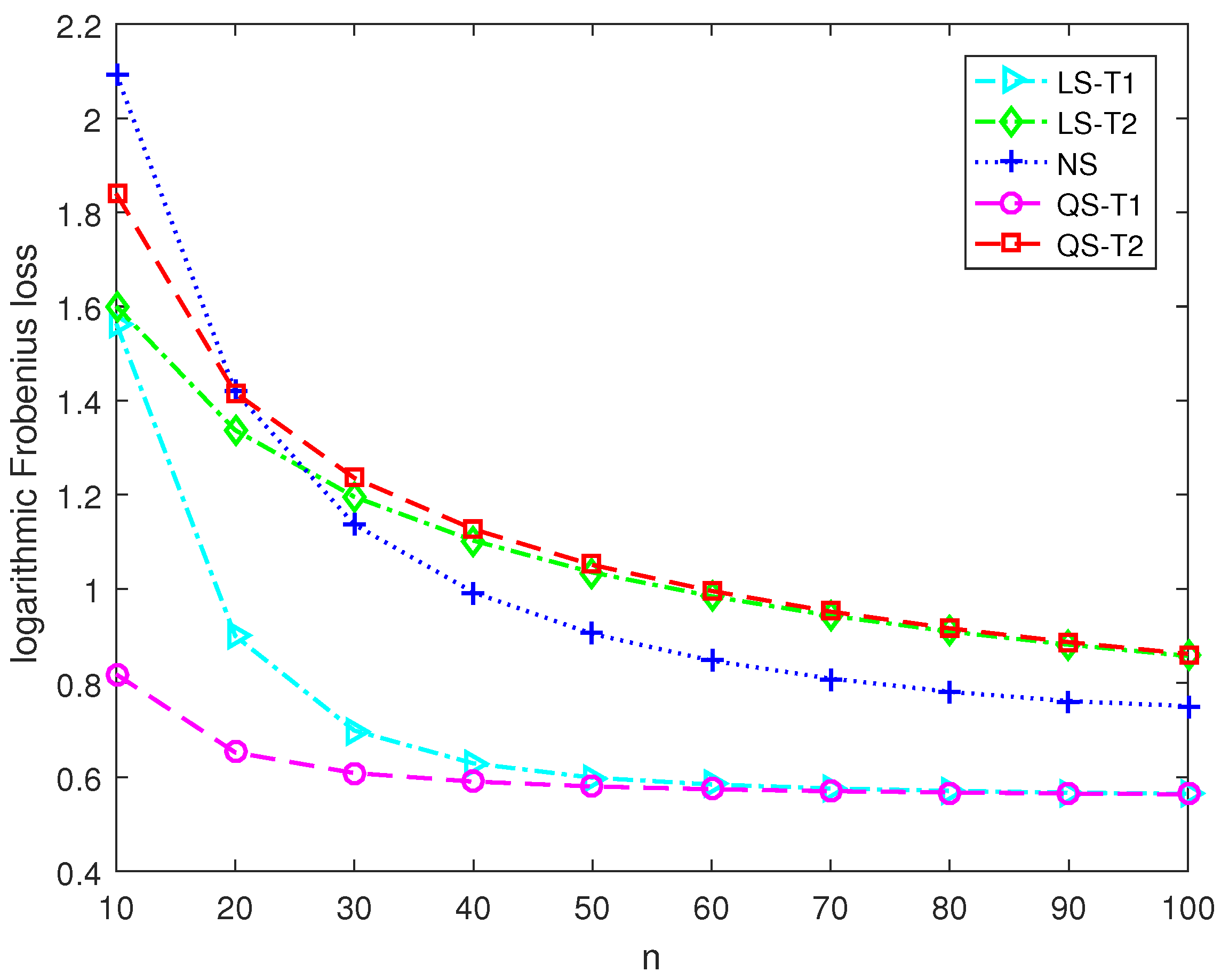

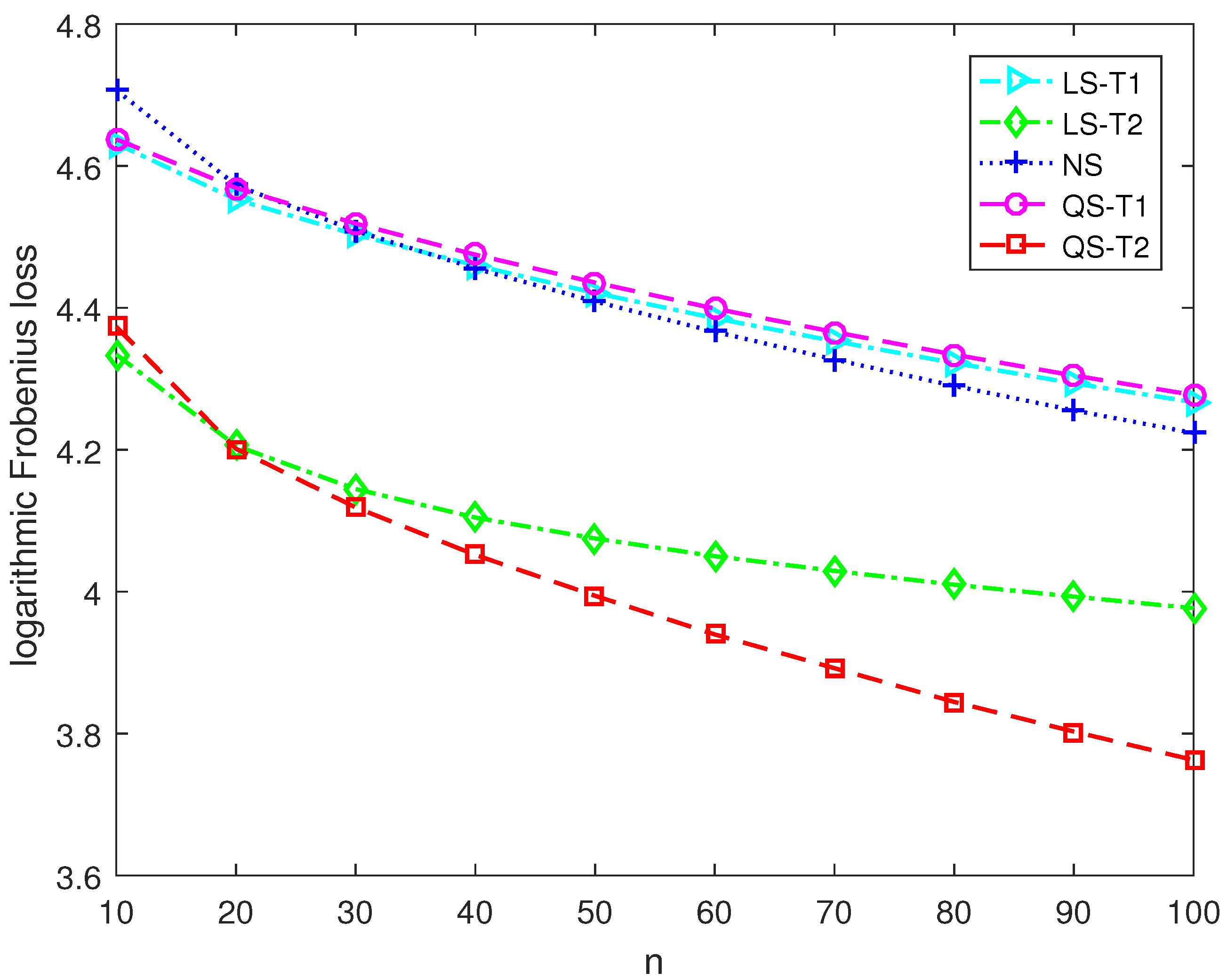

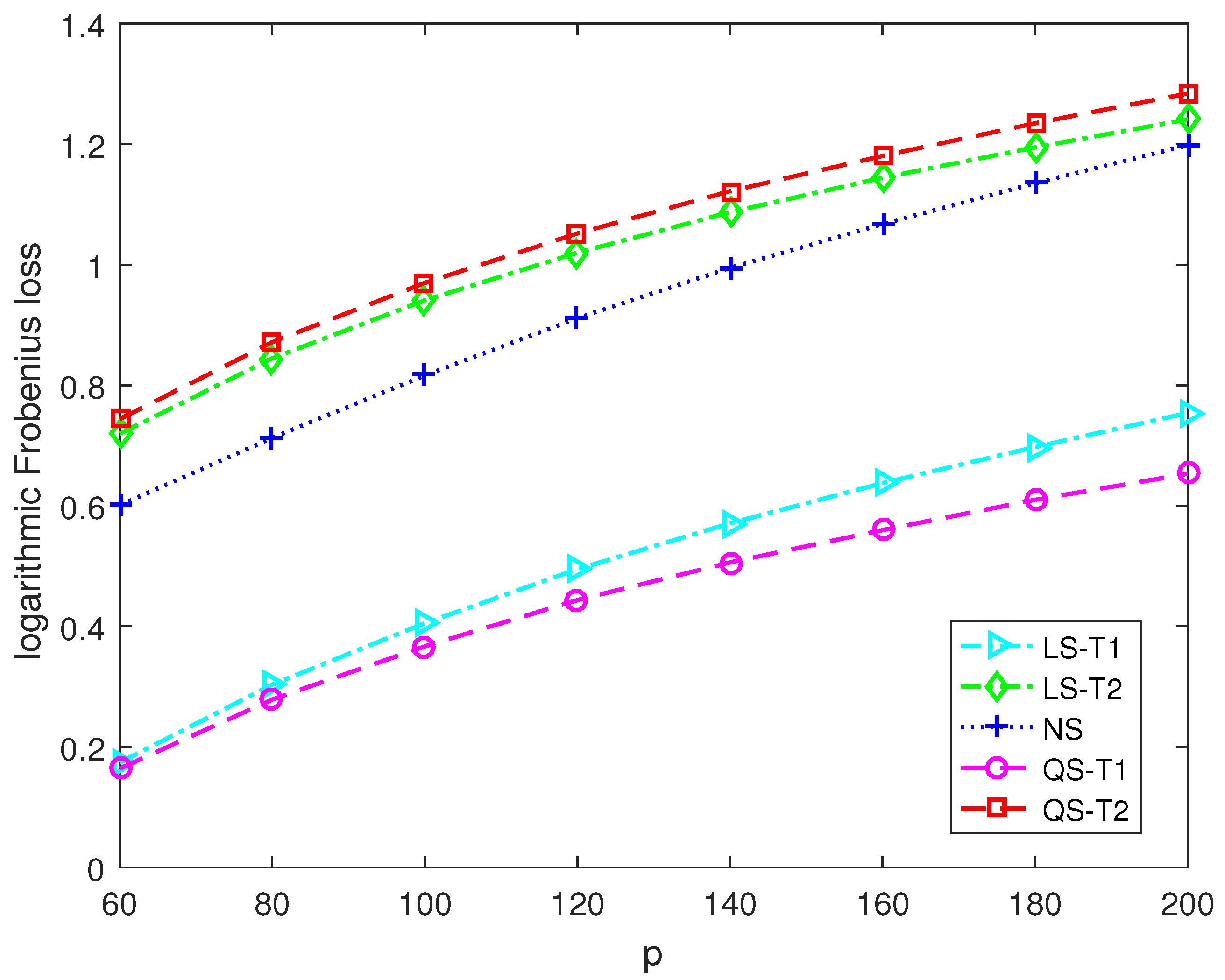

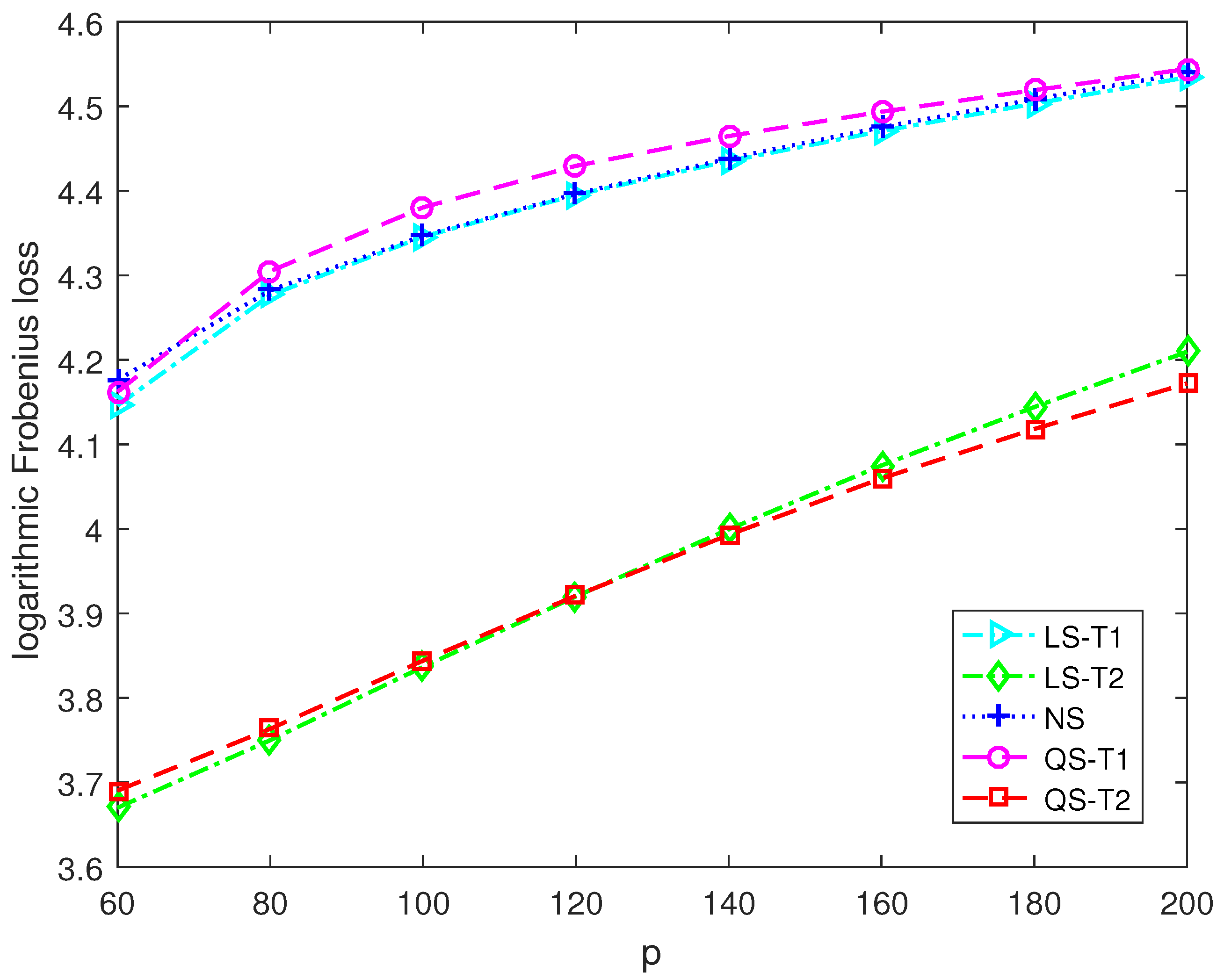

4.1. MSE Performance

- (1)

- Model 1: with and for ,

- (2)

- Model 2: with and for .

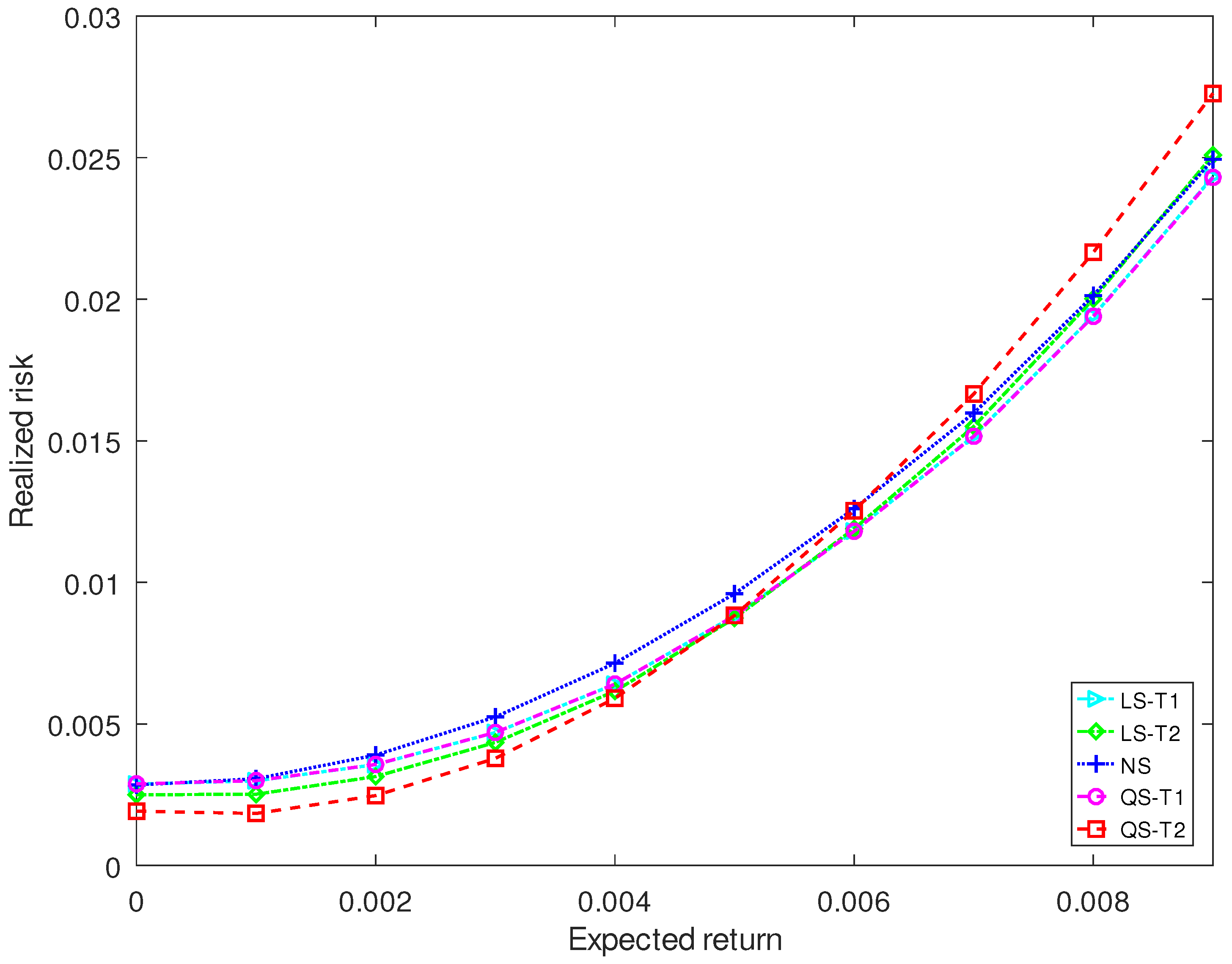

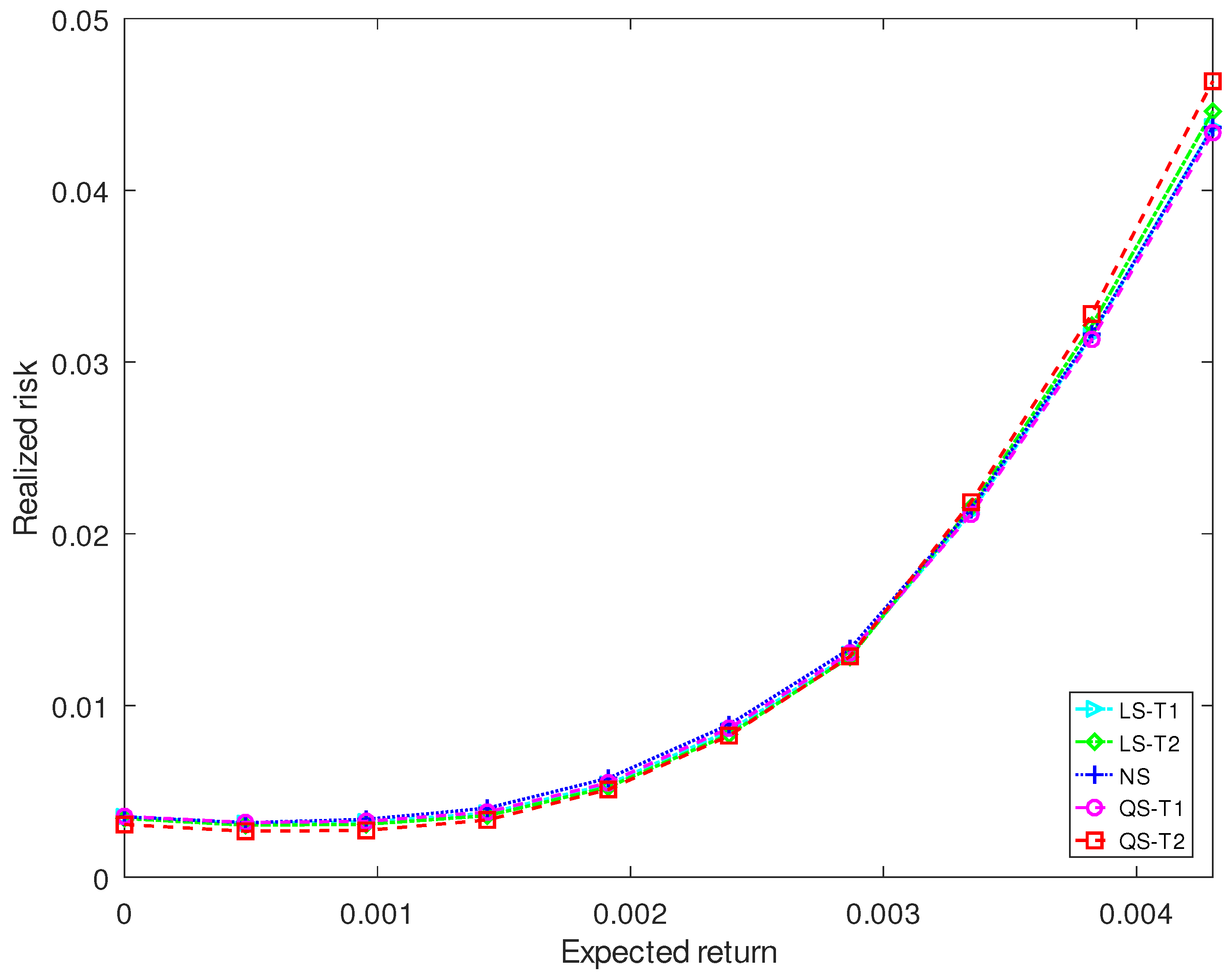

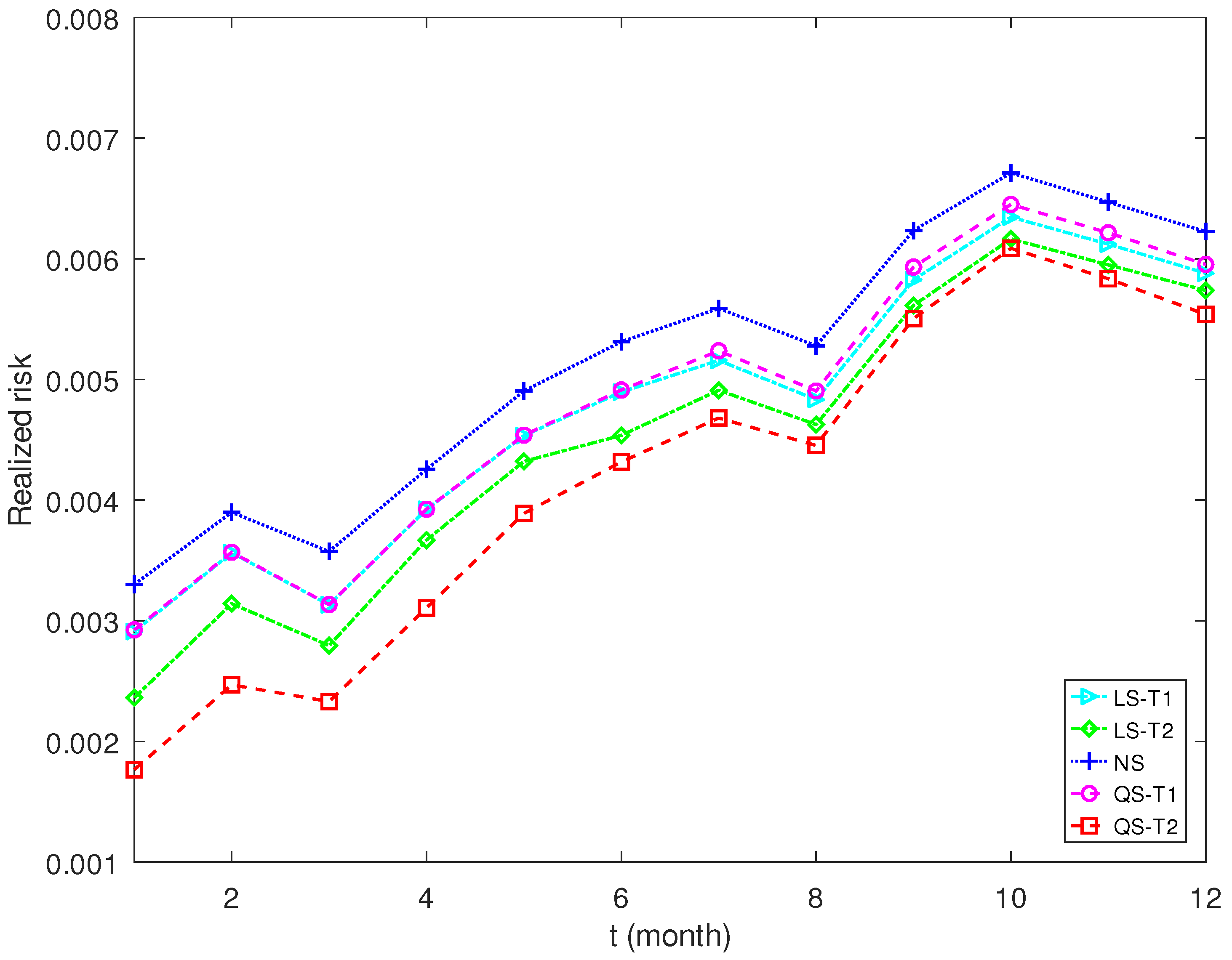

4.2. Portfolio Selection

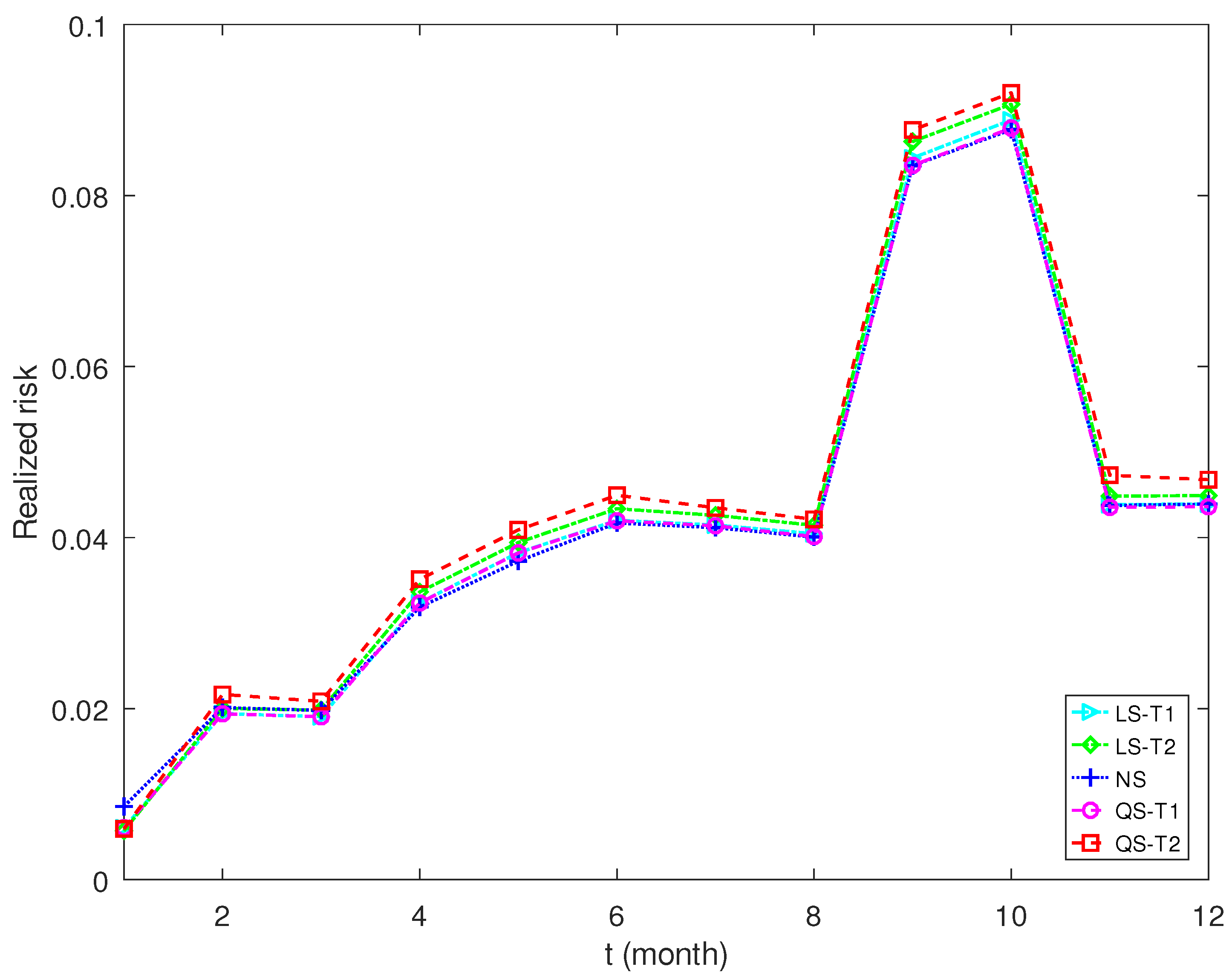

4.3. Discriminant Analysis

5. Conclusions and Discussion

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Estimator | 15 | 20 | 25 | 30 | 35 | 40 | 45 | 50 |
|---|---|---|---|---|---|---|---|---|
| LS-T1 | 0.5165 | 0.5530 | 0.5646 | 0.5826 | 0.5870 | 0.5956 | 0.5981 | 0.6054 |
| LS-T2 | 0.6262 | 0.6459 | 0.6607 | 0.6714 | 0.6796 | 0.6892 | 0.6918 | 0.6971 |
| NS | 0.4931 | 0.4842 | 0.4701 | 0.4688 | 0.4544 | 0.4458 | 0.4285 | 0.4304 |
| QS-T1 | 0.5124 | 0.5465 | 0.5600 | 0.5761 | 0.5833 | 0.5938 | 0.5953 | 0.6025 |
| QS-T2 | 0.6336 | 0.6539 | 0.6678 | 0.6747 | 0.6840 | 0.6902 | 0.6916 | 0.6957 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, B.; Huang, H.; Chen, J. Estimation of Large-Dimensional Covariance Matrices via Second-Order Stein-Type Regularization. Entropy 2023, 25, 53. https://doi.org/10.3390/e25010053
