An Enhanced Steganography Network for Concealing and Protecting Secret Image Data

Abstract

1. Introduction

- (1) They are designed to conceal only a small number of bit-level messages (<0.4 bpp, where payload capacity is measured in bits per pixel), so they cannot meet the requirement of concealing image data.

- (2) They rely on domain knowledge and hand-crafted, low-dimensional features, which cannot accurately depict the target’s high-order statistical characteristics [14]; this greatly limits the steganographic performance. In addition, manual design based on domain knowledge is expensive, laborious and time-consuming.
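To make the capacity gap in limitation (1) concrete, the following is a small arithmetic sketch. The 256 × 256 image size and the 8-bit RGB format are assumptions chosen only for illustration; the 0.4 bpp figure is the bound quoted above.

```python
def payload_bpp(secret_bits: float, cover_height: int, cover_width: int) -> float:
    """Payload expressed in bits per cover pixel (bpp)."""
    return secret_bits / (cover_height * cover_width)


# Hypothetical sizes, chosen for illustration only.
height, width = 256, 256

# Bits needed to hide an 8-bit RGB secret image of the same size as the cover.
secret_image_bits = height * width * 3 * 8
image_payload = payload_bpp(secret_image_bits, height, width)

# Most bits that a scheme limited to 0.4 bpp could embed in the same cover.
low_capacity_bits = 0.4 * height * width

print(f"payload needed to hide the image: {image_payload:.1f} bpp")
print(f"capacity at 0.4 bpp: {low_capacity_bits:.1f} bits "
      f"versus {secret_image_bits} bits required")
```

Under these assumptions, hiding a full-size colour image requires 24 bpp, which is 60 times the 0.4 bpp bound.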

- We designed a novel steganography network for protecting secret image data. Its simple but effective designs greatly improve the steganographic performance while adding almost no computational complexity, so our method achieves high security at a lower computational complexity than existing methods.

- We propose the use of long skip concatenation to preserve more raw information, which greatly improves the hidden image’s quality. In addition, we propose a novel strategy, namely non-activated feature fusion (NAFF), to provide stronger supervision for synthesizing higher-quality hidden images and recovered images.

- We introduced the attention mechanism into image steganography and designed an enhanced module in order to reconstruct and enhance the hidden image’s salient target, which can effectively cover up and obscure the embedded secret content in order to enhance the visual security.

- We designed a hybrid steganography loss function that is composed of pixel domain loss and structure domain loss in order to comprehensively boost the hidden image’s quality, which greatly weakens the embedded secret content and enhances the visual security.
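The hybrid loss in the last contribution can be sketched as a weighted sum of a pixel-domain term and a structure-domain term. The exact terms and weights are not given in this text, so the sketch below assumes mean squared error for the pixel domain and one minus SSIM [30] for the structure domain. The weights `alpha` and `beta` are hypothetical, and the SSIM here is a simplified single-window version rather than the usual locally windowed index.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0):
    """Single-window SSIM over the whole image.

    Simplification: the standard index averages SSIM over local windows.
    """
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


def hybrid_loss(target, output, alpha=1.0, beta=1.0):
    """Assumed form: alpha * MSE (pixel domain) + beta * (1 - SSIM) (structure domain)."""
    pixel_loss = np.mean((target - output) ** 2)
    structure_loss = 1.0 - global_ssim(target, output)
    return alpha * pixel_loss + beta * structure_loss


# Toy check with a random "cover" and a slightly perturbed "hidden" image.
rng = np.random.default_rng(0)
cover = rng.random((3, 32, 32))
hidden = np.clip(cover + 0.05 * rng.standard_normal(cover.shape), 0.0, 1.0)

loss_identical = hybrid_loss(cover, cover)
loss_perturbed = hybrid_loss(cover, hidden)
```

With this form, the loss is zero for identical images and grows as either the pixel error or the structural dissimilarity increases.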

2. Methods

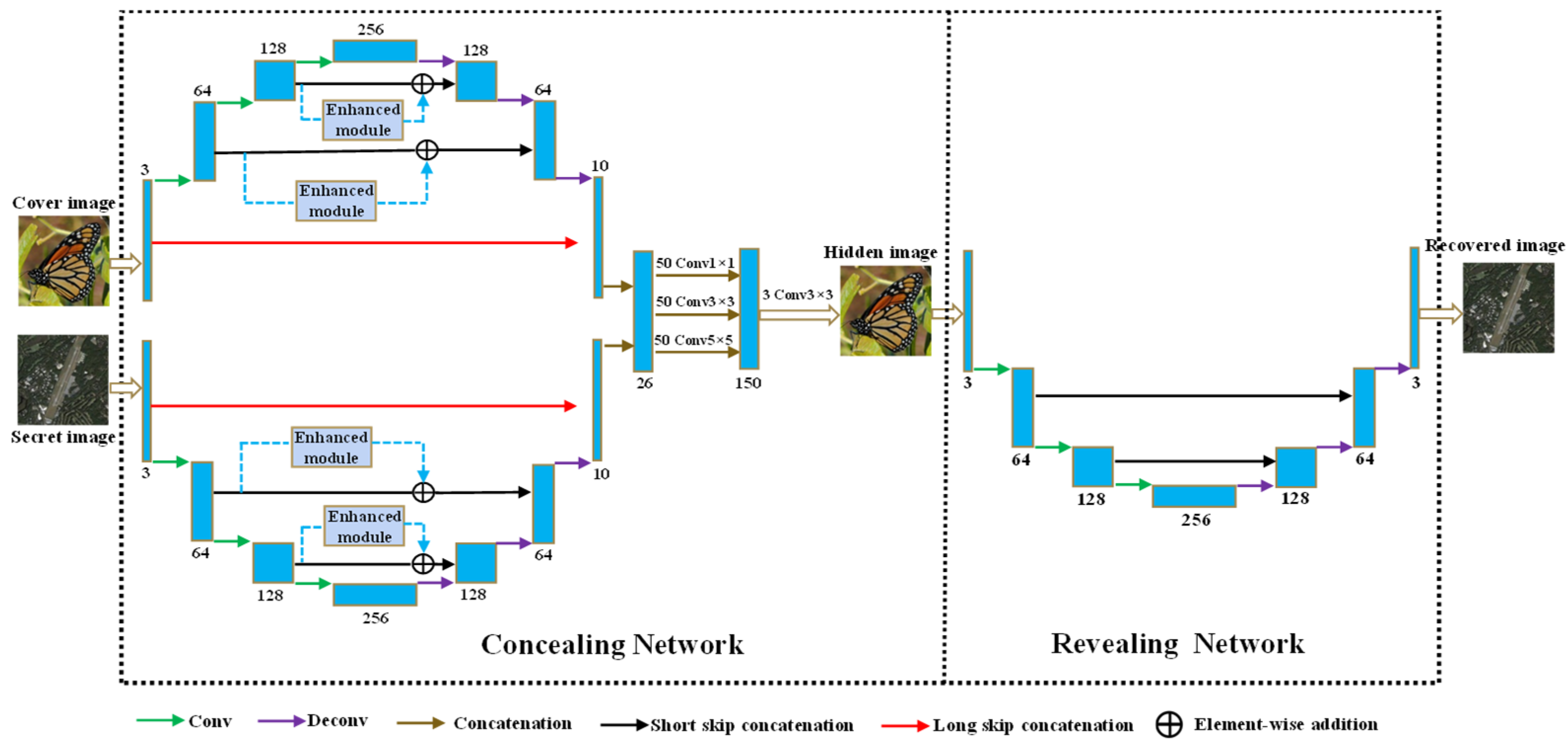

2.1. Overall Architecture

2.2. Long Skip Concatenation
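As described in the contribution list, long skip concatenation preserves raw information by carrying shallow features forward to a deeper layer. The following is a minimal sketch of the operation only, assuming a channel-first (C, H, W) layout; the function name, the feature sizes and the stand-in for the intermediate layers are hypothetical and do not reproduce the network shown in Figure 1.

```python
import numpy as np


def long_skip_concat(shallow, deep):
    """Concatenate a shallow feature map with a deep one along the channel axis."""
    if shallow.shape[1:] != deep.shape[1:]:
        raise ValueError("spatial sizes of the two feature maps must match")
    return np.concatenate([shallow, deep], axis=0)


rng = np.random.default_rng(0)
shallow = rng.standard_normal((16, 32, 32))  # early features, close to the raw input
deep = np.tanh(0.5 * shallow)                # hypothetical stand-in for the intermediate layers
fused = long_skip_concat(shallow, deep)      # shape (32, 32, 32)
```

Because concatenation copies the shallow features unchanged, the layers that follow can still read the raw information directly.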

2.3. NAFF Strategy

2.4. Enhanced Module

- (1)

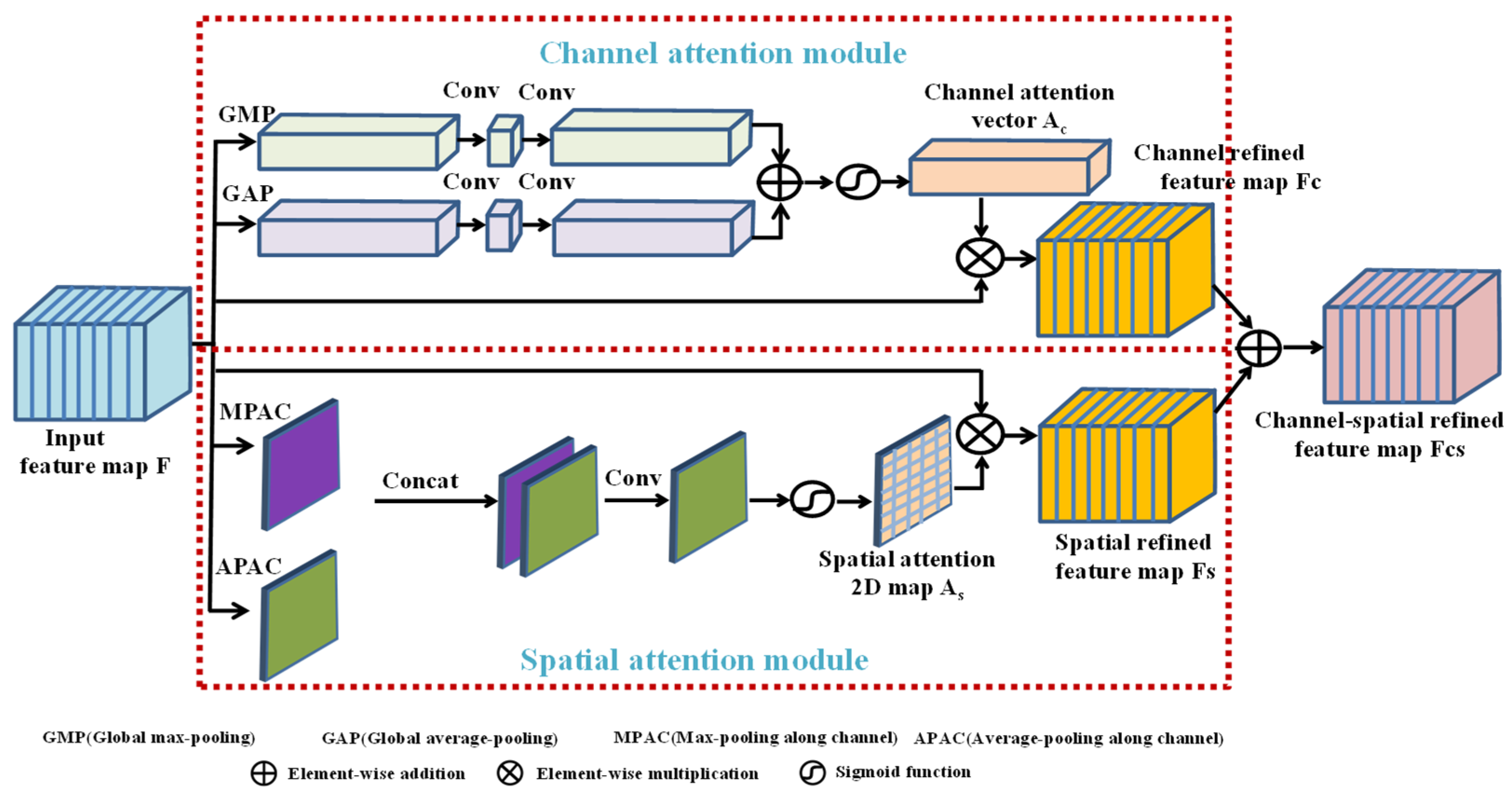

- Channel attention module: To ensure the hidden image and the recovered image are visually similar to the original cover image and secret image, more attention should be paid to the salient regions, such as high-frequency texture, contour and edge that play crucial roles in the visual appearance. Therefore, global max-pooling (GMP) and global average-pooling (GAP), shown in Figure 2, are simultaneously applied to squeeze and aggregate the information from each channel of the input feature map. GMP can retain the most significant feature of all the channels, while GAP can be used as an auxiliary to gather other valuable information and retain the global information of all the channels. Assume that the input feature map is fed into the channel attention module; after squeezing operations of GMP and GAP, two channel vectors with the size of can be obtained (Generally, the channel number represented by is a large value, but the module’ complexity can be further reduced by using two continuous Conv 1 × 1 layers. The channel number of the first Conv 1 × 1 operation is , where is the reduction ratio of the channel number, and the channel number of the second Conv 1 × 1 operation is . The Relu activation function is used between these two Conv 1 × 1 operations to further enhance the ability of extracting non-linear features. For simplicity, the above operations can be represented by a symbol called ). Two channel vectors produced by are added and then activated by the Sigmoid activation function to generate a channel attention vector as . Finally, we can obtain the channel refined feature map as follows:where is the Sigmoid function; and indicate element-wise multiplication and element-wise addition, respectively.

- (2) Spatial attention module: As shown in Figure 2, max-pooling along the channel axis (MPAC) and average-pooling along the channel axis (APAC) are applied simultaneously to extract the salient features and global information in the spatial dimension. Assume that the input feature map $F \in \mathbb{R}^{C \times H \times W}$ is fed into the spatial attention module; two 2D feature maps of size $1 \times H \times W$ are obtained after applying MPAC and APAC. These two feature maps are then concatenated into a hybrid feature map, which is fed into a convolution layer followed by a Sigmoid activation function to generate a 2D spatial attention map $M_s \in \mathbb{R}^{1 \times H \times W}$. Finally, we can obtain the spatial refined feature map $F_s$ as follows:

  $$F_s = M_s \otimes F = \sigma\big(f^{7 \times 7}([\mathrm{MPAC}(F); \mathrm{APAC}(F)])\big) \otimes F$$

  where, as before, $\sigma$ is the Sigmoid function and $\otimes$ is element-wise multiplication; $[\,\cdot\,;\,\cdot\,]$ represents the concatenation operation; and $f^{7 \times 7}$ represents the convolution operation with a kernel size of 7 × 7, which provides a large receptive field.
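The two attention modules described above can be sketched in NumPy as follows. This is an illustrative sketch rather than the authors' implementation. It assumes a single channel-first feature map of shape (C, H, W), bias-free layers, the same two Conv 1 × 1 layers shared by the GMP and GAP branches, zero padding in the 7 × 7 convolution, and random weights. All function and variable names are hypothetical. How the two modules are composed inside the enhanced module is not covered here, so each is applied to the same input.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(feat, w_reduce, w_expand):
    """feat: (C, H, W); w_reduce: (C // r, C); w_expand: (C, C // r).

    On a C x 1 x 1 vector, a Conv 1x1 layer reduces to a matrix product.
    """
    gmp = feat.max(axis=(1, 2))    # global max-pooling, shape (C,)
    gap = feat.mean(axis=(1, 2))   # global average-pooling, shape (C,)

    def shared(v):
        # Conv 1x1 -> ReLU -> Conv 1x1 (weights shared by both branches: assumption).
        return w_expand @ np.maximum(w_reduce @ v, 0.0)

    attn = sigmoid(shared(gmp) + shared(gap))   # channel attention vector, shape (C,)
    return attn[:, None, None] * feat           # broadcast over H and W


def conv2d_same(x, kernel):
    """Cross-correlate x (C_in, H, W) with kernel (C_in, k, k); zero padding, stride 1."""
    _, k, _ = kernel.shape
    pad = k // 2
    padded = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    h, w = x.shape[1:]
    out = np.zeros((h, w))
    for i in range(k):
        for j in range(k):
            out += np.tensordot(kernel[:, i, j], padded[:, i:i + h, j:j + w], axes=1)
    return out


def spatial_attention(feat, kernel):
    """feat: (C, H, W); kernel: (2, 7, 7)."""
    mpac = feat.max(axis=0)                       # max-pooling along channels, (H, W)
    apac = feat.mean(axis=0)                      # average-pooling along channels, (H, W)
    hybrid = np.stack([mpac, apac], axis=0)       # concatenated hybrid map, (2, H, W)
    attn = sigmoid(conv2d_same(hybrid, kernel))   # spatial attention map, (H, W)
    return attn[None, :, :] * feat                # broadcast over channels


rng = np.random.default_rng(0)
C, H, W, r = 8, 16, 16, 4
feat = rng.standard_normal((C, H, W))
w_reduce = 0.1 * rng.standard_normal((C // r, C))
w_expand = 0.1 * rng.standard_normal((C, C // r))
kernel = 0.1 * rng.standard_normal((2, 7, 7))

channel_refined = channel_attention(feat, w_reduce, w_expand)
spatial_refined = spatial_attention(feat, kernel)
```

Both attention maps lie in (0, 1), so each module only rescales the input features: the channel module applies one weight per channel, and the spatial module applies one weight per pixel position.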

2.5. Hybrid Loss Function

3. Experiments and Analysis

3.1. Experimental Platform and Setup

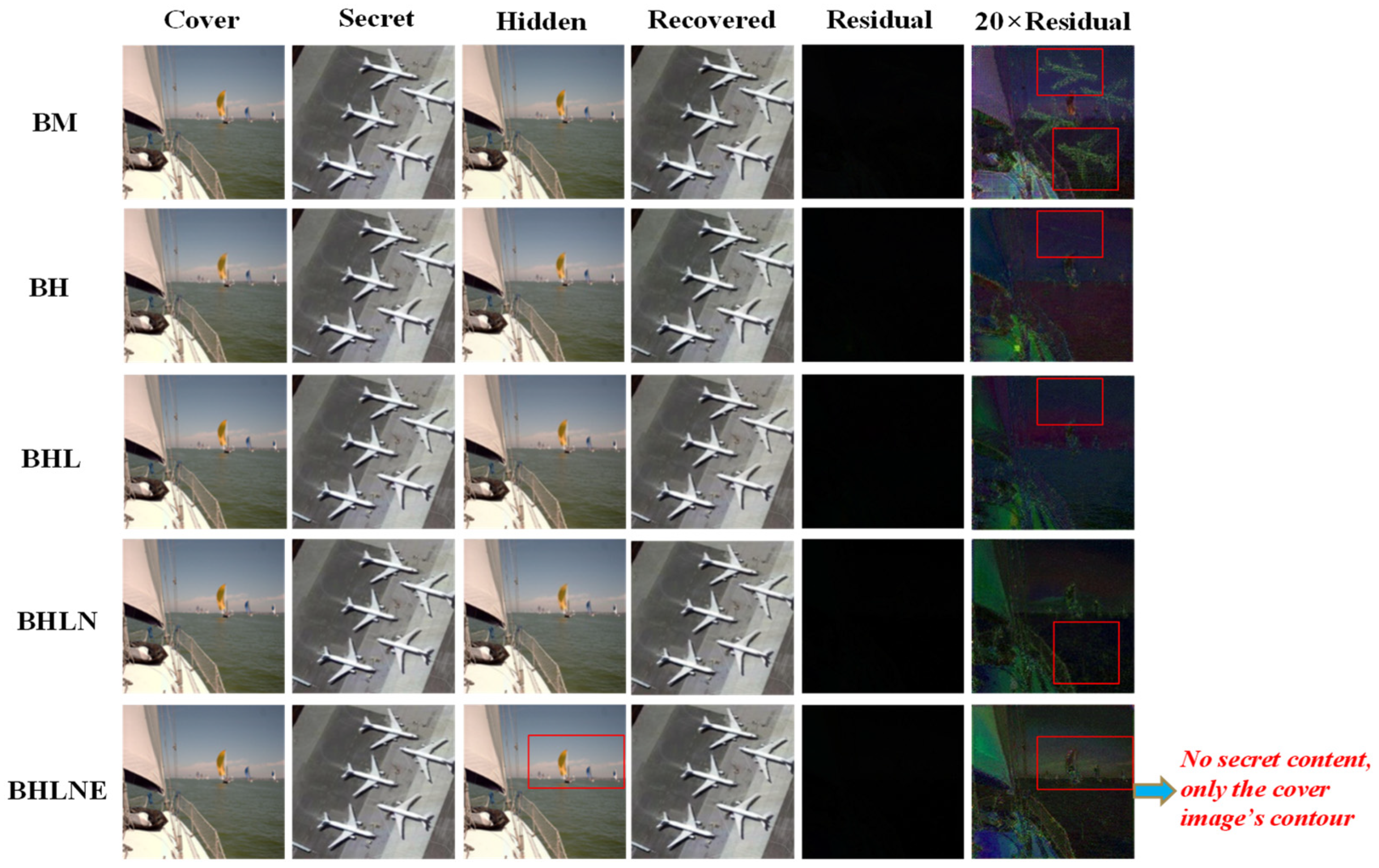

3.2. Ablation Study

3.3. Comparison Results

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Aazam, M.; Zeadally, S.; Harras, K.A. Deploying Fog Computing in Industrial Internet of Things and Industry 4.0. IEEE Trans. Ind. Inf. 2018, 14, 4674–4682.

2. Howe, J.; Khalid, A.; Rafferty, C.; Regazzoni, F.; O’Neill, M. On Practical Discrete Gaussian Samplers for Lattice-Based Cryptography. IEEE Trans. Comput. 2018, 67, 322–334.

3. Yan, B.; Xiang, Y.; Hua, G. Improving the Visual Quality of Size-Invariant Visual Cryptography for Grayscale Images: An Analysis-by-Synthesis (AbS) Approach. IEEE Trans. Image Process. 2019, 28, 896–911.

4. Wang, J.; Cheng, L.M.; Su, T. Multivariate Cryptography Based on Clipped Hopfield Neural Network. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 353–363.

5. Sharifzadeh, M.; Aloraini, M.; Schonfeld, D. Adaptive Batch Size Image Merging Steganography and Quantized Gaussian Image Steganography. IEEE Trans. Inf. Forensics Secur. 2019, 15, 867–879.

6. Li, W.; Zhou, W.; Zhang, W.; Qin, C.; Hu, H.; Yu, N. Shortening the Cover for Fast JPEG Steganography. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 1745–1757.

7. Lu, W.; Chen, J.; Zhang, J.; Huang, J.; Weng, J.; Zhou, Y. Secure Halftone Image Steganography Based on Feature Space and Layer Embedding. IEEE Trans. Cybern. 2022, 52, 5001–5014.

8. Wang, Y.; Zhang, W.; Li, W.; Yu, X.; Yu, N. Non-Additive Cost Functions for Color Image Steganography Based on Inter-Channel Correlations and Differences. IEEE Trans. Inf. Forensics Secur. 2020, 15, 2081–2095.

9. Mielikainen, J. LSB matching revisited. IEEE Signal Process. Lett. 2006, 13, 285–287.

10. Pevný, T.; Filler, T.; Bas, P. Using High-Dimensional Image Models to Perform Highly Undetectable Steganography. In Proceedings of the International Workshop on Information Hiding, Calgary, AB, Canada, 28–30 June 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 161–177.

11. Holub, V.; Fridrich, J. Designing steganographic distortion using directional filters. In Proceedings of the IEEE International Workshop on Information Forensics and Security (WIFS), Tenerife, Spain, 2–5 December 2012; pp. 234–239.

12. Holub, V.; Fridrich, J.; Denemark, T. Universal distortion function for steganography in an arbitrary domain. EURASIP J. Inf. Secur. 2014, 1, 1–13.

13. Su, W.; Ni, J.; Li, X.; Shi, Y.Q. A New Distortion Function Design for JPEG Steganography Using the Generalized Uniform Embedding Strategy. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 3545–3549.

14. Zhao, W.; Du, S. Spectral–Spatial Feature Extraction for Hyperspectral Image Classification: A Dimension Reduction and Deep Learning Approach. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4544–4554.

15. Tang, W.; Tan, S.; Li, B.; Huang, J. Automatic Steganographic Distortion Learning Using a Generative Adversarial Network. IEEE Signal Process. Lett. 2017, 24, 1547–1551.

16. Shi, H.; Dong, J.; Wang, W.; Qian, Y.; Zhang, X. SSGAN: Secure steganography based on generative adversarial networks. In Proceedings of the Pacific Rim Conference on Multimedia, Harbin, China, 28–29 September 2017; Springer: Cham, Switzerland, 2017; pp. 534–544.

17. Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein GAN. Available online: http://arxiv.org/abs/1701.07875 (accessed on 6 December 2017).

18. Zhu, J.; Kaplan, R.; Johnson, J.; Fei-Fei, L. HiDDeN: Hiding data with deep networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 657–672.

19. Zhang, K.A.; Cuesta, A.; Infante, L.X.; Veeramachaneni, K. SteganoGAN: High Capacity Image Steganography with GANs. Available online: http://arxiv.org/abs/1901.03892 (accessed on 30 January 2019).

20. Rahim, R.; Nadeem, S. End-to-end trained CNN encode-decoder networks for image steganography. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 723–729.

21. Duan, X.; Jia, K.; Li, B.; Guo, D.; Zhang, E.; Qin, C. Reversible Image Steganography Scheme Based on a U-Net Structure. IEEE Access 2019, 7, 9314–9323.

22. Baluja, S. Hiding Images within Images. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 1685–1697.

23. Chen, F.; Xing, Q.; Liu, F. Technology of Hiding and Protecting the Secret Image Based on Two-Channel Deep Hiding Network. IEEE Access 2020, 8, 21966–21979.

24. Zhu, Y.; Zhao, C.; Guo, H.; Wang, J.; Zhao, X.; Lu, H. Attention CoupleNet: Fully Convolutional Attention Coupling Network for Object Detection. IEEE Trans. Image Process. 2019, 28, 113–126.

25. Ji, J.; Xu, C.; Zhang, X.; Wang, B.; Song, X. Spatio-Temporal Memory Attention for Image Captioning. IEEE Trans. Image Process. 2020, 29, 7615–7628.

26. Yu, C.; Hu, D.; Zheng, S.; Jiang, W.; Li, M.; Zhao, Z.Q. An improved steganography without embedding based on attention GAN. Peer-to-Peer Netw. Appl. 2021, 14, 1446–1457.

27. Tan, J.; Liao, X.; Liu, J.; Cao, Y.; Jiang, H. Channel Attention Image Steganography with Generative Adversarial Networks. IEEE Trans. Netw. Sci. Eng. 2022, 9, 888–903.

28. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

29. Oveis, A.H.; Giusti, E.; Ghio, S.; Martorella, M. A Survey on the Applications of Convolutional Neural Networks for Synthetic Aperture Radar: Recent Advances. IEEE Aerosp. Electron. Syst. Mag. 2022, 37, 18–42.

30. Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

31. Everingham, M.; VanGool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The Pascal visual object classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

32. Cheng, G.; Han, J.; Lu, X. Remote Sensing Image Scene Classification: Benchmark and State of the Art. Proc. IEEE 2017, 105, 1865–1883.

| Frameworks | Hidden image PSNR (dB) | Hidden image SSIM | Recovered image PSNR (dB) | Recovered image SSIM | Computational complexity (GMac) |
|---|---|---|---|---|---|
| BM | 38.41 | 0.9841 | 35.57 | 0.9817 | 28.4 |
| BH | 38.57 | 0.9912 | 38.97 | 0.9914 | 28.4 |
| BHL | 40.46 | 0.9925 | 38.87 | 0.9912 | 29.1 |
| BHLN | 41.23 | 0.9937 | 38.92 | 0.9914 | 29.1 |
| BHLNE | 41.74 | 0.9941 | 39.23 | 0.9915 | 29.1 |

| Methods | Hidden image PSNR (dB) | Hidden image SSIM | Recovered image PSNR (dB) | Recovered image SSIM | Computational complexity (GMac) |
|---|---|---|---|---|---|
| LSB | 33.14 | 0.8980 | 27.75 | 0.9012 | - |
| Rehman [20] | 33.53 | 0.9387 | 28.24 | 0.9332 | 3.1 |
| Duan [21] | 35.96 | 0.9514 | 35.34 | 0.9601 | 66.8 |
| Baluja [22] | 37.24 | 0.9608 | 35.78 | 0.9634 | 30.9 |
| Chen [23] | 41.51 | 0.9902 | 38.32 | 0.9842 | 87.6 |
| Ours | 41.74 | 0.9941 | 39.23 | 0.9915 | 29.1 |
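For readers unfamiliar with the PSNR values reported in the tables, the following sketch shows the standard definition, PSNR = 10 · log10(MAX² / MSE). It assumes 8-bit images with a peak value of 255, which this text does not state, and the toy images are hypothetical.

```python
import numpy as np


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two images of the same shape."""
    diff = reference.astype(np.float64) - test.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")   # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)


# Toy example: every pixel differs by exactly one grey level, so MSE = 1.
reference = np.zeros((8, 8), dtype=np.uint8)
shifted = np.ones((8, 8), dtype=np.uint8)
value = psnr(reference, shifted)   # 20 * log10(255), about 48.13 dB
```

On this scale, a gain of 1 dB corresponds to a reduction of roughly 21% in mean squared error.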

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, F.; Xing, Q.; Sun, B.; Yan, X.; Cheng, J. An Enhanced Steganography Network for Concealing and Protecting Secret Image Data. Entropy 2022, 24, 1203. https://doi.org/10.3390/e24091203
