Abstract

Augmented adaptive IIR filtering algorithms, which are suitable for both proper and improper complex-valued signals, have been considered in many studies. However, most of them are developed under the mean square error (MSE) criterion. MSE is an ideal optimality criterion under Gaussian noise, but it fails to model the behavior of the non-Gaussian noise found in practice. As a similarity measure for complex random variables, complex correntropy has shown robustness to non-Gaussian noise in the design of adaptive filters. In this paper, we propose a new augmented IIR adaptive filtering algorithm based on the generalized maximum complex correntropy criterion (GMCCC-AIIR), which employs the complex generalized Gaussian density function as the kernel function. A stability analysis provides a bound on the learning rate. Simulation results verify its superiority over the MSE-based ACA-IIR algorithm under non-Gaussian noise.

1. Introduction

Complex-valued adaptive filtering algorithms have a wide range of engineering applications in radio systems [1], system identification [2], environmental signal processing [3], and other fields. Generally speaking, a complex-valued adaptive filtering algorithm is an extension of its real-valued counterpart. When the complex signal is second-order circular (or proper) [3], the performance of the adaptive filter is optimal. A complex-valued random variable is second-order circular if its first- and second-order statistics are rotation-invariant in the complex plane; for such signals, the covariance matrix alone describes the second-order statistics. In most cases, however, complex signals are noncircular (or improper) [3].

To handle both proper and improper complex-valued signals, augmented complex statistics have been proposed. Many adaptive filtering algorithms are based on augmented complex statistics, such as the augmented complex least mean square (ACLMS) algorithm [4], the augmented complex adaptive infinite impulse response (IIR) algorithm (ACA-IIR) [5], the diffusion augmented complex adaptive IIR algorithm (DACA-IIR) [6], and the incremental augmented complex adaptive IIR algorithm (IACA-IIR) [7]. These algorithms are based on the mean square error (MSE) criterion, which is mathematically tractable, computationally simple, and optimal under Gaussian assumptions [8]. However, MSE-based algorithms may perform poorly or become unstable when the signal is disturbed by non-Gaussian noise [9,10]. From a statistical point of view, the mean square error is not sufficient to capture all the information in a non-Gaussian signal. In practical applications, non-Gaussian noise is common. Examples of non-Gaussian impulse noise include loss of synchronization in digital recording, ignition noise in internal combustion engines, and lightning spikes in natural phenomena [11,12].

Entropy is a measure of the uncertainty of a real random variable, and, as a tool of functional analysis, the entropy of a signal can characterize the noise without a threshold criterion [13]. Correntropy is an extension of entropy that quantifies how similar two complex random variables are in a neighborhood of the joint space controlled by the kernel bandwidth. Compared with MSE-based algorithms, correntropy-based algorithms are more robust to non-Gaussian noise. Correntropy usually uses the Gaussian function as the kernel function [14,15], because it is smooth and strictly positive definite. However, the Gaussian kernel is not always an appropriate choice. Recently, He et al. [16] and Chen et al. [17] extended it to more general cases and proposed the generalized maximum correntropy criterion (GMCC) algorithm, which has strong generality and flexibility, and Qian et al. [18] proposed a GMCCC algorithm based on the generalized maximum complex correntropy criterion, which uses the complex generalized Gaussian density (CGGD) function as the kernel of the complex correntropy. It inherits the excellent characteristics of GMCC and can also deal with complex signals. These correntropy algorithms are finite impulse response (FIR) widely linear adaptive filtering algorithms. When an FIR filter needs a large number of coefficients to obtain satisfactory filtering performance, FIR widely linear models may not be appropriate.

Unlike the FIR counterpart, the memory depth of an IIR filter is independent of the filter order and the number of coefficients [19]. As a result, an IIR filter generally requires considerably fewer coefficients than the corresponding FIR filter to achieve a given level of performance. Thus, IIR adaptive filters are suitable for systems with memory, such as autoregressive moving average (ARMA) models. Navarro-Moreno et al. [20] developed an ARMA widely linear model with fixed coefficients. To derive a recursive algorithm for augmented complex adaptive IIR filtering, Took et al. [5] proposed the ACA-IIR to learn the parameters of a widely linear ARMA model.

Based on the generalized maximum complex correntropy criterion (GMCCC) and widely linear ARMA model, we propose a GMCCC algorithm variant, namely the GMCCC augmented adaptive IIR filtering algorithm (GMCCC-AIIR). We show that the GMCCC-AIIR is very flexible, with ACA-IIR, GMCCC, and ACLMS as its special cases. Stability analysis shows that GMCCC-AIIR always converges when the step-size satisfies the theoretical bound. Simulation results demonstrate the superiority of the GMCCC-AIIR algorithm.

The paper is organized as follows: Section 2 introduces and describes the augmented IIR system. Section 3 defines the generalized complex correntropy, derives the GMCCC-AIIR algorithm, and introduces a reduced-complexity version of the proposed algorithm. Section 4 analyzes the bound on the step-size for convergence. The superiority of the GMCCC-AIIR algorithm is verified by simulations in Section 5, and conclusions are drawn in Section 6.

2. Augmented IIR System

The signals used in communications are usually complex circular, whereas the broader class of signals made complex for convenience of representation is often noncircular. For the stochastic modeling of such signals, Picinbono et al. introduced a widely linear moving average (MA) model, which is given by [21]:

where and are filter coefficients. Based on this widely linear model, an ACLMS algorithm was proposed [22].

Since the FIR widely linear model is not always an optimal choice, Navarro-Moreno et al. introduced a widely linear ARMA model with fixed coefficients [20]:

where , are the coefficients of feedback and its conjugation, p and q are the orders of the AR and MA parts, respectively. The model provides a theoretical basis for the proposed recursive algorithm for training adaptive IIR filters.

To introduce a recursive algorithm of augmented complex adaptive IIR filter, Took et al. [5] give the output of the widely linear IIR filter in the following form:

where M is the order of the feedback and N is the length of the input. This model can be simplified as follows:

where:
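As an illustration of the widely linear IIR recursion described in this section, the following minimal sketch computes the filter output for fixed coefficients. It assumes the usual form in which the output is a sum of feedback terms in y(k-m) and its conjugate (m = 1..M) and feedforward terms in x(k-n) and its conjugate (n = 0..N-1). The coefficient names `a`, `g`, `b`, `h` and the function name are illustrative choices, not notation taken from the equations above.

```python
def widely_linear_iir(x, a, b, g, h):
    """Output of a widely linear IIR filter with fixed coefficients.

    a, g: feedback coefficients (length M) for y(k-m) and conj(y(k-m)), m = 1..M
    b, h: feedforward coefficients (length N) for x(k-n) and conj(x(k-n)), n = 0..N-1
    Samples before k = 0 are treated as zero (assumed initial rest).
    """
    M, N = len(a), len(b)
    y = []
    for k in range(len(x)):
        acc = 0j
        # Feedback part: past outputs and their conjugates.
        for m in range(1, M + 1):
            if k - m >= 0:
                acc += a[m - 1] * y[k - m] + g[m - 1] * y[k - m].conjugate()
        # Feedforward part: current/past inputs and their conjugates.
        for n in range(N):
            if k - n >= 0:
                acc += b[n] * x[k - n] + h[n] * x[k - n].conjugate()
        y.append(acc)
    return y
```

Setting `g` and `h` to zero recovers a strictly linear IIR filter, and an empty `a` and `g` gives the widely linear MA (FIR) model.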

3. Generalized Complex Correntropy and GMCCC-AIIR Algorithm

3.1. Generalized Complex Correntropy

For two complex variables and , complex correntropy is defined as [23]:

where are real variables, is the kernel function.

For the Gaussian kernel in the complex field [23], the kernel function can be expressed as:

where is the kernel width.

In this paper, we employ a CGGD function as the kernel function, and its corresponding correntropy is named generalized complex correntropy [18]:

where is the shape parameter, is the kernel width, , .

In this way, generalized complex correntropy can be written as:

The samples are finite in reality, so we estimate the generalized complex correntropy by sample mean.

where .

Instead of the correntropy in data analysis, the correntropic loss is often used, so we define the generalized complex correntropic loss as:

Then, when the sample is finite, the generalized complex correntropic loss can be expressed as:

There are some properties of generalized complex correntropy [18].

Property 1.

is symmetric, i.e.,

Property 2.

is bounded with and achieves its maximum when

We can get , it is symmetric and achieves its minimum when on the basis of Properties 1 and 2.

Property 3.

Given , the following conclusions about are true:

- When , is convex at any with ;

- When , is non-convex at any with .
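The sample-mean estimator and Properties 1 and 2 can be checked numerically with a short sketch. It assumes a kernel of the shape gamma * exp(-lam * |e|^alpha) for a complex error e; the normalizing constant `gamma` is left as an argument (default 1) because its exact expression is not reproduced here, and all function and parameter names are hypothetical.

```python
import math


def gc_kernel(e, alpha, lam, gamma=1.0):
    """Assumed kernel shape gamma * exp(-lam * |e|**alpha) for a complex error e."""
    return gamma * math.exp(-lam * abs(e) ** alpha)


def gc_correntropy(c1, c2, alpha, lam, gamma=1.0):
    """Sample-mean estimate of the correntropy over paired complex samples."""
    total = sum(gc_kernel(u - v, alpha, lam, gamma) for u, v in zip(c1, c2))
    return total / len(c1)


def gc_loss(c1, c2, alpha, lam, gamma=1.0):
    """Correntropic loss: kernel value at zero error minus the correntropy estimate."""
    return gc_kernel(0j, alpha, lam, gamma) - gc_correntropy(c1, c2, alpha, lam, gamma)
```

Under this assumed kernel, swapping the two arguments leaves the estimate unchanged, the estimate reaches `gamma` when the two sample sets coincide, and the loss is then zero.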

3.2. GMCCC-AIIR Algorithm

Based on properties of generalized complex correntropy, we define the cost function of the GMCCC-AIIR algorithm as:

where .

We can infer from (4) that , so . Then, we search for the optimal solution by stochastic gradient descent method, i.e.,

where the kernel bandwidth alpha is chosen according to Property 3, so that the cost function is convex and the stochastic gradient descent method reaches the global optimum rather than a local one.

The gradients can be computed as [24]:

where:

The gradient vectors (17) and (18) can be written as:

where and are the real and the imaginary part of complex quantities respectively, and . To calculate the gradient in (16), items in (17), (18) must be calculated separately, such as:

The feedback in the IIR system leads to the recursions on the right-hand side of (21) and (22). These terms are derivatives of past values with respect to the present weights, which cannot be computed directly. To avoid this problem, for a small step-size, we use the approximation:

Thus, the gradient can be written in the following forms,

and for the gradient vector in (18), similarly, we have,

So the GMCCC-AIIR can be expressed in the form as:

3.3. GMCCC-AIIR as a Generalization of ACA-IIR and ACLMS

When and degenerate to:

i.e., the classical ACA-IIR algorithm. On this basis, when feedback within the GMCCC-AIIR is cancelled, that is, the partial derivatives on the right-hand side of (25), (27), (29) and (31) vanish for the widely linear FIR filter, yielding:

As desired, the GMCCC-AIIR algorithm (32) now simplifies into the ACLMS algorithm for FIR filters, given by [22]:
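To make the FIR special case concrete, the sketch below implements the standard ACLMS recursion for the model y(k) = sum_n [h_n x(k-n) + g_n x*(k-n)], with updates h += mu * e * x* and g += mu * e * x. The optional `weighted_error` function is an assumption: it uses an error weighting of the shape exp(-lam * |e|^alpha) * |e|^(alpha - 2), which is typical of generalized-correntropy updates and may differ from the expressions in this paper by constant factors. With `alpha = 2` and `lam = 0` the weighting is switched off and the recursion is plain ACLMS. All names are illustrative.

```python
import math
import random


def weighted_error(e, alpha=2.0, lam=0.0):
    """Assumed GMCCC-style weighting exp(-lam*|e|^alpha) * |e|^(alpha-2) * e."""
    r = abs(e)
    if r == 0.0:
        return 0j
    return math.exp(-lam * r ** alpha) * r ** (alpha - 2.0) * e


def widely_linear_fir(x, h, g):
    """Noise-free output of a widely linear FIR system (used to make test data)."""
    out = []
    for k in range(len(x)):
        u = [x[k - n] if k - n >= 0 else 0j for n in range(len(h))]
        out.append(sum(h[n] * u[n] + g[n] * u[n].conjugate() for n in range(len(h))))
    return out


def aclms(x, d, n_taps, mu, alpha=2.0, lam=0.0):
    """ACLMS identification; alpha=2, lam=0 gives the unweighted algorithm."""
    h = [0j] * n_taps
    g = [0j] * n_taps
    for k in range(len(x)):
        u = [x[k - n] if k - n >= 0 else 0j for n in range(n_taps)]
        y = sum(h[n] * u[n] + g[n] * u[n].conjugate() for n in range(n_taps))
        e = weighted_error(d[k] - y, alpha, lam)
        for n in range(n_taps):
            h[n] += mu * e * u[n].conjugate()  # standard-weight update
            g[n] += mu * e * u[n]              # conjugate-weight update
    return h, g


def demo(seed=0, n=3000):
    """Identify a small, made-up widely linear FIR system from noise-free data."""
    rng = random.Random(seed)
    x = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n)]
    h0, g0 = [0.5 + 0.2j, -0.3j], [0.1 + 0j, 0.2 - 0.1j]
    h, g = aclms(x, widely_linear_fir(x, h0, g0), 2, mu=0.02)
    return max(abs(p - q) for p, q in zip(h + g, h0 + g0))
```

In the noise-free demo the estimated weights approach the true ones; the system coefficients and step-size in `demo` are arbitrary values chosen for illustration only.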

Reducing the Computational Complexity of GMCCC-AIIR

The weight update of GMCCC-AIIR is computationally expensive, since it requires recursions for the sensitivities and . However, by the approximation (23), this can be reduced to updating only eight sensitivities. For example,

Further, we define as follows:

For a small step-size, and approximate to and , hence . can be approximated, i.e.,

We only need to update for the sensitivity . This approximation also applies for all other sensitivities.

4. Convergence of GMCCC-AIIR

For convenience, we write the algorithm (32) as:

is defined as the unknown system parameter, and . In this way,

Thus,

We know that the step-size is a small positive constant. If the system converges when , we can approximate . It can be inferred that:

5. Simulation

In this section, we present simulation results to confirm the theoretical conclusions drawn in previous sections. We demonstrate the superiority of the GMCCC-AIIR algorithm compared with the ACA-IIR algorithm in non-Gaussian noise. All the system parameters, signal noise, and input signals are complex valued. The unknown augmented IIR system is given by:

The real part and the imaginary part of the input signal x are Gaussian distributed, with zero mean and unit variance. One hundred Monte Carlo simulations were run. To evaluate estimation accuracy, the mean square deviation (MSD) is defined by .
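A minimal sketch of how such a learning curve could be computed is given below. It assumes the commonly used definition in which the deviation at each iteration is the squared Euclidean distance between the true and estimated weight vectors, averaged over independent Monte Carlo runs; the function names and the dB conversion are illustrative assumptions.

```python
import math


def squared_deviation(w_true, w_est):
    """Squared Euclidean distance between two complex weight vectors."""
    return sum(abs(a - b) ** 2 for a, b in zip(w_true, w_est))


def monte_carlo_msd(curves):
    """Average per-iteration squared deviations over independent runs.

    curves: list of runs, each a list of squared deviations per iteration.
    """
    runs = len(curves)
    return [sum(vals) / runs for vals in zip(*curves)]


def to_db(value):
    """Convert a positive MSD value to decibels."""
    return 10.0 * math.log10(value)
```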

5.1. Complex Non-Gaussian Noise Models

Unlike Gaussian noise, non-Gaussian noise is a random process whose probability density function (PDF) does not follow the normal distribution. Generally speaking, non-Gaussian noise distributions can be divided into two categories: light-tailed (e.g., binary, uniform, etc.) and heavy-tailed (e.g., Cauchy, mixed Gaussian, alpha-stable, etc.). In the following experiments, four common non-Gaussian noise models, namely Cauchy noise, mixed Gaussian noise, alpha-stable noise, and Student’s t noise, are selected for performance evaluation, and the additive complex noise can be written as , where and follow different distributions in different non-Gaussian noise models. These noise models are described below.

5.1.1. Mixed Gaussian Noise

The mixed Gaussian noise model is given by [25]:

where denotes the Gaussian distributions with mean values and variances , and is the mixture parameter. Usually, one can set to a small value and to represent the impulsive noise. Thus, we define the mixed Gaussian noise parameter vector as .
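A mixture of this kind can be sampled by first choosing a component and then drawing from it. The sketch below assumes the standard two-component form in which a sample comes from the second (impulsive) Gaussian with probability `c` and from the first otherwise, and it draws the real and imaginary parts independently. The parameter ordering `(mu1, var1, mu2, var2, c)` and the function names are assumptions made for illustration.

```python
import math
import random


def mixed_gaussian(rng, mu1, var1, mu2, var2, c):
    """One real sample: N(mu2, var2) with probability c, else N(mu1, var1)."""
    if rng.random() < c:
        return rng.gauss(mu2, math.sqrt(var2))
    return rng.gauss(mu1, math.sqrt(var1))


def complex_mixed_gaussian(n, params, seed=0):
    """n complex samples with independent mixed Gaussian real and imaginary parts."""
    rng = random.Random(seed)
    return [
        complex(mixed_gaussian(rng, *params), mixed_gaussian(rng, *params))
        for _ in range(n)
    ]
```

For example, `complex_mixed_gaussian(1000, (0.0, 0.01, 0.0, 100.0, 0.05))` would produce mostly small background noise with occasional large outliers; these particular values are illustrative and are not the settings used in the experiments.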

5.1.2. Alpha-Stable Noise

The alpha-stable distribution is often used to model the probability distribution of heavy-tailed impulse noise. It generalizes the Gaussian distribution, which is one of its special cases. It is compatible with many signals in practice, such as noise in telephone lines, atmospheric noise, and backscattering echoes in radar systems; it has also been used successfully to model economic time series. The characteristic function of the alpha-stable noise is defined as [26,27]:

in which:

From (49) and (50), one can observe that a stable distribution is completely determined by four parameters: (1) the characteristic factor ; (2) the symmetry parameter ; (3) the dispersion parameter ; and (4) the location parameter . Both and obey the alpha-stable distribution, so we define the alpha-stable noise parameter vector as .

It is worth mentioning that, in the case of , the alpha-stable distribution coincides with the Gaussian distribution, while is the same as the Cauchy distribution.
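Alpha-stable samples have no closed-form density in general, so they are usually generated by a transformation method. The sketch below uses the Chambers-Mallows-Stuck method restricted to the symmetric case (symmetry parameter equal to zero), which is a simplifying assumption; it also assumes the parameterization in which the characteristic function is exp(j * location * t - dispersion * |t|^alpha). Function and argument names are illustrative.

```python
import math
import random


def symmetric_alpha_stable(rng, alpha, dispersion=1.0, location=0.0):
    """One symmetric alpha-stable sample via the Chambers-Mallows-Stuck method."""
    v = rng.uniform(-math.pi / 2, math.pi / 2)  # uniform phase
    w = rng.expovariate(1.0)                    # unit-mean exponential
    if alpha == 1.0:
        s = math.tan(v)                         # Cauchy case
    else:
        s = (math.sin(alpha * v) / math.cos(v) ** (1.0 / alpha)) * (
            math.cos((1.0 - alpha) * v) / w
        ) ** ((1.0 - alpha) / alpha)
    return location + dispersion ** (1.0 / alpha) * s


def complex_alpha_stable(n, alpha, dispersion=1.0, location=0.0, seed=0):
    """n complex samples with independent symmetric alpha-stable parts."""
    rng = random.Random(seed)
    return [
        complex(
            symmetric_alpha_stable(rng, alpha, dispersion, location),
            symmetric_alpha_stable(rng, alpha, dispersion, location),
        )
        for _ in range(n)
    ]
```

With `alpha = 2` and unit dispersion the samples are Gaussian with variance 2, and with `alpha = 1` they are standard Cauchy, which matches the two special cases noted above.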

5.1.3. Cauchy Noise

The PDF of the Cauchy noise is [28]:
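For reference, the standard Cauchy density is 1 / (pi * (1 + x^2)), and samples can be drawn by applying the inverse CDF tan(pi * (u - 1/2)) to a uniform variable u. The sketch below assumes this standard form (zero location, unit scale); the function names are illustrative.

```python
import math
import random


def cauchy_pdf(x):
    """Density of the standard Cauchy distribution."""
    return 1.0 / (math.pi * (1.0 + x * x))


def cauchy_from_uniform(u):
    """Inverse-CDF transform of u in (0, 1) to a standard Cauchy sample."""
    return math.tan(math.pi * (u - 0.5))


def complex_cauchy(n, seed=0):
    """n complex samples with independent standard Cauchy real and imaginary parts."""
    rng = random.Random(seed)
    return [
        complex(cauchy_from_uniform(rng.random()), cauchy_from_uniform(rng.random()))
        for _ in range(n)
    ]
```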

5.1.4. Student’s t Noise

The PDF of the Student’s t noise is [29]:

where n is the number of degrees of freedom and Γ(·) denotes the Gamma function.
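The standard Student’s t density with n degrees of freedom is Γ((n+1)/2) / (sqrt(n * pi) * Γ(n/2)) * (1 + x^2/n)^(-(n+1)/2). A sample can be generated as a standard normal variable divided by the square root of an independent chi-square variable over n. The sketch below assumes this standard (zero location, unit scale) form; the function names are illustrative.

```python
import math
import random


def student_t_pdf(x, n):
    """Density of the standard Student's t distribution with n degrees of freedom."""
    norm = math.gamma((n + 1) / 2.0) / (math.sqrt(n * math.pi) * math.gamma(n / 2.0))
    return norm * (1.0 + x * x / n) ** (-(n + 1) / 2.0)


def student_t_sample(rng, n):
    """One sample: standard normal over sqrt(chi-square(n) / n)."""
    z = rng.gauss(0.0, 1.0)
    chi2 = rng.gammavariate(n / 2.0, 2.0)  # chi-square(n) = Gamma(shape n/2, scale 2)
    return z / math.sqrt(chi2 / n)


def complex_student_t(n_samples, n, seed=0):
    """Complex samples with independent Student's t real and imaginary parts."""
    rng = random.Random(seed)
    return [
        complex(student_t_sample(rng, n), student_t_sample(rng, n))
        for _ in range(n_samples)
    ]
```

For n = 1 the density coincides with the standard Cauchy density, so smaller n means heavier tails.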

5.2. Augmented Linear System Identification

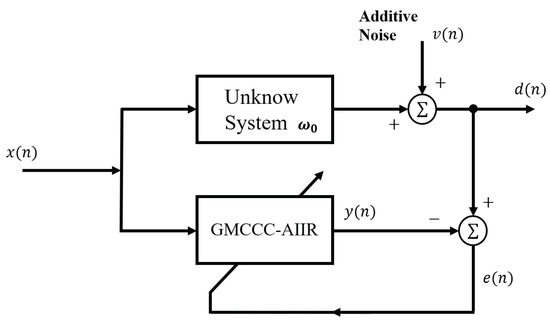

Figure 1 is the block diagram of the system identification, and the length of the adaptive filter is equal to the unknown system impulse response.

Figure 1. System Identification Configuration.

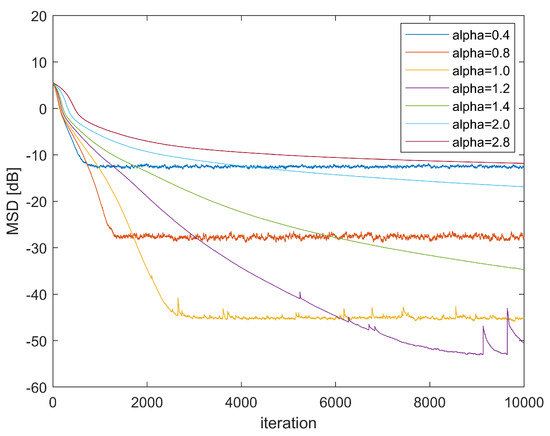

First, we demonstrate how the kernel bandwidth affects the convergence performance of GMCCC-AIIR. Figure 2 shows the convergence curves of GMCCC-AIIR with different , in which the noise is mixed Gaussian noise and . Clearly, the choice of kernel bandwidth has a significant effect on convergence. In this example, both the convergence performance and the convergence speed of the proposed algorithm improve when decreases. Generally speaking, a small bandwidth is more robust to impulse noise, setting aside the convergence rate, and the performance of the algorithm is optimal when .

Figure 2. Learning Curve in different .

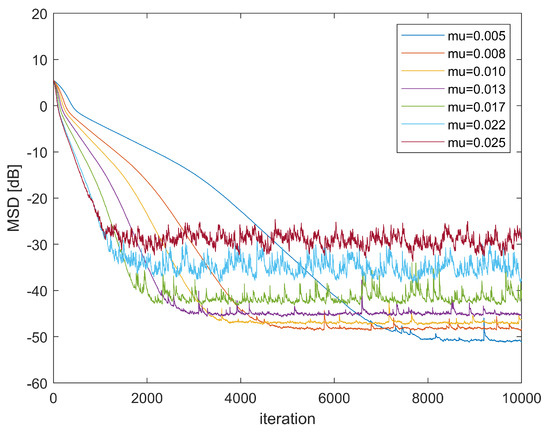

Second, the stability of GMCCC-AIIR at different step sizes is investigated. Figure 3 shows the convergence performance for different step sizes. The noise is still the mixed Gaussian noise and . The simulation results show that when the step size is large, such as , the convergence performance gets worse, and the GMCCC-AIIR will diverge if step size continues to increase, which confirms the correctness of the stability analysis in Section 4.

Figure 3. Learning Curve in different .

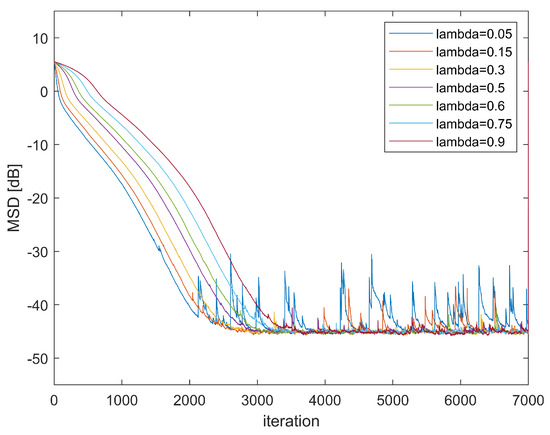

Third, we examine how the parameter affects the performance of the algorithm. Figure 4 shows the learning curves of GMCCC-AIIR with different . The noise is mixed Gaussian noise and . We can see from Figure 4 that when the parameter increases, the convergence speed slows down. However, when is too small, the GMCCC-AIIR algorithm approximates ACA-IIR, and the convergence performance is poor in the non-Gaussian noise model. Therefore, the parameter should be chosen appropriately for the situation at hand.

Figure 4. Learning Curve in different .

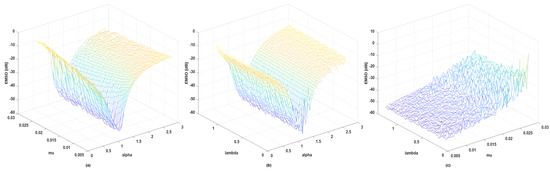

Fourth, we examine how the pairwise parameters , , and affect the steady-state excess MSD (EMSD) and give 3D plots of EMSD. The number of iterations is increased to 15,000 to ensure the convergence of the algorithm, and the additive noise is still mixed Gaussian noise. EMSD equals the average MSD of the last 1000 iterations. Figure 5 shows that the EMSD of GMCCC-AIIR mainly depends on and . The performance of the algorithm gets worse when increases, and the algorithm performs best when approaches 1. Taken together, Figure 4 and Figure 5b,c show that mainly affects the convergence speed of GMCCC-AIIR. When approaches 0, the robustness of the algorithm to non-Gaussian noise gets worse, the outliers of EMSD increase, and the algorithm may even diverge.
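The EMSD computation described above amounts to averaging the tail of a learning curve. A minimal sketch follows; it assumes the MSD curve is stored in linear scale (not dB) as a list with one value per iteration, and the function name is illustrative.

```python
def emsd(msd_curve, tail=1000):
    """Steady-state EMSD: mean of the last `tail` values of an MSD learning curve."""
    last = msd_curve[-tail:]
    return sum(last) / len(last)
```

With 15,000 iterations and `tail=1000`, this averages iterations 14,001 through 15,000.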

Figure 5. EMSD with different pairwise parameters (a) and () (b) and () (c) and ().

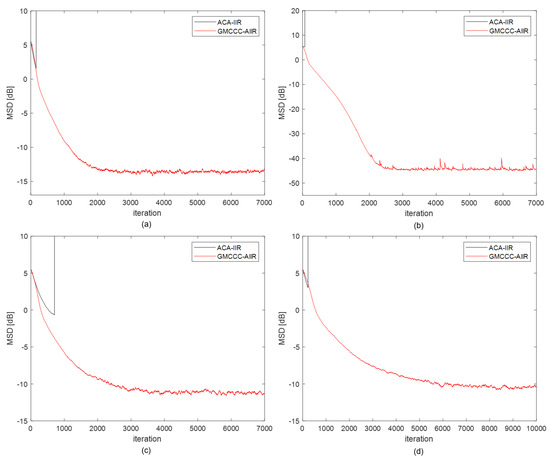

Fifth, we compare the performance of GMCCC-AIIR and ACA-IIR under four noise distributions. In the simulation, the mixed Gaussian noise and alpha-stable noise parameters are set to and , respectively, the degrees-of-freedom parameter n of the Student’s t noise is set to 2, and the Cauchy noise is in the standard form. The step-sizes are chosen such that both algorithms have almost the same initial convergence speed. The simulation results are shown in Figure 6. As expected, the proposed GMCCC-AIIR algorithm achieves significantly better steady-state performance than ACA-IIR in these non-Gaussian noise models. The ACA-IIR algorithm diverges after encountering an impulse, and its MSD then grows without bound. As a result, the convergence process cannot be observed clearly when the ACA-IIR and GMCCC-AIIR learning curves are displayed in the same coordinate system. Therefore, we limit the vertical range of all plotted results.

Figure 6. Learning Curve with different noise: (a) alpha-stable noise, (b) mixed Gaussian noise, (c) Cauchy noise, (d) Student’s t noise.

6. Conclusions

In this paper, we propose an adaptive algorithm for augmented IIR filters based on the generalized maximum complex correntropy criterion. We study its convergence and provide a bound on the step size. Moreover, the computational complexity is reduced by exploiting the redundancy in the state vector of the filter. We also show that ACA-IIR and ACLMS are special cases of GMCCC-AIIR. The simulation results verify the theoretical conclusions, show how the parameters affect the convergence performance of GMCCC-AIIR, and demonstrate the superiority of GMCCC-AIIR over the MSE-based ACA-IIR algorithm when the noise is non-Gaussian.

Author Contributions

Conceptualization, H.Z. and G.Q.; methodology, H.Z.; software, H.Z. and G.Q.; validation, G.Q.; formal analysis, H.Z.; investigation, G.Q.; resources, H.Z. and G.Q.; data curation, H.Z.; writing—original draft preparation, H.Z; writing—review and editing, G.Q.; visualization, H.Z. and G.Q.; supervision, H.Z. and G.Q.; project administration, G.Q.; funding acquisition, G.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Chongqing Municipal Training Program of Innovation and Entrepreneurship for Undergraduates (grant no. S202210635280).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Pascual Campo, P.; Anttila, L.; Korpi, D.; Valkama, M. Cascaded Spline-Based Models for Complex Nonlinear Systems: Methods and Applications. IEEE Trans. Signal Process. 2021, 69, 370–384.

2. Huang, F.; Zhang, J.; Zhang, S. Complex-valued proportionate affine projection Versoria algorithms and their combined-step-size variants for sparse system identification under impulsive noises. Digit. Signal Process. 2021, 118, 103209.

3. Mandic, D.P.; Goh, V.S.L. Complex valued nonlinear adaptive filters: Noncircularity, widely linear and neural models. In Adaptive and Learning Systems for Signal Processing, Communications, and Control; Wiley: Chichester, UK, 2009.

4. Javidi, S.; Pedzisz, M.; Goh, S.L.; Mandic, D. The Augmented Complex Least Mean Square Algorithm with Application to Adaptive Prediction Problems; Citeseer: Princeton, NJ, USA, 2008; p. 4.

5. Took, C.C.; Mandic, D.P. Adaptive IIR Filtering of Noncircular Complex Signals. IEEE Trans. Signal Process. 2009, 57, 4111–4118.

6. Khalili, A. Diffusion augmented complex adaptive IIR algorithm for training widely linear ARMA models. Signal Image Video Process. 2018, 12, 1079–1086.

7. Khalili, A.; Rastegarnia, A.; Bazzi, W.M.; Rahmati, R.G. Incremental augmented complex adaptive IIR algorithm for training widely linear ARMA model. Signal Image Video Process. 2017, 11, 493–500.

8. Sayed, A.H. Fundamentals of Adaptive Filtering; IEEE Press Wiley-Interscience: New York, NY, USA, 2003.

9. Chen, B.; Zhu, Y.; Hu, J.; Príncipe, J.C. System Parameter Identification: Information Criteria and Algorithms, 1st ed.; Elsevier: London, UK; Waltham, MA, USA, 2013.

10. Principe, J.C.; Xu, D.; Fisher, J.W., III. Information-Theoretic Learning; Springer: Berlin/Heidelberg, Germany, 2010; p. 62.

11. Plataniotis, K.N.; Androutsos, D.; Venetsanopoulos, A.N. Nonlinear Filtering of Non-Gaussian Noise. J. Intell. Robot. Syst. 1997, 19, 207–231.

12. Weng, B.; Barner, K. Nonlinear system identification in impulsive environments. IEEE Trans. Signal Process. 2005, 53, 2588–2594.

13. Schimmack, M.; Mercorelli, P. An on-line orthogonal wavelet denoising algorithm for high-resolution surface scans. J. Frankl. Inst. 2018, 355, 9245–9270.

14. Liu, W.; Pokharel, P.P.; Principe, J.C. Correntropy: Properties and Applications in Non-Gaussian Signal Processing. IEEE Trans. Signal Process. 2007, 55, 5286–5298.

15. Liu, X.; Chen, B.; Zhao, H.; Qin, J.; Cao, J. Maximum Correntropy Kalman Filter With State Constraints. IEEE Access 2017, 5, 25846–25853.

16. He, Y.; Wang, F.; Yang, J.; Rong, H.; Chen, B. Kernel adaptive filtering under generalized Maximum Correntropy Criterion. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 1738–1745.

17. Chen, B.; Xing, L.; Zhao, H.; Zheng, N.; Príncipe, J.C. Generalized Correntropy for Robust Adaptive Filtering. IEEE Trans. Signal Process. 2016, 64, 3376–3387.

18. Qian, G.; Wang, S. Generalized Complex Correntropy: Application to Adaptive Filtering of Complex Data. IEEE Access 2018, 6, 19113–19120.

19. Li, K.; Principe, J.C. Functional Bayesian Filter. IEEE Trans. Signal Process. 2022, 70, 57–71.

20. Navarro-Moreno, J. ARMA Prediction of Widely Linear Systems by Using the Innovations Algorithm. IEEE Trans. Signal Process. 2008, 56, 3061–3068.

21. Picinbono, B.; Chevalier, P. Widely linear estimation with complex data. IEEE Trans. Signal Process. 1995, 43, 2030–2033.

22. Mandic, D.; Javidi, S.; Goh, S.; Kuh, A.; Aihara, K. Complex-valued prediction of wind profile using augmented complex statistics. Renew. Energy 2009, 34, 196–201.

23. Guimarães, J.P.F.; Fontes, A.I.R.; Rego, J.B.A.; de M. Martins, A.; Príncipe, J.C. Complex Correntropy: Probabilistic Interpretation and Application to Complex-Valued Data. IEEE Signal Process. Lett. 2017, 24, 42–45.

24. Shynk, J. A complex adaptive algorithm for IIR filtering. IEEE Trans. Acoust. Speech Signal Process. 1986, 34, 1342–1344.

25. Zhao, S.; Chen, B.; Príncipe, J.C. Kernel adaptive filtering with maximum correntropy criterion. In Proceedings of the 2011 International Joint Conference on Neural Networks, San Jose, CA, USA, 31 July–5 August 2011; pp. 2012–2017.

26. Zhang, J.; Qiu, T.; Song, A.; Tang, H. A novel correntropy based DOA estimation algorithm in impulsive noise environments. Signal Process. 2014, 104, 346–357.

27. Wu, Z.; Peng, S.; Chen, B.; Zhao, H. Robust Hammerstein Adaptive Filtering under Maximum Correntropy Criterion. Entropy 2015, 17, 7149–7166.

28. Chen, B.; Xing, L.; Liang, J.; Zheng, N.; Príncipe, J.C. Steady-State Mean-Square Error Analysis for Adaptive Filtering under the Maximum Correntropy Criterion. IEEE Signal Process. Lett. 2014, 21, 880–884.

29. Wang, J.; Dong, P.; Shen, K.; Song, X.; Wang, X. Distributed Consensus Student-t Filter for Sensor Networks With Heavy-Tailed Process and Measurement Noises. IEEE Access 2020, 8, 167865–167874.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).