1. Introduction

It is well known that estimating (Shannon) entropies from finite samples is not trivial. If one naively replaces the probability

to be in “box”

i by the observed frequency,

, statistical fluctuations tend to make the distribution look less uniform, which leads to an underestimation of the entropy. There have been numerous proposals on how to estimate and eliminate this bias [

1,

2,

3,

4,

5,

6,

7,

8,

9,

10,

11,

12,

13,

14,

15,

16,

17,

18,

19,

20,

21,

22]. Some make quite strong assumptions [

5,

7]; others use Bayesian methods [

6,

11,

12,

19,

21,

22]. As pointed out in [

4,

13,

14,

17], one can devise estimators with arbitrarily small bias (for sufficiently large

N and fixed

), but these will then have very large statistical errors. As conjectured in [

4,

13,

14,

15,

17], the variance of any estimator whose bias vanishes will have a diverging variance.

Another widespread belief is that Bayesian entropy estimators cannot be outperformed by non-Bayesian ones for severely undersampled cases. The problem with Bayesian estimators is of course that they depend on a good choice of prior distributions, which is not always easy, and they tend to be slow. One positive feature of a non-Bayesian estimator proposed in [

14] is that it is extremely fast since it works precisely like the ‘naive’ (or maximum-likelihood) estimator, except that the logarithms used there are replaced by a function

defined on integers, which can be precomputed by means of a simple recursion. While the estimator of [

14] seems in general to be a reasonable compromise between bias and variance, it was shown in [

15] that it can be improved—as far as bias is concerned, at the cost of increased variance—by generalizing

to a one-parameter family of functions

.

In the present paper, we show that the Grassberger–Schürmann approach [

14,

15] can be further improved by using different functions

for each different realization

i of the random variable. Indeed, the

can be chosen such that the estimator is completely free of bias and yet has a finite variance—although, to be honest, the optimal parameters

can be found only if the exact distribution is known (in which case also the entropy can be computed exactly). We show that—even if the exact, optimal

is not known—the new estimator can reduce the bias very much, without inducing unduly large variances, provided the distribution is sufficiently much undersampled.

We test the proposed estimator numerically with simple examples, where we produce bias-free entropy estimates, e.g., from pairs of ternary variables, something which, to my knowledge, is not possible with any Bayesian method. We also use it for estimating mutual information (MI) in cases where one of the two variables is binary, and the other one can take very many values. In the limit of severe undersampling and of no obvious regularity in the true probabilities, MI cannot be estimated unambiguously. In that limit, the present algorithm seems to choose systematically a different outcome from Bayesian methods for reasons that are not yet clear.

2. Basic Formalism

In the following, we use the notation of [

14]. As in this reference, we consider

“boxes” (states, possible experimental outcomes, etc.) and

points (samples, events, and particles) distributed randomly and independently into the boxes. We assume that each box has weight

(

) with

. Thus each box

i will contain a random number

of points, with

. Their joint distribution is multinomial,

while the marginal distribution in box

i is binomial,

Our aim is to estimate the entropy,

with

, from an observation of the numbers

(in the following, all entropies are measured in “natural units”, not in bits). The estimator

will of course have both statistical errors and a bias, i.e., if we repeat this experiment, the average of

will, in general, not be equal to

H,

as will also be its variance

. Notice that for computing

, we need only Equation (

2), not the full multinomial distribution of Equation (

1). However, if we want to compute this variance, we additionally need the joint marginal distribution in two boxes,

in order to compute the covariances between different boxes. Notice that these covariances were not taken into account in [

13,

17], whence the variance estimations in these papers are, at best, approximate.

In the following, we are mostly interested in the case where we are close to the limit

, with

(the average number of points per box) being finite and small. In this limit, the variance will go to zero (because essentially one averages over many boxes), but the bias will remain finite. The binomial distribution, Equation (

2), can be replaced then by a Poisson distribution

However, as we shall see, it is in general not good advice to jump right to this limit, even if we are close to it. More generally, we shall therefore also be interested in the case of large but finite N, where also the variance is positive, and we will discuss the balance between demanding minimal bias versus minimal variance.

Indeed it is well known that the

naive (or ‘maximum-likelihood’) estimator, obtained by assuming

without fluctuations,

is negatively biased,

.

In order to estimate

H, we have to estimate

or equivalently

for each

i. Since the distribution of

depends, according to Equation (

2), on

only, we can make the rather general ansatz [

4,

14] for the estimator

with a yet unknown function

. Notice that

becomes with this ansatz a sum over strictly positive values of

. Effectively this means that we have assumed that observing an outcome

does not give any information: if

, we do not know whether this is because of statistical fluctuations or because

for that particular

i.

The resulting entropy estimator is then [

14]

with the overbar indicating an average over all boxes,

Its bias is

with

being the expectation value for a typical box (in the following we shall suppress the box index

i to simplify notation, wherever this makes sense).

In the following,

is the digamma function, and

is an exponential integral (Ref. [

23], paragraph 5.1.4). It was shown in [

14] that

which simplifies in the Poisson limit (

,

z fixed) to

Equations (

14) and (

15) are the starting points of all further analysis. In [

14], it was proposed to re-write Equation (

15) as

where

The advantages are that

can be evaluated very easily by recursion (here

is the Euler–Mascheroni constant),

and

, and neglecting the second term,

gives an excellent approximation unless

z is exceedingly small, i.e., unless the numbers of points per box are very small such that the distribution is very severely undersampled. Thus the entropy estimator proposed in [

14] was simply

Furthermore, since

is strictly positive, neglecting it gives a negative bias in

, and one can show rigorously that this bias is smaller than that of [

1,

3].

3. Schürmann and Generalized Schürmann Estimators

The easiest way to understand the Schürmann class of estimators [

15] is to define, instead of

, a one-parameter family of functions

Notice that and .

Let us first discuss the somewhat easier Poissonian limit, where

which gives

Using—to achieve greater flexibility—different parameters

for different boxes, and neglecting the second term in the last line of Equation (

20), we obtain finally by using Equation (

3)

Indeed, the last term in Equation (

20) can always be neglected for sufficiently large

a because

for any real

.

Equation (

22) might suggest that using larger

would always give an improvement because bias is reduced, but this would not take into account that larger

might lead to larger variances. However, the optimal choices of the parameters

are not obvious. Indeed, in spite of the ease of derivations in the Poissonian limit, it is much better to avoid it and to use the exact binomial expression.

For the general binomial case, the algebra is a bit more involved. By somewhat tedious but straightforward algebra, one finds that

One immediately checks that this reduces, in the limit (

,

z fixed), to Equation (

20). On the other hand, by substituting

in the integral, we obtain

Finally, by combining with Equation (

14), we find [

15]

and, using again Equation (

3),

with a correction term which is

times a sum over the integrals in Equation (

26). This correction term vanishes, if all integration ranges vanish. This happens when

for all

i, or

This is a remarkable result, as it shows that in principle, there exists always an estimator which has zero bias and yet finite variance. In [

15], one single parameter

a was used, which is why we call our method a generalized Schürmann estimator.

When all box weights are small,

for all

i, then these bias-optimal values

are very large. However, for two boxes with

, e.g., the bias vanishes already for

, i.e., for the estimator of Grassberger [

14]!

In order to test the latter, we drew

triplets of random bits (i.e.,

,

), and estimated

and

for each triplet. From these, we computed averages and variances, with the results

bits and

bits. We should stress that the latter requires the precise form of Equation (

27) to be used, with

neither replaced by

nor by

.

Since there is no free lunch, there must of course be some problems in the limit when parameters

are chosen to be nearly bias-optimal. One problem is that one cannot, in general, choose

according to Equation (

28), because the

is unknown. In addition, it is in this limit (and more generally when

) that variances blow up. In order to see this, we have to discuss in more detail the properties of the functions

.

According to Equation (

19),

is a sum of two terms, both of which can be computed, for all positive integer

n, by recursion. The digamma function

satisfies

Let us denote the second term in Equation (

19) as

. It satisfies the recursion

Thus, while

is monotonic and slowly increasing,

has alternating sign and increases, for

, exponentially with

n. As a consequence, also

is non-monotonic and diverges exponentially with

n, whenever

. Therefore an estimator such as

gets, unless all

are very small, increasingly large contributions of alternating signs. As a result, the variances will blow up, unless one is very careful to keep a balance between bias and variance.

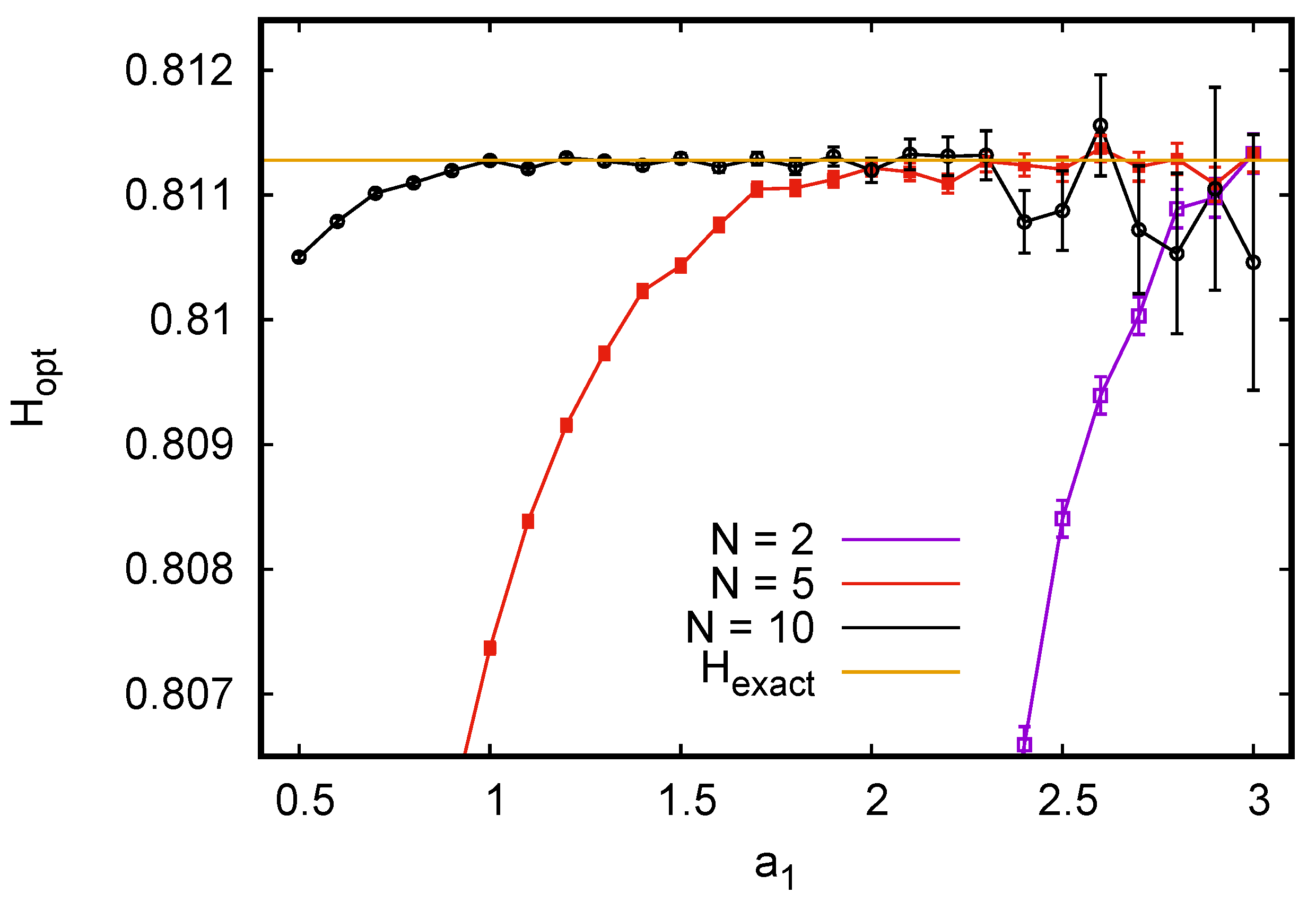

To illustrate this, we drew tuples of independent and identically distributed binary variables

with

and

. For

, we chose

because this should minimize the bias and should not create problems with the variance. We should expect such problems, however, if we would take

, although this would reduce the bias to zero. Indeed we found for

that the variance of the estimator exploded for all practical purposes as soon as

, while the results were optimal for

(bias and statistical error were both

for

tuples). On the other hand, for pairs (

), we had to use much larger values of

for optimality, and

gave indeed the best results (see

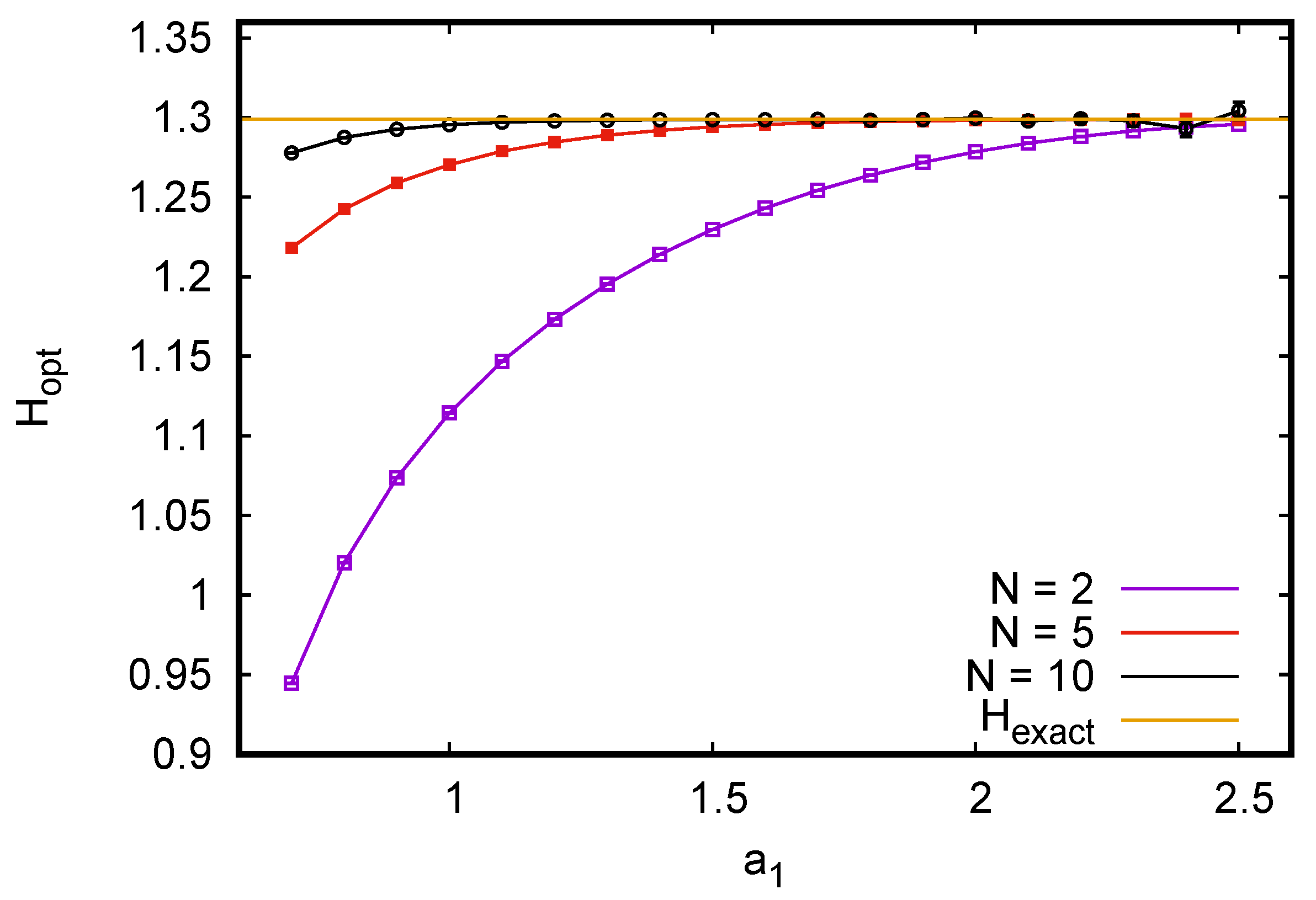

Figure 1). A similar plot for ternary variables is shown in

Figure 2, where we see again that

a-values near the bias-optimal ones gave estimates with zero almost zero bias and acceptable variance for the most undersampled case

. Again, using the the exact bias-optimal values would have given unacceptably large variances for large

N.

The message to be learned from this is that we should always keep all sufficiently small such that is not much larger than 1 for any of the observed values of .

4. Estimating Mutual and Conditional Information

Finally, we apply our estimator to two problems of mutual information (MI) estimation discussed in [

22] (actually, the problems were originally proposed by previous authors, but we shall compare our results mainly to those in [

22]). In each of these problems, there are two discrete random variables:

X has many (several thousand) possible values, while

Y is binary. Moreover, the marginal distribution of

Y is uniform,

, while the

X distributions are highly non-uniform. Finally—and that is crucial—the joint distributions show no obvious regularities.

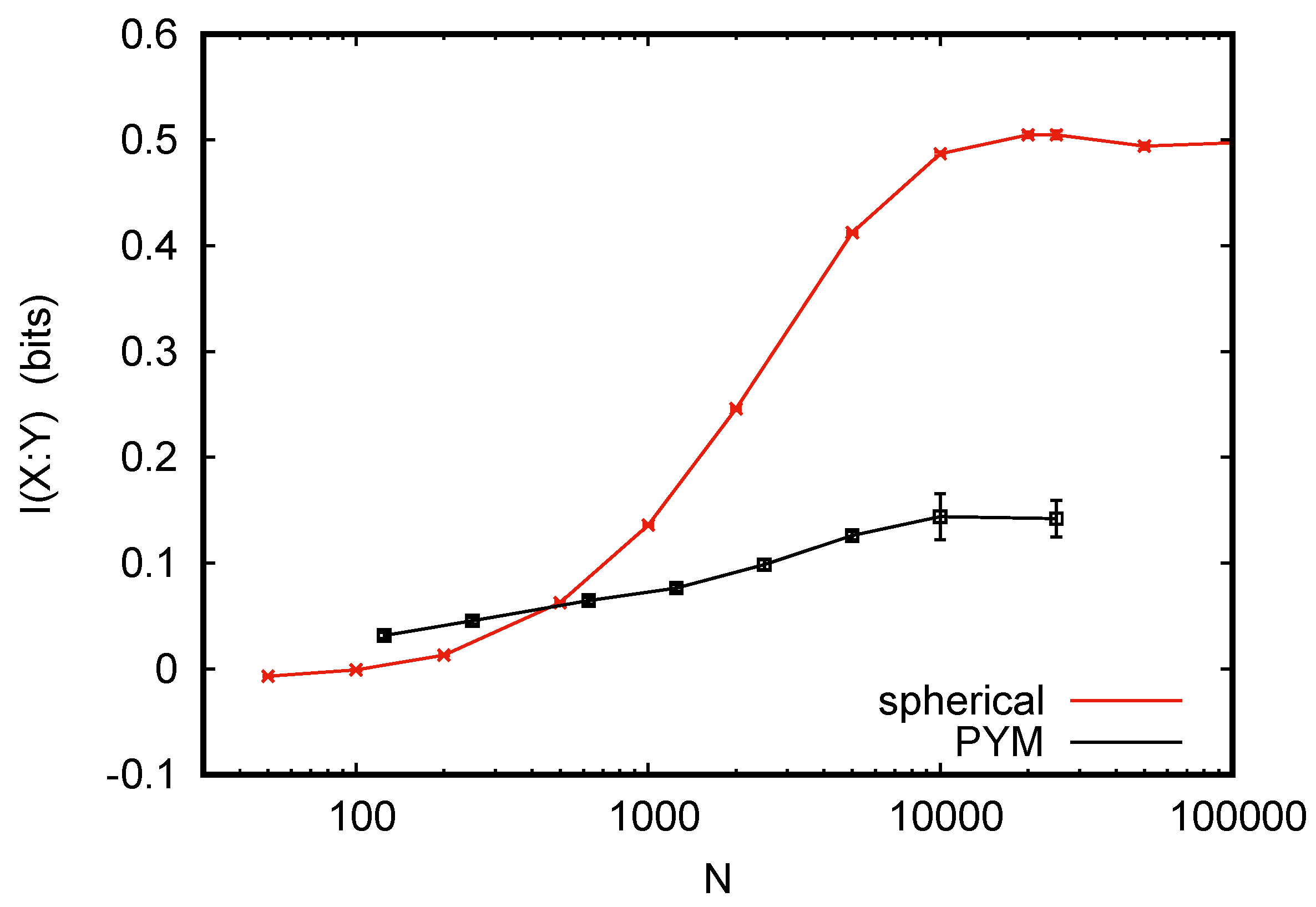

The MI is estimated as . Since bit, the problem essentially burns down to estimate the conditional probabilities . The data are given in terms of a large number of independent and identically distributed sampled pairs (250,000 pairs for problem I, called ‘PYM’ in the following, and 50,000 pairs for problem II, called ‘spherical’ in the following). The task is to draw random subsamples of size N, to estimate the MI from each subsample, and to calculate averages and statistical widths from these estimates.

Results are shown in

Figure 3. For large

N, our data agree perfectly with those in [

22] and in the previous papers cited in [

22]. However, while the MI estimates in these previous papers all increase with decreasing

N, and those in [

22] stay essential constant (as we would expect, since a good entropy estimator should not depend on

N, and conditional entropies should decrease with

N for not so good estimators), our estimated MI decreases to zero for small

N.

This looks at first sight like a failure of our method, but it is not. As we said, the joint distributions show no regularities. For small N most values of X will show up at most once, and if we write the sequence of values in a typical tuple, it will look like a perfectly random binary string. The modeler knows that it actually is not random, because there are correlations between X and Y. However, no algorithm can know this, and any good algorithm should conclude that bit. Why, then, was this not found in the previous analyses? In all these, Bayesian estimators were used. If the priors used in these estimators were chosen in view of the special structures in the data (which are, as we should stress again, not visible from the data, as long as these are severely undersampled), then the algorithms can, of course, make use of these structures and avoid the conclusion that bit.

5. Conclusions

In conclusion, we gave an entropy estimator with zero bias and finite variance. It builds on an estimator by Schürmann [

15], which itself is a generalization of [

14]. It involves a real-valued parameter for each possible realization of the random variable, and bias is reduced to zero by choosing these parameters properly. However, this choice would require that we know already the distribution, which is of course not the case. Nevertheless we can reduce the bias very much for severely undersampled cases. In cases with moderate undersampling, choosing these zero-bias parameters would give very large variances and would thus be useless. Nevertheless, by judicious parameter choices, we can obtain extremely good entropy estimates. Finding good parameters is non-trivial, but is made less difficult by the fact that the method is very fast.

Finally, we pointed out that Bayesian methods, which have been very popular in this field, have the danger of choosing “too good” priors, i.e., choosing priors which are not justified by the data themselves and are thus misleading, although both the bias and the observed variances seem to be small.

I thank Thomas Schürmann for the numerous discussions, and Damián Hernández for both discussions and for sending me the data for

Figure 3.