Abstract

The generalized likelihood ratio test (GLRT) for composite hypothesis testing problems is studied from a geometric perspective. An information-geometrical interpretation of the GLRT is proposed based on the geometry of curved exponential families. Two geometric pictures of the GLRT are presented: one for the case where the unknown parameters are the same under the null and alternative hypotheses, and one for the case where they differ. A one-dimensional curved Gaussian distribution is used as an example to elucidate the geometric realization of the GLRT. The asymptotic performance of the GLRT is discussed based on the proposed geometric representation. The study provides an alternative theoretical perspective for understanding problems of statistical inference.

1. Introduction

The problem of hypothesis testing under statistical uncertainty arises naturally in many practical contexts. In these cases, the probability density functions (PDFs) under either or both hypotheses need not be completely specified, so unknown parameters are included in the PDFs to express the statistical uncertainty in the model. The class of hypothesis testing problems with unknown parameters in the PDFs is commonly referred to as composite hypothesis testing [1]. The generalized likelihood ratio test (GLRT) is one of the most widely used approaches to composite hypothesis testing [2]. It estimates the unknown parameters via maximum likelihood estimation (MLE) and then implements a likelihood ratio test. In practice, the GLRT appears to be asymptotically optimal in the sense of the Neyman–Pearson criterion and usually gives satisfactory results [3]. As the GLRT combines estimation and detection to deal with the composite hypothesis testing problem, its performance generally depends on how well both of these inference tasks are performed. However, no general analytical result on the performance of the GLRT is available in the literature [1].

In recent years, the development of new theories in statistical inference has been characterized by the emergence of geometric approaches, whose capabilities allow one to analyze statistical problems from a unified perspective. Linking the GLRT to the geometric nature of estimation and detection therefore provides a new viewpoint on the GLRT. The general problem of composite hypothesis testing involves a decision between two hypotheses whose PDFs are themselves functions of unknown parameters. One approach to understanding the performance limitations of statistical inference is via the theory of information geometry. In this context, a family of probability distributions with a natural geometric structure is defined as a statistical manifold [4]. Information geometry studies the intrinsic properties of statistical manifolds, which are endowed with a Riemannian metric and a family of affine connections derived from the log-likelihood functions of probability distributions [5]. It provides a way of analyzing the geometric properties of statistical models by regarding them as geometric objects.

The geometric theory of statistics was first introduced by Rao in 1945 [6], who regarded the Fisher information matrix as a Riemannian metric on the manifold of probability distributions. In 1972, Chentsov introduced a one-parameter family of affine connections [7]. Efron [8] subsequently defined the concept of statistical curvature and discussed its basic role in the higher-order asymptotic theory of statistical inference. In 1982, Amari [5,9] developed a duality structure theory that unified these results in a differential-geometric framework, leading to a large number of applications.

In the area of hypothesis testing, geometric perspectives have gained relevance in the analysis and development of new approaches to various testing and detection problems. For example, Kass and Vos [10] provided a detailed introduction to the geometric foundations of asymptotic inference for curved exponential families. Garderen [11] presented a global analysis of the effects of curvature on hypothesis testing. Dabak [12] induced a geometric structure on the manifold of probability distributions and imposed a detection-theoretic geometry on it, while Westover [13] discussed the asymptotic limits of multiple hypothesis testing from a geometric perspective. Among new approaches to hypothesis testing, Hoeffding [14] proposed an asymptotically optimal test for multinomial distributions. In this test, the test statistic is expressed in terms of the Kullback–Leibler divergence (KLD) between the empirical distribution of the measurements and the null-hypothesis distribution, and the alternative distribution is left unrestricted. In signal detection, Barbaresco [15,16,17] studied the geometry of the Bruhat–Tits complete metric space and the upper-half Siegel space. He also introduced a matrix constant false alarm rate (CFAR) detector that improves on the detection performance of the classical CFAR detector.

As more and more new analyses and approaches benefit from geometric and information-theoretic perspectives on statistics, it is worthwhile to clarify the geometry of existing problems, which may suggest new ways of dealing with them. In this paper, a geometric interpretation of the GLRT is sought from the perspective of information geometry. Two pictures of the GLRT are presented, one for the case where the unknown parameters are the same under each hypothesis and one for the case where they differ. Under this interpretation, both the detection and the estimation associated with the GLRT are regarded as geometric operations on the statistical manifold. For generality, curved exponential families [9], which include many of the most commonly used distributions, are adopted as the statistical model of the hypothesis testing problems. A one-dimensional curved Gaussian distribution is used as an example to elucidate the geometric realization of the GLRT. The geometric structure of curved exponential families developed by Efron [8] in 1975 and Amari [9] in 1982 provides the theoretical foundation for the analysis. The geometric formulation of the GLRT presented in this paper makes it possible to transfer several advanced notions and results of information geometry to the performance analysis of the GLRT.

The main contributions of this paper are summarized as follows:

- A geometric interpretation of the GLRT is proposed based on the differential geometry of curved exponential families and the duality structure theory developed by Amari [9]. Two geometric pictures of the GLRT are presented, providing an alternative theoretical perspective for understanding problems of statistical inference.

- The asymptotic performance of the GLRT is discussed based on the proposed geometric representation. The information loss incurred when performing the MLE with a finite number of samples is related to the flatness of the submanifolds determined by the GLRT model.

In Section 2, information-theoretic viewpoints on the likelihood ratio test and maximum likelihood estimation are introduced, and the equivalences among the Kullback–Leibler divergence, the likelihood ratio test, and the MLE are highlighted. The principles of information geometry are briefly introduced in Section 3. In Section 4, the geometric interpretation of the GLRT is presented based on the geometry of curved exponential families. This is followed by an example of the GLRT involving a curved Gaussian distribution with one unknown parameter, and by a further discussion of the geometry of the GLRT. Finally, conclusions are drawn in Section 5.

2. Information-Theoretic Viewpoints on Likelihood Ratio Test and Maximum Likelihood Estimation

In statistics, the likelihood ratio test and maximum likelihood estimation are two fundamental concepts related to the GLRT. The likelihood ratio test is a very general method for testing model assumptions, while maximum likelihood estimation is one of the most common approaches to parameter estimation. Both are associated with the Kullback–Leibler divergence [18], also known as relative entropy [19] in information theory.

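For a sequence of independent and identically distributed (i.i.d.) observations $\mathbf{x} = \{x_1, \dots, x_N\}$, the binary hypothesis testing problem is to decide whether the sequence originates from the null hypothesis $H_0$ or from the alternative hypothesis $H_1$. The corresponding probability distributions are $p_0(x)$ and $p_1(x)$, respectively. The likelihood ratio is given by

$$\Lambda(\mathbf{x}) = \prod_{n=1}^{N} \frac{p_1(x_n)}{p_0(x_n)}. \tag{1}$$

Let $\hat{p}(x)$ be the empirical distribution (i.e., the frequency histogram) of the observed data. For large $N$, in accordance with the strong law of large numbers [20], the log-likelihood ratio test in the Neyman–Pearson formulation is equivalent to

$$\frac{1}{N}\log \Lambda(\mathbf{x}) = \sum_{x} \hat{p}(x) \log \frac{p_1(x)}{p_0(x)} = D(\hat{p} \,\|\, p_0) - D(\hat{p} \,\|\, p_1) \;\mathop{\gtrless}_{H_0}^{H_1}\; \gamma, \tag{2}$$

where $\gamma$ is a threshold. The symbol $\mathop{\gtrless}_{H_0}^{H_1}$ means that $H_1$ is decided if “>” holds and $H_0$ is decided otherwise. The quantity

$$D(\hat{p} \,\|\, p_i) = \sum_{x} \hat{p}(x) \log \frac{\hat{p}(x)}{p_i(x)}, \quad i = 0, 1, \tag{3}$$

is the KLD from $\hat{p}$ to $p_i$. Note that $x$ is dropped from the notation $D$ to simplify the KLD expression; this causes no confusion.

Equation (2) indicates that the likelihood ratio test is equivalent to choosing the hypothesis whose distribution is “closer” to the empirical distribution in the sense of the KLD. This holds exactly for $\gamma = 0$; a nonzero threshold biases the choice toward one hypothesis. From a geometric viewpoint, the test can be regarded as a generalized minimum-dissimilarity detector.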
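As a quick numerical check of (2), the sketch below computes two quantities for an illustrative sample. The first is the normalized log-likelihood ratio; the second is the difference of KLDs built from the sample's empirical distribution. For a finite alphabet the two coincide exactly. The three-symbol alphabet, the distributions $p_0$ and $p_1$, and the sample are all hypothetical, chosen only for illustration.

```python
import math
from collections import Counter

def kl(p, q):
    """KLD D(p || q) between distributions given as dicts over the same finite alphabet."""
    return sum(pv * math.log(pv / q[x]) for x, pv in p.items() if pv > 0)

def empirical(samples):
    """Empirical distribution (normalized frequency histogram) of the samples."""
    counts = Counter(samples)
    n = len(samples)
    return {x: c / n for x, c in counts.items()}

# Hypothetical distributions under H0 and H1 on the alphabet {0, 1, 2}
p0 = {0: 0.5, 1: 0.3, 2: 0.2}
p1 = {0: 0.2, 1: 0.3, 2: 0.5}
samples = [0, 2, 2, 1, 2, 0, 2, 1, 2, 2]  # hypothetical observations

# Left-hand side of (2): (1/N) log of the likelihood ratio (1)
llr = sum(math.log(p1[x] / p0[x]) for x in samples) / len(samples)

# Right-hand side of (2): difference of KLDs from the empirical distribution
p_hat = empirical(samples)
kld_diff = kl(p_hat, p0) - kl(p_hat, p1)

print(f"{llr:.6f} {kld_diff:.6f}")
```

Deciding $H_1$ whenever the printed value exceeds $\gamma$ is therefore the same as deciding $H_1$ whenever $\hat{p}$ is sufficiently closer to $p_1$ than to $p_0$ in KLD.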

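Now consider a slightly different problem. The observations $\mathbf{x} = \{x_1, \dots, x_N\}$ come from a statistical model $p(x \mid \boldsymbol{\theta})$ with unknown parameters $\boldsymbol{\theta}$, and the task is to estimate $\boldsymbol{\theta}$ from $\mathbf{x}$. The likelihood function for this estimation problem is

$$L(\boldsymbol{\theta}) = \prod_{n=1}^{N} p(x_n \mid \boldsymbol{\theta}). \tag{4}$$

Similarly, for large $N$, maximizing the likelihood (4) to find the maximum likelihood estimate $\hat{\boldsymbol{\theta}}$ is equivalent to finding the $\boldsymbol{\theta}$ that minimizes the KLD $D(\hat{p} \,\|\, p_{\boldsymbol{\theta}})$ from the empirical distribution $\hat{p}$. The reason is that $\frac{1}{N}\log L(\boldsymbol{\theta}) = -H(\hat{p}) - D(\hat{p} \,\|\, p_{\boldsymbol{\theta}})$, and the entropy $H(\hat{p})$ does not depend on $\boldsymbol{\theta}$. That is,

$$\hat{\boldsymbol{\theta}} = \arg\max_{\boldsymbol{\theta}} L(\boldsymbol{\theta}) = \arg\min_{\boldsymbol{\theta}} D\big(\hat{p} \,\|\, p(x \mid \boldsymbol{\theta})\big), \tag{5}$$

where the empirical distribution $\hat{p}(x)$ is used as a surrogate for the true distribution of the data.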
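To illustrate (5), the sketch below fits a Bernoulli parameter $\theta$ in two ways over the same grid. One search maximizes the average log-likelihood; the other minimizes $D(\hat{p} \,\|\, p_\theta)$. The Bernoulli model, the sample, and the grid resolution are assumptions made for illustration. Both searches land on the sample frequency, which is the known closed-form MLE.

```python
import math

samples = [1, 0, 1, 1, 0, 1, 1, 0, 1, 1]  # hypothetical binary observations
n = len(samples)
k = sum(samples)
p_hat = {1: k / n, 0: 1 - k / n}  # empirical distribution

def avg_log_likelihood(theta):
    """(1/N) log L(theta) for i.i.d. Bernoulli(theta) observations."""
    return (k * math.log(theta) + (n - k) * math.log(1 - theta)) / n

def kl_to_model(theta):
    """D(p_hat || p_theta) for the Bernoulli model."""
    model = {1: theta, 0: 1 - theta}
    return sum(p * math.log(p / model[x]) for x, p in p_hat.items() if p > 0)

grid = [i / 1000 for i in range(1, 1000)]  # grid on the open interval (0, 1)
theta_ml = max(grid, key=avg_log_likelihood)
theta_kl = min(grid, key=kl_to_model)
print(theta_ml, theta_kl, k / n)
```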

The above results provide an information-theoretic view of hypothesis testing and maximum likelihood estimation. Because both are expressed through differences or minima of information divergences, they have clear geometric meaning and can be analyzed within the framework of information geometry. This yields additional insights into these statistical problems and their geometric interpretations.

3. Principles of Information Geometry

3.1. Statistical Manifold

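Information geometry studies the natural geometric structure of a parameterized family of probability distributions $S = \{p(x \mid \boldsymbol{\theta})\}$. The family is specified by a parameter vector $\boldsymbol{\theta} = (\theta^1, \dots, \theta^n)$ taking values in an open subset of $\mathbb{R}^n$, and $x$ denotes samples of a random variable $X$. Suppose the probability measure on the sample space is continuous and differentiable and the mapping $\boldsymbol{\theta} \mapsto p(x \mid \boldsymbol{\theta})$ is injective [5]. Then the family $S$ is regarded as a statistical manifold with $\boldsymbol{\theta}$ as its coordinate system [4].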

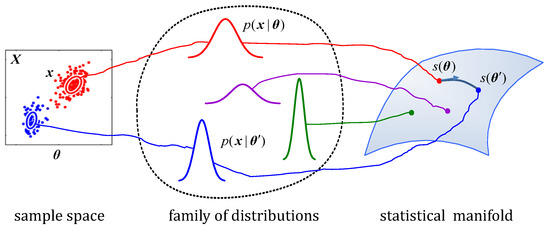

Figure 1 shows a diagram of a statistical manifold. For a given parameter vector $\boldsymbol{\theta}$, a measurement $x$ in the sample space is an instantiation of the probability distribution $p(x \mid \boldsymbol{\theta})$. Each $p(x \mid \boldsymbol{\theta})$ in the family corresponds to a point on the manifold $S$. The $n$-dimensional statistical manifold is thus composed of the parameterized family of probability distributions, with $\boldsymbol{\theta}$ as a coordinate system of $S$.

Figure 1.

Diagram of a statistical manifold. The two marked points denote the parameters of the family of distributions for different samples. The curve connecting them on the statistical manifold $S$ represents a geodesic (the shortest line) between the two points. The length of the geodesic serves as a distance measure between two points on the manifold. The arrow on the geodesic starting from the first point denotes the tangent vector, which gives the direction of the geodesic.

Various families of probability distributions correspond to specific structures of the statistical manifold. Information geometry takes the statistical properties of samples as the geometric structure of a statistical manifold, and utilizes differential geometry methods to measure the variation of information contained in the samples.

3.2. Fisher Information Metric and Affine Connections

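The metric and the connections of a manifold are two important concepts in information geometry. For a statistical manifold consisting of a parameterized family of probability distributions, the Fisher information matrix (FIM) is usually adopted as the Riemannian metric tensor of the manifold [6]. It defines the inner product between tangent vectors at a point on the manifold and is denoted by $G(\boldsymbol{\theta}) = [g_{ij}(\boldsymbol{\theta})]$, with

$$g_{ij}(\boldsymbol{\theta}) = E_{\boldsymbol{\theta}}\!\left[\frac{\partial \log p(x \mid \boldsymbol{\theta})}{\partial \theta^i}\, \frac{\partial \log p(x \mid \boldsymbol{\theta})}{\partial \theta^j}\right], \quad i, j = 1, \dots, n, \tag{6}$$

where the score functions $\{\partial \log p(x \mid \boldsymbol{\theta}) / \partial \theta^i\}$ are regarded as a basis for a vector space of random variables. The tangent space of $S$ at $\boldsymbol{\theta}$, denoted $T_{\boldsymbol{\theta}}(S)$, is identified with this vector space. Based on this definition, the FIM determines how information distance is measured on the statistical manifold.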
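To make (6) concrete, the sketch below estimates the FIM of the univariate Gaussian family $N(\mu, \sigma^2)$ with coordinates $\boldsymbol{\theta} = (\mu, \sigma)$. It averages outer products of the score over simulated samples and compares the result with the known closed form $\mathrm{diag}(1/\sigma^2,\, 2/\sigma^2)$. The choice of family, parameter values, sample size, and seed are assumptions made only for illustration.

```python
import random

def score(x, mu, sigma):
    """Score vector (d/dmu, d/dsigma) of log N(x; mu, sigma^2)."""
    z = (x - mu) / sigma
    return (z / sigma, (z * z - 1.0) / sigma)

def fim_monte_carlo(mu, sigma, n=200_000, seed=0):
    """Estimate g_ij = E[d_i log p * d_j log p] by averaging score outer products."""
    rng = random.Random(seed)
    g = [[0.0, 0.0], [0.0, 0.0]]
    for _ in range(n):
        s = score(rng.gauss(mu, sigma), mu, sigma)
        for i in range(2):
            for j in range(2):
                g[i][j] += s[i] * s[j] / n
    return g

G = fim_monte_carlo(mu=1.0, sigma=2.0)
# Closed form for theta = (mu, sigma) with sigma = 2: diag(0.25, 0.5)
print([[round(v, 3) for v in row] for row in G])
```

The off-diagonal entry is close to zero because, for this family, the mean and standard-deviation coordinates are orthogonal under the Fisher metric.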

Consider the tangent spaces $T_{\boldsymbol{\theta}}(S)$ and $T_{\boldsymbol{\theta} + d\boldsymbol{\theta}}(S)$ at two neighboring points $\boldsymbol{\theta}$ and $\boldsymbol{\theta} + d\boldsymbol{\theta}$, where $d$ denotes the differential. To make these two tangent spaces comparable, an affine connection is needed. When the connection coefficients are all identically zero, $S$ is a flat manifold that “locally looks like” a Euclidean space, with zero curvature everywhere. The most commonly used connections are the $\alpha$-connections [9],

$$\Gamma^{(\alpha)}_{ijk}(\boldsymbol{\theta}) = E_{\boldsymbol{\theta}}\!\left[\left(\partial_i \partial_j \ell + \frac{1 - \alpha}{2}\, \partial_i \ell\, \partial_j \ell\right) \partial_k \ell\right], \tag{7}$$

where $\Gamma^{(\alpha)}_{ijk}$ denotes the connection coefficients with $i, j, k = 1, \dots, n$, and

$$\ell = \ell(x; \boldsymbol{\theta}) = \log p(x \mid \boldsymbol{\theta}), \qquad \partial_i = \frac{\partial}{\partial \theta^i}. \tag{8}$$

In (7), $\alpha = 0$ corresponds to the Levi–Civita connection, $\alpha = 1$ defines the e-connection, and $\alpha = -1$ defines the m-connection. Under the e-connection, an exponential family is a flat manifold in its natural parameter coordinates $\boldsymbol{\theta}$. Under the m-connection, a mixture family is a flat manifold in its expectation parameter coordinates $\boldsymbol{\eta}$ [9]. Statistical inference for exponential families benefits greatly from the geometric properties of flat manifolds. Using the methods of differential geometry, many additional insights into the intrinsic structure of probability distributions can be obtained, which opens a new perspective on the analysis of statistical problems. In the next section, a geometric interpretation of the GLRT is developed based on these principles of information geometry.
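The flatness statement can be checked in the simplest case. The sketch below evaluates the single coefficient $\Gamma^{(\alpha)}_{111}$ of (7) for the Bernoulli family, written in its natural coordinate $\theta = \log(\eta/(1-\eta))$. It does so by exact summation over $x \in \{0, 1\}$. The e-connection coefficient ($\alpha = 1$) vanishes identically. For $\alpha \neq 1$, the coefficient equals $\frac{1-\alpha}{2}\,\eta(1-\eta)(1-2\eta)$, which is nonzero unless $\eta = 1/2$. The Bernoulli family and the value $\theta = 0.8$ are illustrative choices, not taken from the paper.

```python
import math

def alpha_connection_bernoulli(theta, alpha):
    """Gamma^(alpha)_{111} of (7) for Bernoulli in its natural coordinate theta (exact expectation)."""
    eta = 1.0 / (1.0 + math.exp(-theta))   # expectation parameter, eta = psi'(theta)
    total = 0.0
    for x, prob in ((1, eta), (0, 1.0 - eta)):
        d1 = x - eta                       # first derivative of l = theta*x - log(1 + e^theta)
        d2 = -eta * (1.0 - eta)            # second derivative; it does not depend on x
        total += prob * (d2 + 0.5 * (1.0 - alpha) * d1 * d1) * d1
    return total

for alpha in (1.0, 0.0, -1.0):
    print(alpha, alpha_connection_bernoulli(0.8, alpha))
```

The e-connection term vanishes because the second derivative of the log-likelihood is non-random in natural coordinates, and the score has zero mean.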

4. Geometry of the Generalized Likelihood Ratio Test

As a general treatment, the curved exponential families, which encapsulate many important distributions for real-world problems, are considered as the statistical model for the hypothesis testing problems discussed in this paper. In this section, the MLE solution to parameter estimation for curved exponential families is derived. We then present two pictures of the GLRT, which are sketched based on the geometric structure of the curved exponential families developed by Efron [8] in 1975 and Amari [9] in 1982, to illustrate the information geometry of the GLRT. An example of the GLRT for a curved Gaussian distribution with a single unknown parameter is given, which is followed by a further discussion on the geometric formulation of the GLRT.

4.1. The MLE Solution to Statistical Estimation for Curved Exponential Families

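Exponential families include many of the most commonly used distributions, such as the normal, exponential, gamma, beta, Poisson, and Bernoulli distributions [21]. Curved exponential families are distributions whose natural parameters are nonlinear functions of “local” parameters. The canonical form of a curved exponential family [9] is

$$p(\mathbf{x}; \mathbf{u}) = \exp\left\{\boldsymbol{\theta}(\mathbf{u})^{\mathsf T} \mathbf{t}(\mathbf{x}) - \psi\big(\boldsymbol{\theta}(\mathbf{u})\big)\right\}, \tag{9}$$

where $\mathbf{x}$ is a vector of samples and $\boldsymbol{\theta} = (\theta^1, \dots, \theta^n)$ are the natural parameters. The local parameters $\mathbf{u} = (u^1, \dots, u^m)$, $m < n$, are the parameters of interest to be estimated, and they determine $\boldsymbol{\theta} = \boldsymbol{\theta}(\mathbf{u})$ in (9). The vector $\mathbf{t}(\mathbf{x}) = (t_1(\mathbf{x}), \dots, t_n(\mathbf{x}))$ denotes the sufficient statistics with respect to $\boldsymbol{\theta}$, which are computed from samples in the sample space $X$. The function $\psi(\boldsymbol{\theta})$ is the potential function of the curved exponential family. It is found from the normalization condition $\int p(\mathbf{x}; \mathbf{u})\, d\mathbf{x} = 1$, i.e.,

$$\psi(\boldsymbol{\theta}) = \log \int \exp\left\{\boldsymbol{\theta}^{\mathsf T} \mathbf{t}(\mathbf{x})\right\} d\mathbf{x}. \tag{10}$$

The term “curved” reflects the fact that the family (9) is a (generally curved) submanifold of the full exponential family, obtained through the embedding $\mathbf{u} \mapsto \boldsymbol{\theta}(\mathbf{u})$.

Let $\ell(\mathbf{x}; \mathbf{u}) = \log p(\mathbf{x}; \mathbf{u})$ be the log-likelihood, and let $\mathbf{B}(\mathbf{u}) = \partial \boldsymbol{\theta} / \partial \mathbf{u}$ be the $n \times m$ Jacobian matrix of the natural parameter $\boldsymbol{\theta}(\mathbf{u})$. According to (9),

$$\frac{\partial \ell(\mathbf{x}; \mathbf{u})}{\partial \mathbf{u}} = \mathbf{B}^{\mathsf T}(\mathbf{u}) \left[\mathbf{t}(\mathbf{x}) - \frac{\partial \psi(\boldsymbol{\theta})}{\partial \boldsymbol{\theta}}\right], \tag{11}$$

where $\partial \psi / \partial \boldsymbol{\theta}$ is the expectation of the sufficient statistics $\mathbf{t}(\mathbf{x})$, i.e.,

$$\boldsymbol{\eta} = E_{\boldsymbol{\theta}}\left[\mathbf{t}(\mathbf{x})\right] = \frac{\partial \psi(\boldsymbol{\theta})}{\partial \boldsymbol{\theta}}. \tag{12}$$

Here $\boldsymbol{\eta}$ is called the expectation parameter, and it provides the mixture-family coordinates of the distribution [4]. The natural parameter and the expectation parameter are connected by the Legendre transformation [9],

$$\psi(\boldsymbol{\theta}) + \varphi(\boldsymbol{\eta}) - \boldsymbol{\theta}^{\mathsf T} \boldsymbol{\eta} = 0, \tag{13}$$

where $\varphi$ is defined by

$$\varphi(\boldsymbol{\eta}) = \max_{\boldsymbol{\theta}'} \left\{\boldsymbol{\theta}'^{\mathsf T} \boldsymbol{\eta} - \psi(\boldsymbol{\theta}')\right\}. \tag{14}$$

Therefore,

$$\boldsymbol{\eta} = \frac{\partial \psi(\boldsymbol{\theta})}{\partial \boldsymbol{\theta}}, \qquad \boldsymbol{\theta} = \frac{\partial \varphi(\boldsymbol{\eta})}{\partial \boldsymbol{\eta}}. \tag{15}$$

Now suppose $N$ i.i.d. samples are available, with averaged sufficient statistics $\bar{\mathbf{t}} = \frac{1}{N} \sum_{k=1}^{N} \mathbf{t}(\mathbf{x}_k)$. Write $\boldsymbol{\eta}(\mathbf{u})$ for $\boldsymbol{\eta}(\boldsymbol{\theta}(\mathbf{u}))$. The maximum likelihood estimator $\hat{\mathbf{u}}$ of the local parameter in (9) is then obtained from the likelihood equation

$$\mathbf{B}^{\mathsf T}(\hat{\mathbf{u}}) \left[\bar{\mathbf{t}} - \boldsymbol{\eta}(\hat{\mathbf{u}})\right] = \mathbf{0}. \tag{16}$$

Equation (16) indicates that the MLE can be found by projecting the data point $\bar{\mathbf{t}}$ onto the submanifold $\boldsymbol{\eta}(\mathbf{u})$. The projection is chosen so that the residual $\bar{\mathbf{t}} - \boldsymbol{\eta}(\hat{\mathbf{u}})$ is orthogonal to the tangent vectors of $\boldsymbol{\theta}(\mathbf{u})$, i.e., to the columns of $\mathbf{B}$. The residual and $\mathbf{B}$ live in two different spaces: the expectation parameter space $H$ and the natural parameter space $\Theta$. The inner product between these dual spaces is therefore defined as $\langle \mathbf{a}, \mathbf{b} \rangle = \mathbf{a}^{\mathsf T} \mathbf{M} \mathbf{b}$ with a metric $\mathbf{M}$; for the flat manifold, the identity matrix serves as $\mathbf{M}$.

By analogy with the MLE for the universal distribution given by (5), Hoeffding [14] presented another interpretation of the MLE for the curved exponential family. In this interpretation, $\boldsymbol{\eta}(\hat{\mathbf{u}})$ is the point of $\boldsymbol{\eta}(\mathbf{u})$ in $H$ that lies closest to the data point $\bar{\mathbf{t}}$ in the sense of the KLD, i.e.,

$$\hat{\mathbf{u}} = \arg\min_{\mathbf{u}} D\big(\bar{\mathbf{t}} \,\|\, \boldsymbol{\eta}(\mathbf{u})\big), \tag{17}$$

where $D(\bar{\mathbf{t}} \,\|\, \boldsymbol{\eta}(\mathbf{u}))$ denotes the KLD from the multivariate joint distribution with expectation parameter $\bar{\mathbf{t}}$ to the distribution with expectation parameter $\boldsymbol{\eta}(\mathbf{u})$.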
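The dual potentials in (13)–(15) also give the KLD appearing in (17) a closed form. This is a standard result of information geometry (Amari's canonical divergence). For two members of the same exponential family, the KLD from the distribution with expectation parameter $\eta_1$ to the one with natural parameter $\theta_2$ equals $\psi(\theta_2) + \varphi(\eta_1) - \theta_2 \eta_1$. The sketch below checks this, together with (13) and (15), for the Poisson family, for which $\psi(\theta) = e^{\theta}$ and $\varphi(\eta) = \eta \log \eta - \eta$. The family and the two rates are illustrative assumptions.

```python
import math

def psi(theta):
    """Potential (log-partition) function of the Poisson family in natural coordinate theta."""
    return math.exp(theta)

def phi(eta):
    """Legendre dual (14) of psi: max_theta {theta*eta - psi(theta)} = eta*log(eta) - eta."""
    return eta * math.log(eta) - eta

def kl_direct(lam1, lam2, kmax=200):
    """D(Poi(lam1) || Poi(lam2)) by summing over k = 0..kmax (the tail is negligible here)."""
    total = 0.0
    for k in range(kmax + 1):
        log_p1 = k * math.log(lam1) - lam1 - math.lgamma(k + 1)
        log_p2 = k * math.log(lam2) - lam2 - math.lgamma(k + 1)
        total += math.exp(log_p1) * (log_p1 - log_p2)
    return total

lam1, lam2 = 3.0, 5.0                   # hypothetical Poisson rates
theta1, eta1 = math.log(lam1), lam1     # dual coordinates of Poi(lam1); eta = e^theta by (15)
theta2 = math.log(lam2)

legendre_gap = psi(theta1) + phi(eta1) - theta1 * eta1   # (13): zero at a dual pair
canonical = psi(theta2) + phi(eta1) - theta2 * eta1      # canonical divergence
print(legendre_gap, canonical, kl_direct(lam1, lam2))
```

The factor $1/k!$ in the Poisson probability mass function cancels inside the KLD, which is why $\varphi$ is taken as the Legendre conjugate in (14) rather than as an expected log-likelihood.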

Based on the above analysis, two important spaces are related to a curved exponential family. One is the natural parameter space, denoted by $\Theta$, which is the enveloping space containing all the distributions of the exponential family. The other is the expectation parameter space, denoted by $H$, which is the dual space of $\Theta$. The two spaces are dual to each other and are flat under the e-connection and the m-connection, respectively. The curved exponential family (9) is regarded as a submanifold embedded in both spaces, and the data can also be immersed in these spaces in the form of the sufficient statistics $\bar{\mathbf{t}}$. Consequently, the estimators associated with curved exponential families, such as the MLE given by (16), can be performed geometrically in the two spaces.

4.2. Geometric Demonstration of the Generalized Likelihood Ratio Test

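As mentioned earlier, the GLRT is one of the most widely used approaches to composite hypothesis testing problems with unknown parameters in the PDFs. The data $\mathbf{x}$ have the PDF $p(\mathbf{x}; \mathbf{u}_0, H_0)$ under hypothesis $H_0$ and $p(\mathbf{x}; \mathbf{u}_1, H_1)$ under hypothesis $H_1$, where $\mathbf{u}_0$ and $\mathbf{u}_1$ are the unknown parameters under each hypothesis. The GLRT replaces the unknown parameters by their maximum likelihood estimates (MLEs) and then implements a likelihood ratio test. It decides $H_1$ if

$$L_G(\mathbf{x}) = \frac{p(\mathbf{x}; \hat{\mathbf{u}}_1, H_1)}{p(\mathbf{x}; \hat{\mathbf{u}}_0, H_0)} > \gamma, \tag{18}$$

where $\hat{\mathbf{u}}_i$ is the MLE of $\mathbf{u}_i$ (obtained by maximizing $p(\mathbf{x}; \mathbf{u}_i, H_i)$), $i = 0, 1$, and $\gamma$ is a threshold.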
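For a concrete instance of (18), the sketch below uses the classic textbook problem of detecting a DC level of unknown amplitude $A$ in white Gaussian noise of known variance $\sigma^2$. Under $H_0$ there are no unknown parameters, and under $H_1$ the MLE of $A$ is the sample mean. The code evaluates the log-GLR directly from the two maximized log-likelihoods and compares it with the closed form $N\hat{A}^2/(2\sigma^2)$. The noise level, amplitude, sample size, seed, and threshold are assumptions chosen for illustration.

```python
import math
import random

def gauss_loglik(xs, mean, sigma):
    """Log-likelihood of i.i.d. N(mean, sigma^2) observations."""
    return sum(-0.5 * math.log(2 * math.pi * sigma**2) - (x - mean) ** 2 / (2 * sigma**2) for x in xs)

rng = random.Random(1)
sigma, amp, n = 1.0, 0.5, 50                       # assumed noise level, true amplitude, sample size
xs = [amp + rng.gauss(0.0, sigma) for _ in range(n)]

a_hat = sum(xs) / n                                 # MLE of the unknown amplitude under H1
log_glr = gauss_loglik(xs, a_hat, sigma) - gauss_loglik(xs, 0.0, sigma)  # log of (18)
closed_form = n * a_hat**2 / (2 * sigma**2)         # known closed form of the same statistic

gamma = 5.0                                         # hypothetical threshold on the GLR itself
print(round(log_glr, 6), round(closed_form, 6), "H1" if log_glr > math.log(gamma) else "H0")
```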

From the perspective of information geometry, the probability distribution $p(\mathbf{x}; \mathbf{u})$ is an element of the parameterized family of PDFs $S = \{p(\mathbf{x}; \mathbf{u}) : \mathbf{u} \in U\}$, where $U$ is the parameter set. A curved exponential family $S$ can be regarded as a submanifold embedded in the natural parameter space $\Theta$, which includes all the distributions of the exponential family. It is represented by a curve (or surface) embedded in this enveloping space through the nonlinear mapping $\mathbf{u} \mapsto \boldsymbol{\theta}(\mathbf{u})$. The expectation parameter space $H$ of $S$ is the dual flat space of $\Theta$, and the “realizations” $\bar{\mathbf{t}}$ of the sufficient statistics can be immersed in $H$. Consequently, the MLE is performed in $H$ by mapping the data point $\bar{\mathbf{t}}$ onto the submanifold specified by $\boldsymbol{\eta}(\mathbf{u})$ via the m-projection.

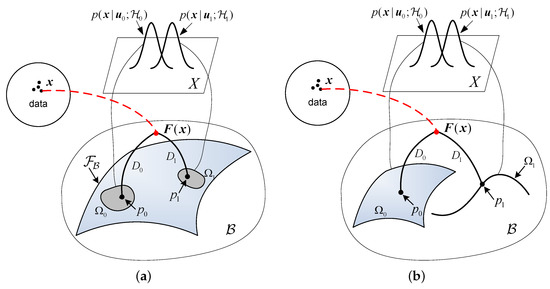

The parameters $\mathbf{u}_0$ and $\mathbf{u}_1$, as well as their dimensionalities, may or may not be the same under the null and alternative hypotheses. Two pictures of the GLRT are therefore presented: one for the case with the same unknown parameters under each hypothesis, and one for the case with different parameters or different dimensionalities. The picture for the first case is illustrated in Figure 2a. In this case, the distributions under the two hypotheses share the same form and the same unknown parameter $\mathbf{u}$, but $\mathbf{u}$ takes values in different sets, $U_0$ and $U_1$, under the two hypotheses. The family $p(\mathbf{x}; \mathbf{u})$ can be smoothly embedded as a surface specified by $\boldsymbol{\eta}(\mathbf{u})$ in the space $H$. The hypotheses with unknown $\mathbf{u}$ define two “uncertainty volumes”, $V_0$ and $V_1$, on the submanifold $\boldsymbol{\eta}(\mathbf{u})$. These volumes are the collections of probability distributions specified by the value sets of $\mathbf{u}$. The measurements are immersed in $H$ in the form of the sufficient statistics $\bar{\mathbf{t}}$. Consequently, the MLEs can be found by “mapping” $\bar{\mathbf{t}}$ onto the uncertainty volumes $V_0$ and $V_1$ on $\boldsymbol{\eta}(\mathbf{u})$. The points $\boldsymbol{\eta}(\hat{\mathbf{u}}_0)$ and $\boldsymbol{\eta}(\hat{\mathbf{u}}_1)$ in Figure 2 are the corresponding projections, i.e., the MLEs of the unknown parameter under the two hypotheses. As indicated in (17), the MLEs can also be obtained by finding the points on $V_0$ and $V_1$ that are closest to the data point $\bar{\mathbf{t}}$ in the sense of the KLD, i.e.,

$$\hat{\mathbf{u}}_i = \arg\min_{\mathbf{u} \in U_i} D\big(\bar{\mathbf{t}} \,\|\, \boldsymbol{\eta}(\mathbf{u})\big), \quad i = 0, 1, \tag{19}$$

and the corresponding minimum KLDs are

$$D_i = \min_{\mathbf{u} \in U_i} D\big(\bar{\mathbf{t}} \,\|\, \boldsymbol{\eta}(\mathbf{u})\big) = D\big(\bar{\mathbf{t}} \,\|\, \boldsymbol{\eta}(\hat{\mathbf{u}}_i)\big), \quad i = 0, 1. \tag{20}$$

Figure 2.

Geometry of the GLRT. (a) The case for the same unknown parameters under two hypotheses. (b) The case for different unknown parameters and different dimensionalities under two hypotheses.

It should be emphasized that the above “mapping” is a general concept. When the parameters to be estimated are not restricted to a given value set, the MLE is simply obtained by maximizing the likelihood, and the projections fall onto the submanifold. If the parameters are restricted to a given value set, however, the likelihood must be maximized over that restricted parameter set. When the unrestricted projections fall outside the “uncertainty volumes”, the MLEs are the points of the volumes closest to the data point, as described by (19).
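The restricted case can be sketched with the simplest flat model. The sketch below assumes the model $N(u, \sigma^2)$ with known variance and a value set given by an interval $[\text{lo}, \text{hi}]$ (both hypothetical). It finds the point of that interval closest to the data point in KLD, as in (19), using a plain grid search. When the sample mean lies outside the interval, the answer is the nearest endpoint; when it lies inside, the answer is the unrestricted MLE.

```python
import math

def kl_gauss(m1, v1, m2, v2):
    """D(N(m1, v1) || N(m2, v2)) for univariate Gaussians with variances v1, v2."""
    return 0.5 * math.log(v2 / v1) + (v1 + (m1 - m2) ** 2) / (2 * v2) - 0.5

def restricted_mle(xs, sigma2, lo, hi, steps=10_000):
    """Closest point of the value set [lo, hi] to the data point in KLD, via a grid search."""
    n = len(xs)
    m = sum(xs) / n                              # first sample moment
    v = sum(x * x for x in xs) / n - m * m       # empirical variance from the second moment
    grid = [lo + (hi - lo) * i / steps for i in range(steps + 1)]
    return min(grid, key=lambda u: kl_gauss(m, v, u, sigma2))

xs = [2.3, 1.7, 2.9, 2.1, 2.6]   # hypothetical data; sample mean 2.32
sigma2 = 1.0                      # assumed known variance of the model N(u, sigma2)
print(restricted_mle(xs, sigma2, 0.0, 1.5))   # projection falls outside: boundary point
print(restricted_mle(xs, sigma2, 2.0, 3.0))   # projection falls inside: sample mean, to grid resolution
```

Because this KLD is convex in $u$, clipping the unrestricted MLE to the interval would give the same result. The grid search is used only to mirror the “closest point” reading of (19).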

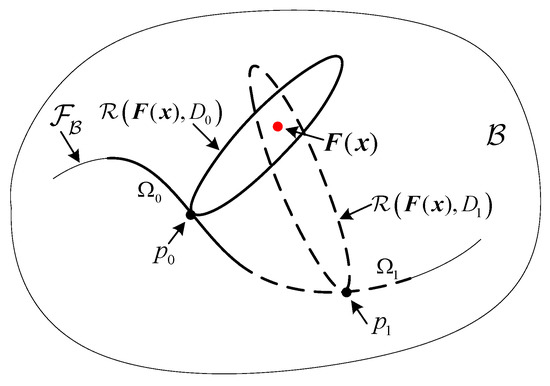

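Let $\Sigma(\bar{\mathbf{t}}, r)$ be a divergence sphere centered at $\bar{\mathbf{t}}$ with radius $r$. That is, it is the submanifold of the enveloping space $H$ consisting of the points $\boldsymbol{\eta}$ for which the KLD $D(\bar{\mathbf{t}} \,\|\, \boldsymbol{\eta})$ equals $r$:

$$\Sigma(\bar{\mathbf{t}}, r) = \left\{\boldsymbol{\eta} \in H : D(\bar{\mathbf{t}} \,\|\, \boldsymbol{\eta}) = r\right\}. \tag{21}$$

The closest point in (19) may then be found more easily as the point $\boldsymbol{\eta}(\hat{\mathbf{u}}_i)$ where the divergence sphere with center $\bar{\mathbf{t}}$ and radius $D_i$ is tangent to the uncertainty volume $V_i$, as illustrated in Figure 3. Consequently, according to (2), the GLRT can be performed geometrically by comparing the difference between the minimum KLDs $D_0$ and $D_1$ with a threshold $\gamma$, i.e.,

$$D_0 - D_1 \;\mathop{\gtrless}_{H_0}^{H_1}\; \gamma. \tag{22}$$

For hypotheses sharing the same enveloping exponential family, the normalized log-GLR in (18) equals $D_0 - D_1$ exactly. This is because $D(\bar{\mathbf{t}} \,\|\, \boldsymbol{\eta}(\mathbf{u}))$ differs from $-\frac{1}{N}\log p(\mathbf{x}; \mathbf{u})$ only by terms that do not depend on $\mathbf{u}$.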

Figure 3.

Illustration of the mapping via divergence spheres.

In practice, the Neyman–Pearson criterion is commonly employed to determine the threshold in (22), so that the detector maximizes the probability of detection $P_D$ for a given probability of false alarm $P_{FA}$. As a commonly used performance index, the miss probability $P_M = 1 - P_D$ usually decays exponentially as the sample size $N$ increases. The rate of this exponential decay can be represented by the error exponent [22]

$$K = \lim_{N \to \infty} -\frac{1}{N} \log P_M. \tag{23}$$

By Stein’s lemma, under a constant false-alarm constraint, the best error exponent equals the KLD from $p_0$ to $p_1$ [23], i.e.,

$$\lim_{N \to \infty} -\frac{1}{N} \log P_M = D(p_0 \,\|\, p_1), \tag{24}$$

and

$$P_M \doteq e^{-N D(p_0 \,\|\, p_1)}, \tag{25}$$

where ≐ denotes the first-order equivalence in the exponent. For example,

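In this sense, the KLD from $p_0$ to $p_1$ plays the role of the signal-to-noise ratio (SNR) of the underlying detection problem. For example, for two Gaussian distributions with common variance $\sigma^2$ whose means differ by $A$, $D(p_0 \,\|\, p_1) = A^2 / (2\sigma^2)$, i.e., half the per-sample SNR. Information geometry therefore offers an insightful geometric explanation of the detection performance of a Neyman–Pearson detector.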
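Stein's lemma (24) can be illustrated with exact binomial computations. The sketch below assumes Bernoulli observations with $p_0 = 0.2$ under $H_0$ and $p_1 = 0.5$ under $H_1$ (illustrative values). It applies the non-randomized Neyman–Pearson test with $P_{FA} \le 0.05$: the count of ones is a sufficient statistic, and the likelihood ratio increases with it. It then reports $-\frac{1}{N}\log P_M$. The exponent approaches $D(p_0 \,\|\, p_1) \approx 0.193$ only slowly, with a gap of order $1/\sqrt{N}$. This is why (24) is an asymptotic statement.

```python
import math

def log_binom_pmf(k, n, p):
    """log P(K = k) for K ~ Binomial(n, p)."""
    return (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
            + k * math.log(p) + (n - k) * math.log(1.0 - p))

def log_sum_exp(values):
    """Numerically stable log(sum(exp(v) for v in values))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def kl_bernoulli(p, q):
    """D(Bernoulli(p) || Bernoulli(q))."""
    return p * math.log(p / q) + (1.0 - p) * math.log((1.0 - p) / (1.0 - q))

def miss_exponent(n, p0, p1, alpha):
    """-(1/n) log P_M of the non-randomized NP test that decides H1 when the count k >= c.

    Assumes p1 > p0, so the likelihood ratio increases with k.
    """
    c = n + 1        # start with "never decide H1"
    tail = 0.0       # running false-alarm probability P0(K >= k)
    for k in range(n, -1, -1):
        tail += math.exp(log_binom_pmf(k, n, p0))
        if tail > alpha:
            break
        c = k        # smallest threshold found so far with P_FA <= alpha
    log_pm = log_sum_exp([log_binom_pmf(k, n, p1) for k in range(c)])  # P1(K <= c - 1)
    return -log_pm / n

p0, p1, alpha = 0.2, 0.5, 0.05   # hypothetical Bernoulli hypotheses and false-alarm level
print("D(p0||p1) =", round(kl_bernoulli(p0, p1), 4))
for n in (250, 1000, 4000):
    print(n, round(miss_exponent(n, p0, p1, alpha), 4))
```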

In the second case, the unknown parameters $\mathbf{u}_0$ and $\mathbf{u}_1$ have different dimensionalities. The enveloping space, however, is common to both hypotheses because the measurements $\mathbf{x}$ are the same. Because the unknown parameters differ in dimensionality, the hypotheses may correspond to two separate submanifolds, $S_0$ and $S_1$, embedded in $H$. As illustrated in Figure 2b, a surface and a curve are used to denote the submanifolds $S_0$ and $S_1$ of the two hypotheses, respectively. As in the first case, the GLRT with different unknown parameters can then be interpreted geometrically. The data point is projected onto each submanifold, and the resulting minimum KLDs are compared as in (22).

4.3. A Demonstration of One-Dimensional Curved Gaussian Distribution

Consider the following detection problem:

The measurement originates from a curved Gaussian distribution

where a is a positive constant and u is an unknown parameter.

The probability density function of the measurement is

By reparameterization, the probability density function can be represented in the general form of a curved exponential family as

where and the potential function is

The above distributions with local parameter u correspond to a one-dimensional curved exponential family embedded in the natural parameter space $\Theta$. The natural coordinates are

which defines a parabola (denoted by )

in $\Theta$. The underlying distribution (28) can also be represented in the expectation parameter space $H$ with expectation coordinates

which also defines a parabola (denoted by )

in $H$.

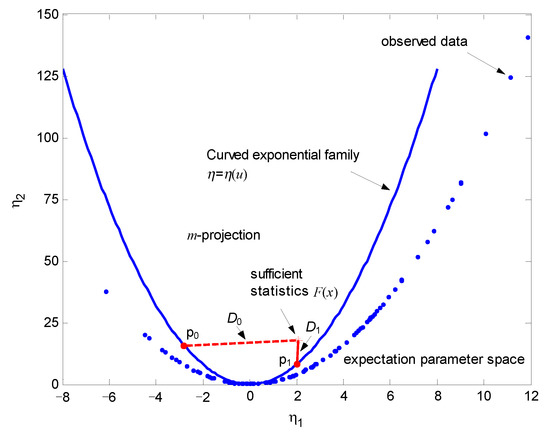

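The sufficient statistics obtained from the samples $\mathbf{x} = \{x_1, \dots, x_N\}$ can be represented by

$$\bar{\mathbf{t}} = \left(\bar{t}_1, \bar{t}_2\right) = \left(\frac{1}{N} \sum_{n=1}^{N} x_n,\; \frac{1}{N} \sum_{n=1}^{N} x_n^2\right). \tag{36}$$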

Figure 4 shows the expectation parameter space and illustrates the geometric interpretation of the underlying GLRT. In the figure, the blue parabola denotes the embedding of the curved Gaussian distribution with parameter u. The submanifolds associated with the two hypotheses can be represented geometrically by the blue parabolas (specified by and , respectively). Without loss of generality, assume that . The blue dots represent the observations (measurements) in the expectation parameter space, with coordinates $(x_n, x_n^2)$. The sample means of these coordinates give the sufficient statistics, denoted by a red asterisk. The MLEs of the parameter u under the two hypotheses are obtained by finding the points on the two submanifolds that are closest to this data point in the sense of the KLD. According to (22), the GLRT can then be performed geometrically by comparing the difference between the minimum KLDs $D_0$ and $D_1$ with a threshold $\gamma$.

Figure 4.

The geometric interpretation of the GLRT for one-dimensional curved Gaussian distribution.
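The geometric GLRT of this example can be sketched numerically. The sketch below assumes the curved Gaussian takes the form $N(u, a^2u^2)$ with $u > 0$, the classic known-coefficient-of-variation family. Under this form, the natural coordinates $(1/(a^2u),\, -1/(2a^2u^2))$ and the expectation coordinates $(u,\, (1+a^2)u^2)$ both trace parabolas. It also assumes, hypothetically, that the two hypotheses correspond to two values $a_0$ and $a_1$ of the constant. For each hypothesis, the code computes the closed-form MLE of $u$ (the positive root of $a^2u^2 + \bar{t}_1 u - \bar{t}_2 = 0$) and the minimum KLD from the data point to that parabola. It then checks that the difference of minimum KLDs equals the normalized log-GLR of (18), as (22) states.

```python
import math
import random

# Assumed model: N(u, a^2 u^2) with u > 0; a0 and a1 below are hypothetical hypothesis values.

def mle_u(t1, t2, a):
    """Positive root of a^2 u^2 + t1 u - t2 = 0, the likelihood equation for u > 0."""
    return (-t1 + math.sqrt(t1 * t1 + 4.0 * a * a * t2)) / (2.0 * a * a)

def avg_loglik(t1, t2, u, a):
    """(1/N) log-likelihood of N(u, a^2 u^2), expressed through the sample moments (t1, t2)."""
    var = a * a * u * u
    return -0.5 * math.log(2.0 * math.pi * var) - (t2 - 2.0 * u * t1 + u * u) / (2.0 * var)

def kld_to_model(t1, t2, u, a):
    """KLD from the Gaussian with moments (t1, t2) (the data point) to N(u, a^2 u^2)."""
    v, var = t2 - t1 * t1, a * a * u * u
    return 0.5 * math.log(var / v) + (v + (t1 - u) ** 2) / (2.0 * var) - 0.5

rng = random.Random(7)
a0, a1, u_true, n = 0.5, 1.0, 2.0, 200   # hypothetical: H0 is a = a0, H1 is a = a1; data drawn under H0
xs = [rng.gauss(u_true, a0 * u_true) for _ in range(n)]
t1 = sum(xs) / n                        # sufficient statistics: first and second sample moments
t2 = sum(x * x for x in xs) / n

u0, u1 = mle_u(t1, t2, a0), mle_u(t1, t2, a1)                        # MLEs (projection points)
d0, d1 = kld_to_model(t1, t2, u0, a0), kld_to_model(t1, t2, u1, a1)  # minimum KLDs
glr = avg_loglik(t1, t2, u1, a1) - avg_loglik(t1, t2, u0, a0)        # (1/N) log of the GLR in (18)
print(round(u0, 4), round(u1, 4), round(d0 - d1, 9), round(glr, 9))
```

Here the data come from $H_0$, so $D_0 < D_1$ and the statistic is negative. The data point lies much closer to the parabola of $H_0$ than to that of $H_1$.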

4.4. Discussions

The geometric formulation of the GLRT presented above provides additional insights into the GLRT. To the best of our knowledge, there is no general analytical result associated with the performance of the GLRT in the literature [1]. The asymptotic analysis is only valid under the conditions that (1) the data sample size is large; and (2) the MLE asymptotically attains the Cramér-Rao lower bound (CRLB) of the underlying estimation problems.

It is known that, for an exponential family, the MLE is a sufficient statistic and achieves the CRLB when a suitable function of the parameters, namely the expectation parameter, is estimated [8]. For curved exponential families, however, the MLE is not, in general, an efficient estimator, which means that its variance may not achieve the CRLB with a finite number of samples. Hence, when only a finite number of samples is available and the underlying statistical model is a curved exponential family, the performance of both the MLE and the GLRT deteriorates. If the statistical model is nonlinear, the estimation process incurs an inherent information loss compared with the Fisher information. Roughly speaking, if the embedded submanifold in Figure 2a or the submanifolds in Figure 2b are curved, the MLEs will not achieve the CRLB because of the inherent information loss caused by the non-flatness of the statistical model. The information loss can be quantified using the e-curvature of the statistical model [9].

Consequently, if the statistical model associated with a GLRT is not flat, i.e., the submanifolds shown in Figure 2 are curved, the performance of the GLRT deteriorates when a finite number of samples is used. As the sample size $N$ increases, the sufficient statistics match the statistical model better and move closer to the submanifolds (see Figure 2). The divergence from the data point to the submanifold associated with the true hypothesis therefore becomes smaller. Asymptotically, as $N \to \infty$, the sufficient statistics fall onto the submanifold associated with the true hypothesis $H_i$, and the corresponding divergence $D_i$ reduces to zero. If the true distribution does not also lie on the submanifold of the other hypothesis, the other divergence remains bounded away from zero, and the GLRT then achieves perfect performance.
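The asymptotic behavior described above can be illustrated with a small simulation. The sketch assumes a curved Gaussian $N(u, a^2u^2)$ with $u > 0$, data generated with $a = 0.5$, and a competing hypothesis $a = 1.0$ (all hypothetical choices). It tracks the minimum KLD from the data point to the true and the false submanifolds as $N$ grows. The first divergence shrinks roughly like $1/N$, while the second settles near a positive constant.

```python
import math
import random

def min_kld(t1, t2, a):
    """Minimum KLD from the data point (t1, t2) to the parabola of N(u, a^2 u^2), u > 0."""
    u = (-t1 + math.sqrt(t1 * t1 + 4.0 * a * a * t2)) / (2.0 * a * a)   # closed-form MLE of u
    v, var = t2 - t1 * t1, a * a * u * u
    return 0.5 * math.log(var / v) + (v + (t1 - u) ** 2) / (2.0 * var) - 0.5

rng = random.Random(3)
a_true, a_false, u_true = 0.5, 1.0, 2.0    # hypothetical: data from a = 0.5; competing model a = 1.0
results = {}
for n in (100, 1_000, 10_000, 100_000):
    xs = [rng.gauss(u_true, a_true * u_true) for _ in range(n)]
    t1 = sum(xs) / n
    t2 = sum(x * x for x in xs) / n
    results[n] = (min_kld(t1, t2, a_true), min_kld(t1, t2, a_false))
    print(n, f"{results[n][0]:.2e}", f"{results[n][1]:.3f}")
```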

5. Conclusions

In this paper, the generalized likelihood ratio test has been addressed from a geometric viewpoint. Two pictures of the GLRT are presented within the framework of information geometry. Both the detection and the estimation associated with a GLRT are regarded as geometric operations on the manifolds of parameterized families of probability distributions. As demonstrated in this work, the geometric interpretation of the GLRT provides additional insights into its analysis.

More constructive analyses could potentially be developed based on the information geometry of the GLRT. For example, the error exponent defined by (24) and (25) provides a useful performance index for the detection process associated with the GLRT. Suppose $p_0$ and $p_1$ in (24) are evaluated at the MLEs of the unknown parameters under each hypothesis rather than at their true values; the estimation process may then degrade performance. How to incorporate such an “estimation loss” into the error exponent remains an open issue. Another open issue is the GLRT with PDFs of different forms under each hypothesis, which leads to a different distribution embedding for each hypothesis.

Author Contributions

Y.C. proposed the original ideas and performed the research. H.W. conceived and designed the demonstration with the one-dimensional curved Gaussian distribution. X.L. reviewed the paper and provided useful comments. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China under grant No. 61871472.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Kay, S.M. Fundamentals of Statistical Signal Processing (Volume II): Detection Theory; Prentice Hall PTR: Hoboken, NJ, USA, 1993.

2. Liu, W.; Liu, J.; Hao, C.; Gao, Y.; Wang, Y. Multichannel adaptive signal detection: Basic theory and literature review. Sci. China Inf. Sci. 2022, 65, 5–44.

3. Levitan, E.; Merhav, N. A competitive Neyman-Pearson approach to universal hypothesis testing with applications. IEEE Trans. Inform. Theory 2002, 48, 2215–2229.

4. Amari, S. Information geometry on hierarchy of probability distributions. IEEE Trans. Inform. Theory 2001, 47, 1701–1711.

5. Amari, S.; Nagaoka, H. Methods of Information Geometry (Translations of Mathematical Monographs); American Mathematical Society and Oxford University Press: New York, NY, USA, 2000.

6. Rao, C.R. Information and accuracy attainable in the estimation of statistical parameters. Bull. Calcutta Math. Soc. 1945, 37, 81–91.

7. Chentsov, N.N. Statistical Decision Rules and Optimal Inference; English Translation; American Mathematical Society: Providence, RI, USA, 1982.

8. Efron, B. Defining the curvature of a statistical problem (with applications to second order efficiency). Ann. Stat. 1975, 3, 1189–1242.

9. Amari, S. Differential geometry of curved exponential families-curvatures and information loss. Ann. Stat. 1982, 10, 357–385.

10. Kass, R.E.; Vos, P.W. Geometrical Foundations of Asymptotic Inference; John Wiley & Sons Inc: Hoboken, NJ, USA, 1997.

11. Garderen, K.J.V. An alternative comparison of classical tests: Assessing the effects of curvature. In Applications of Differential Geometry to Econometrics; Cambridge University Press: Cambridge, UK, 2000; pp. 230–280.

12. Dabak, A.G. A Geometry for Detection Theory. Ph.D. Thesis, Rice University, Houston, TX, USA, 1993.

13. Westover, M.B. Asymptotic geometry of multiple hypothesis testing. IEEE Trans. Inform. Theory 2008, 54, 3327–3329.

14. Hoeffding, W. Asymptotically optimal tests for multinomial distributions. Ann. Math. Statist. 1965, 36, 369–408.

15. Barbaresco, F. Innovative tools for radar signal processing based on Cartan’s geometry of SPD matrices & information geometry. In Proceedings of the 2008 IEEE Radar Conference, Rome, Italy, 26–30 May 2008.

16. Barbaresco, F. New foundation of radar Doppler signal processing based on advanced differential geometry of symmetric spaces: Doppler matrix CFAR and radar application. In Proceedings of the International Radar Conference, Bordeaux, France, 12–16 October 2009.

17. Barbaresco, F. Robust statistical radar processing in Fréchet metric space: OS-HDR-CFAR and OS-STAP processing in Siegel homogeneous bounded domains. In Proceedings of the International Radar Symposium, Leipzig, Germany, 7–9 September 2011.

18. Kullback, S. Information Theory and Statistics; Dover Publications: New York, NY, USA, 1968.

19. Cover, T.M.; Thomas, J.A. Elements of Information Theory; Wiley: New York, NY, USA, 1991.

20. Etemadi, N. An elementary proof of the strong law of large numbers. Probab. Theory Relat. Fields 1981, 55, 119–122.

21. Garcia, V.; Nielsen, F. Simplification and hierarchical representations of mixtures of exponential families. Signal Process. 2010, 90, 3197–3212.

22. Sung, Y.; Tong, L.; Poor, H.V. Neyman-Pearson detection of Gauss-Markov signals in noise: Closed-form error exponent and properties. IEEE Trans. Inform. Theory 2006, 52, 1354–1365.

23. Chamberland, J.; Veeravalli, V.V. Decentralized detection in sensor networks. IEEE Trans. Signal Process. 2003, 51, 407–416.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).