Communication Efficient Algorithms for Bounding and Approximating the Empirical Entropy in Distributed Systems

Abstract

1. Introduction

- Often, a sudden change in the entropy indicates a phase change in the underlying system, for example a DDoS (Distributed Denial of Service) attack [5]. To this end, it typically suffices to bound the entropy, since its precise value is not required.

- A good measure of similarity between two datasets is the difference between their aggregated and individual entropies. For example, if two collections of text are of a similar nature, their aggregate entropy will be similar to the individual ones, and if they are different, the aggregated entropy will be substantially larger. Here, too, it suffices to approximate the global entropy, or bound it from above or below in order to reach a decision.

2. Previous Work

- Distributed computing nodes denoted by , with holding a “local” data vector . The nodes can communicate, either directly or via a “coordinator” node.

- A scalar-valued function , defined on pairs of real-valued vectors.

- PCA (Principle Component Analysis) sketch: given a large subset , one wishes to quickly estimate the distance of vectors from an underlying structure which S is sampled from (a famous example is images of a certain type [8]). To this end, S is represented by a smaller set, consisting of the dominant eigenvectors of S’s scatter matrix, and the distance is estimated by the distance of the vector from the subspace spanned by these vectors.

- In the analysis of streaming data, some important sketches were developed, in order to handle large and dynamic streams, by only preserving salient properties (such as the number of distinct items, frequency, and the norm). It is beyond the scope of this paper to describe these sketches, so we refer to the literature [9].

- is much smaller than X.

- Knowledge of allows one to approximate the empirical Shannon entropy of .

3. Dynamic Bounds and Communication Reduction

3.1. Problem, Motivation, and an Example

- Given nodes , each holding a probability vector (i.e., all values are positive and sum to 1), approximate the entropy of the average of , while maintaining low communication overhead.

- Determining whether the inequality holds for some user-defined constant L.

- Determining whether the inequality holds for some user-defined constant U.

- Let ;

- Let .

- 1.

- :Since , the intervals and are disjoint. By applying the Lagrange Mean Value Theorem, for some and :Since is decreasing and , we immediately obtain that . It follows that:

- 2.

- :Observing the disjoint intervals , . The sought inequality, following the Lagrange Mean Value Theorem for , as in the case above, is:

- 1.

- If Δ is added to any value of X, the maximal increase of its entropy will occur when Δ is added to the minimal value of X.

- 2.

- If Δ is added to any value of X, the minimal increase of its entropy will occur when Δ is added to the maximal value of X.

3.2. Upper Bound

- ordered set of largest k values of X;

- the coordinates of the values in , or formally .

- The sum of all values not in , i.e., , will be referred to as the mass of the local vector that remains available to be distributed among coordinates. It will be denoted by m in the following algorithms.

- , since contains the largest values of X (where denotes set difference).

- 1.

- . In this case, most (or all) the information of X is broadcast by the message, and the entropy can be computed accurately without need for a bound.

- 2.

- , where . In this case, there is no need to run the proposed algorithm; the constraint maximization will always result is an “optimal” target—the uniform vector with the value , whose entropy we know is maximal w.r.t its sum.

| Algorithm 1: Upper Entropy Bound for Two Nodes |

|

- ;

- .

- : Note that , since their sum is equal, and has had no further additions. In addition, since and is the uniform vector, . It follows that .

- : there exists a subset for which every is greater than the corresponding value of . Let . For every , there exists a value in s.t , since . Let be Z after is subtracted from each and added to some as described above. By Lemma 1, . It also holds that , and that , since they both sum to , and is a uniform vector (whose entropy is maximal). Therefore, .

- : this case can be immediately omitted; it is an impossibility to feasibly subtract a value from or increase the value of above .

| Algorithm 2: Binary Search Upper Entropy Bound for Two Nodes |

|

3.3. Lower Bound

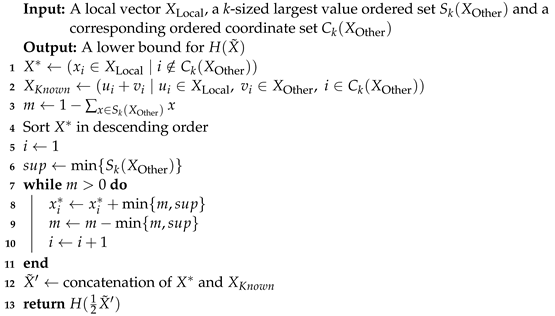

| Algorithm 3: Lower Entropy Bound for Two Nodes |

|

3.4. Multiparty Bounds

- Input: In addition to the local vector , the k-sized largest value sets and corresponding ordered coordinate sets —instead of single sets.

- The sum to be added to all coordinates of , m will be , since there are t local vectors to process and construct, while local vector contributes .

- The return value is now , since we have summed t additional vectors into and .

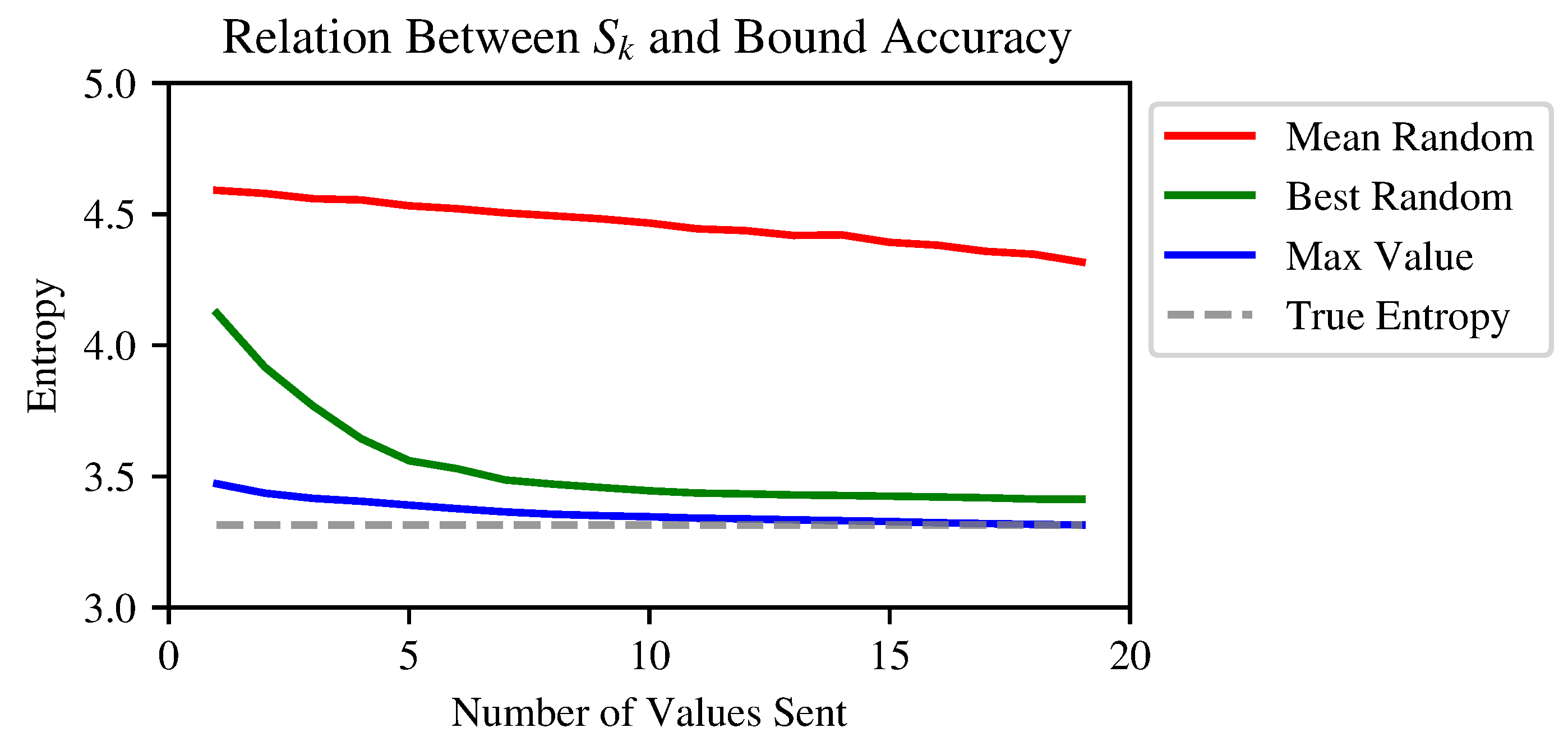

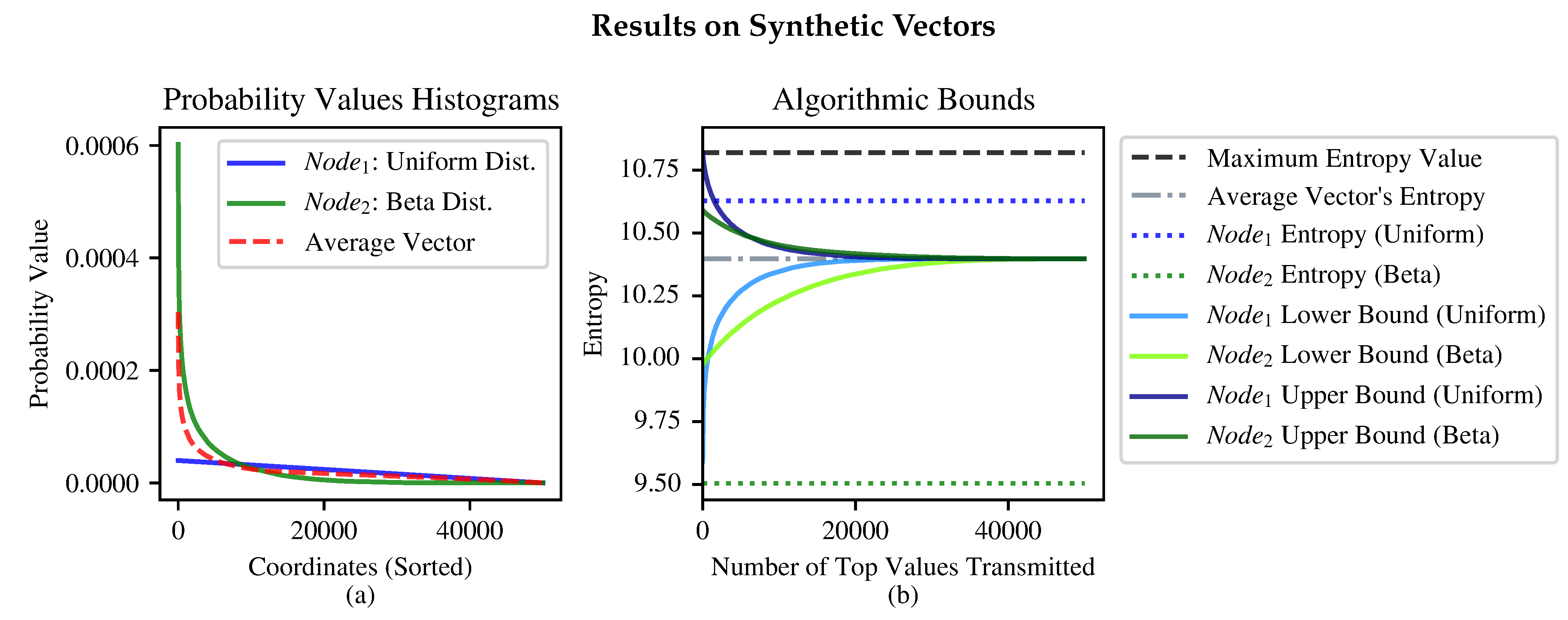

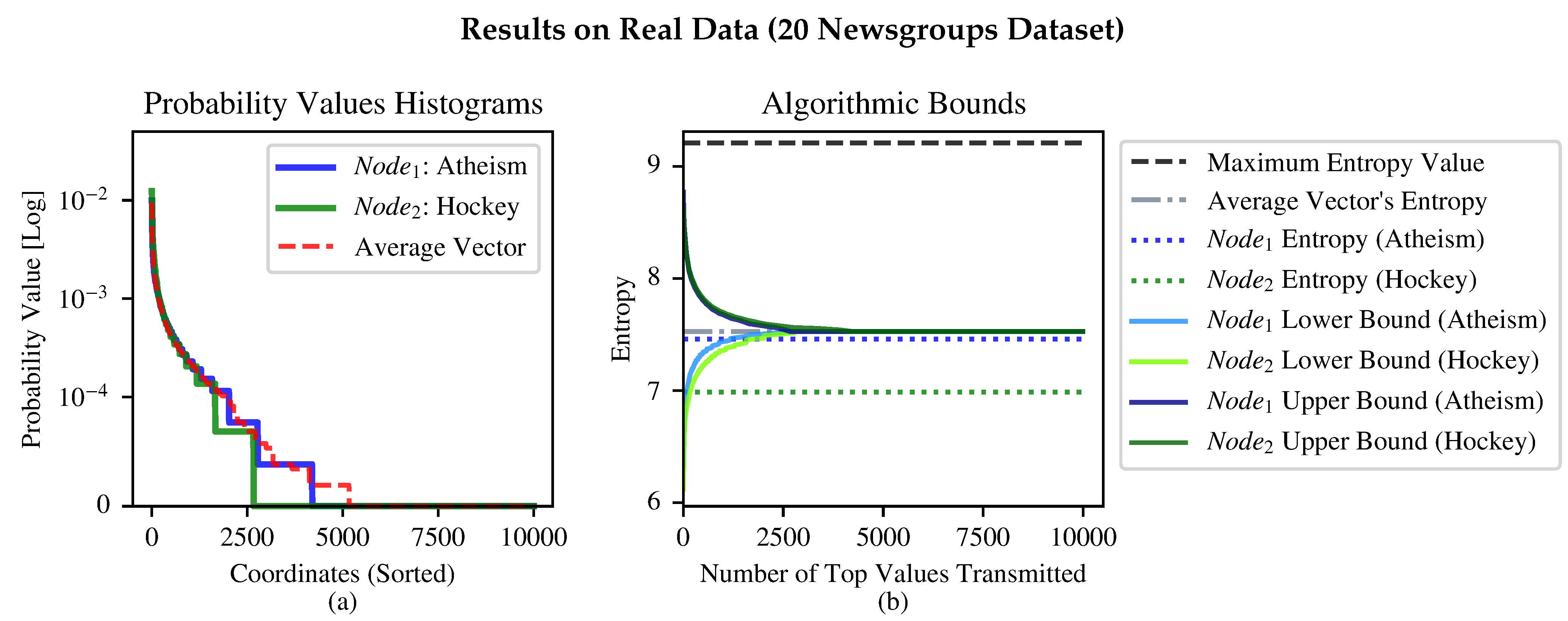

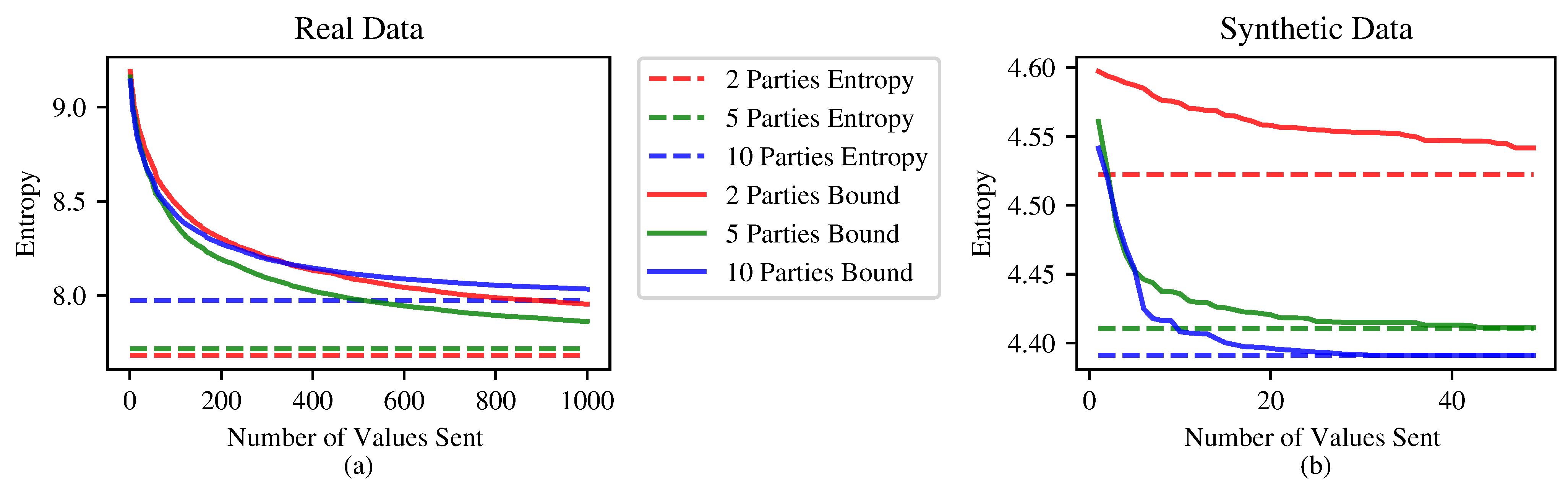

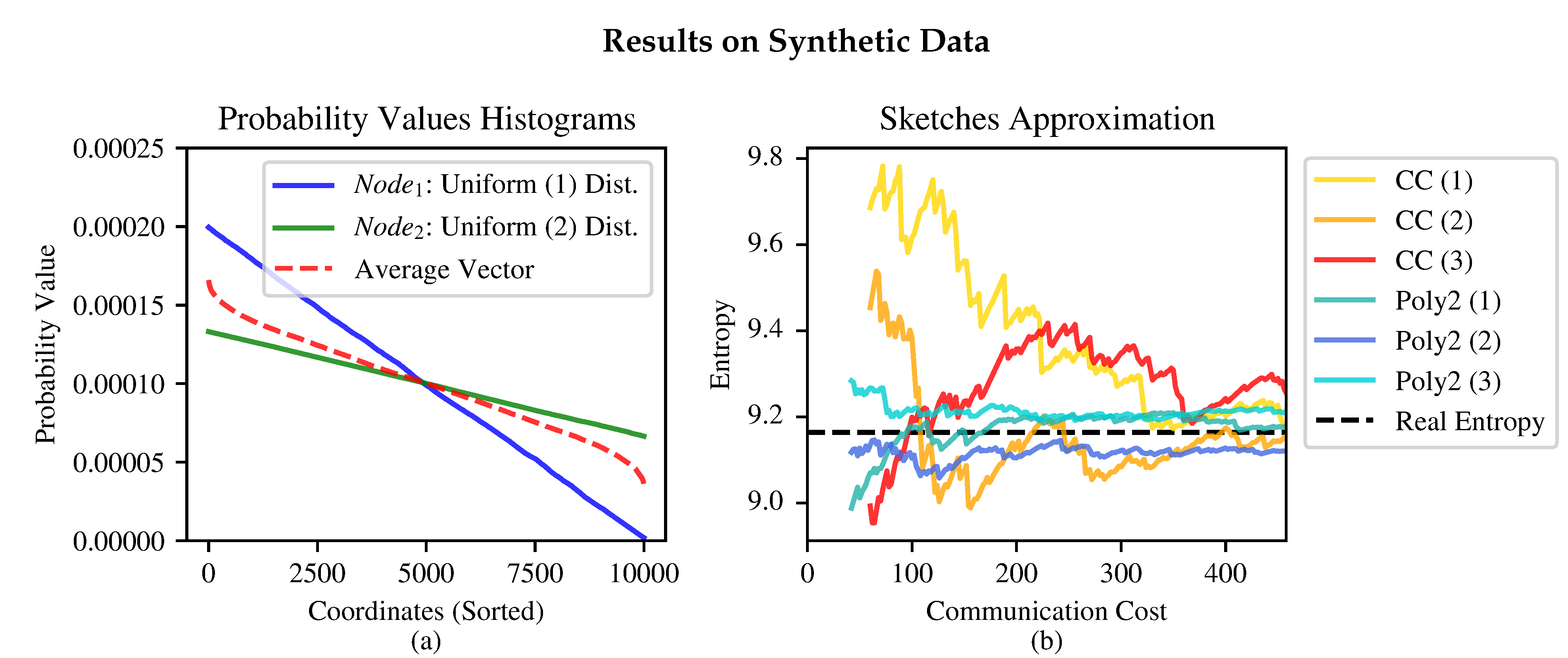

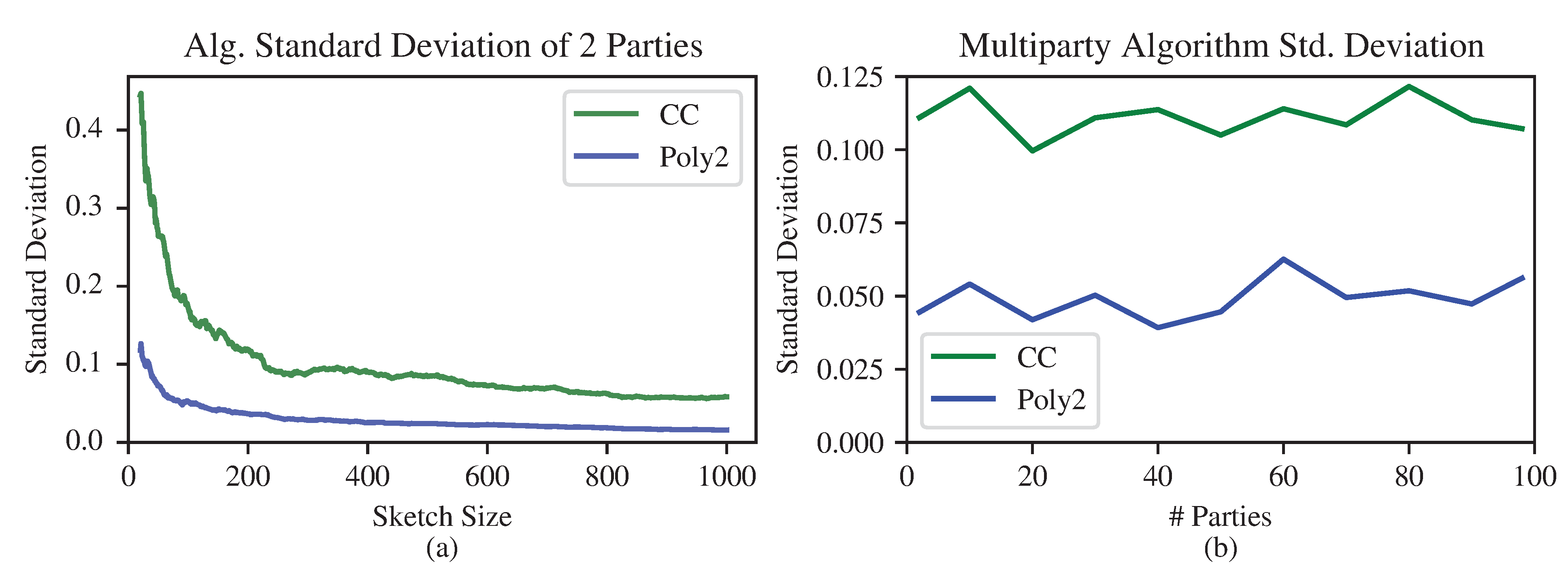

3.5. Experimental Results

4. Entropy Approximation

4.1. The Clifford-Cosma Sketch

4.2. Sketch Evaluation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

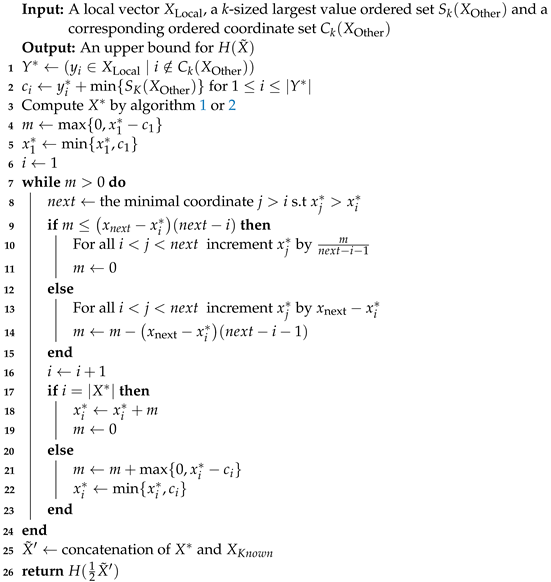

Appendix A. Improving the Upper Bound

| Algorithm A1: Tight Entropy Upper Bound for Two Nodes |

|

References

- Cormode, G. The continuous distributed monitoring model. SIGMOD Rec. 2013, 42, 5–14. [Google Scholar] [CrossRef]

- Censor-Hillel, K.; Dory, M. Distributed Spanner Approximation. SIAM J. Comput. 2021, 50, 1103–1147. [Google Scholar] [CrossRef]

- Li, M.; Andersen, D.G.; Smola, A.J.; Yu, K. Communication Efficient Distributed Machine Learning with the Parameter Server. In Proceedings of the Advances in Neural Information Processing Systems 27: Annual Conference on Neural Information Processing Systems 2014, Montreal, QC, Canada, 8–13 December 2014; pp. 19–27. [Google Scholar]

- Vajapeyam, S. Understanding Shannon’s Entropy metric for Information. arXiv 2014, arXiv:1405.2061. [Google Scholar]

- Li, L.; Zhou, J.; Xiao, N. DDoS Attack Detection Algorithms Based on Entropy Computing. In Proceedings of the Information and Communications Security, 9th International Conference, ICICS 2007, Zhengzhou, China, 12–15 December 2007; Qing, S., Imai, H., Wang, G., Eds.; Lecture Notes in Computer Science. Springer: Berlin, Germany, 2007; Volume 4861, pp. 452–466. [Google Scholar] [CrossRef]

- Yehuda, G.; Keren, D.; Akaria, I. Monitoring Properties of Large, Distributed, Dynamic Graphs. In Proceedings of the 2017 IEEE International Parallel and Distributed Processing Symposium, IPDPS 2017, Orlando, FL, USA, 29 May–2 June 2017; pp. 2–11. [Google Scholar] [CrossRef]

- Alon, N.; Klartag, B. Optimal Compression of Approximate Inner Products and Dimension Reduction. In Proceedings of the 58th IEEE Annual Symposium on Foundations of Computer Science, FOCS 2017, Berkeley, CA, USA, 15–17 October 2017; pp. 639–650. [Google Scholar] [CrossRef]

- Turk, M.A.; Pentland, A. Face recognition using eigenfaces. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 1991, Lahaina, Maui, HI, USA, 3–6 June 1991; pp. 586–591. [Google Scholar] [CrossRef]

- Data-Centric Systems and Applications; Garofalakis, M.N.; Gehrke, J.; Rastogi, R. (Eds.) Data Stream Management—Processing High-Speed Data Streams; Springer: Berlin, Germany, 2016. [Google Scholar] [CrossRef]

- Gabel, M.; Keren, D.; Schuster, A. Anarchists, Unite: Practical Entropy Approximation for Distributed Streams. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; ACM: New York, NY, USA, 2017; pp. 837–846. [Google Scholar] [CrossRef]

- Alfassi, Y.; Gabel, M.; Yehuda, G.; Keren, D. A Distance-Based Scheme for Reducing Bandwidth in Distributed Geometric Monitoring. In Proceedings of the 37th IEEE International Conference on Data Engineering, ICDE 2021, Chania, Greece, 19–22 April 2021; pp. 1164–1175. [Google Scholar] [CrossRef]

- Sharfman, I.; Schuster, A.; Keren, D. A geometric approach to monitoring threshold functions over distributed data streams. ACM Trans. Database Syst. 2007, 32, 23. [Google Scholar] [CrossRef]

- Lang, K. Newsweeder: Learning to filter netnews. In Machine Learning Proceedings 1995; Elsevier: Amsterdam, The Netherlands, 1995; pp. 331–339. [Google Scholar]

- Clifford, P.; Cosma, I. A simple sketching algorithm for entropy estimation over streaming data. In Proceedings of the Sixteenth International Conference on Artificial Intelligence and Statistics, AISTATS 2013, JMLR.org, JMLR Workshop and Conference Proceedings, Scottsdale, AZ, USA, 29 April–1 May 2013; Volume 31, pp. 196–206. [Google Scholar]

- Harvey, N.J.A.; Nelson, J.; Onak, K. Sketching and Streaming Entropy via Approximation Theory. In Proceedings of the 49th Annual IEEE Symposium on Foundations of Computer Science, FOCS 2008, Philadelphia, PA, USA, 25–28 October 2008; pp. 489–498. [Google Scholar] [CrossRef][Green Version]

- Johnson, W.; Lindenstrauss, J. Extensions of Lipschitz mappings into a Hilbert space. Conf. Mod. Anal. Probab. 1982, 26, 189–206. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shahar, A.; Alfassi, Y.; Keren, D. Communication Efficient Algorithms for Bounding and Approximating the Empirical Entropy in Distributed Systems. Entropy 2022, 24, 1611. https://doi.org/10.3390/e24111611

Shahar A, Alfassi Y, Keren D. Communication Efficient Algorithms for Bounding and Approximating the Empirical Entropy in Distributed Systems. Entropy. 2022; 24(11):1611. https://doi.org/10.3390/e24111611

Chicago/Turabian StyleShahar, Amit, Yuval Alfassi, and Daniel Keren. 2022. "Communication Efficient Algorithms for Bounding and Approximating the Empirical Entropy in Distributed Systems" Entropy 24, no. 11: 1611. https://doi.org/10.3390/e24111611

APA StyleShahar, A., Alfassi, Y., & Keren, D. (2022). Communication Efficient Algorithms for Bounding and Approximating the Empirical Entropy in Distributed Systems. Entropy, 24(11), 1611. https://doi.org/10.3390/e24111611