A Formal Framework for Knowledge Acquisition: Going beyond Machine Learning

Abstract

1. Introduction

1.1. The Present Article

1.2. Related Work

2. Possible Worlds, Propositions, and Discernment

3. Probabilities

3.1. Degrees of Beliefs and Sigma Algebras

3.2. Bayes’ Rule and Posterior Probabilities

3.3. Expected Posterior Beliefs

4. Learning

4.1. Active Information for Quantifying the Amount of Learning

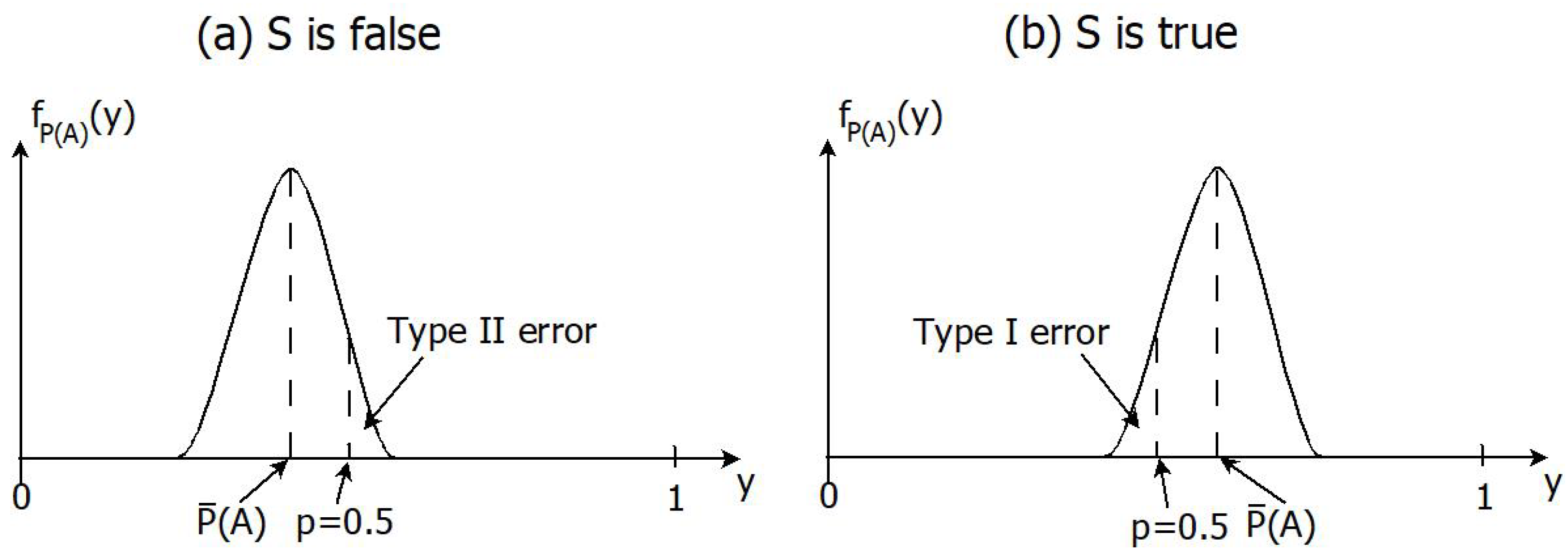

4.2. Learning as Hypothesis Testing

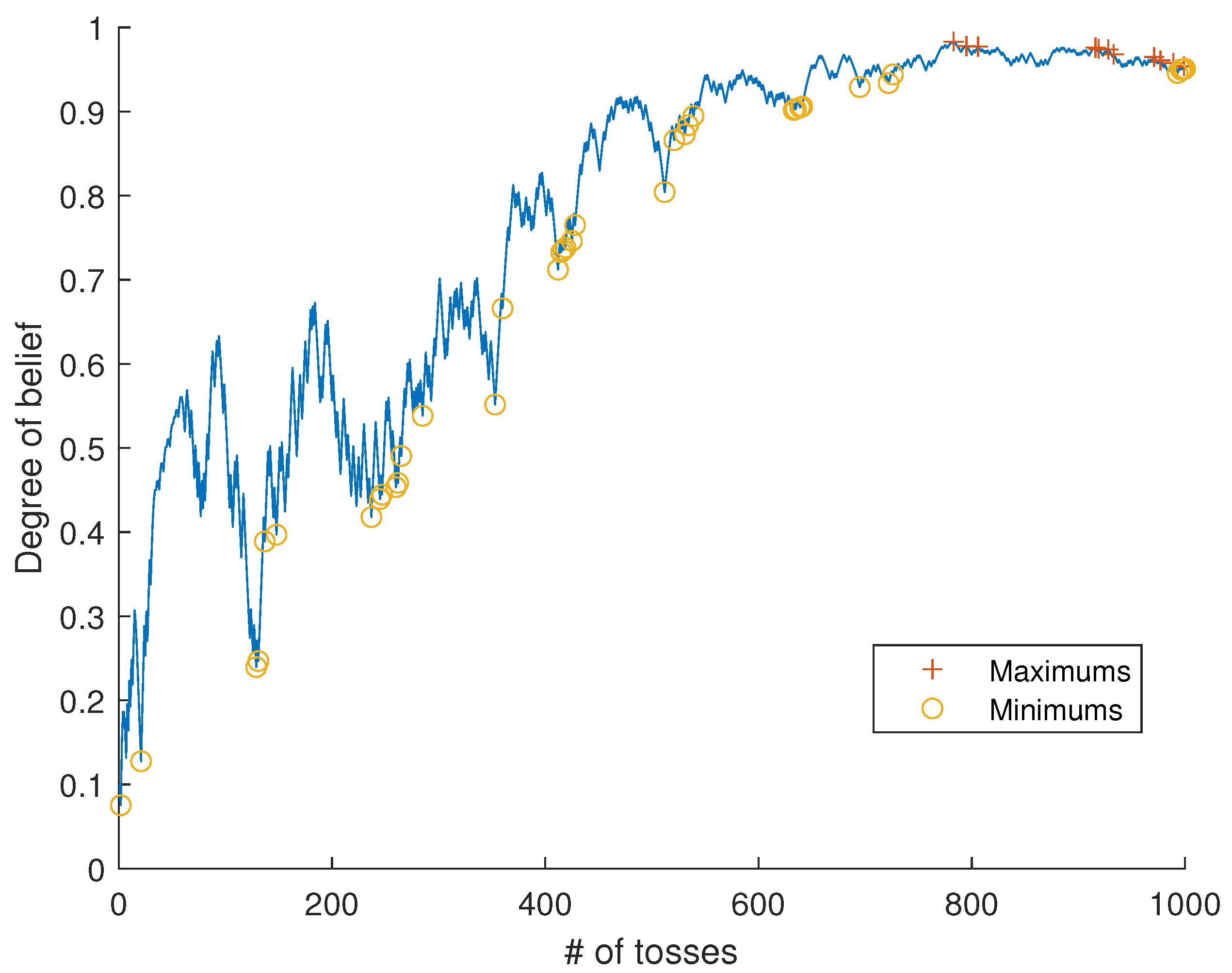

4.3. The Bayesian Approach to Learning

4.4. Test Statistic When Is Unknown

5. Knowledge Acquisition

5.1. Knowledge Acquisition Goes beyond Learning

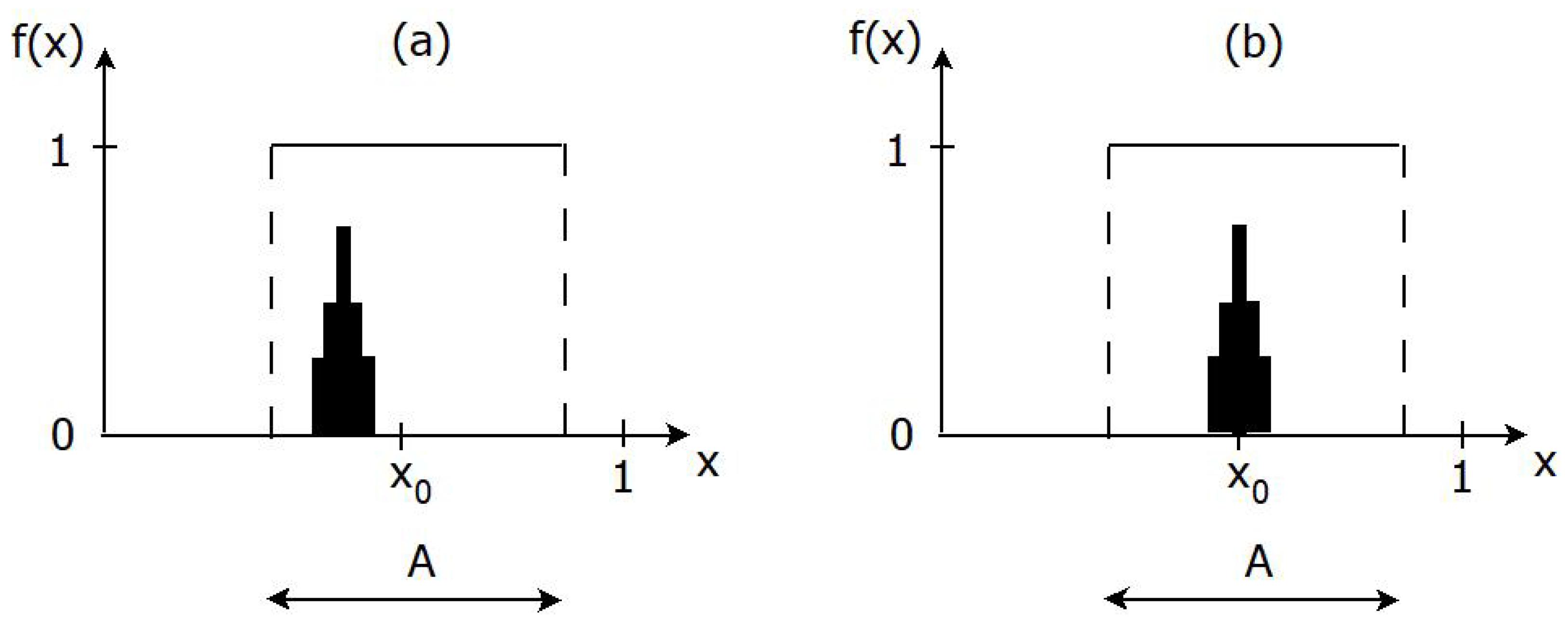

5.2. A Formal Definition of Knowledge Acquisition

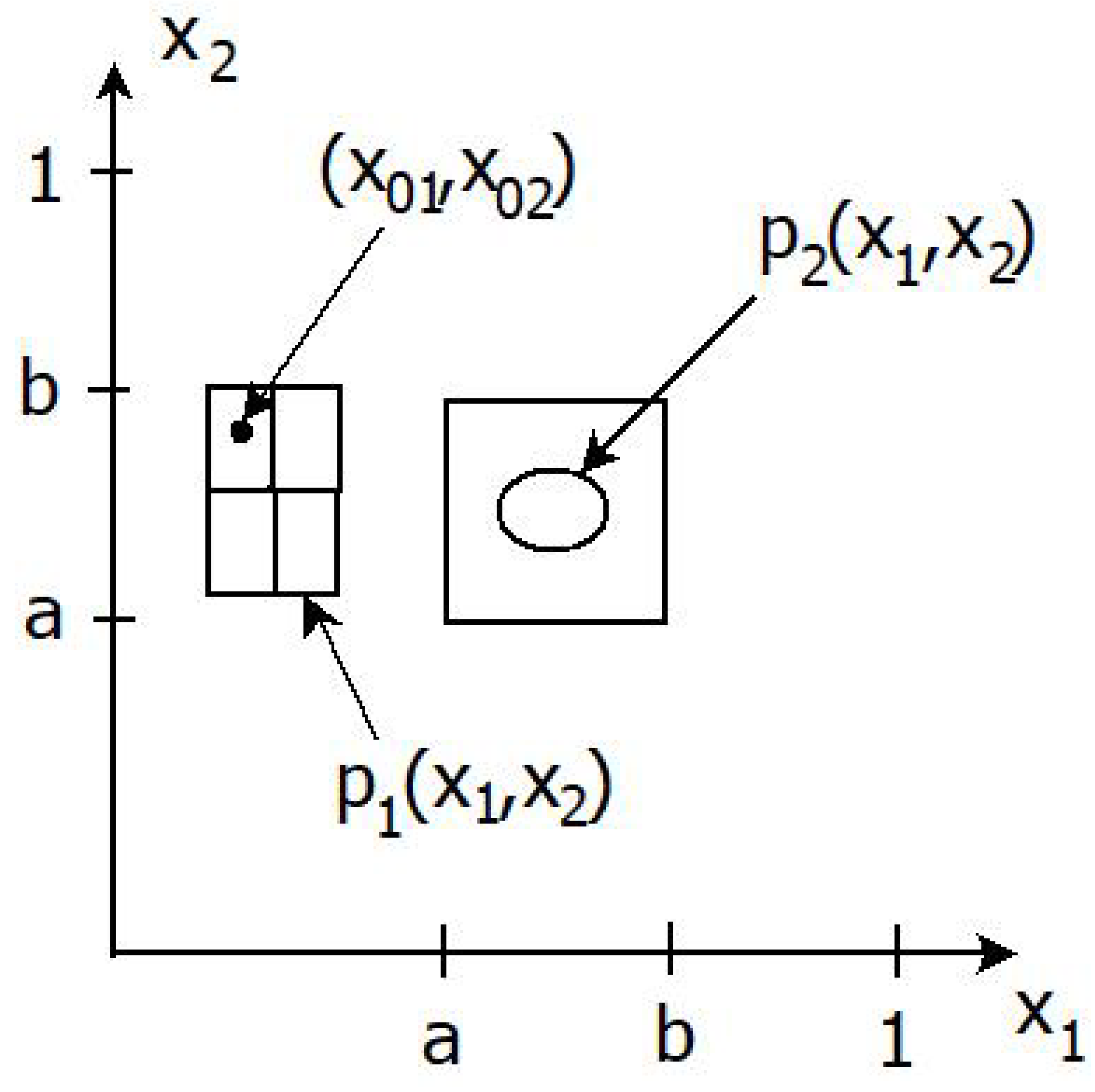

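- If $\mathcal{X} = \mathbb{R}^q$, we use the Euclidean distance $d(x, y) = \|x - y\|$ between $x, y \in \mathcal{X}$ as metric.

- If $\mathcal{X} = \{0, 1\}^q$ consists of all binary sequences of length $q$, then $d(x, y)$ is the Hamming distance between $x$ and $y$.

- If $\mathcal{X}$ is a finite categorical space, we put $d(x, y) = 1(x \neq y)$.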
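The three choices of metric listed above can be illustrated with a minimal Python sketch. The function names are hypothetical and not part of the article. For the finite categorical case, the sketch assumes the 0-1 (discrete) metric, which assigns distance 0 to identical categories and 1 otherwise.

```python
import math
from typing import Hashable, Sequence


def euclidean_distance(x: Sequence[float], y: Sequence[float]) -> float:
    """Euclidean distance between two real vectors of equal length."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def hamming_distance(x: Sequence[int], y: Sequence[int]) -> int:
    """Number of positions at which two binary sequences of equal length differ."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    return sum(a != b for a, b in zip(x, y))


def discrete_distance(x: Hashable, y: Hashable) -> int:
    """0-1 metric on a categorical space (an assumed choice for this sketch)."""
    return 0 if x == y else 1


if __name__ == "__main__":
    print(euclidean_distance([0.0, 0.0], [3.0, 4.0]))
    print(hamming_distance([0, 1, 1, 0], [1, 1, 0, 0]))
    print(discrete_distance("red", "blue"))
```

All three functions are symmetric, vanish exactly when the two arguments coincide, and satisfy the triangle inequality, so each is a valid metric on its respective space.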

6. Learning and Knowledge Acquisition Processes

6.1. The Process of Discernment and Data Collection

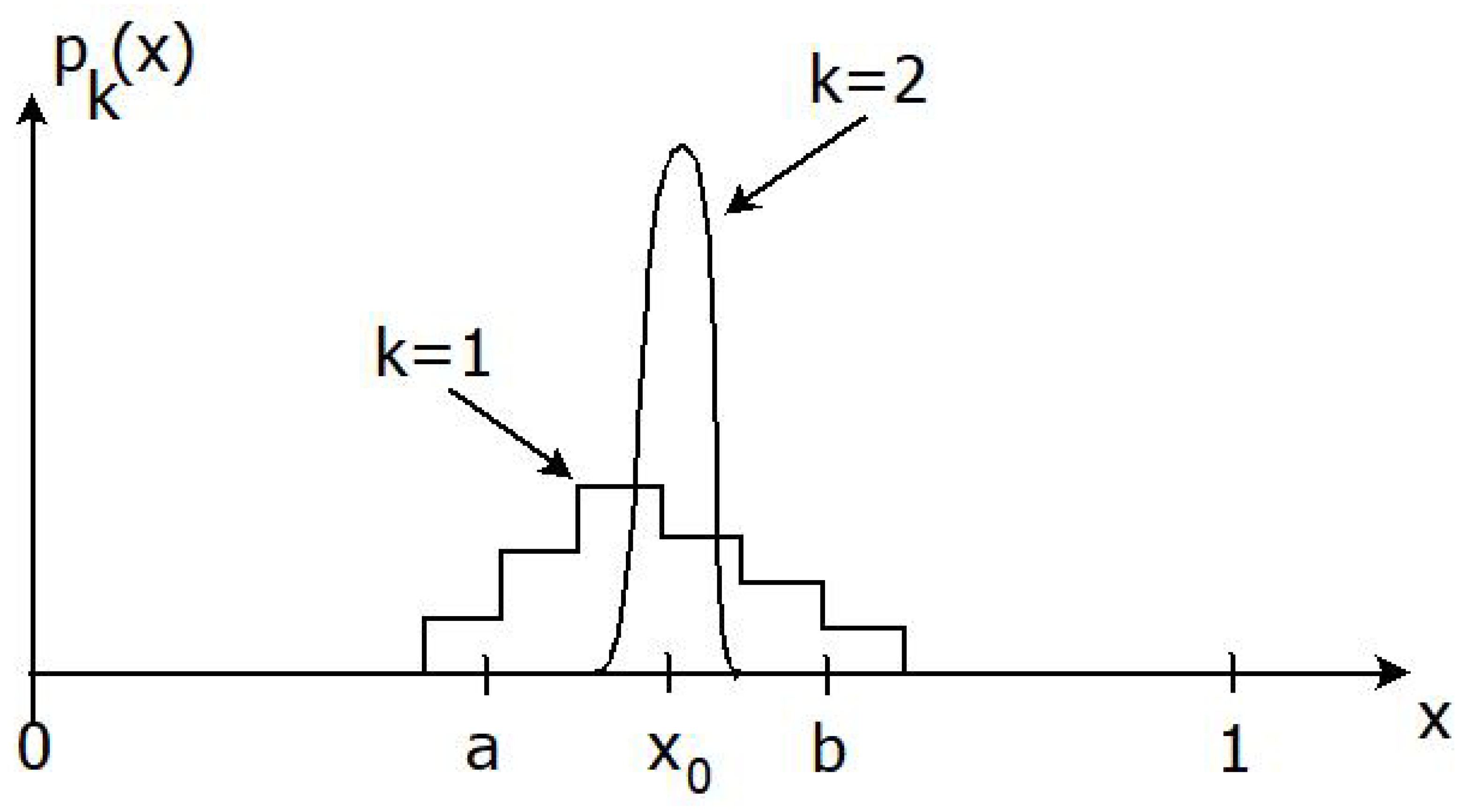

6.2. Strong Learning and Knowledge Acquisition

6.3. Weak Learning and Knowledge Acquisition

7. Asymptotics

7.1. Asymptotic Learning and Knowledge Acquisition

7.2. Bayesian Asymptotic Theory

8. Examples

9. Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hössjer, O.; Díaz-Pachón, D.A.; Rao, J.S. A Formal Framework for Knowledge Acquisition: Going beyond Machine Learning. Entropy 2022, 24, 1469. https://doi.org/10.3390/e24101469
