1. Introduction

The accelerated evolution of sequencing technologies has generated significant growth in the number of sequence data [

1], opening up new opportunities and creating new challenges for biological sequence analysis. To take advantage of the increased predictive power of machine learning (ML) algorithms, recent works have investigated the use of these algorithms to analyze biological data [

2,

3].

The development of effective methods for sequence analysis, through ML, benefits the research advancement in new applications [

4,

5], such as understanding several problems [

4,

5], e.g., cancer diagnostics [

6], development of CRISPR-Cas systems [

7], drug discovery and development [

8] and COVID-19 diagnosis [

9]. Nevertheless, ML algorithms applied to the analysis of biological sequences present challenges, such as feature extraction [

10]. For non-structured data, as is the case of biological sequences, feature extraction is a key step for the success of ML applications [

11,

12,

13].

Previous works have shown that universal concepts from Information Theory (IT), originally proposed by Claude Shannon (1948) [

14], can be used to extract relevant information from biological sequences [

15,

16,

17]. According to [

18], an IT-based analysis of symbolic sequences is of interest in various study areas, such as linguistics, biological sequence analysis or image processing, whose relevant information can be extracted, for example, by Shannon’s uncertainty theory [

19].

Studies have investigated the analysis of biological sequences with Shannon entropy in a wide range of applications [

19,

20,

21]. Given their large applicability, according to [

22], it is important to explore the possibility of generalized entropies, such as Tsallis [

23,

24], which was proposed to generalize the Boltzmann/Gibbs’s traditional entropy to non-extensive physical systems [

25]. This class of generalized entropy has been used for different problems, e.g., image analysis [

25,

26], inference of gene regulatory networks [

27], DNA analysis [

20] induction of decision trees [

28] and classification of epileptic seizures [

29].

In [

25], the authors proposed a new image segmentation method using Tsallis entropy. Later, Ref. [

26] showed a novel numerical approach to calculate the Tsallis entropic index feature for a given image. In [

27], the authors introduced the use of generalized entropy for the inference of gene regulatory networks. DNA analysis using entropy (Shannon, Rényi and Tsallis) and phase plane concepts was presented in [

20], while [

28] used the concept of generalized entropy for decision trees. Recently, Ref. [

29] investigated a novel single feature based on Tsallis entropy to classify epileptic seizures. These studies report a wide range of contributions to the use of Tsallis entropy in different domains. To the best of our knowledge, this paper is the first work proposing its use as a feature (feature extraction) to represent distinct biological sequences. Additionally, it presents the first study of different Tsallis entropic indexes and their effects on classical classifiers.

A preliminary version of this proposal was presented in [

5]. Due to the favorable results obtained, we created a code to extract different descriptors available in a new programming package, called MathFeature [

13], which implements mathematical descriptors for biological sequences. However, until now, we have not studied Tsallis entropy in depth, e.g., its effect, its application to other biological sequence datasets and its comparison with other entropy-based descriptors, e.g., Shannon. Thus, in this paper, we investigate the answers to the following questions:

Question 1 (Q1): Are Tsallis entropy-based features robust for extracting information from biological sequences in classification problems?

Question 2 (Q2): Does the entropic index affect the classification performance?

Question 3 (Q3): Is Tsallis entropy as robust as Shannon entropy for extracting information from biological sequences?

We are evaluating robustness in terms of performance, e.g., accuracy, recall and F1 score, of the feature vectors extracted by our proposal on different biological sequence datasets. Finally, this study makes the following main research contributions: we propose an effective feature extraction technique based on Tsallis entropy, being robust in terms of generalization, and also potentially representative for collecting information in fewer dimensions for sequence classification problems.

2. Literature Review

In this section, we develop a systematic literature review to present and summarize feature extraction descriptors for biological sequences (DNA, RNA, or protein). This review aims to report the need and lack of studies with mathematical descriptors, such as entropy, evidencing the contribution of this article. This section followed the Systematic Literature Review (SLR) Guidelines in Software Engineering [

30], which, according to [

30,

31], allows a rigorous and reliable evaluation of primary studies within a specific topic. We base our review on recommendations from previous studies [

30,

31,

32].

We propose to address the following problem:

How can we numerically represent a biological sequence (such as DNA, RNA, or protein) in a numeric vector that can effectively reflect the most discriminating information in a sequence? To answer this question, we reviewed ML-based feature extraction tools (or packages, web servers and toolkits) that aim, as a proposal, to provide several feature descriptors for biological sequences—that is, without a defined scope, and, therefore, generalist studies. Moreover, we used the following electronic databases: ACM Digital Library, IEEE Xplore Digital Library, PubMed and Scopus. We chose the Boolean method [

33] to search primary studies in the literature databases. The standard search string was:

(“feature extraction” OR “extraction” OR “features” OR “feature generation” OR “feature vectors”) AND (“machine” OR “learning”) AND (“tool” OR “web server” OR “package” OR “toolkit”) AND (“biological sequence” OR “sequence”).Due to different query languages and limitations between the scientific article databases, there were some differences in the search strings. Therefore, our first step was to apply search keys to all databases, returning a set of 1404 studies. Furthermore, we used the Parsifal tool to assist our review and obtain better accuracy and reliability. Thereafter, duplicate studies were removed, returning an amount of 1097 titles (307 duplicate studies).

Then, we performed a thorough analysis of the titles, keywords and abstracts, according to inclusion and exclusion criteria: (1) Studies in English, (2) Studies with different feature extraction techniques, (3) Studies with generalist tools and (4) Studies published in journals. We accepted 28 studies (we rejected, 1069). Finally, after pre-selecting the studies, we performed a data synthesis, to apply an assessment based on the quality criteria: (1) Are the study aims specified? (2) Study with different proposals/results? (3) Study with complete results?

Hence, of the 28 studies, 3 were eliminated, leading to a final set of 25 studies (see

Supplementary Table S1). As previously mentioned, we assessed generalist tools for feature extraction, since this type of study would provide several descriptors, presenting an overview of ways to numerically represent biological sequences (which would not be possible by evaluating studies dedicated to some specific problem). As expected, we found more than 100 feature descriptors. We chose to divide them into large groups (16 groups—these were defined based on all studies), as shown in

Supplementary Table S2. Then, we created

Table 1 with all the feature descriptors found in the 25 studies (see the complete table in

Supplementary Table S3). As can be seen, no study provides mathematical descriptors, such as Tsallis entropy, reinforcing the contribution of our proposal.

3. Information Theory and Entropy

According to [

34], IT can be defined as a mathematical treatment of the concepts, parameters, and rules related to the transmission and processing of information. The IT concept was first proposed by Claude Shannon (1948) in the work entitled “A Mathematical Theory of Communication” [

14], where he showed how information could be quantified with absolute precision. The entropy originating from IT can be considered a measure of order and disorder in a dynamic system [

14,

25]. However, to define information and entropy, it is necessary to understand

random variables, which, in probability theory, is a mathematical object that can take on a finite number of different states

with previously defined probabilities

[

35]. According to [

5], for a discrete random variable

R taking values in

with probabilities

, represented as

, we can define self-information or information as [

26]

Thus, the Shannon entropy

is defined by

Here,

N is the number of possible events and

the probability that event

n occurs. Fundamentally, with Shannon entropy, we can reach a single value that quantifies the information contained in different observation periods [

36]. Furthermore, it is important to highlight that the Boltzmann/Gibbs entropy was redefined by Shannon as a measure of uncertainty [

25]. This formalism, known as Boltzmann–Gibbs–Shannon (BGS) statistics, has often been used to interpret discrete and symbolic data [

18]. Moreover, according to [

25,

37], if we decompose a physical system into two independent statistical subsystems A and B, the Shannon entropy has the extensive property (additivity)

According to [

38], complementary information on the importance of specific events can be generated using the notion of generalized entropy, e.g., outliers or rare events. Along these lines, Constantino Tsallis [

23,

24] proposed a generalized entropy of the BGS statistics, which can be defined as follows:

Here,

q is called the entropic index, which, depending on its value, can represent various types of entropy. Depending on the value of

q, three different entropies can be defined [

25,

37]:

Superextensive entropy

:

Extensive entropy

:

Subextensive entropy

:

When

, the Tsallis entropy is superextensive; for

, it is extensive (e.g., leads to the Shannon entropy), and for

, it is subextensive [

39]. Therefore, based on these differences, it is important to explore the possibility of generalized entropies [

22,

28,

40]. Another notable generalized entropy is the Rényi entropy, which generalizes the Shannon entropy, the Hartley entropy, the collision entropy and the min-entropy [

41,

42]. The Rényi entropy can be defined as follows:

As in the Tsallis entropy, leads to Shannon entropy.

4. Materials and Methods

In this section, we describe the experimental methodology adopted for this study, which is divided into five stages: (1) data selection; (2) feature extraction; (3) extensive analysis of the entropic index; (4) performance analysis; (5) comparative study.

4.1. A Novel Feature Extraction Technique

Our proposal is based on the studies of [

5,

20]. To generate our probabilistic experiment [

15], we use a known tool in biology, the k-mer. In this method, each sequence is mapped in the frequency of neighboring bases

k, generating statistical information. The k-mer is denoted in this work by

, corresponding to Equation (

9).

Here, each sequence (

) was assessed with frequencies of

, in which

is the number of occurrences with length

k in a sequence (

) with length

N; the index

refers to an analyzed substring (e.g.,

, for

). Here, after counting the absolute frequencies of each

k, we generate relative frequencies and then apply Tsallis entropy to generate the features. In the case of protein sequences, index

i is

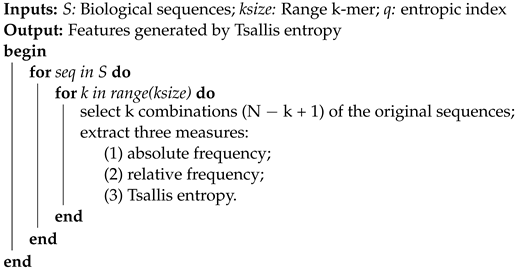

. For a better understanding, Algorithm 1 demonstrates our pseudocode.

| Algorithm 1: Pseudocode of the Proposed Technique |

![Entropy 24 01398 i001 Entropy 24 01398 i001]() |

This algorithm is divided into five steps: (1) each sequence is mapped to

; (2) extraction of the absolute frequency of each

; (3) extraction of the relative frequency of each

based on absolute frequency; (4) extraction of the Tsallis entropy, based on the relative frequency for each

—see Equation (

4); (5) generation, for each

, of an entropic measure. Regarding interpretability, each entropic measure represents a

, e.g., 1-mer = frequency of A, C, T, G. In other words, analyzing the best measures—for example, through a feature importance analysis—we can determine which

are more relevant to the problem under study, providing an indication of which combination of nucleotides or amino acids contributes to the classification of the sequences.

4.2. Benchmark Dataset and Experimental Setting

To validate the proposal, we divided our experiments into five case studies:

Case Study I: Assessment of the Tsallis entropy and the effect of the entropic index

q, generating 100 feature vectors for each benchmark dataset with 100 different

q parameters (entropic index). The features were extracted by Algorithm 1, with

q varying from 0.1 to 10.0 in steps of 0.1 (except 1.0, which leads to the Shannon entropy). The goal was to find the best values for the parameter

q to be used in the experiments. For this, three benchmark datasets from previous studies were used [

5,

43,

44]. For the first dataset (D1), the selected task was long non-coding RNAs (lncRNA) vs. protein-coding genes (mRNA), as in [

45], using a set with mRNA and lncRNA sequences (500 for each label—benchmark dataset [

5]). For the second dataset (D2), a benchmark set from [

5], the selected task was the induction of a classifier to distinguish circular RNAs (cirRNAs) from other lncRNAs using 1000 sequences (500 for each label). The third dataset (D3) is for Phage Virion Protein (PVP) classification, from [

44], with 129 PVP and 272 non-PVP sequences.

Case Study II: We use the best parameters (

entropic index—found in case study I) to evaluate its performance on new datasets: D4—Sigma70 Promoters [

46] (2141 sequences), D5—Anticancer Peptides [

47] (344 sequences) and D6—Severe Acute Respiratory Syndrome Coronavirus 2 (SARS-CoV-2, 24815 sequences) [

13].

Case Study III—Comparing Tsallis with Shannon Entropy: As a baseline of the comparison between methods, we use Shannon entropy, as we did not find any article studying the form of proposed classification with Tsallis entropy and the effect of the entropic parameter with different classifiers. In this experiment, we use D1, D2, D3, D4, D5 and D6.

Case Study IV—Comparing Generalized Entropies: To better understand the effectiveness of generalized entropies for feature extraction, we evaluated Tsallis with the Rényi entropy. In this case, the evaluations of the two approaches were conducted by using the experiments from case study I, changing the entropic index for generating the datasets from 0.1 to 10.0 in steps of 0.1, and inducing the CatBoost classifier. In addition, the datasets used were D1, D2 and D3.

Case Study V—Dimensionality Reduction Analysis: Finally, we assessed our proposal with other known techniques of feature extraction and dimensionality reduction, e.g., Singular Value Decomposition (SVD) [

48] and Uniform Manifold Approximation and Projection (UMAP) [

49], using datasets D1, D2, D3 and D5. We also added three new benchmark datasets provided by [

50] to predict recombination spots (D7) with 1050 sequences (it contained 478 positive sequences and 572 negative sequences) and for the HIV-1 M pure subtype against CRF classification (D8) with 200 sequences (it contained 100 positive and negative sequences) [

51]. In addition, we also used a multiclass dataset (D9) containing seven bacterial phyla with 488 small RNA (sRNA), 595 transfer RNA (tRNA) and 247 ribosomal RNA (rRNA) from [

52]. Moreover, to apply SVD and UMAP, we kept the same feature descriptor by k-mer frequency.

For data normalization in all stages, we used the min–max algorithm. Furthermore, we investigated five classification algorithms, such as Gaussian Naive Bayes (GaussianNB), Random Forest (RF), Bagging, Multi-Layer Perceptron (MLP) and CatBoost. To induce our models, we randomly divided the datasets into ten separate sets to perform 10-fold cross-validation (case study I and case study V) and hold-out (70% of samples for training and 30% for testing—case study II, case study III, and case study IV). Finally, we assessed the results with accuracy (ACC), balanced accuracy (BACC), recall, F1 score and Area Under the Curve (AUC). In D9, we considered metrics suitable for multiclass evaluation.

5. Results and Discussion

5.1. Case Study I

As aforementioned, we induced our classifiers (using 10-fold cross-validation) across all feature vectors generated with 100 different

q parameters (totaling 300 vectors (3 datasets times 100 parameters)). Thereby, we obtained the results presented in

Table 2. This table shows the best and worst parameter (entropic parameter

q) of each algorithm in the three benchmark datasets, taking into account the ACC metric.

Thereby, evaluating each classifier, we observed that the CatBoost performed best in all datasets, with 0.9440 (

), 0.8300 (

), 0.7282 (

) in D1, D2 and D3, respectively. The other best classifiers were RF, with 0.9430 (

q = 0.4 − D1) and 0.8220 (

q = 5.3 − D2), followed by Bagging, MLP, and GaussianNB. Furthermore, in general, we noticed that the best results presented parameters between

, i.e., when the Tsallis entropy was subextensive. Along the same lines, it can be observed in

Table 2 that the worst parameters are between

, when the Tsallis entropy is also subextensive. However, for a more reliable analysis, we plotted graphs with the results of all tested parameters (

to

in steps of

), as shown in

Figure 1.

A large difference can be observed in the entropy obtained by each parameter q, mainly in benchmark D3. Thereby, analyzing D1 and D2, we noticed a pattern of robust results until , for the best classifiers in both datasets. However, as the q parameter increases, the classifiers are less accurate. On the other hand, if we look at D3, the entropy obtained for each parameter q presents a much greater variation, but following the same drop with parameters close to . Regarding the superextensive entropy , some cases showed robust results; however, most classifiers behaved better with the subextensive entropy.

5.2. Case Study II

After substantially evaluating the entropic index, our findings indicated that the best parameters were among

. Thereby, we generated new experiments using five parameters to test their efficiency in new datasets, with

, as shown in

Table 3 (sigma70 promoters—D4),

Table 4 (anticancer peptides—D5) and

Table 5 (SARS-CoV-2—D6). Here, we generated the results with the two best classifiers (RF and Catboost—best in bold).

Assessing each benchmark dataset, we note that the best results were of ACC: 0.6687 and AUC: 0.6108 in D4 (RF,

), ACC: 0.7212 and AUC: 0.7748 in D5 (RF,

), and ACC: 1.0000 and AUC: 1.0000 in D5 (RF and CatBoost,

). Once more, the results confirm that the best parameters are in the range of

, indicating a good choice when using Tsallis entropy. The perfect classification at D6 is supported by other studies in the literature [

53,

54,

55]. Nevertheless, after testing the Tsallis entropy on six benchmark datasets, we noticed an indication that this approach behaves better with longer sequences, e.g., D1 (mean length ≈ 751 bp), D2 (mean length ≈ 2799 bp), and D6 (mean length ≈ 10,870 bp) showed robust results, while D3 (mean length ≈ 268 bp), D4 (mean length ≈ 81 bp), and D5 (mean length ≈ 26 bp) showed less accurate results. Nonetheless, Tsallis entropy could contribute to hybrid approaches, as our proposal achieved relevant results in four datasets.

5.3. Case Study III—Comparing Tsallis with Shannon Entropy

Here, we used Shannon entropy as a baseline for comparison, according to

Table 6. Various studies have covered the biological sequence analysis with Shannon entropy, in the most diverse applications. For a fair analysis, we reran the experiments on all datasets (case study I and II, six datasets), using hold-out, with the same train and test partition for both approaches. Once more, we used the best classifiers in case study II (RF and CatBoost), but, for a better understanding, we only show the best result in each dataset.

According to

Table 6, our proposal with Tsallis entropy showed better results of ACC (5 wins), recall (4 wins), F1 score (5 wins), and BACC (5 wins) than Shannon entropy in five datasets, falling short only on D6, with a small difference of

. Analyzing each metric individually, we observed that the best Tsallis parameters resulted in an F1 score gain compared to Shannon entropy of

and

in D4 and D5, respectively. Other gains were repeated in ACC, recall, and BACC. In the overall average, our proposal achieved improvements of 0.51%, 1.52%, 1.34%, and 0.62% in ACC, recall, F1 score, and BACC, respectively. Despite a lower accuracy in D3 and D4, this approach alone delivered a BACC of 0.6342 and 0.5845, i.e., it is a supplementary methodology to combine with other feature extraction techniques available in the literature. Based on this, we can state that Tsallis entropy is as robust as Shannon entropy for extracting information from biological sequences.

5.4. Case Study IV—Comparing Generalized Entropies

According to the Tsallis entropy results, wherein it overcame Shannon entropy, we realized the strong performance of generalized entropy as a feature descriptor for biological sequences. For this reason, we also evaluated the influence of another form of generalized entropy, such as Rényi entropy [

42], as a good feature descriptor for biological sequences. Here, we investigated the performance of Tsallis and Rényi entropy, changing the entropic index for D1, D2, and D3. Moreover, we have chosen the best classifier from case study I (CatBoost).

When considering the same reproducible environment for the experiment, the performance peak was the same for both methods, as we can see in

Figure 2, with graphs containing accuracy performance results for all the entropic index values (from 0.1 to 10.0). Regarding the best classification performance, for D1 (

Figure 2a), we had ACC: 0.9600, recall: 0.9667, F1 score: 0.9603, and BACC: 0.9600; for D2 (

Figure 2b), we obtained ACC: 0.8300, recall: 0.7733, F1 score: 0.8198, and BACC: 0.8300; and for D3 (

Figure 2c), we had ACC: 0.7521, recall: 0.359, F1 score: 0.4828, and BACC: 0.649. As seen earlier, Tsallis entropy performs poorly from a specific entropy index onwards, but Rényi entropy demonstrates more consistent performance when compared to Tsallis, representing a possible alternative. Nevertheless, the results again highlight the promising use of generalized entropies as a feature extraction approach for biological sequences.

5.5. Case Study V—Dimensionality Reduction

In this last case study, we compared our proposal with other known techniques for feature extraction and dimensionality reduction in the literature, using the same representation of the biological sequences, the frequency. In particular, for each DNA/RNA sequence, we generated from to , while, for proteins, we generated it until , considering the high number of combinations with amino acids. All datasets used have around 1000 biological sequences, considering the prohibitive computational cost to deal with the approach. In this study, our objective was to use SVD and UMAP to reduce the dimensionality of the feature vector by extracting new features, as we did in our approach. However, high values of k present high computational costs, due to the amount of generated features, e.g., in DNA (4096 features) and in protein (8000 features).

From previous case studies, we realized that the feature extraction with Tsallis entropy provided interesting results. Thereby, we extended our study, applying SVD and UMAP in the datasets with

frequencies, reducing them to 24 components, comparable to the dimensions generated in our studies. Fundamentally, UMAP can deal with sparse data, as can SVD, which is known for its efficiency in dealing with this type of data [

56,

57,

58]. Both reduction methods can be used in the context of working with high-dimensional data. Although UMAP is widely used for visualization [

59,

60], the reduction method can be used for feature extraction, which is part of an ML pipeline [

61]. UMAP can also be used with raw data, without needing to adopt another reduction technique before using it [

58]. We induced the CatBoost classifier using 10-fold cross-validation. We obtained the results listed in

Table 7.

As can be seen, Tsallis entropy achieved five wins, against two for SVD and zero for UMAP, taking into account the ACC. In addition, in the general average, we obtained a gain of more than 18% in relation to SVD and UMAP in ACC, indicating that our approach can be potentially representative for collecting information in fewer dimensions for sequence classification problems.