1. Introduction

The analysis of ordinal patterns is one of the most used tools to characterize irregular (whether stochastic or deterministic) signals [

1]. Instead of decomposing a given signal in terms of templates defined a priori, as, for example, in the case of Fourier modes, one lets the time series suggest the relevant motifs of a given length

m.

Possibly the most relevant known quantifier that can be built by classifying ordinal patterns is the so-called permutation entropy (PE) [

2], which quantifies the diversity of motifs, or symbolic sequences, by encoding

m-dimensional sections of a time series into permutations. The growth rate of PE with

m coincides asymptotically (i.e., for

) with the Kolmogorov–Sinai (KS) entropy, thus giving appeal to this concept, which does not require the definition of any preassigned partition of the phase space. The possibility of straightforwardly computing PE out of the observed occurrence frequency of symbolic sequences is balanced by it being heavily affected by finite-size corrections. They are due to the entropy increase originating from the implicit refinement of the phase-space partition when the window length is increased (a phenomenon entirely different from the entropy increase associated with a finite KS entropy). These spurious effects can be either removed by assuming an a priori knowledge of the fractal structure of the underlying attractor [

3], or by reconstructing, for any given value of

m, the Markov process that describes the time succession of symbolic sequences [

4].

The increasing rate of PE is a well-defined concept whenever the time series is strictly deterministic. However, experimental signals are typically affected by noise, which may be so strong as to mask the underlying chaotic nature. For this reason, attention has been devoted to methods to distinguish more or less stochastic processes. In a notable attempt [

5], it was proposed to accompany the estimate of PE with a second quantifier that measures the distance between the observed distribution of the occurrence probabilities of the symbolic sequences from the uniform distribution expected for a perfectly random process.

In this paper, we focus on deterministic signals affected by a weak observational, i.e., additive, noise, with the goal of unveiling general scenarios and possibly using them as a springboard to tackle more complex problems. For this purpose, we study here four dynamical systems: (i) the Hénon map, as one of the prototypical examples of chaotic dynamics; (ii) the tent map, a simple model that offers chances to derive analytical results; (iii) the Hénon map with delay, also referred to as the generalized 3D Hénon map, which makes up a hyperchaotic system to investigate the generality of the scenario; and (iv) the Mackey–Glass model, which provides a more realistic continuous time system.

We first quantify the effect of additional noise by determining the PE for different values of the window length m and the noise amplitude . We find that, in the small noise limit, the entropy increases according to a power law as , where the exponents , k depend on, and are therefore characteristic of, the underlying dynamical system. A multifractal analysis is then implemented, where the number of symbolic sequences characterized by (approximately) the same probability is determined. As a result, the whole population can be split into two “phases”, the former consisting of the original, truly deterministic, symbolic sequences and the latter of new symbolic sequences, which are noise-induced by “twisting” the original ones. Finally, a more quantitative analysis is performed to clean the noise-induced sequences via a supervised approach that allows assembling them back to their respective “ancestor” sequences. This cleaning process yields a decrease in the PE, which comes closer to the expected, purely deterministic value.

The paper is organized as follows. The scaling analysis of the PE increase as a function of the window length and noise amplitude is discussed in

Section 2, while the multifractal analysis is introduced and illustrated in

Section 3. The attempted reconstruction of the deterministic value of entropy upon reassignment of noise-induced symbolic sequences is the topic of

Section 4, while the remaining open problems are presented in the final

Section 5.

2. Permutation Entropy and Its Noise-Induced Scaling Behavior

Let denote a sequence of scalar variables, which may either arise from the iteration of a discrete-time map, or by sampling a continuous-time signal. In this paper, four dynamical systems are considered:

the Hénon map,

the tent map,

the generalized 3D Hénon map,

and the Mackey–Glass model,

In the case of the tent map, to avoid the well-known issue of periodicity due to the finite precision of floating-point representation, a realization of a random Gaussian variate

, with

, is dynamically added to the outcome

at step

n. If the result of this last operation is >1 (<0), an amount equal to 1 is further subtracted (added) in order to keep the final result within the interval

. However, due to the standard deviation of the Gaussian perturbation and the length of the computed time series (see below), these corrections are definitely unlikely (

). In the case of the Mackey–Glass model, the integration of the differential equation is carried out via a Runge–Kutta fourth-order algorithm upon sampling the

x variable with a unitary sampling rate (

). In all cases, the starting point is randomly set, and the first

evolution steps are discarded from further analysis to avoid transient effects. The length of each sequence is

. Due to this large value, random fluctuations of the different parameters numerically assessed out of the time series are deemed to be negligible [

6,

7]. The average value of the

x variables are 0.36, 0.5, 0.39, and 0.9, respectively.

Let

be a trajectory—or window—composed of

m consecutive observations. According to the procedure devised by Bandt and Pompe [

2], the window

is encoded as the symbolic sequence (permutation), henceforth also referred to as word,

, where the integer

belongs, like the index

j, to the range

and corresponds to the rank, from the smallest to the largest, of

within

. In the unlikely event of an equality between two or more observations, the relative rank is provided by the index

j. This way, the very same word

W encodes trajectories all exhibiting the same temporal pattern, without paying attention to the actual values taken by the variables

. Assuming a sufficiently long time series and denoting with

the rate with which a word

W is observed therein, PE is defined as

where the sum is extended on the set of all possible words, whose cardinality is

(we assume here

).

In order to investigate the variation of PE due to the presence of a weak observational noise, we add to each observation

a realization of a random variable

uniformly distributed within the interval

so that its standard deviation is equal to

. Observational noise is effective insofar that it modifies the ordering of the

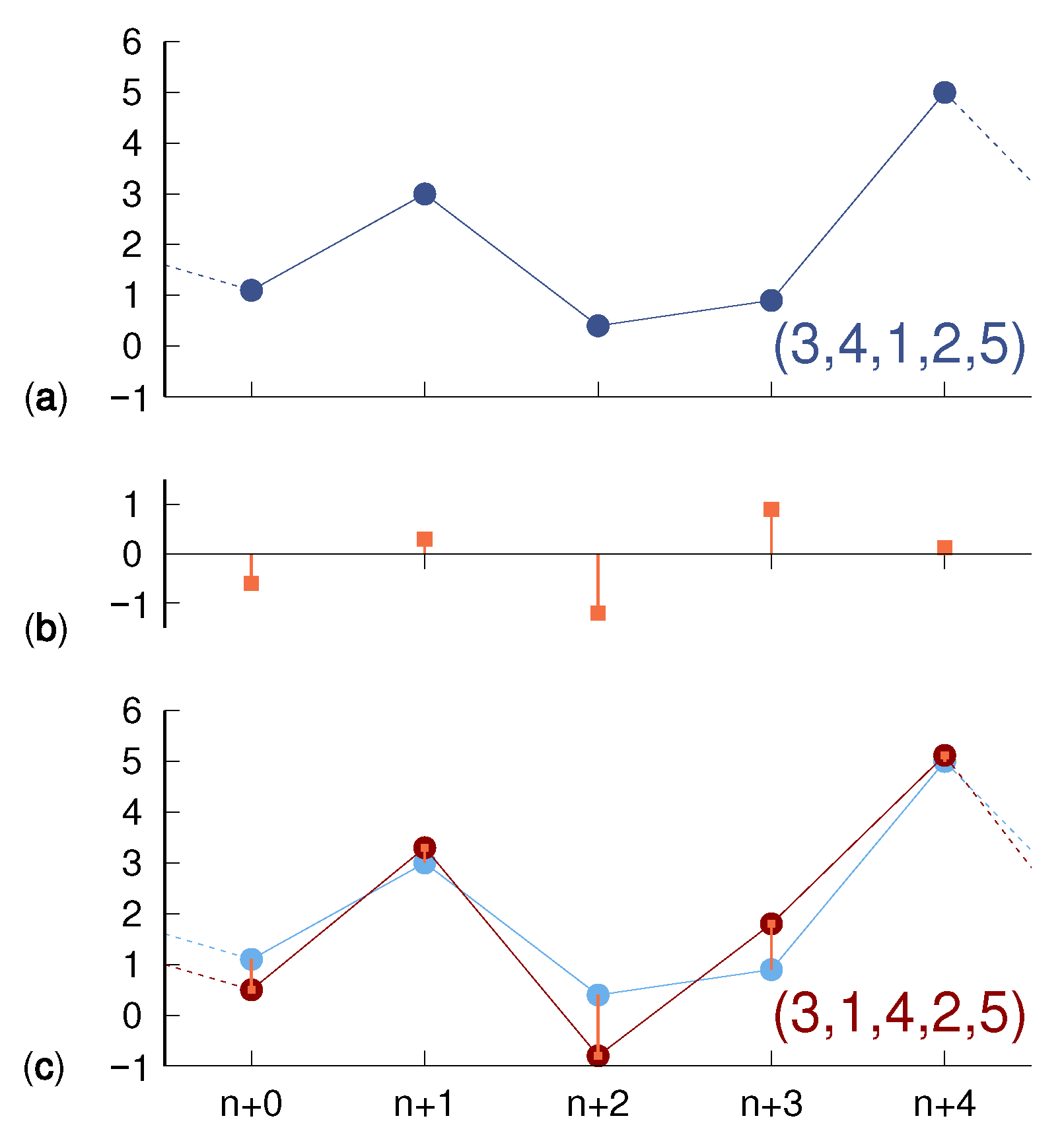

values in a given window. In the example of

Figure 1, a noiseless trajectory, encoded as

, yields, under the effect of random fluctuations, a new trajectory, which is now encoded as

: the neighboring symbols 1 and 4 are swapped due to their initial separation 0.2 being overcome by “counterpropagating” shifts induced by observational noise. This leads to a diffusion of the observed occurrence frequency of a given sequence toward neighboring ones, including those that might go unvisited in the absence of noise, and therefore induces an entropy increase

given by

In this equation,

is the PE computed according to Equation (

1) on rates

observed on a time series affected by a noise of amplitude

, whereas

corresponds to the noiseless case.

One expects the entropy increase to depend on the noise strength rescaled to the typical separation between consecutive values along the window (after reordering) since represents the size of the “barrier” to be overcome in order for the encoding of the ordinal pattern to change. By considering that the variables of our dynamical systems are all of order , we expect (more precisely ), and we therefore expect the effective noise to be equal to .

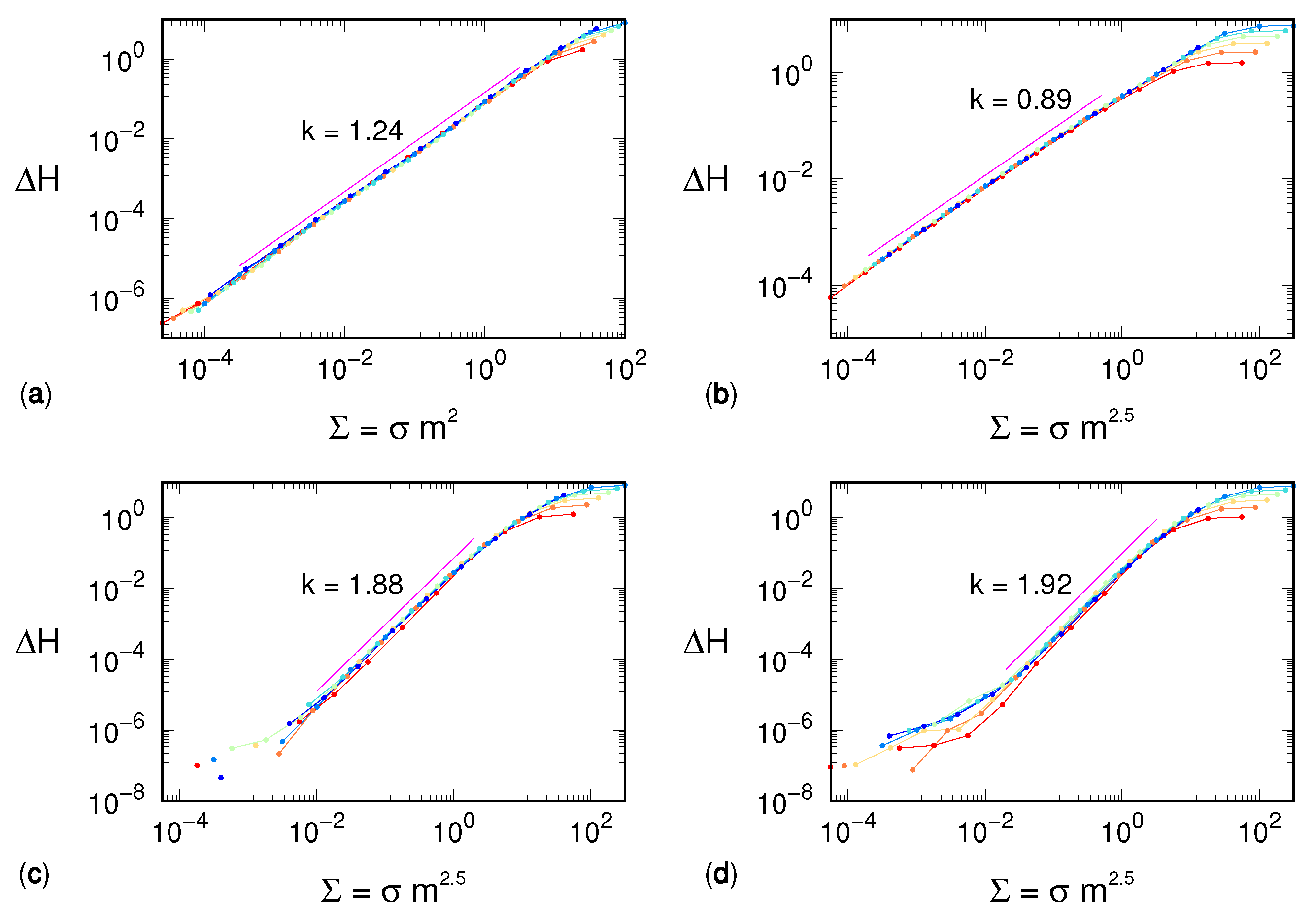

Simulations performed for different noise amplitudes and window lengths reveal a different scenario. The entropy increase depends on

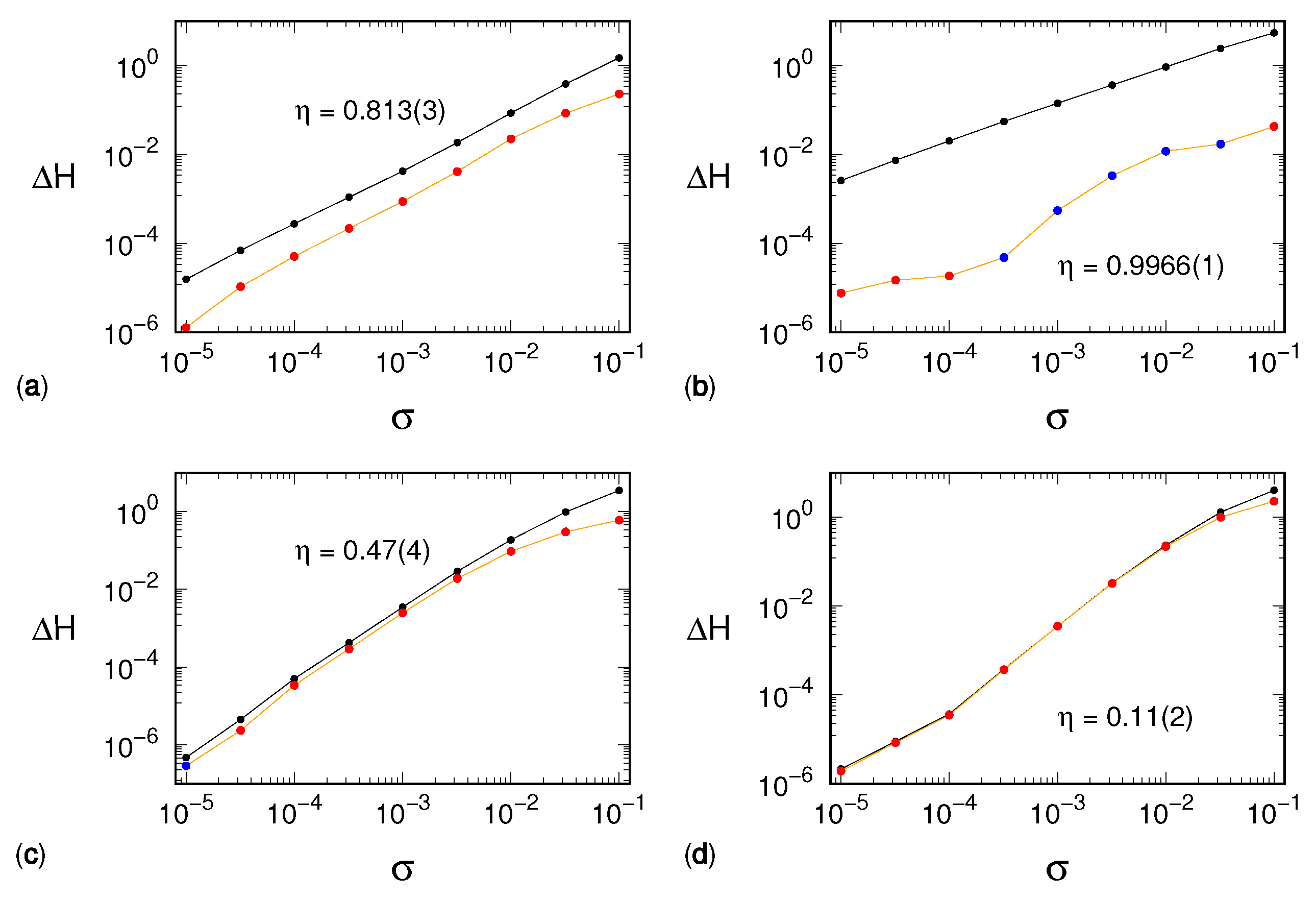

, as clearly attested by the very good “data collapse” shown in

Figure 2, which extends over a wide range of scales for each one of the four selected models.

The exponent

is not only model-dependent—being equal to 2 for the Hénon map, and approximately to 2.5 for the three other dynamical systems—but it is strictly larger than 1. This observation suggests that our mean-field argument is too naive and the distribution of separations between consecutive variables must be included in the reasoning. We are then driven toward considerations similar to those that allowed refining the estimate of the KS entropy in Ref. [

3], though it is not clear how to transform them into quantitative arguments.

An additional remarkable result revealed by our simulations is that in all cases, the entropy increase scales as

where both the factor

b and the exponent

k are distinctive features of the underlying dynamical system. The lower quality of the collapse for small values of

is most likely an effect of poor statistics: the smaller the deviation, the larger the number of points required to compute significant differences between the populations of the symbolic sequences. On the other side, namely for large values of

, the overlap between curves at different

m values has to stop due to the finiteness of

, which cannot exceed

and thus leads to

m-dependent saturation.

We also note that the exponent k increases with the dynamical complexity of the signal. We can argue as follows: noise increases the entropy since it redistributes the mass of each symbolic sequence toward the neighboring ones. In the following sections, we investigate this redistribution.

3. Multifractal Analysis

In the previous section, we described how noise affects entropy. Here, we focus on a more detailed description by reconstructing the distribution of the different words for different noise amplitudes. This is essentially an application of the so-called multifractal formalism [

8]. We consider a single

m value, namely,

: this choice, while being still computationally manageable, provides a sufficiently large number of possible symbolic sequences (≈3.6

) so that the related set can be considered as being continuous.

For a given noise amplitude

, we first build a histogram where the occupation

of the

i-th bin is given by the number of different symbolic sequences whose logarithm of the observed probability lies in the interval

(

is the logarithmic scale bin width):

Unvisited symbolic sequences are not taken into account. Because all simulations were carried out by analyzing time series consisting of points, the minimum possible value of is approximately −22.1. The histograms were thus constructed by splitting the range into 25 bins, so that . (This choice essentially corresponds to the result of the application of Freedman–Diaconis’ rule).

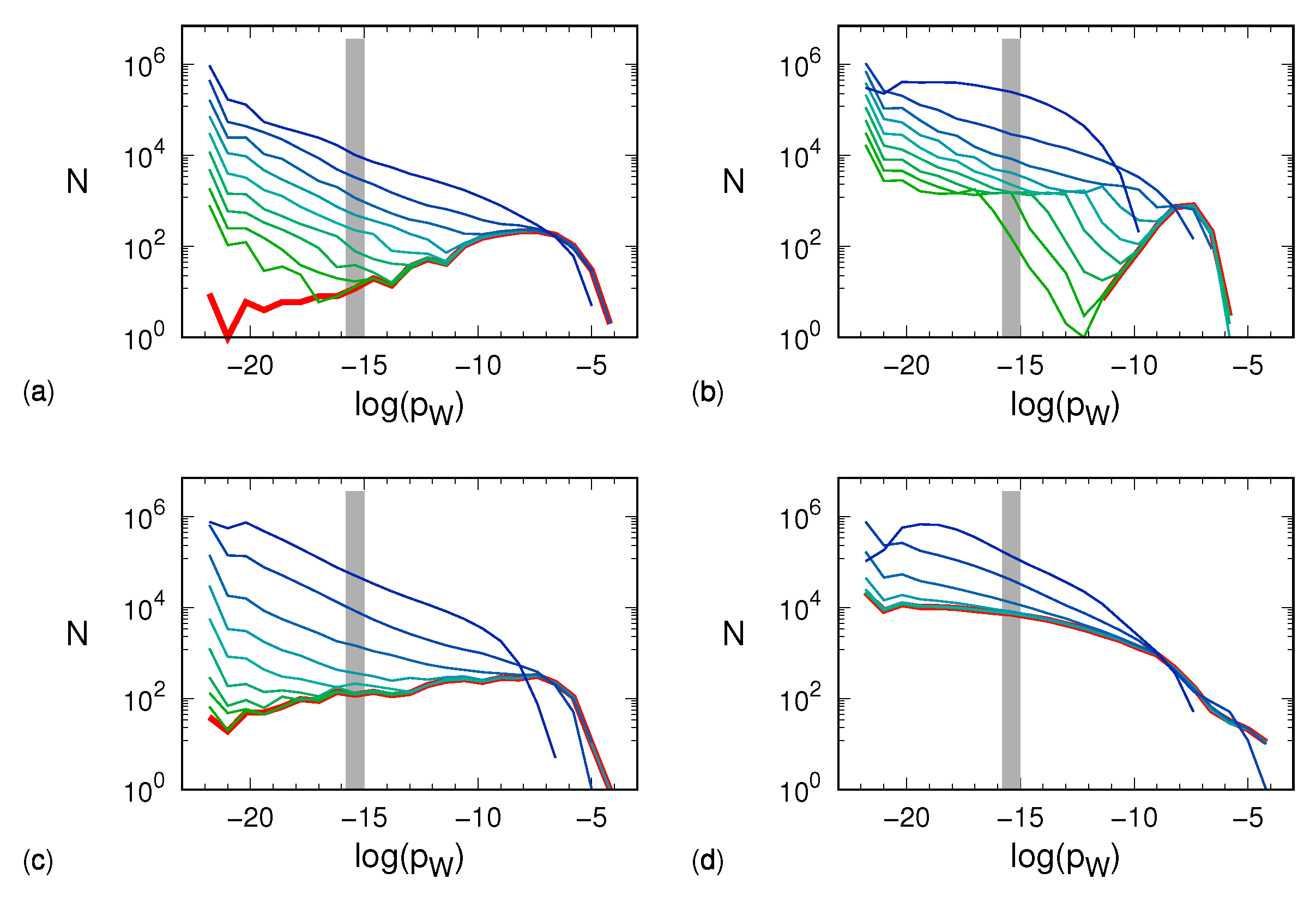

The results are plotted in

Figure 3 for the four different models and different noise amplitudes. It is worth remarking that the logarithmic binning used in the analysis of this section is both an ingredient of the multifractal formalism and a way to cope with the interval of occurrence probabilities, which covers about eight decades. We also note that the sum of the bin populations—and thus a fortiori the population of each single bin—cannot exceed

. This observation is relevant when considering the progressive effect of noise on the distributions of the different words.

The data in panel (a) refer to the Hénon attractor. The red curve corresponds to the zero-noise limit in which we see a relatively small number of unpopulated words. As long as the noise is small (≲), its effect mostly turns out to yield a large number of very lightly populated sequences, whereas the distribution of heavier sequences is basically untouched. Quantitative changes can be appreciated when the noise amplitude reaches 0.1. The tent map exhibits a similar scenario. Here, the zero-noise histogram is well confined between and . At a noise amplitude of ∼, the noise-induced probability shuffling becomes so effective as to erode and leftward shift the most populated bins of the noiseless distribution. The same erosion is visible in the plots of the generalized 3D Hénon map, which, on the lower side, shows a behavior more similar to that of the Hénon map. The Mackey–Glass model reveals a partially different scenario. In the noiseless case, light boxes are more “popular” than for the other dynamical systems, which makes the distribution more robust to weak noise. However, at a noise amplitude of ∼, both the erosion and the leftward shift of the most populated bins set on. Altogether, we see that the more or less pronounced presence of a low-probability tail in the various distributions reflects in a smaller or larger robustness to noise (the k exponent introduced in the previous section).

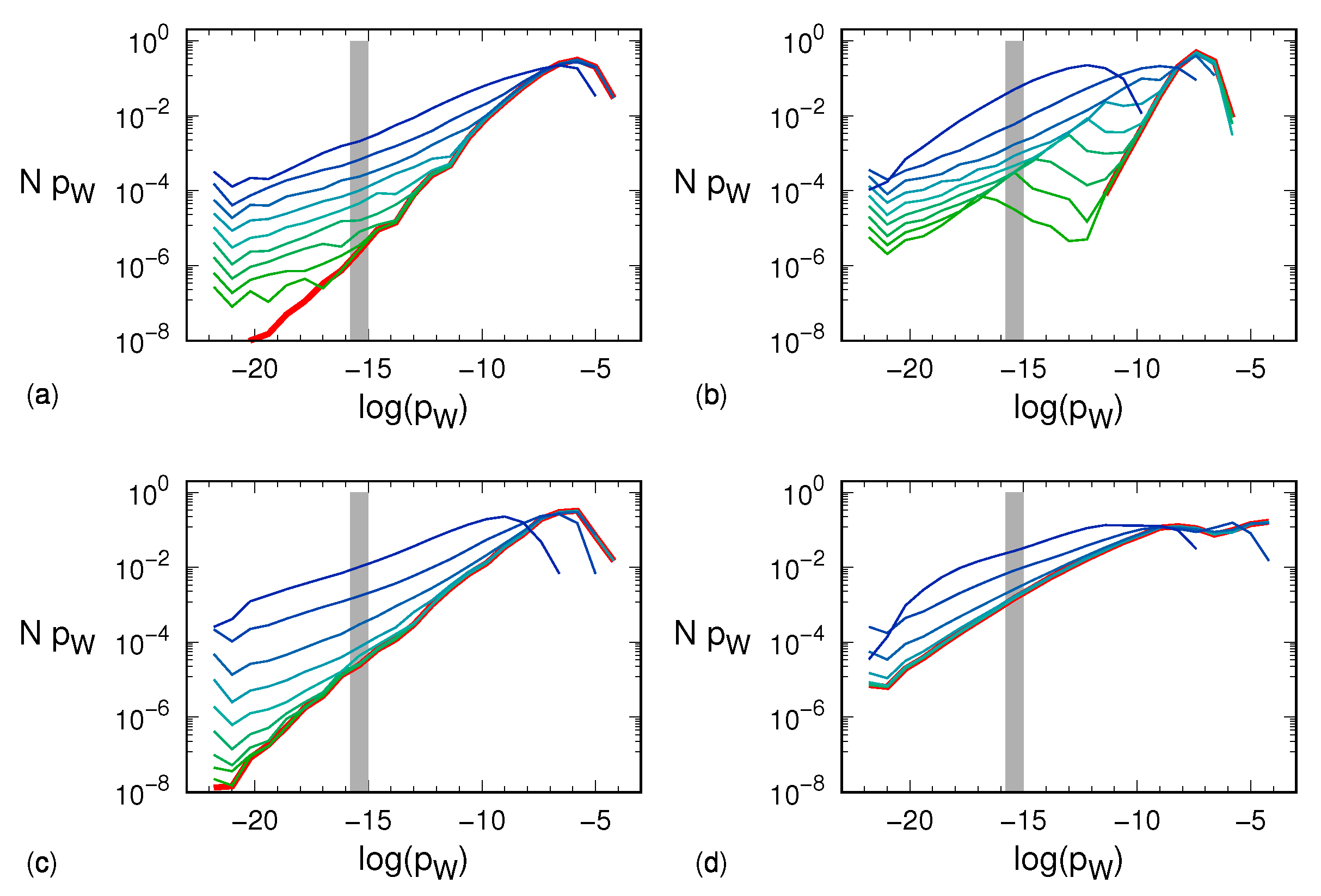

The same data shown in

Figure 3 can be presented by multiplying

N by

p so as to have a measure of the actual weight of sequences with probability

p. The results are plotted in

Figure 4, where one can indeed appreciate the smallness of the contribution arising from the very large number of light sequences.

4. Assessing the Entropy Increase via Distribution Reconstruction

As already argued in the previous section, observational noise increases the entropy both because it induces new sequences and because it “democratizes” their distribution. In this section, we discuss a method to possibly minimize the entropy increase. The method is based on the idea of moving back the content of the new sequences to the corresponding “ancestors”. The method is supervised, as knowledge of the symbolic sequence occurring in the zero-noise case is assumed. The ancestor of a new sequence is a noiseless sequence that is identified according to a suitable distance criterion, as follows.

Several definitions of distance between permutations have been proposed [

9]. One possibility is the Hamming distance, which corresponds to number of positions in which the two permutations differ. Another possibility is the transposition distance [

10], namely the minimum number of swappings between non-necessarily contiguous positions to make the first permutation coincide with the second. We use here the so-called deviation distance [

11], which is a metric and is computed in a number of steps

. Given the two words

and

, their deviation distance is

where

is the Kronecker delta. In practice, for each symbol in the first word, we search for the position of the same symbol in the second word, we determine the absolute separation between the two positions, and we sum all of them up. The factor

is justified by the sum being necessarily an even number due to the double occurrence of the same pair

(in the scientific literature, this factor is either absent or replaced by

). Consequently, the minimum deviation distance is 1, occurring in the case of a single swapping in a pair of contiguous positions, while the maximum one can be shown [

11] to be

if

m is even and

if

m is odd. For

as here considered, the maximal deviation distance is therefore 25. It is worth noting that this metric is preferable due to the presence of the weight

, which, whenever the corresponding

is one, is “naturally” proportional to the noise amplitude.

Let be the set of words observed for a noise amplitude . The set is then the deterministic set of words, namely those observed for zero noise, which are henceforth considered the ancestors. We now scan the difference set , looking for the words that are at deviation distance from possible ancestors. If a word has two or more ancestors, the “kinship” priority among these last is given to the most populated one (where the original population matters, i.e., prior to the absorption step that follows here). This ancestor “absorbs” the population of W, which is eventually removed from the process. Repeating the procedure by progressively increasing the deviation distance d up to its maximum value leads to a complete absorption of the population of the difference set back into the set .

While the procedure described above putatively puts back the amount of mass flown away because of the noise, it does not counter the democratization process of the original distribution. The entropy reduction is then expected to be partial and more effective, the larger the difference set

. For this last reason, we expect the reconstruction to work better with the Hénon and tent maps than with the generalized 3D Hénon map and the Mackey–Glass model. The numerical results shown in

Figure 5 confirm this prediction.

The figure shows the entropy increase

after having coalesced the distribution on the set

(i.e., subtracting

from

). The efficiency of this strategy can be quantified by the parameter

where

is the number of noise amplitudes considered and such that both

and

are positive. The uncertainty on

is assessed as the standard deviation of the ratios. It is easily seen that if the method is perfectly efficient, i.e., if it cancels the noise-induced entropy increase, then

. On the contrary, if it is completely inefficient, it holds

. From the data reported in

Figure 3, we see that the efficiency strongly declines when the dynamical complexity increases, being excellent for the tent map (

) and, on the other hand, very modest for the Mackey–Glass model (

). We further comment on this performance decline in the following section.

5. Discussion and Open Problems

In this paper, we have analyzed in a quantitative way the PE increase induced by a weak observational noise. We find that , where can be interpreted as the effective amplitude of the noise ( is the noise amplitude, and m is the length of the symbol sequence).

We have no theoretical arguments to justify the numerically assessed values of the and k exponents, which turn out to be model-dependent. We are only able to qualitatively argue that they are related to the structure of the boundary of the set of deterministic (noiseless) sequences: the “longer” the boundary, the higher the set of new, spurious sequences generated by transposition errors induced by observational noise. In fact, we are only able to conjecture that , so that a larger m value corresponds to a smaller distance between consecutive values of the sampled variable. In three out of the four models, , while in the last one.

In order to shed some light on the growth of , we have studied the distribution of symbolic sequences according to their occurrence probability. The analysis allowed identifying a large number of poorly visited sequences, which are made accessible by noise. In the tent map and, to some extent, in the Hénon map, the diffusion of probability toward new, spurious sequences is responsible for a large fraction of . This is much less the case in the two other models. As discussed in the previous section, the elimination of the spurious sequences—at least via the algorithm proposed above—has proven to be inefficient in reconstructing the noiseless entropy whenever the dynamical complexity increases. A major reason appears to be the fact that “internal” diffusion among deterministic sequences is much stronger than the “external” diffusion toward new, spurious ones.

Quantifying the internal diffusion process is not easy because, given any two words

and

belonging to the deterministic set

, one should distinguish between noise-induced moves from

to

and vice versa. Since the entropy variation is due to population changes induced by the unbalance between incoming and outgoing fluxes, we have, therefore, opted for an indicator that can be easily estimated. To this purpose, we split all the words belonging to

into two groups: the group of the words that increase their mass under the action of noise, and the group of those that lose mass. By denoting with

the mass gain of the first group, and with

the (positive) mass loss of the second one, it is clear that

where the last term

stands for the mass migration toward the new sequences and is countered for by the method described in the previous section.

A possible proxy measure to assess the internal diffusion would then be

, whose entity can only stem from mass redistribution within

. However, it is more convenient to rely again on a relative measure. Hence, we propose to normalize

by means of

and to use the rescaled parameter

to quantify the relevance of the internal diffusion with respect to the global loss experienced by the first group. Due to Equation (

5), it immediately follows that the complementary, “escape” parameter

, defined as

quantifies the relevance of the mass losses toward the set of the new, spurious sequences with respect to the global loss experienced by the first group. Values of

(

) close to one (zero) hint at a dominating democratization progress that occurs within the deterministic set

. On the contrary, as

(

) tends to zero (one), the escape process of the population out of the deterministic set

progressively dominates.

Altogether, the numerical results discussed above suggest that the structure of the ensemble of noiseless sequences in the abstract space of ordinal patterns plays an important role. If the ensemble is fragmented and characterized by a long boundary, the effect of noise is mostly quantified by the emergence of new sequences. This is, for example, the case of the tent map. If the ensemble is compact and characterized by a short boundary, the effect of noise mostly leads to a redistribution of probabilities. This is what happens in the Mackey–Glass model.

Figure 6 shows the plots of the escape parameter for all dynamical systems, confirming this last expectation as well as the interpretation provided above for the different kind of noise robustness shown by each dynamical system.

In general, we found that noise-induced diffusion among existing sequences represents a serious obstacle to the reconstruction of the noiseless PE. This is especially true for high-dimensional systems, where a large number of sequences are unavoidably visited, even in the absence of noise. Operating with a larger window length m would mitigate the problem (here, most of our simulations, refer to ), but at the cost of having to deal with a much longer time series so as to ensure enough statistics. A better strategy might be to restrict the analysis to the most populated sequences, which are definitely more robust to noise.