Forest Fire Detection via Feature Entropy Guided Neural Network

Abstract

1. Introduction

2. Method

2.1. Cross Entropy Loss Function Guided by Feature Entropy

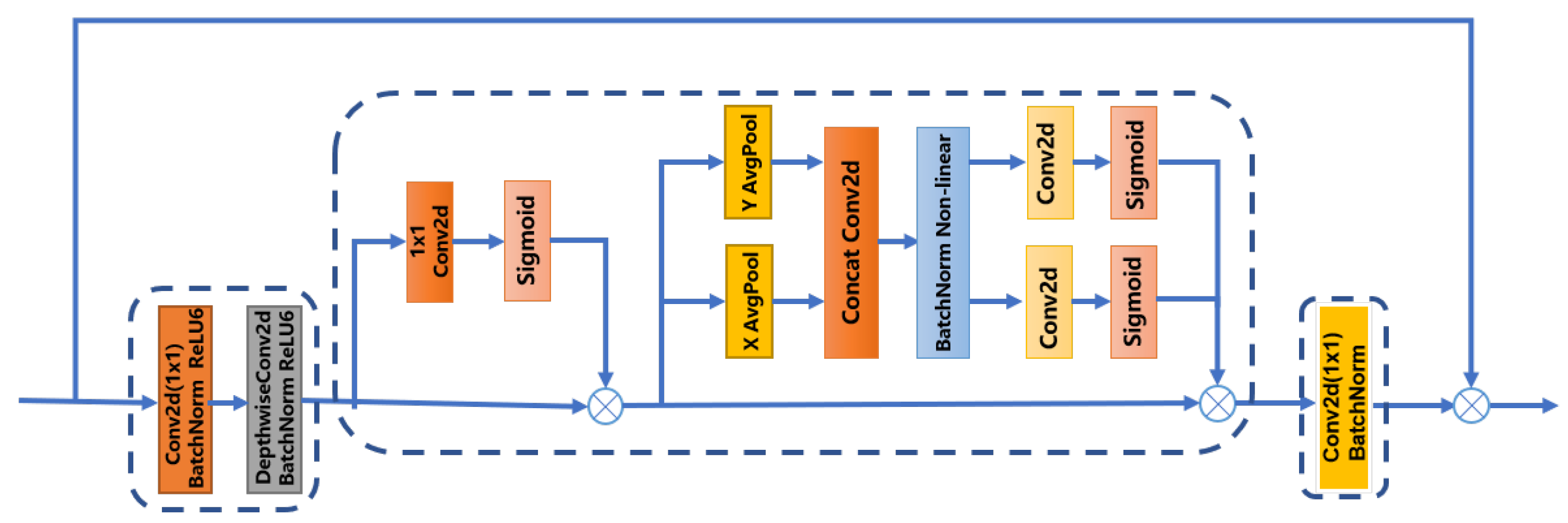

2.2. FireColorNet

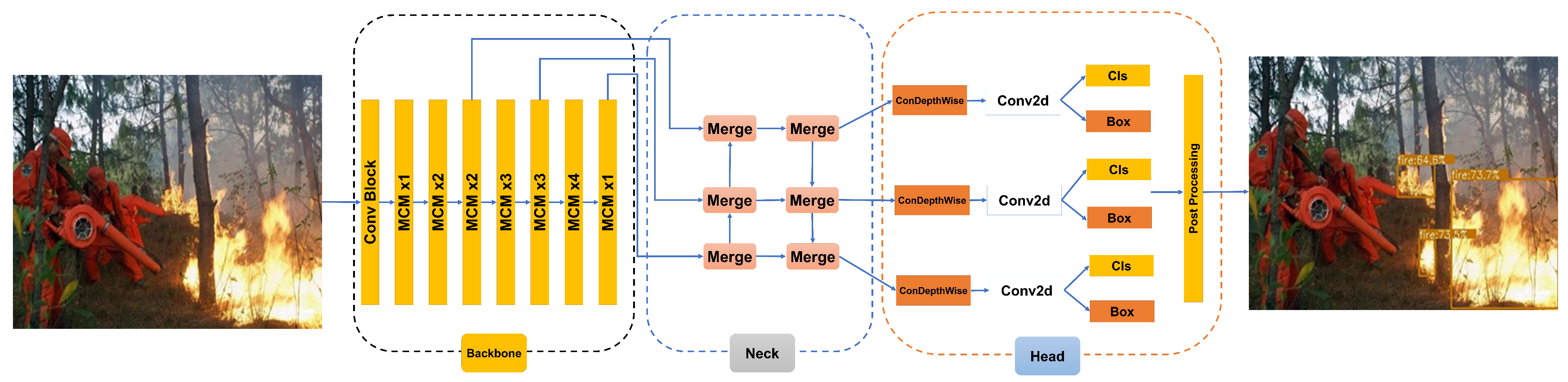

2.3. Forest Fire Detection Algorithm Based on FireColorNet

3. Dataset Preparation

4. Experiments

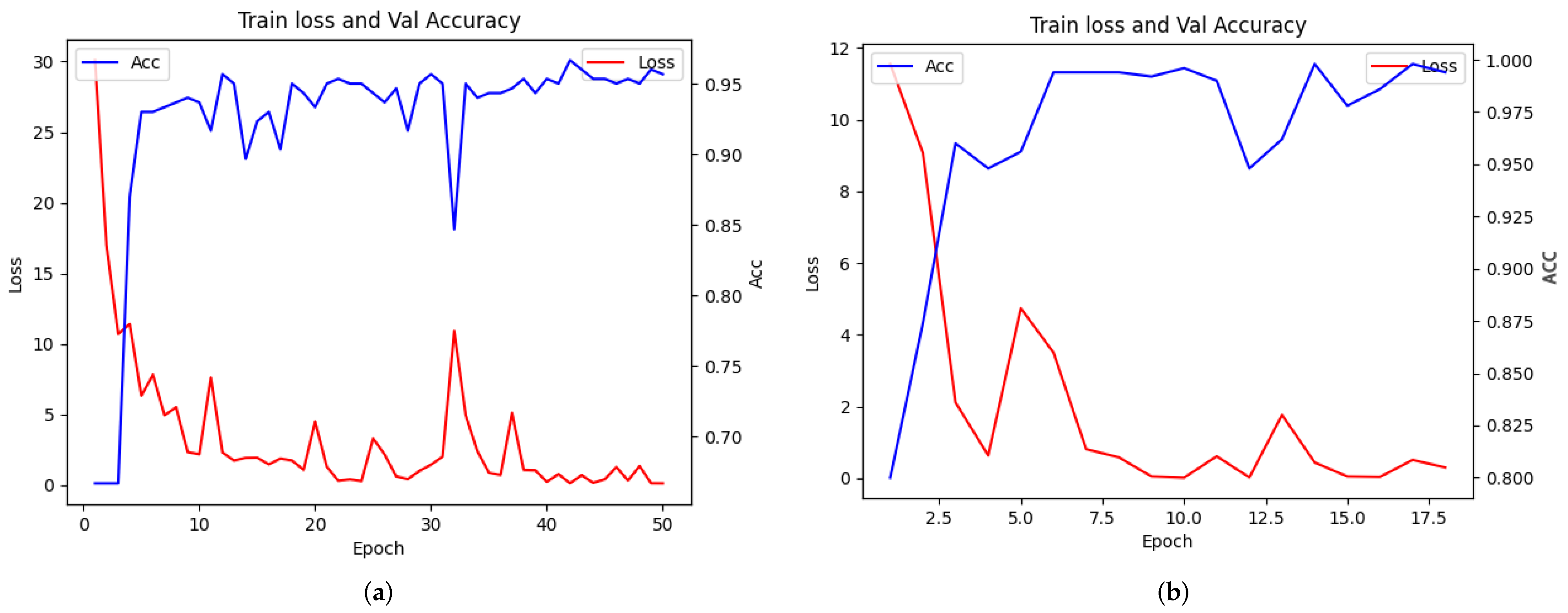

4.1. Experimental Results

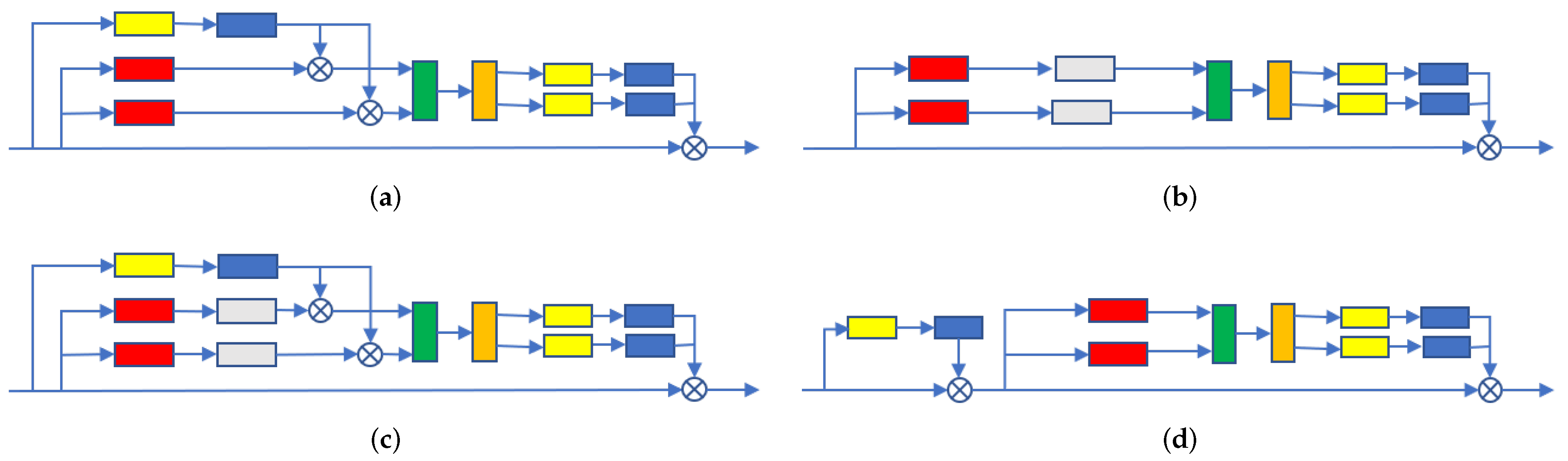

4.2. Ablation Studies

4.3. Comparison with Other Methods

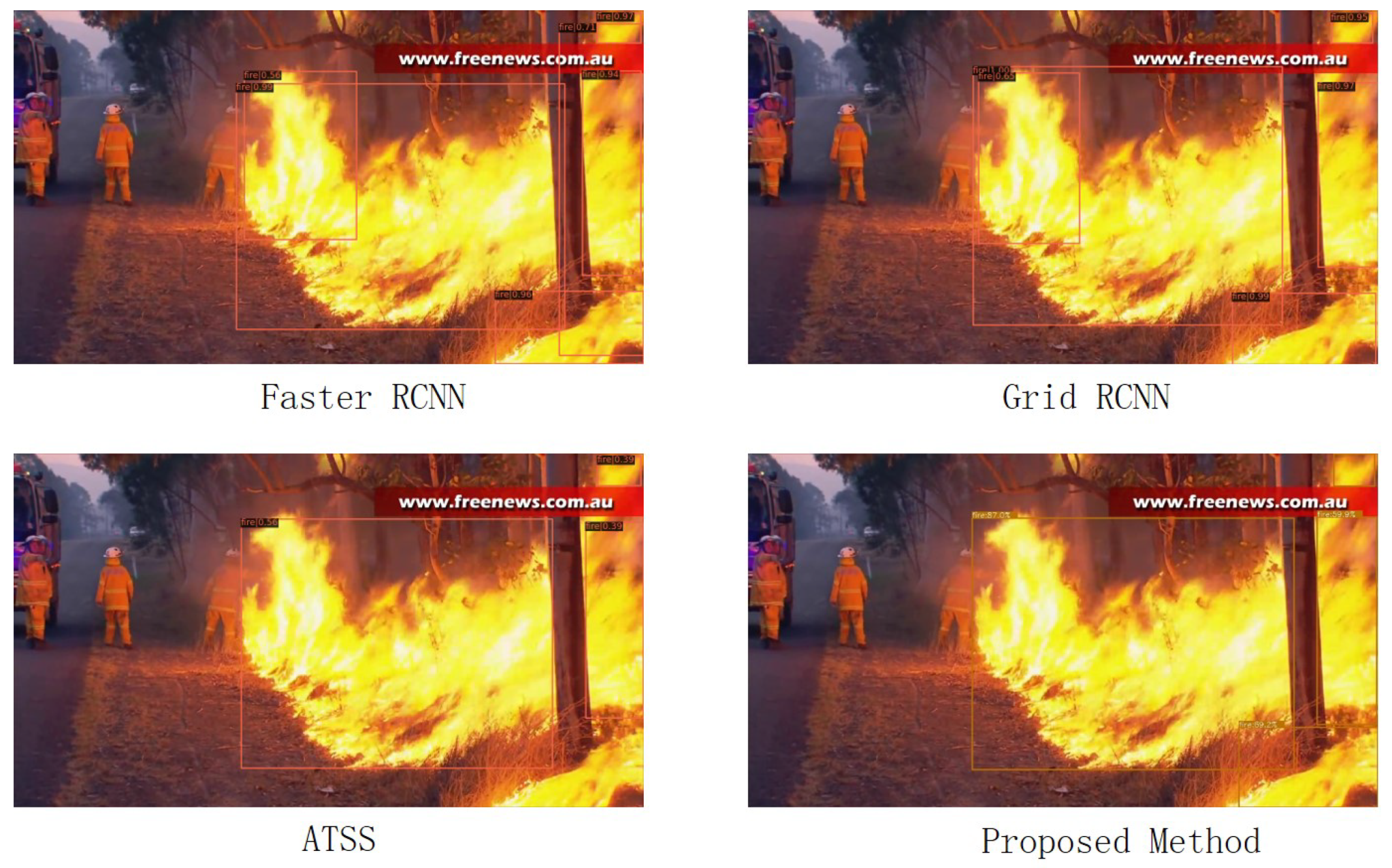

4.4. Visualize Prediction Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Samples | Sample 1 | Sample 2 | Sample 3 | Sample 4 |
|---|---|---|---|---|
| Image information entropy | 15.0934 | 19.5073 | 22.9191 | 22.3601 |

| Settings | AP | AP50 | AP75 |
|---|---|---|---|
| SE/Baseline | 0.422 | 0.781 | 0.409 |
| PA | 0.446 | 0.821 | 0.415 |
| MCM (Ours) | 0.465 | 0.828 | 0.463 |

| Settings | AP | AP50 | AP75 |
|---|---|---|---|
| Variants1/Ours | 0.426 | 0.791 | 0.404 |
| Variants2/Ours | 0.434 | 0.796 | 0.426 |
| Variants3/Ours | 0.426 | 0.767 | 0.426 |
| Variants4/Ours | 0.465 | 0.828 | 0.463 |

| Algorithm | AP | AP50 | AP75 |
|---|---|---|---|
| Yolov3 | 0.407 | 0.767 | 0.413 |
| Yolov5(s) | 0.383 | 0.727 | 0.357 |
| Faster-RCNN | 0.433 | 0.784 | 0.432 |
| Grid R-CNN | 0.434 | 0.781 | 0.420 |
| ATSS | 0.432 | 0.800 | 0.398 |
| Proposed Method | 0.465 | 0.828 | 0.463 |

| Methods | Dataset1 Precision | Dataset1 Recall | Dataset1 Accuracy | Dataset2 Precision | Dataset2 Recall | Dataset2 Accuracy |
|---|---|---|---|---|---|---|
| GoogleNet | 0.8545 | 0.9400 | 0.9267 | 0.6090 | 0.8636 | 0.8833 |
| Modified Vgg16 | 0.8763 | 0.8500 | 0.9100 | 0.6103 | 0.7545 | 0.8771 |
| Modified ResNet50 | 0.8857 | 0.9300 | 0.9367 | 0.6129 | 0.8636 | 0.8848 |
| FireNet | 0.8557 | 0.8300 | 0.8967 | 0.4857 | 0.8416 | 0.8309 |
| Ours | 0.9462 | 0.8800 | 0.9433 | 0.6846 | 0.9273 | 0.9155 |
| Ours (Entropy) | 0.9674 | 0.8900 | 0.9533 | 0.7239 | 0.8818 | 0.9232 |
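The Precision, Recall, and Accuracy columns in the table above are assumed here to follow the standard confusion-matrix definitions for binary fire/no-fire classification. As a minimal sketch (the function name `classification_metrics` and the counts passed to it are hypothetical and are not taken from the paper), the three metrics can be computed as follows:

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Return precision, recall and accuracy from confusion-matrix counts.

    tp: fire images predicted as fire      fp: non-fire images predicted as fire
    fn: fire images predicted as non-fire  tn: non-fire images predicted as non-fire
    """
    total = tp + fp + fn + tn
    return {
        # Share of predicted fire images that really contain fire.
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        # Share of real fire images that were detected.
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
        # Share of all images classified correctly.
        "accuracy": (tp + tn) / total if total else 0.0,
    }


# Hypothetical counts for illustration only (not the authors' confusion matrix).
metrics = classification_metrics(tp=88, fp=5, fn=12, tn=195)
print({name: round(value, 4) for name, value in metrics.items()})
```

These illustrative counts were picked because they round to 0.9462, 0.8800, and 0.9433, the same values as the "Ours" row for Dataset1. The underlying per-class counts are not given in this document, so the match should be read as a consistency check on the definitions rather than as the reported confusion matrix.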

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guan, Z.; Min, F.; He, W.; Fang, W.; Lu, T. Forest Fire Detection via Feature Entropy Guided Neural Network. Entropy 2022, 24, 128. https://doi.org/10.3390/e24010128
