Abstract

Inferring the value of a property of a large stochastic system is a difficult task when the number of samples is insufficient to reliably estimate the probability distribution. The Bayesian estimator of the property of interest requires the knowledge of the prior distribution, and in many situations, it is not clear which prior should be used. Several estimators have been developed so far in which the proposed prior us individually tailored for each property of interest; such is the case, for example, for the entropy, the amount of mutual information, or the correlation between pairs of variables. In this paper, we propose a general framework to select priors that is valid for arbitrary properties. We first demonstrate that only certain aspects of the prior distribution actually affect the inference process. We then expand the sought prior as a linear combination of a one-dimensional family of indexed priors, each of which is obtained through a maximum entropy approach with constrained mean values of the property under study. In many cases of interest, only one or very few components of the expansion turn out to contribute to the Bayesian estimator, so it is often valid to only keep a single component. The relevant component is selected by the data, so no handcrafted priors are required. We test the performance of this approximation with a few paradigmatic examples and show that it performs well in comparison to the ad-hoc methods previously proposed in the literature. Our method highlights the connection between Bayesian inference and equilibrium statistical mechanics, since the most relevant component of the expansion can be argued to be that with the right temperature.

1. Introduction

Systems with a large number of states appear ubiquitously in the era of big data. Often, the number of available observations—which, in this paper are referred to as “samples”—is smaller than the number of states, sometimes considerably smaller. Although in these circumstances estimating the full probability distribution of the system is highly unreliable, here we show that even in the severe undersampled regime, fairly accurate inferences are often still possible. The clue is that, if only a single property of the system needs to be inferred, or maybe just a few properties, the complete description of the probability distribution is unnecessary. Examples of desired properties may be, for instance, the information processing capacity, the correlation between pairs of variables, or the diversity of reachable states in the system.

Let k be the number of available states of a discrete system, and the (unknown) probability distribution that governs the occupation of the states. The number of samples n is assumed to be small compared with the effective number of states , where is the entropy of the system. Still, n is assumed to be large enough so that, occasionally, some of the states are sampled more than once. In mathematical terms, this means that n is at least as large as the square root of the effective number of states; that is, [1]. For even smaller samples, most of the states remain unsampled, and the few states that are sampled are likely to get no more than a single sample. When n reaches the order of , the probability that a small fraction of the states get more than a single sample becomes large [1]. We are interested in estimating a property whose functional form in terms of is known. The naïve approach—which we here discourage—would be to first estimate and then evaluate in the estimated value. This strategy is called the “plug-in” estimator. Although it may work for properties that depend linearly on the probabilities, it is often appallingly biased in nonlinear cases—in particular, for information-related properties that typically bear a logarithmic dependence on . To overcome this problem, some idiosyncratic solutions have emerged during the last decades that are valid for particular properties, such as entropy [2,3,4,5,6] and mutual information [7,8,9].

Here, we develop a principled way to approach this problem for properties that may be arbitrary, as long as they vary smoothly with . The clue relies on the fact that the simplex containing the set of possible -vectors can be foliated by the level surfaces of . If only the value of F matters, estimating the full is excessive; the only inference that needs to be made is about the level surface to which belongs. This is a one-dimensional inference problem and hence is much simpler than the k-dimensional problem of estimating . We here estimate F within a Bayesian framework, using a prior distribution that weighs the different level surfaces according to a MaxEntropy principle.

The main contributions of the paper are as follows:

- -

- A new method to construct Bayesian estimators is proposed;

- -

- The method is applicable to arbitrary quantities ;

- -

- A conceptual framework is provided, in which the one-dimensional nature of the problem is exploited;

- -

- The search of the prior is restricted to the level surfaces of ;

- -

- The Maxentropy principle is employed to capitalize both what we know and what we do not know about the prior;

- -

- The performance of the method is tested in known examples;

- -

- The conceptual connection with equilibrium statistical mechanics is highlighted.

The paper is organized as follows. In the next section, the theoretical Bayesian framework is described in general: firstly, pointing out that only certain aspects of the prior matter in the inference of the property; secondly, using the symmetries of the property and our ignorance about the underlying distribution to constrain the search for the prior; thirdly, by finally constructing the prior with a MaxEntropy principle; and finally, showing how this approach relates to equilibrium statistical mechanics. In the third section, the method is applied to different example properties, including mutual information and entropy. Finally, in the last section, the implications and perspectives of the work are discussed and summarized.

2. Rationale of the Inference Process

Section 2 develops the theoretical underpinnings of the new estimator proposed in this paper.

2.1. A Bayesian Approach to the Inference Problem

In this subsection, we define the estimator to be used and formulate the problem within the Bayesian framework. The search for the prior is established as the main target of the paper.

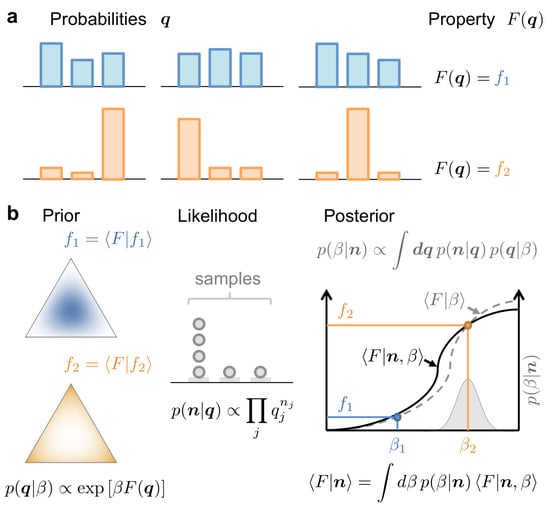

Our goal is to estimate a property of a stochastic system composed of a large number k of states, when we only have access to n samples, with . The probabilities constitute a complete description of the system (see Figure 1).

Figure 1.

Conceptual framework used to estimate the property of a stochastic system with probabilities from a limited number of observations, or samples . (a) Several probabilities can produce the same value of the property. All such -vectors belong to the same level surface of . (b) Left: Example case in which the property has circular level surfaces on the simplex embedded in three-dimensional space. Two members of the family of base functions used to expand the prior are shown, containing the different vectors shown in (a). These two members are solutions of the Maxentropy problem with two different expected values f of the property. Middle: The histogram generated by the sampled data produces a likelihood that selective favors a specific range of surface levels. Right: The most favored f value (or equivalently, value) is the one for which the prior and posterior estimates of the property coincide.

We choose to work with the estimator defined as the expected value of the property when conditioned by the measured data . The average is calculated with the posterior distribution , and the k-dimensional vector has components , with equal to the number of samples in which the system was observed in the ith state. Therefore,

Throughout the paper, all integrals in should be restricted to the space where is defined: the simplex embedded in , or the cartesian product of several simplexes, in the multivariate case. The estimator of Equation (1) minimizes the mean square error of the estimation [10]. Using Bayes’ rule, the posterior can be written in terms of the likelihood and the prior , so that

The likelihood is the only factor that depends on the sampled data , and for discrete states, it is a multinomial distribution, namely

The only factor that still needs to be defined is the prior , and the strategy underlying this choice is the topic of this paper. We want the prior to produce an inductive bias that acknowledges our ignorance about . Such bias will be useful to overcome the scarcity of samples, but it will only be suitable to estimate the specific of the problem at hand and not others. In other words, the prior proposed here is individually tailored for .

2.2. Defining the Prior on the Level Surfaces of the Property

In this subsection, the apparent high-dimensionality of the problem is shown to be illusory. The prior we are searching for, although defined in a high-dimensional simplex, is here shown to affect the result of the inference process only through its integrated value inside each level surface of the quantity . In other words, the variations of inside the level surfaces of are irrelevant since they have no influence on the value of the estimator . These level surfaces can be indexed by a single-dimensional parameter, which will later allow us to drastically simplify the search for the prior.

To show this point, we start by noting that is defined on the simplex embedded in . We introduce a change of variables to perform the integral of Equation (2). Specifically, the first two coordinates of the new variables are chosen to be

and the remaining coordinates may be chosen arbitrarily, as long as the transformation be invertible. In the new variables,

Equation (4) shows that it does not matter how the prior distributes the density inside the manifold obtained by intersecting the level surface f of the property F with a level surface ℓ of the likelihood L. Only the integral of inside has an effect on . Therefore, we opt to consider only priors that are constant inside , since for any prior not constant in , a prior that is constant in exists that produces the same estimation . Our task is therefore to design how the prior changes with f and ℓ. A crucial point is that the level surfaces of vary with the data . Yet, by definition, a prior cannot depend on the measured data. Therefore, we only work with priors that, when written in the -coordinates, only depend on through . In other words, we assume that a function g exists such that . By limiting the search to priors of this type, for each value of f, we impose maximal uncertainty about , as dictated by a maxentropy principle.

As a side remark, we point out that the assumption does not imply that, when written in the -coordinates, the prior must be independent of , since the Jacobian of the transformation of variables may well depend on ℓ. Moreover, the marginal prior may also depend on ℓ, since the level surface in which Equation (4) is calculated depends on . Therefore, is allowed to depend on ℓ, but only due to the geometrical structure that the delta function in restricts the integration region of Equation (4).

2.3. Decomposing the Prior as a Linear Combination of a Family of Base Priors

In this subsection, we exploit the fact that to decompose the prior as a linear combination of a collection of base priors indexed by a single real number f. The decomposition allows us to reduce the search of a prior defined in a high-dimensional simplex to the search of a density defined as a function of f. This step brings about a dramatic simplification, but more importantly, it reduces the relevance of the subjective aspect involved in the selection of priors. We show here that the range of f-values that are relevant to the inference process (and thereby, the base priors that actually matter) is only partially defined by , which is the only element still containing subjective choices. In many applications, the sampled data n will turn out to be the truly defining factor in selecting the base priors that participate in the inference process.

We start to demonstrate these statements by noting that the set of priors for which a function exists such that is equal to all the distributions of the form

that can be obtained by varying . Equation (5) implies that the problem of specifying can be reduced to the problem of specifying , which is a drastically simpler object, given that has dimensions, whereas f has only a single dimension.

We now show that, when the number of samples is sufficiently large, the determination of g becomes unnecessary, since for large k, the measured data often enter into the likelihood in such a way that only a small range of f-values remains with significantly non-zero probability. Therefore, all the g-functions that do not vary drastically within the permitted range give rise to the same estimation. In such cases, the data alone dictate the value of the estimator through the likelihood, making the discussion about priors essentially inconsequential. We now prove these statements. Replacing Equation (5) in (2), the estimator becomes

with

where is the Kullback–Leibler divergence [11] between the distributions and , and the ith component of the vector is . The divergence is always non-negative, and it only vanishes when the two distributions coincide [12]. Therefore, if the number of samples n is sufficiently large, the result of the integral is significantly different from zero only for level surfaces that pass close enough to the sampled frequencies and is maximal for the one that contains the sampled frequencies. The data thereby select the range of f values that are compatible with the observations. The factor n in the exponent of Equation (7) implies that the allowed range of f-values becomes increasingly narrow as the number of samples grows. The crucial point in this reasoning is that for sufficiently narrow ranges, the shape of the prior becomes irrelevant. If the range is much narrower than the typical scales in which varies, for all practical matters, is constant within this range, and has no bearing in the estimation. This is the situation in which we no longer need to bother about the prior.

When that limit is reached, the likelihood becomes a delta distribution, and the only -vector that contributes to a given f-value is . In this extreme case, when is uniform, the estimator converges to the plug-in estimator. Yet, the plug-in estimator is only justified when the likelihood indeed reaches the delta-like behavior, which only happens for . Before this limit, the plug-in estimator completely neglects the width of the peak of the likelihood around the sampled frequencies and thereby the possibility that a whole collection of -vectors in the vicinity of contribute to each f. Allowing for this possibility is important since, in the undersampled regime, there is a large degree of uncertainty about the true that generated the data . By neglecting this uncertainty, the plug-in estimator produces the undesired biases mentioned before. The obvious solution is not to neglect the width of the likelihood. However, if no approximations are made, it is difficult to calculate or even to estimate analytically, given that Equation (7) involves an integral over an arbitrarily shaped manifold.

To overcome this problem, we would like to design another estimator that retains insensitivity to the prior but still gives a chance for a collection of vectors to contribute to each f. The size of this collection is determined by the width of the likelihood; that is, by the total number of samples n, and the obtained frequencies . The averaged contributions of all the vectors that have non-vanishing likelihoods produces an estimated that may be either smaller or larger than the plug-in value. In other words, all the -vectors different from may tend to either increase or decrease F as compared with , and the direction and size of this shift depends on the behavior of around . In what follows, we propose an alternative expansion of the prior different from Equation (5), which is formulated in terms of a parameter that controls the relative weight of the level surfaces that tend to increase vs. those that tend to decrease F.

Using the previous analysis as an inspiration, from now on, we consider a narrower set of priors that is a proper subset of the one defined by Equation (5). A restriction in the set of possible priors does not invalidate the analysis of how the width of the likelihood depends on the sampled data, it only reduces the set from which priors are selected. We begin by proposing the decomposition

for a conveniently chosen family of priors that now need not coincide with but are still assumed to only depend on through the property . In other words, we assume that a family of normalizable functions exists such that

Before continuing, it is important to note that in the new decomposition of Equation (8), the parameter f can no longer be identified as exactly the value of the property in a given level surface, since the delta functions are no longer present to restrict to a single level surface of . Therefore, from now on, f should be regarded as a formal label that parametrizes the set of base functions used in the expansion. Yet, later on, we choose a family of base functions for which there is still a connection between f and , but the connection turns out to be probabilistic (see next subsection).

By inserting Equation (8) in Equation (2), the estimator becomes

where we have introduced the likelihood of the data for each value of the parameter,

With this definition, the estimator reads

where

represents the amount of evidence in favor of each f-value provided by the measured data , and

is the estimation of the property conditional on the measured data and the parameter f.

Equation (10) is homologous to Equation (6) for base priors that are not delta distributions. Using Bayes’ rule (Equation (11)), the evidence can be written in terms of the marginal likelihood of Equation (9). This evidence is defined by an integral in -space that contains the multinomial likelihood embodying the Kullback–Leibler divergence. Therefore, just as before, the data still select a range of f-values; only now, we cannot identify f as an instantiation of F on one specific region. Still, the value of f that maximizes the posterior is the one that makes the largest contribution to the integral in Equation (10). Before, only keeping the optimal f-value yielded to almost the plug-in estimator (except for the effect of ). In the present case, only keeping the optimal f-value means to replace the integral in Equation (10) by the evaluation of at the value of that maximizes . This procedure is henceforth denominated the MAP estimator, for Maximum A Posteriori. With the present decomposition, this procedure does not yield the plug-in estimator, because contains an integral that sweeps through a whole range of level surfaces. For the family of base priors selected in the following subsection, the MAP estimator performs substantially better than the plug-in estimator and often also better than custom-made estimators designed for specific properties. In those cases in which the shape of can be argued to play no relevant role, the MAP estimator can be replaced by an empirical Bayes estimator, in which the selected value maximizes the marginal likelihood instead of the marginal posterior .

2.4. A Maxentropy Strategy to Select the Base of Functions to Expand the Prior

In this subsection, we select the family of distributions that serve as a base to expand the prior , as dictated by Equation (8). To do so, we employ a Maxentropy principle, maximizing the uncertainty about under the constraint that the expected value of be f (Equation (13)).

Before, when the expansion in delta distributions was used, each element of the base was associated with a single f value, equal to the property F evaluated on the corresponding level surface. A natural relaxation of this condition, while still fulfilling the requirement that the elements have the same level surfaces as , is to demand that f be the expected value of , when the average is weighted by . If, after imposing this requirement, we insist that the base functions have no additional structure, then the shape of can be derived from the Maxentropy principle [13,14], in which the differential entropy is maximized, conditioned to the restriction

The solution of this maximization problem is

where the hyperparameter is a function of f; that is, of the expected value of the property. The correspondence between each f-value and each -value implies that both these parameters constitute valid tags to designate a member of the base. For this reason, in what follows, we use the two parametrizations interchangeably, depending on what we want to stress. We warn the reader that we pass from one to the other, tagging the elements of the base equivalently as or as , understanding that there is a one-to-one mapping between f and . In the nomenclature of Amari [15,16], the parameter appearing linearly in the exponent of the distribution (for us, ) is referred to as an exponential system of coordinates of the space of parameters. When f is used, the coordinates are called mixed. In equilibrium statistical mechanics, for example, is often proportional to the negative of the inverse of the temperature, whereas f is proportional to the mean energy of a state—and of course, the two are related.

In the exponential family of Equation (14), all level surfaces of contribute to each , but some are more relevant than others. If is large and positive, is dominated by the level surface with maximal and rapidly dies out when we depart from it. The mean value of weighted by this is equal to the maximum of F on the simplex. If , all level surfaces contribute uniformly. If is large and negative, the level surface with minimal has maximal relevance, and the mean value of is equal to the minimum of F on the simplex. Therefore, operates as a tuning knob that raises or lowers the relevance of different level surfaces, ranking them by the value of F. As happened when expanding the prior in a base of delta functions, the mean value of F shifts from its minimum to its maximum by adjusting the parameter. However, in contrast with the delta case, the expansion in exponential functions allows each element of the base to spread out to different level surfaces, allowing a whole diversity of surfaces to contribute to each .

2.5. The Estimation of the Property for Each Member of the Base

In this subsection, we show how the problem of estimating for each fixed value is formally equivalent to obtaining the mean value of a certain physical quantity in statistical mechanics. In this analogy, the data n of the inference problem produce exponential distortions of the partition function of the Statistical Mechanical formulation.

The posterior distribution conditioned on a specific member of the base is

Replacing this expression in Equation (12)

where the posterior partition function is calculated with the product of the prior distribution and the likelihood . Just as in statistical mechanics [13], this integral is equal to

Moreover, following the same reasoning used in statistical mechanics, the variance of the estimation reads

Importantly, in several applications, the partition function can be calculated analytically. In those cases where an analytical treatment is impossible, the shape of can often be well approximated by one-dimensional numerical integrals, which still enables the computation of .

2.6. Considering Different Values

In this subsection, we demonstrate that out of all the possible -values, the one that has maximal influence on the estimation process (or equivalently, the one that tags the most relevant base prior) depends on two independent factors. The first factor depends on the prior density , comprising all the subjective elements of the inference process. The second factor is that for which the prior and posterior estimations of coincide and is entirely determined by the sampled data n. This result allows us to determine the role played by the different factors entering in the inference process.

Equation (10) states that the full estimation results from integrating in , each contribution weighted by the evidence . Thus, we now analyze this evidence.

When is given by Equation (14), the evidence of Equation (11) becomes

where the constant of proportionality does not depend on .

The first term of Equation (17) contains the prior expectations on . The second one is the marginal likelihood and describes the way in which the data favor a specific range of level surfaces. It is equal to the ratio of the posterior and the prior partition functions. The value that makes the largest contribution to this ratio, henceforth called , can be found by taking the derivative of the logarithm of the ratio and setting it to zero,

Therefore, is the parameter for which the prior and posterior estimations of the property coincide. This idea is illustrated in the last panel in Figure 1.

3. Applications to Different Properties

In this section, we apply the method to estimate the marginal probability of a bivariate distribution (Section 3.1), the amount of mutual information (Section 3.2), and the entropy (Section 3.3). All these quantities are examples of properties that have been extensively discussed in the literature [3,7,17,18,19]. Yet, as far as we know, all the methods developed so far—at least, those that perform decently in the severe undersampled regime—were idiosynchratically tailored to each specific property, whereas the method proposed here is general. In this subsection, we compare its performance to the more handcrafted approaches.

3.1. Marginal Probability

As a first example, we consider a system whose states are tagged by two labels: x, with many equiprobable states, and , with only two. The property we are interested in estimating is the marginal probability of , denoted as , defined as

were is the number of x-values. Since we assume all x-values have the same probability, here, represents the collection of conditional probabilities . This case constitutes a toy example, since the plug-in estimator is unbiased and efficient, irrespective of the value of . Still, we discuss this case to exemplify the procedure described in the previous section.

According to this procedure, the base functions used to expand the prior are solutions of a Maxentropy problem conditioned to have , which in exponential coordinates reads (Equation (14)),

For each , this solution factorizes into independent terms. The exponential function favors either small or large values of , depending on the sign of . As the likelihood also factorizes , so does the posterior,

where is the confluent hypergeometric function [20]. Then, the partition function corresponds to . The prior partition function can always be obtained from by setting .

In Equation (20), the exponential factor is inherited from the prior . In general, in the method proposed here, each biases the estimation towards the corresponding f value in order to get an unbiased estimate of the property when all the values are considered.

With the partition function, we derive the estimate for the property of each member of the family as

The parameter that makes a maximal contribution to the estimation is the one for which , as established in Equation (18). This equations is often difficult to solve analytically. However, in the present case, it can be easily solved in the extreme undersampled regime () with no coincidences (), where there is a fraction of x states with and a fraction of states with . This condition translates into

where . Taking into account the contiguous relations of the confluent hypergeometric function, this equation leads to , which is the naïve, plug-in, unbiased estimator. Usually for other properties, the plug-in estimator provides quite poor results in the severe undersampled regime, and there is no clear path to a low bias estimator. We believe that the proposed approach, focused on priors defined on the level surfaces, can be useful in these cases. In the two following subsections, we analyze other more challenging properties such as the mutual information and the entropy.

3.2. Mutual Information

The estimation of the amount of mutual information in a severely undersampled discrete system is a difficult task [2,21,22,23,24,25]. Several of the approaches developed previously [3,7] are based on separate estimators for the total and conditional entropies. Taking a different path, in a previous publication, we also developed a method that was focused on the dispersion of the conditional probabilities in relation to the marginal [8]. The performance was good, but still, the method was only applicable to mutual information.

In order to test the new method, we work with the same system introduced in the previous subsection. We assume , and all x-values have with equal probability . The two states for the label are assumed to have equal marginal probabilities, (generalizations are explored in [8]). For this system, we want to find the mutual information , namely

As in the previous example, the property depends on the set of conditional probabilities .

The prior of Equation (14) reads

Again, the prior factorizes. The individual factors are

This prior resembles the beta distribution proposed in [8] but has non-constant exponents. Each posterior reads

This functional form does not allow us to continue the problem in an analytically tractable fashion. Yet, the factorization makes the problem uni-dimensional, so it is easy to calculate the partition function for different pairs of and the corresponding expectation values. Indeed, the partition function factorizes as .

The estimated value of the amount of mutual information of each -value—the member’s guess— can be obtained with Equation (16), and the -value that maximizes the marginal likelihood—the member’s evidence— from Equation (18). Interestingly, not all x-states contribute to the marginal likelihood,

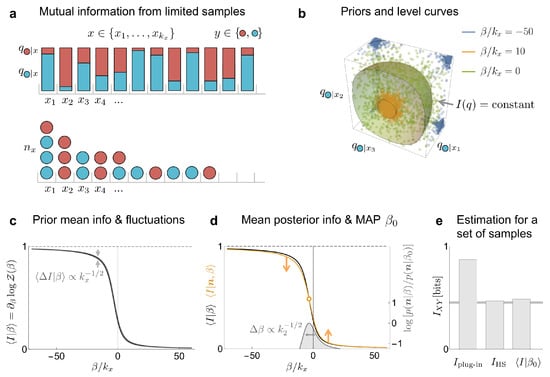

since the only non-vanishing terms are those in which the partition function associated to the x state is not proportional to the prior partition function; that is, varies with . For mutual information, this condition is only met by x states with coincidences (). In fact, only when coincidences begin to appear () can the sampled data start to provide evidence about a non-vanishing mutual information. No such condition was found in the previous section, since there, every sampled x-state contributed to the estimation of the marginal probability. This discrepancy stems from the fact that, while the marginal probability depends on the first moment of the set of conditional probabilities, the mutual information relates to the second moment, or the amount of dispersion, of this set [8]. We show a toy example that illustrates this approach in Figure 2. In this example, the proposed method performs as well as some of the best known estimators (Figure 2e) designed for such undersampled regime.

Figure 2.

Toy example used to illustrate the estimation of the amount of mutual information in the same system as in Section 3.1. (a) The system is governed by a bivariate probability distribution, with states characterized by two labels: x and y. There are many x states, , and all are equally probable; that is, . The variable y is binary, and its two values are depicted as red and blue, corresponding to 1 and 0, respectively. The conditional probabilities were sampled from a symmetric beta distribution with parameter . The goal is to estimate the mutual information from n samples, with . (b) The level surfaces of the mutual information, as well as some sampled -values, are displayed in a three-dimensional subspace of the full -space, for different values of the hyperparameter . (c) Prior mean mutual information as a function of the scaled hyperparameter , and its fluctuations for . (d) Prior and posterior mean mutual information as a function of the scaled hyperparameter for samples. The intersection of these curves corresponds to the MAP estimation . In gray, the posterior marginal evidence for the hyperparameter, whose width decreases as the square root of the number of states with at least two samples, . (e) Comparison of the estimation of mutual information between different methods for the considered set of samples ( is the estimator from [8]). The horizontal line corresponds to the true value of the mutual information.

3.3. Entropy

Finally, we consider the estimation of entropy, for which multiple estimators have been proposed in the past [1,3,4,5,6,26,27,28]. The entropy is defined as

Here, we use the natural logarithm for analytical convenience. We assume that the number of accessible states is unknown and that the number of effective states is quite large, so that .

Once more, we expand the prior in a family whose members are tagged with and use Equation (14) to express each member of the family as

where belongs to the simplex . We can compare this prior to that used by NSB [3], a symmetric Dirichlet . Although there are some similarities, the prior in Equation (29) has non-constant exponents, which hamper the analytical tractability. The posterior reads

To calculate the member’s estimation and the marginal likelihood , the partition function is needed.

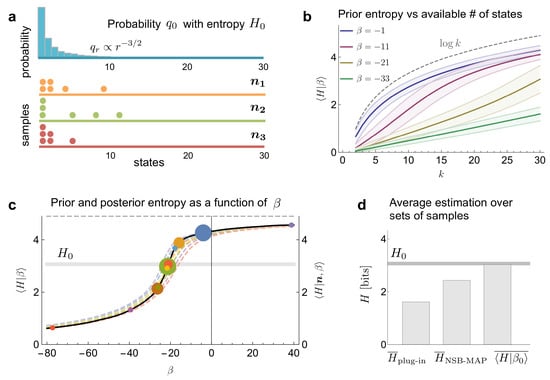

We have found no evident way to solve the partition function analytically. Yet, its shape can be obtained by numerically integrating the partial convolutions that the delta over the simplex implies. With this result, we were able to obtain the prior and posterior estimates of the entropy, as well as the optimal (Figure 3c). The MAP estimator of the entropy obtained with this procedure, even if numerical, was more accurate than the results derived from all previous methods we know of (Figure 3d).

Figure 3.

Entropy estimation in a toy example. (a) A distribution has ranked probabilities that decrease with a power law () within a finite number of states (). Distributions such as these represent a challenge for the estimation of entropy. Three possible sets of samples are displayed. (b) Prior mean entropy, plus/minus a standard deviation, as a function of the available number of states k for several values of the hyperparameter . (c) Prior and posterior mean entropy as function of for the different possible sets of multiplicities that can be obtained from samples (three examples shown in panel a, with matching colors). The intersections between prior and posterior mean entropy (MAP estimation) are marked with circles. The size of the circles is proportional to the likelihood of the corresponding multiplicity (a minimum size is imposed, for visibility). (d) Comparison of the average estimation of entropy between different methods over all the multiplicity sets (discarding the set with no coincidences, and the set with all samples in one state), weighting according to their likelihood. The horizontal line corresponds to the true value of the entropy.

4. Discussion

In this paper, we have proposed a novel Bayesian estimator to make an inference about a one-dimensional property of a high-dimensional system. Our work focused on the selection of the prior. The method takes advantage of the drastic reduction of dimensionality induced by the one-dimensional nature of the property of interest and employs a Maxentropy argument to eliminate the remaining indeterminate factors, thereby explicitly exploiting both what we know and what we do not know about the system. The approach is general in the sense that it can be applied to arbitrary properties and has excellent performance when compared to previous methods. Here, we discuss and summarize the main points of the paper.

Conditions of Validity

When inferring the value of properties , three sampling regimes are relevant. If the number of samples n is significantly smaller than , the sample is likely to contain no coincidences [1], and in this regime, no inference is possible. This study becomes useful when , but still, the order of magnitude of n is not significantly larger than . For a still larger n, the estimation of the probabilities begins to be feasible, so it begins to make sense to consider the plug-in estimator.

Identifying the Factors That Matter When Searching for the Prior

The Bayesian approach is argued to be the only rational way to infer the value of an unknown property. Yet, the success of Bayesian inference relies on an adequate choice of the prior. If an unjustified prior is used, only because there are no evident reasons to choose a specific prior, the result of the inference is not guaranteed to make sense. It has been argued that more than a drawback of the Bayesian approach, this sensitivity to the prior stresses the fact that it is impossible to make inference without assumptions [29]. Yet, it is often the case that selecting a prior is difficult, and this is problematic when the result is too sensitive to the choice, which is often the case in the severe undersampled regime. Without intending to diminish the relevance of priors, in this paper, we have shown that not all aspects of the prior distribution are relevant, and thereby we hope to focus the efforts of the selection process on the relevant aspects. For example, we have proved that all the modulations of the prior within the intersection between a level surface of the property and a level surface of the likelihood bear no consequences on the result of the inference. This observation, and the understanding that the prior distribution cannot depend explicitly on the sampled data, reduce the search of a high-dimensional prior to a one-dimensional prior . Moreover, in many circumstances, even the detailed definition of may be more than required, although this statement only holds when the sampled data contain a non-negligible fraction of coincidences. When this condition holds, the data themselves select the range of f-values that actually contribute to the estimation. Therefore, only the variations of the prior distribution within this range actually matter.

The Core of the Approach: Decomposing the Prior in a Conveniently Chosen Base of Priors

To make this selective role of the data explicit, and at the same time to be able to get analytical results, in this paper, we proposed the expansion of the prior distribution as a linear combination of base prior functions, each of which was required to have a different expected value of the property under study. By imposing no additional structure on the base priors (this requisite was instantiated by Maxentropy), the distributions of the base were derived to depend exponentially on the property under study. The most relevant element of the base (at least, inasmuchas the relevance was dictated by the data and not by ) was shown to be the one for which the prior and posterior estimation of the property coincided (Equation (18)). This result is similar to the equality between the prior and posterior mean energy reported in the framework of statistical inference [30], and it relates to a scenario of parameter mismatch, as well as to the algorithm of expectation maximisation [31]. Even if the ratio of partition functions sometimes cannot be calculated analytically, the goal of this paper is to reveal the formal similarity between the two problems and thereby to prompt the reader to consider the battery of numerical algorithms that have been developed in expectation maximization to solve the type of one-dimensional problem posed here.

Assessing the Effectiveness of the Method

In Section 3, we tested the performance of the method for three well-known properties, for which many estimators exist. Even though our method is general, it accomplished good results when compared to those produced by other methods specifically designed for each individual property. Interestingly, the performance was good even using a single -value; namely, the one for which the marginal likelihood was maximal, without needing to integrate in , and therefore, without needing to define the prior . One fortunate ingredient was given by the fact that in those examples, as well as in many cases of interest, the property to be estimated was additive and symmetric in the components . Properties with these characteristics can be written as , for some function . When the effective number of states grows, the constraint imposed by the normalization condition becomes increasingly irrelevant, so the sampled can be assumed to be fairly independent of one another. In this case, the central limit theorem implies that the relative fluctuations of the property decay as the inverse of the square root of the effective number of states. Therefore, each member of the base makes a rather definite posterior guess with minimal fluctuations, implying that even though the states spread all over the simplex, the posterior property is sharply defined within the state, as happened with the expansion in delta-like priors.

Future Perspectives

There are several directions in which this work can be continued. From the strictly theoretical point of view, in Section 2.3, we argued that the optimal decomposition makes use of prior base distributions, the density of which is compressed inside a level surface, and there, the density is constant (Equation (5)). This choice, however, makes the obtainment of the partition function in analytical form unfeasible, since the integrals that need to be calculated are typically intractable. Therefore, we have opted to expand the prior in a base composed of distributions of fixed mean value. Future work should either demonstrate the optimality of this choice or suggest other possibilities.

It is also important to make a more systematic characterization of the relevant statistics in the data. For example, here, we have shown that the estimation of mutual information is only sensitive to states that have been sampled more than once. What is the relative effect of states sampled times? Which are the relevant statistics determining the estimation of other properties different from mutual information? More importantly, are there thresholds in the amount of sampled data for the possibility to make inference at all [30]? How do these thresholds depend on the property to be estimated?

From a more practical perspective, the excellent performance obtained for estimations of entropy and mutual information should also be evaluated in broader conditions; for example, for non-uniform marginals, or for sets with arbitrary cardinality. Moreover, the method should also be applied to a broader collection of properties, such as the estimation of Pearson correlation coefficients, slopes in linear regression, skewness, kurtosis, conditional mutual information [12], transfer entropy [32], etc.

Conclusions

In summary, the method proposed here has the following attractive properties:

- (a)

- It offers a general framework to select priors that is valid for arbitrary properties ;

- (b)

- It performs well in the estimation of well-known quantities, as marginal distributions, mutual information and entropy, with performance comparable or even better than previous estimators individually tailored for each property;

- (c)

- It reveals the formal similarity between inference problems and equilibrium statistical mechanics. In particular, the parameter is analogous to the inverse temperature of statistical physics, and the two alternative expansions of the prior (in a delta-like or an exponential-like base) correspond to the microcanonical and the canonical ensembles, respectively.

Author Contributions

Conceptualization, D.G.H. and I.S.; methodology, D.G.H. and I.S., software, D.G.H.; validation, D.G.H.; formal analysis, D.G.H. and I.S.; writing, D.G.H. and I.S.; visualization, D.G.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Universidad Nacional de Cuyo (Grant 06 / C589) and Agencia Nacional de Promoción Científica y Tecnológica (Grants PICT Raíces 2016 1004 and PICT 2019 2113).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The running costs of this study were covered by Consejo Nacional de Investigaciones Científicas y Técnicas and Comisión Nacional de Energía Atómica of Argentina.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Nemenman, I. Coincidences and estimation of entropies of random variables with large cardinalities. Entropy 2011, 13, 2013–2023. [Google Scholar] [CrossRef] [Green Version]

- Kraskov, A.; Stögbauer, H.; Grassberger, P. Estimating mutual information. Phys. Rev. E 2004, 69, 066138. [Google Scholar] [CrossRef] [Green Version]

- Nemenman, I.; Bialek, W.; van Steveninck, R.d.R. Entropy and information in neural spike trains: Progress on the sampling problem. Phys. Rev. E 2004, 69, 056111. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Archer, E.; Park, I.M.; Pillow, J.W. Bayesian entropy estimation for countable discrete distributions. J. Mach. Learn. Res. 2014, 15, 2833–2868. [Google Scholar]

- Chao, A.; Wang, Y.; Jost, L. Entropy and the species accumulation curve: A novel entropy estimator via discovery rates of new species. Methods Ecol. Evol. 2013, 4, 1091–1100. [Google Scholar] [CrossRef]

- Grassberger, P. Entropy estimates from insufficient samplings. arXiv 2003, arXiv:physics/0307138. [Google Scholar]

- Archer, E.; Park, I.M.; Pillow, J.W. Bayesian and quasi-Bayesian estimators for mutual information from discrete data. Entropy 2013, 15, 1738–1755. [Google Scholar] [CrossRef]

- Hernández, D.G.; Samengo, I. Estimating the Mutual Information between Two Discrete, Asymmetric Variables with Limited Samples. Entropy 2019, 21, 623. [Google Scholar] [CrossRef] [Green Version]

- Contreras-Reyes, J.E. Mutual information matrix based on asymmetric Shannon entropy for nonlinear interactions of time series. Nonlinear Dyn. 2021, 104, 3913–3924. [Google Scholar] [CrossRef]

- Lehmann, E.L.; Casella, G. Theory of Point Estimation; Springer: Berlin/Heidelberg, Germany, 1998. [Google Scholar]

- Kullback, S.; Leibler, R.A. On information and sufficiency. Ann. Math. Stat. 1951, 22, 79–86. [Google Scholar] [CrossRef]

- Cover, T.M.; Thomas, J.A. Elements of Information Theory; John Wiley & Sons: Hoboken, NJ, USA, 2012. [Google Scholar]

- Jaynes, E.T. Information Theory and Statistical Mechanics. Phys. Rev. 1957, 108, 171–190. [Google Scholar] [CrossRef]

- Jaynes, E.T. Information Theory and Statistical Mechanics II. Phys. Rev. 1957, 106, 620–630. [Google Scholar] [CrossRef]

- Amari, S.I.; Hiroshi, N. Methods of Information Geometry; Oxford University Press: Oxford, UK, 2000. [Google Scholar]

- Amari, S.I. Information Geometry on Hierarchy of Probability Distributions. IEEE Trans. Inf. Theory 2001, 47, 1701–1711. [Google Scholar] [CrossRef] [Green Version]

- Panzeri, S.; Treves, A. Analytical estimates of limited sampling biases in different information measures. Netw. Comput. Neural Syst. 1996, 7, 87. [Google Scholar] [CrossRef] [PubMed]

- Samengo, I. Estimating probabilities from experimental frequencies. Phys. Rev. E 2002, 65, 046124. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Paninski, L. Estimation of entropy and mutual information. Neural Comput. 2003, 15, 1191–1253. [Google Scholar] [CrossRef] [Green Version]

- Abramowitz, M.; Stegun, I.A. Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables; Dover Publications: New York, NY, USA, 1965. [Google Scholar]

- Montemurro, M.A.; Senatore, R.; Panzeri, S. Tight data-robust bounds to mutual information combining shuffling and model selection techniques. Neural Comput. 2007, 19, 2913–2957. [Google Scholar] [CrossRef]

- Kolchinsky, A.; Tracey, B.D. Estimating mixture entropy with pairwise distances. Entropy 2017, 19, 361. [Google Scholar] [CrossRef] [Green Version]

- Belghazi, M.I.; Baratin, A.; Rajeshwar, S.; Ozair, S.; Bengio, Y.; Courville, A.; Hjelm, D. Mutual information neural estimation. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 531–540. [Google Scholar]

- Safaai, H.; Onken, A.; Harvey, C.D.; Panzeri, S. Information estimation using nonparametric copulas. Phys. Rev. E 2018, 98, 053302. [Google Scholar] [CrossRef] [Green Version]

- Holmes, C.M.; Nemenman, I. Estimation of mutual information for real-valued data with error bars and controlled bias. Phys. Rev. E 2019, 100, 022404. [Google Scholar] [CrossRef] [Green Version]

- Miller, G. Note on the bias of information estimates. In Information Theory in Psychology: Problems and Methods; Free Press: Glencoe, IL, USA, 1955. [Google Scholar]

- Chao, A.; Shen, T.J. Nonparametric estimation of Shannon’s index of diversity when there are unseen species in sample. Environ. Ecol. Stat. 2003, 10, 429–443. [Google Scholar] [CrossRef]

- Berry II, M.J.; Tkačik, G.; Dubuis, J.; Marre, O.; da Silveira, R.A. A simple method for estimating the entropy of neural activity. J. Stat. Mech. Theory Exp. 2013, 2013, P03015. [Google Scholar] [CrossRef]

- MacKay, D. Information Theory, Inference and Learning Algorithms; Cambridge University Press: Cambridge, UK, 2003. [Google Scholar]

- Zdeborová, L.; Krzakala, F. Statistical physics of inference: Thresholds and algorithms. Adv. Phys. 2016, 65, 453–552. [Google Scholar] [CrossRef]

- Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. Ser. B 1977, 39, 1–22. [Google Scholar]

- Staniek, M.; Lehnertz, K. Symbolic transfer entropy. Phys. Rev. Lett. 2008, 100, 158101. [Google Scholar] [CrossRef] [PubMed]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).