2. Multivariate Gaussian Distributions

In the classification of large sets of digital data objects, a common practical choice is to represent individual features numerically by multivariate Gaussian distributions, which have the maximal entropy property among distributions with a given mean vector and covariance matrix. As described below, the space of such multivariate Gaussian probability density functions then carries an information metric, with which we can retrieve all objects whose given feature is near to that of a chosen object.

The $k$-variate Gaussian distributions have the parameter space

$$\Theta=\left\{(\mu,\Sigma)\;\middle|\;\mu\in\mathbb{R}^{k},\ \Sigma\in\mathbb{R}^{k\times k},\ \Sigma=\Sigma^{T}\succ 0\right\}$$

with probability density functions $f(x;\mu,\Sigma)$ given by:

$$f(x;\mu,\Sigma)=\frac{1}{\sqrt{(2\pi)^{k}\det\Sigma}}\exp\left(-\frac{1}{2}(x-\mu)^{T}\Sigma^{-1}(x-\mu)\right)\tag{1}$$

where $x\in\mathbb{R}^{k}$ is a possible value for the random variable, $\mu$ a $k$-dimensional mean vector, and $\Sigma$ is the $k\times k$ positive definite symmetric covariance matrix, for features with $k$-dimensional representation [4]. In such cases the parameters are obtained by maximum likelihood estimation, as was the case for face recognition applications [3]. The Riemannian manifold of the family of $k$-variate Gaussians for a given $k$ is well understood through information geometric study using the Fisher information metric. For an introduction to information geometry and a range of applications, see [5,6,7].
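As a minimal sketch (assuming NumPy; the helper names `gaussian_pdf` and `fit_gaussian_mle` are hypothetical), the density (1) and the maximum likelihood estimates of its parameters from an $(n, k)$ array of samples can be computed as follows. Note that the maximum likelihood covariance divides by $n$, not by $n-1$.

```python
import numpy as np

def gaussian_pdf(x, mu, sigma):
    """Evaluate the k-variate Gaussian density (1) at a point x."""
    x = np.asarray(x, dtype=float)
    mu = np.asarray(mu, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    k = mu.shape[0]
    diff = x - mu
    # Solve Sigma z = diff rather than forming Sigma^{-1} explicitly.
    maha_sq = diff @ np.linalg.solve(sigma, diff)
    norm_const = np.sqrt((2.0 * np.pi) ** k * np.linalg.det(sigma))
    return float(np.exp(-0.5 * maha_sq) / norm_const)

def fit_gaussian_mle(samples):
    """Maximum likelihood estimates (mu, Sigma) from an (n, k) array of samples."""
    samples = np.asarray(samples, dtype=float)
    mu = samples.mean(axis=0)
    centred = samples - mu
    # The MLE normalises by n, not n - 1.
    sigma = centred.T @ centred / samples.shape[0]
    return mu, sigma
```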

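The Fisher information metric is a Riemannian metric defined on a smooth statistical manifold whose points are the probability measures given by a parametrized family of probability density functions. The Fisher metric determines the geodesic distance between points in this Riemannian manifold. Given a statistical manifold with coordinates $\theta=(\theta_{1},\ldots,\theta_{n})$ and a probability density function $f(x;\theta)$ as a function of $\theta$, the Fisher information metric is defined as:

$$g_{ij}(\theta)=\int\frac{\partial\log f(x;\theta)}{\partial\theta_{i}}\,\frac{\partial\log f(x;\theta)}{\partial\theta_{j}}\,f(x;\theta)\,dx\tag{2}$$

which can be understood as the infinitesimal form of the relative entropy, that is, of the Kullback-Leibler divergence [6,7]. However, a closed-form solution for the Fisher information distance between arbitrary $k$-variate Gaussian distributions is still unknown [8]. The entropy of the $k$-variate Gaussian (1) is maximal among all distributions with a given covariance $\Sigma$ and mean $\mu$, and it is independent of translations of the mean:

$$H(f)=\frac{1}{2}\log\left((2\pi e)^{k}\det\Sigma\right)\tag{3}$$

The natural norm on mean vectors is

$$\|\mu\|=\sqrt{\mu^{T}\mu}\tag{4}$$

and the eigenvalues $\{\lambda_{j}\}_{j=1}^{k}$ of $\Sigma$ yield a norm on covariances:

$$\|\Sigma\|=\sqrt{\sum_{j=1}^{k}\lambda_{j}^{2}}\tag{5}$$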
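To make the definition of the Fisher metric concrete, the sketch below (hypothetical helper names, assuming NumPy) evaluates the integral numerically for a univariate Gaussian in the coordinates $(\mu,\sigma)$, where the exact result is $\operatorname{diag}(1/\sigma^{2},\,2/\sigma^{2})$. It also evaluates the entropy $\frac{1}{2}\log\left((2\pi e)^{k}\det\Sigma\right)$, which involves only $\Sigma$ and is therefore unaffected by translating the mean.

```python
import numpy as np

def fisher_information_1d(mu, sigma, half_width=12.0, n=200001):
    """Approximate the Fisher matrix of N(mu, sigma^2) in coordinates (mu, sigma)."""
    x = np.linspace(mu - half_width * sigma, mu + half_width * sigma, n)
    dx = x[1] - x[0]
    f = np.exp(-0.5 * ((x - mu) / sigma) ** 2) / (np.sqrt(2.0 * np.pi) * sigma)
    # Partial derivatives of log f with respect to mu and sigma (the score functions).
    score_mu = (x - mu) / sigma ** 2
    score_sigma = -1.0 / sigma + (x - mu) ** 2 / sigma ** 3
    scores = (score_mu, score_sigma)
    g = np.empty((2, 2))
    for i in range(2):
        for j in range(2):
            # Riemann sum; the integrand is negligible beyond +-12 standard deviations.
            g[i, j] = np.sum(scores[i] * scores[j] * f) * dx
    return g

def gaussian_entropy(sigma):
    """Differential entropy in nats, 0.5 * log((2*pi*e)^k * det(Sigma)); the mean plays no role."""
    sigma = np.asarray(sigma, dtype=float)
    k = sigma.shape[0]
    sign, logdet = np.linalg.slogdet(sigma)
    if sign <= 0:
        raise ValueError("Sigma must be positive definite")
    return 0.5 * (k * np.log(2.0 * np.pi * np.e) + logdet)
```

For example, `fisher_information_1d(0.5, 2.0)` returns a matrix numerically close to `[[0.25, 0], [0, 0.5]]`.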

The information distance, that is, the length of a geodesic, between two $k$-variate Gaussians $f_{A}=(\mu_{A},\Sigma_{A})$ and $f_{B}=(\mu_{B},\Sigma_{B})$ is the infimum of the lengths of curves from $f_{A}$ to $f_{B}$. It is known analytically in three particular cases:

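Diagonal covariance matrix:

Here $\Sigma_{A}=\operatorname{diag}(\sigma_{A1}^{2},\ldots,\sigma_{Ak}^{2})$ and $\Sigma_{B}=\operatorname{diag}(\sigma_{B1}^{2},\ldots,\sigma_{Bk}^{2})$ are diagonal covariance matrices with null covariances [8]. Writing $z_{Ai}=(\mu_{Ai}/\sqrt{2},\,\sigma_{Ai})$, $z_{Bi}=(\mu_{Bi}/\sqrt{2},\,\sigma_{Bi})$ and $\bar{z}_{Bi}=(\mu_{Bi}/\sqrt{2},\,-\sigma_{Bi})$:

$$D_{F}(f_{A},f_{B})=\sqrt{2\sum_{i=1}^{k}\ln^{2}\left(\frac{|z_{Ai}-\bar{z}_{Bi}|+|z_{Ai}-z_{Bi}|}{|z_{Ai}-\bar{z}_{Bi}|-|z_{Ai}-z_{Bi}|}\right)}\tag{6}$$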

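Common covariance matrix:

Here $\Sigma_{A}=\Sigma_{B}=\Sigma$ is positive definite symmetric, so $\Sigma^{-1}$ defines a quadratic form that gives a norm on the difference vector of means:

$$D_{\mu}(f_{A},f_{B})=\sqrt{(\mu_{A}-\mu_{B})^{T}\,\Sigma^{-1}\,(\mu_{A}-\mu_{B})}\tag{7}$$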

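Common mean vector:

In this case we need a positive definite symmetric matrix constructed from $\Sigma_{A}$ and $\Sigma_{B}$ to give a norm on the space of differences between covariances. The appropriate information metric is given by Atkinson and Mitchell [9] from a result attributed to S.T. Jensen, using $S_{AB}=\Sigma_{A}^{-1/2}\,\Sigma_{B}\,\Sigma_{A}^{-1/2}$ with eigenvalues $\{\lambda_{j}\}_{j=1}^{k}$:

$$D_{\Sigma}(f_{A},f_{B})=\sqrt{\frac{1}{2}\sum_{j=1}^{k}\log^{2}(\lambda_{j})}\tag{8}$$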

In principle, (8) yields all of the geodesic distances, since the information metric is invariant under affine transformations of the mean ([9], Appendix 1); see also the article of P. S. Eriksen [10].
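The three closed-form cases can be sketched in NumPy as below. This is an illustrative implementation with hypothetical function names, not the authors' code. For the diagonal case it assumes that standard deviations $\sigma_i$ (not variances) are passed, and it uses the fact that the univariate Fisher metric $(d\mu^{2}+2\,d\sigma^{2})/\sigma^{2}$ is twice the hyperbolic half-plane metric in the coordinates $(\mu/\sqrt{2},\sigma)$. The common-covariance case is the Mahalanobis distance, and the common-mean case uses the eigenvalues of $\Sigma_A^{-1/2}\Sigma_B\Sigma_A^{-1/2}$.

```python
import numpy as np

def fisher_distance_diagonal(mu_a, sd_a, mu_b, sd_b):
    """Diagonal-covariance distance from per-coordinate means and standard deviations."""
    total = 0.0
    for ma, sa, mb, sb in zip(mu_a, sd_a, mu_b, sd_b):
        # Map each coordinate to the half-plane point (mu / sqrt(2), sigma).
        u_a, u_b = ma / np.sqrt(2.0), mb / np.sqrt(2.0)
        near = np.hypot(u_a - u_b, sa - sb)  # |z_A - z_B|
        far = np.hypot(u_a - u_b, sa + sb)   # |z_A - conj(z_B)|
        d_hyp = np.log((far + near) / (far - near))
        total += 2.0 * d_hyp ** 2
    return float(np.sqrt(total))

def mahalanobis_common_cov(mu_a, mu_b, sigma):
    """Distance between Gaussians sharing the covariance Sigma."""
    diff = np.asarray(mu_a, float) - np.asarray(mu_b, float)
    return float(np.sqrt(diff @ np.linalg.solve(np.asarray(sigma, float), diff)))

def fisher_distance_common_mean(sigma_a, sigma_b):
    """sqrt(0.5 * sum_j log^2 lambda_j), lambda_j = eigenvalues of Sa^{-1/2} Sb Sa^{-1/2}."""
    sigma_a = np.asarray(sigma_a, float)
    sigma_b = np.asarray(sigma_b, float)
    w, v = np.linalg.eigh(sigma_a)
    inv_sqrt_a = v @ np.diag(1.0 / np.sqrt(w)) @ v.T
    m = inv_sqrt_a @ sigma_b @ inv_sqrt_a
    lam = np.linalg.eigvalsh(0.5 * (m + m.T))  # symmetrise against round-off
    return float(np.sqrt(0.5 * np.sum(np.log(lam) ** 2)))
```

When both covariances are diagonal and the means coincide, the first and third functions return the same value, which is a convenient consistency check between the two closed forms.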

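In cases where we have only empirical frequency distributions and empirical estimates of moments, we can use the Kullback-Leibler divergence, also called relative entropy, between two $k$-variate distributions $f_{A}=(\mu_{A},\Sigma_{A})$ and $f_{B}=(\mu_{B},\Sigma_{B})$ with given mean vectors and covariance matrices; its square root yields a separation measurement [11,12]:

$$KL(f_{A}\,\|\,f_{B})=\frac{1}{2}\left[\log\frac{\det\Sigma_{B}}{\det\Sigma_{A}}+\operatorname{tr}\left(\Sigma_{B}^{-1}\Sigma_{A}\right)+(\mu_{B}-\mu_{A})^{T}\Sigma_{B}^{-1}(\mu_{B}-\mu_{A})-k\right]$$

This is not symmetric, so to obtain a distance we can take the average of the divergences in both directions:

$$D_{KL}(f_{A},f_{B})=\frac{1}{2}\left[KL(f_{A}\,\|\,f_{B})+KL(f_{B}\,\|\,f_{A})\right]$$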
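A minimal NumPy sketch of the Kullback-Leibler divergence between two $k$-variate Gaussians, $\frac{1}{2}\left[\log\frac{\det\Sigma_B}{\det\Sigma_A}+\operatorname{tr}(\Sigma_B^{-1}\Sigma_A)+(\mu_B-\mu_A)^{T}\Sigma_B^{-1}(\mu_B-\mu_A)-k\right]$, and of its average over both directions. The function names are hypothetical, and averaging is one common way to symmetrise the divergence.

```python
import numpy as np

def kl_gaussian(mu_a, sigma_a, mu_b, sigma_b):
    """KL(f_A || f_B) in nats for k-variate Gaussians."""
    mu_a, mu_b = np.asarray(mu_a, float), np.asarray(mu_b, float)
    sigma_a, sigma_b = np.asarray(sigma_a, float), np.asarray(sigma_b, float)
    k = mu_a.shape[0]
    diff = mu_b - mu_a
    # slogdet is numerically safer than log(det(...)) for larger k.
    _, logdet_a = np.linalg.slogdet(sigma_a)
    _, logdet_b = np.linalg.slogdet(sigma_b)
    trace_term = np.trace(np.linalg.solve(sigma_b, sigma_a))
    maha_term = diff @ np.linalg.solve(sigma_b, diff)
    return 0.5 * (logdet_b - logdet_a + trace_term + maha_term - k)

def symmetric_kl(mu_a, sigma_a, mu_b, sigma_b):
    """Average of the two directed divergences."""
    return 0.5 * (kl_gaussian(mu_a, sigma_a, mu_b, sigma_b)
                  + kl_gaussian(mu_b, sigma_b, mu_a, sigma_a))
```

For two univariate Gaussians with equal means and standard deviations $1$ and $1+\varepsilon$, $\sqrt{2\,KL}$ agrees with the Fisher distance $\sqrt{2}\ln(1+\varepsilon)$ to first order in $\varepsilon$, which illustrates the small-separation behaviour of the Kullback-Leibler distance.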

The Kullback-Leibler distance tends to the Fisher information distance as two distributions become closer together; conversely, it becomes less accurate as they move apart. Using only the first and last terms in (11) together with (10), we define a divergence.

The Kullback-Leibler divergence does in fact induce the Fisher metric [5,6]. However, there are other geometries with known closed-form solutions for the geodesic distance between $k$-variate Gaussians, such as the one defined by the $L^{2}$-Wasserstein metric, which is derived from the optimal transport problem of moving the mass of one distribution onto the other [13]. In this geometry, the space of Gaussian measures on a Euclidean space is geodesically convex and corresponds to a finite dimensional manifold, since Gaussian measures are parameterized by means and covariance matrices. Restricting the $L^{2}$-Wasserstein space to the Gaussian measures gives a geodesically convex Riemannian manifold, on which several authors have derived a closed-form solution for the distance between two Gaussian measures $f_{A}=(\mu_{A},\Sigma_{A})$ and $f_{B}=(\mu_{B},\Sigma_{B})$, for example Takatsu [13]:

$$W(f_{A},f_{B})=\sqrt{\|\mu_{A}-\mu_{B}\|^{2}+\operatorname{tr}\Sigma_{A}+\operatorname{tr}\Sigma_{B}-2\operatorname{tr}\left(\Sigma_{A}^{1/2}\Sigma_{B}\Sigma_{A}^{1/2}\right)^{1/2}}\tag{12}$$

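Additionally, Bhatia et al. [14] used the Bures-Wasserstein distance on the space of $k$-variate Gaussian distributions with zero means in the form:

$$d(\Sigma_{A},\Sigma_{B})=\left[\operatorname{tr}\Sigma_{A}+\operatorname{tr}\Sigma_{B}-2\operatorname{tr}\left(\Sigma_{A}^{1/2}\Sigma_{B}\Sigma_{A}^{1/2}\right)^{1/2}\right]^{1/2}\tag{13}$$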
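The Wasserstein closed form (12) and the Bures-Wasserstein form (13) can be sketched as follows, assuming NumPy and hypothetical function names. The matrix square root is computed by eigendecomposition, which is valid here because every matrix involved is symmetric positive semi-definite.

```python
import numpy as np

def sqrtm_psd(m):
    """Principal square root of a symmetric positive semi-definite matrix."""
    m = 0.5 * (m + m.T)
    w, v = np.linalg.eigh(m)
    w = np.clip(w, 0.0, None)  # discard tiny negative eigenvalues from round-off
    return v @ np.diag(np.sqrt(w)) @ v.T

def bures_wasserstein(sigma_a, sigma_b):
    """Bures-Wasserstein distance between zero-mean Gaussians with covariances Sa, Sb."""
    sa, sb = np.asarray(sigma_a, float), np.asarray(sigma_b, float)
    root_a = sqrtm_psd(sa)
    cross = sqrtm_psd(root_a @ sb @ root_a)
    val = np.trace(sa) + np.trace(sb) - 2.0 * np.trace(cross)
    return float(np.sqrt(max(val, 0.0)))

def wasserstein2_gaussian(mu_a, sigma_a, mu_b, sigma_b):
    """L2-Wasserstein distance: squared mean gap plus the squared covariance term."""
    diff = np.asarray(mu_a, float) - np.asarray(mu_b, float)
    return float(np.sqrt(diff @ diff + bures_wasserstein(sigma_a, sigma_b) ** 2))
```

When $\Sigma_A$ and $\Sigma_B$ commute (for instance, when both are diagonal), the covariance term reduces to $\|\Sigma_A^{1/2}-\Sigma_B^{1/2}\|_F^{2}$, which gives an easy check of the implementation.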

4. Face Recognition Experiments

The distance between two Gaussian distributions lying in the Riemannian manifold of k-variate Gaussians is given by the arc length of a minimizing geodesic curve which connects both Gaussians. Moreover, geodesics are intrinsic geometric objects and they are invariant under smooth transformations of coordinates, so in particular the length of a segment is invariant under scale changes of the random variables, from which the mean vectors and covariances are computed.

Consequently, geodesic distances play the role of a natural dissimilarity metric in biometric applications that represent features by probability distributions, such as face recognition [2,3]. In such applications, landmark topologies can be used to locate and extract compact biometric features from characteristic face locations in high resolution colour face images [16,17].

Since an analytic form for the geodesic distance in the Riemannian manifold of $k$-variate Gaussians is currently unknown, here we construct approximations to it and apply them in a set of face recognition experiments with features represented as $k$-variate Gaussians.

In order to extract efficient features for face recognition, we used the FEI Face database [18], which provides colour (RGB) face images. The database images were taken against a white homogeneous background, with the head in the upright position and turning from left to right, under varying illumination conditions and facial expressions. Since the images have three channels (RGB), here $k=3$.

We also made use of another challenging database, the FERET Face Database [19], which provides colour (RGB) face images organized in several subsets with specific head pose, expression, age, and illumination conditions.

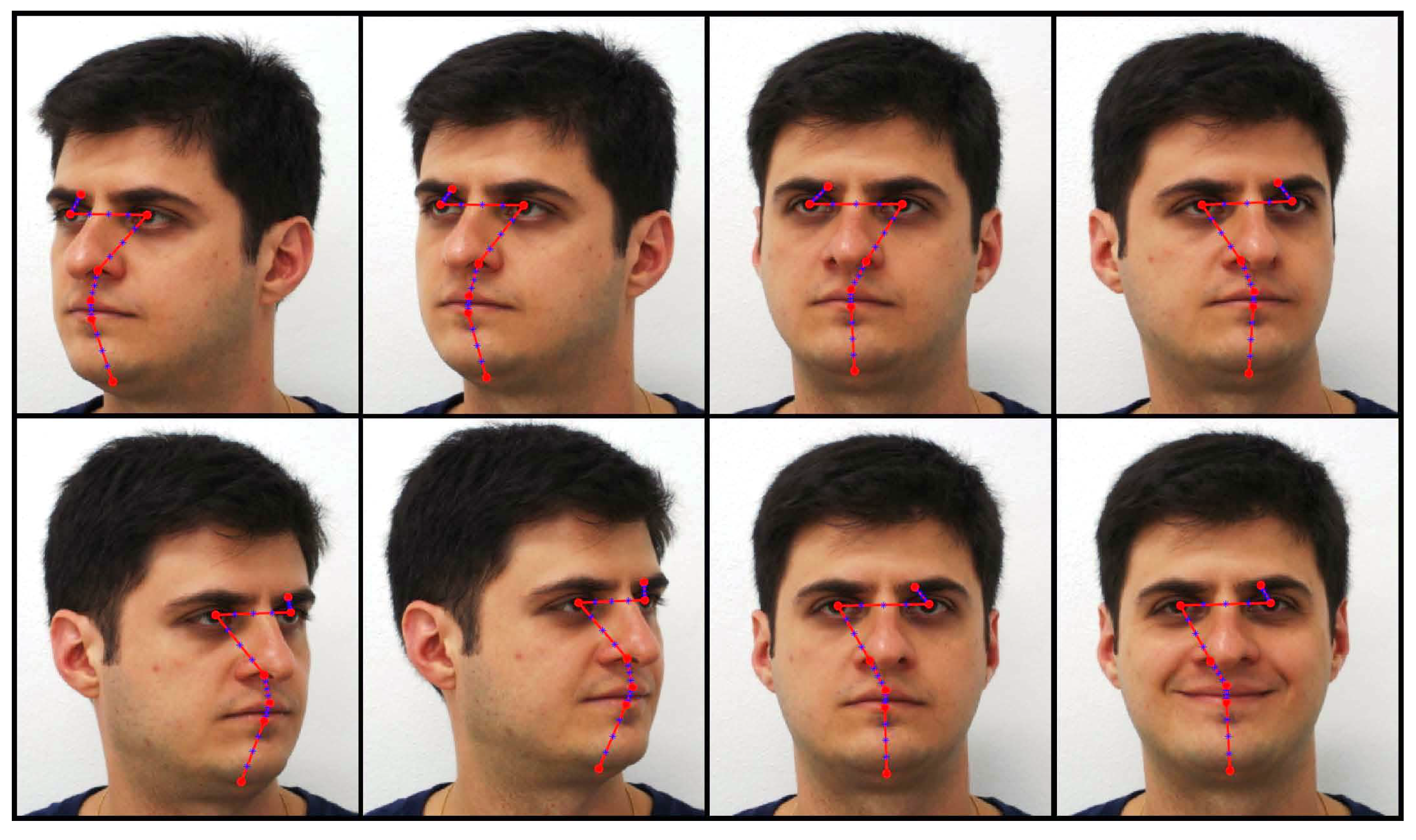

To extract meaningful features from face images of both databases, we adopted the landmark topology presented in Figure 1, with seven landmarks at characteristic face locations such as the eyebrows, eyes, nose, mouth and chin (red dots), together with three equally spaced interpolated landmarks between each pair of consecutive landmarks (blue dots), leading to a total of $L=25$ landmarks for each face image. Next, all pixels inside square patches of size $11\times 11$ centred at each landmark location are extracted, leading to a feature space dimensionality of 3025 pixels.

However, it is possible to reduce this high-dimensional feature space while preserving its discriminative properties by representing each landmark $\ell$ by the 3-dimensional mean vector and the $3\times 3$ covariance matrix of the corresponding face patch, using the three colour channels (RGB). Accordingly, each landmark is represented by 9 dimensions (3 from the mean and 6 from the covariance matrix, since it is symmetric). As a result, the original feature space dimensionality is reduced to $25\times 9=225$. The landmark topology, the number of interpolated landmarks and the patch size were determined experimentally, leading to $L=25$ landmarks and square patches of $11\times 11$ pixels.
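The feature extraction step can be sketched as follows. Everything here is an assumption for illustration: the function names, the $(x, y)$ pixel-coordinate convention, an image stored as an $H\times W\times 3$ NumPy array, landmarks ordered as a chain and far enough from the border for each patch to fit, and the $11\times 11$ patch size, which is inferred from $3025/25=121$ pixels per landmark. The covariance uses the maximum likelihood normalisation $1/N$.

```python
import numpy as np

def interpolate_landmarks(anchors, n_between=3):
    """Insert n_between equally spaced points between consecutive anchor landmarks."""
    anchors = np.asarray(anchors, dtype=float)
    points = [anchors[0]]
    for p, q in zip(anchors[:-1], anchors[1:]):
        for t in np.arange(1, n_between + 1) / (n_between + 1):
            points.append((1.0 - t) * p + t * q)
        points.append(q)
    return np.array(points)

def patch_gaussian(image, centre, size=11):
    """MLE mean (3,) and covariance (3, 3) of the RGB pixels in a size x size patch."""
    cx, cy = (int(round(c)) for c in centre)
    h = size // 2
    patch = image[cy - h:cy + h + 1, cx - h:cx + h + 1, :].reshape(-1, 3).astype(float)
    mu = patch.mean(axis=0)
    centred = patch - mu
    sigma = centred.T @ centred / patch.shape[0]
    return mu, sigma

def descriptor(mu, sigma):
    """9 numbers per landmark: 3 means plus the 6 distinct covariance entries."""
    iu = np.triu_indices(3)
    return np.concatenate([mu, sigma[iu]])
```

With seven anchors, `interpolate_landmarks` returns $7+6\times 3=25$ points, and concatenating the 25 descriptors gives the 225-dimensional representation described above.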

In previous approaches [2,3], each face image was represented as an ordered sequence of probability distributions, and dissimilarities between distinct face images $x$ and $y$ were scored by summing the geodesic distances between the 3-variate Gaussians representing corresponding landmarks. Here, in contrast, we obtain an improved score function for dissimilarities between face images by multiplying the geodesic distances between corresponding landmarks. We define the functions:

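$$S_{G}(x,y)=\prod_{\ell=1}^{L}G\left(f_{\ell}^{x},f_{\ell}^{y}\right)$$

where $f_{\ell}^{x}$ and $f_{\ell}^{y}$ represent the 3-variate Gaussians $\mathcal{N}(\mu_{\ell}^{x},\Sigma_{\ell}^{x})$ and $\mathcal{N}(\mu_{\ell}^{y},\Sigma_{\ell}^{y})$ of images $x$ and $y$, respectively, $\ell$ is the $\ell$-th landmark from a total of $L$ landmarks, and $G$ is one of the geodesic distance approximations of Equations (17), (18), (6) and (12), so that the four resulting functions are score functions applicable to images $x$ and $y$. In our experiments we cannot use the Bures-Wasserstein distance, Equation (13), since we measure varying means for our RGB variables, but the Wasserstein distance, Equation (12), is suitable, and we tested it with the score in Equation (22). Equation (12) might be worth investigating further in future work, as might a hybrid distance using Equations (7) and (13).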
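To illustrate how the product score differs from the earlier sum score, the sketch below (plain Python, hypothetical names and numbers) compares two candidate faces using only their per-landmark distances $G(f_\ell^x, f_\ell^y)$ as inputs. It accumulates the product as a sum of logarithms, an implementation choice that avoids floating-point underflow over many landmarks and does not change the ranking, since the logarithm is monotone.

```python
import math

def geodesic_sum_score(landmark_distances):
    """Earlier approach [2,3]: sum of per-landmark geodesic distances."""
    return sum(landmark_distances)

def log_geodesic_product_score(landmark_distances):
    """Logarithm of the product of per-landmark geodesic distances."""
    total = 0.0
    for d in landmark_distances:
        if d <= 0.0:
            return -math.inf  # an exact landmark match drives the product to zero
        total += math.log(d)
    return total

# Hypothetical per-landmark distances for two candidate faces.
candidates = {
    "A": [0.1, 3.0],  # one very close landmark and one poor one
    "B": [1.5, 1.5],  # two moderately close landmarks
}
best_by_sum = min(candidates, key=lambda c: geodesic_sum_score(candidates[c]))
best_by_product = min(candidates, key=lambda c: log_geodesic_product_score(candidates[c]))
print(best_by_sum, best_by_product)  # B A
```

The sum prefers candidate B, while the product prefers candidate A, whose single very close landmark dominates; this is the amplifying effect of multiplication discussed below.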

All the aforementioned scores define face dissimilarities as products of individual landmark dissimilarities given by geodesic distances. However, by considering a face matching problem, it is possible to convert such dissimilarities between landmarks into probabilities of landmarks not matching, as follows:

$$P_{\ell}(x,y_{m})=\frac{G\left(f_{\ell}^{x},f_{\ell}^{y_{m}}\right)}{\sum_{n=1}^{M}G\left(f_{\ell}^{x},f_{\ell}^{y_{n}}\right)}$$

where $y_{m}$ represents the $m$-th candidate face image from a total of $M$ available face images, and $G$ is a chosen dissimilarity metric. Then, the problem of finding the face image $y_{m}$ that is most similar to $x$ is converted into the problem of finding the face image $y_{m}$ that has the least probability of not matching $x$. This probability is defined as the joint probability of not matching for all landmarks, i.e., treating the landmarks as independent, the product of the probabilities of not matching each landmark $\ell$:

$$P(x,y_{m})=\prod_{\ell=1}^{L}P_{\ell}(x,y_{m})$$

We can also provide an informal interpretation of our three methods: joint probabilities, sums, or products of geodesic distances over the set of landmarks. By posing the matching of one face to another in terms of corresponding landmark dissimilarities, these dissimilarities are converted into probabilities of landmarks not matching, as presented above. Then, by multiplying the individual probabilities of landmarks not matching, we obtain the joint probability of all landmarks not matching at the same time. In contrast, the sum of such probabilities of distinct events does not have much statistical meaning in our case. Moreover, multiplying the landmark dissimilarities greatly amplifies the impact of both very similar and very dissimilar landmarks, and the same occurs in the joint probability, which also multiplies these landmark dissimilarities. In fact, the product of geodesics coincides with the joint probability up to a normalizing factor that is unique for each test face image, so both rank the candidate faces in the same order; this is consistent with their identical recognition rates in Table 1.
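A sketch of the probability formulation in plain Python, assuming (our reading, not confirmed by the surviving text) that each landmark's dissimilarities are normalised over the $M$ candidate faces. Under that assumption the joint probability equals the geodesic product divided by a factor that depends only on the test face, which is why both scores select the same candidate. Names and numbers are hypothetical.

```python
import math

def not_matching_probabilities(distances):
    """Normalise each landmark column of distances[m][l] over the M candidates."""
    n_landmarks = len(distances[0])
    column_sums = [sum(row[l] for row in distances) for l in range(n_landmarks)]
    return [[row[l] / column_sums[l] for l in range(n_landmarks)] for row in distances]

def joint_not_matching(probabilities):
    """Product over landmarks, treating landmark mismatches as independent events."""
    return [math.prod(row) for row in probabilities]

# Hypothetical distances G(f_l^x, f_l^{y_m}) for M = 3 candidates and L = 2 landmarks.
distances = [[0.1, 3.0], [1.5, 1.5], [2.0, 2.0]]
joint = joint_not_matching(not_matching_probabilities(distances))
products = [math.prod(row) for row in distances]
# joint / product is the same for every candidate: 1 / (product of the column sums).
ratios = [j / p for j, p in zip(joint, products)]
best = min(range(len(joint)), key=joint.__getitem__)
```

Here the constant ratio is $1/(3.6\times 6.5)$, and both the joint probability and the plain product select candidate 0.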

Finally, the classification procedure follows the nearest neighbour rule: a new test face sample is attributed to the database individual whose training sample minimizes the chosen geodesic-product score function or joint probability. Even with large datasets, this classification rule has low computational complexity, because we calculate geodesic distance approximations between $k$-variate Gaussians with a small value of $k$, i.e., $k=3$, which allows the proposed method to operate in near real time, as further detailed in [2].
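The nearest neighbour rule can be sketched in plain Python as below, with hypothetical names throughout. A face is a list of per-landmark Gaussians, the gallery is a list of (identity, face) training samples, and `distance` is any per-landmark distance between two Gaussians, such as one of the closed forms discussed in Section 2; the toy `mean_gap` function is only a stand-in so that the example runs.

```python
import math

def log_product_score(test, train, distance):
    """Log of the product of per-landmark distances between two faces."""
    if len(test) != len(train):
        raise ValueError("faces must have the same number of landmarks")
    score = 0.0
    for f_x, f_y in zip(test, train):
        d = distance(f_x, f_y)
        if d <= 0.0:
            return -math.inf  # exact landmark match: the product is zero
        score += math.log(d)
    return score

def nearest_neighbour(test, gallery, distance):
    """Identity of the training sample with the smallest score (1-NN rule)."""
    best_identity, best_score = None, math.inf
    for identity, train in gallery:
        s = log_product_score(test, train, distance)
        if s < best_score:
            best_identity, best_score = identity, s
    return best_identity

def mean_gap(f_a, f_b):
    # Hypothetical stand-in distance on (mean, variance) pairs: |mu_a - mu_b| plus a small offset.
    return abs(f_a[0] - f_b[0]) + 1e-3

gallery = [
    ("person_1", [(0.0, 1.0), (1.0, 1.0)]),
    ("person_2", [(5.0, 1.0), (6.0, 1.0)]),
]
print(nearest_neighbour([(0.2, 1.0), (1.1, 1.0)], gallery, mean_gap))  # person_1
```

Each classification costs one score per training sample and $L$ small-matrix distance evaluations per score, which is what keeps the rule cheap for $k=3$.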

In order to validate these new score functions and our geodesic product distance approximations, face recognition experiments were performed to compare our methods with state-of-the-art methods. In the experiments with the FEI face database [18], the first 100 individuals were selected, considering the eight head poses indicated in Figure 1, which include the frontal neutral and smiling expressions. Ten runs were performed with the selected database images; in each run, seven head poses per individual were randomly selected for training, and the remaining one was used for testing. The averaged recognition rates for the proposed methods and the comparative methods are presented in Table 1, with all methods using features extracted from the landmark topology shown in Figure 1.
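The evaluation protocol can be sketched in plain Python as below. The function names, the use of `random.Random` with a fixed seed, and the representation of individuals and poses as integer indices are assumptions made only to keep the sketch reproducible. For the FERET experiments, the same function would be called with six subsets and `n_train=5`.

```python
import random

def split_poses(individuals, poses, n_train, rng):
    """Per individual, randomly choose n_train poses for training; the rest are for testing."""
    split = {}
    for person in individuals:
        shuffled = list(poses)
        rng.shuffle(shuffled)
        split[person] = (shuffled[:n_train], shuffled[n_train:])
    return split

def averaged_recognition_rate(per_run_correct, n_tests_per_run):
    """Mean recognition rate in percent over repeated random runs."""
    rates = [100.0 * c / n_tests_per_run for c in per_run_correct]
    return sum(rates) / len(rates)

rng = random.Random(0)  # fixed seed only to make the sketch reproducible
# FEI-style protocol: 100 individuals, 8 poses, 7 for training, 10 runs.
runs = [split_poses(range(100), range(8), 7, rng) for _ in range(10)]
```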

Additionally, an extended set of experiments was performed on the FERET face database [19], using the first 150 individuals that appear in six selected subsets whose head poses and facial expressions are similar to those in Figure 1. Ten runs were performed with the selected database images; in each run, five head poses per individual were randomly selected for training, and the remaining one was used for testing. The averaged recognition rates for the proposed methods and the comparative methods are also presented in Table 1, with all methods using features extracted from the landmark topology shown in Figure 1. For the comparative methods that have parameters, the parameter values that maximized their recognition rates were determined experimentally and used to obtain their final recognition rates. These methods are outlined briefly below.

The Eigenfaces method [20] linearly approximates the inherently non-linear face manifold by creating an orthogonal linear projection that best preserves the global feature geometry. On the other hand, the Fisherfaces method [21] determines a linear projection that maximizes the between-class covariance while minimizing the within-class covariance, leading to a better class separation. Furthermore, the Customized Orthogonal Laplacianfaces (COLPP) method [17] obtains an orthogonal linear projection onto a discriminative linear space, which better preserves both the data and class geometry.

In another linear approach, the Multi-view Discriminant Analysis (MvDA) method [22] seeks a single discriminant common space for multiple views in a non-pairwise manner by jointly learning multiple view-specific linear transforms. In the CCA method [23], multiple feature vectors are fused to produce a feature vector that is more robust to the weaknesses of each individual vector. The Coupled Discriminant Multi-manifold Analysis (CDMMA) method [24] explores the neighbourhood information as well as the local geometric structure of the multi-manifold space.

Although the linear approach is simple and efficient, it is also possible to approximate the non-linear face manifold by using non-linear approaches such as the Enhanced ASM method [16], which estimates the most discriminative landmarks and scores face similarities by summing probabilities associated with each landmark, taking advantage of this natural multi-modal feature representation. The geodesic sum method [2,3] improves on this approach by scoring face dissimilarities more accurately, summing geodesic distances between corresponding landmarks of distinct face images. The experimental results presented in Table 1 include our new methods, geodesic products and joint probabilities, each computed with the distances of Equations (17), (18), (6) and (12), which serve as our geodesic distance approximations between landmarks on face images. Finally, we performed experiments with the CM (Continuous Model) method [25], which sums dissimilarities between corresponding landmarks using the Mahalanobis distance.

Table 1. Averaged recognition rates of comparative face recognition methods on the FEI Face database [18] and the FERET Face database [19], using colour (RGB) face images and the landmark topology presented in Figure 1.


| Method | FEI | FERET |
|---|---|---|
| Joint Probabilities, Equation (17) | 99.50% | 97.13% |
| Joint Probabilities, Equation (18) | 99.50% | 96.86% |
| Joint Probabilities, Equation (6) | 90.00% | 79.26% |
| Joint Probabilities, Equation (12) | 96.70% | 87.06% |
| Geodesic Products, Equation (17) | 99.50% | 97.13% |
| Geodesic Products, Equation (18) | 99.50% | 96.86% |
| Geodesic Products, Equation (6) | 90.00% | 79.26% |
| Geodesic Products, Equation (12) | 96.70% | 87.06% |
| Geodesic Sums [3], Equation (17) | 99.50% | 96.80% |
| Geodesic Sums [3], Equation (18) | 99.50% | 96.73% |
| Geodesic Sums [3], Equation (6) | 88.40% | 75.93% |
| Geodesic Sums [3], Equation (12) | 87.30% | 81.40% |
| CM [25] | 98.60% | 92.33% |
| Enhanced ASM [16] | 89.20% | 69.20% |
| CCA [23] | 70.90% | 29.06% |
| CDMMA [24] | 37.70% | 12.26% |
| MvDA [22] | 44.40% | 20.13% |
| COLPP [17] | 96.10% | 88.66% |
| LDA (Fisherfaces) [21] | 87.20% | 66.00% |
| Eigenfaces [20] | 82.20% | 52.00% |