On Compressed Sensing of Binary Signals for the Unsourced Random Access Channel

Abstract

1. Introduction

- Storage. Storing a sparse matrix requires fewer memory resources than storing a dense unstructured matrix, such as a matrix sampled from the i.i.d. Gaussian ensemble. We remark, however, that the AMP algorithm often works very well for compressed sensing of binary signals even when the Gaussian i.i.d. matrix A is replaced with a sensing matrix that is dense yet easy to store. For example, Amalladinne et al. [20] suggested taking A as a sub-sampled Hadamard matrix.

- Joint source-channel coding with local updates. Consider the problem of storing a sparse binary vector with Hamming weight at most $k$ in an array of $n$ noisy memory cells. By noisy memory cells, we mean that the value read from memory cell $i$ is modelled as $y_i = c_i + z_i$, where $c_i$ is the stored value and $z_i$ is additive noise, e.g., Gaussian. This is a reasonable model for magnetic recording (ignoring intersymbol interference) [21] and for flash memories (ignoring further impairments like cross talk) [22]. Note that this is actually a joint source-channel coding problem: the source is a vector $\mathbf{x} \in \{0,1\}^M$ of Hamming weight at most $k$, the channel is Gaussian and can be used $n$ times, and the distortion measure is Hamming distortion. It is often desirable to use update-efficient schemes. In such schemes, changing one bit in the input vector $\mathbf{x}$ should correspond to changing the content of only a small number of memory cells (see, e.g., [23]). When the encoding scheme is $\mathbf{c} = A\mathbf{x}$, an update in one coordinate of $\mathbf{x}$, say $x_j$, corresponds to adding (removing) the $j$th column of $A$ to (from) $\mathbf{c}$. If each column has a small number of nonzero entries, the update involves changing the stored value in only a small number of cells. Thus, using a matrix $A$ with sparse columns is highly desirable.

- Group testing. In group testing, the goal is to detect a set of at most $k$ defective items among $M$ possible items. To this end, we denote by $\mathbf{x} \in \{0,1\}^M$ the vector whose nonzero entries correspond to the defective items. We have $n$ measurements of $\mathbf{x}$, each corresponding to a different “pool”. Each pool is a subset of $[M] = \{1,\dots,M\}$, and the corresponding measurement is obtained by passing the number of defective items in the pool, denoted by $\ell$, through some noisy channel (see Definitions 3.1 and 3.3 in [24]). Typically, the channel depends on $\ell$ only through the indicator of the event $\{\ell > 0\}$, but the general model allows the measurement to be distributed as $P_{Y\mid\ell}$ with an arbitrary dependence on $\ell$. Thus, with this model, designing a group testing scheme corresponds to designing a binary sensing matrix $A \in \{0,1\}^{n\times M}$, whose rows are the indicator vectors of the pools, and the measurements are $Y_j \sim P_{Y\mid\ell_j}$ with $\ell_j = (A\mathbf{x})_j$. Using pools with small Hamming weight, i.e., sparse rows of $A$, results in simpler tests. For example, the original application for which the group testing framework was developed was the detection of syphilis among a large group of patients using a small number of tests. Using pools with small Hamming weight means that samples from fewer patients need to be mixed in each pool, which results in less work for the lab technician.
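The two locality arguments above can be illustrated with a small NumPy sketch. It shows that flipping $x_j$ only rewrites the memory cells where column $j$ of $A$ is nonzero. It also shows the common noiseless group-testing channel, in which pool $j$ is positive iff $\ell_j = (A\mathbf{x})_j > 0$. The function names are hypothetical, and the noiseless OR-type channel is only the special case mentioned in the text, not the general $P_{Y\mid\ell}$ model.

```python
import numpy as np


def stored_vector(A: np.ndarray, x: np.ndarray) -> np.ndarray:
    """Encode the binary source x as the memory-cell contents c = A x."""
    return A @ x


def flip_bit(A: np.ndarray, c: np.ndarray, x: np.ndarray, j: int):
    """Flip x_j and update c locally instead of re-encoding from scratch.

    Setting x_j from 0 to 1 adds column j of A to c; setting it from 1 to 0
    removes it. Only the cells where column j is nonzero change.
    """
    x_new = x.copy()
    x_new[j] = 1 - x_new[j]
    sign = 1 if x_new[j] == 1 else -1
    c_new = c + sign * A[:, j]
    touched = np.flatnonzero(A[:, j])  # indices of the memory cells that are rewritten
    return x_new, c_new, touched


def group_tests(A: np.ndarray, x: np.ndarray) -> np.ndarray:
    """Noiseless group testing: pool j is positive iff it contains a defective item.

    This is the special case where the channel depends on ell_j = (A x)_j
    only through the indicator of the event {ell_j > 0}.
    """
    ell = A @ x  # number of defective items in each pool
    return (ell > 0).astype(int)
```

With a column of Hamming weight $d$, an update touches exactly $d$ cells, regardless of $M$ or $n$.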

2. Compressed Sensing of Binary Signals

2.1. Sensing Matrices from LDPC Codes

- One side of the graph has M vertices, which we call “variables” (the left side), and the other has n vertices, called “factors” (the right side).

- For simplicity, assume the graph is regular: each variable has degree $d$, meaning it is connected to exactly $d$ factors, and each factor has degree $s$. Thus, there are exactly $Md = ns$ edges in the graph.

- The edges of $\mathcal{G}$ are sampled according to the following procedure. The procedure runs in $d$ rounds, where $d$ is the variable degree. In every round, one introduces $M/s$ new factors (we assume $M/s$ is an integer for simplicity) by randomly partitioning the variables into $M/s$ disjoint parts of size $s$ each, namely,
$$[M] = S_1 \cup S_2 \cup \cdots \cup S_{M/s}, \qquad |S_i| = s.$$
For every new factor $i$ introduced in this round, one adds an edge between $i$ and all the variables in the corresponding part $S_i$.
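The round-based sampling procedure above can be sketched in a few lines of NumPy. Here `A` is taken to be the factor-by-variable incidence matrix of the sampled graph, with rows as factors and columns as variables. This is an assumption consistent with the LDPC construction. The function name and the dense 0/1 storage are illustrative choices only.

```python
import numpy as np


def sample_ldpc_matrix(M: int, d: int, s: int, rng=None) -> np.ndarray:
    """Sample an n x M binary matrix, n = d * M / s, via d rounds of random partitioning.

    In each round the M variables are randomly permuted and cut into M/s
    disjoint consecutive blocks of size s; each block becomes a new factor (row)
    connected to its s variables.
    """
    if M % s != 0:
        raise ValueError("M/s must be an integer")
    rng = np.random.default_rng() if rng is None else rng
    n_per_round = M // s
    A = np.zeros((d * n_per_round, M), dtype=np.int8)
    for r in range(d):
        perm = rng.permutation(M)  # a uniformly random partition of [M] into blocks
        for i in range(n_per_round):
            part = perm[i * s:(i + 1) * s]
            A[r * n_per_round + i, part] = 1
    return A
```

Every variable lands in exactly one part per round. Each column therefore has exactly $d$ ones and each row exactly $s$ ones, matching the edge count $Md = ns$.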

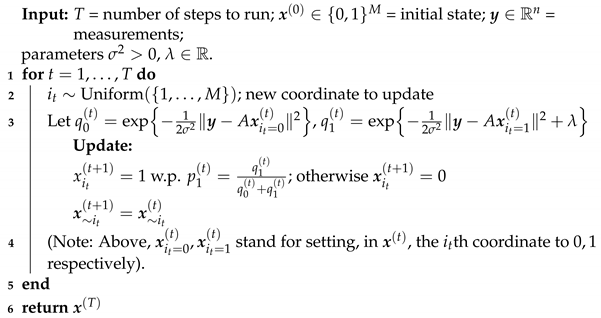

2.2. MCMC Algorithm for Recovery

Algorithm 1: Glauber dynamics for binary compressed sensing.
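The following is a minimal sketch of Glauber dynamics for this recovery problem. It assumes a target distribution $\pi(\mathbf{x}) \propto \exp(-\beta\|\mathbf{y} - A\mathbf{x}\|^2)$ on $\{0,1\}^M$ and uses random-scan single-site updates. The inverse temperature `beta`, the lack of an explicit sparsity term, and the function name are assumptions, not necessarily the exact choices of Algorithm 1. Each step resamples one coordinate $x_j$ from its conditional distribution given all other coordinates.

```python
import numpy as np


def glauber_binary_cs(A, y, beta, n_iters, x0=None, rng=None):
    """Random-scan Glauber dynamics on {0,1}^M for pi(x) ∝ exp(-beta * ||y - A x||^2).

    Returns the final state of the chain after n_iters single-site updates.
    """
    rng = np.random.default_rng() if rng is None else rng
    A = np.asarray(A, dtype=float)
    y = np.asarray(y, dtype=float)
    M = A.shape[1]
    x = np.zeros(M, dtype=int) if x0 is None else np.asarray(x0, dtype=int).copy()
    r = y - A @ x                      # residual, kept up to date after every update
    col_sq = np.sum(A ** 2, axis=0)    # ||a_j||^2 for every column j
    for _ in range(n_iters):
        j = rng.integers(M)            # coordinate chosen uniformly at random
        a_j = A[:, j]
        r0 = r + x[j] * a_j            # residual if x_j were 0
        # Energy difference E(x_j = 1) - E(x_j = 0) = ||a_j||^2 - 2 <r0, a_j>
        delta = col_sq[j] - 2.0 * (r0 @ a_j)
        # Conditional probability of x_j = 1: 1 / (1 + exp(beta * delta))
        p_one = 1.0 / (1.0 + np.exp(np.clip(beta * delta, -500.0, 500.0)))
        x[j] = int(rng.random() < p_one)
        r = r0 - x[j] * a_j
    return x
```

The energy difference only involves the entries of $\mathbf{r}$ where column $a_j$ is nonzero. With an LDPC matrix whose columns have $d$ ones, a sparse implementation could therefore perform each update in $O(d)$ time. The dense version above keeps the sketch short.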

3. Simulation Results

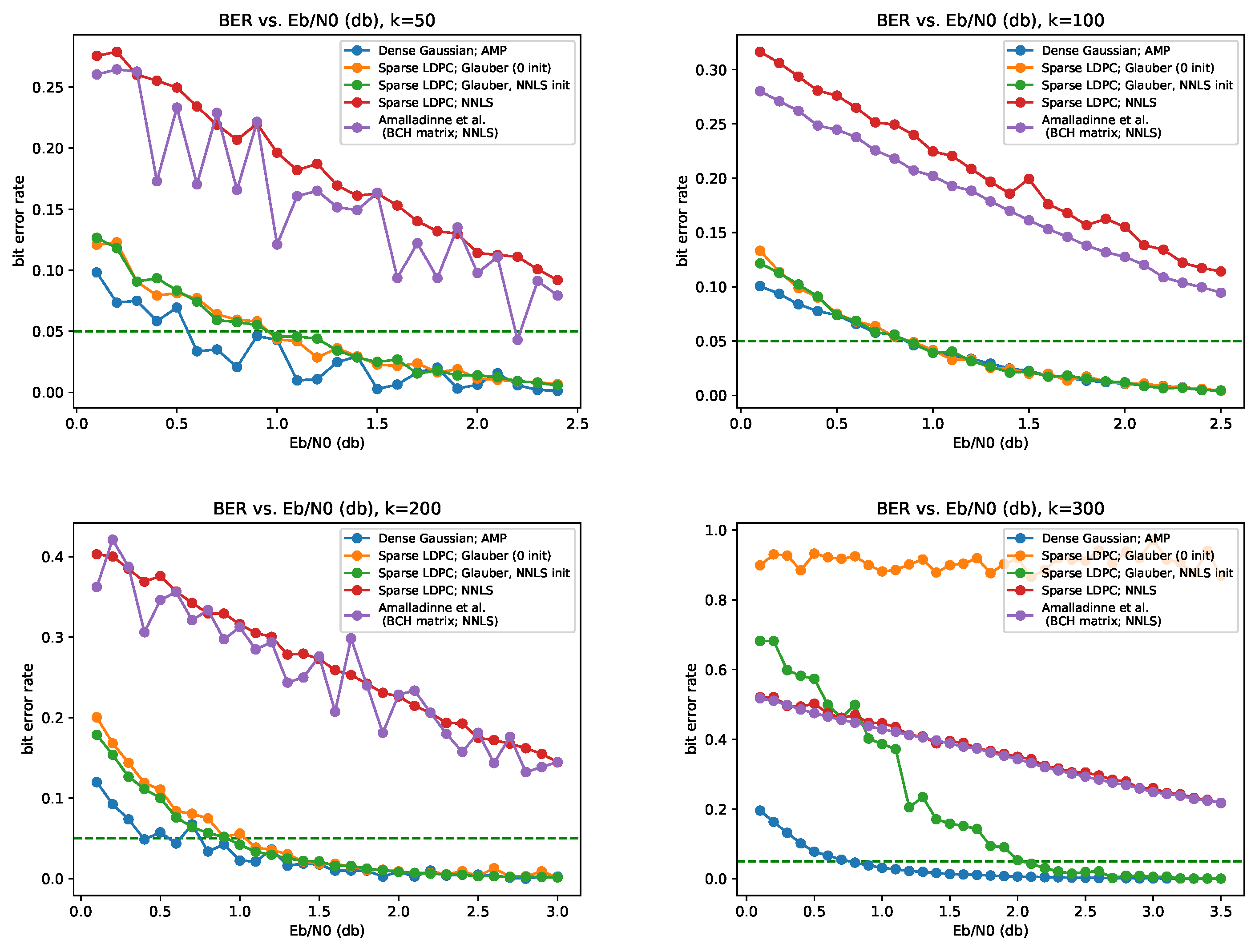

3.1. Performance in Compressed Sensing of Binary Signals

- The scheme of Amalladinne et al. [7]: $A$ based on BCH codes, with a non-negative least squares (NNLS) decoder. To obtain a binary estimator from the NNLS solution, we simply assign every entry to its closest binary value (that is, according to whether it is smaller or greater than $1/2$).

- $A$ given by a sparse LDPC matrix with variable degree $d$ and factor degree $s$ (consequently, $n = Md/s$), under the following decoding algorithms:

- (a) NNLS.

- (b) Glauber dynamics initialized at the all-zeros vector $\mathbf{0}$.

- (c) Glauber dynamics initialized at the NNLS solution.

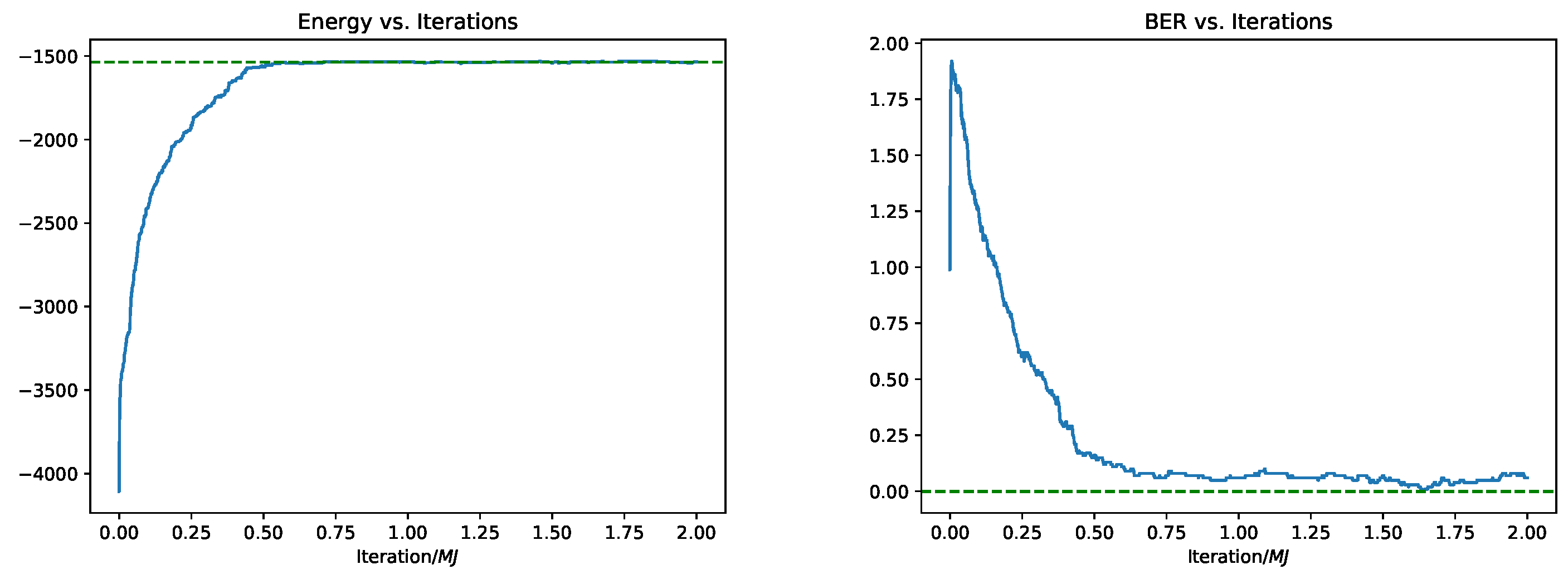

When using Glauber dynamics, we always let it run for a fixed number of iterations.

- $A$ is a dense random matrix with i.i.d. zero-mean Gaussian entries, decoded with Approximate Message Passing (AMP); in the experiments, the noise level is normalized accordingly. The denoiser used in AMP is the optimal denoiser for the i.i.d. Bernoulli source, essentially as proposed by Fengler et al. [70]. AMP is a state-of-the-art algorithm for compressed sensing of binary signals and is our main benchmark. For convenience, the exact implementation details of AMP are given in Appendix B.
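The NNLS-then-round baseline described above can be sketched as follows. A simple projected-gradient solver stands in for a production NNLS routine such as `scipy.optimize.nnls`. This substitution is an assumption made to keep the example dependency-light, and the function names are hypothetical. The rounding step thresholds each entry at $1/2$, as in the text.

```python
import numpy as np


def nnls_projected_gradient(A, y, n_iters=2000):
    """Approximately solve min_{x >= 0} 0.5 * ||y - A x||^2 by projected gradient descent.

    The gradient A^T (A x - y) is Lipschitz with constant ||A||_2^2, so the
    step size 1 / ||A||_2^2 guarantees monotone decrease of the objective.
    """
    A = np.asarray(A, dtype=float)
    y = np.asarray(y, dtype=float)
    step = 1.0 / np.linalg.norm(A, 2) ** 2   # inverse of the largest squared singular value
    x = np.zeros(A.shape[1])
    for _ in range(n_iters):
        grad = A.T @ (A @ x - y)
        x = np.maximum(x - step * grad, 0.0)  # gradient step, then projection onto x >= 0
    return x


def round_to_binary(x_hat):
    """Map every entry to its closest value in {0, 1}, i.e., threshold at 1/2."""
    return (np.asarray(x_hat) > 0.5).astype(int)
```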

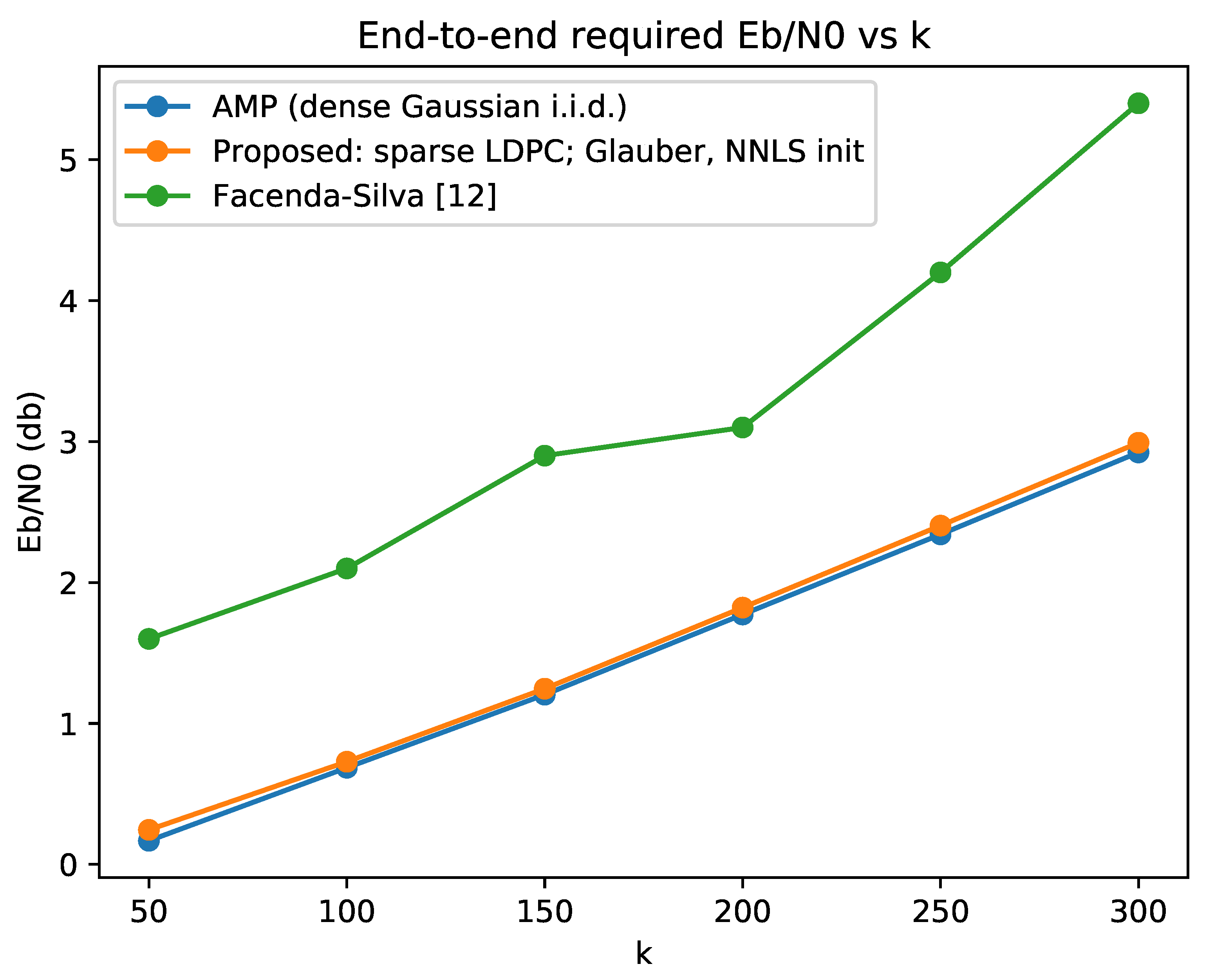
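The sketch below shows AMP with the Bernoulli posterior-mean denoiser used by the benchmark. It is not the implementation from Appendix B. It assumes $A$ has i.i.d. $\mathcal{N}(0, 1/n)$ entries, a common AMP normalization, and a known Bernoulli parameter `p`. The effective noise variance is estimated as $\|\mathbf{r}\|^2/n$. For $X \sim \mathrm{Bernoulli}(p)$ observed as $s = X + \tau Z$, the posterior mean is a logistic function of the log-odds $\log\frac{p}{1-p} + \frac{2s-1}{2\tau^2}$. Its derivative enters the Onsager correction.

```python
import numpy as np


def bernoulli_denoiser(s, tau2, p):
    """Posterior mean E[X | X + sqrt(tau2) * Z = s] for X ~ Bernoulli(p), Z ~ N(0, 1).

    Returns the estimate and its derivative with respect to s.
    """
    # Log-odds of X = 1 given s
    u = np.log(p / (1.0 - p)) + (2.0 * s - 1.0) / (2.0 * tau2)
    eta = 1.0 / (1.0 + np.exp(-np.clip(u, -500.0, 500.0)))
    d_eta = eta * (1.0 - eta) / tau2   # d(eta)/ds, since du/ds = 1 / tau2
    return eta, d_eta


def amp_binary(A, y, p, n_iters=30):
    """AMP for y = A x + noise, assuming A_ij ~ N(0, 1/n) i.i.d. and x_i ~ Bernoulli(p) i.i.d."""
    A = np.asarray(A, dtype=float)
    y = np.asarray(y, dtype=float)
    n, M = A.shape
    x = np.zeros(M)
    r = y.copy()
    for _ in range(n_iters):
        tau2 = max(np.dot(r, r) / n, 1e-12)   # estimate of the effective noise variance
        s = x + A.T @ r                        # effective scalar observations of x
        x, d_eta = bernoulli_denoiser(s, tau2, p)
        onsager = (M / n) * np.mean(d_eta)     # Onsager correction coefficient
        r = y - A @ x + onsager * r
    return x
```

The output is a vector of posterior-mean estimates in $[0,1]$. A binary estimate can be obtained by thresholding at $1/2$.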

3.2. End-to-End Performance in Grant-Based Random Access

- Phase 1: Each active user transmits the first $J$ bits of its message over $n$ channel uses. To that end, we use a sensing matrix $A \in \{0,1\}^{n\times M}$ drawn from the LDPC ensemble, with $M = 2^J$. Each active user chooses the column of $A$ indexed by the first $J$ bits of its message, scales it by a power-normalization constant, and transmits it over the channel. Since there are $k$ active users, the channel output after $n$ uses is a noisy version of $A\mathbf{x}$ (up to this scaling), where $x_j$ is the number of active users whose $J$-bit prefix corresponds to column $j$. The vector $\mathbf{x}$ thus has entries in $\mathbb{Z}_{\ge 0}$ (all non-negative integers) and satisfies $\sum_{j=1}^{M} x_j = k$. If all $k$ active users chose messages that begin with different strings of $J$ bits, the vector $\mathbf{x}$ is further in $\{0,1\}^M$. For our choices of $J$ and $k$ described below, typically almost all entries of $\mathbf{x}$ will be binary. The basestation (which is now the receiver) applies Algorithm 1 to estimate $\mathbf{x}$. In the end, we compute a score for every coordinate $j \in [M]$ and output a list consisting of the $k$ coordinates with the highest scores.

- Phase 2: The basestation applies a set-partitioning scheme for collision-free feedback, as described in [71], to broadcast to the users the list of $k$ $J$-bit prefixes it decoded in Phase 1. Naively, this would require broadcasting a message of $kJ$ bits. However, as shown in [71], a more intelligent scheme needs substantially fewer bits, both information-theoretically and with practical encoding schemes. Each active user decodes the message transmitted by the basestation and finds the location of the $J$-bit prefix of its message within the list of $k$ prefixes that was transmitted.

- Phase 3: The remaining channel uses are split into $k$ slots of equal length. Each active user transmits the remaining bits of its message during the slot whose index it decoded in Phase 2. To this end, off-the-shelf point-to-point codes are used. Active users that did not find their $J$-bit prefix in the list of Phase 2 do not transmit anything in Phase 3.

An active user's message may be decoded in error due to one of the following events:

- (i) Another active user chose a message with the same $J$-bit prefix, causing a collision in Phase 1.

- (ii) The $J$-bit prefix of the user’s message did not enter the list produced by the basestation in Phase 2.

- (iii) The user failed to decode the message sent from the basestation in Phase 2.

- (iv) There was a decoding error in the point-to-point transmission of that user in Phase 3.
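The sketch below quantifies the Phase 1 vector $\mathbf{x}$ and the collision event (i). It assumes messages, and hence $J$-bit prefixes, are drawn independently and uniformly at random. The function names are hypothetical. `prefix_counts` builds $\mathbf{x}$ as a histogram over the $M = 2^J$ prefixes. The other two functions give the birthday-problem probabilities that all prefixes are distinct, so that $\mathbf{x}$ is binary, and that a given user collides with someone else.

```python
import math

import numpy as np


def prefix_counts(prefixes, J):
    """x_j = number of active users whose J-bit prefix equals j, for j = 0, ..., 2^J - 1."""
    return np.bincount(np.asarray(prefixes), minlength=2 ** J)


def prob_no_collision(k, M):
    """Probability that k users with uniform i.i.d. prefixes in [M] all pick distinct ones."""
    return math.prod((M - i) / M for i in range(k))


def prob_user_collides(k, M):
    """Probability that a given user shares its prefix with at least one of the other k - 1 users."""
    return 1.0 - (1.0 - 1.0 / M) ** (k - 1)
```

For $k \ll \sqrt{M}$, the per-user collision probability is approximately $(k-1)/M$. This is consistent with the remark that almost all entries of $\mathbf{x}$ are binary.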

4. Conclusions and Additional Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Omitted Proofs

Appendix A.1. Proof of Lemma 1

Appendix A.2. Proof of Lemma 2

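- Distance and neighbors on the hypercube: Denote by $\mathcal{H} = \{0,1\}^M$ the $M$-dimensional hypercube. $\mathcal{H}$ has a natural graph structure: two vertices $\mathbf{x}, \mathbf{x}' \in \mathcal{H}$ are neighbors, denoted $\mathbf{x} \sim \mathbf{x}'$, iff they differ in exactly one coordinate. Denote by $d_H$ the Hamming distance:
$$d_H(\mathbf{x}, \mathbf{x}') = \left|\{ i \in [M] : x_i \neq x'_i \}\right|.$$
Of course, the Hamming distance coincides with the shortest-path distance with respect to the graph structure on $\mathcal{H}$.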

- Coupling: Let $X$ and $\tilde{X}$ be two random variables taking values in $\{0,1\}^M$. Denote by $\mu$ and $\tilde{\mu}$ the laws of $X$ and $\tilde{X}$, respectively. A coupling between $\mu$ and $\tilde{\mu}$ is a probability distribution on $\{0,1\}^M \times \{0,1\}^M$ whose $X$-marginal is $\mu$ and whose $\tilde{X}$-marginal is $\tilde{\mu}$. In other words, a coupling is an embedding of two random variables into a joint probability space, defined by a joint law.
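The claim that Hamming distance equals shortest-path distance on the hypercube graph can be checked by brute force for small $M$. The sketch below runs breadth-first search over $\{0,1\}^M$, with neighbors differing in exactly one coordinate, and compares the result with the Hamming distance. The helper names are hypothetical.

```python
from collections import deque
from itertools import product


def hamming(x, y):
    """Number of coordinates in which x and y differ."""
    return sum(a != b for a, b in zip(x, y))


def neighbors(x):
    """All vertices of {0,1}^M that differ from x in exactly one coordinate."""
    for i in range(len(x)):
        yield x[:i] + (1 - x[i],) + x[i + 1:]


def bfs_distances(source):
    """Shortest-path distances from source to every vertex of the hypercube graph."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        v = queue.popleft()
        for w in neighbors(v):
            if w not in dist:
                dist[w] = dist[v] + 1
                queue.append(w)
    return dist


# Check the claim on the 4-dimensional hypercube from every source vertex.
for src in product((0, 1), repeat=4):
    d = bfs_distances(src)
    assert all(d[v] == hamming(src, v) for v in product((0, 1), repeat=4))
```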

Appendix B. Approximate Message Passing (AMP)

References

- Polyanskiy, Y. A perspective on massive random-access. In Proceedings of the International Symposium on Information Theory (ISIT), Aachen, Germany, 25–30 June 2017; pp. 2523–2527.

- Vem, A.; Narayanan, K.R.; Chamberland, J.; Cheng, J. A User-Independent Successive Interference Cancellation Based Coding Scheme for the Unsourced Random Access Gaussian Channel. IEEE Trans. Commun. 2019, 67, 8258–8272.

- Ordentlich, O.; Polyanskiy, Y. Low complexity schemes for the random access Gaussian channel. In Proceedings of the International Symposium on Information Theory (ISIT), Aachen, Germany, 25–30 June 2017; pp. 2528–2532.

- Marshakov, E.; Balitskiy, G.; Andreev, K.; Frolov, A. A polar code based unsourced random access for the Gaussian MAC. In Proceedings of the Vehicular Technology Conference, Honolulu, HI, USA, 22–25 September 2019; pp. 1–5.

- Calderbank, R.; Thompson, A. CHIRRUP: A practical algorithm for unsourced multiple access. Inf. Inference J. IMA 2020, 9, 875–897.

- Chen, Z.; Sohrabi, F.; Liu, Y.F.; Yu, W. Covariance Based Joint Activity and Data Detection for Massive Random Access with Massive MIMO. In Proceedings of the ICC 2019—2019 IEEE International Conference on Communications (ICC), Tokyo, Japan, 15–20 July 2019; pp. 1–6.

- Amalladinne, V.K.; Chamberland, J.F.; Narayanan, K.R. A Coded Compressed Sensing Scheme for Unsourced Multiple Access. IEEE Trans. Inf. Theory 2020, 66, 6509–6533.

- Glebov, A.; Matveev, N.; Andreev, K.; Frolov, A.; Turlikov, A. Achievability Bounds for T-Fold Irregular Repetition Slotted ALOHA Scheme in the Gaussian MAC. In Proceedings of the 2019 IEEE Wireless Communications and Networking Conference (WCNC), Marrakech, Morocco, 15–18 April 2019; pp. 1–6.

- Fengler, A.; Jung, P.; Caire, G. SPARCs and AMP for unsourced random access. In Proceedings of the International Symposium on Information Theory (ISIT), Paris, France, 7–12 July 2019; pp. 2843–2847.

- Kowshik, S.S.; Andreev, K.; Frolov, A.; Polyanskiy, Y. Energy efficient random access for the quasi-static fading MAC. In Proceedings of the 2019 IEEE International Symposium on Information Theory (ISIT), Paris, France, 7–12 July 2019; pp. 2768–2772.

- Amalladinne, V.K.; Pradhan, A.K.; Rush, C.; Chamberland, J.F.; Narayanan, K.R. On Approximate Message Passing for Unsourced Access with Coded Compressed Sensing. In Proceedings of the International Symposium on Information Theory (ISIT), Los Angeles, CA, USA, 21–26 June 2020; pp. 2995–3000.

- Facenda, G.K.; Silva, D. Efficient scheduling for the massive random access Gaussian channel. IEEE Trans. Wirel. Commun. 2020, 19, 7598–7609.

- Decurninge, A.; Land, I.; Guillaud, M. Tensor-Based Modulation for Unsourced Massive Random Access. arXiv 2020, arXiv:2006.06797.

- Shyianov, V.; Bellili, F.; Mezghani, A.; Hossain, E. Massive Unsourced Random Access Based on Uncoupled Compressive Sensing: Another Blessing of Massive MIMO. arXiv 2020, arXiv:2002.03044.

- Wu, Y.; Gao, X.; Zhou, S.; Yang, W.; Polyanskiy, Y.; Caire, G. Massive Access for Future Wireless Communication Systems. IEEE Wirel. Commun. 2020, 27, 148–156.

- Cormode, G.; Muthukrishnan, S. Combinatorial algorithms for compressed sensing. In Proceedings of the International Colloquium on Structural Information and Communication Complexity, Chester, UK, 2–5 July 2006; pp. 280–294.

- Gilbert, A.C.; Strauss, M.J.; Tropp, J.A.; Vershynin, R. One sketch for all: Fast algorithms for compressed sensing. In Proceedings of the Thirty-Ninth Annual ACM Symposium on Theory of Computing, San Diego, CA, USA, 11–13 June 2007; pp. 237–246.

- Ngo, H.Q.; Porat, E.; Rudra, A. Efficiently decodable compressed sensing by list-recoverable codes and recursion. In Proceedings of the 29th Symposium on Theoretical Aspects of Computer Science (STACS’12), Paris, France, 29 February–3 March 2012; Volume 14, pp. 230–241.

- Amalladinne, V.K.; Vem, A.; Soma, D.K.; Narayanan, K.R.; Chamberland, J. A Coupled Compressive Sensing Scheme for Unsourced Multiple Access. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 6628–6632.

- Amalladinne, V.K.; Pradhan, A.K.; Rush, C.; Chamberland, J.F.; Narayanan, K.R. Unsourced random access with coded compressed sensing: Integrating AMP and belief propagation. arXiv 2020, arXiv:2010.04364.

- Schouhamer Immink, K.; Siegel, P.; Wolf, J. Codes for digital recorders. IEEE Trans. Inf. Theory 1998, 44, 2260–2299.

- Cai, Y.; Haratsch, E.F.; Mutlu, O.; Mai, K. Threshold voltage distribution in MLC NAND flash memory: Characterization, analysis, and modeling. In Proceedings of the 2013 Design, Automation Test in Europe Conference Exhibition (DATE), Grenoble, France, 18–22 March 2013; pp. 1285–1290.

- Mazumdar, A.; Chandar, V.; Wornell, G.W. Update-Efficiency and Local Repairability Limits for Capacity Approaching Codes. IEEE J. Sel. Areas Commun. 2014, 32, 976–988.

- Aldridge, M.; Johnson, O.; Scarlett, J. Group Testing: An Information Theory Perspective. Found. Trends Commun. Inf. Theory 2019, 15, 196–392.

- Foucart, S.; Rauhut, H. A Mathematical Introduction to Compressive Sensing. In Applied and Numerical Harmonic Analysis; Springer: New York, NY, USA, 2013.

- Donoho, D.L.; Tanner, J. Sparse nonnegative solution of underdetermined linear equations by linear programming. Proc. Natl. Acad. Sci. USA 2005, 102, 9446–9451.

- Candes, E.J.; Tao, T. Decoding by linear programming. IEEE Trans. Inf. Theory 2005, 51, 4203–4215.

- Candes, E.J.; Tao, T. Near-optimal signal recovery from random projections: Universal encoding strategies? IEEE Trans. Inf. Theory 2006, 52, 5406–5425.

- Candès, E.J.; Romberg, J.; Tao, T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Trans. Inf. Theory 2006, 52, 489–509.

- Baraniuk, R.G. Compressive sensing [lecture notes]. IEEE Signal Process. Mag. 2007, 24, 118–121.

- Duarte, M.F.; Eldar, Y.C. Structured compressed sensing: From theory to applications. IEEE Trans. Signal Process. 2011, 59, 4053–4085.

- Elad, M. Sparse and Redundant Representations: From Theory to Applications in Signal and Image Processing; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2010.

- Eldar, Y.C.; Kutyniok, G. Compressed Sensing: Theory and Applications; Cambridge University Press: Cambridge, UK, 2012.

- Marques, E.C.; Maciel, N.; Naviner, L.; Cai, H.; Yang, J. A review of sparse recovery algorithms. IEEE Access 2018, 7, 1300–1322.

- Brunel, L.; Boutros, J. Euclidean space lattice decoding for joint detection in CDMA systems. In Proceedings of the ITW’99, Kruger National Park, South Africa, 25 June 1999; p. 129.

- Thrampoulidis, C.; Zadik, I.; Polyanskiy, Y. A simple bound on the BER of the MAP decoder for massive MIMO systems. In Proceedings of the ICASSP 2019—2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 4544–4548.

- Reeves, G.; Xu, J.; Zadik, I. The all-or-nothing phenomenon in sparse linear regression. In Proceedings of the Conference on Learning Theory, Phoenix, AZ, USA, 25–28 June 2019; pp. 2652–2663.

- Jin, Y.; Kim, Y.; Rao, B.D. Limits on Support Recovery of Sparse Signals via Multiple-Access Communication Techniques. IEEE Trans. Inf. Theory 2011, 57, 7877–7892.

- Hastie, T.; Tibshirani, R.; Wainwright, M. Statistical Learning with Sparsity: The Lasso and Generalizations; CRC Press: Boca Raton, FL, USA, 2015.

- Wainwright, M.J. Sharp thresholds for High-Dimensional and noisy sparsity recovery using ℓ1-Constrained Quadratic Programming (Lasso). IEEE Trans. Inf. Theory 2009, 55, 2183–2202.

- Gamarnik, D.; Zadik, I. Sparse high-dimensional linear regression. Algorithmic barriers and a local search algorithm. arXiv 2017, arXiv:1711.04952.

- Bruckstein, A.M.; Elad, M.; Zibulevsky, M. On the uniqueness of nonnegative sparse solutions to underdetermined systems of equations. IEEE Trans. Inf. Theory 2008, 54, 4813–4820.

- Slawski, M.; Hein, M. Sparse recovery by thresholded non-negative least squares. Adv. Neural Inf. Process. Syst. 2011, 24, 1926–1934.

- Meinshausen, N. Sign-constrained least squares estimation for high-dimensional regression. Electron. J. Stat. 2013, 7, 1607–1631.

- Foucart, S.; Koslicki, D. Sparse recovery by means of nonnegative least squares. IEEE Signal Process. Lett. 2014, 21, 498–502.

- Kueng, R.; Jung, P. Robust nonnegative sparse recovery and the nullspace property of 0/1 measurements. IEEE Trans. Inf. Theory 2017, 64, 689–703.

- Gallager, R. Low-density parity-check codes. IRE Trans. Inf. Theory 1962, 8, 21–28.

- Indyk, P.; Ruzic, M. Near-optimal sparse recovery in the l1 norm. In Proceedings of the 2008 49th Annual IEEE Symposium on Foundations of Computer Science, Philadelphia, PA, USA, 26–28 October 2008; pp. 199–207.

- Berinde, R.; Gilbert, A.C.; Indyk, P.; Karloff, H.; Strauss, M.J. Combining geometry and combinatorics: A unified approach to sparse signal recovery. In Proceedings of the 2008 46th Annual Allerton Conference on Communication, Control, and Computing, Monticello, IL, USA, 23–26 September 2008; pp. 798–805.

- Gilbert, A.; Indyk, P. Sparse recovery using sparse matrices. Proc. IEEE 2010, 98, 937–947.

- Jafarpour, S.; Xu, W.; Hassibi, B.; Calderbank, R. Efficient and robust compressed sensing using optimized expander graphs. IEEE Trans. Inf. Theory 2009, 55, 4299–4308.

- Arora, S.; Daskalakis, C.; Steurer, D. Message-passing algorithms and improved LP decoding. IEEE Trans. Inf. Theory 2012, 58, 7260–7271.

- Dimakis, A.G.; Smarandache, R.; Vontobel, P.O. LDPC codes for compressed sensing. IEEE Trans. Inf. Theory 2012, 58, 3093–3114.

- Zhang, F.; Pfister, H.D. Verification decoding of high-rate LDPC codes with applications in compressed sensing. IEEE Trans. Inf. Theory 2012, 58, 5042–5058.

- Khajehnejad, M.A.; Dimakis, A.G.; Xu, W.; Hassibi, B. Sparse recovery of nonnegative signals with minimal expansion. IEEE Trans. Signal Process. 2010, 59, 196–208.

- Richardson, T.; Urbanke, R. Modern Coding Theory; Cambridge University Press: Cambridge, UK, 2008.

- Burshtein, D.; Miller, G. Expander graph arguments for message-passing algorithms. IEEE Trans. Inf. Theory 2001, 47, 782–790.

- Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

- Kudekar, S.; Kumar, S.; Mondelli, M.; Pfister, H.D.; Urbanke, R. Comparing the bit-MAP and block-MAP decoding thresholds of Reed-Muller codes on BMS channels. In Proceedings of the 2016 IEEE International Symposium on Information Theory (ISIT), Barcelona, Spain, 10–15 July 2016; pp. 1755–1759.

- Liu, J.; Cuff, P.; Verdú, S. On α-decodability and α-likelihood decoder. In Proceedings of the 2017 55th Annual Allerton Conference on Communication, Control, and Computing (Allerton), Monticello, IL, USA, 3–6 October 2017; pp. 118–124.

- Levin, D.A.; Peres, Y. Markov Chains and Mixing Times; American Mathematical Soc.: Providence, RI, USA, 2017; Volume 107.

- Neal, R.M. Monte Carlo Decoding of LDPC Codes. Technical Report. 2001. Available online: https://webcache.googleusercontent.com/search?q=cache:ujdA8n8zmD8J:https://www.cs.toronto.edu/~radford/ftp/mcdecode-talk.ps+&cd=3&hl=en&ct=clnk&gl=hk (accessed on 14 May 2021).

- Mezard, M.; Montanari, A. Information, Physics, and Computation; Oxford University Press: Oxford, UK, 2009.

- Hansen, M.; Hassibi, B.; Dimakis, A.G.; Xu, W. Near-optimal detection in MIMO systems using Gibbs sampling. In Proceedings of the GLOBECOM 2009—2009 IEEE Global Telecommunications Conference, Honolulu, HI, USA, 30 November–4 December 2009; pp. 1–6.

- Hassibi, B.; Dimakis, A.G.; Papailiopoulos, D. MCMC methods for integer least-squares problems. In Proceedings of the 2010 48th Annual Allerton Conference on Communication, Control, and Computing (Allerton), Monticello, IL, USA, 29 September–1 October 2010; pp. 495–501.

- Hassibi, B.; Hansen, M.; Dimakis, A.G.; Alshamary, H.A.J.; Xu, W. Optimized Markov chain Monte Carlo for signal detection in MIMO systems: An analysis of the stationary distribution and mixing time. IEEE Trans. Signal Process. 2014, 62, 4436–4450.

- Bhatt, A.; Huang, J.; Kim, Y.; Ryu, J.J.; Sen, P. Monte Carlo Methods for Randomized Likelihood Decoding. In Proceedings of the 2018 56th Annual Allerton Conference on Communication, Control, and Computing (Allerton), Monticello, IL, USA, 2–5 October 2018; pp. 204–211.

- Doucet, A.; Wang, X. Monte Carlo methods for signal processing: A review in the statistical signal processing context. IEEE Signal Process. Mag. 2005, 22, 152–170.

- Lucka, F. Fast Markov chain Monte Carlo sampling for sparse Bayesian inference in high-dimensional inverse problems using L1-type priors. Inverse Probl. 2012, 28, 125012.

- Fengler, A.; Jung, P.; Caire, G. SPARCs for unsourced random access. arXiv 2019, arXiv:1901.06234.

- Kang, J.; Yu, W. Minimum Feedback for Collision-Free Scheduling in Massive Random Access. In Proceedings of the 2020 IEEE International Symposium on Information Theory (ISIT), Los Angeles, CA, USA, 21–26 June 2020; pp. 2989–2994.

- Polyanskiy, Y.; Poor, H.V.; Verdú, S. Channel coding rate in the finite blocklength regime. IEEE Trans. Inf. Theory 2010, 56, 2307–2359.

- Donoho, D.L.; Maleki, A.; Montanari, A. Message-passing algorithms for compressed sensing. Proc. Natl. Acad. Sci. USA 2009, 106, 18914–18919.

- Donoho, D.L.; Johnstone, I.; Montanari, A. Accurate prediction of phase transitions in compressed sensing via a connection to minimax denoising. IEEE Trans. Inf. Theory 2013, 59, 3396–3433.

- Bayati, M.; Montanari, A. The dynamics of message passing on dense graphs, with applications to compressed sensing. IEEE Trans. Inf. Theory 2011, 57, 764–785.

- Krzakala, F.; Mézard, M.; Sausset, F.; Sun, Y.; Zdeborová, L. Statistical-physics-based reconstruction in compressed sensing. Phys. Rev. X 2012, 2, 021005.

- Rangan, S. Generalized approximate message passing for estimation with random linear mixing. In Proceedings of the 2011 IEEE International Symposium on Information Theory Proceedings, St. Petersburg, Russia, 31 July–5 August 2011; pp. 2168–2172.

- Donoho, D.L.; Javanmard, A.; Montanari, A. Information-theoretically optimal compressed sensing via spatial coupling and approximate message passing. IEEE Trans. Inf. Theory 2013, 59, 7434–7464.

- Metzler, C.A.; Maleki, A.; Baraniuk, R.G. From denoising to compressed sensing. IEEE Trans. Inf. Theory 2016, 62, 5117–5144.

- Romanov, E.; Gavish, M. Near-optimal matrix recovery from random linear measurements. Proc. Natl. Acad. Sci. USA 2018, 115, 7200–7205.

- Schniter, P.; Rangan, S. Compressive phase retrieval via generalized approximate message passing. IEEE Trans. Signal Process. 2014, 63, 1043–1055.

- Parker, J.T.; Schniter, P.; Cevher, V. Bilinear generalized approximate message passing—Part I: Derivation. IEEE Trans. Signal Process. 2014, 62, 5839–5853.

- Rush, C.; Greig, A.; Venkataramanan, R. Capacity-achieving sparse superposition codes via approximate message passing decoding. IEEE Trans. Inf. Theory 2017, 63, 1476–1500.

- Berthier, R.; Montanari, A.; Nguyen, P.M. State evolution for approximate message passing with non-separable functions. Inf. Inference J. IMA 2020, 9, 33–79.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Romanov, E.; Ordentlich, O. On Compressed Sensing of Binary Signals for the Unsourced Random Access Channel. Entropy 2021, 23, 605. https://doi.org/10.3390/e23050605
