Abstract

When confronted with massive data streams, summarizing data with dimension reduction methods such as PCA raises theoretical and algorithmic pitfalls. A principal curve acts as a nonlinear generalization of PCA, and the present paper proposes a novel algorithm to automatically and sequentially learn principal curves from data streams. We show that our procedure is supported by regret bounds with optimal sublinear remainder terms. A greedy local search implementation (called slpc, for sequential learning principal curves) that incorporates both sleeping experts and multi-armed bandit ingredients is presented, along with its regret computation and performance on synthetic and real-life data.

1. Introduction

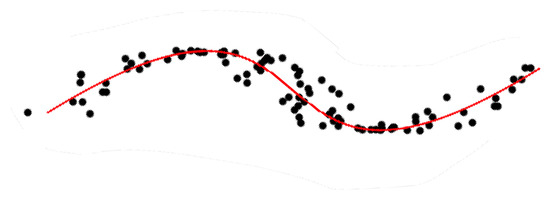

Numerous methods have been proposed in the statistics and machine learning literature to sum up information and represent data by condensed and simpler-to-understand quantities. Among those methods, principal component analysis (PCA) aims at identifying the maximal variance axes of data. This serves as a way to represent data in a more compact fashion and hopefully reveal as well as possible their variability. PCA was introduced by [1,2] and further developed by [3]. This is one of the most widely used procedures in multivariate exploratory analysis targeting dimension reduction or feature extraction. Nonetheless, PCA is a linear procedure and the need for more sophisticated nonlinear techniques has led to the notion of principal curve. Principal curves may be seen as a nonlinear generalization of the first principal component. The goal is to obtain a curve which passes “in the middle” of data, as illustrated by Figure 1. This notion of skeletonization of data clouds has been at the heart of numerous applications in many different domains, such as physics [4,5], character and speech recognition [6,7], mapping and geology [5,8,9], to name but a few.

Figure 1.

A principal curve.

1.1. Earlier Works on Principal Curves

The original definition of principal curve dates back to [10]. A principal curve is a smooth () parameterized curve in which does not intersect itself, has finite length inside any bounded subset of and is self-consistent. This last requirement means that , where is a random vector and the so-called projection index is the largest real number s minimizing the squared Euclidean distance between and x, defined by

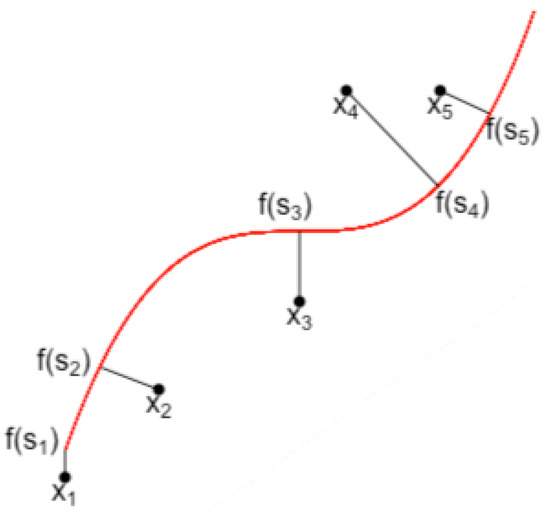

Self-consistency means that each point of is the average (under the distribution of X) of all data points projected on , as illustrated by Figure 2.
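As an illustration of the projection index for the polygonal lines used later in the paper, the following minimal Python sketch computes the largest arc-length parameter minimizing the squared Euclidean distance between a point and a polygonal line. The function names, the arc-length parameterization and the tie tolerance are assumptions made for this example; they are not part of the original definition.

```python
import math

def project_on_segment(x, a, b):
    """Return (u, d2): u in [0, 1] locates the closest point a + u*(b - a), d2 is the squared distance."""
    ab = [bi - ai for ai, bi in zip(a, b)]
    ax = [xi - ai for ai, xi in zip(a, x)]
    denom = sum(c * c for c in ab)
    # A degenerate segment (a == b) is treated as a single point.
    u = 0.0 if denom == 0 else max(0.0, min(1.0, sum(p * q for p, q in zip(ax, ab)) / denom))
    closest = [ai + u * c for ai, c in zip(a, ab)]
    return u, sum((xi - ci) ** 2 for xi, ci in zip(x, closest))

def projection_index(x, vertices, tol=1e-12):
    """Largest arc-length parameter s minimizing the squared distance from x to the polygonal line."""
    best_s, best_d2, offset = 0.0, math.inf, 0.0
    for a, b in zip(vertices, vertices[1:]):
        seg_len = math.dist(a, b)
        u, d2 = project_on_segment(x, a, b)
        s = offset + u * seg_len
        # Strict improvement, or a tie (up to tol) with a larger parameter s.
        if d2 < best_d2 - tol or (abs(d2 - best_d2) <= tol and s > best_s):
            best_s, best_d2 = s, d2
        offset += seg_len
    return best_s, best_d2
```

For the line through (0, 0), (1, 0), (1, 1), the point (0.5, 0.5) is equidistant from both segments, and the sketch returns the larger parameter, in line with the "largest real number s" convention above.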

Figure 2.

A principal curve and projections of data onto it.

However, an unfortunate consequence of this definition is that existence is not guaranteed in general for a particular distribution, let alone for an online sequence for which no probabilistic assumption is made. In order to handle complex data structures, Ref. [11] proposed principal curves (PCOP) of principal oriented points (POPs), which are defined as the fixed points of an expectation function of points projected onto a hyperplane minimising the total variance. To obtain POPs, a cluster analysis is performed on the hyperplane and only data in the local cluster are considered. Ref. [12] introduced the local principal curve (LPC), whose concept is similar to that of [11] but which accelerates the computation of POPs by calculating local centers of mass instead of performing cluster analysis, and local principal components instead of principal directions. Later, Ref. [13] also considered LPC in data compression and regression to reduce the dimension of the predictor space to a low-dimensional manifold. Ref. [14] extended the idea of localization to independent component analysis (ICA) by proposing a local-to-global nonlinear ICA framework for visual and auditory signals. Ref. [15] considered principal curves from a different perspective: as the ridge of a smooth probability density function (PDF) generating the dataset, where the ridge is the collection of all points at which the local gradient of the PDF is an eigenvector of the local Hessian and the eigenvalues corresponding to the remaining eigenvectors are negative. To estimate principal curves based on this definition, the subspace constrained mean shift (SCMS) algorithm was proposed. All the local methods above require strong assumptions on the PDF, such as twice continuous differentiability, which may be hard to satisfy in the setting of online sequential data. Ref. [16] proposed a new concept of principal curves which ensures their existence for a large class of distributions. Principal curves are defined as the curves minimizing the expected squared distance over a class of curves whose length is smaller than ; namely,

where

If , always exists but may not be unique. In practical situations where only i.i.d. copies of X are observed, the method of [16] considers classes of all polygonal lines with k segments and length not exceeding L, and chooses an estimator of as the one within , which minimizes the empirical counterpart

of . It is proved in [17] that if X is almost surely bounded and , then

As the task of finding a polygonal line with k segments and length of at most L that minimizes is computationally costly, Ref. [17] proposed a polygonal line algorithm. This iterative algorithm proceeds by fitting a polygonal line with k segments and considerably speeds up the exploration part by resorting to gradient descent. The two steps (projection and optimization) are similar to what is done by the k-means algorithm. However, the polygonal line algorithm is not supported by theoretical bounds and leads to variable performance depending on the distribution of the observations.

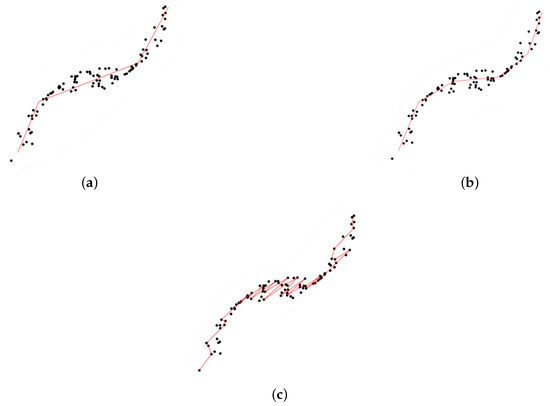

As the number of segments, k, plays a crucial role (too small a k leads to a poor summary of data, whereas too large a k yields overfitting; see Figure 3), Ref. [18] aimed to fill the gap by selecting an optimal k from both theoretical and practical perspectives.

Figure 3.

Principal curves with different numbers (k) of segments. (a) A too small k. (b) Right k. (c) A too large k.

Their approach relies strongly on the theory of model selection by penalization introduced by [19] and further developed by [20]. By considering countable classes of polygonal lines with k segments and total length , and whose vertices are on a lattice, the optimal is obtained as the minimizer of the criterion

where

is a penalty function where stands for the diameter of observations and denotes the weight attached to class ; and it has constants depending on , maximum length L and a certain number of dimensions of observations. Ref. [18] then proved that

where is a numerical constant. The expected loss of the final polygonal line is close to the minimal loss achievable over up to a remainder term decaying as .

1.2. Motivation

The big data paradigm, in which collecting, storing and analyzing massive amounts of large and complex data becomes the new standard, commands one to revisit some of the classical statistical and machine learning techniques. The tremendous improvements of data acquisition infrastructures generate new continuous streams of data, rather than batch datasets. This has drawn great interest to sequential learning. Extending the notion of principal curves to the sequential setting opens up immediate practical application possibilities. As an example, path planning for passengers’ locations can help taxi companies to better optimize their fleet. Online algorithms that could yield instantaneous path summarization would be adapted to the sequential nature of geolocalized data. Existing theoretical works and practical implementations of principal curves are designed for the batch setting [7,16,17,18,21] and their adaptation to the sequential setting is not a smooth process. As an example, consider the algorithm in [18]. It is assumed that vertices of principal curves are located on a lattice, and its computational complexity is of order where n is the number of observations, N the number of points on the lattice and p the maximum number of vertices. When p is large, running this algorithm at each epoch yields a monumental computational cost. In general, if data are not identically distributed or are even adversarial, algorithms that originally worked well in the batch setting may not be ideal when cast onto the online setting (see [22], Chapter 4). To the best of our knowledge, little effort has been put so far into extending principal curves algorithms to the sequential context.

Ref. [23] provided an incremental version of the SCMS algorithm [15] which is based on a definition of a principal curve as the ridge of a smooth probability density function generating observations. They applied the SCMS algorithm to the input points that are associated with the output points which are close to the new incoming sample and left the remaining outputs unchanged. Hence, this algorithm can be used to deal with sequential data. As presented in the next section, our algorithm for sequentially updating principal curve vertices that are close to new data is similar in spirit to that of incremental SCMS. However, a difference is that our algorithm outputs polygonal lines. In addition, the computational complexity of our method is , and incremental SCMS has complexity, where n is the number of observations. Ref. [24] considered sequential principal curves analysis in a fairly different setting in which the goal was to derive in an adaptive fashion a set of nonlinear sensors by using a set of preliminary principal curves. Unfolding sequentially principal curves and a sequential path for Jacobian integration were considered. The “sequential” in this setting represented the generalization of principal curves to principal surfaces or even a principal manifold of higher dimensions. This way of sequentially exploiting principal curves was first proposed by [11] and later extended by [14,25,26] to give curvilinear representations using sequences of local-to-global curves. In addition, Refs. [15,27,28] presented, respectively, principal polynomial and non-parametric regressions to capture the nonlinear nature of data. However, these methods were not originally designed for treating sequential data. The present paper aims at filling this gap: our goal is to propose an online perspective to principal curves by automatically and sequentially learning the best principal curve summarizing a data stream. Sequential learning takes advantage of the latest collected (set of) observations and therefore suffers a much smaller computational cost.

Sequential learning operates as follows: a blackbox reveals at each time t some deterministic value , and a forecaster attempts to predict sequentially the next value based on past observations (and possibly other available information). The performance of the forecaster is no longer evaluated by its generalization error (as in the batch setting) but rather by a regret bound which quantifies the cumulative loss of a forecaster in the first T rounds with respect to some reference minimal loss. In sequential learning, the speed of algorithms may be favored over statistical precision. An immediate use of the aforementioned techniques [17,18,21] at each time round t (treating data collected until t as a batch dataset) would result in a monumental algorithmic cost. Rather, we propose a novel algorithm which adapts to the sequential nature of data, i.e., which takes advantage of previous computations.

The contributions of the present paper are twofold. We first propose a sequential principal curve algorithm, for which we derive regret bounds. We then present an implementation, illustrated on a toy dataset and a real-life dataset (seismic data). The sketch of our algorithm’s procedure is as follows. At each time round t, the number of segments of is chosen automatically and the number of segments in the next round is obtained by only using information about and a small number of past observations. The core of our procedure relies on computing a quantity which is linked to the mode of the so-called Gibbs quasi-posterior and is inspired by quasi-Bayesian learning. The use of quasi-Bayesian estimators is especially advocated by the PAC-Bayesian theory, which originated in the machine learning community in the late 1990s, in the seminal works of [29] and McAllester [30,31]. The PAC-Bayesian theory has been successfully adapted to sequential learning problems; see, for example, Ref. [32] for online clustering. We refer to [33,34] for a recent overview of the field.

The paper is organized as follows. Section 2 presents our notation and our online principal curve algorithm, for which we provide regret bounds with sublinear remainder terms in Section 3. A practical implementation is proposed in Section 4, and we illustrate its performance on synthetic and real-life datasets in Section 5. Proofs of all original results claimed in the paper are collected in Section 6.

2. Notation

A parameterized curve in is a continuous function where is a closed interval of the real line. The length of is given by

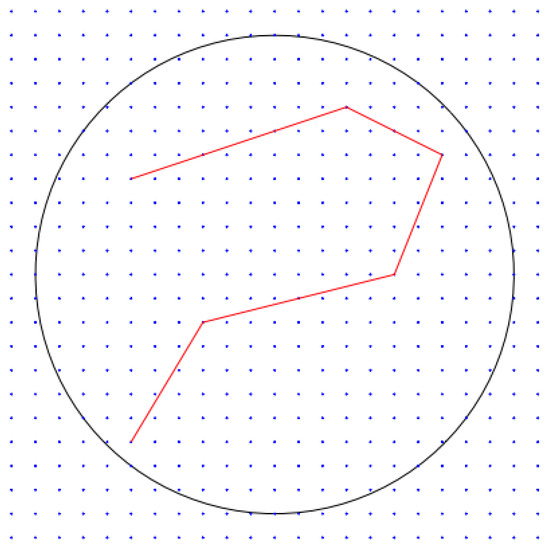

Let be a sequence of data, where stands for the -ball centered in with radius . Let be a grid over , i.e., where is a lattice in with spacing . Let and define for each the collection of polygonal lines with k segments whose vertices are in and such that . Denote by all polygonal lines with a number of segments , whose vertices are in and whose length is at most L. Finally, let denote the number of segments of . This strategy is illustrated by Figure 4.

Figure 4.

An example of a lattice in with (spacing between blue points) and (black circle). The red polygonal line is composed of vertices in .
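To make the discretization concrete, the sketch below builds a lattice restricted to a ball, snaps arbitrary points to the lattice, and computes the length of a polygonal line as the sum of its segment lengths. It assumes a Euclidean ball centered at the origin and a lattice made of integer multiples of the spacing; the function names and the argument names `radius` and `spacing` are hypothetical and chosen for this example only.

```python
import itertools
import math

def polyline_length(vertices):
    """Length of a polygonal line: sum of the Euclidean lengths of its segments."""
    return sum(math.dist(a, b) for a, b in zip(vertices, vertices[1:]))

def lattice_in_ball(radius, spacing, dim):
    """Lattice points (integer multiples of `spacing`) lying in the ball of given radius centered at 0."""
    m = int(math.floor(radius / spacing))
    axis = [i * spacing for i in range(-m, m + 1)]
    return [p for p in itertools.product(axis, repeat=dim)
            if math.sqrt(sum(c * c for c in p)) <= radius + 1e-12]

def snap_to_lattice(point, spacing):
    """Nearest lattice point, obtained by rounding each coordinate to a multiple of `spacing`."""
    return tuple(round(c / spacing) * spacing for c in point)
```

A candidate polygonal line is then admissible when all its vertices belong to the restricted lattice and `polyline_length` does not exceed the length budget L.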

Our goal is to learn a time-dependent polygonal line which passes through the “middle” of data and gives a summary of all available observations (denoted by hereafter) before time t. Our output at time t is a polygonal line depending on past information and past predictions . When is revealed, the instantaneous loss at time t is computed as

In what follows, we investigate regret bounds for the cumulative loss based on (2). Given a measurable space (embedded with its Borel -algebra), we let denote the set of probability distributions on , and for some reference measure , we let be the set of probability distributions absolutely continuous with respect to .

For any , let denote a probability distribution on . We define the prior on as

where and .

We adopt a quasi-Bayesian-flavored procedure: consider the Gibbs quasi-posterior (note that this is not a proper posterior in all generality, hence the term “quasi”):

where

as advocated by [32,35] who then considered realizations from this quasi-posterior. In the present paper, we will rather focus on a quantity linked to the mode of this quasi-posterior. Indeed, the mode of the quasi-posterior is

where (i) is a cumulative loss term, (ii) is a term controlling the variance of the prediction to past predictions , and (iii) can be regarded as a penalty function on the complexity of if is well chosen. This mode hence has a similar flavor to follow the best expert or follow the perturbed leader in the setting of prediction with experts (see [22,36], Chapters 3 and 4) if we consider each as an expert which always delivers constant advice. These remarks yield Algorithm 1.

| Algorithm 1 Sequentially learning principal curves. |

|
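The flavor of the mode computation can be sketched over a finite list of candidate polygonal lines. The Python code below is a simplified illustration and not the authors' procedure: it assumes a quasi-posterior proportional to the prior times the exponential of minus the inverse temperature times the cumulative loss, and a perturbed-leader rule that minimizes the cumulative loss plus the penalty minus an Exp(1) perturbation, both scaled by the inverse temperature. The variance term (ii) is omitted, and the names `gibbs_weights`, `perturbed_leader` and `lam` are hypothetical.

```python
import math
import random

def gibbs_weights(cum_losses, log_prior, lam):
    """Normalized weights proportional to prior(f) * exp(-lam * cumulative_loss(f))."""
    logs = [lp - lam * s for s, lp in zip(cum_losses, log_prior)]
    m = max(logs)  # subtract the maximum for numerical stability
    unnorm = [math.exp(v - m) for v in logs]
    total = sum(unnorm)
    return [u / total for u in unnorm]

def perturbed_leader(cum_losses, penalties, lam, rng):
    """Index minimizing cum_loss + (penalty - z) / lam, with one z ~ Exp(1) drawn per candidate."""
    scores = []
    for loss, pen in zip(cum_losses, penalties):
        z = rng.expovariate(1.0)
        scores.append(loss + (pen - z) / lam)
    return min(range(len(scores)), key=scores.__getitem__)
```

With a very large `lam`, the penalty and the perturbation become negligible and the rule reduces to following the candidate with the smallest cumulative loss; with a small `lam`, the random perturbation dominates and the choice is close to uniform.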

3. Regret Bounds for Sequential Learning of Principal Curves

We now present our main theoretical results.

Theorem 1.

For any sequence , and any penalty function , let . Let ; then the procedure described in Algorithm 1 satisfies

where and

The expectation of the cumulative loss of polygonal lines is upper-bounded by the smallest penalized cumulative loss over all up to a multiplicative term , which can be made arbitrarily close to 1 by choosing a small enough . However, this will lead to both a large in and a large . In addition, another important issue is the choice of the penalty function h. For each , should be large enough to ensure a small , but not too large to avoid overpenalization and a larger value for . We therefore set

for each with k segments (where denotes the cardinality of a set M) since it leads to

The penalty function satisfies (3), where are constants depending on R, d, , p (this is proven in Lemma 3, in Section 6). We therefore obtain the following corollary.

Corollary 1.

Under the assumptions of Theorem 1, let

Then

where .

Proof.

Note that

and we conclude by setting

□

Sadly, Corollary 1 is not of much practical use since the optimal value for depends on which is obviously unknown, even more so at time . We therefore provide an adaptive refinement of Algorithm 1 in the following Algorithm 2.

| Algorithm 2 Sequentially and adaptively learning principal curves. |

|

Theorem 2.

For any sequence , let where , , are constants depending on . Let and

where and . Then the procedure described in Algorithm 2 satisfies

The message of this regret bound is that the expected cumulative loss of polygonal lines is upper-bounded by the minimal cumulative loss over all , up to an additive term which is sublinear in T. The actual magnitude of this remainder term is . When L is fixed, the number k of segments is a measure of complexity of the retained polygonal line. This bound therefore yields the same magnitude as (1), which is the most refined bound in the literature so far ([18] where the optimal values for k and L were obtained in a model selection fashion).

4. Implementation

The argument of the infimum in Algorithm 2 is taken over which has a cardinality of order , making any greedy search largely time-consuming. We instead turn to the following strategy: given a polygonal line with segments, we consider, with a certain proportion, the availability of within a neighborhood (see the formal definition below) of . This consideration is well suited for the principal curves setting, since if observation is close to , one can expect that the polygonal line which fits observations well lies in a neighborhood of . In addition, if each polygonal line is regarded as an action, we no longer assume that all actions are available at all times, and allow the set of available actions to vary at each time. This is a model known as “sleeping experts (or actions)” in prior work [37,38]. In this setting, defining the regret with respect to the best action in the whole set of actions in hindsight remains difficult, since that action might sometimes be unavailable. Hence, it is natural to define the regret with respect to the best ranking of all actions in hindsight according to their losses or rewards, and at each round one chooses among the available actions by selecting the one which ranks the highest. Ref. [38] introduced this notion of regret and studied both the full-information (best action) and partial-information (multi-armed bandit) settings with stochastic and adversarial rewards and adversarial action availability. They pointed out that the EXP4 algorithm [37] attains the optimal regret in the adversarial rewards case but has a runtime exponential in the number of all actions. Ref. [39] considered full and partial information with stochastic action availability and proposed an algorithm that runs in polynomial time. In what follows, we materialize our implementation by resorting to “sleeping experts”, i.e., a special set of available actions that adapts to the setting of principal curves.

Let denote an ordering of actions, and a subset of the available actions at round t. We let denote the highest ranked action in . In addition, for any action we define the reward of at round by

It is clear that . The convention from losses to gains is done in order to facilitate the subsequent performance analysis. The reward of an ordering is the cumulative reward of the selected action at each time:

and the reward of the best ordering is (respectively, when is stochastic).
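The ranking-based notion of reward can be illustrated with a short Python sketch. It assumes a finite set of actions, an ordering given as a list from highest to lowest rank, and per-round reward tables; the names `highest_ranked_available` and `ordering_reward` are hypothetical.

```python
def highest_ranked_available(ordering, available):
    """First action of `ordering` (highest rank first) that belongs to the available set."""
    for action in ordering:
        if action in available:
            return action
    raise ValueError("no action is available at this round")

def ordering_reward(ordering, available_sets, rewards):
    """Cumulative reward of an ordering; rewards[t][action] is the reward of `action` at round t."""
    return sum(rewards[t][highest_ranked_available(ordering, avail)]
               for t, avail in enumerate(available_sets))
```

For instance, with ordering `['a', 'b', 'c']`, action `'a'` is played whenever it is available and `'b'` is played only in rounds where `'a'` sleeps. The best ordering in hindsight is the one maximizing `ordering_reward`.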

Our procedure starts with a partition step which aims at identifying the “relevant” neighborhood of an observation with respect to a given polygonal line, and then proceeds with the definition of the neighborhood of an action . We then provide the full implementation and prove a regret bound.

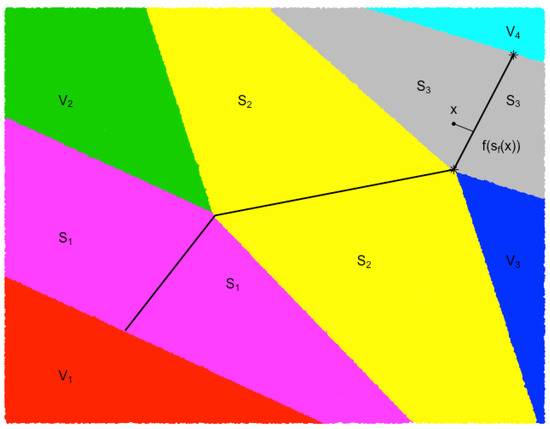

Partition. For any polygonal line with k segments, we denote by its vertices and by the line segments connecting and . In the sequel, we use to represent the polygonal line formed by connecting consecutive vertices in if no confusion arises. Let and be the Voronoi partitions of with respect to , i.e., regions consisting of all points closer to vertex or segment . Figure 5 shows an example of Voronoi partition with respect to with three segments.

Figure 5.

An example of a Voronoi partition.
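The partition step can be sketched as follows: for a point and a polygonal line, the code returns whether the closest element of the line is a vertex or the interior of a segment. This is a minimal illustration with 0-based indices (the text indexes vertices and segments from 1); the function name `voronoi_region` and the tolerance `tol` are assumptions of the example.

```python
import math

def voronoi_region(x, vertices, tol=1e-12):
    """Return ('vertex', j) or ('segment', j): the element of the polygonal line closest to x."""
    best_d2, best_label = math.inf, None
    for j, (a, b) in enumerate(zip(vertices, vertices[1:])):
        ab = [bi - ai for ai, bi in zip(a, b)]
        ax = [xi - ai for ai, xi in zip(a, x)]
        denom = sum(c * c for c in ab)
        u = 0.0 if denom == 0 else sum(p * q for p, q in zip(ax, ab)) / denom
        if u <= tol:              # projection falls at or before the first endpoint
            label, closest = ("vertex", j), a
        elif u >= 1.0 - tol:      # projection falls at or beyond the second endpoint
            label, closest = ("vertex", j + 1), b
        else:                     # projection falls strictly inside the segment
            label, closest = ("segment", j), [ai + u * c for ai, c in zip(a, ab)]
        d2 = sum((xi - ci) ** 2 for xi, ci in zip(x, closest))
        if d2 < best_d2:
            best_d2, best_label = d2, label
    return best_label
```

Grouping the observations by the label returned here gives the sets of data points attached to each vertex region and each segment region.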

Neighborhood. For any , we define the neighborhood with respect to as the union of all Voronoi partitions whose closure intersects with two vertices connecting the projection of x to . For example, for the point x in Figure 5, its neighborhood is the union of and . In addition, let be the set of observations belonging to and be its average. Let denote the diameter of set . We finally define the local grid of at time t as

We can finally proceed to the definition of the neighborhood of . Assume has vertices , where vertices of belong to while those of and do not. The neighborhood consists of sharing vertices and with , but can be equipped with different vertices in ; i.e.,

where and m is given by

In Algorithm 3, we initialize the principal curve as the first component line segment whose vertices are the two farthest projections of data ( can be set to 20 in practice) on the first component line. The reward of at round t in this setting is therefore . Algorithm 3 has an exploration phase (when ) and an exploitation phase (). In the exploration phase, it is allowed to observe rewards of all actions and to choose an optimal perturbed action from the set of all actions. In the exploitation phase, only rewards of a subset of actions can be accessed and rewards of others are estimated by a constant, and we update our action from the neighborhood of the previous action . This local update (or search) greatly reduces computational complexity since when p is large. In addition, this local search will be enough to account for the case when is located in . The parameter needs to be carefully calibrated since it should not be too large to ensure that the condition is non-empty; otherwise, all rewards are estimated by the same constant and thus lead to the same descending ordering of tuples for both and . Therefore, we may face the risk of having in the neighborhood of even if we are in the exploration phase at time . Conversely, very small could result in large bias for the estimation of . Note that the exploitation phase is close to, yet different from, the label efficient prediction ([40], Remark 1.1) since we allow an action at time t to be different from the previous one. Ref. [41] proposed the geometric resampling method to estimate the conditional probability since this quantity often does not have an explicit form. However, due to the simple exponential distribution of chosen in our case, an explicit form of is straightforward.

| Algorithm 3 A locally greedy algorithm for sequentially learning principal curves. |

|

Theorem 3.

Assume that , and let , , , and

Then the procedure described in Algorithm 3 satisfies the regret bound

The proof of Theorem 3 is presented in Section 6. The regret is upper bounded by a term of order , sublinear in T. The term is the price to pay for the local search (with a proportion ) of polygonal line in the neighborhood of the previous . If , we would have that , and the last two terms in the first inequality of Theorem 3 would vanish; hence, the upper bound reduces to Theorem 2. In addition, our algorithm achieves an order that is smaller (from the perspective of both the number of all actions and the total rounds T) than [39], since at each time the set of available actions for our algorithm is either the whole action set or a neighborhood of the previous action , whereas [39] considers at each time only a partial, independent and stochastic set of available actions generated from a predefined distribution.

5. Numerical Experiments

We illustrate the performance of Algorithm 3 on synthetic and real-life data. Our implementation (hereafter denoted by slpc—Sequential Learning of Principal Curves) is conducted with the R language and thus our most natural competitors are the R package princurve, which is the algorithm from [10], and incremental, which is the algorithm from SCMS [23]. We let , , . The spacing of the lattice is adjusted with respect to data scale.

Synthetic data. We generate a dataset uniformly along the curve , . Table 1 shows the regret (first row) for

- the ground truth (sum of squared distances of all points to the true curve),

- princurve and incremental SCMS (sum of squared distances between observation and fitted princurve on observations ),

- slpc (regret being equal to in both cases).

The mean computation times for different values of the time horizon T are also reported.

Table 1.

The first line is the regret (cumulative loss) on synthetic data (average over 10 trials, with standard deviation in brackets). Second and third lines are the average computation time for two values of the time horizon T. princurve and incremental SCMS are deterministic, hence the zero standard deviation for regret.

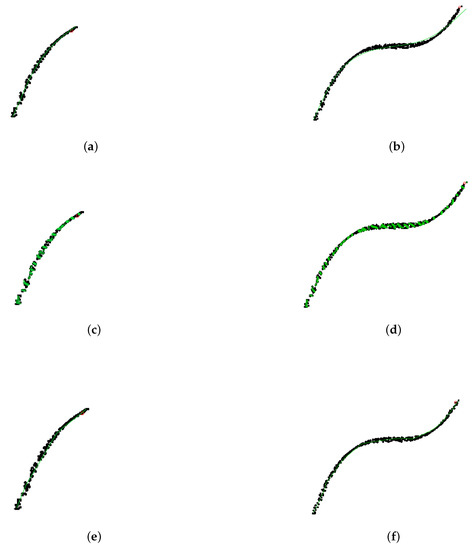

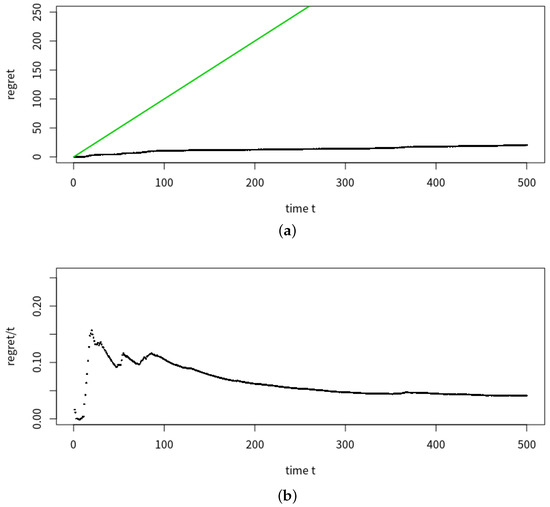

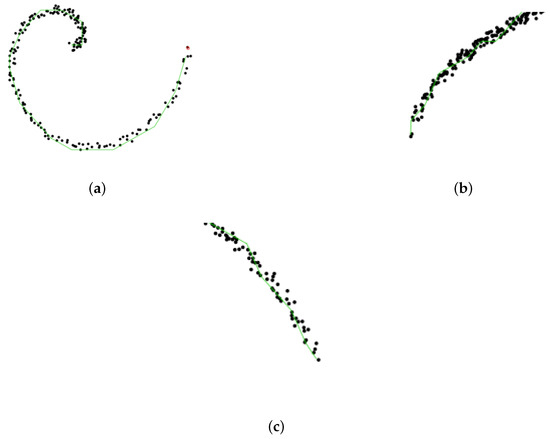

Table 1 demonstrates the advantages of our method slpc, as it achieved the best tradeoff between performance (in terms of regret) and runtime. Although princurve outperformed the other two algorithms in terms of computation time, it yielded the largest regret, since it outputs a curve which does not pass in “the middle of data” but rather bends towards the curvature of the data cloud, as shown in Figure 6 where the predicted principal curves for princurve, incremental SCMS and slpc are presented. incremental SCMS and slpc both yielded satisfactory results, although the mean computation time of slpc was significantly smaller than that of incremental SCMS (the reason being that eigenvectors of the Hessian of the PDF need to be computed in incremental SCMS). Figure 7 shows, respectively, the estimation of the regret of slpc and its per-round value (i.e., the cumulative loss divided by the number of rounds), both with respect to the round t. The jumps in the per-round curve occurred at the beginning, due to the initialization from a first principal component and to the collection of new data. When data accumulate, the vanishing pattern of the per-round curve illustrates that the regret is sublinear in t, which matches our aforementioned theoretical results.

Figure 6.

Synthetic data. Black dots represent data . The red point is the new observation . princurve (solid red) and slpc (solid green). (a) , princurve. (b) , princurve. (c) , incremental SCMS. (d) , incremental SCMS. (e) , slpc. (f) , slpc.

Figure 7.

Mean estimation of regret and per-round regret of slpc with respect to time round t, for the horizon . (a) Mean estimation of the regret of slpc over 20 trials (black line) and a bisection line (green) with respect to time round t. (b) Per-round of estimated regret of slpc with respect to t.
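The per-round quantity plotted in panel (b) is simply the cumulative loss divided by the number of rounds. A minimal Python sketch, assuming a list of instantaneous losses is available (the function name `per_round_values` is hypothetical):

```python
from itertools import accumulate

def per_round_values(instantaneous_losses):
    """Return (cumulative losses, cumulative loss divided by the round index t = 1, 2, ...)."""
    cumulative = list(accumulate(instantaneous_losses))
    per_round = [c / t for t, c in enumerate(cumulative, start=1)]
    return cumulative, per_round
```

A per-round curve that decreases towards zero as t grows is what one expects when the cumulative quantity is sublinear in t.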

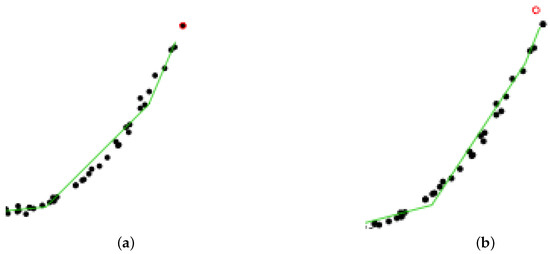

In addition, to better illustrate the way slpc works between two epochs, Figure 8 focuses on the impact of collecting a new data point on the principal curve. We see that only a local vertex is impacted, whereas the rest of the principal curve remains unaltered. This reduction in algorithmic complexity is one of the key assets of slpc.

Figure 8.

Synthetic data. Zooming in: how a new data point impacts the principal curve only locally. (a) At time . (b) And at time .

Synthetic data in high dimension. We also apply our algorithm on a dataset in higher dimension. It is generated uniformly along a parametric curve whose coordinates are

where t takes 100 equidistant values in . To the best of our knowledge, [10,16,18] only tested their algorithm on 2-dimensional data. This example aims at illustrating that our algorithm also works on higher dimensional data. Table 2 shows the regret for the ground truth, princurve and slpc.

Table 2.

Regret (cumulative loss) on synthetic high dimensional data in (average over 10 trials, with standard deviation in brackets). princurve and incremental SCMS are deterministic, hence the zero standard deviation.

In addition, Figure 9 shows the behaviour of slpc (green) on each dimension.

Figure 9.

slpc (green line) on synthetic high dimensional data from different perspectives. Black dots represent recordings ; the red dot is the new recording . (a) slpc, , 1st and 2nd coordinates. (b) slpc, , 3rd and 5th coordinates. (c) slpc, , 4th and 6th coordinates.

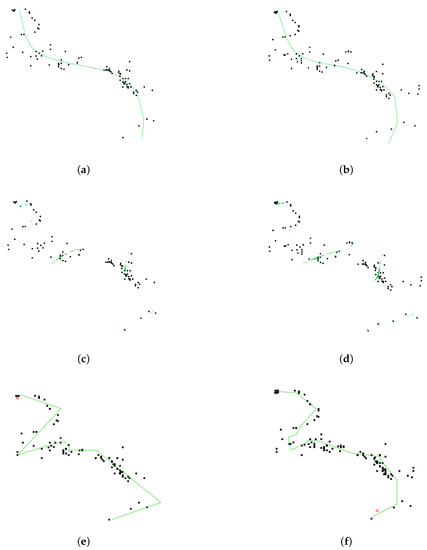

Seismic data. Seismic data spanning long periods of time are essential for a thorough understanding of earthquakes. The “Centennial Earthquake Catalog” [42] aims at providing a realistic picture of the seismicity distribution on Earth. It consists of a global catalog of locations and magnitudes of instrumentally recorded earthquakes from 1900 to 2008. We focus on a particularly representative seismically active zone (a lithospheric border close to Australia) whose longitude is between E to E and latitude between S to N, with seismic recordings. As shown in Figure 10, slpc recovers nicely the tectonic plate boundary, but both princurve and incremental SCMS with well-calibrated bandwidth fail to do so.

Figure 10.

Seismic data. Black dots represent seismic recordings ; the red dot is the new recording . (a) princurve, . (b) princurve, . (c) incremental SCMS, . (d) incremental SCMS, . (e) slpc, . (f) slpc, .

Lastly, since no ground truth is available, we used the R² coefficient to assess the performance (residuals are replaced by the squared distance between data points and their projections onto the principal curve). The average over 10 trials was 0.990.
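One way to compute such a goodness-of-fit coefficient is sketched below in Python. It assumes that the total variation is the sum of squared distances of the data points to their mean, which the text does not state explicitly; the function name `r_squared` is hypothetical.

```python
def r_squared(points, squared_distances):
    """1 - (sum of squared distances to the curve) / (sum of squared distances to the mean)."""
    n, dim = len(points), len(points[0])
    mean = [sum(p[i] for p in points) / n for i in range(dim)]
    total = sum(sum((p[i] - mean[i]) ** 2 for i in range(dim)) for p in points)
    return 1.0 - sum(squared_distances) / total
```

Here `squared_distances` holds, for each data point, the squared distance to its projection onto the fitted principal curve; a value close to 1 indicates that the curve accounts for most of the spread of the data.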

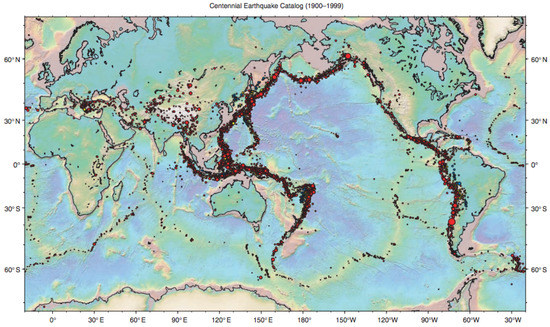

Back to Seismic Data. Figure 11 was taken from the USGS website (https://earthquake.usgs.gov/data/centennial/) and gives the global locations of earthquakes for the period 1900–1999. The seismic data (latitude, longitude, magnitude of earthquakes, etc.) used in the present paper may be downloaded from this website.

Figure 11.

Seismic data from https://earthquake.usgs.gov/data/centennial/.

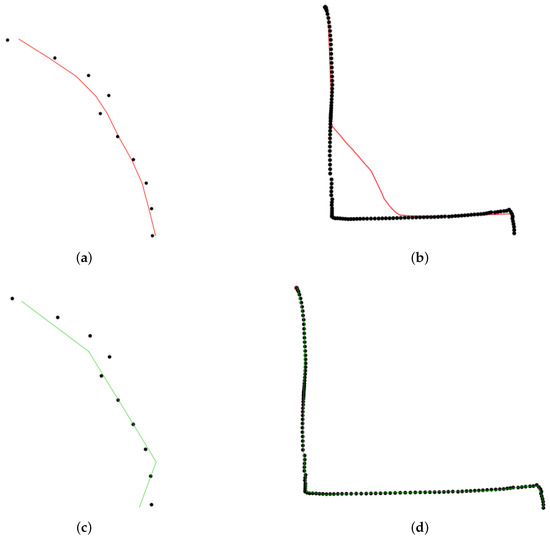

Daily Commute Data. The identification of segments of personal daily commuting trajectories can help taxi or bus companies to optimize their fleets and increase frequencies on segments with high commuting activity. Sequential principal curves appear to be an ideal tool to address this learning problem: we tested our algorithm on trajectory data from the University of Illinois at Chicago (https://www.cs.uic.edu/~boxu/mp2p/gps_data.html). The data were obtained from the GPS reading systems carried by two of the laboratory members during their daily commute for 6 months in the Cook county and the Dupage county of Illinois. Figure 12 presents the learning curves yielded by princurve and slpc on geolocalization data for the first person, on May 30. A particularly remarkable asset of slpc is that abrupt curvature in the data sequence was captured well, whereas princurve does not enjoy the same flexibility. Again, we used the R² coefficient to assess the performance (where residuals are replaced by the squared distances between data points and their projections onto the principal curve). The average over 10 trials was 0.998.

Figure 12.

Daily commute data. Black dots represent collected locations . The red point is the new observation . princurve (solid red) and slpc (solid green). (a) , princurve. (b) , princurve. (c) , slpc. (d) , slpc.

6. Proofs

This section contains the proof of Theorem 2 (note that Theorem 1 is a straightforward consequence, with , ) and the proof of Theorem 3 (which involves intermediary lemmas). Let us first define for each the following forecaster sequence

Note that is an “illegal” forecaster since it peeks into the future. In addition, denote by

the polygonal line in which minimizes the cumulative loss in the first T rounds plus a penalty term. is deterministic, and is a random quantity (since it depends on , drawn from ). If several attain the infimum, we choose as the one having the smallest complexity. We now state the first of three intermediary technical results.

Lemma 1.

For any sequence in ,

Proof.

By (5) and the definition of , for , we have -almost surely that

where by convention. The second and third inequalities are due, respectively, to the definitions of and . Hence

where the second inequality is due to and for since is decreasing in t in Theorem 2. In addition, for , one has

Hence, for any

where . Therefore, we have

We thus obtain

Next, we control the regret of Algorithm 2.

Lemma 2.

Assume that is sampled from the symmetric exponential distribution in , i.e., . Assume that , and define . Then for any sequence , ,

Proof.

Let us denote by

the instantaneous loss suffered by the polygonal line when is obtained. We have

where the inequality is due to the fact that holds uniformly for any and . Finally, summing on t on both sides and using the elementary inequality if concludes the proof. □
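To make the perturbation assumed in Lemma 2 concrete, the sketch below draws a vector with independent symmetric exponential (Laplace) coordinates, each with density proportional to exp(-|z|), and uses it in a perturbed-leader selection step. The selection rule shown here (cumulative loss plus penalty minus perturbation, the last two scaled by 1/η) is an assumed form in the spirit of follow-the-perturbed-leader and is not copied from Algorithm 2; the names `sample_symmetric_exponential` and `perturbed_leader` are hypothetical.

```python
import random


def sample_symmetric_exponential(n, rng):
    """Draw n i.i.d. coordinates with density (1/2) * exp(-|z|) on the real line."""
    # The difference of two independent Exp(1) variables follows Laplace(0, 1).
    return [rng.expovariate(1.0) - rng.expovariate(1.0) for _ in range(n)]


def perturbed_leader(cum_losses, penalties, z, eta):
    """Index minimising loss + (penalty - perturbation) / eta (assumed form)."""
    scores = [
        loss + (h - zi) / eta
        for loss, h, zi in zip(cum_losses, penalties, z)
    ]
    return min(range(len(scores)), key=scores.__getitem__)


# Usage: the perturbation is drawn once, then reused at every round.
rng = random.Random(0)
z = sample_symmetric_exponential(3, rng)
choice = perturbed_leader([3.0, 1.0, 2.0], [0.5, 0.5, 0.5], z, eta=0.1)
print(choice)
```

A small η gives the perturbation more weight and thus more exploration, while a large η makes the rule close to following the penalised leader.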

Lemma 3.

For , we control the cardinality of set as

where denotes the volume of the unit ball in .

Proof.

First, let denote the set of polygonal lines with k segments and whose vertices are in . Notice that is different from and that

Hence

where the second inequality is a consequence of the elementary inequality combined with Lemma 2 in [16]. □
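The volume of the unit ball that enters the bound of Lemma 3 has the closed form π^{d/2} / Γ(d/2 + 1). The sketch below evaluates it and, under the assumption (suggested by the proof, but not spelled out in the surviving text) that vertices are restricted to a regular grid inside a ball, compares a brute-force count of grid points with the volume heuristic. The names `unit_ball_volume` and `lattice_points_in_ball`, and the grid spacing `delta`, are hypothetical.

```python
import math
from itertools import product


def unit_ball_volume(d):
    """Volume of the Euclidean unit ball in dimension d."""
    return math.pi ** (d / 2) / math.gamma(d / 2 + 1)


def lattice_points_in_ball(d, radius, delta):
    """Count points of the grid delta * Z^d inside the closed ball of given radius."""
    m = int(math.floor(radius / delta))
    r2 = (radius / delta) ** 2 + 1e-12  # small slack for floating-point rounding
    return sum(
        1
        for k in product(range(-m, m + 1), repeat=d)
        if sum(c * c for c in k) <= r2
    )


# Usage: the count is close to V_d * (radius / delta)^d when radius >> delta.
d, radius, delta = 2, 10.0, 1.0
print(lattice_points_in_ball(d, radius, delta))
print(unit_ball_volume(d) * (radius / delta) ** d)
```

The number of admissible vertices therefore grows like (radius / delta)^d, which is why the count of polygonal lines with k segments grows exponentially in k.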

We now have all the ingredients to prove Theorem 1 and Theorem 2.

First, combining (6) and (7) yields that

Assume that , and for , then and moreover

where

and the second inequality is obtained with Lemma 1. By setting

we obtain

where . This proves Theorem 1.

Finally, assume that

Since for any , we have

which concludes the proof of Theorem 2.

Lemma 4.

Using Algorithm 3, if , , and for all , where is the cardinality of , then we have

Proof.

First notice that if , and that for

where denotes the complement of set . The first inequality above is due to the assumption that for all , we have . For , the above inequality is trivial since by its definition. Hence, for , one has

Summing on both sides of inequality (8) over t terminates the proof of Lemma 4. □

Lemma 5.

Let . If , then we have

Proof.

By the definition of in Algorithm 3, for any and , we have

where in the second inequality we use that for all and t, and that when . The rest of the proof is similar to those of Lemmas 1 and 2. In fact, if we define by , then one can easily observe the following relation when (similar relation in the case that = 0)

Then applying Lemmas 1 and 2 on this newly defined sequence leads to the result of Lemma 5. □

The proof of the upcoming Lemma 6 requires the following submartingale inequality: let be a sequence of random variables adapted to random events such that for , the following three conditions hold:

Then for any ,

The proof can be found in Chung and Lu [43] (Theorem 7.3).

Lemma 6.

Assume that and , then we have

Proof.

First, we have almost surely that

Denote by . Since

and uniformly for any and t, we have uniformly that , satisfying the first condition.

For the second condition, if , then

Similarly, for , one can have . Moreover, for the third condition, since

then

Setting leads to

Hence the following inequality holds with probability

Finally, noticing that almost surely, we terminate the proof of Lemma 6. □

Proof of Theorem 3.

Assume that , and let

With those values, the assumptions of Lemmas 4, 5 and 6 are satisfied. Combining their results leads to the following

where the second inequality is due to the fact that the cardinality is upper bounded by for . In addition, using the definition of that terminates the proof of Theorem 3. □

Author Contributions

Conceptualization, L.L. and B.G.; Formal analysis, L.L. and B.G.; Methodology, B.G.; Project administration, B.G.; Software, L.L.; Supervision, B.G.; Writing—original draft, L.L. and B.G.; Writing—review and editing, L.L. and B.G. All authors have read and agreed to the published version of the manuscript.

Funding

LL is funded and supported by the Fundamental Research Funds for the Central Universities (Grant No. 30106210158) and National Natural Science Foundation of China (Grant No. 61877023), the Fundamental Research Funds for the Central Universities (CCNU19TD009). BG is supported in part by the U.S. Army Research Laboratory and the U.S. Army Research Office, and by the U.K. Ministry of Defence and the U.K. Engineering and Physical Sciences Research Council (EPSRC) under grant number EP/R013616/1. BG acknowledges partial support from the French National Agency for Research, grants ANR-18-CE40-0016-01 and ANR-18-CE23-0015-02.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Pearson, K. On lines and planes of closest fit to systems of point in space. Philos. Mag. 1901, 2, 559–572.

- Spearman, C. “General Intelligence”, Objectively Determined and Measured. Am. J. Psychol. 1904, 15, 201–292.

- Hotelling, H. Analysis of a complex of statistical variables into principal components. J. Educ. Psychol. 1933, 24, 417–441.

- Friedsam, H.; Oren, W.A. The application of the principal curve analysis technique to smooth beamlines. In Proceedings of the 1st International Workshop on Accelerator Alignment, Stanford, CA, USA, 31 July–2 August 1989.

- Brunsdon, C. Path estimation from GPS tracks. In Proceedings of the 9th International Conference on GeoComputation, Maynooth, Ireland, 3–5 September 2007.

- Reinhard, K.; Niranjan, M. Parametric Subspace Modeling Of Speech Transitions. Speech Commun. 1999, 27, 19–42.

- Kégl, B.; Krzyżak, A. Piecewise linear skeletonization using principal curves. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 59–74.

- Banfield, J.D.; Raftery, A.E. Ice floe identification in satellite images using mathematical morphology and clustering about principal curves. J. Am. Stat. Assoc. 1992, 87, 7–16.

- Stanford, D.C.; Raftery, A.E. Finding curvilinear features in spatial point patterns: Principal curve clustering with noise. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 601–609.

- Hastie, T.; Stuetzle, W. Principal curves. J. Am. Stat. Assoc. 1989, 84, 502–516.

- Delicado, P. Another Look at Principal Curves and Surfaces. J. Multivar. Anal. 2001, 77, 84–116.

- Einbeck, J.; Tutz, G.; Evers, L. Local principal curves. Stat. Comput. 2005, 15, 301–313.

- Einbeck, J.; Tutz, G.; Evers, L. Data Compression and Regression through Local Principal Curves and Surfaces. Int. J. Neural Syst. 2010, 20, 177–192.

- Malo, J.; Gutiérrez, J. V1 non-linear properties emerge from local-to-global non-linear ICA. Netw. Comput. Neural Syst. 2006, 17, 85–102.

- Ozertem, U.; Erdogmus, D. Locally Defined Principal Curves and Surfaces. J. Mach. Learn. Res. 2011, 12, 1249–1286.

- Kégl, B. Principal Curves: Learning, Design, and Applications. Ph.D. Thesis, Concordia University, Montreal, QC, Canada, 1999.

- Kégl, B.; Krzyżak, A.; Linder, T.; Zeger, K. Learning and design of principal curves. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 281–297.

- Biau, G.; Fischer, A. Parameter selection for principal curves. IEEE Trans. Inf. Theory 2012, 58, 1924–1939.

- Barron, A.; Birgé, L.; Massart, P. Risk bounds for model selection via penalization. Probab. Theory Relat. Fields 1999, 113, 301–413.

- Birgé, L.; Massart, P. Minimal penalties for Gaussian model selection. Probab. Theory Relat. Fields 2007, 138, 33–73.

- Sandilya, S.; Kulkarni, S.R. Principal curves with bounded turn. IEEE Trans. Inf. Theory 2002, 48, 2789–2793.

- Cesa-Bianchi, N.; Lugosi, G. Prediction, Learning and Games; Cambridge University Press: New York, NY, USA, 2006.

- Rudzicz, F.; Ghassabeh, Y.A. Incremental algorithm for finding principal curves. IET Signal Process. 2015, 9, 521–528.

- Laparra, V.; Malo, J. Sequential Principal Curves Analysis. arXiv 2016, arXiv:1606.00856.

- Laparra, V.; Jiménez, S.; Camps-Valls, G.; Malo, J. Nonlinearities and Adaptation of Color Vision from Sequential Principal Curves Analysis. Neural Comput. 2012, 24, 2751–2788.

- Laparra, V.; Malo, J. Visual Aftereffects and Sensory Nonlinearities from a single Statistical Framework. Front. Hum. Neurosci. 2015, 9.

- Laparra, V.; Jiménez, S.; Tuia, D.; Camps-Valls, G.; Malo, J. Principal Polynomial Analysis. Int. J. Neural Syst. 2014, 24, 1440007.

- Laparra, V.; Malo, J.; Camps-Valls, G. Dimensionality Reduction via Regression in Hyperspectral Imagery. IEEE J. Sel. Top. Signal Process. 2015, 9, 1026–1036.

- Shawe-Taylor, J.; Williamson, R.C. A PAC analysis of a Bayes estimator. In Proceedings of the 10th annual conference on Computational Learning Theory, Nashville, TN, USA, 6–9 July 1997; pp. 2–9.

- McAllester, D.A. Some PAC-Bayesian Theorems. Mach. Learn. 1999, 37, 355–363.

- McAllester, D.A. PAC-Bayesian Model Averaging. In Proceedings of the 12th Annual Conference on Computational Learning Theory, Santa Cruz, CA, USA, 7–9 July 1999; pp. 164–170.

- Li, L.; Guedj, B.; Loustau, S. A quasi-Bayesian perspective to online clustering. Electron. J. Stat. 2018, 12, 3071–3113.

- Guedj, B. A Primer on PAC-Bayesian Learning. In Proceedings of the Second Congress of the French Mathematical Society, Long Beach, CA, USA, 10 June 2019; pp. 391–414.

- Alquier, P. User-friendly introduction to PAC-Bayes bounds. arXiv 2021, arXiv:2110.11216.

- Audibert, J.Y. Fast Learning Rates in Statistical Inference through Aggregation. Ann. Stat. 2009, 37, 1591–1646.

- Hutter, M.; Poland, J. Adaptive Online Prediction by Following the Perturbed Leader. J. Mach. Learn. Res. 2005, 6, 639–660.

- Auer, P.; Cesa-Bianchi, N.; Freund, Y.; Schapire, R.E. The Nonstochastic multiarmed Bandit problem. SIAM J. Comput. 2003, 32, 48–77.

- Kleinberg, R.D.; Niculescu-Mizil, A.; Sharma, Y. Regret Bounds for Sleeping Experts and Bandits. In COLT; Springer: Berlin/Heidelberg, Germany, 2008.

- Kanade, V.; McMahan, B.; Bryan, B. Sleeping Experts and Bandits with Stochastic Action Availability and Adversarial Rewards. Artif. Intell. Stat. 2009, 3, 1137–1155.

- Cesa-Bianchi, N.; Lugosi, G.; Stoltz, G. Minimizing regret with label-efficient prediction. IEEE Trans. Inf. Theory 2005, 51, 2152–2162.

- Neu, G.; Bartók, G. An Efficient Algorithm for Learning with Semi-Bandit Feedback; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2013; Volume 8139, pp. 234–248.

- Engdahl, E.R.; Villaseñor, A. 41 Global seismicity: 1900–1999. Int. Geophys. 2002, 81, 665–690.

- Chung, F.; Lu, L. Concentration Inequalities and Martingale Inequalities: A Survey. Internet Math. 2006, 3, 79–127.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).