Abstract

Hidden Markov model (HMM) is a vital model for trajectory recognition. As the number of hidden states in HMM is important and hard to be determined, many nonparametric methods like hierarchical Dirichlet process HMMs and Beta process HMMs (BP-HMMs) have been proposed to determine it automatically. Among these methods, the sampled BP-HMM models the shared information among different classes, which has been proved to be effective in several trajectory recognition scenes. However, the existing BP-HMM maintains a state transition probability matrix for each trajectory, which is inconvenient for classification. Furthermore, the approximate inference of the BP-HMM is based on sampling methods, which usually takes a long time to converge. To develop an efficient nonparametric sequential model that can capture cross-class shared information for trajectory recognition, we propose a novel variational BP-HMM model, in which the hidden states can be shared among different classes and each class chooses its own hidden states and maintains a unified transition probability matrix. In addition, we derive a variational inference method for the proposed model, which is more efficient than sampling-based methods. Experimental results on a synthetic dataset and two real-world datasets show that compared with the sampled BP-HMM and other related models, the variational BP-HMM has better performance in trajectory recognition.

1. Introduction

Trajectory recognition is important and meaningful in many practical applications, such as human activities recognition [1], speech recognition [2], handwritten character recognition [3] and navigation task with mobile robot [4]. In most practical applications, the trajectory is affected by the hidden features corresponding to each point. The hidden Markov model (HMM) [2], hierarchical conditional random field (HCRF) [5,6] and the HMM-based models, such as the hierarchical Dirichlet process hidden Markov model (HDP-HMM) [7], the Beta process hidden Markov model (BP-HMM) [8,9,10] and the Gaussian mixture model hidden Markov model (GMM-HMM) [2] are used to model sequential data and identify their classes [11,12,13,14].

The HMM is a popular model which has been applied widely in human activity recognition [1,15], speech recognition [2,16] and remote target tracking [2,17]. Besides, the HMM is becoming a more significant part as a building block of smart cities and Industry 4.0 [18,19] and implemented in extensive applications such as driving behaviors prediction [20] and the inernet of thing (IoT) signature anomalies [21]. One drawback of the HMM is having to ensure in advance the number of hidden states that need to be selected or cross-validated. To address this problem, several methods based on model selection are employed, such as BIC [22] or some Bayesian non-parameter prior like the BP [23] and the HDP [24]. Besides, directly using the original HMM for classification has another disadvantage, in which each HMM is trained for one class separately and thus information from different classes cannot be shared. It is worth mentioning that the sampled BP-HMM proposed by Fox et al. [9] can not only learn the number of hidden features automatically but also obtain the sharing features between different classes, which has been proved to be meaningful for human activity trajectory recognition. The sampled BP-HMM learns the shared states among different classes by jointly modeling all trajectories together, in which a hidden state indicator for one trajectory with a BP prior is introduced and thus a state transition matrix for each trajectory is maintained. When used for classification, the sampled BP-HMM calculates the class-specific transition matrix by averaging the transition matrices of the trajectories from the corresponding class. However, from the perspective of performance or efficiency, if the sampled BP-HMM [1,7] is used for classification, there is still a lot of room for improvement.

From the perspective of performance, the classification procedure in the sampled BP-HMM [1] is too rough to make full use of the trained model, in which the state transition matrix for each class is calculated by averaging the transition matrixes of all the trajectories. Obviously, this will lead to the loss of information, especially when the training set has some ambiguous trajectories. For instance, a “running” class has some “jogging” trajectories. One naive method to solve it is to select the K best HMMs for each class. However, it will cost plenty of time to select representatives for each class. In order to take account of both performance and efficiency, we change the way of modeling data in BP-HMMs. Differently from those versions of BP-HMMs [1,8,9,10,25], in variational BP-HMMs, an HMM is created for each class instead of for each trajectory.

From the perspective of efficiency, the existing approximate inference for the BP-HMM is based on sampling methods [1,9] which often converge slowly. This drawback of the sampled BP-HMM [1] is inconvenient to practical applications. To provide a faster convergence rate than sampling methods, we develop variational inference for the BP-HMM. If the variational lower bound is unchanged or almost unchanged, the iteration will stop. To be amenable to the variational method, we use the stick-breaking construction of the BP [26] instead of the Indian buffet process (IBP) construction [27] in the sampled BP-HMM.

In this paper, we propose a variational BP-HMM for trajectory recognition, in which the way of the data modeling and the inference method are novel compared with the previous sampled BP-HMM. On the one hand, the new method of modeling trajectories enables the model to obtain better classification performance. Specifically, the hidden state can be optionally shared, and the class-specific state indicator is more suitable for classification than the trajectory-specific state indicator in the sampled BP-HMM. The transition matrix is actually learned from the data instead of averaging all the trajectory-specific transitions. On the other hand, the derived variational inference of the BP-HMM makes the model more efficient. In particular, we use the two-parameter BP as the prior of the class-specific state indicator, which is more flexible than the one-parameter Indian buffet process in the sampled BP-HMM. We apply our model to the navigation task of mobile robots and human activity trajectory recognition. Experimental results on the synthetic and real-world data show that the proposed variational BP-HMM with sharing hidden states has advantages to trajectory recognition.

The remainder of this paper is organized as follows. Section 2 gives an overview of the BP and HMM. In Section 3, we review the model assumption of the sampled BP-HMM. In Section 4, we present the proposed variational BP-HMM including the model setting and its variational inference procedure. Experimental results on both synthetic and real-world datasets are reported in Section 5. Finally, Section 6 gives the conclusion and future research directions.

2. Preliminary Knowledge

In order to explain the variational BP-HMM more clearly, the key related backgrounds including BP and HMM will be introduced in the following sub-sections.

2.1. Beta Process

The BP is defined by Hjort [28] for applications in survival analysis. It is a significant application as a non-parametric prior for latent factor models [23,26], and used as a non-parameter prior for selecting the hidden state set of the HMM [8,9,25]. At the beginning, the BP is defined on the positive real line then extended to more general spaces (e.g., ).

A BP, , is a positive Lévy process. Here, is the concentration parameter and is a fixed measure on . Let . The is formulated as

where are atoms in B. If is continuous, the Lévy measure of the BP is expressed as

If is discrete, in the form of , the atoms in B and have the same location. It can be represented as follows

As and , B represents a BP [29].

The BP is conjugate to a class of Bernoulli process, denoted by . For example, we define a Bernoulli process . In this article, we focus on the discrete Bernoulli process in the form of , and then the Bernoulli process can be expressed as , where , is the independent Bernoulli variable with the probability . If B is a BP, then

is called the Beta-Bernoulli process.

Similarly to Dirichlet process which has two principle methods for drawing samples, (1) the Chinese restaurant process [30], (2) the stick-breaking process [31], the BP generates samples using the Indian buffet process (IBP) [23] and the stick-breaking process [29].

The original IBP can be seen as a special case of the general BP, i.e., an IBP is a one-parameter BP. Similarly to the Chinese restaurant process, the IBP is described in the view of customers choosing dishes. It is also employed to construct two-parameter BPs but with some details changed. Specifically, the procedure for constructing BP is as follows:

- The first customer takes the first dishes.

- The nth customer then takes dishes that have been previously sampled with probability , where is the number of people who have already sampled the dish k. He also takes Poisson new dishes.

The BP has been shown as a de Finetti mixing distribution underlying the Indian buffet process, and an algorithm has been presented to generate the BP [23].

The stick-breaking process of the BP, , is provided by Paisley et al. [29]. It is formulated as follows.

It is clearly shown from the above equations that in every round (indexed by i), atoms have been selected, the weights of them follow an i-times stick-breaking process in which each breaking has the probability and is drawn from .

2.2. Hidden Markov Models

The HMM [2] is a state space model where each sequence uses a Markov chain of discrete latent variables, with each observation conditioned on the state of the corresponding latent variables. Obviously, they are appropriate to model the data varying over time, and the data can be considered to be generated by the process that switches between different phases or states at different time-points. The HMM has been proved as a valuable tool in human activity recognition, speech recognition and many other popular areas [32].

Suppose that the trajectory observation is an matrix and is a N dimensional latent variable vector which has a value set with size K. The joint distribution of X and Z is expressed as

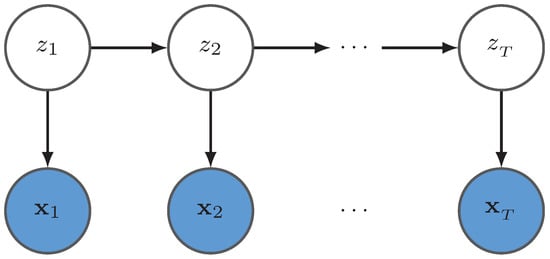

where , and A is a matrix with , with , and is a K dimensional vector with with . Furthermore, in the nonparametric version of HMM, the matrix can be assumed to obey a Dirichlet distribution, i.e., where The probabilistic graphical model is represented in Figure 1.

Figure 1.

The probabilistic graphical model for an HMM where represents an observation sequence and represents the corresponding hidden state sequence.

If is discrete with value set in the size of D, is a matrix with element . and are named respectively as the transition matrix and emission matrix. If is continuous, the emission matrix will be replaced by the emission distribution, where is often defined as a distribution like Gaussian distribution , . In the fully Bayesian framework, can be regarded as random variables with distribution like normal inverse Wishart or Gaussian with Gamma distribution.

Marginal likelihood is often used to evaluate how an HMM is fit for the trajectories. Therefore, the HMM is usually trained by maximizing the marginal likelihood over the training trajectories. Baum–Welch (BW) algorithm, as an EM method is a famous algorithm for learning parameters of HMMs. The parameters include the transition matrix, the initial state distribution and the emission matrix (distribution’s parameters). In the BW algorithm, the forward-backward algorithm is employed to calculate the marginal probability. It should be noted that since the BW algorithm can only find the local optimum, multiple initializations are usually used to obtain better solutions. Given the learned parameters, the most likely state sequence corresponding to a trajectory is required in many practical applications. Viterbi algorithm is an effective method to obtain the most probable state sequence.

HMMs are a kind of generative model; they model the distribution of all the observed data. In trajectory classification tasks, such as activity trajectory recognition, different HMMs are used to model different classes of trajectories separately. After training these HMMs, the parameters in different HMMs are used to evaluate the newly come trajectory to find the most probable class. Specifically, to model large multiple trajectories from different classes, a separate HMM is defined for each class of trajectories, where represents its parameters. Given the trained HMMs, the class label of a new test trajectory is determined according to

where can be calculated using the forward-backward algorithm.

3. The Sampled BP-HMM

The sampled BP-HMM [9] is proposed to discover the available hidden states and the sharing patterns among different classes. It jointly models multiple trajectories and learns a state transition matrix for each trajectory. The sampled BP-HMM is successfully applied to trajectory recognition tasks, such as human activity trajectory recognition [1,10].

The sampled BP-HMM uses HMMs to model all the trajectories from all the classes and uses the BP as the prior of the indicator variables with each one corresponding to one trajectory. Suppose where is the trajectory. Each trajectory is modeled by an HMM. These HMMs share a global hidden feature set with the size of ∞. The sampled BP-HMM uses a hidden state selection matrix F with the size of to indicate the available states for each trajectory, i.e., indicators whether the HMM owns the state. The prior of the transition matrix for each trajectory is related to F, The transition matrix of the HMM is

and the initial state probability vector is also related to F,

Similarly to the standard HMM, the latent variable is a discrete sequence with

and the emission distribution of the HMM is

In order to build a non-parameter model, the hidden states selection matrix F is constructed by a BP−BeP.

From the perspective of the characteristic of BPs, we can find that the greater the concentration parameter , the sparser the hidden state selection matrix F, and greater will lead to more hidden features.

Given the above model assumptions, the sampled BP-HMM uses the Gibbs sampling method to train the model and uses the gradient based method to learn the parameters. With the state transition matrix for each trajectory being learned, the average state transition matrix for each class can be calculated by the mean operation. The new test trajectories are classified according to their likelihood probabilities conditional on each class.

4. The Proposed Variational BP-HMM

In this section, we will introduce the proposed variational BP-HMM which has more reasonable assumptions and more efficient inference procedure than the sampled BP-HMM. We first describe key points of our model and present our stick-breaking representation for the BP which allows for variational inference. Then we give the joint distribution of the proposed BP-HMM and the variational inference for the BP-HMM.

4.1. BP-HMM with the Shared Hidden State Space and Class Specific Indicators

As introduced above, the existing sampled BP-HMM can jointly learn the trajectories from different classes by sharing a same hidden state space. It can also automatically determine the available states and the corresponding transition matrices for one trajectory by the introducing state selection matrix F. However, in the sampled BP-HMM, the state transition matrix and initial probabilities are trajectory-specific, and it is not appropriate to perform mean operation on these transition matrices and probabilities to obtain a average matrix and probabilities for each class.

In order to model trajectories from different classes more reasonably, we introduce a shared hidden state space and class-specific indicators. We define a state selection vector for each class which are used to distinguish the differences between classes and define state initial probabilities and transition matrix for each class which are used to capture the commonness with one class. The transition matrix of the cth class from state j is

and the initial state probability vector is also related to F,

Similarly to the standard HMM, the latent variable for the nth trajectory is a discrete sequence with

where denotes the class of nth trajectory.

From the way of modeling, the proposed new version of the BP-HMM is different from the sampled BP-HMM [1] which learns an HMM for each trajectory, and it is also different from the traditional HMMs which learn an HMM for each class separately. The proposed BP-HMM can use all the sequences from different classes to jointly train a whole BP-HMM with each HMM corresponding to one class. Therefore, the proposed BP-HMM can better model the trajectories from multiple classes and can further make better classification.

4.2. A Simpler Representation for Beta Process

Besides the model assumption, the proposed variational BP-HMM has different representation of the BP. As introduced in Section 2, the IBP construction of the BP describes the process by conditional distributions. This kind of representation is only suitable for sampling methods which are similar to the Chinese restaurant construction of DPs. Therefore, different from the sampled BP-HMM which uses the IBP construction for the BP to lend it to a Gibbs sampler, we use the stick-breaking construction for the BP to adapt to variational inference. There is some work in constructing stick-breaking representation of BPs for variational inference. The stick-breaking construction is used for the IBP which is closely related to the BP and can be seen as a one-parameter BP [26]. The two-parameter BP is also constructed through stick-breaking processes to server for variational inference [29]. Recently, a simpler representation of the two-parameter BP based on stick-breaking construction is developed to make simpler variational inference [33]. In order to approximate posterior inference to the BP with variational Bayesian method more easily, we refer to the simpler representation of the BP [33]. Let mark the round in which the atom appears. That is,

Note is a binary indicator and it equals to 1 if the formula is true. Using the latent indicators, the representation of B in (6) is simplified as

with and V drawn as before.

Let . Since each individual term , it follows that . This gives the following representations of the BP,

Here we should notice that each does not have a distribution, but the cardinality of is drawn by . In addition, with probability one when . In this BP, the atom and Gamma priors with hyper-parameters , are given to and :

4.3. Joint Distribution of the Proposed BP-HMM

Assume that the total class number is C and the trajectory number is N. Let X represent the data, , , , , , , , , represents the set of all latent variables in the model, including which is the set of all the hyper-parameters, and Y is the set of all the class labels.

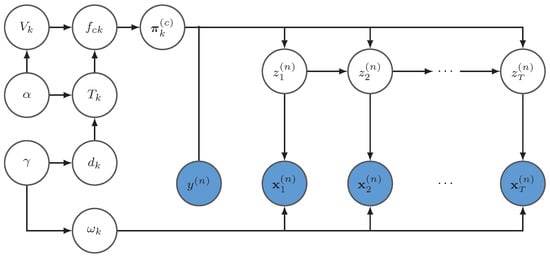

The probabilistic graphical model is shown in Figure 2, where its joint likelihood is

Figure 2.

This is the probabilistic graphical model of the proposed variational BP-HMM. is the nth observed trajectory, is the hidden state sequence of the nth trajectory, and is the class label of the trajectory which indicates choosing the state transition probabilities from the class it belongs to. In this graphical model, we omit the hyper-parameters.

The likelihood is defined as a multi-normal distribution by

The prior distribution of the parameter W and detailed setup are expressed in Appendix A.

4.4. Variational Inference for the Proposed BP-HMM

We use a factorized variational distribution over all the latent variables to approximate the intractable posterior . Two truncations are set in the inference: one is truncation of the number of hidden states at K and the other is the truncation of the round number at R. Specifically, we assume the variational distribution as

where

It is obvious that and do not have conjugate posterior. Thus the distributions are selected for better accuracy and more convenience. Here is an estimation of the probability of the initial state distribution, , where and is an estimation of the probability of transition from state to and is an estimation of the emission probability density given the system in state j at time point t. In order to simplify our representation, we do not use sub-index. Here , . Let be the set of variational parameters. We expand the lower bound as which is expressed in detail in Appendix B.

4.5. Parameter Update

In the framework of variational mean field approximation, the parameters of some variational distributions can be analytically solved using

However, in some cases that the prior distribution and posterior distribution over one latent variable are not conjugate, the variational distribution over this variable cannot have an analytical solution. The parameters of this variational distribution should be optimized through gradient based methods with the variational lower bound being the objective.

In our model, the variational distributions , , , , , have a closed form solution, and we can get their parameter update formulas according to (23). While the variational distributions , , cannot be analytically solved, we can update their parameters by corresponding gradients. Next, we give the way of calculating variational distributions and show the procedure for training the variational BP-HMM in Algorithm 1. The detailed parameters update formulas or the gradients with respect to the parameters are presented in Appendix C.

| Algorithm 1 Variational Inference for the Proposed BP-HMM |

|

4.5.1. Calculation for , , , , ,

4.5.2. Optimization for , ,

The variational parameters of , , include , , . They are updated by the gradient based method where the gradients of the lower bound with respect to these parameters should be calculated.

4.5.3. Remarks

Note that when updating the BP parameters, we should calculate the expectation as

of which the second term is intractable. We refer the work in [33] to use a Taylor expansion to about the point one,

For clarity, we define each term in the Taylor expansion using the notation as

and define . Therefore, .

4.6. Classification

Our model is applicable to trajectory recognition like human activity trajectory recognition. We use the proposed variational BP-HMM to model all the training data from different classes, with each HMM corresponding to a class. Given the learned model with the hyperparameters and variational parameters , a new test trajectory can be classified according to its marginal likelihood . Denote as the label of the test trajectory; the classification criteria can be expressed as

where is an estimate of the probability of transition from state j to k in the cth class. The likelihood can be calculated through the forward-backward algorithm.

This classification mechanism is more reasonable than the method in [1], as the transition matrix is actually learned.

5. Experiment

To demonstrate the effectiveness of our model on trajectory recognition, we conduct experiments on one synthetic dataset and two real-world datasets; the detailed data statistics are illustrated in Table 1 and the following subsections. We compare our model with HCRF, LSTM [34], HMM-BIC and the sampled BP-HMM. In particular, in HCRF, the number of hidden states is set to 15 and the size of the window is set to 0. In LSTM, we use a recurrent neural network with one hidden layer as its architecture. In HMM-BIC, the state number is selected from the range . In the sampled BP-HMM, the hyperparameters are set according to Sun et al. [1]. In the variational BP-HMM, the hyperparameters are randomly initialized and selected by maximizing the variational lower bound, and the emission hyperparameters are initialized with k-means. Particularly, the state truncation parameters in variational BP-HMM are set according to specific datasets, e.g., for the synthetic data and for the two real-world data. All experiments are repeated ten times with different training and test division methods, and the average classification accuracy with the standard deviation is reported.

Table 1.

Data statistics for the CCP, HATR and WFNT datasets and corresponding classes.

5.1. Synthetic Data

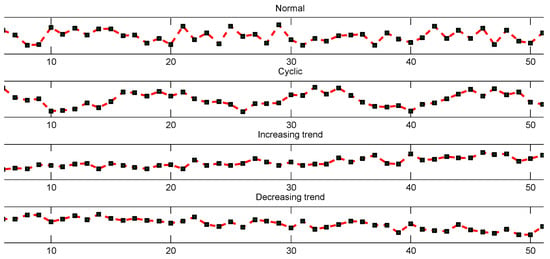

The synthetic data called control chart patterns (CCP) have some quantifiable similarities. They contain four pattern types which can be downloaded from the UCI machine learning repository. The CCP are trajectories that show the level of a machine parameter plotted against time. 400 trajectories are artificially generated by the following four equations [35]:

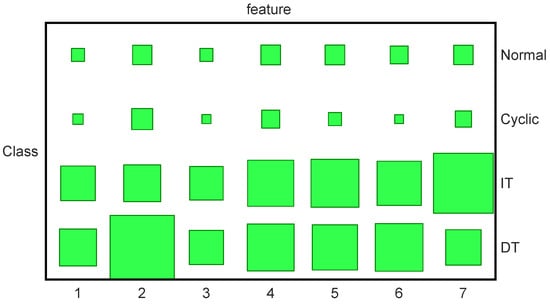

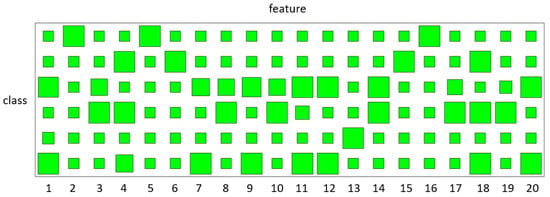

Figure 3 shows the generated synthetic data. In this experiment, 20 trajectories are used for training with 5 trajectories for each class. The classification results are presented in Table 2. The results are obtained through five-fold cross-validation. In order to illustrate that the sharing patterns have been learned by our method, the Hinton diagrams of the variational parameter V are given in Figure 4, where the occurrence probabilities of the hidden states are presented by the sizes of the blocks. For example, we can find that IT and DT share the 4th, 5th, 6th features.

Figure 3.

Examples of control chart patterns.

Table 2.

Comparisons of the classification accuracy for the proposed method VBP-HMM versus HCRF, LSTM, HMM-BIC and the sampled BP-HMM in CCP.

Figure 4.

Selection results of hidden states for four classes on control chart patterns; these four classes are normal pattern (Normal), cyclic pattern (Cyclic), increasing trend (IT) and decreasing trend (DT). The occurrence probabilities of the hidden states are presented by the sizes of the green blocks. The large size of the green blocks represents high occurrence probability of hidden states.

We compare our method with HCRF, LSTM, HMM-BIC and the sampled BP-HMM. As we can see from Table 2, our method outperforms all the other methods.

In this experiment, the sharing patterns contribute to improving the performance. Since an HMM is created for each class of trajectories in our proposed method instead of each trajectory in the sampled BP-HMM, our method has better performance than the sampled BP-HMM.

5.2. Human Activity Trajectory Recognition

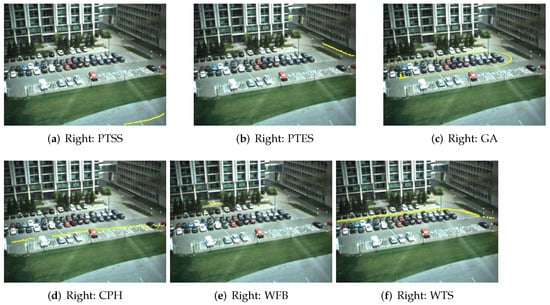

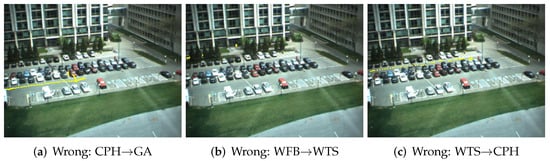

Human activity trajectory recognition (HATR) [36] is important in many applications such as health care. In our human activity trajectory recognition experiment, parking lot data are collected from the video [1]. We use the data tagged manually [1], which has 300 trajectories with 50 trajectories for each class. Six classes are defined, which are “passing through south street” (PTSS), “passing through east street” (PTES), “going around” (GA), “crossing park horizontally” (CPH), “wandering in front of building” (WFB) and “walking in top street” (WTS). As seen from [1], the sampled BP-HMM is the best method among the methods including HCRF, LSTM, HMM-BIC and the sampled BP-HMM in HATR. Here we use the same training and test data to compare the variational BP-HMM with the sampled BP-HMM. Table 3 shows the comparisons of the classification accuracy for the proposed method VBP-HMM versus HCRF, LSTM, HMM-BIC and the sampled-BP-HMMs in HATR. The results are obtained through five-fold cross-validation. As can be seen from Table 3, the accuracy of our method is , while the accuracy of the sampled BP-HMM is [1]. The detailed confusion matrix for our method is given in Table 4. The state sharing patterns learned by variational BP-HMM are displayed with the Hinton diagrams in Figure 5, in which GA and CPH, as well as GA and WTS, are more likely to share states. The good performance verifies the superiority of modeling an HMM for each class. Moreover, we take some examples of the correct classification and misclassification results for visualization as in Figure 6 and Figure 7. As illustrated in Figure 7, the misclassified trajectories often contain some deceptive subpatterns such as the trajectory of CPH in subfigure (d) containing a back turn and a left turn like the GA class.

Table 3.

Comparisons of the classification accuracy for the proposed method VBP-HMM versus HCRF, LSTM, HMM-BIC and the sampled BP-HMM in HATR.

Table 4.

Classification accuracy for human activity trajectory recognition.

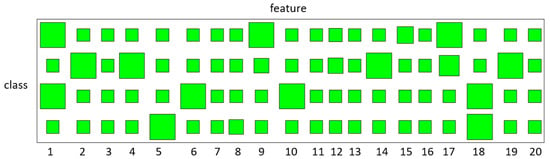

Figure 5.

Selection results of hidden states for different classes on human activity trajectory recognition. The occurrence probabilities of the hidden states are presented by the sizes of the green blocks. The large size of the green blocks represents high occurrence probability of hidden states.

Figure 6.

Correct classification results of HATR dataset for the classes: PTSS, PTES, GA, CPH, WFB, WTS, respectively.

Figure 7.

Misclassification results of HATR dataset for the three classes: CPH, WFB and WTS which are misclassified to GA, WTS and CPH, respectively.

5.3. Wall-Following Navigation Task

We perform the Wall-Following navigation task (WFNT) in which data are collected from the sensors on the mobile robot SCITOS-G5 [4]. We think that this task is a trajectory with historical data, and two ultrasound sensors datasets are selected, because the cost is as low as possible in civil applications with acceptable accuracy. There are 187 trajectories in the data and four classes need to be recognized, which are “front distance” (F), “left distance” (L), “right distance” (R) and “back distance” (B). We randomly select 40 training trajectories with 10 for each class. The confusion matrix of classification is shown in Table 5 and the state sharing patterns learned by variational BP-HMM are displayed with the Hinton diagrams in Figure 8, where R and F, as well as R and B, have a small number of shared states.

Table 5.

The confusion matrix of the Wall-Following navigation recognition.

Figure 8.

Selection results of hidden states for different classes on the Wall-Following navigation recognition. The occurrence probabilities of the hidden states are presented by the sizes of the green blocks. The large size of the green blocks represents high occurrence probability of hidden states.

The comparison of the classification accuracy for our method VBP-HMM versus HCRF, LSTM, HMM-BIC and the sampled BP-HMM is shown in Table 6. The results are obtained by five-fold cross-validation. It is obvious that our method is much better than the sampled BP-HMM, because we create an HMM for each class of trajectories rather than create an HMM for each trajectory. Although the sharing patterns are not obvious in this experiment, our method has better performance than the other methods. As we have analyzed, sharing patterns among different classes will be learned automatically by our model, which helps to localize precisely the difference of different classes. When there is no sharing pattern among classes, the advantage will be weakened.

Table 6.

Comparisons of the classification accuracy for the proposed method VBP-HMM versus HCRF, LSTM, HMM-BIC and the sampled BP-HMM in WFNT.

5.4. Performance Analysis

In our experiments, the results show that the proposed variational BP-HMM has a great improvement compared to the sampled BP-HMM which uses average transition over trajectories from each class. We analyze the advantages of variational BP-HMM for the following reasons. Due to the small amount of training data in our experiment, the performance of LSTM is not satisfactory. HMM-BIC finds an optimal state number through model selection but it cannot make use of the shared information among classes, and its performance is the second-best overall. Although the sample BP-HMM can share hidden states among classes, it does not make correct use of the shared information in classification and thus does not gain better results. Our proposed variational BP-HMM constructs a mechanism to learn shared hidden states by introducing state indicator variables and maintains class-specific state transition matrices which are very helpful for classification tasks.

Moreover, we give the total cost time of the variational BP-HMM, HMM-BIC, LSTM, HCRF and the sampled BP-HMM in Table 7, where we can see the variational BP-HMM performs much more efficiently than the sampled BP-HMM. This is attributed to the efficiency of the variational methods. Although the sampled BP-HMM and the variational BP-HMM have similar time complexity, due to the sampling operation, the cost time of the sampled BP-HMM is usually several times that of the variational BP-HMM. In other words, the variational BP-HMM converges much faster than the sampled BP-HMM. Besides, compared with HMM-BIC, it only takes about twice the time to achieve significant performance improvements. Above all, we can conclude that the proposed variational BP-HMM is an effective and efficient method for trajectory recognition.

Table 7.

Comparisons of total time cost for the proposed method VBP-HMM versus HCRF, LSTM, HMM-BIC and the sampled-BP-HMM in experiments.

6. Conclusions

In this paper, we have proposed a novel variational BP-HMM for modeling and recognizing trajectories. The proposed variational BP-HMM has shared hidden state space which is used to capture the commonality of the cross-category data and class-specific indicators which are used to distinguish the data from different classes. As a result, in the variational BP-HMM, multiple HMMs are used to model multiple classes of trajectories among which a hidden state space is shared.

The more reasonable assumptions of the proposed model make it more suitable for jointly modeling trajectories over all classes and further making trajectory recognition. Experimental results both on synthetic and real-world data have verified that the proposed variational BP-HMM can find the feature sharing patterns among different classes, which helps to better model trajectories and further improve the classification performance. Moreover, compared with the sampled BP-HMM, the derived variational inference for the proposed BP-HMM can reduce the time cost of the training procedure. The experimental time records also show the efficiency of the proposed variational BP-HMM.

Author Contributions

Conceptualization, J.Z. and S.S.; methodology, J.Z. and S.S.; Software, J.Z., Y.Z. and H.D.; formal analysis, J.Z. and Y.Z.; writing—original draft preparation, J.Z.; writing—review and editing, J.Z., Y.Z. and S.S.; supervision, J.Z. and S.S.; Visualization, Y.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the NSFC Projects 62006078 and 62076096, the Shanghai Municipal Project 20511100900, Shanghai Knowledge Service Platform Project ZF1213, the Shanghai Chenguang Program under Grant 19CG25, the Open Research Fund of KLATASDS-MOE and the Fundamental Research Funds for the Central Universities.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data available in a publicly accessible repository. The data presented in this study are openly available in UCI Machine Learning Repository at http://archive.ics.uci.edu/ml/index.php, accessed on 27 September 2021, reference number [4,35,36].

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. The Prior Distribution of the Parameter W

Denote as d. The prior distribution of the parameter W is expressed as

We use

to account for the class in which an atom appears. Two terms and are given by Paisley et al. [33],

where , and

In , the indicator is used for selecting the Gamma prior parameters of . Moreover, the term in (21) will be removed if . The binary indicator in means that at least one of the K indexed atoms occur in round r or the round after r.

Appendix B. The Lower Bound

We expand the lower bound as which is expressed as

Note that we multiply the entropy of , , by the variational probability as done in [33] for keeping the entropy of from blowing up when , where .

Appendix C. Coordinate Update for the Key Distributions

Appendix C.1. Coordinate Update for q(fck)

Since the Dirichlet distribution of , cannot be obtained by analysis directly. The gradient ascent algorithm is used for updating . The derivative of with respect to is

Appendix C.2. Coordinate Update for q(dk)

The update for each is given below for . Let

If ,

If ,

where , and .

Appendix C.3. Coordinate Update for q(Vk)

We use the gradient ascent algorithm to jointly update by the gradients of the lower bound with respect to . Let , , and . The derivatives are

Since can be expanded as and its derivative is , we can get

and

Appendix C.4. Coordinate Update for q(Tk)

We use the gradient ascent algorithm to jointly update by the gradients of the lower bound with respect to . The derivatives are

where

Appendix C.5. Coordinate Update for q(α)

The update formulae for are

It is shown that has nothing to do with the update of .

Appendix C.6. Coordinate Update for q(γ)

The update formulae for are

It can be seen from above that does not change with iterations while depends on .

Appendix C.7. Coordinate Update for q(μk,Σk)

The variational parameter update of is analytical and the update formulae are

Appendix C.8. Coordinate Update for q(πj(c))

In order to update , two cases, and , should be analyzed. For , the logarithmic distribution of is updated as

where

and

Here B is the normalizing constant of the Dirichlet distribution . We can get

Thus the parameters for the Dirichlet distribution are formulated as

and

For , is the prior probability of the hidden states. We can obtain

Appendix C.9. Coordinate Update for q(Z)

For each class of trajectories,

where p is the dimension of and

From the above, we need the marginal probabilities and . The detailed calculations are as follows. Both of them can be calculated by the forward-backward algorithm. The forward procedure is

The backward procedure is

Thus, the expressions of the posterior distributions are

Now we can update the variational parameters in the BP-HMM according to the above equations. We judge the convergence of this update according to the change of the lower bound.

References

- Sun, S.; Zhao, J.; Gao, Q. Modeling and recognizing human trajectories with beta process hidden Markov models. Pattern Recognit. 2015, 48, 2407–2417. [Google Scholar] [CrossRef]

- Rabiner, L.R. A tutorial on hidden Markov models and selected applications in speech recognition. Proc. IEEE 1989, 77, 257–286. [Google Scholar] [CrossRef]

- Braiek, E.; Aouina, N.; Abid, S.; Cheriet, M. Handwritten characters recognition based on SKCS-polyline and hidden Markov model (HMM). In Proceedings of the International Symposium on Control, Communications and Signal Processing, Hammamet, Tunisia, 21–24 March 2004; pp. 447–450. [Google Scholar]

- Freire, A.L.; Barreto, G.A.; Veloso, M.; Varela, A.T. Short-term memory mechanisms in neural network learning of robot navigation tasks: A case study. In Proceedings of the Latin American Robotics Symposium, Valparaiso, Chile, 29–30 October 2009; pp. 1–6. [Google Scholar]

- Gao, Q.B.; Sun, S.L. Trajectory-based human activity recognition using hidden conditional random fields. In Proceedings of the International Conference on Machine Learning and Cybernetics, Xi’an, China, 15–17 July 2012; Volume 3, pp. 1091–1097. [Google Scholar]

- Bousmalis, K.; Zafeiriou, S.; Morency, L.P.; Pantic, M. Infinite hidden conditional random fields for human behavior analysis. IEEE Trans. Neural Netw. Learn. Syst. 2012, 24, 170–177. [Google Scholar] [CrossRef] [PubMed]

- Gao, Q.; Sun, S. Trajectory-based human activity recognition with hierarchical Dirichlet process hidden Markov models. In Proceedings of the International Conference on Signal and Information Processing, Beijing, China, 6–10 July 2013; pp. 456–460. [Google Scholar]

- Fox, E.B.; Hughes, M.C.; Sudderth, E.B.; Jordan, M.I. Joint modeling of multiple time series via the beta process with application to motion capture segmentation. Ann. Appl. Stat. 2014, 8, 1281–1313. [Google Scholar] [CrossRef] [Green Version]

- Fox, E.; Jordan, M.I.; Sudderth, E.B.; Willsky, A.S. Sharing features among dynamical systems with beta processes. Adv. Neural Inf. Process. Syst. 2009, 22, 549–557. [Google Scholar]

- Gao, Q.B.; Sun, S.L. Human activity recognition with beta process hidden Markov models. In Proceedings of the International Conference on Machine Learning and Cybernetics, Tianjin, China, 14–17 July 2013; Volume 2, pp. 549–554. [Google Scholar]

- Gao, Y.; Villecco, F.; Li, M.; Song, W. Multi-scale permutation entropy based on improved LMD and HMM for rolling bearing diagnosis. Entropy 2017, 19, 176. [Google Scholar] [CrossRef] [Green Version]

- Filippatos, A.; Langkamp, A.; Kostka, P.; Gude, M. A sequence-based damage identification method for composite rotors by applying the Kullback–Leibler divergence, a two-sample Kolmogorov–Smirnov test and a statistical hidden Markov model. Entropy 2019, 21, 690. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Granada, I.; Crespo, P.M.; Garcia-Frías, J. Combining the Burrows-Wheeler transform and RCM-LDGM codes for the transmission of sources with memory at high spectral efficiencies. Entropy 2019, 21, 378. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Li, C.; Pourtaherian, A.; van Onzenoort, L.; Ten, W.E.T.a.; de With, P.H.N. Infant facial expression analysis: Towards a real-time video monitoring system using R-CNN and HMM. IEEE J. Biomed. Health Inform. 2021, 25, 1429–1440. [Google Scholar] [CrossRef] [PubMed]

- Li, J.; Todorovic, S. Action shuffle alternating learning for unsupervised action segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, virtual meeting, 19–25 June 2021; pp. 12628–12636. [Google Scholar]

- Zhou, W.; Michel, W.; Irie, K.; Kitza, M.; Schlüter, R.; Ney, H. The rwth ASR system for ted-lium release 2: Improving hybrid HMM with specaugment. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Barcelona, Spain, 4–8 May 2020; pp. 7839–7843. [Google Scholar]

- Zhu, Y.; Yan, Y.; Komogortsev, O. Hierarchical HMM for eye movement classification. In European Conference on Computer Vision Workshops; Springer: Cham, Switzerland, 2020; pp. 544–554. [Google Scholar]

- Lom, M.; Pribyl, O.; Svitek, M. Industry 4.0 as a part of smart cities. In Proceedings of the 2016 Smart Cities Symposium Prague (SCSP), Prague, Czech Republic, 26–27 May 2016; pp. 1–6. [Google Scholar]

- Castellanos, H.G.; Varela, J.A.E.; Zezzatti, A.O. Mobile Device Application to Detect Dangerous Movements in Industrial Processes Through Intelligence Trough Ergonomic Analysis Using Virtual Reality. In The International Conference on Artificial Intelligence and Computer Vision; Springer: Cham, Switzerland, 2021; pp. 202–217. [Google Scholar]

- Deng, Q.; Söffker, D. Improved driving behaviors prediction based on fuzzy logic-hidden markov model (fl-hmm). In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 2003–2008. [Google Scholar]

- Fouad, M.A.; Abdel-Hamid, A.T. On Detecting IoT Power Signature Anomalies using Hidden Markov Model (HMM). In Proceedings of the 2019 31st International Conference on Microelectronics (ICM), Cairo, Egypt, 15–18 December 2019; pp. 108–112. [Google Scholar]

- Nascimento, J.C.; Figueiredo, M.A.; Marques, J.S. Trajectory classification using switched dynamical hidden Markov models. IEEE Trans. Image Process. 2009, 19, 1338–1348. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Thibaux, R.; Jordan, M.I. Hierarchical beta processes and the Indian buffet process. In Proceedings of the Eleventh International Conference on Artificial Intelligence and Statistics, San Juan, Puerto Rico, 21–24 March 2007; pp. 564–571. [Google Scholar]

- Teh, Y.W.; Jordan, M.I.; Beal, M.J.; Blei, D.M. Sharing clusters among related groups: Hierarchical Dirichlet processes. Adv. Neural Inf. Process. Syst. 2004, 17, 1385–1392. [Google Scholar]

- Hughes, M.C.; Fox, E.; Sudderth, E.B. Effective split-merge monte carlo methods for nonparametric models of sequential data. Adv. Neural Inf. Process. Syst. 2012, 25, 1295–1303. [Google Scholar]

- Teh, Y.W.; Grür, D.; Ghahramani, Z. Stick-breaking construction for the Indian buffet process. In Proceedings of the Eleventh International Conference on Artificial Intelligence and Statistics, San Juan, Puerto Rico, 21–24 March 2007; pp. 556–563. [Google Scholar]

- Griffiths, T.L.; Ghahramani, Z. Infinite latent feature models and the Indian buffet process. Adv. Neural Inf. Process. Syst. 2005, 18, 475–482. [Google Scholar]

- Hjort, N.L. Nonparametric Bayes estimators based on beta processes in models for life history data. Ann. Stat. 1990, 18, 1259–1294. [Google Scholar] [CrossRef]

- Paisley, J.W.; Zaas, A.K.; Woods, C.W.; Ginsburg, G.S.; Carin, L. A stick-breaking construction of the beta process. In Proceedings of the 27th International Conference on Machine Learning (ICML 2010), Haifa, Israel, 21–24 June 2010; pp. 847–854. [Google Scholar]

- Ferguson, T.S. A Bayesian analysis of some nonparametric problems. Ann. Stat. 1973, 1, 209–230. [Google Scholar] [CrossRef]

- Selhuraman, J. A constructive definition of the Dirichlet prior. Statist. Sin. 1994, 2, 639–650. [Google Scholar]

- Cao, Y.; Li, Y.; Coleman, S.; Belatreche, A.; McGinnity, T.M. Adaptive hidden Markov model with anomaly states for price manipulation detection. IEEE Trans. Neural Netw. Learn. Syst. 2014, 26, 318–330. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Paisley, J.W.; Carin, L.; Blei, D.M. Variational Inference for Stick-Breaking Beta Process Priors. In Proceedings of the 28th International Conference on Machine Learning, Bellevue, WA, USA, 28 June–2 July 2011; pp. 889–896. [Google Scholar]

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef] [PubMed]

- Alcock, R.J.; Manolopoulos, Y. Time-series similarity queries employing a feature-based approach. In Proceedings of the 7th Hellenic Conference on Informatics, Ioannina, Greece, 26–29 August 1999; pp. 27–29. [Google Scholar]

- Ziaeefard, M.; Bergevin, R. Semantic human activity recognition: A literature review. Pattern Recognit. 2015, 48, 2329–2345. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).