Gait Recognition Method of Underground Coal Mine Personnel Based on Densely Connected Convolution Network and Stacked Convolutional Autoencoder

Abstract

1. Introduction

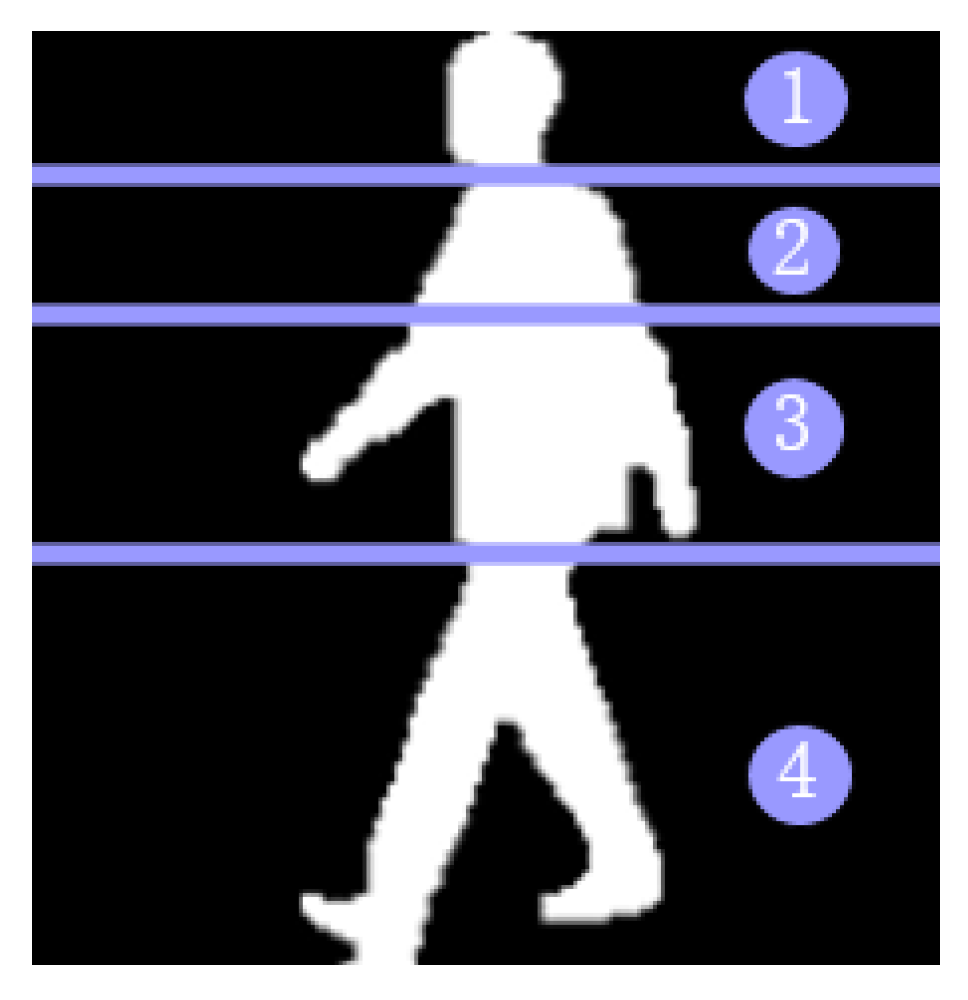

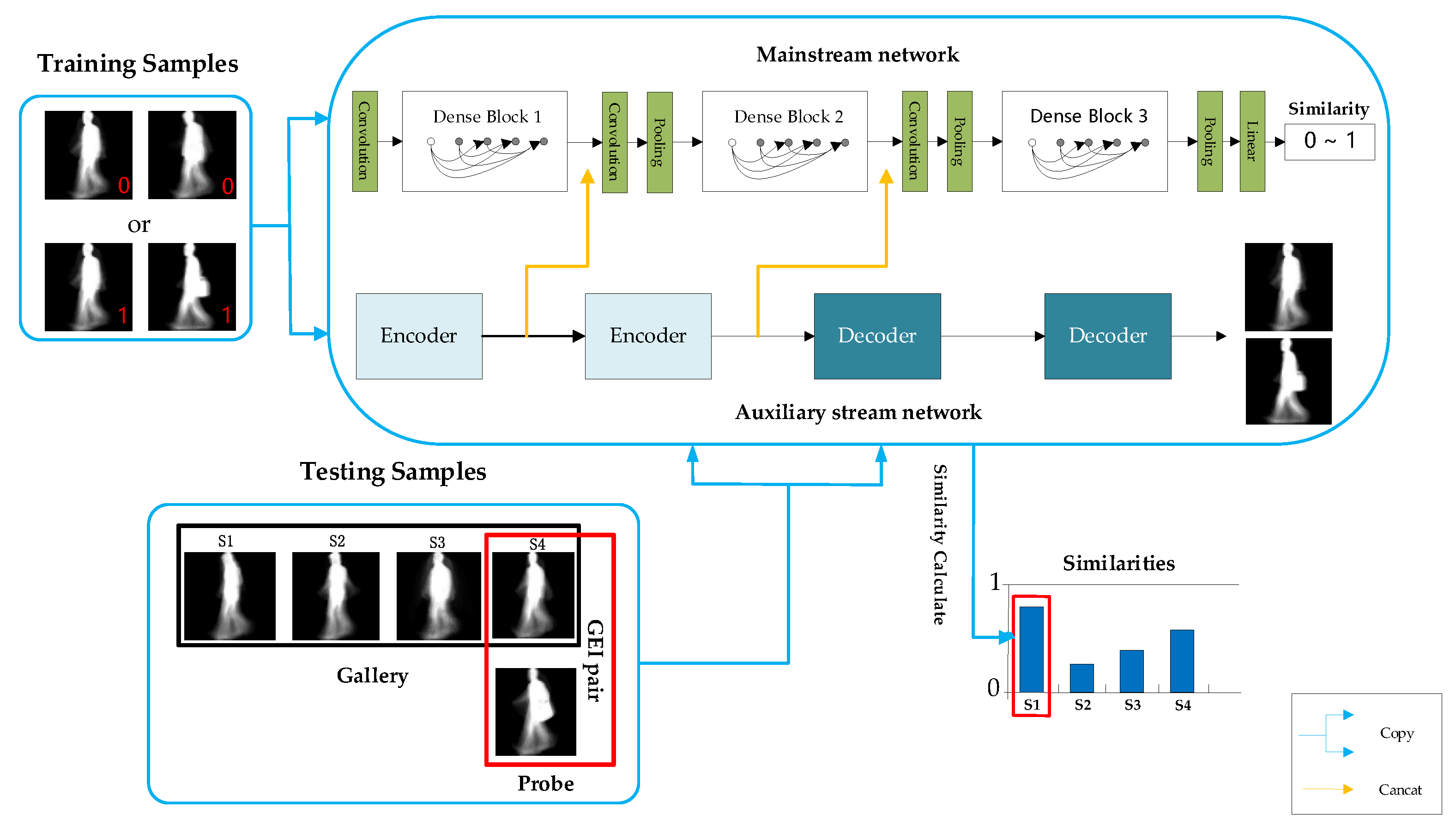

2. Overview of the Proposed TS-Net Model

3. Dynamic and Static Feature Extraction, Fusion, and Recognition

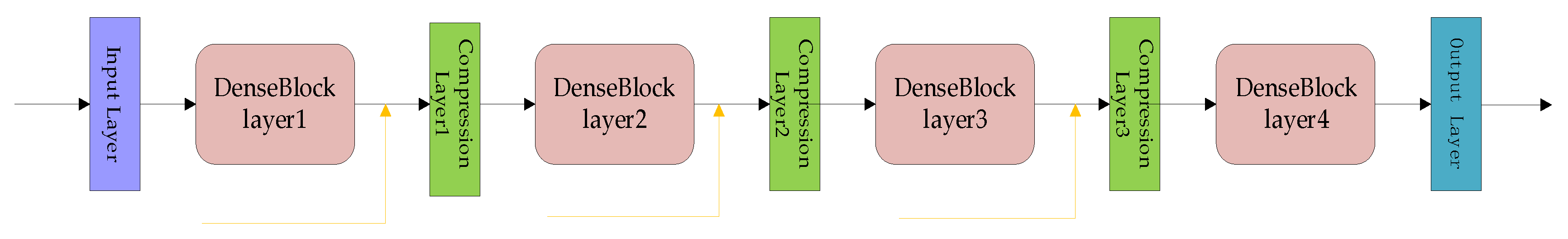

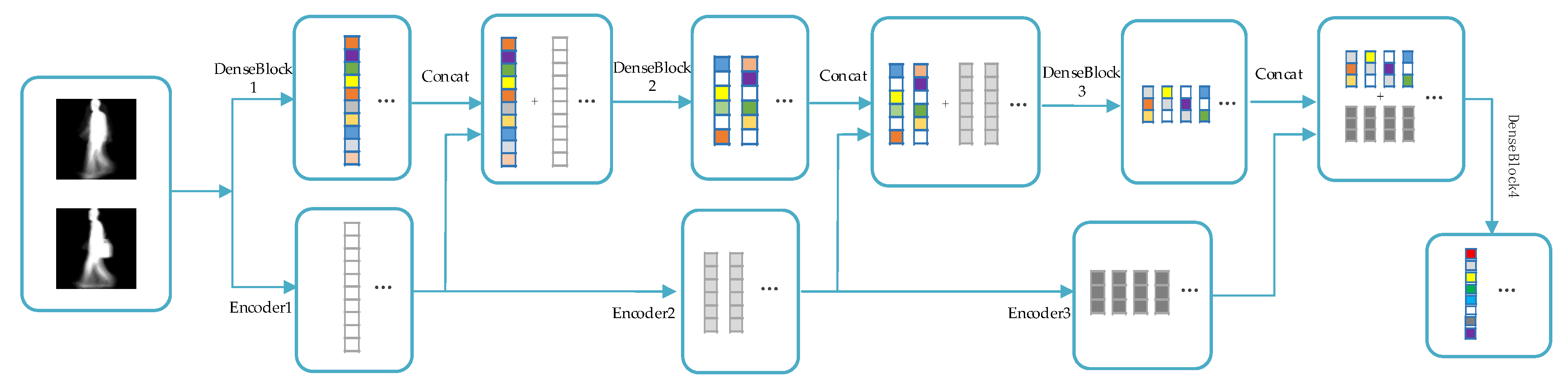

3.1. Mainstream Network

3.1.1. The Architecture of Mainstream Network

3.1.2. Reducing Overfitting

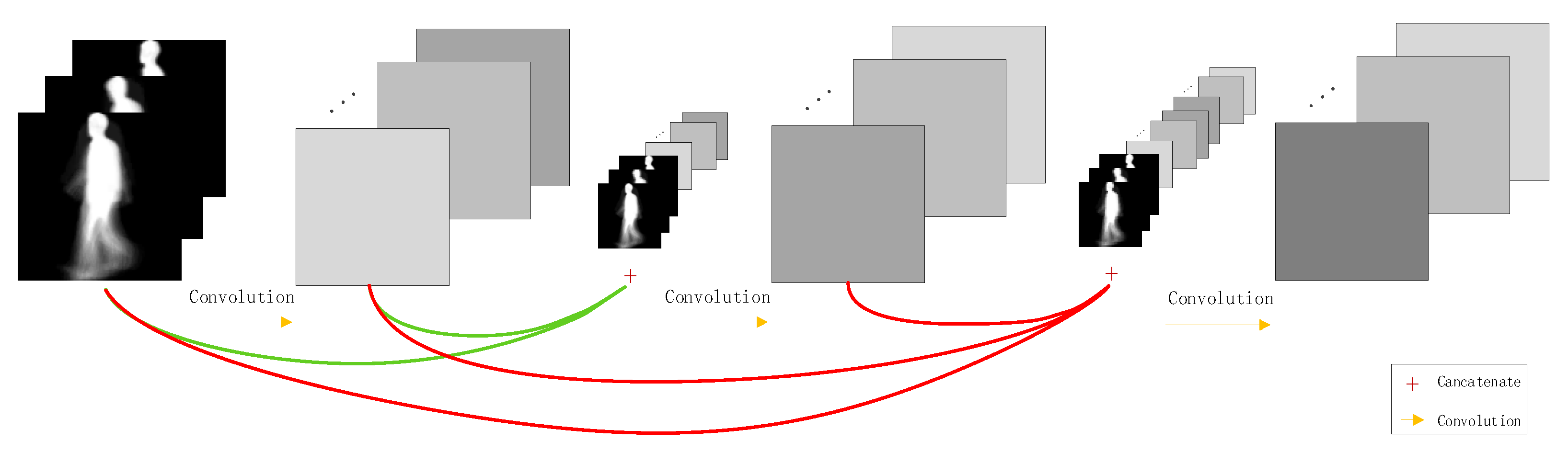

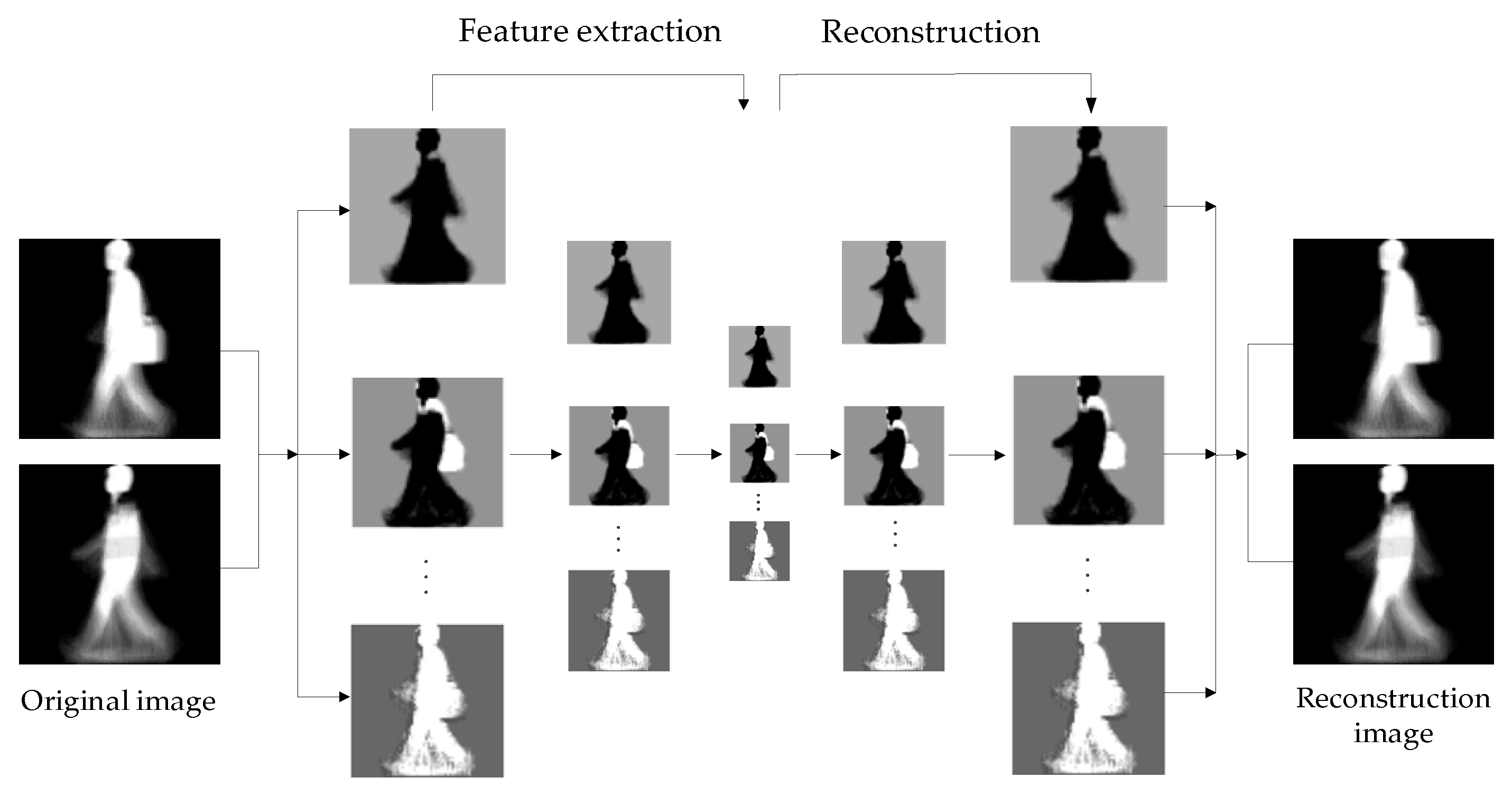

3.2. Auxiliary Stream Network

3.3. Feature Fusion and Recognition

| Algorithm 1 TS-Net Model. |
|---|
| **Training:** |
| Input data: image pair (X1, X2) randomly selected from the training set. |
| for i ← 1 to M (Iteration) |
| input SCAE ← (X1, X2) |
| output S (s1, s2, s3) ← SCAE |
| input DenseNet ← (X1, X2) and S (s1, s2, s3) |
| output Y ← DenseNet |
| do Loss ← ∆, ∆+∆ (α is learning rate) |
| do Loss ← ∆, ∆+∆ |
| end |
| **Testing:** |
| for to (Images of probe set) |
| for to (Images of gallery set) |
| input SCAE ← (, ) |
| output S (s1, s2, s3) ← SCAE |
| input DenseNet ← (, ) and S (s1, s2, s3) |
| output sim ← DenseNet |
| prediction ← vote(max(sim)) |
| end |
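The final step of the testing phase, `prediction ← vote(max(sim))`, can be read in more than one way. The sketch below shows one plausible reading, offered as an assumption rather than the authors' implementation: each probe image votes for the gallery identity with the highest similarity, and the majority wins. The function name `predict_identity` and the toy `similarity` callable are hypothetical stand-ins; in TS-Net the similarity would come from the DenseNet output.

```python
from collections import Counter
from typing import Any, Callable, Sequence, Tuple


def predict_identity(
    probe_images: Sequence[Any],
    gallery: Sequence[Tuple[str, Any]],
    similarity: Callable[[Any, Any], float],
) -> str:
    """Majority vote over per-image best matches (one reading of vote(max(sim)))."""
    if not probe_images or not gallery:
        raise ValueError("probe_images and gallery must be non-empty")

    votes: Counter = Counter()
    for probe in probe_images:
        # max(sim): find the gallery identity most similar to this probe image.
        best_identity, best_sim = None, float("-inf")
        for identity, gallery_image in gallery:
            sim = similarity(probe, gallery_image)
            if sim > best_sim:
                best_identity, best_sim = identity, sim
        # vote: each probe image contributes one vote for its best match.
        votes[best_identity] += 1

    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Toy data: scalars stand in for images, negative distance for similarity.
    toy_gallery = [("A", 0.0), ("B", 1.0)]
    toy_similarity = lambda p, g: -abs(p - g)
    print(predict_identity([0.1, 0.2, 0.9], toy_gallery, toy_similarity))  # prints A
```

In the toy run, two of the three probe values are closest to identity A, so A wins the vote.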

4. Experimental Results and Conclusions

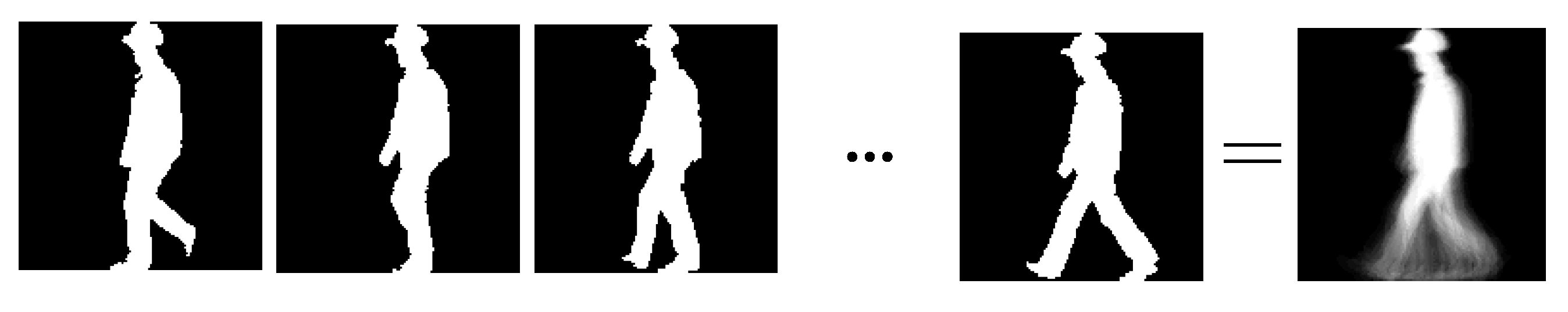

4.1. Dataset

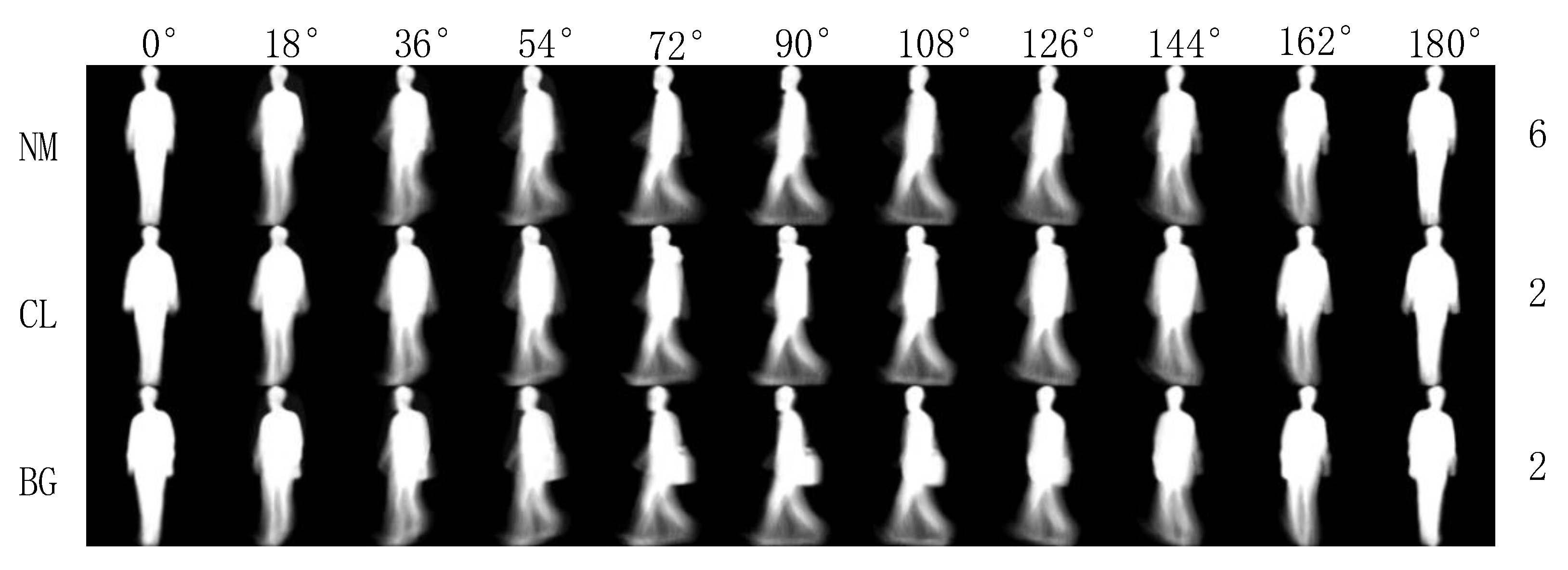

4.1.1. CASIA-B Dataset

4.1.2. UCMP-GAIT Dataset

4.2. Experimental Design

4.3. Model Parameters

4.3.1. Mainstream Network Parameters

4.3.2. Auxiliary Stream Network Parameters

4.4. Experimental Results

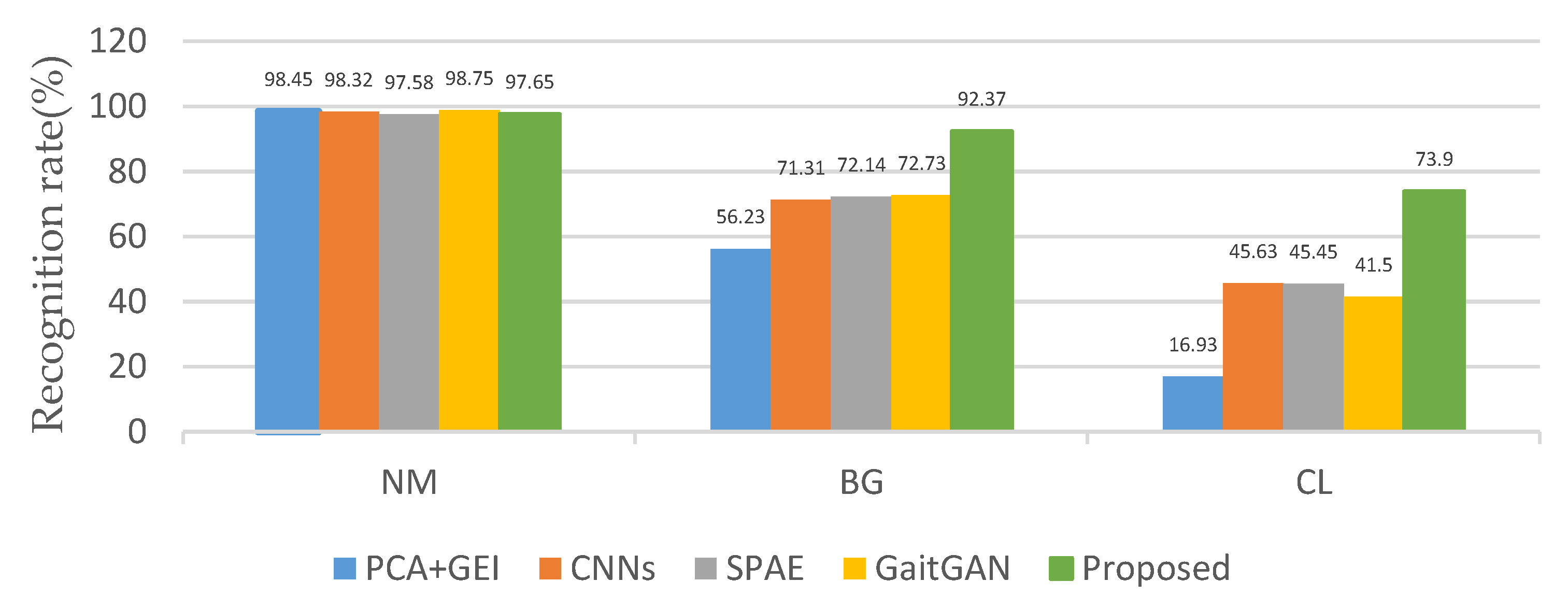

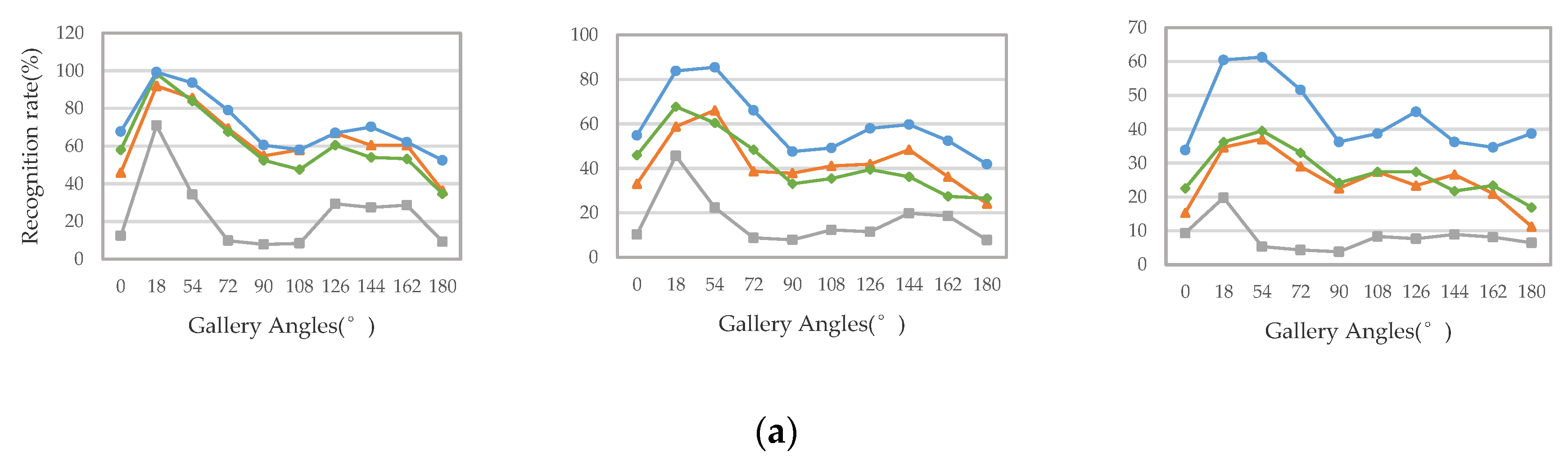

4.5. Comparison with State-of-the-Art Methods

4.6. Efficiency

5. Conclusions and Outlook

Author Contributions

Funding

Conflicts of Interest

| Parameter | Optimization |
|---|---|
| Batch Size | 64 |
| Epochs | 200,000 |
| Learning Rate | 0.0001 |

| Layers | Output Size | Feature Num | Mainstream Neural Network |
|---|---|---|---|
| Convolution | 64 × 64 | 2 → 24 (12 × 2) | 7 × 7 conv, stride 2 |
| Pooling | 64 × 64 | 24 → 24 | 3 × 3 max pool, stride 1 |
| Dense Block (1) | 64 × 64 | 24 → 48 (24 + 12 × 2) | × 2 |
| Compression Layer (1) | 32 × 32 | 64 (48 + 16) → 24 | 1 × 1 conv; 2 × 2 average pool, stride 2 |
| Dense Block (2) | 32 × 32 | 24 → 72 (24 + 12 × 4) | × 4 |
| Compression Layer (2) | 16 × 16 | 104 (72 + 32) → 36 | 1 × 1 conv; 2 × 2 average pool, stride 2 |
| Dense Block (3) | 16 × 16 | 36 → 132 (36 + 12 × 8) | × 8 |
| Compression Layer (3) | 8 × 8 | 196 (132 + 64) → 66 | 1 × 1 conv; 2 × 2 average pool, stride 2 |
| Dense Block (4) | 8 × 8 | 66 → 138 (66 + 12 × 6) | × 6 |
| Classification Layer | 1 × 1 | 138 → 138 | 8 × 8 global average pool; fully connected, sigmoid |
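The feature counts in this table follow a simple pattern that can be checked by hand. The sketch below reproduces them under three assumptions read off the table itself rather than stated in the text: a growth rate of 12 per dense layer, extra channels of 16, 32, and 64 concatenated before each compression layer (taken here to be the auxiliary-stream features s1, s2, s3), and a compression layer that outputs half of the dense-block channel count. The name `trace_channels` is hypothetical.

```python
GROWTH_RATE = 12                 # channels added by each dense layer (from the table)
LAYERS_PER_BLOCK = [2, 4, 8, 6]  # dense layers in blocks 1 to 4 (from the table)
AUX_CHANNELS = [16, 32, 64]      # channels concatenated before each compression layer
COMPRESSION = 0.5                # assumption: inferred from 48→24, 72→36, 132→66


def trace_channels(initial: int = 24):
    """Return (layer name, channels in, channels out) for blocks and compression layers."""
    rows = []
    channels = initial
    for index, n_layers in enumerate(LAYERS_PER_BLOCK):
        dense_out = channels + GROWTH_RATE * n_layers
        rows.append((f"dense{index + 1}", channels, dense_out))
        if index < len(AUX_CHANNELS):
            # Fused input = mainstream channels + auxiliary channels.
            fused = dense_out + AUX_CHANNELS[index]
            channels = int(dense_out * COMPRESSION)
            rows.append((f"compress{index + 1}", fused, channels))
        else:
            channels = dense_out
    return rows


if __name__ == "__main__":
    for name, n_in, n_out in trace_channels():
        print(f"{name}: {n_in} -> {n_out}")
```

Under these assumptions the trace ends at 138 channels, the width of the classification layer input.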

| Layers | Number of Filters | Filter Size | Stride | Batch Norm | Activation Function |
|---|---|---|---|---|---|
| Conv.1 | 16 | 2 × 2 × 2 | 2 | Y | ReLU |
| Conv.2 | 32 | 2 × 2 × 16 | 2 | Y | ReLU |
| Conv.3 | 64 | 2 × 2 × 32 | 2 | Y | ReLU |
| F-Conv.1 | 64 | 2 × 2 × 32 | 1/2 | Y | ReLU |
| F-Conv.2 | 32 | 2 × 2 × 16 | 1/2 | Y | ReLU |
| F-Conv.3 | 16 | 2 × 2 × 2 | 1/2 | Y | ReLU |
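As a rough consistency check, the encoder half of this table (Conv.1 to Conv.3) can be traced with the standard convolution output-size formula. The sketch assumes a 128 × 128 input and zero padding; neither is stated in the table. The input size is inferred from the mainstream network, whose stride-2 first convolution produces a 64 × 64 map. The helper names are hypothetical.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int = 0) -> int:
    """Standard output-size formula for a strided convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def encoder_shapes(input_size: int = 128):
    """Return (height, width, channels) after Conv.1, Conv.2, and Conv.3.

    input_size=128 is an assumption, not a value given in the table.
    """
    shapes = []
    size = input_size
    for filters in (16, 32, 64):  # number of filters per encoder layer (from the table)
        size = conv_out(size, kernel=2, stride=2)  # 2 x 2 filters, stride 2
        shapes.append((size, size, filters))
    return shapes


if __name__ == "__main__":
    for layer, shape in zip(("Conv.1", "Conv.2", "Conv.3"), encoder_shapes()):
        print(layer, shape)
```

With these assumptions the three encoder outputs are 64 × 64 × 16, 32 × 32 × 32, and 16 × 16 × 64, which would line up with the spatial sizes and the extra 16, 32, and 64 channels at the three compression layers of the mainstream network.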

| Gallery view / Probe view (nm05, nm06) | 0° | 18° | 36° | 54° | 72° | 90° | 108° | 126° | 144° | 162° | 180° |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0° | 97.58 | 89.52 | 67.74 | 54.84 | 29.84 | 30.65 | 33.06 | 36.29 | 42.74 | 61.29 | 83.87 |
| 18° | 87.10 | 98.39 | 99.19 | 87.90 | 57.26 | 45.16 | 44.35 | 54.84 | 58.70 | 64.52 | 65.32 |
| 36° | 70.97 | 91.94 | 97.58 | 95.97 | 79.84 | 64.52 | 64.52 | 74.19 | 75.00 | 66.94 | 60.48 |
| 54° | 46.77 | 74.19 | 93.55 | 96.77 | 91.94 | 80.65 | 84.68 | 82.26 | 72.58 | 54.03 | 38.71 |
| 72° | 32.26 | 47.58 | 79.03 | 97.58 | 96.77 | 94.35 | 92.74 | 84.68 | 68.55 | 45.97 | 30.65 |
| 90° | 28.23 | 37.10 | 60.48 | 85.48 | 96.77 | 97.58 | 96.77 | 89.52 | 65.32 | 41.94 | 28.23 |
| 108° | 25.81 | 38.71 | 58.06 | 76.61 | 91.94 | 97.58 | 97.58 | 95.97 | 87.10 | 48.39 | 30.65 |
| 126° | 33.06 | 51.61 | 66.94 | 76.61 | 80.65 | 87.10 | 94.35 | 96.77 | 91.13 | 74.19 | 46.77 |
| 144° | 40.32 | 62.10 | 70.16 | 66.94 | 66.13 | 72.58 | 79.03 | 91.13 | 89.38 | 86.29 | 73.54 |
| 162° | 57.26 | 70.97 | 62.10 | 54.03 | 50.00 | 45.97 | 55.65 | 75.00 | 83.87 | 99.19 | 87.10 |
| 180° | 75.81 | 63.71 | 52.42 | 40.32 | 33.06 | 31.45 | 39.52 | 46.77 | 66.13 | 85.48 | 97.58 |

| Gallery view / Probe view (bg01, bg02) | 0° | 18° | 36° | 54° | 72° | 90° | 108° | 126° | 144° | 162° | 180° |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0° | 91.94 | 75.00 | 54.84 | 35.48 | 20.16 | 16.13 | 24.19 | 27.42 | 37.10 | 56.45 | 62.90 |
| 18° | 79.03 | 95.97 | 83.87 | 66.94 | 41.94 | 32.26 | 40.32 | 49.19 | 54.03 | 61.29 | 54.84 |
| 36° | 52.42 | 82.26 | 90.32 | 87.90 | 66.94 | 49.19 | 60.48 | 70.16 | 62.90 | 48.39 | 42.74 |
| 54° | 37.90 | 62.10 | 85.48 | 91.94 | 79.84 | 67.74 | 72.58 | 70.97 | 70.97 | 45.16 | 35.48 |
| 72° | 20.97 | 32.26 | 66.13 | 91.13 | 98.39 | 93.55 | 89.52 | 77.42 | 54.84 | 41.13 | 25.00 |
| 90° | 17.74 | 24.19 | 47.58 | 71.77 | 91.13 | 91.94 | 93.55 | 78.23 | 50.00 | 32.26 | 19.35 |
| 108° | 22.58 | 31.45 | 49.19 | 71.77 | 85.48 | 88.71 | 93.55 | 91.94 | 62.90 | 37.90 | 25.00 |
| 126° | 27.42 | 40.32 | 58.06 | 70.16 | 74.19 | 73.99 | 90.32 | 93.55 | 82.26 | 53.23 | 36.29 |
| 144° | 33.87 | 52.42 | 59.68 | 58.87 | 45.16 | 48.39 | 68.55 | 87.10 | 88.71 | 81.45 | 48.39 |
| 162° | 52.42 | 61.29 | 52.42 | 38.71 | 36.29 | 30.65 | 41.94 | 57.26 | 73.39 | 89.52 | 75.81 |
| 180° | 59.67 | 53.23 | 41.94 | 30.65 | 23.39 | 16.13 | 27.42 | 32.26 | 57.26 | 84.68 | 90.32 |

| Gallery view / Probe view (cl01, cl02) | 0° | 18° | 36° | 54° | 72° | 90° | 108° | 126° | 144° | 162° | 180° |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0° | 68.55 | 53.23 | 33.87 | 20.97 | 12.10 | 8.87 | 12.90 | 15.32 | 20.16 | 45.16 | 45.97 |
| 18° | 46.77 | 67.74 | 60.48 | 41.13 | 30.65 | 28.23 | 26.61 | 31.34 | 30.65 | 34.68 | 29.03 |
| 36° | 34.68 | 60.48 | 75.81 | 60.48 | 48.39 | 36.29 | 37.90 | 37.10 | 34.68 | 29.84 | 27.41 |
| 54° | 21.77 | 47.58 | 61.29 | 75.00 | 53.23 | 50.00 | 48.39 | 41.94 | 42.74 | 27.42 | 25.00 |
| 72° | 12.90 | 37.10 | 51.61 | 75.00 | 82.26 | 73.39 | 71.77 | 54.84 | 35.48 | 21.77 | 17.74 |
| 90° | 17.74 | 26.61 | 36.29 | 54.03 | 73.39 | 75.81 | 78.23 | 62.90 | 33.06 | 20.16 | 15.32 |
| 108° | 14.52 | 26.61 | 38.71 | 50.81 | 67.74 | 70.97 | 83.87 | 73.39 | 44.35 | 25.81 | 16.13 |
| 126° | 19.35 | 35.48 | 45.16 | 49.19 | 50.00 | 55.65 | 70.97 | 78.23 | 59.68 | 40.32 | 27.42 |
| 144° | 23.39 | 30.65 | 36.29 | 34.68 | 37.90 | 32.26 | 47.58 | 59.68 | 75.81 | 56.45 | 36.29 |
| 162° | 38.71 | 50.00 | 34.68 | 21.77 | 21.77 | 22.58 | 25.81 | 33.87 | 43.55 | 63.71 | 47.58 |
| 180° | 49.19 | 41.94 | 38.71 | 24.19 | 14.52 | 12.90 | 15.32 | 19.35 | 37.90 | 53.23 | 70.16 |

| Probe View | | 0° | 18° | 36° | 54° | 72° | 90° | 108° | 126° | 144° | 162° | 180° | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Before swap | NM | 54.10 | 65.95 | 73.39 | 75.73 | 70.38 | 67.96 | 71.11 | 75.22 | 73.61 | 66.20 | 58.45 | 68.37 |
| | BG | 45.09 | 55.57 | 62.68 | 65.03 | 60.26 | 55.33 | 63.86 | 66.86 | 63.12 | 57.41 | 46.92 | 58.38 |
| | CL | 31.60 | 43.40 | 46.63 | 46.11 | 44.72 | 42.45 | 47.21 | 46.18 | 41.64 | 38.05 | 32.55 | 41.88 |
| After swap | NM | 55.62 | 64.87 | 72.46 | 74.81 | 71.32 | 66.63 | 68.54 | 76.20 | 73.81 | 65.79 | 59.81 | 68.16 |
| | BG | 46.73 | 55.49 | 61.45 | 64.39 | 62.52 | 54.78 | 66.84 | 67.52 | 62.59 | 56.99 | 48.76 | 58.91 |
| | CL | 33.87 | 43.97 | 43.69 | 44.22 | 42.88 | 40.77 | 45.11 | 43.76 | 41.48 | 39.13 | 33.74 | 41.14 |
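The per-view figures in the "Before swap" rows appear to be averages over all 11 gallery views for a fixed probe view, that is, column means of the gallery-by-probe matrices. This is an inference from the numbers, not something the tables state. The sketch below checks it for one case: the probe-view 0° column of the (nm05, nm06) matrix, whose values are copied from that table. The function name `mean_accuracy` is hypothetical.

```python
# Recognition rates (%) for probe view 0 deg against gallery views 0..180 deg,
# copied from the first column of the (nm05, nm06) gallery/probe matrix.
NM_PROBE_0 = [97.58, 87.10, 70.97, 46.77, 32.26, 28.23,
              25.81, 33.06, 40.32, 57.26, 75.81]

# Value tabulated for NM, probe view 0 deg, before swap.
TABULATED_NM_0 = 54.10


def mean_accuracy(values):
    """Arithmetic mean of a list of recognition rates."""
    return sum(values) / len(values)


if __name__ == "__main__":
    computed = mean_accuracy(NM_PROBE_0)
    print(f"computed: {computed:.2f}, tabulated: {TABULATED_NM_0:.2f}")
```

The computed mean agrees with the tabulated value to within a few hundredths of a percentage point, which is consistent with the column-mean reading; small differences may come from rounding in the published matrices.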

| Type of Work | | | | Mean (%) |
|---|---|---|---|---|
| Coal miner | 90.00 | 100.0 | 100.0 | 96.67 |
| Hydraulic support worker | 90.00 | 90.00 | 100.0 | 93.33 |
| Shearer driver | 80.00 | 90.00 | 90.00 | 86.67 |
| All | 86.67 | 93.33 | 96.67 | 92.22 |

| Probe View | | 0° | 18° | 36° | 54° | 72° | 90° | 108° | 126° | 144° | 162° | 180° | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| NM #5-6 | SPAE | 49.3 | 61.5 | 64.4 | 63.6 | 63.7 | 58.1 | 59.9 | 66.5 | 64.8 | 56.9 | 44.0 | 59.3 |
| | MGAN | 54.9 | 65.9 | 72.1 | 74.8 | 71.1 | 65.7 | 70.0 | 75.6 | 76.2 | 68.6 | 53.8 | 68.1 |
| | Proposed | 54.1 | 66.0 | 73.4 | 75.7 | 70.4 | 68.0 | 71.1 | 75.2 | 73.6 | 66.2 | 58.5 | 68.4 |
| BG #1-2 | SPAE | 29.8 | 37.7 | 39.2 | 40.5 | 43.8 | 37.5 | 43.0 | 42.7 | 36.3 | 30.6 | 28.5 | 37.2 |
| | MGAN | 48.5 | 58.5 | 59.7 | 58.0 | 53.7 | 49.8 | 54.0 | 61.3 | 59.5 | 55.9 | 43.1 | 54.7 |
| | Proposed | 45.1 | 55.6 | 62.7 | 65.0 | 60.3 | 55.3 | 63.9 | 66.9 | 63.1 | 57.4 | 46.9 | 58.4 |
| CL #1-2 | SPAE | 18.7 | 21.0 | 25.0 | 25.1 | 25.0 | 26.3 | 28.7 | 30.0 | 23.6 | 23.4 | 19.0 | 24.2 |
| | MGAN | 23.1 | 34.5 | 36.3 | 33.3 | 32.9 | 32.7 | 34.2 | 37.6 | 33.7 | 26.7 | 21.0 | 31.5 |
| | Proposed | 31.6 | 43.4 | 46.6 | 46.1 | 44.7 | 42.5 | 47.2 | 46.2 | 41.6 | 38.1 | 32.6 | 41.9 |

| Methods | Accuracy (%) |
|---|---|
| GEI + PCA | 31.11 |
| CNNs | 85.56 |
| SPAE | 83.33 |
| GaitGAN | 81.11 |
| Proposed | 92.22 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, X.; Liu, J. Gait Recognition Method of Underground Coal Mine Personnel Based on Densely Connected Convolution Network and Stacked Convolutional Autoencoder. Entropy 2020, 22, 695. https://doi.org/10.3390/e22060695
