Abstract

Image quality assessment (IQA) aims to devise computational models to evaluate image quality in a perceptually consistent manner. In this paper, a novel no-reference image quality assessment model based on dual-domain feature fusion is proposed, dubbed as DFF-IQA. Firstly, in the spatial domain, several features about weighted local binary pattern, naturalness and spatial entropy are extracted, where the naturalness features are represented by fitting parameters of the generalized Gaussian distribution. Secondly, in the frequency domain, the features of spectral entropy, oriented energy distribution, and fitting parameters of asymmetrical generalized Gaussian distribution are extracted. Thirdly, the features extracted in the dual-domain are fused to form the quality-aware feature vector. Finally, quality regression process by random forest is conducted to build the relationship between image features and quality score, yielding a measure of image quality. The resulting algorithm is tested on the LIVE database and compared with competing IQA models. Experimental results on the LIVE database indicate that the proposed DFF-IQA method is more consistent with the human visual system than other competing IQA methods.

1. Introduction

Image processing operations such as acquisition, compression, transmission, and restoration often introduce distortions of different types and severities, so perceptual image quality assessment has received increasing attention [1]. IQA can be divided into subjective IQA and objective IQA. The conventional way of measuring image quality is to solicit the opinions of human observers. However, such subjective IQA methods are cumbersome and time-consuming, so they are difficult to incorporate into automatic systems. Objective IQA methods are therefore of greater practical significance [2,3].

Depending on how much reference information is available, objective IQA methods can be divided into three categories: full-reference IQA (FR-IQA) [4,5,6,7,8,9,10,11,12,13,14,15,16,17], reduced-reference IQA (RR-IQA) [18,19] and no-reference IQA (NR-IQA) [20,21,22,23,24,25,26,27,28,29,30,31]. FR-IQA requires the complete reference image, whereas RR-IQA requires only partial information about it. In most practical cases the reference image is not available, so NR-IQA methods are the only ones that can be embedded into real application systems.

According to their scope of application, current NR-IQA methods can be roughly divided into two categories: distortion-specific methods [20,21,22] and general-purpose methods that handle various types of distortion [23,24,25,26,27,28,29,30,31]. Because distortion-specific algorithms need to know the distortion type in advance (e.g., blur, noise, or compression), their scope of application is limited. Therefore, research on general-purpose methods, which include two-stage framework models and global framework models, has become a hot topic in the field of IQA.

At present, BIQI [23], DIIVINE [24], SSEQ [25], and CurveletQA [26] are the representative methods of two-stage framework IQA models. BIQI extracts statistical features from the wavelet coefficients and utilizes distortion classification to judge the specific type of distortion, and then adopts the corresponding regression model to evaluate the image. On the basis of BIQI, DIIVINE obtains sub-band coefficients with different directions by the multi-scale wavelet decomposition and extracts several statistical features to predict the image quality by employing support vector machine (SVM) regression. SSEQ combines local spatial and spectral entropy features extracted from the distorted images after distortion classification. CurveletQA proposes a two-stage framework of distortion classification followed by quality assessment, and a set of statistical features are extracted from a computed image curvelet representation.

Representative global-framework NR-IQA models include BLIINDS-I [27], BLIINDS-II [28], GRNN [29], BRISQUE [30], and NIQE [31]. BLIINDS-I combines features of contrast, sharpness and anisotropy in the discrete cosine transform (DCT) domain, computed after a down-sampling operation on the image, and adopts a probability model to predict image quality. Building on BLIINDS-I, BLIINDS-II predicts the quality score using statistical features extracted from local image blocks. GRNN extracts complementary perceptual features of phase congruency, gradient and entropy, and adopts a generalized regression neural network to establish a mapping between visual features and subjective scores. BRISQUE investigates the statistical regularities of images in the spatial domain and utilizes support vector regression (SVR) to establish a mapping between statistical features and mean opinion score (MOS). NIQE evaluates image quality by measuring the distance between the statistical features of distorted images and those of natural images.

In view of visual perception and statistical characteristics, this paper proposes a novel NR-IQA algorithm based on dual-domain feature fusion, dubbed DFF-IQA. In the spatial domain, features of the weighted local binary pattern (WLBP), naturalness, and spatial entropy are extracted. In the frequency domain, asymmetrical generalized Gaussian distribution (AGGD) fitting parameters of curvelet coefficients, oriented energy distribution (OED), and spectral entropy features are extracted. The features extracted from the two domains are fused to form a quality-aware feature vector, and random forest regression is then employed to predict the image quality score. Finally, we validate the performance of the proposed DFF-IQA method on the LIVE database. In summary, the main contributions of this work are:

- (1) We analyze and extract perceptual features in the dual domain, and the fused quality-aware feature vector is shown to improve the performance of quality evaluation.

- (2) We compare representative FR/NR-IQA models with our DFF-IQA model. The experimental results show that the proposed method performs better and is consistent with human subjective perception.

2. Proposed DFF-IQA Method

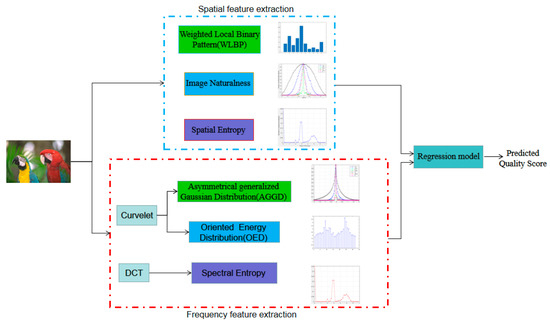

As shown in Figure 1, this paper proposes a novel NR-IQA algorithm based on dual-domain feature fusion, dubbed DFF-IQA. Firstly, in the spatial domain, we extract features of the weighted local binary pattern (WLBP) [32], naturalness and spatial entropy from the distorted image. WLBP describes the texture characteristics of distorted images through the statistics of the gradient-weighted LBP histogram, and the naturalness feature is represented by the fitting parameters of the generalized Gaussian distribution (GGD). Spatial entropy is computed on image blocks. Secondly, in the frequency domain, the DCT and the curvelet transform are applied to the distorted image. In the DCT domain, the spectral entropy feature is extracted from image blocks. In the curvelet domain, an asymmetric generalized Gaussian distribution (AGGD) model is employed to summarize the distribution of the curvelet coefficients of the distorted image, and the oriented energy distribution (OED) feature is further extracted to describe the curvelet coefficients. Thirdly, the features extracted in the two domains are fused to build the quality-aware feature vector. Finally, random forest regression is used to learn the relationship between image features and quality scores, yielding a measure of image quality.

Figure 1. Overview of the proposed DFF-IQA framework.

2.1. Feature Extraction

2.1.1. Weighted Local Binary Pattern (WLBP)

Local binary pattern (LBP) is an operator that effectively describes the local texture of an image and has shown good performance in IQA tasks [33]. In this paper, the rotation-invariant uniform LBP coding is employed, and the gradient magnitude is adopted for weighting. Here, the gradient magnitude of the image ($G$) is obtained using the Prewitt filter. The calculation process is as follows:

$$G = \sqrt{(I * P_h)^2 + (I * P_v)^2} \tag{1}$$

where $I$ is the input image, $P_h$ and $P_v$ are the Prewitt filters in the horizontal and vertical directions, respectively, “*” represents the convolution operation, and $G$ is the gradient magnitude image of $I$.

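We calculate the local rotation-invariant uniform LBP operator by:

$$LBP_{P,R}^{riu2}(g_c) = \begin{cases} \sum_{p=0}^{P-1} z(g_p - g_c), & U(LBP_{P,R}^{ri}) \le 2 \\ P + 1, & \text{otherwise} \end{cases} \tag{2}$$

where $R$ is the radius value, and $P$ represents the number of neighboring points. $g_c$ indicates a center pixel at the position $(x_c, y_c)$ in the corresponding image, and $g_p$ is a neighboring pixel $(x_p, y_p)$ surrounding $g_c$:

$$x_p = x_c + R\cos\left(\frac{2\pi p}{P}\right), \qquad y_p = y_c - R\sin\left(\frac{2\pi p}{P}\right) \tag{3}$$

where $p \in \{0, 1, \dots, P-1\}$ indexes the neighboring pixels sampled at a distance $R$ from $g_c$. In this case, $z(\theta)$ is the step function, defined by:

$$z(\theta) = \begin{cases} 1, & \theta \ge T \\ 0, & \text{otherwise} \end{cases} \tag{4}$$

where $T$ indicates the threshold value. In addition, $U$ is used to compute the number of bitwise transitions:

$$U(LBP_{P,R}^{ri}) = \left| z(g_{P-1} - g_c) - z(g_0 - g_c) \right| + \sum_{p=1}^{P-1} \left| z(g_p - g_c) - z(g_{p-1} - g_c) \right| \tag{5}$$

where $LBP_{P,R}^{ri}$ is the rotation-invariant operator:

$$LBP_{P,R}^{ri} = \min_{k} \left\{ ROR(LBP_{P,R}, k) \right\} \tag{6}$$

where $k \in \{0, 1, \dots, P-1\}$, and $ROR(x, k)$ is the circular bit-wise right shift operator that shifts the tuple $x$ by $k$ positions. Finally, we obtain $LBP_{P,R}^{riu2}$ with a length of $P + 2$.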

Prewitt filters in the horizontal, vertical, main-diagonal, and secondary-diagonal directions are convolved with the image to obtain four directional gradient images. They are defined as $G_d = I * P_d$, $d = 1, \dots, 4$, where $P_d$ represents the Prewitt filter in the $d$-th direction, $I$ denotes the input image, and $G_d$ represents the gradient in that direction. In this work, we use the maximum gradient magnitude O(i, j) calculated by Equation (7) as the LBP weight of each pixel:

$$O(i, j) = \max_{d \in \{1, 2, 3, 4\}} \left| G_d(i, j) \right| \tag{7}$$

where $G_d(i, j)$ represents the values of the gradient in the four directions at pixel (i, j). This yields the final weight map. The gradient magnitudes of pixels with the same LBP pattern are then accumulated, which can be regarded as the gradient-weighted WLBP histogram:

$$h(k) = \sum_{i=1}^{N} \sum_{j=1}^{M} O(i, j)\, \delta\left(LBP_{P,R}^{riu2}(i, j), k\right) \tag{8}$$

$$\delta(x, y) = \begin{cases} 1, & x = y \\ 0, & \text{otherwise} \end{cases} \tag{9}$$

where $O$ represents the maximum directional gradient response, that is, the weight map; $N$ and $M$ represent the length and width of the image, respectively; and $k$ is the LBP encoding pattern. In this paper, the rotation-invariant uniform LBP is used. Finally, we extract 10-dimensional statistical features, from pattern 1 to pattern 10, at a single scale.
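The gradient-weighted histogram described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it fixes P = 8 and R = 1 with the eight integer-offset neighbours (no interpolation), uses a threshold of 0, and the two diagonal Prewitt kernels are a common convention chosen here for illustration. The names `KERNELS`, `RING`, `riu2_code`, `max_gradient` and `wlbp_histogram` are hypothetical.

```python
# Directional Prewitt kernels (the diagonal variants are an assumed convention).
KERNELS = [
    [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]],    # horizontal
    [[-1, -1, -1], [0, 0, 0], [1, 1, 1]],    # vertical
    [[0, 1, 1], [-1, 0, 1], [-1, -1, 0]],    # main diagonal
    [[-1, -1, 0], [-1, 0, 1], [0, 1, 1]],    # secondary diagonal
]
# The 8 neighbours of a pixel, listed in circular order (P = 8, R = 1).
RING = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]


def riu2_code(img, i, j, threshold=0):
    """Rotation-invariant uniform LBP code of pixel (i, j), in 0..9."""
    bits = [1 if img[i + di][j + dj] - img[i][j] >= threshold else 0
            for di, dj in RING]
    # Count 0/1 transitions around the circle (index -1 wraps to the last bit).
    transitions = sum(bits[p] != bits[p - 1] for p in range(len(bits)))
    return sum(bits) if transitions <= 2 else len(bits) + 1


def max_gradient(img, i, j):
    """Largest absolute response of the four directional kernels at (i, j)."""
    return max(
        abs(sum(k[a][b] * img[i + a - 1][j + b - 1]
                for a in range(3) for b in range(3)))
        for k in KERNELS
    )


def wlbp_histogram(img):
    """Accumulate the gradient weight of every interior pixel into its LBP bin."""
    hist = [0.0] * 10
    for i in range(1, len(img) - 1):
        for j in range(1, len(img[0]) - 1):
            hist[riu2_code(img, i, j)] += max_gradient(img, i, j)
    return hist


if __name__ == "__main__":
    step_edge = [[0, 0, 10, 10, 10] for _ in range(5)]
    print(wlbp_histogram(step_edge))
```

Border pixels are skipped in this sketch for simplicity; a production implementation would need to choose a padding strategy.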

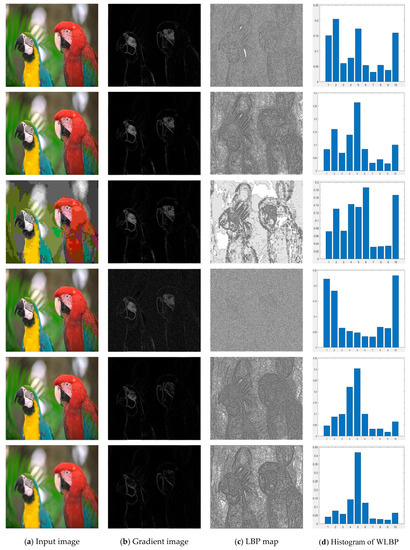

As shown in Figure 2d, the WLBP distribution differs significantly across distortion types. The abscissa of the histogram is the LBP coding pattern, from pattern 1 to pattern 10. In the histogram of the pristine natural image, patterns 1, 2, 5, and 10 dominate, and the other patterns are relatively rare. When the image is distorted, the pattern distribution changes significantly. Therefore, the spatial distortion of an image can be described by extracting its WLBP feature.

Figure 2. Pristine natural image and five distorted versions of it from the LIVE IQA database (“parrots” in LIVE database); from left column to right column are the input image, gradient image, LBP map, and histogram of WLBP. From top to bottom of the first column are pristine image with DMOS = 0, JPEG2000 compressed image with DMOS = 45.8920, JPEG compressed image with DMOS = 46.8606, white noise image with DMOS = 47.0386, fast-fading distorted image with DMOS = 44.0640, and Gaussian blur image with DMOS = 49.1911.

2.1.2. Naturalness Feature

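The locally mean subtracted contrast normalized (MSCN) coefficients have been successfully applied to measure image naturalness [30]. For each distorted image, its MSCN coefficients can be calculated by:

$$\hat{I}(i, j) = \frac{I(i, j) - \mu(i, j)}{\sigma(i, j) + C} \tag{10}$$

where $I$ is the input image, $C$ is a constant that prevents instabilities when the denominator tends to zero, and $\mu$ and $\sigma$ are the local mean and local standard deviation of the distorted image, respectively. The calculation formulas are shown in Equations (11) and (12).

$$\mu(i, j) = \sum_{k=-K}^{K} \sum_{l=-L}^{L} w_{k,l}\, I(i + k, j + l) \tag{11}$$

$$\sigma(i, j) = \sqrt{\sum_{k=-K}^{K} \sum_{l=-L}^{L} w_{k,l} \left( I(i + k, j + l) - \mu(i, j) \right)^2} \tag{12}$$

where $w = \{ w_{k,l} \mid k = -K, \dots, K,\ l = -L, \dots, L \}$ represents a 2D circularly-symmetric Gaussian weighting function sampled out to three standard deviations and rescaled to unit volume. The window size is fixed in our implementation.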
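As a rough illustration of the MSCN computation, the following pure-Python sketch normalizes each pixel by a Gaussian-weighted local mean and standard deviation. The 7 × 7 window (half-width 3), the Gaussian width of 7/6, the constant C = 1, and the edge-replicating border handling are assumptions borrowed from common BRISQUE-style implementations, not values confirmed by this paper. The function names `gaussian_window` and `mscn` are hypothetical.

```python
import math


def gaussian_window(k=3, sigma=7 / 6):
    """(2k+1) x (2k+1) circularly-symmetric Gaussian, rescaled to sum to 1."""
    w = [[math.exp(-(a * a + b * b) / (2 * sigma * sigma))
          for b in range(-k, k + 1)] for a in range(-k, k + 1)]
    total = sum(map(sum, w))
    return [[v / total for v in row] for row in w]


def mscn(image, k=3, c=1.0):
    """Mean-subtracted contrast-normalized coefficients of a 2D list image."""
    rows, cols = len(image), len(image[0])
    w = gaussian_window(k)
    offsets = [(a, b) for a in range(-k, k + 1) for b in range(-k, k + 1)]

    def px(i, j):
        # Replicate edge pixels outside the image (an assumed border policy).
        return image[min(max(i, 0), rows - 1)][min(max(j, 0), cols - 1)]

    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            mu = sum(w[a + k][b + k] * px(i + a, j + b) for a, b in offsets)
            var = sum(w[a + k][b + k] * (px(i + a, j + b) - mu) ** 2
                      for a, b in offsets)
            out[i][j] = (image[i][j] - mu) / (math.sqrt(var) + c)
    return out


if __name__ == "__main__":
    spot = [[0.0] * 9 for _ in range(9)]
    spot[4][4] = 100.0
    print(round(mscn(spot)[4][4], 3))
```

The nested loops are written for readability; real images would call for a vectorized or separable filter.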

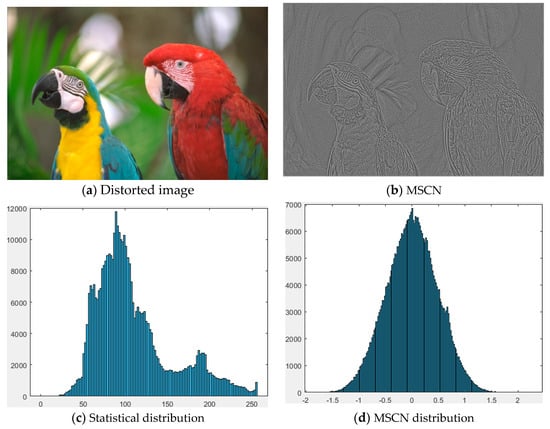

Figure 3a,b shows a JP2K-distorted image and its MSCN coefficients, respectively; Figure 3c,d shows the histogram of the distorted image and the histogram of its MSCN coefficients, respectively. As shown in Figure 3c,d, the distribution of the MSCN coefficients differs markedly from that of the distorted image itself and is approximately Gaussian. Therefore, the distribution of the MSCN coefficients can be fitted by a GGD to represent the degree of naturalness [30].

Figure 3. (a) Distorted image (“parrots” in LIVE database, JP2K compressed image with DMOS = 45.8920); (b) MSCN coefficient image corresponding to (a); (c) Statistical distribution of the distorted image; (d) Histogram of the MSCN coefficients.

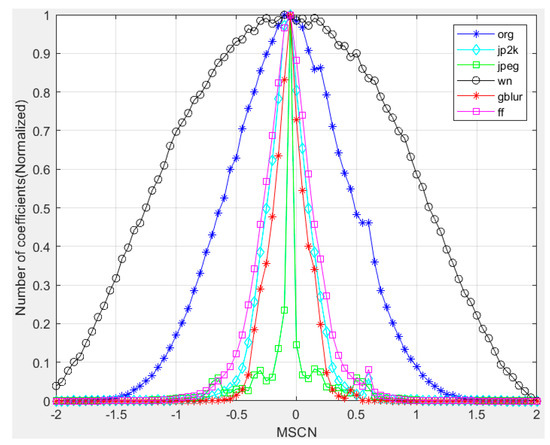

Figure 4 plots a histogram of MSCN coefficients for a pristine natural image and for various distorted versions of it. Notice how the pristine image exhibits a Gaussian-like appearance, while each distortion modifies the statistics in its own characteristic way. For example, blur creates a more Laplacian appearance, while white-noise distortion appears to reduce the weight of the tail of the histogram. We have found that a generalized Gaussian distribution (GGD) can be used to effectively capture a broader spectrum of distorted image statistics, which often exhibit changes in the tail behaviour (i.e., kurtosis) of the empirical coefficient distributions. The GGD with zero mean is given by:

$$f(x; \alpha, \sigma^2) = \frac{\alpha}{2 \beta\, \Gamma(1/\alpha)} \exp\left( -\left( \frac{|x|}{\beta} \right)^{\alpha} \right) \tag{13}$$

where

$$\beta = \sigma \sqrt{\frac{\Gamma(1/\alpha)}{\Gamma(3/\alpha)}} \tag{14}$$

where $\Gamma(\cdot)$ is the gamma function:

$$\Gamma(a) = \int_{0}^{\infty} t^{a-1} e^{-t}\, dt, \quad a > 0 \tag{15}$$

Figure 4. Histogram of MSCN coefficients for a reference image and its various distorted versions. Distortions from the LIVE IQA database. org: original image (i.e., pristine natural image). jp2k: JPEG2000. jpeg: JPEG compression. wn: additive white Gaussian noise. blur: Gaussian blur. ff: Rayleigh fast-fading channel simulation.
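One common way to obtain the GGD fitting parameters is moment matching: the ratio (E|x|)² / E[x²] depends only on the shape parameter, so the shape can be found by a one-dimensional search. The sketch below assumes this estimator and a grid search over shapes 0.2 to 10; the paper does not state which fitting procedure it uses, and the names `ggd_ratio` and `fit_ggd` are hypothetical.

```python
import math
import random


def ggd_ratio(alpha):
    """Theoretical (E|x|)^2 / E[x^2] of a zero-mean GGD with shape alpha."""
    return math.gamma(2 / alpha) ** 2 / (
        math.gamma(1 / alpha) * math.gamma(3 / alpha))


def fit_ggd(samples):
    """Return (shape, variance) of a zero-mean GGD by moment matching."""
    n = len(samples)
    mean_abs = sum(abs(s) for s in samples) / n
    variance = sum(s * s for s in samples) / n   # zero mean is assumed
    target = mean_abs ** 2 / variance
    grid = [0.2 + 0.001 * k for k in range(9801)]   # shapes 0.2 .. 10.0
    shape = min(grid, key=lambda a: abs(ggd_ratio(a) - target))
    return shape, variance


if __name__ == "__main__":
    random.seed(0)
    gaussian = [random.gauss(0.0, 1.5) for _ in range(20000)]
    # A Gaussian sample should give a shape near 2 and a variance near 2.25.
    print(fit_ggd(gaussian))
```

A shape of 2 corresponds to a Gaussian and a shape of 1 to a Laplacian, which is why the estimated shape is informative about the distortions visible in Figure 4.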

2.1.3. Local Spatial Entropy and Spectral Entropy

Although global entropy can reflect the overall information in an image, it cannot reflect the details in the image. Therefore, this paper uses entropies computed from local image blocks, on both the spatial pixel values of each block and its DCT coefficients [25].

The spatial entropy is computed by:

$$E_s = -\sum_{x} p(x) \log_2 p(x) \tag{16}$$

where $x$ are the pixel values in a local block, with empirical probability density $p(x)$.

The block DCT coefficient matrix $C$ is first computed on 8 × 8 blocks. Using the DCT rather than the DFT reduces block edge energy in the transform coefficients. The DCT coefficients are normalized by the following equation:

$$P(i, j) = \frac{C(i, j)^2}{\sum_{i} \sum_{j} C(i, j)^2} \tag{17}$$

where $1 \le i \le 8$, $1 \le j \le 8$, and $i, j \ne 1$ (DC is excluded). Then the local spectral entropy is computed by:

$$E_f = -\sum_{i} \sum_{j} P(i, j) \log_2 P(i, j) \tag{18}$$

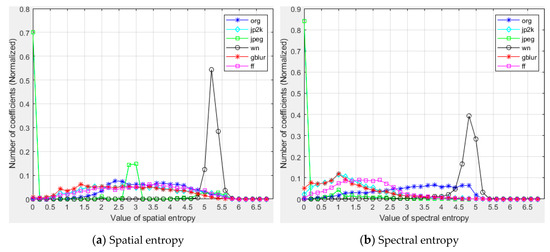

To illustrate the behavior of the local spatial entropy and spectral entropy under different degrees and types of distortion, we conducted a series of validation experiments on images. As shown in Figure 5, the undistorted image (org) has a spatial entropy histogram that is left-skewed, and its spectral entropy histogram has a similar distribution. Different types of distortion (JP2K and JPEG compression, noise, blur and fast-fading) exert systematically different influences on the local spatial and spectral entropy. Therefore, we utilize the skewness and mean of the entropy values as features to measure image quality.

Figure 5. Histograms of spatial and spectral entropy values for different types of distortion. The ordinate represents the coefficient normalized between 0 and 1.
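The two block entropies can be sketched as follows. This is an illustrative pure-Python version assuming an orthonormal 2D DCT-II and base-2 logarithms; the near-zero energy guard and the function names `spatial_entropy`, `dct2` and `spectral_entropy` are choices made here, not details taken from the paper.

```python
import math


def spatial_entropy(block):
    """Shannon entropy (bits) of the pixel-value histogram of one block."""
    pixels = [p for row in block for p in row]
    counts = {}
    for p in pixels:
        counts[p] = counts.get(p, 0) + 1
    n = len(pixels)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def dct2(block):
    """Naive orthonormal 2D DCT-II of a square block."""
    n = len(block)
    scale = [math.sqrt(1 / n)] + [math.sqrt(2 / n)] * (n - 1)
    cos = [[math.cos((2 * x + 1) * u * math.pi / (2 * n)) for x in range(n)]
           for u in range(n)]
    return [[scale[u] * scale[v] * sum(block[x][y] * cos[u][x] * cos[v][y]
                                       for x in range(n) for y in range(n))
             for v in range(n)] for u in range(n)]


def spectral_entropy(block):
    """Entropy (bits) of the normalized AC power spectrum; DC is excluded."""
    coeffs = dct2(block)
    n = len(block)
    power = [coeffs[u][v] ** 2
             for u in range(n) for v in range(n) if (u, v) != (0, 0)]
    total = sum(power)
    if total < 1e-12:          # flat block: no AC energy to distribute
        return 0.0
    return -sum(p / total * math.log2(p / total) for p in power if p > 0)


if __name__ == "__main__":
    half = [[0] * 4 + [255] * 4 for _ in range(8)]
    print(spatial_entropy(half), spectral_entropy(half))
```

In a full pipeline these functions would be applied to every 8 × 8 block, after which the mean and skewness of the resulting entropy values form the features.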

2.1.4. AGGD Fitting Parameter of Curvelet Coefficients

The Curvelet transform is a higher dimensional generalization of the Wavelet transform designed to represent images at different scales and different angles [26]. Therefore, it is characterized by the ability to capture the information along the edges of the image well.

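Taking $f[t_1, t_2]$ ($0 \le t_1, t_2 < n$) as the input in the Cartesian coordinate system, the discrete curvelet transform of a 2-D function is computed by:

$$\theta(j, l, k) = \sum_{0 \le t_1, t_2 < n} f[t_1, t_2]\, \overline{\varphi_{j,l,k}[t_1, t_2]} \tag{19}$$

where $\varphi_{j,l,k}$ represents a curvelet of scale $j$ at position index $k$, with angle index $l$, and $(t_1, t_2)$ denotes coordinates in the spatial domain [34].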

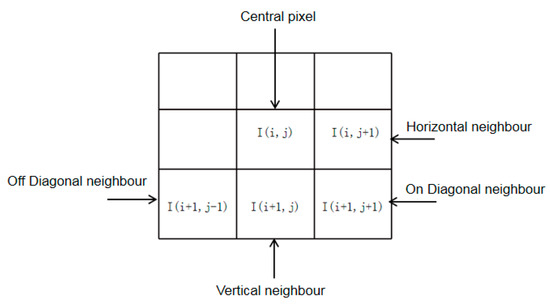

After the curvelet transform is applied to the distorted image, we obtain the curvelet coefficients. We then compute MSCN coefficients from the curvelet coefficients according to Equation (10) described in Section 2.1.2. While MSCN coefficients are more homogeneous for pristine images, the signs of adjacent coefficients also exhibit a regular structure, which is disturbed in the presence of distortion. We model this structure using the empirical distributions of pairwise products of neighboring MSCN coefficients along four orientations: horizontal (H), vertical (V), main-diagonal (D1), and secondary-diagonal (D2), as depicted in Figure 6.

Figure 6. Various paired products computed in order to quantify neighboring statistical relationships. Pairwise products are computed along four orientations (horizontal, vertical, main-diagonal, and secondary-diagonal) at a distance of 1 pixel.

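Mathematically, the products are computed by:

$$H(i, j) = MI(i, j)\, MI(i, j + 1)$$

$$O_b(i, j) = MI(i, j)\, MI(i + 1, j + b), \quad b \in \{0, 1, -1\}$$

where $MI$ denotes the MSCN coefficients computed from the curvelet coefficients, $H$ represents the pairwise product of each MSCN coefficient with its horizontal neighbor, and $O_b$ represents the pairwise product of each MSCN coefficient with its neighbor in the V, D1 and D2 directions, for $b$ set to 0, 1 and −1, respectively.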
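The four paired-product maps can be illustrated with a few lines of Python. The sketch assumes the usual neighbor convention (right neighbor for H, lower neighbor for V, lower-right for D1, lower-left for D2) and simply skips pairs that fall outside the coefficient array; `paired_products` is a hypothetical name.

```python
def paired_products(m):
    """Products of each coefficient with its neighbour along four orientations.

    `m` is a 2D list of MSCN coefficients; the result maps an orientation
    name to the flat list of products for all pairs inside the array.
    """
    rows, cols = len(m), len(m[0])
    shifts = {"H": (0, 1), "V": (1, 0), "D1": (1, 1), "D2": (1, -1)}
    products = {}
    for name, (di, dj) in shifts.items():
        values = []
        for i in range(rows):
            for j in range(cols):
                ni, nj = i + di, j + dj
                if 0 <= ni < rows and 0 <= nj < cols:
                    values.append(m[i][j] * m[ni][nj])
        products[name] = values
    return products


if __name__ == "__main__":
    print(paired_products([[1, 2], [3, 4]]))
```

The histogram of each returned list is what the AGGD model is subsequently fitted to.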

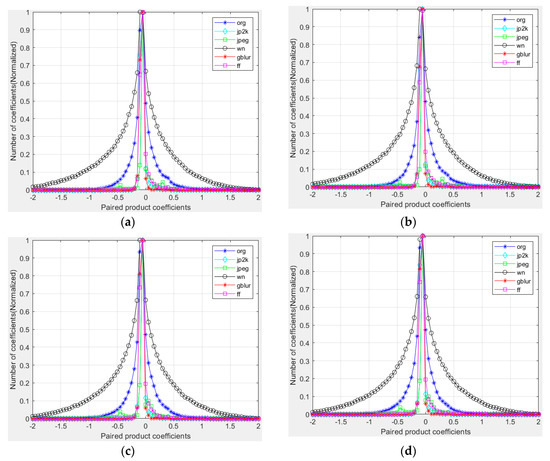

In order to visualize how paired products vary in the presence of distortion, in Figure 7, we plot histograms of paired products along each of the four orientations, for a reference image and for distorted versions of it. Figure 7a–d are the histograms of the pairwise product of the center pixel and horizontal, vertical, main diagonal, and secondary-diagonal MSCN coefficients.

Figure 7. Histograms of paired products of MSCN coefficients of a natural undistorted image and various distorted versions of it. (a) Horizontal; (b) Vertical; (c) Main-diagonal; (d) Secondary-diagonal. Distortions from the LIVE IQA database. jp2k: JPEG2000. jpeg: JPEG compression. wn: additive white Gaussian noise. gblur: Gaussian blur. ff: Rayleigh fast-fading channel simulation.

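We use the zero-mean asymmetric generalized Gaussian distribution (AGGD) model to fit the histograms of the pairwise products calculated in the four directions:

$$f(x; \nu, \sigma_l^2, \sigma_r^2) = \begin{cases} \dfrac{\nu}{(\beta_l + \beta_r)\, \Gamma(1/\nu)} \exp\left( -\left( \dfrac{-x}{\beta_l} \right)^{\nu} \right), & x < 0 \\[2ex] \dfrac{\nu}{(\beta_l + \beta_r)\, \Gamma(1/\nu)} \exp\left( -\left( \dfrac{x}{\beta_r} \right)^{\nu} \right), & x \ge 0 \end{cases}$$

where

$$\beta_l = \sigma_l \sqrt{\frac{\Gamma(1/\nu)}{\Gamma(3/\nu)}}, \qquad \beta_r = \sigma_r \sqrt{\frac{\Gamma(1/\nu)}{\Gamma(3/\nu)}}$$

where $\nu$ is the shape parameter controlling the statistical distribution, and $\sigma_l$ and $\sigma_r$ are the scale parameters of the left and right sides, respectively. When $\sigma_l = \sigma_r$, the AGGD model reduces to the generalized Gaussian model (GGD). In addition, we use the three parameters mentioned above to calculate $\eta$ as an additional feature:

$$\eta = (\beta_r - \beta_l) \frac{\Gamma(2/\nu)}{\Gamma(1/\nu)}$$

Finally, the AGGD fitting parameters of the curvelet coefficients are extracted as $f_{AGGD} = \{\nu, \sigma_l^2, \sigma_r^2, \eta\}$.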
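A frequently used way to estimate the AGGD parameters is the moment-matching procedure popularized by BRISQUE-style implementations: the left and right scales come from the negative and positive samples separately, and the shape is found by a grid search. The sketch below assumes that estimator, which this paper does not confirm, and assumes the input contains both negative and positive values; `fit_aggd` is a hypothetical name.

```python
import math
import random


def fit_aggd(samples):
    """Return (shape, sigma_left, sigma_right, eta) by moment matching."""
    left = [s for s in samples if s < 0]
    right = [s for s in samples if s > 0]
    sigma_l = math.sqrt(sum(s * s for s in left) / len(left))
    sigma_r = math.sqrt(sum(s * s for s in right) / len(right))

    n = len(samples)
    gamma_hat = sigma_l / sigma_r
    r_hat = (sum(abs(s) for s in samples) / n) ** 2 / (
        sum(s * s for s in samples) / n)
    # Correct the moment ratio for the asymmetry before matching the shape.
    target = r_hat * (gamma_hat ** 3 + 1) * (gamma_hat + 1) / (
        gamma_hat ** 2 + 1) ** 2

    def ratio(nu):
        return math.gamma(2 / nu) ** 2 / (
            math.gamma(1 / nu) * math.gamma(3 / nu))

    grid = [0.2 + 0.001 * k for k in range(9801)]   # shapes 0.2 .. 10.0
    nu = min(grid, key=lambda a: abs(ratio(a) - target))

    spread = math.sqrt(math.gamma(1 / nu) / math.gamma(3 / nu))
    beta_l, beta_r = sigma_l * spread, sigma_r * spread
    eta = (beta_r - beta_l) * math.gamma(2 / nu) / math.gamma(1 / nu)
    return nu, sigma_l, sigma_r, eta


if __name__ == "__main__":
    random.seed(1)
    symmetric = [random.gauss(0.0, 1.0) for _ in range(20000)]
    print(fit_aggd(symmetric))
```

For a symmetric sample the two scales coincide and the extra feature is close to zero, which matches the statement that the AGGD reduces to the GGD in that case.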

2.1.5. Oriented Energy Distribution (OED)

Cortical neurons are highly sensitive to orientation energy in images, whereas image distortion can modify the orientation energy distribution in an unnatural manner. The curvelet transform is a rich source of orientation information on images and their distortions [26]. In order to describe changes in the energy distribution in curvelet domain, we utilize the mean of the logarithm of the magnitude of the curvelet coefficients in all scales as an energy measure to calculate the energy differences between the adjacent layers and interval layers. The energy statistical function on different scales is calculated by:

where is a set of coefficients of the scale matrix’s set with scale index , and represent energy differences between the adjacent layers and interval layers.

At the same time, the curvelet transform has rich directional information on the reference and distorted images. The magnitude of oriented energy is different in various categories of distortion. The average kurtosis m can be selected as the quality feature.

Previous studies [35,36,37,38,39] have found that image distortion processes affect image anisotropy. To capture this, we calculate the variation of the non-cardinal orientation energies [40]:

where and are the sample mean and standard deviation of the non-cardinal orientation energies, and is employed to capture the degree of anisotropy of the image, and is used as a quality feature. Thus, we obtain an eight-dimensional feature group, which describes the oriented energy distribution, referred to as fOED = [m,,,,,,,].

2.2. Pooling Strategy

The features extracted from the two domains are fused to form a multi-dimensional feature vector [41,42,43,44,45,46]. After feature extraction, the quality regression from feature space to image quality is conducted, which can be denoted as

$$Q = f(\mathbf{x})$$

where $f(\cdot)$ is a quality regression function achieved by the feature pooling strategy, $\mathbf{x}$ represents the extracted feature vector, and $Q$ is the quality of the tested image.

At present, learning-based methods [25,26,30] are widely used in the feature pooling stage of IQA, such as support vector regression (SVR), random forest (RF) and back-propagation (BP) neural networks. SVR is relatively fast in regression; however, it is prone to over-fitting. BP neural networks are rarely employed because of their high complexity. In the learning process of RF, a large number of decision trees are generated. Each decision tree gives its own prediction, and the final regression score is obtained by averaging the predictions of all decision trees. Many studies have shown that RF has higher prediction accuracy and is less prone to over-fitting [47,48,49], and that it performs better than SVR in predicting the quality of color images. Therefore, RF is used in this paper to learn the mapping between feature vectors and the mean opinion score (MOS), so as to obtain the final quality score.

3. Experimental Results and Analysis

3.1. Database and Evaluation Criterion

In order to verify the effectiveness of the proposed algorithm, we tested the performance of DFF-IQA on the LIVE IQA database [50], which contains 29 reference images distorted by five distortion types (white noise, JPEG and JP2K compression, Gaussian blur, and Rayleigh fast fading), yielding 779 distorted images. Each distorted image is provided with a Difference Mean Opinion Score (DMOS) value, which represents the human subjective score of the image. DMOS values range from 0 to 100; the larger the DMOS value, the more severe the image distortion. Some examples of reference scenes in the LIVE database are shown in Figure 8.

Figure 8. Some examples of reference scenes in the LIVE database. (a–i) include “bikes scene”, “buildings scene”, “caps scene”, “lighthouse2 scene”, “monarch scene”, “ocean scene”, “parrots scene”, “plane scene” and “rapids scene” (not listed one by one due to layout reasons).

The Pearson linear correlation coefficient (PLCC), Spearman rank order correlation coefficient (SROCC) and root mean square error (RMSE) were used to measure the agreement between a set of subjective quality scores $X$ and a set of predicted visual quality scores $Y$. Better agreement with human perception means a value close to 0 for RMSE and a value close to 1 for PLCC and SROCC. The calculation processes of PLCC, SROCC and RMSE are shown in Equations (30)–(32), respectively.

$$PLCC = \frac{cov(X, Y)}{\sigma(X)\, \sigma(Y)} \tag{30}$$

$$SROCC = 1 - \frac{6 \sum_{i=1}^{N} d_i^2}{N (N^2 - 1)} \tag{31}$$

where $cov(X, Y)$ represents the covariance between $X$ and $Y$; $\sigma(\cdot)$ represents the standard deviation; $d_i$ is the rank difference of the $i$-th evaluation sample in $X$ and $Y$; and $N$ is the number of samples.

$$RMSE = \sqrt{\frac{1}{N} \sum_{i=1}^{N} (x_i - y_i)^2} \tag{32}$$

where $N$ is the number of samples; $x_i$ is the subjective value (MOS/DMOS); and $y_i$ is the value predicted by the IQA model.
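The three criteria can be written directly from their definitions. The following is a small standard-library sketch; the rank-difference form of SROCC used here assumes there are no tied scores, and the names `plcc`, `srocc` and `rmse` are chosen for illustration.

```python
import math


def plcc(x, y):
    """Pearson linear correlation: covariance over the product of std devs."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def _ranks(values):
    """1-based rank of every element (ties are assumed not to occur)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        ranks[index] = rank
    return ranks


def srocc(x, y):
    """Spearman rank-order correlation from squared rank differences."""
    n = len(x)
    d2 = sum((a - b) ** 2 for a, b in zip(_ranks(x), _ranks(y)))
    return 1 - 6 * d2 / (n * (n * n - 1))


def rmse(x, y):
    """Root mean square error between subjective and predicted scores."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)) / len(x))


if __name__ == "__main__":
    dmos = [10.0, 20.0, 30.0, 40.0, 50.0]
    predicted = [12.0, 24.0, 35.0, 34.5, 60.0]
    print(plcc(dmos, predicted), srocc(dmos, predicted), rmse(dmos, predicted))
```

When ties are present, a tie-aware variant (for example, Pearson correlation computed on average ranks) is the safer choice.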

3.2. Performance Analysis of Different Features

Overall, the proposed method extracts five types of quality perception features from the distorted image, as tabulated in Table 1.

Table 1. Features used for the proposed IQA method.

Table 2 shows the performance comparison of different features on the specific distortion types of the LIVE database. The overall performance of the combination of the five features is better than that of each single feature, which shows that the features are reasonably designed and complementary.

Table 2. Performance comparison of different features (LIVE database).

3.3. Overall Performance Analysis

We compared the performance of the proposed algorithm (DFF-IQA) with three FR-IQA models (PSNR, SSIM [4] and VIF [7]) and five other NR-IQA algorithms (BIQI [23], DIIVINE [24], BLIINDS-II [28], BRISQUE [30] and SSEQ [25]) on individual distortion types over the LIVE database. To make a fair comparison, we performed a similar random 20% test-set selection 1000 times to obtain the median performance indices of the FR algorithms, since the FR algorithms do not need training. In addition, we only tested the FR approaches on the distorted images (excluding the reference images of the LIVE IQA database). For the NR approaches, the same random 80–20% train-test trials were conducted and the median performance was treated as the overall performance index. We also calculated the standard deviations (STD) of the performance indices to judge the stability of the algorithms (Table 6). Higher PLCC and SROCC together with lower STD and RMSE indicate better quality prediction performance. The results are shown in Table 3, Table 4, Table 5 and Table 6.
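The repeated random-split protocol can be sketched as a small helper that shuffles the data, holds out 20% for testing, and reports the median and standard deviation of a score over all trials. The callables `train_fn` and `score_fn`, the stand-in one-parameter model, and the sample-level (rather than content-level) split are all assumptions made for illustration; the paper does not specify these details.

```python
import math
import random
import statistics


def repeated_split_eval(samples, train_fn, score_fn,
                        trials=1000, test_fraction=0.2, seed=0):
    """Median and standard deviation of a score over random train-test splits."""
    rng = random.Random(seed)
    n_test = max(1, round(test_fraction * len(samples)))
    scores = []
    for _ in range(trials):
        shuffled = samples[:]
        rng.shuffle(shuffled)
        test, train = shuffled[:n_test], shuffled[n_test:]
        model = train_fn(train)
        scores.append(score_fn(model, test))
    return statistics.median(scores), statistics.pstdev(scores)


def fit_slope(train):
    """Stand-in 'model': least-squares slope through the origin."""
    return sum(x * y for x, y in train) / sum(x * x for x, _ in train)


def rmse_of(slope, test):
    """RMSE of the stand-in model on held-out (feature, score) pairs."""
    return math.sqrt(sum((slope * x - y) ** 2 for x, y in test) / len(test))


if __name__ == "__main__":
    data = [(float(x), 2.0 * x) for x in range(1, 51)]
    print(repeated_split_eval(data, fit_slope, rmse_of, trials=100))
```

In practice `train_fn` would fit the quality regressor on the training features and `score_fn` would return SROCC, PLCC or RMSE on the held-out images.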

Table 3. Median SROCC across 1000 train-test trials on the LIVE IQA database. The proposed approach shows the best performance on the individual distortion types JP2K, JPEG and Noise, and on all distortion types combined.

Table 4. Median PLCC across 1000 train-test trials on the LIVE IQA database. The VIF model shows the best performance on the individual distortion types JP2K, Blur and FF. However, the proposed method shows the best index on the whole LIVE database.

Table 5. Median RMSE across 1000 train-test trials on the LIVE IQA database. The proposed method shows the second-best performance on the whole database; the best IQA model is VIF.

Table 6. Standard deviation of SROCC, PLCC and RMSE across 1000 train-test trials on the LIVE database. The proposed method ranks first in PLCC STD, second in SROCC STD, and third in RMSE STD.

Table 3, Table 4 and Table 5 report the experimental results for SROCC, PLCC and RMSE, respectively. On the whole LIVE database, the median SROCC is 0.9576 and the median PLCC is 0.9671, both of which are the best among the compared methods. The standard deviations (STD) of the performance indices in Table 6 show that the proposed model is also among the most stable: it has the lowest PLCC STD and ranks second in SROCC STD and third in RMSE STD. The VIF model performs better than the proposed method in median RMSE; however, its application is limited because it is an FR-IQA model. In summary, considering practical applicability, the proposed DFF-IQA model is more consistent with the human visual system than the other competing IQA methods.

To further verify the superiority of the proposed DFF-IQA method, we also conducted a statistical significance analysis following the approach in [16]. The comparison results among the nine metrics are shown in Table 7 in terms of the median PLCC. The proposed method compares favorably with the other competing IQA models, which is consistent with the data in Table 4.

Table 7. Statistical significance tests of different IQA models in terms of PLCC. A value of ‘1’ (highlighted in green) indicates that the model in the row is significantly better than the model in the column, while a value of ‘0’ (highlighted in purple) indicates that the model in the row is not significantly better than the model in the column. The symbol “--” (highlighted in blue) indicates that the models in the rows and columns are statistically indistinguishable.

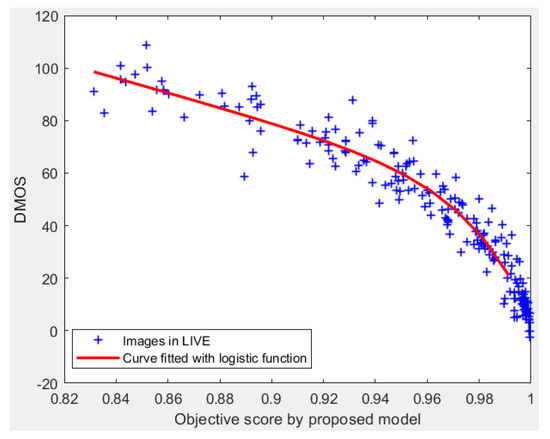

In order to further analyze the prediction performance of the proposed model, we provide a scatter plot of subjective ratings (DMOS) versus objective scores obtained by the DFF-IQA model on the LIVE database. As shown in Figure 9, each point (‘+’) represents one test image, and the red curve is obtained by fitting a logistic function. The points cluster closely around the fitted curve, which means that the model correlates well with subjective ratings.

Figure 9. Scatter plot of the proposed model on the LIVE database. Each point (‘+’) represents one test image. The red curve is obtained by a logistic function.

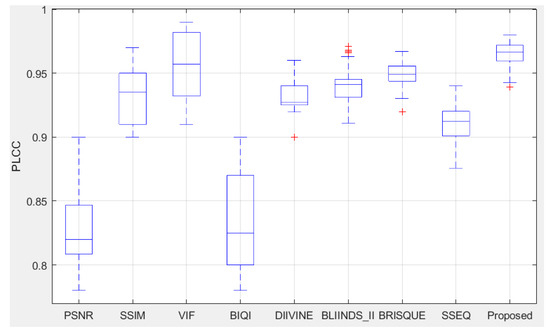

As shown in Figure 10, the proposed method compares favorably with the other competing FR-IQA and NR-IQA models, which is consistent with the median PLCC in Table 4. The box plot also shows that the VIF model performs as well as the proposed method. However, its application is limited because it is a full-reference method. Therefore, the experimental results further confirm that the proposed DFF-IQA model has good performance and practical applicability.

Figure 10. Box plot of PLCC distributions of the compared IQA methods over 1000 trials on the LIVE database.

4. Conclusions

In this paper, we proposed a novel metric for NR-IQA, dubbed DFF-IQA, which is based on features extracted in two domains. The basic idea is to exploit known properties of the human visual system (HVS) to build an IQA model that is useful for blind quality evaluation of color images. For this purpose, the proposed DFF-IQA model characterizes image quality in both the spatial and frequency domains. In the spatial domain, features of the weighted local binary pattern (WLBP), naturalness and spatial entropy are extracted. In the frequency domain, the features of spectral entropy, asymmetrical generalized Gaussian distribution (AGGD) fitting parameters and oriented energy distribution (OED) of the curvelet coefficients are extracted. Then, the features extracted in the two domains are fused to form a feature vector. Finally, random forest (RF) is adopted to build the relationship between image features and quality scores, yielding a measure of image quality. Experiments on the LIVE database demonstrate the superiority of the proposed DFF-IQA model. In the future, we will seek to further improve the performance of the algorithm by extracting more effective perceptual features.

Funding

This work was supported by the Zhejiang Provincial Natural Science Foundation of China and the National Natural Science Foundation of China (NSFC) under Grant Nos. LZ20F020002, LY18F010005 and 61976149, the Taizhou Science and Technology Project under Grant Nos. 1803gy08 and 1802gy06, and the Outstanding Youth Project of Taizhou University under Grant Nos. 2018JQ003 and 2017PY026.

Acknowledgments

Thanks to Aihua Chen, Yang Wang and the members of image research team for discussions about the algorithm. Thanks also to anonymous reviewers for their comments.

Conflicts of Interest

The author declares no conflict of interest.

References

- Moorthy, A.K.; Mittal, A.; Bovik, A.C. Perceptually optimized blind repair of natural images. Signal Process. Image Commun. 2013, 28, 1478–1493.

- Lin, W.; Kuo, C.-C.J. Perceptual visual quality metrics: A survey. J. Vis. Commun. Image Represent. 2011, 22, 297–312.

- Gao, X.; Lu, W.; Tao, D.; Li, X. Image quality assessment and human visual system. In Proceedings of the SPIE 7744, Visual Communications and Image Processing, Huangshan, China, 11–14 July 2010.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A feature similarity index for image quality assessment. IEEE Trans. Image Process. 2011, 20, 2378.

- Wang, Z.; Simoncelli, E.P.; Bovik, A.C. Multiscale structural similarity for image quality assessment. In Proceedings of the Thirty-Seventh Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 9–12 November 2003; pp. 1398–1402.

- Sheikh, H.R.; Bovik, A.C. Image information and visual quality. IEEE Trans. Image Process. 2006, 15, 430–444.

- Zhang, X.; Feng, X.; Wang, W.; Xue, W. Edge strength similarity for image quality assessment. IEEE Signal Process. Lett. 2013, 20, 319–322.

- Liu, A.; Lin, W.; Narwaria, M. Image quality assessment based on gradient similarity. IEEE Trans. Image Process. 2012, 21, 1500–1512.

- Zhu, J.; Wang, N. Image quality assessment by visual gradient similarity. IEEE Trans. Image Process. 2012, 21, 919–933.

- Bondzulic, B.P.; Petrovic, V.S. Edge based objective evaluation of image quality. In Proceedings of the 18th IEEE International Conference on Image Processing (ICIP), Brussels, Belgium, 11–14 September 2011; pp. 3305–3308.

- Yang, Y.; Tu, D.; Cheng, G. Image quality assessment using histograms of oriented gradients. In Proceedings of the International Conference on Intelligent Control and Information Processing, Beijing, China, 9–11 June 2013; pp. 555–559.

- Xue, W.; Zhang, L.; Mou, X.; Bovik, A.C. Gradient magnitude similarity deviation: A highly efficient perceptual image quality index. IEEE Trans. Image Process. 2013, 23, 684–695.

- Wang, Y.; Jiang, T.; Siwei, M.; Gao, W. Image quality assessment based on local orientation distributions. In Proceedings of the 28th Picture Coding Symposium (PCS), Nagoya, Japan, 8–10 December 2010; pp. 274–277.

- Zhang, H.; Huang, Y.; Chen, X.; Deng, D. MLSIM: A multi-level similarity index for image quality assessment. Signal Process. Image Commun. 2013, 28, 1464–1477.

- Sheikh, H.R.; Sabir, M.F.; Bovik, A.C. A statistical evaluation of recent full reference image quality assessment algorithms. IEEE Trans. Image Process. 2006, 15, 3440–3451.

- Gu, K.; Zhai, G.; Lin, W.; Yang, X.; Zhang, W. An efficient color image quality metric with local-tuned-global model. In Proceedings of the IEEE International Conference on Image Processing (ICIP 2014), Paris, France, 27–30 January 2015; pp. 506–510.

- Li, Q.; Wang, Z. Reduced-reference image quality assessment using divisive normalization-based image representation. IEEE J. Sel. Top. Signal Process. 2009, 3, 202–211.

- Tao, D.; Li, X.; Lu, W.; Gao, X. Reduced-reference IQA in contourlet domain. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2009, 39, 1623–1627.

- Sazzad, Z.M.P.; Kawayoke, Y.; Horita, Y. No reference image quality assessment for JPEG2000 based on spatial features. Signal Process. Image Commun. 2008, 23, 257–268.

- Suthaharan, S. No-reference visually significant blocking artifact metric for natural scene images. Signal Process. 2009, 1647–1652.

- Zhu, X.; Milanfar, P. A no-reference sharpness metric sensitive to blur and noise. In Proceedings of the 2009 International Workshop on Quality of Multimedia Experience, San Diego, CA, USA, 29–31 July 2009; pp. 64–69.

- Moorthy, A.K.; Bovik, A.C. A two-step framework for constructing blind image quality indices. IEEE Signal Process. Lett. 2010, 17, 513–516.

- Moorthy, A.K.; Bovik, A.C. Blind image quality assessment: From natural scene statistics to perceptual quality. IEEE Trans. Image Process. 2012, 20, 3350–3364.

- Liu, L.; Liu, B.; Huang, H.; Bovik, A.C. No-reference image quality assessment based on spatial and spectral entropies. Signal Process. Image Commun. 2014, 29, 856–863.

- Liu, L.; Dong, H.; Huang, H.; Bovik, A.C. No-reference image quality assessment in curvelet domain. Signal Process. Image Commun. 2014.

- Saad, M.A.; Bovik, A.C.; Charrier, C. A DCT statistics-based blind image quality index. IEEE Signal Process. Lett. 2010, 17, 583–586.

- Saad, M.A.; Bovik, A.C.; Charrier, C. Blind image quality assessment: A natural scene statistics approach in the DCT domain. IEEE Trans. Image Process. 2012, 21, 3339–3352.

- Li, C.; Bovik, A.; Wu, X. Blind image quality assessment using a general regression neural network. IEEE Trans. Neural Netw. 2011, 22, 793–799.

- Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a ‘completely blind’ image quality analyzer. IEEE Signal Process. Lett. 2013, 20, 209–212.

- Li, Q.; Lin, W.; Fang, Y. No-reference quality assessment for multiply-distorted images in gradient domain. IEEE Signal Process. Lett. 2016, 23, 541–545.

- Freitas, P.G.; Akamine, W.Y.; Farias, M.C. Blind image quality assessment using multiscale local binary patterns. J. Imaging Sci. Technol. 2017, 60.

- Candès, E.; Demanet, L.; Donoho, D.; Ying, L. Fast discrete Curvelet transforms. Multiscale Model. Simul. 2006, 5, 861–899.

- Tang, H.; Joshi, N.; Kapoor, A. Learning a blind measure of perceptual image quality. In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 20–25 June 2011.

- Ye, P.; Doermann, D. No-reference image quality assessment using visual codebooks. IEEE Trans. Image Process. 2012, 21, 3129–3138.

- Zhang, Y.; Wang, C.; Mou, X. SPCA: A no-reference image quality assessment based on the statistic property of the PCA on nature images. In Proceedings of the Digital Photography IX. International Society for Optics and Photonics, Burlingame, CA, USA, 3–7 February 2013.

- Mittal, A.; Soundararajan, R.; Muralidhar, G.S.; Bovik, A.C.; Ghosh, J. Blind image quality assessment without training on human opinion scores. In Proceedings of the Human Vision and Electronic Imaging XVIII. International Society for Optics and Photonics, Burlingame, CA, USA, 3–7 February 2013.

- Liu, L.; Hua, Y.; Zhao, Q.; Huang, H.; Bovik, A.C. Blind image quality assessment by relative gradient statistics and adaboosting neural network. Signal Process. Image Commun. 2015, 40, 1–15.

- Seshadrinathan, K.; Bovik, A.C. Motion-tuned spatio-temporal quality assessment of natural video. IEEE Trans. Image Process. 2010, 19, 335–350.

- Li, Q.; Lin, W.; Fang, Y. BSD: Blind image quality assessment based on structural degradation. Neurocomputing 2017, 236, 93–103.

- Khaleghi, B.; Khamis, A.; Karray, F.; Razavi, S. Multisensor data fusion: A review of the state-of-the-art. Inf. Fusion 2013, 14, 28–44.

- Mohandes, M.; Deriche, M.; Aliyu, S. Classifiers combination techniques: A comprehensive review. IEEE Access 2018, 6, 19626–19639.

- Soriano, A.; Vergara, L.; Ahmed, B.; Salazar, A. Fusion of scores in a detection context based on alpha integration. Neural Comput. 2015, 27, 1983–2010.

- Safont, G.; Salazar, A.; Vergara, L. Multi-class alpha integration of scores from multiple classifiers. Neural Comput. 2019, 31.

- Vergara, L.; Soriano, A.; Safont, G.; Salazar, A. On the fusion of non-independent detectors. Digit. Signal Process. 2016, 50, 24–33.

- Cui, Y.; Chen, A.; Yang, B.; Zhang, S.; Yang, Y. Human visual perception-based multi-exposure fusion image quality assessment. Symmetry 2019, 11, 1494.

- Chi, B.; Yu, M.; Jiang, G.; He, Z.; Peng, Z.; Chen, F. Blind tone mapped image quality assessment with image segmentation and visual perception. J. Vis. Commun. Image Represent. 2020, 67, 102752.

- Zheng, X.; Jiang, G.; Yu, M.; Jiang, H. Segmented spherical projection-based blind omnidirectional image quality assessment. IEEE Access 2020, 8, 31647–31659.

- Sheikh, H.R.; Wang, Z.; Cormack, L.K.; Bovik, A.C. LIVE Image Quality Assessment Database. Available online: http://live.ece.utexas.edu/research/quality (accessed on 1 August 2006).

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).