Gaussian Process Based Expected Information Gain Computation for Bayesian Optimal Design

Abstract

1. Introduction

2. Formulation of Experimental Design

3. GP Based Framework for Bayesian Optimal Design

3.1. Bayesian Monte Carlo
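Bayesian Monte Carlo treats the integrand f as a Gaussian process. The integral Z = ∫ f(x) p(x) dx then has a Gaussian posterior with mean zᵀ K⁻¹ f and variance ∫∫ k(x, x') p(x) p(x') dx dx' − zᵀ K⁻¹ z, where K is the kernel Gram matrix at the nodes and z_i = ∫ k(x, x_i) p(x) dx. The one-dimensional sketch below rests on assumptions that the section does not state: a zero-mean GP with a squared-exponential kernel, and a Gaussian input density N(b, s²), for which these kernel integrals have closed forms. The function name `bmc_gaussian` and its parameters (`length`, `amp`, `jitter`) are hypothetical. The hyperparameters are fixed here rather than learned.

```python
import numpy as np


def bmc_gaussian(f, nodes, prior_mean=0.0, prior_std=1.0,
                 length=1.0, amp=1.0, jitter=1e-8):
    """Posterior mean and variance of Z = E[f(x)], x ~ N(prior_mean, prior_std**2).

    Assumes a zero-mean GP prior on f with kernel
    k(x, x') = amp * exp(-(x - x')**2 / (2 * length**2)).
    """
    x = np.asarray(nodes, dtype=float)
    fx = np.array([f(xi) for xi in x], dtype=float)

    # Gram matrix at the quadrature nodes, plus a small jitter for conditioning
    diff = x[:, None] - x[None, :]
    K = amp * np.exp(-0.5 * diff**2 / length**2) + jitter * amp * np.eye(x.size)

    # Kernel mean z_i = integral of k(x, x_i) N(x; b, s^2) dx, in closed form
    s2, l2 = prior_std**2, length**2
    z = amp / np.sqrt(s2 / l2 + 1.0) * np.exp(-0.5 * (x - prior_mean)**2 / (l2 + s2))

    # Kernel integrated against the input density in both arguments
    zz = amp / np.sqrt(2.0 * s2 / l2 + 1.0)

    mean = z @ np.linalg.solve(K, fx)        # E[Z | f(nodes)]
    var = zz - z @ np.linalg.solve(K, z)     # Var[Z | f(nodes)]
    return mean, var


# Usage: E[x^2] under N(0, 1) is exactly 1
est, var = bmc_gaussian(lambda t: t**2, np.linspace(-4.0, 4.0, 15))
print(est, var)
```

The posterior variance measures the quadrature's own uncertainty about Z. In practice the kernel hyperparameters would usually be fitted, for example by maximizing the GP marginal likelihood. This sketch omits that step.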

3.2. Estimating the Expected Information Gain Using Double-Loop BMC

Algorithm 1. Double-Loop Bayesian Monte Carlo (DLBMC) for estimating the expected information gain (EIG).
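The EIG at a design d is the expectation over (θ, y) of log p(y | θ, d) − log p(y | d). The evidence p(y | d) is itself an integral over θ, so the estimator needs two nested loops. The sketch below is a plain double-loop Monte Carlo estimator written with NumPy. It is not the DLBMC algorithm itself: according to its name, DLBMC evaluates these integrals with Bayesian Monte Carlo, while this sketch uses ordinary sample averages. The standard normal prior, the additive Gaussian noise, and the name `nested_mc_eig` are assumptions made for illustration.

```python
import numpy as np


def nested_mc_eig(forward, d, noise_std, n_outer=4_000, n_inner=1_000, seed=0):
    """Double-loop Monte Carlo estimate of the EIG at design d.

    Assumes a scalar parameter theta ~ N(0, 1), observations
    y = forward(theta, d) + noise_std * eps with eps ~ N(0, 1), and a
    `forward` that is vectorized over theta.
    """
    rng = np.random.default_rng(seed)
    log_norm = np.log(noise_std * np.sqrt(2.0 * np.pi))

    # Outer loop: joint samples (theta_i, y_i) from the prior and the likelihood
    theta = rng.standard_normal(n_outer)
    g = forward(theta, d)
    y = g + noise_std * rng.standard_normal(n_outer)
    log_lik = -0.5 * ((y - g) / noise_std) ** 2 - log_norm

    # Inner loop: fresh prior samples to estimate the evidence p(y_i | d)
    theta_in = rng.standard_normal(n_inner)
    r = (y[:, None] - forward(theta_in, d)[None, :]) / noise_std
    log_lik_in = -0.5 * r**2 - log_norm          # shape (n_outer, n_inner)

    # log-mean-exp over the inner samples, shifted for numerical stability
    m = log_lik_in.max(axis=1)
    log_evid = m + np.log(np.mean(np.exp(log_lik_in - m[:, None]), axis=1))

    return np.mean(log_lik - log_evid)


# Usage on the linear model y = theta * d + noise, whose EIG is 0.5*log(1 + d^2/sigma^2)
est = nested_mc_eig(lambda th, d: th * d, d=1.0, noise_std=0.5)
print(est, 0.5 * np.log(5.0))
```

For finite `n_inner` this estimator is biased upward. By Jensen's inequality, the log of a sample average underestimates the log evidence on average. This bias is one reason to replace the inner sample average with a more accurate quadrature.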

3.3. Bayesian Optimization

Algorithm 2. Bayesian optimization (BO) for optimal design.
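Bayesian optimization repeatedly fits a GP surrogate to an expensive objective and selects the next evaluation point by maximizing an acquisition function. In this setting the objective is the EIG as a function of the design. The sketch below uses expected improvement as the acquisition and maximizes it over a fixed grid on [0, 1]. The acquisition choice, the unit-variance squared-exponential kernel with a fixed length scale, the grid, and all function names are assumptions and are not taken from Algorithm 2. A cheap toy objective stands in for an EIG estimator.

```python
import math
import numpy as np


def gp_posterior(X, y, Xs, length=0.2, noise=1e-6):
    """Zero-mean GP with unit-variance squared-exponential kernel: mean and std at Xs."""
    def k(a, b):
        return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length**2)

    L = np.linalg.cholesky(k(X, X) + noise * np.eye(X.size))
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    Ks = k(X, Xs)
    v = np.linalg.solve(L, Ks)
    mu = Ks.T @ alpha
    var = np.maximum(1.0 - np.sum(v**2, axis=0), 1e-12)
    return mu, np.sqrt(var)


def expected_improvement(mu, sd, best, xi=1e-3):
    """EI for maximization: E[max(F - best - xi, 0)] under F ~ N(mu, sd^2)."""
    imp = mu - best - xi
    z = imp / sd
    cdf = 0.5 * (1.0 + np.array([math.erf(t / math.sqrt(2.0)) for t in z]))
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)
    return imp * cdf + sd * pdf


def bayes_opt(f, n_init=3, n_iter=15, seed=0):
    """Maximize f on [0, 1]; the acquisition is maximized over a fixed grid."""
    rng = np.random.default_rng(seed)
    grid = np.linspace(0.0, 1.0, 501)
    X = rng.uniform(0.0, 1.0, n_init)
    y = np.array([f(x) for x in X])
    for _ in range(n_iter):
        # Standardize observations so the unit-variance GP prior matches their scale
        y_mean, y_std = y.mean(), y.std() + 1e-12
        mu, sd = gp_posterior(X, (y - y_mean) / y_std, grid)
        ei = expected_improvement(mu * y_std + y_mean, sd * y_std, y.max())
        x_next = grid[np.argmax(ei)]
        X = np.append(X, x_next)
        y = np.append(y, f(x_next))
    best = np.argmax(y)
    return X[best], y[best]


# Usage: a toy objective standing in for an EIG estimate as a function of the design
x_best, y_best = bayes_opt(lambda x: -(x - 0.3) ** 2)
print(x_best, y_best)
```

Expected improvement trades off the surrogate mean (exploitation) against its standard deviation (exploration). The objective is evaluated only once per iteration, which matters when each evaluation is itself a costly EIG estimate.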

3.4. Bayesian Parameter Inference

4. Numerical Experiments

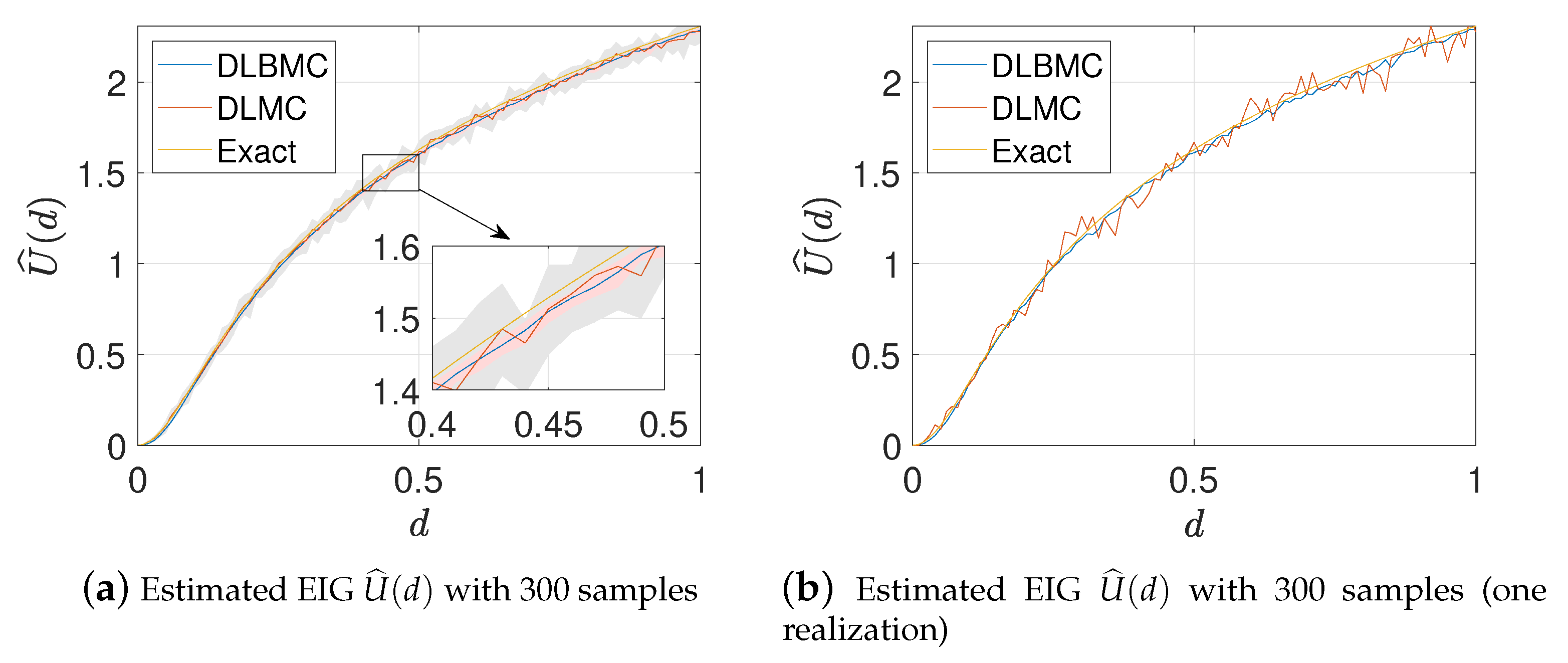

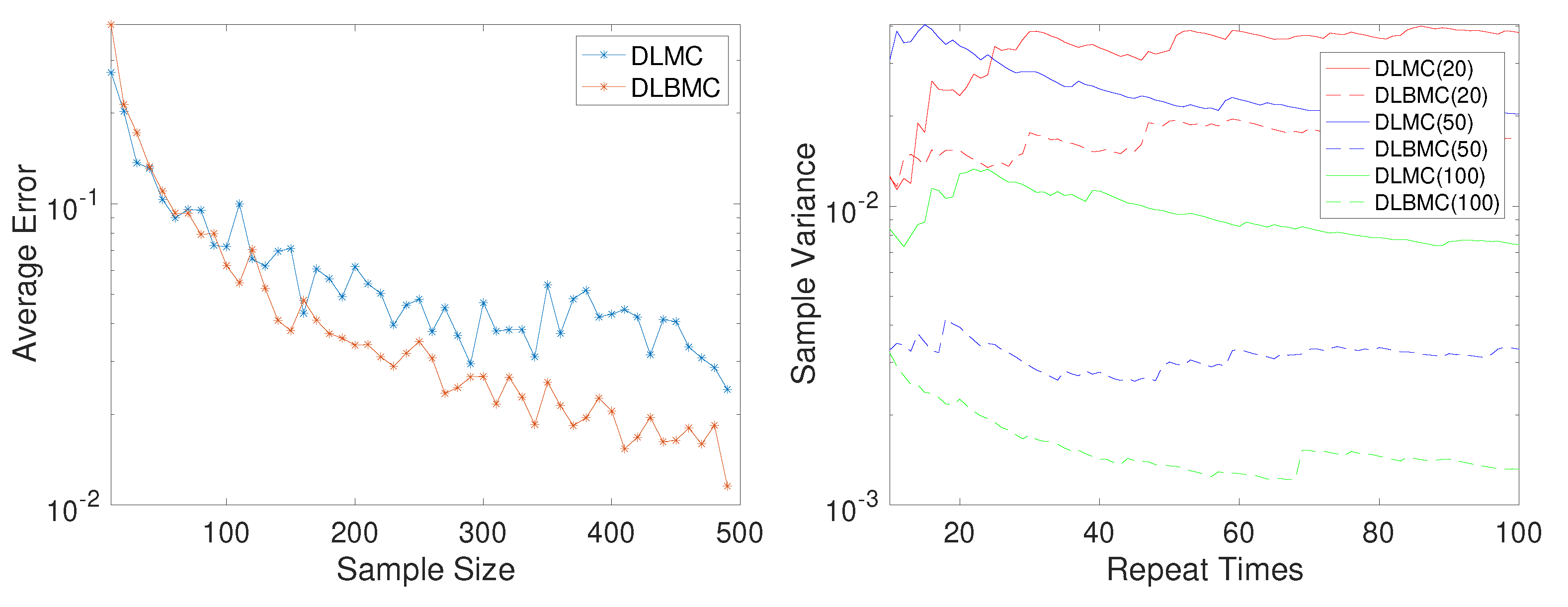

4.1. Test Problem 1: Linear Gaussian Problem
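For a linear Gaussian model the EIG has a closed form, which makes it a natural check for any numerical estimator. Assume y = G θ + ε with θ ~ N(m0, Σ0) and ε ~ N(0, Γ), and assume that the design enters only through G. The second assumption concerns how the test problem is parameterized and is not stated in this section. Under these assumptions, EIG = 1/2 [log det(Γ + G Σ0 Gᵀ) − log det Γ]. A small NumPy sketch follows, with the hypothetical name `linear_gaussian_eig`:

```python
import numpy as np


def linear_gaussian_eig(G, prior_cov, noise_cov):
    """EIG of y = G theta + eps, theta ~ N(m0, prior_cov), eps ~ N(0, noise_cov).

    Uses 0.5 * [log det(noise_cov + G prior_cov G^T) - log det(noise_cov)],
    which depends neither on the prior mean m0 nor on the observed data.
    """
    G = np.atleast_2d(np.asarray(G, dtype=float))
    S = noise_cov + G @ prior_cov @ G.T      # covariance of the marginal p(y | d)
    _, logdet_S = np.linalg.slogdet(S)
    _, logdet_noise = np.linalg.slogdet(noise_cov)
    return 0.5 * (logdet_S - logdet_noise)


# Usage: scalar case with G = 1, prior variance 1, noise variance 0.25
print(linear_gaussian_eig([[1.0]], np.eye(1), 0.25 * np.eye(1)))  # 0.5 * log(5), about 0.805
```

Using `slogdet` instead of `det` avoids overflow and underflow when the parameter or data dimension is large.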

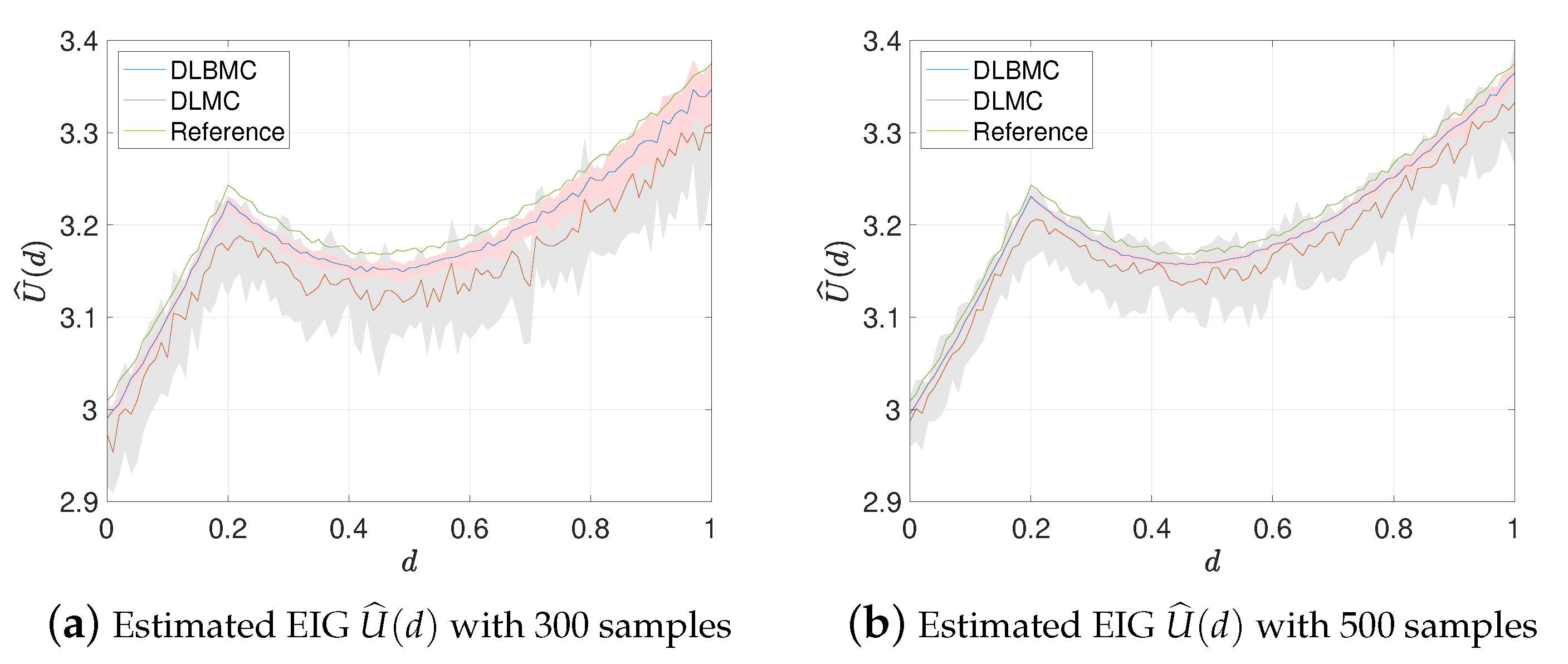

4.2. Test Problem 2: Nonlinear Simple Problem

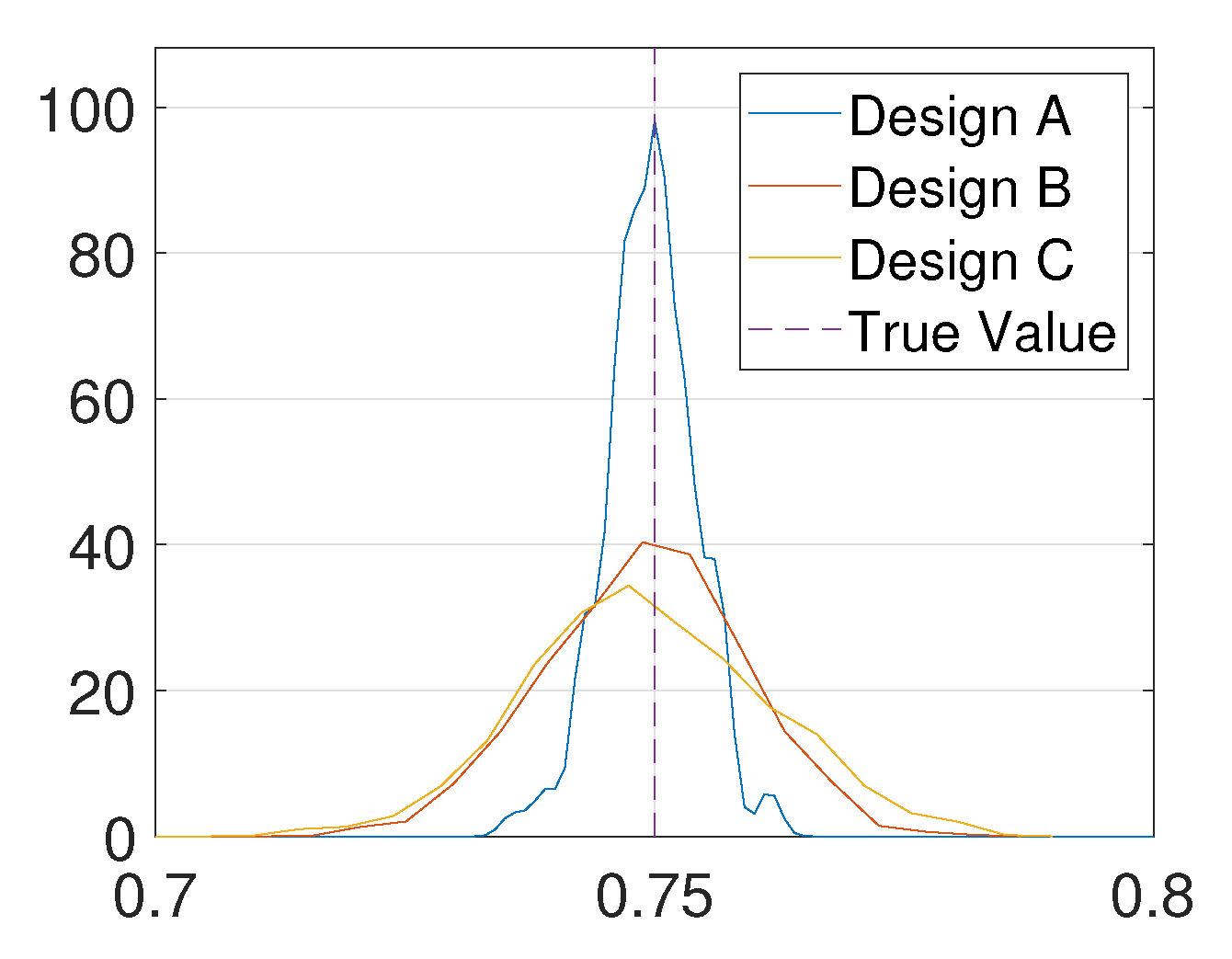

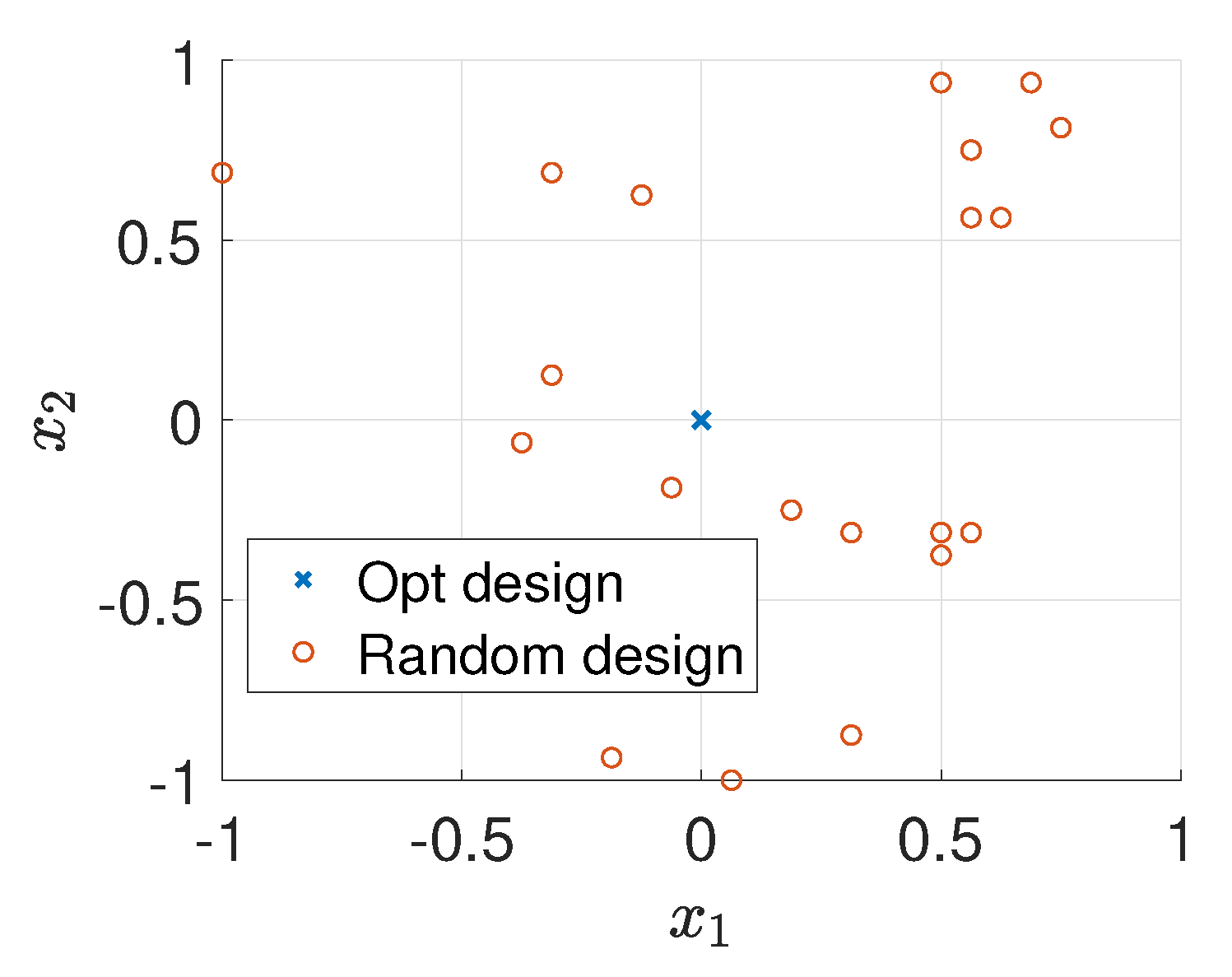

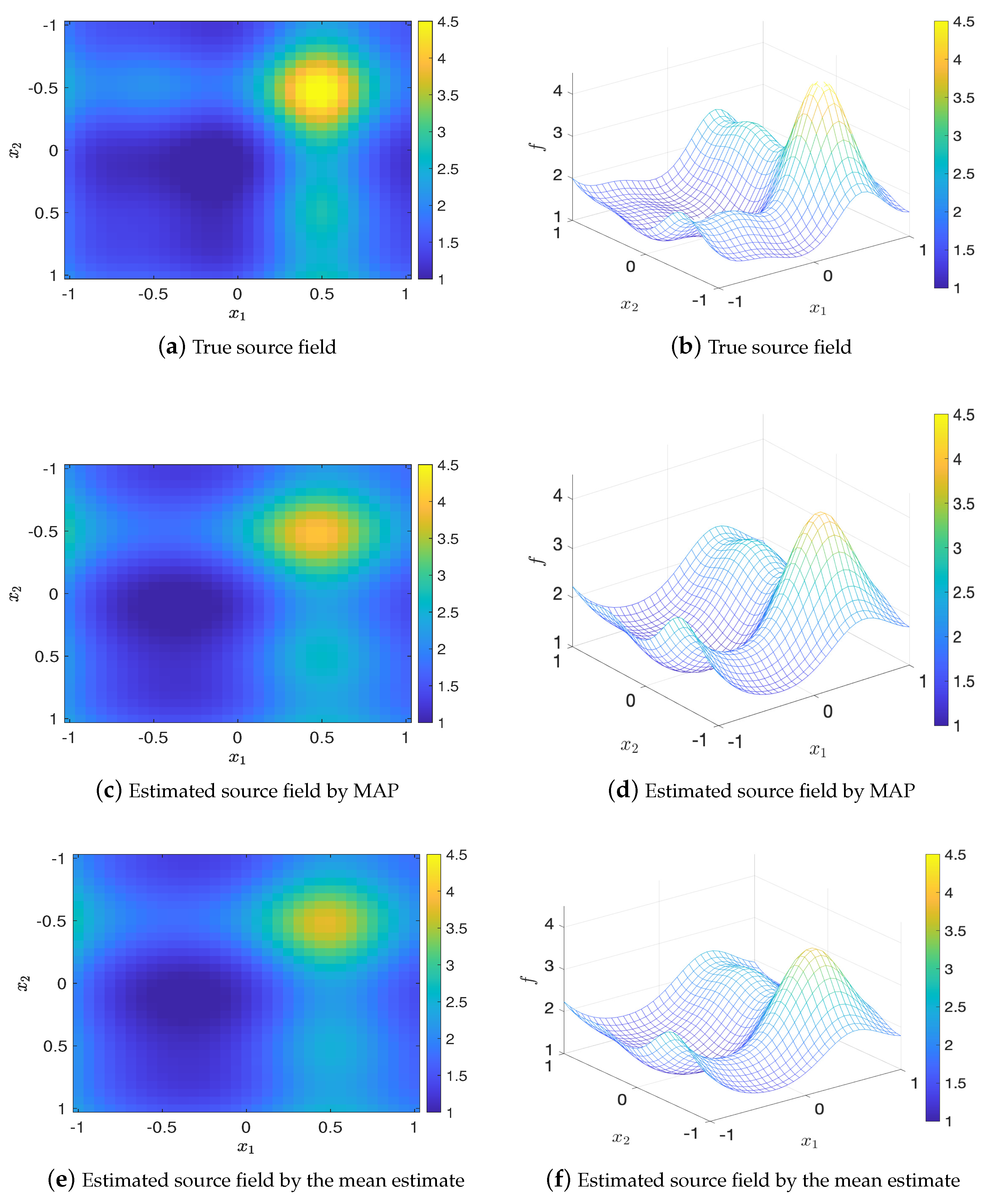

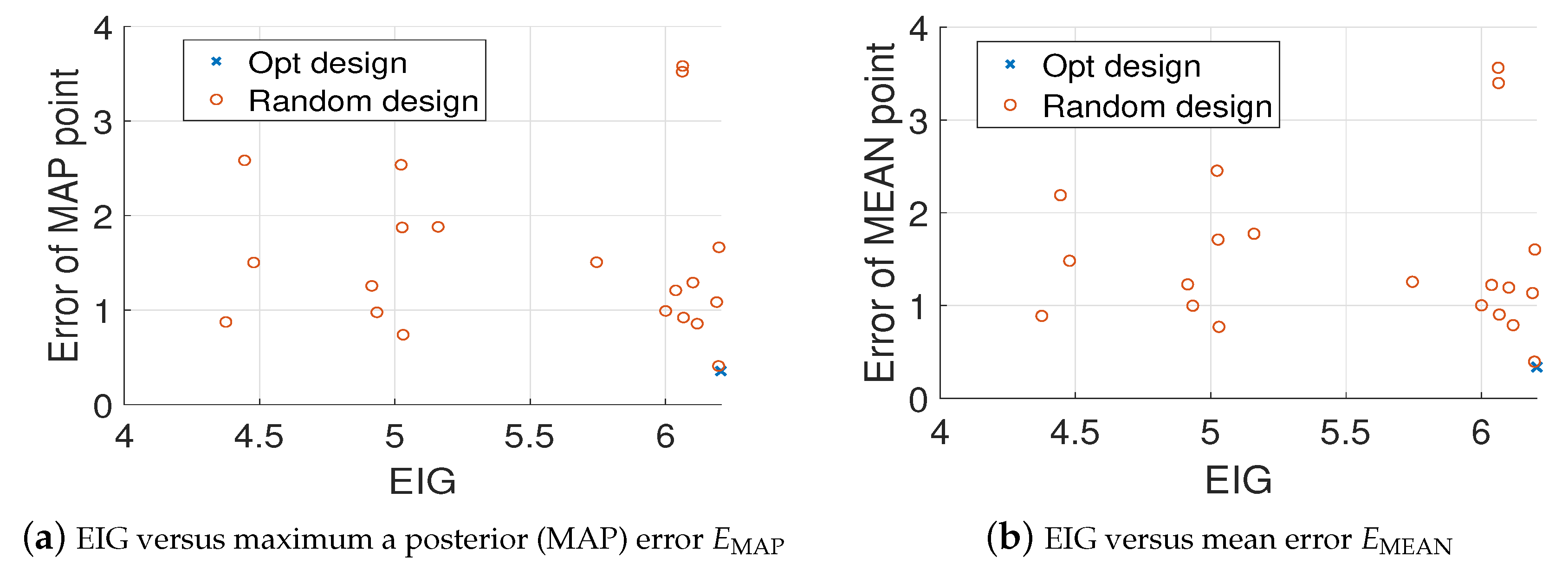

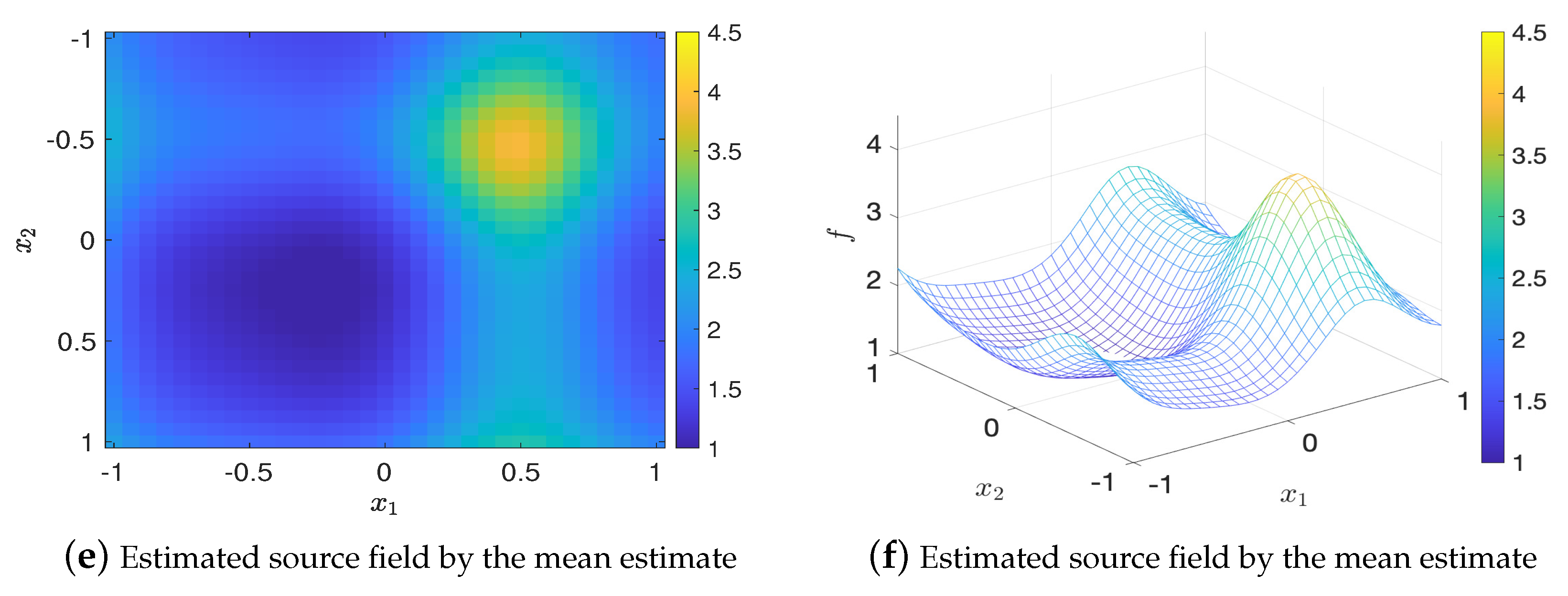

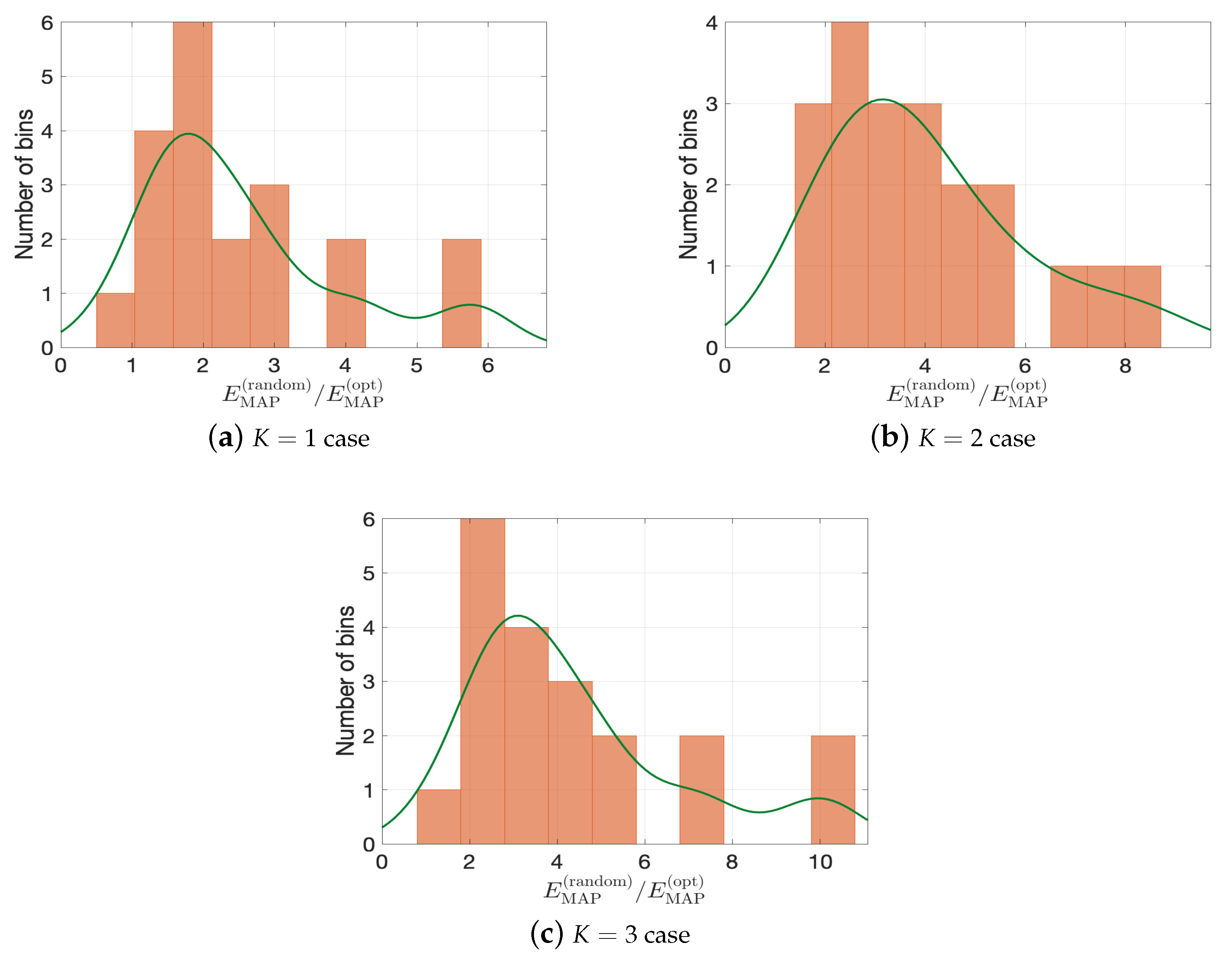

4.3. Test Problem 3: Source Inversion for the Diffusion Problem

5. Conclusions and Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Deduction of Linear Gaussian Model
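A standard derivation for the linear Gaussian model is sketched below. The notation is assumed and may differ from the appendix's own symbols: prior N(μ0, Σ0), forward matrix G, and noise covariance Γ. The posterior covariance does not depend on the observed y, so the EIG reduces to the difference between the prior and posterior entropies. The last equality follows from Sylvester's determinant identity.

```latex
\begin{aligned}
p(\theta) &= \mathcal{N}(\mu_0, \Sigma_0), \qquad
y = G\theta + \varepsilon, \quad \varepsilon \sim \mathcal{N}(0, \Gamma),\\
\Sigma_{\mathrm{post}} &= \left(\Sigma_0^{-1} + G^\top \Gamma^{-1} G\right)^{-1}, \qquad
\mu_{\mathrm{post}} = \Sigma_{\mathrm{post}}\left(G^\top \Gamma^{-1} y + \Sigma_0^{-1}\mu_0\right),\\
\mathrm{EIG} &= \tfrac{1}{2}\log\det\Sigma_0 - \tfrac{1}{2}\log\det\Sigma_{\mathrm{post}}
= \tfrac{1}{2}\log\det\!\left(I + \Sigma_0 G^\top \Gamma^{-1} G\right)
= \tfrac{1}{2}\log\frac{\det\!\left(\Gamma + G\Sigma_0 G^\top\right)}{\det\Gamma}.
\end{aligned}
```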

Appendix B. Metropolis-Hastings MCMC Algorithm

Algorithm A1. The Metropolis-Hastings Markov chain Monte Carlo (MH-MCMC) algorithm.
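Below is a compact random-walk variant of Metropolis-Hastings. The Gaussian proposal, the step size, and the function name `metropolis_hastings` are assumptions, and the appendix's own algorithm may use a different proposal. The proposal is symmetric, so the proposal densities cancel in the acceptance ratio. What remains is π(x')/π(x), where π is the unnormalized posterior.

```python
import numpy as np


def metropolis_hastings(log_target, x0, n_samples, step=0.5, seed=0):
    """Random-walk Metropolis-Hastings with a Gaussian proposal N(x, step^2 I).

    `log_target` returns the unnormalized log density (e.g. log prior +
    log likelihood); only differences of it are used.
    """
    rng = np.random.default_rng(seed)
    x = np.atleast_1d(np.asarray(x0, dtype=float))
    logp = log_target(x)
    chain = np.empty((n_samples, x.size))
    n_accept = 0
    for i in range(n_samples):
        prop = x + step * rng.standard_normal(x.size)
        logp_prop = log_target(prop)
        # Symmetric proposal, so the acceptance ratio is pi(prop) / pi(x)
        if np.log(rng.uniform()) < logp_prop - logp:
            x, logp = prop, logp_prop
            n_accept += 1
        chain[i] = x      # on rejection the current state is repeated
    return chain, n_accept / n_samples


# Usage: sample a N(2, 0.5^2) "posterior" and discard a burn-in period
chain, rate = metropolis_hastings(lambda th: -0.5 * np.sum(((th - 2.0) / 0.5) ** 2),
                                  x0=0.0, n_samples=20_000)
samples = chain[2_000:, 0]
print(samples.mean(), samples.std(), rate)
```

For posterior inference, `log_target` would be the log prior plus the log likelihood, possibly evaluated through a surrogate. The acceptance rate gives a quick diagnostic for tuning `step`.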


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Xu, Z.; Liao, Q. Gaussian Process Based Expected Information Gain Computation for Bayesian Optimal Design. Entropy 2020, 22, 258. https://doi.org/10.3390/e22020258
