An Information-Theoretic Measure for Balance Assessment in Comparative Clinical Studies

Abstract

1. Introduction

2. Information Theory and the JSD

2.1. Entropy
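
A minimal Python sketch of Shannon entropy for a discrete distribution, H(P) = −Σ_i p_i log₂ p_i, measured in bits. The helper name `entropy`, the base-2 logarithm, and the convention that zero-probability categories contribute nothing (0 · log 0 = 0) are assumptions made for illustration.

```python
import math

def entropy(probs):
    """Shannon entropy H(P) = -sum_i p_i log2 p_i, in bits.

    Written as sum_i p_i log2(1 / p_i); categories with p_i == 0 are skipped
    (the convention 0 * log 0 = 0).
    """
    probs = list(probs)
    if not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)

print(entropy([0.5, 0.5]))   # 1.0: a fair coin carries one bit
print(entropy([1.0, 0.0]))   # 0.0: a certain outcome carries none
print(entropy([0.25] * 4))   # 2.0: uniform over four categories, log2(4)
```

Uniform distributions maximize entropy for a fixed number of categories, which is why the four-category case reaches log₂ 4 = 2 bits.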

2.2. Joint and Conditional Entropy

2.3. Mutual Information
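
For discrete variables the quantities of Sections 2.2 and 2.3 can be computed directly from a joint probability table. This sketch assumes the table is a list of rows with joint[x][y] = P(X = x, Y = y) and uses base-2 logarithms; it obtains H(X | Y) = H(X, Y) − H(Y) and then I(X; Y) = H(X) − H(X | Y). All function names are hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy in bits; zero-probability cells contribute nothing."""
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)

def joint_entropy(joint):
    """H(X,Y) for a table joint[x][y] = P(X = x, Y = y)."""
    return entropy(p for row in joint for p in row)

def conditional_entropy(joint):
    """H(X | Y) = H(X,Y) - H(Y), with P(Y) taken from the column sums."""
    py = [sum(col) for col in zip(*joint)]
    return joint_entropy(joint) - entropy(py)

def mutual_information(joint):
    """I(X;Y) = H(X) - H(X | Y), with P(X) taken from the row sums."""
    px = [sum(row) for row in joint]
    return entropy(px) - conditional_entropy(joint)

independent = [[0.25, 0.25], [0.25, 0.25]]  # X and Y independent
copy = [[0.5, 0.0], [0.0, 0.5]]             # Y always equals X
print(mutual_information(independent))  # 0.0
print(mutual_information(copy))         # 1.0
```

Independence gives zero mutual information, while a perfect copy of a fair binary variable shares its full 1 bit.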

2.4. Relative Entropy

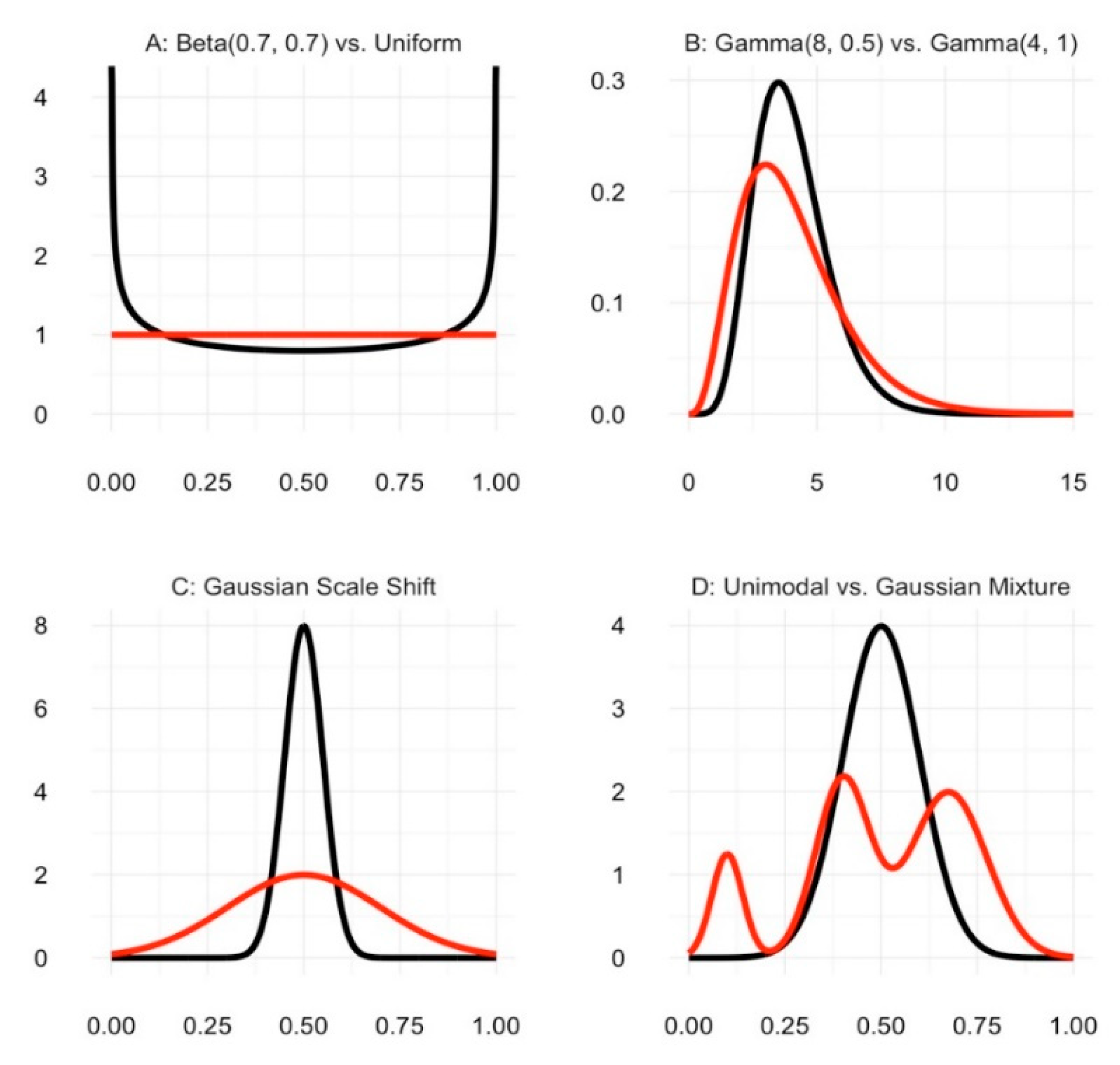
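
A minimal sketch of relative entropy (Kullback–Leibler divergence) between two discrete distributions, D(P ‖ Q) = Σ_i p_i log₂(p_i / q_i), in bits. The function name and the handling of zero cells (p_i = 0 contributes nothing; q_i = 0 with p_i > 0 makes the divergence infinite) are assumptions of this sketch, following the usual conventions.

```python
import math

def kl_divergence(p, q):
    """Relative entropy D(P || Q) = sum_i p_i log2(p_i / q_i), in bits."""
    if len(p) != len(q):
        raise ValueError("distributions must have the same number of categories")
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue            # 0 * log(0 / q) = 0 by convention
        if qi == 0:
            return math.inf     # P puts mass where Q has none
        total += pi * math.log2(pi / qi)
    return total

p = [0.5, 0.5]
q = [0.75, 0.25]
print(kl_divergence(p, q))  # about 0.2075
print(kl_divergence(q, p))  # about 0.1887, so D(P || Q) != D(Q || P)
print(kl_divergence(p, p))  # 0.0
```

The asymmetry and the possibility of an infinite value are the two properties that motivate a symmetrized, bounded alternative.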

2.5. Jensen–Shannon Divergence (JSD)
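
A minimal sketch of the weighted Jensen–Shannon divergence of k discrete distributions P_1, …, P_k with weights π_1, …, π_k, computed two equivalent ways: as H(M) − Σ_j π_j H(P_j) and as Σ_j π_j D(P_j ‖ M), where M = Σ_j π_j P_j is the mixture. Base-2 logarithms, strictly positive weights, and the helper names are assumptions of this sketch.

```python
import math

def entropy(probs):
    """Shannon entropy in bits; zero-probability cells contribute nothing."""
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)

def kl(p, q):
    """D(P || Q) in bits; assumes q_i > 0 wherever p_i > 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def mixture(dists, weights):
    """M = sum_j w_j P_j, computed category by category."""
    return [sum(w * d[i] for w, d in zip(weights, dists)) for i in range(len(dists[0]))]

def jsd(dists, weights):
    """Weighted JSD as H(M) - sum_j w_j H(P_j)."""
    m = mixture(dists, weights)
    return entropy(m) - sum(w * entropy(d) for w, d in zip(weights, dists))

def jsd_via_kl(dists, weights):
    """Same quantity as sum_j w_j D(P_j || M); needs every weight > 0 so that M covers each P_j."""
    m = mixture(dists, weights)
    return sum(w * kl(d, m) for w, d in zip(weights, dists))

p1, p2 = [1.0, 0.0], [0.0, 1.0]
print(jsd([p1, p2], [0.5, 0.5]))         # 1.0: disjoint supports attain the bound H(weights)
print(jsd([p1, p1], [0.5, 0.5]))         # 0.0: identical distributions
print(jsd_via_kl([p1, p2], [0.5, 0.5]))  # 1.0
```

Unlike D(P ‖ Q), the JSD is finite, symmetric in its arguments when the weights are equal, and bounded above by the entropy of the weights (1 bit for two equally weighted distributions).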

2.6. The JSD of Covariate Distributions Across Treatment Groups
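
When the distributions are covariate distributions in k treatment groups, a standard identity lets the weighted JSD be read as the mutual information I(X; G) between the covariate X and a group label G with P(G = j) = π_j, because I(X; G) = H(X) − H(X | G) = H(M) − Σ_j π_j H(P_j). The sketch below checks this numerically; the example proportions and weights are hypothetical, and base-2 logarithms are assumed.

```python
import math

def entropy(probs):
    """Shannon entropy in bits; zero-probability cells contribute nothing."""
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)

def jsd(dists, weights):
    """Weighted JSD: H(M) - sum_j w_j H(P_j), with M the weighted mixture."""
    m = [sum(w * d[i] for w, d in zip(weights, dists)) for i in range(len(dists[0]))]
    return entropy(m) - sum(w * entropy(d) for w, d in zip(weights, dists))

def group_mutual_information(dists, weights):
    """I(X; G) = H(G) + H(X) - H(G, X), with P(G=j) = weights[j], P(X=i | G=j) = dists[j][i]."""
    joint = [w * p for w, d in zip(weights, dists) for p in d]  # P(G = j, X = i)
    px = [sum(w * d[i] for w, d in zip(weights, dists)) for i in range(len(dists[0]))]
    return entropy(weights) + entropy(px) - entropy(joint)

# Hypothetical covariate distributions over three categories in two groups.
groups = [[0.7, 0.2, 0.1], [0.5, 0.3, 0.2]]
weights = [0.4, 0.6]
print(jsd(groups, weights))
print(group_mutual_information(groups, weights))  # same value up to floating-point rounding
```

Read this way, a JSD of zero means that knowing a participant's group tells nothing about the covariate, which is the information-theoretic notion of balance.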

3. Properties of the JSD

4. Applications

5. Summary

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A


| Glucose | Disadvantaged | Elderly | Reference |
|---|---|---|---|
| <109 | 7191 | 3637 | 64,265 |
| 109–125 | 1025 | 835 | 7298 |
| >125 | 1715 | 685 | 6932 |

| Glucose | Disadvantaged | Elderly | Reference | Mixture (equal weights) |
|---|---|---|---|---|
| <109 | 0.724 | 0.705 | 0.819 | 0.749 |
| 109–125 | 0.103 | 0.162 | 0.093 | 0.119 |
| >125 | 0.173 | 0.133 | 0.088 | 0.131 |

| Glucose | Disadvantaged | Elderly | Reference | Total |
|---|---|---|---|---|
| <109 | −0.0119 | −0.0206 | 0.0349 | 0.0023 |
| 109–125 | −0.0072 | 0.0237 | −0.0112 | 0.0053 |
| >125 | 0.0228 | 0.0008 | −0.0168 | 0.0067 |
| Total | 0.0036 | 0.0039 | 0.0068 | 0.0144 * |
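
The three glucose tables can be cross-checked with a short Python sketch. Two assumptions are inferred from the printed values rather than stated with the tables: the last column of the proportions table is the equal-weight (1/3 each) mixture of the three group distributions, and each cell of the last table is (1/3) · p_ij · log₂(p_ij / m_i), so that the cells sum to the JSD in bits. Under these assumptions the sketch reproduces the tabulated proportions, cell contributions, and margins to the printed rounding.

```python
import math

# Counts from the glucose table: one list per group, ordered by glucose category.
categories = ["<109", "109-125", ">125"]
groups = ["Disadvantaged", "Elderly", "Reference"]
counts = {
    "Disadvantaged": [7191, 1025, 1715],
    "Elderly": [3637, 835, 685],
    "Reference": [64265, 7298, 6932],
}

# Within-group proportions P_j (the group columns of the proportions table).
props = {g: [c / sum(counts[g]) for c in counts[g]] for g in groups}

# Assumption: equal group weights, so the mixture M is the plain average of the P_j.
w = 1.0 / len(groups)
mixture = [sum(w * props[g][i] for g in groups) for i in range(len(categories))]

# Per-cell contribution w * p_ij * log2(p_ij / m_i); these cells sum to the JSD in bits.
contrib = {g: [w * p * math.log2(p / m) for p, m in zip(props[g], mixture)] for g in groups}
total = sum(sum(cells) for cells in contrib.values())

for i, cat in enumerate(categories):
    row = [contrib[g][i] for g in groups]
    print(f"{cat:>8}", " ".join(f"{x:+.4f}" for x in row), f"{sum(row):.4f}")
print("JSD (bits):", round(total, 4))  # JSD (bits): 0.0144
```

Natural logarithms would scale every entry by ln 2 and would not match the tabulated values, which is why base 2 is assumed here.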

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Dalton, J.E.; Benish, W.A.; Krieger, N.I. An Information-Theoretic Measure for Balance Assessment in Comparative Clinical Studies. Entropy 2020, 22, 218. https://doi.org/10.3390/e22020218