Emerging Complexity in Distributed Intelligent Systems

Abstract

1. Introduction

2. State of the Art

2.1. Sensor Network

2.2. Multiagent Systems

2.3. Distributed Expert Systems and Cognitive Architectures

2.4. Multiagent Machine Learning

2.5. Quantum Approach to DIS

2.6. Valuation and Comparison

3. DIS: Generalized Motivational Model

3.1. Definitions and Features

- Commonly, a DIS consists of agents with various roles. These include agents whose explicit and essential role is human interaction (hereafter called natural intelligence agents, NIA) and agents whose primary role is to serve as AI components (hereafter called artificial intelligence agents, AIA). In the general case, the set of agents is the union of a set of AIAs (Definition 2) and a set of NIAs (Definitions 3 and 4).

- Decentralized, network-based nature of DIS. The structure of a large-scale DIS can be considered a complex network (Definition 5). Its emergent structures and topology influence both the information exchange procedures and the high-level performance characteristics of the DIS. In addition, a fully decentralized system usually has no centralized control, so the agents behave under self-motivation and self-regulation.

- Agents and subsystems within a DIS may have different goals, objective functions, behavior models, etc. (see Definition 5). This is most natural for NIAs. Still, AIAs optimizing their outcomes may also select different behavior strategies in reaction to the environment, the structure of the DIS, and other agents' behavior.

- The self-organization of a large-scale DIS enables the adaptation of its functional features without a prior definition of the domain, the problem, or the particular task. In this case, a DIS may even be considered a step toward artificial general intelligence. Still, some types of DIS include centralized control, e.g., as a shared knowledge base or as functional characteristics for the organization of the DIS.

- A DIS is considered a system that, as a whole, acts as an information system with particular purposes, functional properties, performance measures, etc. Commonly, its functional characteristics are defined as the reaction of the whole DIS to the environment, based on the individual behavior of the agents (Definition 5). As a result, emergent structures within a DIS, viewed as a complex system, can directly influence its performance and functional characteristics.
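As a minimal illustration of the features listed above, a DIS can be held as a network of typed agents with optional centralized control. The sketch below is not the authors' formalism. The class names, the scalar environment signal and the averaging of individual reactions into a system-level response are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum
from statistics import mean
from typing import Callable, Dict, Optional, Set


class Role(Enum):
    AIA = "artificial intelligence agent"
    NIA = "natural intelligence agent"


@dataclass
class Agent:
    name: str
    role: Role
    # Hypothetical individual behavior: maps a scalar environment signal to a reaction.
    react: Callable[[float], float]


@dataclass
class DIS:
    agents: Dict[str, Agent] = field(default_factory=dict)
    links: Dict[str, Set[str]] = field(default_factory=dict)
    # Optional centralized control (e.g., a shared knowledge base); None means fully decentralized.
    controller: Optional[object] = None

    def add_agent(self, agent: Agent) -> None:
        self.agents[agent.name] = agent
        self.links.setdefault(agent.name, set())

    def connect(self, a: str, b: str) -> None:
        # Undirected link: information can flow both ways between the two agents.
        self.links[a].add(b)
        self.links[b].add(a)

    def by_role(self, role: Role) -> Set[str]:
        # The agent set splits into AIAs and NIAs; this returns one of the two subsets.
        return {name for name, ag in self.agents.items() if ag.role is role}

    @property
    def is_decentralized(self) -> bool:
        return self.controller is None

    def respond(self, signal: float) -> float:
        # Assumed aggregation rule: the system-level reaction is the mean of individual reactions.
        if not self.agents:
            raise ValueError("the DIS has no agents")
        return mean(ag.react(signal) for ag in self.agents.values())
```

Setting `controller` to any shared object, such as a knowledge base, models the partially centralized DIS variants mentioned above. Leaving it as `None` models a fully decentralized system.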

3.2. Generalized Formalism

- ,

- , which may change with time,

- —multiplex condition, i.e., . For simplicity, let fix

- An event affects the agent state through an update function;

- The agent's attitude to an event is determined by a reaction function.

- (1)

- Each agent has a state variable and an objective, such that its state tends to reach the objective in minimal time;

- (2)

- The remaining terms denote the event environment and the update and reaction feedback functions, which affect the dynamics of NIA states.
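The update and reaction functions can be read as a discrete-time loop over environment events. The sketch below assumes three things the text does not fix:

- a scalar state;
- an additive update function;
- a reaction function that accepts an event only when it brings the state closer to the agent's objective.

These are hypothetical stand-ins, not equations (1) and (2).

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List

# u(state, event) -> candidate new state
UpdateFn = Callable[[float, float], float]
# r(state, candidate_state, objective) -> True if the agent accepts (reacts to) the event
ReactionFn = Callable[[float, float, float], bool]


@dataclass
class StatefulAgent:
    state: float      # state variable
    objective: float  # objective the state tends toward


def additive_update(state: float, event: float) -> float:
    # Hypothetical update function: an event shifts the state additively.
    return state + event


def approach_reaction(state: float, candidate: float, objective: float) -> bool:
    # Hypothetical reaction function: accept only moves that get closer to the objective.
    return abs(candidate - objective) < abs(state - objective)


def simulate(agent: StatefulAgent, events: Iterable[float],
             update: UpdateFn = additive_update,
             reaction: ReactionFn = approach_reaction) -> List[float]:
    """Apply a stream of environment events and return the state trajectory."""
    trajectory = [agent.state]
    for event in events:
        candidate = update(agent.state, event)
        if reaction(agent.state, candidate, agent.objective):
            agent.state = candidate
        trajectory.append(agent.state)
    return trajectory
```

Because the reaction function receives the candidate state rather than recomputing it, any other update rule can be passed in without changing the reaction logic.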

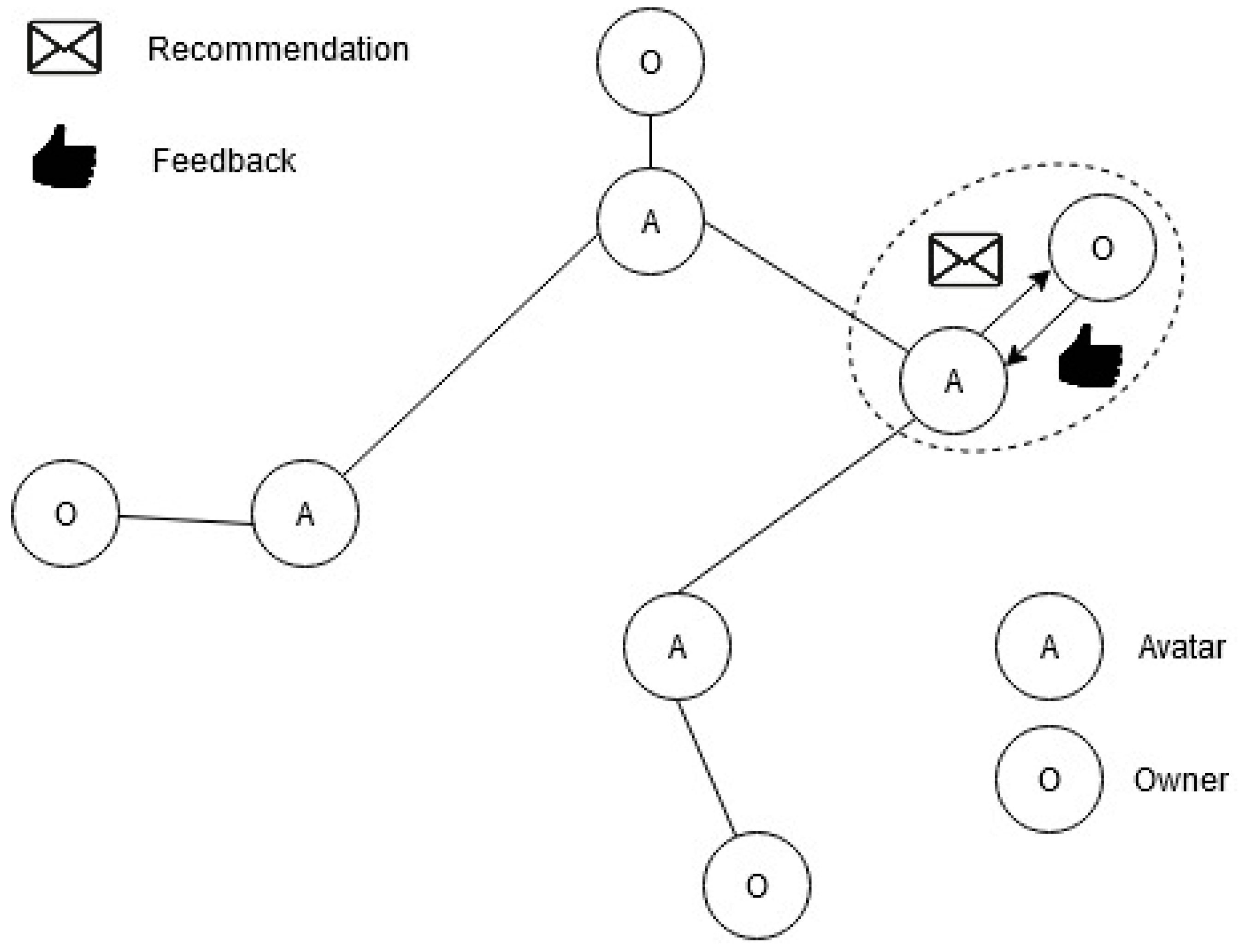

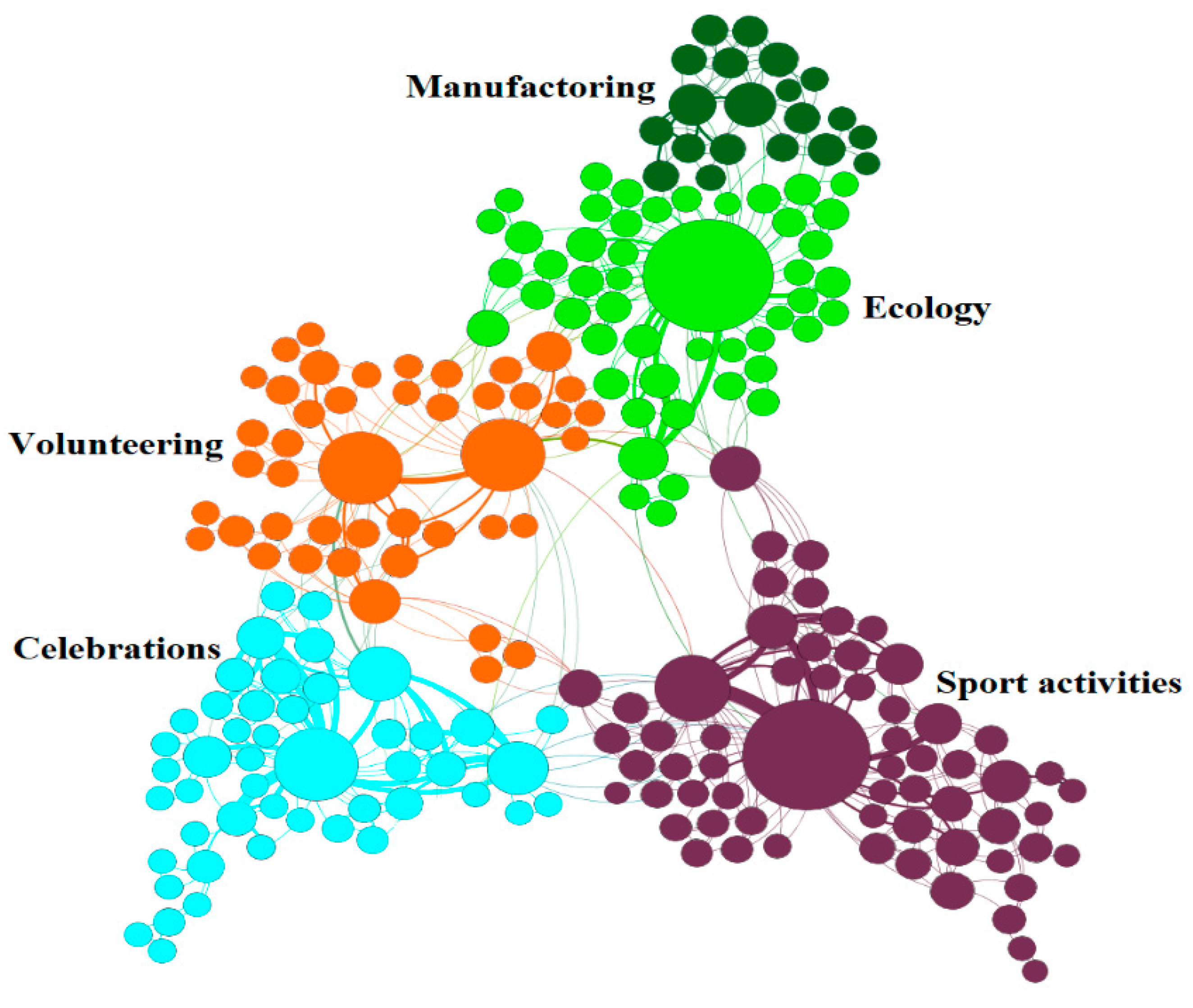

4. Case Study: A Network of Personal Self-Adaptive Educational Assistants (Avatars)

4.1. Problem Formulation

- q-values are calculated for all message types;

- For each message type m, the q-values of the neighbors are averaged: $\bar{Q}_i(m) = \frac{1}{|N_i|}\sum_{j \in N_i} Q_j(m)$, where $N_i$ is the set of neighbors of the i-th owner;

- The final q-values for the i-th owner are formed as a linear combination of its own q-values $Q_i(m)$ and the neighbor-averaged q-values $\bar{Q}_i(m)$: $\tilde{Q}_i(m) = \alpha\, Q_i(m) + (1-\alpha)\, \bar{Q}_i(m)$. Here α is a predefined mixing factor (α = 0: use only the neighbors' data; α = 1: do not use the neighbors' data).

- Then, the message type is chosen with the ε-greedy algorithm (ε = 0.1).
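The four steps above can be sketched for a population of assistants as follows. Only three elements come from the text: the neighbor averaging, the α-mixing rule and ε-greedy selection (ε = 0.1). The following are assumptions made for illustration:

- the stateless (bandit-style) q-value update;
- the learning rate `lr`;
- the `reward` callback;
- the adjacency-dict representation of the owners' network.

```python
import random
from typing import Callable, Dict, List, Optional, Sequence

QTable = Dict[int, List[float]]  # owner id -> q-value per message type


def mixed_q_values(i: int, q: QTable, neighbors: Dict[int, Sequence[int]],
                   alpha: float) -> List[float]:
    """alpha * own q-values + (1 - alpha) * neighbor-averaged q-values."""
    own = q[i]
    nbrs = neighbors.get(i, [])
    if not nbrs:
        # Assumption: an owner without neighbors falls back to its own q-values.
        return list(own)
    avg = [sum(q[j][m] for j in nbrs) / len(nbrs) for m in range(len(own))]
    return [alpha * own[m] + (1 - alpha) * avg[m] for m in range(len(own))]


def epsilon_greedy(values: Sequence[float], epsilon: float, rng: random.Random) -> int:
    """Explore with probability epsilon, otherwise pick a best message type (random tie-break)."""
    if rng.random() < epsilon:
        return rng.randrange(len(values))
    best = max(values)
    return rng.choice([m for m, v in enumerate(values) if v == best])


def step(q: QTable, neighbors: Dict[int, Sequence[int]],
         reward: Callable[[int, int], float],
         alpha: float = 0.5, epsilon: float = 0.1, lr: float = 0.1,
         rng: Optional[random.Random] = None) -> None:
    """One round in which every assistant sends one message to its owner."""
    if rng is None:
        rng = random.Random()
    # Mix from a snapshot so every owner sees the same neighbor values in this round.
    mixed = {i: mixed_q_values(i, q, neighbors, alpha) for i in q}
    for i in q:
        m = epsilon_greedy(mixed[i], epsilon, rng)
        # Assumed stateless (bandit-style) update of the owner's own q-value for type m.
        q[i][m] += lr * (reward(i, m) - q[i][m])
```

With `alpha=1.0` each assistant learns only from its own owner. With `alpha=0.0` it acts purely on its neighbors' averaged q-values. These settings correspond to the two extremes of the mixing factor.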

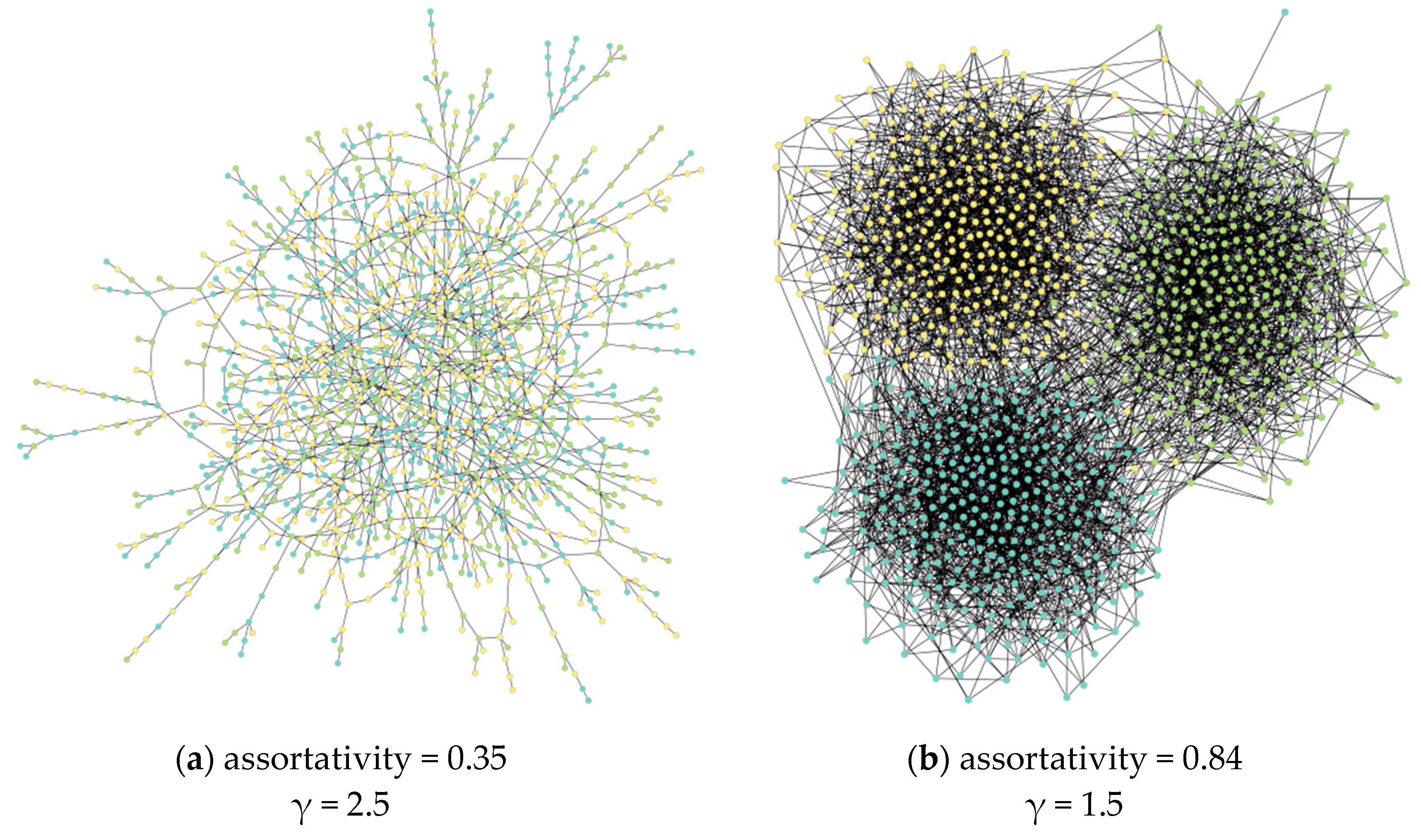

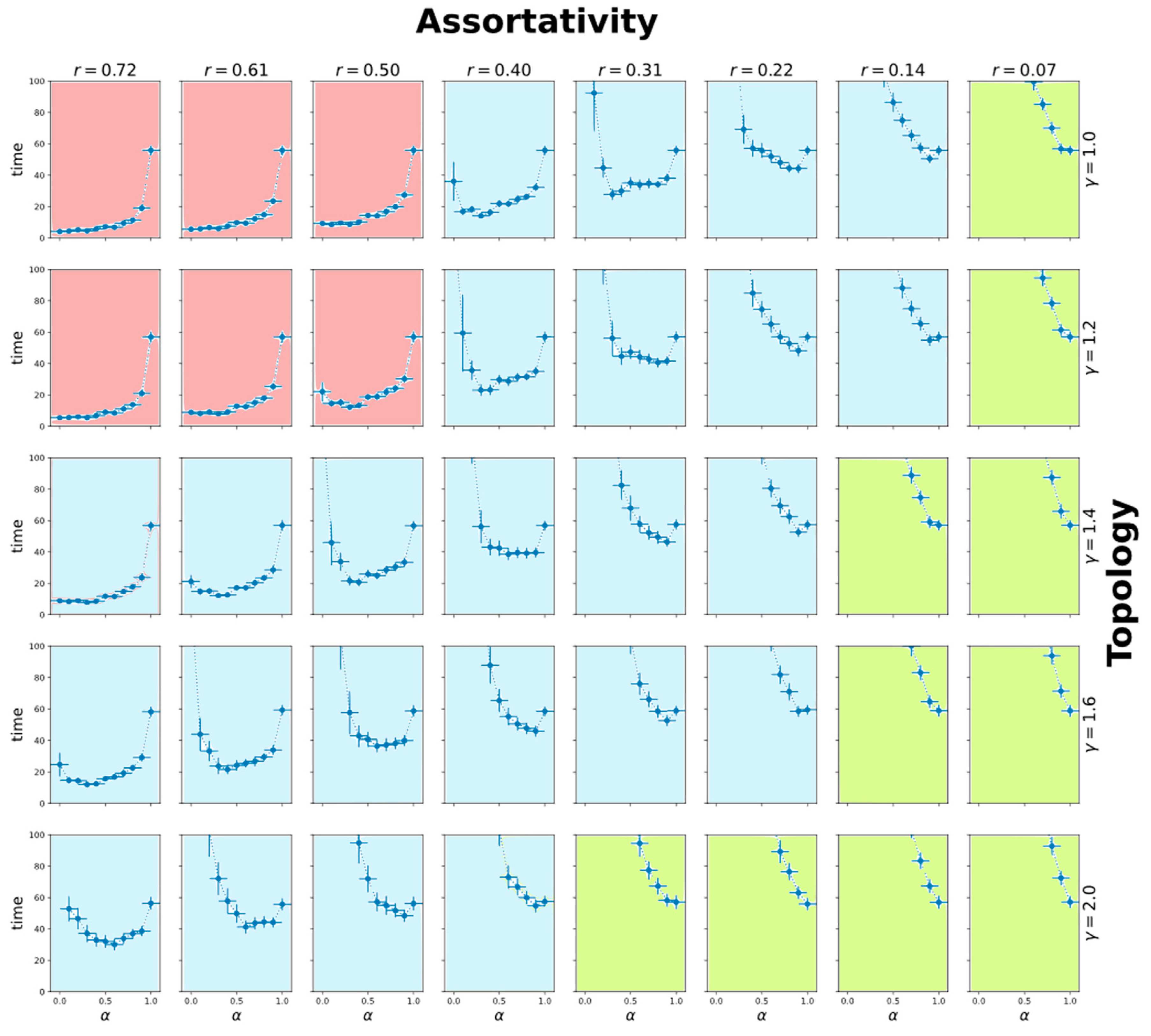

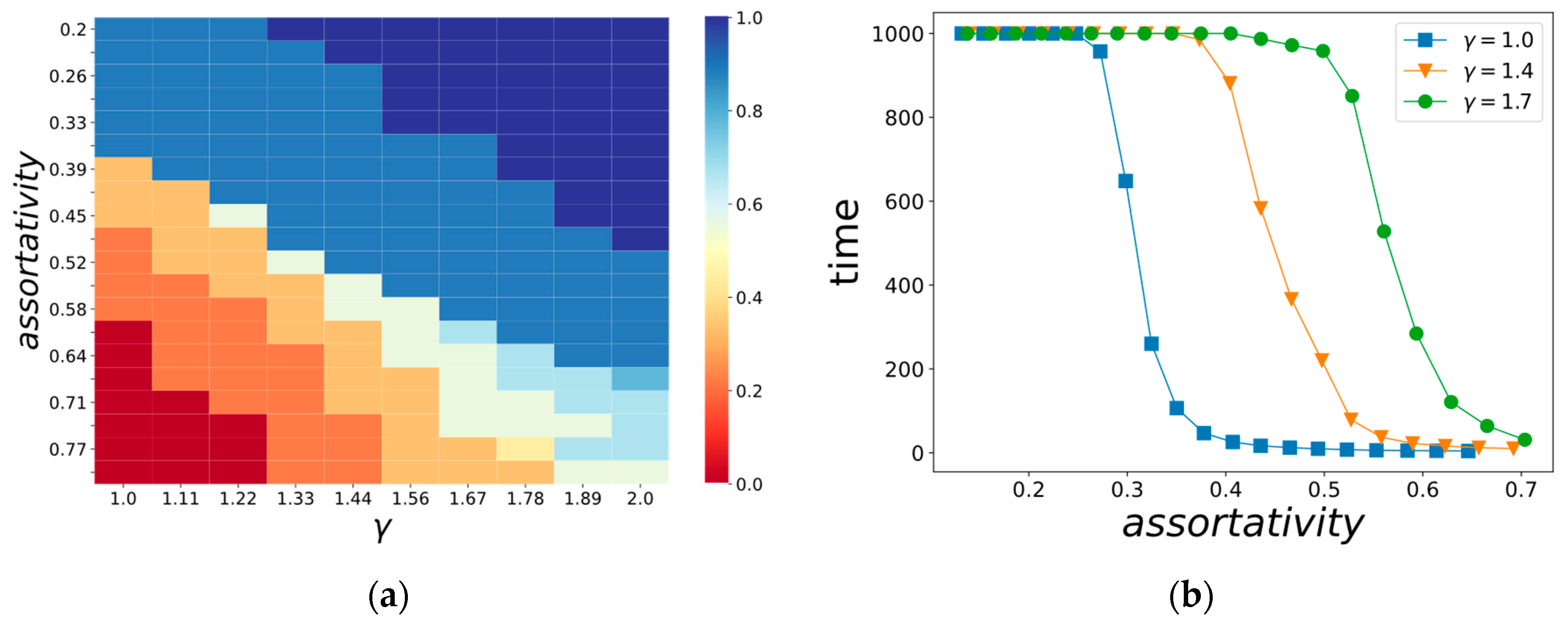

4.2. Computational Experiment

- Mode 1 (red): including the owner's knowledge slows convergence; this mode is observed for high assortativity values;

- Mode 2 (blue): limited use of the neighbors' knowledge speeds up convergence;

- Mode 3 (green): including knowledge about the neighbors slows convergence; this mode is observed for low assortativity values, when most neighbors give the assistant poor advice.
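The three modes are separated by the assortativity of the owners' network. For a categorical node attribute, Newman's assortativity coefficient is $r = \frac{\sum_i e_{ii} - \sum_i a_i b_i}{1 - \sum_i a_i b_i}$. Here $e_{ij}$ is the fraction of edge ends joining nodes of type i to nodes of type j, and $a_i$, $b_i$ are its row and column sums. The sketch below computes r for an undirected edge list. It treats the owners' type as a discrete label, which is an assumption: the text does not state which attribute the assortativity refers to.

```python
from collections import Counter
from typing import Dict, Hashable, Iterable, Tuple


def attribute_assortativity(edges: Iterable[Tuple[Hashable, Hashable]],
                            node_type: Dict[Hashable, Hashable]) -> float:
    """Newman's assortativity coefficient for a categorical node attribute (undirected graph)."""
    counts: Counter = Counter()
    total = 0
    for u, v in edges:
        tu, tv = node_type[u], node_type[v]
        # Count each undirected edge in both directions so the mixing matrix is symmetric.
        counts[(tu, tv)] += 1
        counts[(tv, tu)] += 1
        total += 2
    if total == 0:
        raise ValueError("graph has no edges")
    types = {t for pair in counts for t in pair}
    e = {pair: c / total for pair, c in counts.items()}
    trace = sum(e.get((t, t), 0.0) for t in types)
    # a_t: fraction of edge ends attached to type t (row sums; equal to column sums here).
    a = {t: sum(e.get((t, s), 0.0) for s in types) for t in types}
    expected = sum(a[t] ** 2 for t in types)
    if expected == 1.0:
        raise ValueError("assortativity is undefined when all edges join a single type")
    return (trace - expected) / (1.0 - expected)
```

Values close to 1 mean that owners are linked mostly to owners of the same type. Negative values mean that links mostly join owners of different types.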

5. Discussion

5.1. Emergence of Complexity in DIS

5.2. Practical Implications of DIS Complexity Effects

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Levis, A.H. Human organizations as distributed intelligence systems. IFAC Proc. Vol. 1988, 21, 5–11.

- Heylighen, F. Distributed intelligence technologies: Present and future applications. In The Future Information Society; World Scientific: Singapore, 2017; pp. 179–212.

- Corchado, J.M.; Bichindaritz, I.; De Paz, J.F. Distributed artificial intelligence models for knowledge discovery in bioinformatics. Biomed. Res. Int. 2015, 2015, 1–2.

- Crowder, J.A.; Carbone, J.N. An agent-based design for distributed artificial intelligence. In Proceedings of the 2016 International Conference on Artificial Intelligence, ICAI 2016-WORLDCOMP, Las Vegas, NV, USA, 25–28 July 2016; pp. 81–87.

- D’Angelo, G.; Rampone, S. Cognitive distributed application area networks. In Security and Resilience in Intelligent Data-Centric Systems and Communication Networks; Elsevier: Amsterdam, The Netherlands, 2018; pp. 193–214. ISBN 9780128113745.

- Kennedy, J. Swarm intelligence. In Handbook of Nature-Inspired and Innovative Computing; Kluwer Academic Publishers: Boston, MA, USA, 2006; pp. 187–219.

- Tsvetkova, M.; García-Gavilanes, R.; Floridi, L.; Yasseri, T. Even good bots fight: The case of Wikipedia. PLoS ONE 2017, 12.

- Perez, O. Collaborative e-rulemaking, democratic bots, and the future of digital democracy. Digit. Gov. Res. Pract. 2020, 1, 1–13.

- Murgia, A.; Janssens, D.; Demeyer, S.; Vasilescu, B. Among the Machines. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems–CHI EA’ 2016, New York, NY, USA, 7–11 May 2016; ACM Press: New York, NY, USA, 2016; pp. 1272–1279.

- Varol, O.; Ferrara, E.; Davis, C.A.; Menczer, F.; Flammini, A. Online human-bot interactions: Detection, estimation, and characterization. In Proceedings of the Eleventh International AAAI Conference on Web and Social Media (ICWSM 2017), Montréal, QC, Canada, 15–18 May 2017; pp. 280–289.

- Sayama, H. Introduction to the Modeling and Analysis of Complex Systems; Open SUNY Textbooks: New York, NY, USA, 2015; ISBN 978-1942341086.

- Mayfield, M.M.; Stouffer, D.B. Higher-order interactions capture unexplained complexity in diverse communities. Nat. Ecol. Evol. 2017, 1.

- Shao, C.; Hui, P.-M.; Wang, L.; Jiang, X.; Flammini, A.; Menczer, F.; Ciampaglia, G.L. Anatomy of an online misinformation network. PLoS ONE 2018, 13.

- Yu, S.; Wickstrom, K.; Jenssen, R.; Principe, J.C. Understanding convolutional neural networks with information theory: An initial exploration. IEEE Trans. Neural Networks Learn. Syst. 2020, 1–8.

- Quax, R.; Apolloni, A.; Sloot, P.M.A. Towards understanding the behavior of physical systems using information theory. Eur. Phys. J. Spec. Top. 2013, 222, 1389–1401.

- Heo, J.; Han, I. Performance measure of information systems (IS) in evolving computing environments: An empirical investigation. Inf. Manag. 2003, 40, 243–256.

- Myers, B.L.; Kappelman, L.A.; Prybutok, V.R. A Comprehensive model for assessing the quality and productivity of the information systems function. Inf. Resour. Manag. J. 1997, 10, 6–26.

- Tang, Y.; Kurths, J.; Lin, W.; Ott, E.; Kocarev, L. Introduction to focus issue: When machine learning meets complex systems: Networks, chaos, and nonlinear dynamics. Chaos An. Interdiscip. J. Nonlinear Sci. 2020, 30, 63151.

- Khrennikov, A.Y. Ubiquitous Quantum Structure; Springer: Berlin/Heidelberg, Germany, 2010; ISBN 978-3-642-05100-5.

- Busemeyer, J.R.; Bruza, P.D. Quantum Models of Cognition and Decision; Cambridge University Press: Cambridge, UK, 2012; ISBN 9780511997716.

- Dunjko, V.; Briegel, H.J. Machine learning & artificial intelligence in the quantum domain: A review of recent progress. Rep. Prog. Phys. 2018, 81.

- Gisin, N.; Thew, R. Quantum communication. Nat. Photonics 2007, 1, 165–171.

- Ladd, T.D.; Jelezko, F.; Laflamme, R.; Nakamura, Y.; Monroe, C.; O’Brien, J.L. Quantum computers. Nature 2010, 464, 45–53.

- Kimble, H.J. The quantum internet. Nature 2008, 453, 1023–1030.

- Rahman, M.S.; Hossam-E-Haider, M. Quantum IoT: A quantum approach in IoT security maintenance. In Proceedings of the 2019 International Conference on Robotics, Electrical and Signal Processing Techniques (ICREST), Dhaka, Bangladesh, 10–12 January 2019; pp. 269–272.

- Yick, J.; Mukherjee, B.; Ghosal, D. Wireless sensor network survey. Comput. Netw. 2008, 52, 2292–2330.

- Xu, N. A survey of sensor network applications. IEEE Commun. Mag. 2002, 40, 102–114.

- Venkatraman, K.; Daniel, J.V.; Murugaboopathi, G. Various attacks in wireless sensor network: Survey. Int. J. Soft Comput. Eng. 2013, 3, 208–212.

- Bonaci, T.; Bushnell, L.; Poovendran, R. Node capture attacks in wireless sensor networks: A system theoretic approach. In Proceedings of the 49th IEEE Conference on Decision and Control (CDC), Atlanta, GA, USA, 15–17 December 2010; pp. 6765–6772.

- Wu, N.E.; Sarailoo, M.; Salman, M. Transmission fault diagnosis with sensor-localized filter models for complexity reduction. IEEE Trans. Smart Grid. 2017, 9, 6939–6950.

- Nazemi, S.; Leung, K.K.; Swami, A. Optimization framework with reduced complexity for sensor networks with in-network processing. In Proceedings of the 2016 IEEE Wireless Communications and Networking Conference, Doha, Qatar, 3–6 April 2016; pp. 1–6.

- Kumar, S.; Krishna, C.R.; Solanki, A.K. A Technique to analyze cyclomatic complexity and risk in a Wireless sensor network. In Proceedings of the 2018 5th International Conference on Signal Processing and Integrated Networks (SPIN), Noida, Delhi-NCR, India, 22–23 February 2018; pp. 602–607.

- Khanna, R.; Liu, H.; Chen, H.-H. Reduced complexity intrusion detection in sensor networks using genetic algorithm. In Proceedings of the 2009 IEEE International Conference on Communications, Dresden, Germany, 14–18 June 2009; pp. 1–5.

- Macal, C.; North, M. Introductory tutorial: Agent-based modeling and simulation. In Proceedings of the Winter Simulation Conference, Savannah, GA, USA, 7–10 December 2014; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2015; Volume 2015, pp. 6–20.

- Miao, C.Y.; Goh, A.; Miao, Y.; Yang, Z.H. Agent that models, reasons and makes decisions. Knowl. Based Syst. 2002, 15, 203–211.

- Dibley, M.; Li, H.; Rezgui, Y.; Miles, J. An integrated framework utilising software agent reasoning and ontology models for sensor based building monitoring. J. Civ. Eng. Manag. 2015, 21, 356–375.

- Dennett, D.C. The Intentional Stance; MIT Press: Cambridge, MA, USA, 1989.

- Kennedy, W.G. Modelling human behaviour in agent-based models. In Agent-Based Models of Geographical Systems; Springer: Dordrecht, The Netherlands, 2012; pp. 167–179. ISBN 9789048189274.

- Consoli, A.; Tweedale, J.; Jain, L. The link between agent coordination and cooperation. IFIP Int. Fed. Inf. Process. 2006, 228, 11–19.

- Wooldridge, M. An Introduction to MultiAgent Systems; John Wiley & Sons: Hoboken, NJ, USA, 2009; ISBN 047149691X.

- Ardavs, A.; Pudane, M.; Lavendelis, E.; Nikitenko, A. Long-term adaptivity in distributed intelligent systems: Study of viabots in a simulated environment. Robotics 2019, 8, 25.

- Giarratano, J.C.; Riley, G. Expert Systems: Principles and Programming, 2nd ed.; PWS Publishing Co.: Boston, MA, USA, 1994; ISBN 0534937446.

- Konar, A. Artificial Intelligence and Soft Computing: Behavioral and Cognitive Modeling of the Human Brain; CRC Press: Boca Raton, FL, USA, 2018.

- Kotseruba, I.; Tsotsos, J.K. 40 years of cognitive architectures: core cognitive abilities and practical applications. Artif. Intell. Rev. 2018.

- Hayes-Roth, B. An architecture for adaptive intelligent systems. Artif. Intell. 1995, 72, 329–365.

- Laird, J.E.; Newell, A.; Rosenbloom, P.S. SOAR: An architecture for general intelligence. Artif. Intell. 1987, 33, 1–64.

- Sotnik, G. The SOSIEL platform: Knowledge-based, cognitive, and multi-agent. Biol. Inspired Cogn. Archit. 2018, 26, 103–117.

- Karpistsenko, A. Networked intelligence: Towards autonomous cyber physical systems. arXiv 2016, arXiv:1606.04087. Available online: https://arxiv.org/abs/1606.04087 (accessed on 23 November 2020).

- Perraju, T.S. Specifying fault tolerance in mission critical intelligent systems. Knowl. Based Syst. 2001, 14, 385–396.

- Rzevski, G. Intelligent Multi-agent Platform for Designing Digital Ecosystems. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer International Publishing: New York, NY, USA, 2019; Volume 11710, pp. 29–40. ISBN 9783030278779.

- Kunnappiilly, A.; Cai, S.; Marinescu, R.; Seceleanu, C. Architecture modelling and formal analysis of intelligent multi-agent systems. In Proceedings of the 14th International Conference on Evaluation of Novel Approaches to Software Engineering, Heraklion, Greece, 4–5 May 2019; Scitepress–Science and Technology Publications: Setúbal, Portugal, 2019; pp. 114–126.

- Kovalchuk, S.V.; Boukhanovsky, A.V. Towards ensemble simulation of complex systems. Procedia Comput. Sci. 2015, 51, 532–541.

- Kovalchuk, S.V.; Krikunov, A.V.; Knyazkov, K.V.; Boukhanovsky, A.V. Classification issues within ensemble-based simulation: application to surge floods forecasting. Stoch. Environ. Res. Risk Assess. 2017, 31, 1183–1197.

- Ren, Y.; Zhang, L.; Suganthan, P.N. Ensemble classification and regression-recent developments, applications and future directions. IEEE Comput. Intell. Mag. 2016, 11, 41–53.

- Mendes-Moreira, J.; Soares, C.; Jorge, A.M.; De Sousa, J.F. Ensemble approaches for regression. ACM Comput. Surv. 2012, 45, 1–40.

- Polikar, R. Ensemble based systems in decision making. IEEE Circuits Syst. Mag. 2006, 6, 21–44.

- Hartanto, I.M.; Andel, S.J.V.; Alexandridis, T.K.; Solomatine, D. Ensemble simulation from multiple data sources in a spatially distributed hydrological model of the rijnland water system in the Netherlands. In Proceedings of the 11th International Conference on Hydroinformatics, New York, NY, USA, 17–21 August 2014; Piasecki, M., Ed.; CUNY Academic Works: New York, NY, USA, 2014.

- Choubin, B.; Moradi, E.; Golshan, M.; Adamowski, J.; Sajedi-Hosseini, F.; Mosavi, A. An ensemble prediction of flood susceptibility using multivariate discriminant analysis, classification and regression trees, and support vector machines. Sci. Total Environ. 2019, 651, 2087–2096.

- Giacomel, F.; Galante, R.; Pereira, A. An algorithmic trading agent based on a neural network ensemble: A case of study in North American and Brazilian stock markets. In Proceedings of the International Joint Conference on Web Intelligence and Intelligent Agent Technology, Singapore, Singapore, 6–9 December 2015; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2016; Volume 2, pp. 230–233.

- Golzadeh, M.; Hadavandi, E.; Chelgani, S.C. A new Ensemble based multi-agent system for prediction problems: Case study of modeling coal free swelling index. Appl. Soft Comput. J. 2018, 64, 109–125.

- Nikitin, N.O.; Kalyuzhnaya, A.V.; Bochenina, K.; Kudryashov, A.A.; Uteuov, A.; Derevitskii, I.; Boukhanovsky, A.V. Evolutionary ensemble approach for behavioral credit scoring. In Lecture Notes in Computer Science; including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics; Springer Verlag: Berlin, Germany, 2018; Volume 10862, pp. 825–831.

- Hao, J.; Huang, D.; Cai, Y.; Leung, H. The dynamics of reinforcement social learning in networked cooperative multiagent systems. Eng. Appl. Artif. Intell. 2017, 58, 111–122.

- Zhang, J.; Xu, Z.; Chen, Z. Effects of strategy switching and network topology on decision-making in multi-agent systems. Int. J. Syst. Sci. 2018, 49, 1934–1949.

- Veillon, L.-M.; Bourgne, G.; Soldano, H. Effect of network topology on neighbourhood-aided collective learning. In Proceedings of the International Conference on Computational Collective Intelligence, Nicosia, Cyprus, 27–29 September 2017; pp. 202–211.

- Bourgne, G.; El Fallah Seghrouchni, A.; Soldano, H. Smile: Sound multi-agent incremental learning. In Proceedings of the 6th International Joint Conference on Autonomous Agents and Multiagent Systems, New York, NY, USA, 21–25 May 2007; pp. 1–8.

- Bourgne, G.; Soldano, H.; El Fallah-Seghrouchni, A. Learning better together. In Proceedings of the ECAI, Amsterdam, The Netherlands, 16–20 August 2010; Volume 215, pp. 85–90.

- Zhang, K.; Yang, Z.; Başar, T. Multi-agent reinforcement learning: A selective overview of theories and algorithms. arXiv 2019, arXiv:1911.10635. Available online: https://arxiv.org/abs/1911.10635 (accessed on 23 November 2020).

- Gupta, S.; Hazra, R.; Dukkipati, A. Networked multi-agent reinforcement learning with emergent communication. arXiv 2020, arXiv:2004.02780. Available online: https://arxiv.org/abs/2004.02780 (accessed on 23 November 2020).

- Sheng, J.; Wang, X.; Jin, B.; Yan, J.; Li, W.; Chang, T.-H.; Wang, J.; Zha, H. Learning structured communication for multi-agent reinforcement learning. arXiv 2020, arXiv:2002.04235. Available online: https://arxiv.org/abs/2002.04235 (accessed on 23 November 2020).

- Hu, J.; Wellman, M.P. Multiagent reinforcement learning: theoretical framework and an algorithm. In Proceedings of the ICML, Madison, WI, USA, 24–27 July 1998; Volume 98, pp. 242–250.

- Liu, Y.; Liu, L.; Chen, W.-P. Intelligent traffic light control using distributed multi-agent Q learning. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; pp. 1–8.

- Cao, K.; Lazaridou, A.; Lanctot, M.; Leibo, J.Z.; Tuyls, K.; Clark, S. Emergent communication through negotiation. arXiv 2018, arXiv:1804.03980. Available online: https://arxiv.org/abs/1804.03980 (accessed on 23 November 2020).

- Mordatch, I.; Abbeel, P. Emergence of grounded compositional language in multi-agent populations. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

- Havrylov, S.; Titov, I. Emergence of language with multi-agent games: Learning to communicate with sequences of symbols. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 2149–2159.

- Gupta, S.; Dukkipati, A. On Voting Strategies and Emergent Communication. arXiv 2019, arXiv:1902.06897. Available online: https://arxiv.org/abs/1902.06897 (accessed on 23 November 2020).

- Hernandez-Leal, P.; Kaisers, M.; Baarslag, T.; de Cote, E.M. A survey of learning in multiagent environments: Dealing with non-stationarity. arXiv 2017, arXiv:1707.09183. Available online: https://arxiv.org/abs/1707.09183 (accessed on 23 November 2020).

- Bloembergen, D.; Tuyls, K.; Hennes, D.; Kaisers, M. Evolutionary dynamics of multi-agent learning: A survey. J. Artif. Intell. Res. 2015, 53, 659–697.

- Haven, E.; Khrennikov, A. Quantum probability and the mathematical modelling of decision-making. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2016, 374.

- Yukalov, V.I.; Sornette, D. Quantum decision theory as quantum theory of measurement. Phys. Lett. A 2008, 372, 6867–6871.

- Kahneman, D.; Slovic, P.; Tversky, A. Judgment Under Uncertainty: Heuristics and Biases, 1st ed.; Cambridge University Press: Cambridge, UK, 1982.

- Platonov, A.V.; Bessmertny, I.A.; Semenenko, E.K.; Alodjants, A.P. Non-separability effects in cognitive semantic retrieving. In Quantum-Like Models for Information Retrieval and Decision-Making; Springer Nature: London, UK, 2019; pp. 35–40.

- Ozawa, M.; Khrennikov, A. Application of theory of quantum instruments to psychology: Combination of question order effect with response replicability effect. Entropy 2019, 22, 37.

- Biamonte, J.; Wittek, P.; Pancotti, N.; Rebentrost, P.; Wiebe, N.; Lloyd, S. Quantum machine learning. Nature 2017, 549, 195–202.

- Paparo, G.D.; Dunjko, V.; Makmal, A.; Martin-Delgado, M.A.; Briegel, H.J. Quantum speedup for active learning agents. Phys. Rev. X 2014, 4, 031002.

- Melnikov, A.A.; Poulsen Nautrup, H.; Krenn, M.; Dunjko, V.; Tiersch, M.; Zeilinger, A.; Briegel, H.J. Active learning machine learns to create new quantum experiments. Proc. Natl. Acad. Sci. USA 2018, 115, 1221–1226.

- Brito, S.; Canabarro, A.; Chaves, R.; Cavalcanti, D. Statistical properties of the quantum internet. Phys. Rev. Lett. 2020, 124, 210501.

- Melnikov, A.A.; Fedichkin, L.E.; Alodjants, A. Predicting quantum advantage by quantum walk with convolutional neural networks. New J. Phys. 2019, 21.

- Cabello, A.; Danielsen, L.E.; López-Tarrida, A.J.; Portillo, J.R. Quantum social networks. J. Phys. A Math. Theory 2012, 45, 285101.

- Tsarev, D.; Trofimova, A.; Alodjants, A.; Khrennikov, A. Phase transitions, collective emotions and decision-making problem in heterogeneous social systems. Sci. Rep. 2019, 9.

- Strogatz, S.H. Exploring complex networks. Nature 2001, 410, 268–276.

- Arenas, A.; Díaz-Guilera, A.; Kurths, J.; Moreno, Y.; Zhou, C. Synchronization in complex networks. Phys. Rep. 2008, 469, 93–153.

- Ravasz, E.; Barabási, A.-L. Hierarchical organization in complex networks. Phys. Rev. E 2003, 67.

- Krenn, M.; Malik, M.; Scheidl, T.; Ursin, R.; Zeilinger, A. Quantum communication with photons. In Optics in Our Time; Springer International Publishing: Cham, Switzerland, 2016; pp. 455–482.

- Gyongyosi, L.; Imre, S.; Nguyen, H.V. A Survey on Quantum Channel Capacities. IEEE Commun. Surv. Tutorials 2018, 20, 1149–1205.

- Wallnöfer, J.; Melnikov, A.A.; Dür, W.; Briegel, H.J. Machine learning for long-distance quantum communication. PRX Quantum 2020, 1.

- Manzalini, A. Quantum communications in future networks and services. Quantum Rep. 2020, 2, 14.

- Puterman, M.L. Markov decision processes. Handb. Oper. Res. Manag. Sci. 1990, 2, 331–434.

- Watkins, C.J.C.H.; Dayan, P. Q-learning. Mach. Learn. 1992, 8, 279–292.

- Newman, M.E.J. Mixing patterns in networks. Phys. Rev. E 2003, 67, 26126.

- Karrer, B.; Newman, M.E.J. Stochastic blockmodels and community structure in networks. Phys. Rev. E 2011, 83.

- Górski, P.J.; Bochenina, K.; Holyst, J.A.; D’Souza, R.M. Homophily Based on Few Attributes Can Impede Structural Balance. Phys. Rev. Lett. 2020, 125.

- Rodrigues, F.A.; Peron, T.K.D.M.; Ji, P.; Kurths, J. The Kuramoto model in complex networks. Phys. Rep. 2016, 610, 1–98.

- Scafuti, F.; Aoki, T.; di Bernardo, M. Heterogeneity induces emergent functional networks for synchronization. Phys. Rev. E 2015, 91, 62913.

- Bertotti, M.L.; Brunner, J.; Modanese, G. The Bass diffusion model on networks with correlations and inhomogeneous advertising. Chaos Solitons Fractals 2016, 90, 55–63.

- Maslennikov, O.V.; Nekorkin, V.I. Adaptive dynamic networks (in Russian). Phys. Sci. Success 2017, 187, 745–756.

- Dorogovtsev, S. Lectures on Complex Networks. In Oxford Master Series in Physics; Oxford University Press: Oxford, UK, 2010.

- Bazhenov, A.Y.; Tsarev, D.V.; Alodjants, A.P. Superradiant phase transition in complex networks. arXiv 2020, arXiv:2012.03088. Available online: https://arxiv.org/abs/2012.03088 (accessed on 23 November 2020).

| | Explicit Knowledge Base/Inference | Systemic Intelligence | Agent Intelligence | Multiple Agents | Networked | Systemic Complexity | AIA + NIA |
|---|---|---|---|---|---|---|---|
| Sensor networks | - | - | - | + | + | - | - |
| Ensemble learning | - | - | + | +/− | - | - | - |
| Complex networks | - | - | - | + | + | + | - |
| Quantum channels and networks | - | - | - | +/− | + | - | - |
| MAS | - | +/− | - | + | - | +/− | - |
| Distributed expert systems | +/− | +/− | + | + | + | + | - |
| MARL | +/− | + | + | + | +/− | - | + |

| System Type | Node State | Own Contribution | Neighbors' Contribution to Node State Increment | Edge Weights Increment | Discrete Node State | Probability of State Change |
|---|---|---|---|---|---|---|
| Kuramoto oscillators [102] | const | - | - | | | |
| Oscillators with coupling dynamics [103] | - | - | | | | |
| SI diffusion [104] | {S, I} | - | - | Yes | Fixed, | |
| DIS | - | Yes |

| Quality Measures | Agent | Network | External | Complexity Aspects |
|---|---|---|---|---|
| Service quality | + | | | Collaboration |
| System quality | + | | | Resistance to perturbations; resistance to the false response; minimal number of agents |
| Information quality | + | + | | Information percolation/degradation |
| Information use | + | | | |
| User satisfaction | + | | | Individual strategies; collision avoidance |
| Individual impact | + | | | Control of driving nodes |
| Workgroup impact | + | | | Emergent phenomena |
| Organizational impact | + | + | | Resource management |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Guleva, V.; Shikov, E.; Bochenina, K.; Kovalchuk, S.; Alodjants, A.; Boukhanovsky, A. Emerging Complexity in Distributed Intelligent Systems. Entropy 2020, 22, 1437. https://doi.org/10.3390/e22121437
