Abstract

We consider a continuous-time random walk which is the generalization, by means of the introduction of waiting periods on sites, of the one-dimensional non-homogeneous random walk with a position-dependent drift known in the mathematical literature as Gillis random walk. This modified stochastic process allows to significantly change local, non-local and transport properties in the presence of heavy-tailed waiting-time distributions lacking the first moment: we provide here exact results concerning hitting times, first-time events, survival probabilities, occupation times, the moments spectrum and the statistics of records. Specifically, normal diffusion gives way to subdiffusion and we are witnessing the breaking of ergodicity. Furthermore we also test our theoretical predictions with numerical simulations.

1. Introduction

Since their first appearance, random walks have always been used as effective mathematical tools to describe a plenty of problems from a variety of fields, such as crystallography, biology, behavioural sciences, optical and metal physics, finance and economics. Although homogeneous random walks are not a mystery anymore, in many situations the topology of the environment causes correlations (induced by the medium inhomogeneities), which have powerful consequences on the transport properties of the process. The birth of whole classes of non-homogeneous random walks [1,2] is due to the need to study disordered media and non-Brownian motions, responsible for anomalous diffusive behaviour. This topic of research is prompted by phenomena observed in several systems such as turbolent flows, dynamical systems with intermittencies, glassy materials, Lorentz gases, predators hunting for food [3,4,5,6,7,8,9,10,11,12,13]. For the sake of clarity, we recall that anomalous processes are characterized by a mean square displacement of the walker’s position with a sublinear or superlinear growth in time as opposed to normal Brownian diffusion, defined as an asymptotically linear time dependence of the variance.

In this context, the outstanding Gillis random walk [14] plays a crucial role, since it is one of the few analytically solvable models of non-homogeneous random walks with a drift dependent on the position in the sample. Other few exceptions are random walks with a limited number of boundaries or defective sites [15,16,17,18]. The Gillis model is a nearest-neighbour centrally-biased random walk on , lacking translational invariance for the transition probabilities, which provides an appropriate environment in order to investigate the critical behaviour in the proximity of a phase transition: while keeping fixed the dimensionality of the model, one can observe different regimes by simply changing the parameter value.

As is natural, in the first instance one typically focuses his attention on the dynamics of the random walk by considering a discretization of the time evolution: basically you wear the simplest clock, consisting of a counting measure of the number of steps. But in most physical situations, you deal with systems requiring a continuous-time description of the evolution (that clearly introduces a higher degree of complexity). In order to show this important difference, we can rely on the explanatory comparative analysis between Lévy flights and Lévy walks [19,20]. We are mentioning homogeneous random walks whose transition probabilities have an infinite variance: Lévy flights are indeed jump processes with steps picked from a long-tailed (or Lévy) distribution, whose tails are not exponentially bounded and so there is a good chance that you jump really far from the current site. This is what we mean when we say, from a mathematical point of view, that the distribution does not have a finite variance. However, Lévy flights have a drawback: clearly, if spatial trajectories are totally unaware of the related time trace, flights are rightful, otherwise they are not exactly physically acceptable because they appear to possess an infinite speed. More realistic models are instead Lévy walks, where the walker needs a certain time to perform the jump, which is no longer instantaneous. The time spent is usually proportional to the length of the step, so we assume a constant finite speed for the motion.

In our case, we take a step back: in Lévy walks you are already assuming the existence of spatiotemporal correlations, but in general the easiest way to get a continuous-time description starting from the discrete model is to decouple spatial and temporal components. This is precisely what E. Montroll and G.H. Weiss did in 1965 [21] by means of a random walk (the so called Continuous Time Random Walk) whose jumps are still instantaneous but the dynamics is subordinated to a random physical clock. Basically, you have to introduce a new random variable, the waiting time on a site, in addition to the length of the jump [22]. Also this time there are relevant application aspects: ruin theory of insurance companies, dynamics of prices in financial markets, impurity in semiconductors, transport in porous media, transport in geological formations. An incomplete list of general references includes [23,24,25,26,27,28].

Inspired by the previous models, we consider the continuous-time generalization of the discrete-time Gillis random walk that we have already studied thoroughly in Reference [29]. In particular, we will also look at first-time events: they account for key problems in the theory of stochastic processes, since you can determine when system variables assume specific values (for example, see Reference [30]).

This paper is structured as follows. In Section 2 we briefly present the background in order to provide a complete overview of the known results, which are the basis of the work. Then, in Section 3, we will discuss the original results, by establishing a connection between the discrete-time random walk and the continuous-time formalism. In particular, two significant phenomena will arise: the ergodicity breaking and the extension of the anomalous diffusion regime. In Section 4, moreover, we will integrate the theoretical analysis with computational simulations, as further confirmation. Finally, in Section 5 we will summarize all the conclusions previously described in detail.

2. Review of Previous Work

First of all, intending to be self-consistent, we provide a brief recap of the discrete-time Gillis model and resume the key concepts necessary for its continuous-time version. In this way we will be sufficiently equipped to move on to list the major results.

2.1. Gillis Random Walk

The Gillis model [14] is a discrete-time random walk, on a one-dimensional lattice, whose transition probabilities of moving from site i to site j are non-null if and only if , namely are nearest-neighbour lattice points. We assume that the positional dependence is ruled by the real parameter , where and:

Clearly, if you set out you recover homogeneity since it boils down to the simple symmetric random walk. Otherwise, the position-dependent drift is responsible for an attractive bias towards the starting site, the origin, when or for a repulsive action if .

As we said in the general introduction, the Gillis random walk is one of the few analytically solvable models and, in particular, in his original paper the author writes down the exact expression of the generating function of in terms of the elementary hypergeometric function [31], where denotes the probability that the walker returns to the origin, not necessarily for the first time, after n steps. Actually, this solution has been later generalized for a generic starting site [1]. Given the probability that the particle starts from any site and passes through the origin after n steps, we can write its generating function:

This is one of the essential tools for the following analysis, along with those in our previous paper [29], and for it is clearly consistent with the original result by Gillis concerning the generating function .

Another relevant statement for future considerations is that the motion is positive-recurrent (recurrent with a finite mean return time) and ergodic (thus admitting a stationary distribution) when , null-recurrent (recurrent with an infinite mean return time that increases with the number of steps) if and transient for . To be more precise [32], the mean time taken between two consecutive returns to the starting site up to the n-th step is:

and this is a direct consequence of Equation (2). In fact, starting from there one can also obtain the generating functions of the first-hitting and the first-return times to the origin. First of all, let us define the probability that the moving particle starts from and hits j for the first time after n steps. Then we know that are connected to in the following way:

or, equivalently, in terms of the corresponding generating functions:

Notice that in the presence of translational invariance and (see Equation in Reference [22]). In our context, anyway, Equation (5) becomes particularly easy since we choose . Hence we can finally conclude that when :

where are the first-return probabilities, whereas for (first-passage probabilities):

The mean time spent between two consecutive visits at the origin up to the n-th step is easily derived from [32].

Now, instead, moving on to transport properties, we can quickly resume the moments spectrum and the statistics of records (for more details and references see Reference [29]). Firstly, denoting the moment of order q with , we know that the asymptotical dependence on the number of steps n is , where:

Translated into words, this leads to recognize the presence of a phase transition: non-ergodic processes are characterized by normal diffusion, since the second moment shows an asymptotically linear growth in time, whereas the ergodic ones reveal strong anomalous (sub-)diffusion [33].

Secondly, let us first recall the following definition: given a finite set of random variables, the record value is the largest/smallest value assumed by that sequence. In the Gillis model, the events to account for are the positions on the one-dimensional lattice during the motion and the record after n steps , with due to the intial condition , can be seen as the non-negative integer exceeding all the previously occupied sites: indeed thanks to symmetry, as we will explain in details later on, we can restrict ourselves to studying a random walk defined on the half-line, with a reflecting barrier at the origin. In addition, the presence of a nearest-neighbour structure implies that the number of records after n steps is connected to the value of the maximum by means of the trivial relationship , where:

We point out that here we only consider the range in order to limit ourselves to recurrent processes: the limiting case is excluded because of technical reasons in the rigorous proof (see Reference [34]).

Here, again, the model enters two different phases, according to the value of the characteristic parameter . In particular, in the interval the mean number of records has the same growth of the first moment.

2.2. CTRW

Our aim here is to formalize the transformation of the number of steps into the physical real time. We shall follow Reference [22]: for an in-depth and more exhaustive review on subordination techniques refer to Reference [35], for instance. As a preliminary remark, we point out that, moving from discrete to continuous formalism, we have to abandon the generating function (for the time domain, not for the lattice) in favour of a more appropriate mathematical tool, the Laplace transform.

The basic assumption is that we have a random walker who performs instantaneous jumps on a line, but now he is forced to wait on the target site for a certain interval of time, whose duration t is always picked from a common probability distribution , before going any further. So, for instance, will be the waiting time on the origin before jumping for the first time and, moreover, we would emphasize that the waiting times of subsequent steps are independent and identically distributed (according to ) random variables.

These are the essential instruments for introducing the quantities of interest. Firstly, we can define the PDF (Probability Density Function) of the occurrence of the n-th step at time . As a consequence, through independence, the following recurrence relation holds:

where the convolution becomes a product and, from now on, the use of the following convention is implied: variables indicated in brackets in the functions uniquely define the space you are working in (real for t, Laplace for s). Secondly, we can introduce the PDF of taking exactly n steps up to the time t (namely this time the n-th step may occur at time and then the walker rests on the site):

Next section will shed some light on the role of these useful quantities.

3. Results

For the sake of clarity, we will simply state the significant elements in this section. For all of the detailed computation please refer to appendices further below.

3.1. Probability of Being at the Origin

The most natural step to undertake as first thing is obviously to determine the probability of finding the walker at the origin at time t, for comparison with Gillis original results. This task can be carried out in two different ways, both instructive.

3.1.1. Gillis Way

As a first attempt, one could be led to translate Gillis method into continuous-time formalism. And in fact this is a viable solution. The starting point is Equation in Reference [14] that reads:

where denotes the probability of being at site j after n steps when the initial position of the walker is the origin.

In order to accomplish the transformation, we need to establish some more notation. In particular we notice that, after introducing the physical real time, the position at time t is still the position after n steps, provided that exactly n jumps have been counted up to time t. Hence:

- is the probability of being (arriving) at j at (within) time t;

- is the probability of arriving at j at time t.

By performing the Laplace transform on time and the generating function on sites, we get: . Now, the continuous-time equivalent of Equation (12) is obtained by multiplying both sides by and summing over n:

Essentially, we find ourselves in the exact same situation, we just need to shift focus back to a new key element, the arrival event. Retracing the steps of the original paper (see Appendix A), we can (trivially) conclude that:

namely:

where we remind you that is the generating function (evaluated at ) of the probability of being at the origin in the discrete-time model.

This result is not surprising: given that the temporal component is independent of the spatial scale, the time trace is ruled by a random clock that replaces the role of the counting measure (the simple internal clock given by the number of steps). The generating function of the probability of arriving at the origin is the same of the one associated with the discrete model (where there is no distinction between arriving and being, because the walker can not stand still on a site), but subordinated to the new physical time. This observation let us generalize immediately the result to the case of a generic starting point , obtaining:

which, thanks to Equation (2), becomes:

3.1.2. Recurrence Relation: First-Return Time to the Origin

However, we can arrive at Equation (15) also in a different way. If we now perform, as before, a continuous-time transformation of Equation (4) with , we get:

and considering the Laplace domain:

As a last step we can plug in Equation (5), so we finally go back to Equation (15). In addition, we have immediately also the Laplace transform for the first-return time. Indeed, since the first-return is an arrival event and thus coincides with the occurrence of a step, there is no way to land earlier and wait for the remaining time. Hence, from a mathematical point of view, we can write [22]:

thanks to Equation (5). Lastly, by comparing Equation (15) with Equation (21), we get the relationship in the Laplace domain:

Once again we can generalize the previous formula for a generic starting site :

Now, turning back to our specific case, we know that the generating functions of interest can be written in the form (see Reference [29]):

where are slowly-varying functions at infinity, namely for instance is such that , and:

As a consequence, the corresponding Laplace transforms are automatically given by:

At this point, it is apparent that we are forced to split our analysis according to the features of the waiting-time distribution: clearly the asymptotic behaviour of the quantities above mentioned is established by the expansion of the Laplace transform of the waiting-time distribution for small s.

3.1.3. Finite-Mean Waiting-Time Distributions

As the first choice, one can think of as i.i.d. positive random variables with finite mean (but non necessarily a finite variance too: for example, the waiting times may be taken belonging to the domain of attraction - because they must be spectrally positive [36] - of -stable laws with ). In these circumstances, the leading term in the expansion is: for . Therefore, in the limit we get:

equivalently written in the limit by directly applying Tauberian theorems [22,37]. In any case, however, the exponent of the power-law decay is the same of the discrete-time model [29]:

This is not an astonishing result because obviously , where n is the number of steps. It is merely a change of scale. So from now on we will disregard this possibility.

3.1.4. Infinite-Mean Waiting-Time Distributions

Implications are a little bit different if we choose power-law distributions lacking the first moment, because the dynamics becomes highly irregular this time. If we assume a heavy-tailed waiting-time distribution of the form: with , then the corresponding Laplace expansion is: , where . Again by substitution, we derive:

We invite you to read Appendix D for a check with a well-known example. Moreover, as advanced in the previous section, asymptotic expansion and Tauberian theorems give us an immediate, even if incomplete, insight of what happens: exact and exhaustive results (involving a generic starting site) are postponed in Appendix B and Appendix C, in order not to burden the reading.

Anyhow, we provide here the summarising full spectrum of return, first-return, hitting and first-hitting time PDFs of the origin, which is consistent with the asymptotic behaviour previously predicted in Equation (32) and for in Equation (33). Firstly, keeping in mind Equation (17), we have:

With the choice , you get immediately the PDF of returns. In particular, let us point out that in the recurrent cases the coefficient does not depend on .

A little discrepancy, instead, arises if you compare first-passage and first-return events (see Equations (6) and (7)). The first-return time PDF is asymptotically given by:

whereas the first-hitting time PDF can be connected to the previous one by means of the following relationship:

with:

and denoting the digamma function [31]. As a consequence, we notice that, by setting , the asymptotic expansion of vanishes in all regimes: the direct relation between and does not hold anymore for first-time events. Nevertheless, although the coefficients differ, the asymptotic decays of and are the same.

3.2. Survival Probability on the Positive Semi-Axis

Return and first-return probabilities allow us to determine also the asymptotic behaviour of other related quantities. In the first place, we can introduce the survival probability in a given subset: it is defined as the probability of never escaping from the selected collection of neighbouring sites. For instance, by considering , it can be written as:

This quantity has been deepenly studied for a wide range of homogeneous stochastic processess. In particular, with regards to random walks with i.i.d. steps, the historical Sparre-Andersen theorem [38] is a significant result connecting a non-local property, since the survival probability depends on the history of the motion, to the local (in time) probability of standing non-positive at the last step:

It is an outstanding expression of universality, both in discrete and continuous-time versions, if you consider jump distributions that are continuous and symmetric about the origin, although this feature is partially lost (the coefficient of proportionality in the scaling law is no longer universal) when you move on a lattice instead of the real line [39,40]. However, whereas temporal components have already been included in the analysis [41], not much has been said about spatial correlations, to the authors’ knowledge.

With our previous results [42] in mind, we will now consider changes arising from the subordination to a physical clock. We need to introduce the persistence probability of never coming back to the origin up to the n-th step, namely , in order to write down the following recurrence relation:

In the Laplace domain it becomes:

and in conclusion:

to be compared with the discrete-time results [42]:

with as and . It is apparent that there are similarities in the null-recurrent cases , since , and when , whose decay is even slower (for it is a decreasing slowly-varying function). The main relevant difference, instead, is the disappearance of the positive-recurrent regime (where Tauberian theorems fail).

Nevertheless, if the underlying discrete-time random walk is ergodic, a discrepancy remains also in the continuous-time translation. In general, we have to notice that:

where the slowly-varying function L tends to a constant. The coefficient of proportionality between and should not be underestimated: it means that the occupation time spent at the origin behaves in a different way, as we will see later on. Indeed, for the sake of illustration, a halved coefficient would mean that visits at the origin are negligible (which is recovered in the limiting case ). In particular, says that they have more weight in the presence of an underlying ergodic context.

As a final check, if we suppose, on the contrary, a finite time scale () then we have again (independently of , clearly) and when .

3.3. Occupation Times

This section will be devoted to the statistics of the fraction of time spent by the walker at a given site or in a given subset. As in the previous one, the probability distribution of the quantity of interest stems from the features of the asymptotic decay of return and first-return PDFs. We shall describe (or simply mention) any other necessary tool from time to time, as always.

3.3.1. Occupation Time of the Origin

In the discrete-time formalism, as we have already discussed in Section 3.1.1, the particle can not stand still on a site and so considering the occupation time of a single site is equivalent to talk about the number of visits to the same. Thanks to the Darling-Kac theorem [43], a remarkable mathematical result for Markov processes, we know [29,42] that the number of visits to the starting point (properly rescaled by the average taken over several realizations) has a Mittag-Leffler distribution of index as limiting distribution. We would emphasize that spatial inhomogeneities cause non-Markovianity for the original process, but now we are focusing on returns to the origin that are renewal events. Thus you have a sequence of i.i.d. first-return times and loss of memory is ensured in each case.

Obviously, this result is still true for waiting-time distributions with finite mean: even if the physical clock is running when the particle rests on a site and the internal clock stops, the microscopic time scale gives us the constant of direct proportionality necessary to move from the number of steps to the correct time measure, which has the same distribution, as a consequence.

In the non-trivial continuous-time translation, instead, what we need to apply is Lamperti theorem [44]. It is a statement involving two-state stochastic processes (being or not at the origin in our case). More precisely we deal with its continuous-time generalization, which has been discussed in many works, such as References [45,46,47,48,49]. Here we provide the final formula, that is the starting point of our analysis: for a detailed proof refer to Reference [47], for instance. Essentially, we will conclude that, even if the Mittag-Leffler statistics is mapped to a Lamperti distribution, the index of the discrete-time formalism is always replaced by the product characterizing the asymptotic expansion of the first-return PDF. In particular, in order to preserve the ergodic property of the discrete-time version () we have to consider a waiting-time distribution with finite mean (): in this way, the Lamperti distribution collapses to a Dirac delta function on the mean value of the occupation time.

Before formalizing the theorem, let us define some notation. We consider a stochastic process described by a set of transitions between two states (that we call and ) and we consider arrivals at the origin and departures as events. Time periods between events are i.i.d. random variables, with PDFs and respectively, that are the alternating distributions of the renewal process. In fact, the time spent on state is precisely the waiting time on a site, whereas the time spent outside the origin coincides with the first-return time to the origin starting from because, thanks to the nearest-neighbour structure, when you leave the origin you land on ±1 and by symmetry, as witnessed by Equation (37). Moreover we can notice that and are connected by means of the first-return PDF, in fact:

We assume that at the particle occupies the origin (namely it is in state ) and we denote the total times spent by the walker in the two states up to time t by and , associated with the PDFs and . Continuous-time Lamperti theorem tells us that the double Laplace transforms of these quantities are:

For the moment we focus on the non-ergodic regime . First of all, let us choose a finite-mean waiting-time distribution, which constitutes a useful check. Clearly and we know that : having different asymptotic time decays, is ruled by the slower one, namely (and indeed as you can see from Equation (37)). By substituting in Equation (46), we immediately get:

and by expanding in powers of u, one can compute the moments of order k of in the time domain:

This suggests to us that if we consider the rescaled random variable:

then we asymptotically recover the moments of the Mittag-Leffler function of index , as we said previously. We would point out that is not directly the fraction of time spent at the origin and this observation is consistent with the fact that, in addition to the presence of an infinite recurrence time, decays more slowly with respect to : without a properly scaling, is negligible with respect to and, from a mathematical point of view, it follows a Dirac delta with mass at the origin, namely all moments converge to 0.

If now, instead, we take waiting-time distributions with infinite mean, we can not find out any scaling function in such a way that the rescaled occupation time admits a limiting distribution. In fact, recalling that and , we similarly obtain:

Let us move on to the discrete-time ergodic regime: and . This time and are of the same order, since they possess the same asymptotic exponent and the slowly-varying function decays to a constant L. As a consequence, they both determine the behaviour of:

according to .

By exploiting again Equation (46), in the limit we have:

which may be inverted (see Reference [50] as in the original paper [44]) and leads to the Lamperti probability density function for the fraction of time spent at the origin (ergodicity breaking):

where is the asymmetry parameter and . In addition, we notice that:

and so the expected value of the fraction of continuous-time spent at the origin coincides with the inverse mean recurrence time of the discrete-time random walk. But, thanks to ergodicity, we have also a stationary distribution at the origin for [29] that, by means of Birkhoff ergodic theorem, satisfies:

and in conclusion:

where are the stationary measures of the subsets associated with the two states, according to the known results in the literature [46,47,48,49].

As a last comment, we turn back again to the finite-mean case. As expected, when we get:

namely a Dirac delta centered at the expected value .

3.3.2. Occupation Time of the Positive Semi-Axis

In the non-ergodic (for the discrete-time random walk) regime, since given that the fraction of time spent at the origin is negligible (as we discussed above, after Equation (50)), we have a system with a state space split into two subsets, and , that can communicate only passing through the recurrent event, the origin, that is also the initial condition. Thanks to symmetry, and the limiting distribution of the fraction of time spent in each subset is the symmetric Lamperti PDF of index , , which for finite-mean waiting times consistently boils down to (by directly applying the original Lamperti statement [44]).

In the ergodic regime, instead, when you split the state in two symmetric subsets, you must in any case look at a three-state process: although the mean recurrence time is still infinite, the fraction of time spent at the origin has its weight without any rescaling, see Equation (55). But by symmetry you know also that : as a consequence, you can easily conclude that the Lamperti distribution is with . In fact, you can retrace previous steps for the asymptotic expansion of in Equation (46) or equivalently observe that and the exponent remains unchanged when you move from to . And here too, the asymmetry parameter could be written as:

By way of conclusion, as in the previous section, if you set then you obviously recover the ergodicity in the continuous-time model, since you get a Dirac delta with mass at . Apparently this time there is a little difference with respect to the discrete-time random walk (see Reference [29]): the degenerate distribution is no longer centered at (obtained immediately from Lamperti theorem [44]), as expected by symmetry. But this value was due to the convention [44] of counting the visits at the origin according to the direction of motion. So, if we consider, in addition to the occupation time of the positive axis, half the time spent at the origin (in the long-time limit), then we correctly get a mass at . This comment allows us to highlight another aspect of the ergodicity breaking: when , on the contrary, the choice of the convention to be adopted is completely irrelevant to the final result, since the mean return time to the origin is infinite, supporting the asymmetry of the distribution.

3.4. Moments Spectrum

Having assumed the presence of a waiting-time distribution of the form specified in Section 3.1.4 and knowing the asymptotic behaviour of the moments with respect to the number of steps, Equation (8), all we have to do is find out the number of steps performed (on average) up to time t in order to determine the physical time dependence of the moments. Clearly we can write [22] that in the Laplace domain reads:

Now, by applying Tauberian theorems once more and coming back to the time domain, we get:

As a consequence, we can easily conclude that:

hence, in particular, a subdiffusive regime also arises for non-ergodic processes. The derivation of this spectrum for the discrete-time model is rather technical: it is a consequence of the specific form of the continuum limit. So we will not dwell on a brief recap this time, for the detailed analysis refer to References [29,51].

3.5. Statistics of Records

The statistics of records is another aspects relying on the mean number of steps counted in a given time period. Essentially, we have to retrace the relevant steps shown in Reference [29] for the discrete-time random walk in the light of additional knowledge.

First we must outline an excursion as each subsequence between consecutive returns to the origin: as we shall see, properties of single excursions carry information about the expected value of the maximum of the entire motion. We have handled with a stochastic process defined on the half-line, for instance on the non-negative integers : in the case of symmetric random walks, we do not need to deal with its extension to the whole line, the origin can always be assumed to be a totally reflecting barrier. Indeed changes take over if and only if positive and negative excursions are characterized by different tail bounds for their durations [34]. A fundamental assumption to fulfill, instead, is the presence of the regenerative structure, whereas Markovianity is not required. Moreover, in order to make sure that there is recurrence, we focus on the range .

For the sake of completeness, here we provide heuristic guidelines: they should simply be intended as a motivation, for rigourous proofs we entrust you to previous references. Let denote the number of excursions, equivalently the number of returns to the origin, occurred up to the n-th step and M the maximum position occupied during a single excursion. In Reference [29] we have shown that the stochastic process obtained from the Gillis random walk by means of the transformation is a symmetric random walk with no longer drift. As a consequence, thanks to classic results in random walk theory (see Reference [30]), we know that the probability of reaching the site m before coming back to the origin, that is also the probability of having M beyond m, is given by:

and then:

since, because of the renewal property, excursions are independent of one another. Now, by means of the common limits for exponential functions:

since recurrence ensures as , we deduce that the correct scaling law for the maximum is . At this point, we have just to find out the relationship between the number of excursions and the number of steps n. But this is almost immediate since we know that the properly rescaled random variable follows a Mittag-Leffler distribution of parameter [42,43], whose first moment is by definition and as a consequence . In conclusion, we get that the expected value of the maximum reached by the particle up to time t is:

An interesting comment concerns a related quantity, the duration of a single excursion T, namely the first-return time to the origin. A mathematical rigorous theorem [34] comes to our aid once again. On the event that reaches m during an excursion, semimartingale estimates can be used to show that approximately the walker spends an amount of time of order before returning to the origin:

that is clearly consistent with our result in Section 3.1.3: . Moreover, the expected value of the maximum duration of an excursion up to the n-th step is:

according to the fact that for the process is null-recurrent, whereas in the ergodic regime we have a finite mean return time and the growth of is slower. On the contrary, as we have seen in Section 3.3, in the presence of a non-trivial continuous-time random walk ergodicity is lost and in fact:

4. Numerical Results

Here our intent is to substantiate theoretical arguments by means of numerical checks. Moreover, we also take the opportunity to show how detailed analytical considerations are fundamental in this kind of context: some aspects are intrinsically difficult to be directly investigated from a numerical point of view.

Before going any further, as a general comment, from now on we will consider Pareto distributions as heavy-tailed waiting-time distributions for our simulations:

where is a positive parameter, the so-called tail index, and , the scale parameter, is the lower bound for t. In this way, the variance of the random variable for is infinite, with a finite mean, whereas it does not exist for , when the expected value becomes infinite. We will focus on the latter case.

4.1. Return and First-Return Events

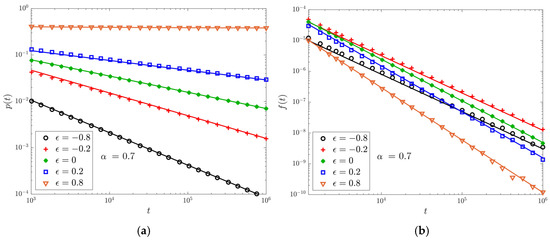

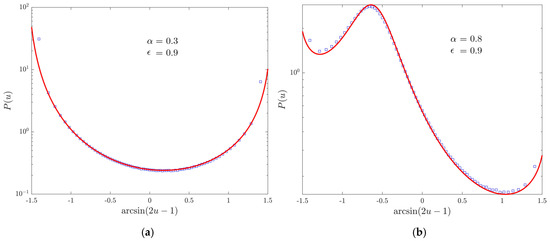

Here we compare Figure 1a,b with Equations (34) and (35), respectively: there is good agreement with the previous theoretical analysis.

Figure 1.

Data are obtained simulating walks up to time with , . Markers represent the simulation results for different values of , lines the corresponding theoretical predictions. We use logarithmic scales on both the horizontal and vertical axes. (a) The PDF of being at the origin. (b) The first-return PDF .

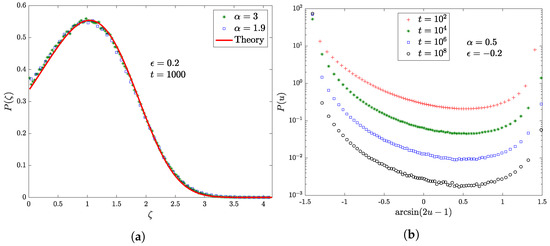

4.2. Occupation Times

In Figure 2 we examine the dependence of the PDF of the occupation time of the origin on the features of the waiting-time distribution in the purely non-ergodic regime . In the first one, Figure 2a, we have , namely a finite first moment with a finite () or infinite () variance, and as a consequence in both cases the occupation time rescaled by its mean value, , follows a limiting Mittag-Leffler distribution of index , which is the same as the properly rescaled number of visits to the origin. In the presence of an infinite first moment, instead, there is no longer an appropriate scaling function: we show (Figure 2b) the slow convergence of the fraction of occupation time to a Dirac delta with mass at 0. For increasing evolution times, the peak at becomes more and more prominent with respect to in the asymmetric U-shaped PDF, suggesting the collapse to a degenerate Lamperti distribution.

Figure 2.

Markers represent the numerical results obtained simulating walks with a cut-off . (a) The PDF of the rescaled fraction of continuous-time spent at the origin when , or , for an evolution time of . (b) The PDF of the fraction of continuous-time spent at the origin for the case , evolved up to different times. In addition, we perform the transformation on the horizontal axis.

Next, as illustrated in Figure 3, we move on to the ergodic regime of the underlying random walk: we consider different values for in order to hint that, when approaches 1, the expected Lamperti distribution, Equation (55), eventually collapses to a Dirac delta centered at the mean value of the occupation time, according to previous results in the physical literature [46,47,48,49].

Figure 3.

PDF of the fraction of continuous-time spent at the origin. Markers represent the simulation results, (red) lines the theoretical Lamperti distributions. We choose and . Moreover, for the sake of readability, we perform the transformation on the horizontal axis. (a) The case obtained simulating walks up to time . (b) The case with walks evolved up to time .

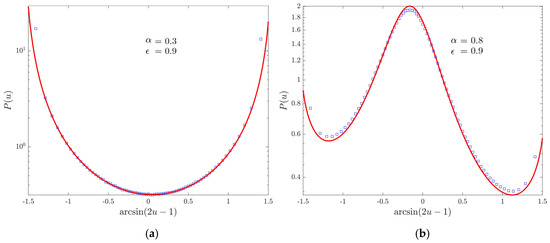

We now discuss the distribution of the occupation time of the positive semi-axis. In Figure 4, we take a purely non-ergodic process: since the fraction of time spent at the origin is negligible, we have the expected symmetric Lamperti distribution of index , which replaces the discrete-time parameter . In Figure 5, we shift to the discrete-time ergodic regime by setting . We can observe once again the birth of the continuous-time ergodic regime when , with an asymmetry due to the fact that .

Figure 4.

PDF of the fraction of continuous-time spent in the positive semi-axis for walks evolved up to time with , and . Markers represent numerical data, the (red) line the theoretical Lamperti distribution. Moreover, for the sake of readability, we perform the transformation on the horizontal axis.

Figure 5.

PDF of the fraction of continuous-time spent in the positive semi-axis. Markers represent the simulation results, (red) lines the theoretical Lamperti distributions. We choose and . Moreover, for the sake of readability, we perform the transformation on the horizontal axis. (a) The case obtained simulating walks up to time . (b) The case with walks evolved up to time .

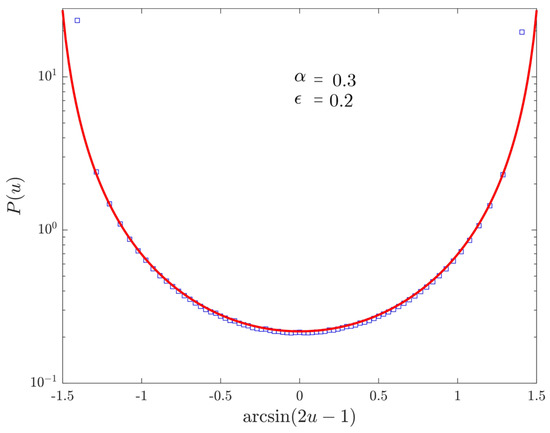

4.3. Moments Spectrum

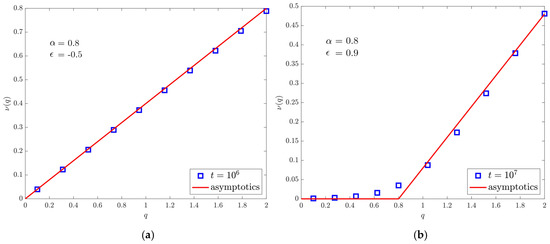

In Figure 6a you can see the expected smooth behaviour for a purely non-ergodic process, although it is no longer related to normal diffusion. In Figure 6b, instead, in addition to subdiffusion we recognize the presence of a corner, since for the moments tend to a constant, which is typical of the underlying ergodic property: the convergence near the critical point is slower.

Figure 6.

Comparison between the asymptotic exponents of the power-law growth in time of the q-th moments. Markers represent the simulation results, lines the theoretical predictions. is set equal to and . (a) The case . The figure is obtained by simulating walks up to time . (b) The case with walks evolved up to time .

4.4. Records

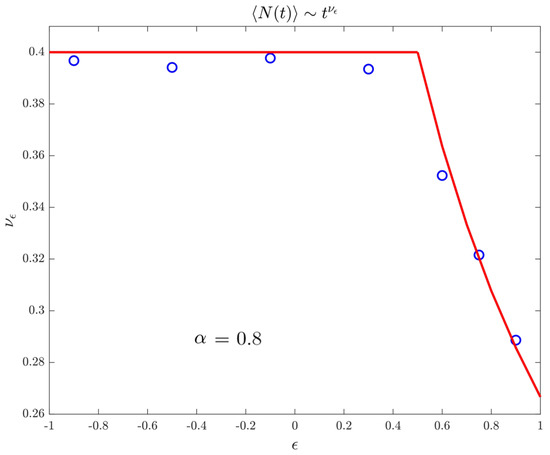

In Figure 7, we finally show the asymptotic behaviour of the mean number of records, or equivalently the expected maximum, up to time t. In particular, we want to emphasize that, even if the range becomes an anomalous regime (in contrast with the discrete-time model), the mean number of records still behaves as the first moment.

Figure 7.

Exponent characterizing the power-law growth of the number of records with respect to the time. We consider walks and an evolution time of . Again we have and . Markers refer to data and the (red) line to the theoretical prediction.

5. Discussion

We have reassessed all the exact results found out in our previous work [29] in the light of the continuous-time formalism. By considering waiting times on the sites picked from a heavy-tailed distribution lacking the first moment, meaningful modifications in all regimes can be carried out.

By tuning the real parameter , we detect the following differences with respect to the discrete-time dynamics. First of all, the ergodic regime for fades out. Nevertheless, the underlying ergodic property makes the continuous-time upper range distinct from the purely non-ergodic processes : visits at the origin have more and more weight since the fraction of time spent at the starting site does not converge to 0. Although in the presence of an infinite mean recurrence time, due solely to the irregular temporal component, we have a non-degenerate Lamperti distribution for the quantity of interest. Secondly, the strong-anomalous diffusion regime, characterizing the ergodic processes in the discete-time version, is weakly extended to the purely non-ergodic range, where weak subdiffusion replaces normal diffusion. More generally, return and first-return probabilities have a slower asymptotic power-law decay, depending on the parameter of the temporal tail bounds.

It remains an interesting open problem to extend the analysis to centrally-biased random walks with hopping rates beyond next neighbours. Some conclusions may be drawn, for instance, by applying Lamperti criteria [52,53] about the recurrence or the transience of the random walk, but only if precise assumptions regarding the increments (see Equation in Reference [52]) are satisfied. Though, in general, most of the above results must be reassessed: first of all, you are no longer forced to pass through the origin in the transitions between and and the renewal theory, by identifying events with returns to the starting site, plays a crucial role in our setting. Secondly, looking also at the corresponding diffusion equation, the most remarkable case should involve increments that are not uniformly bounded, or better random jump distances with infinite mean.

We hope our studies will fall under an increasingly wide class of general exact results for stochastic processes lacking translational invariance, which hide subtle phenomena of physical interest not satisfied by the well-known homogeneous counterpart.

Author Contributions

All authors have contributed substantially to the work. All authors have read and agreed to the published version of the manuscript.

Funding

The authors acknowledge partial support from PRIN Research Project No. 2017S35EHN “Regular and stochastic behavior in dynamical systems” of the Italian Ministry of Education, University and Research (MIUR).

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| CTRW | Continuous Time Random Walk |

| Probability Density Function | |

| i.i.d. | Independent Identically Distributed |

Appendix A. Gillis-Type Proof

In Section 3.1.1 we have written the following equation:

In the Laplace domain it reads:

and considering also the generating function on sites, we get:

similarly for and clearly . As a consequence, we can write:

Differentiating both sides with respect to x, we obtain:

to be compared with:

and so the differential equation for is:

Now, we set , and split real and imaginary parts, thus we get , where:

and the solution is:

In order to recover Equation (14), it is sufficient to perform the calculations and recall that:

Appendix B. Hitting Time PDF of the Origin: Exact Results

Let us derive the exact and asymptotic behaviours of the probabilities of being at the origin. For the moment, we neglect the limiting cases . All we need are the following properties of the Gamma function and the transformation formula 15.3.6 for the hypergeometric functions in Reference [31]:

where and . Now, recalling Equation (17) and considering for , we have to asymptotically compute in different regimes.

First of all, let us take . By skipping the intermediate steps, we get:

since:

In conclusion, by means of Tauberian theorems:

Before going any further, let us make a comment which highlights the transience property. It is glaring that:

namely it has the same scaling law of the survival probability on a site, although possibly not the same coefficient. In particular, setting means to consider return probabilities. But if you choose moreover the limiting case , when the particle moves to it will never come back since and it is like placing two barriers with total reflection on the outside. As a consequence .

If , we find again but and so when and :

In the first line of , we want to emphasize that we can always explicitly write the exact slowly-varying function (the last factor), even if then we focus on its asymptotic expansion.

When , instead we have and as a consequence:

Finally, we have to handle with the transition points. If we need to introduce also 15.3.10 and 15.3.11 in Reference [31], with , , :

where denotes the digamma function and is the Pochhammer symbol, and:

Hence we get:

Whereas, when , we need also Equation (15.3.12) in Reference [31]:

In this way, we can conclude that:

Appendix C. First-Hitting Time PDF: Exact Results

Now, by means of Equations (22) and (23), we can exploit the previous appendix in order to extend the analysis to first-passage events. Once again, we can deduce exact results although in the end we pull up asymptotic formulas, but in addition this time we have to split our investigation according to the choice of the starting site: we have to differentiate between first-passage and first-return.

Appendix C.1. First-Return

Knowing already that:

we immediately get:

and (thanks to Tauberian theorems):

Actually, we can not directly apply Tauberian theorems to , of course, but we can however get around the problem by using the following trick. If we consider with and use an auxiliary function, for instance the derivative, then:

Though we have yet to determine the result for . The limiting case is almost immediate since again:

For transient processes, instead, we must first supplement the asymptotic expansion with higher order terms:

in such a way that:

Appendix C.2. First-Hitting

Now, keeping in mind the techniques illustrated in the previous Appendix C.1 and Appendix B, we manage to generalize the results to the first-passage time to the origin, assuming to start from any other site .

As long as , we can write:

When :

since the first term in the expansion of hypergeometric functions is of order , which is dominant with respect to . Therefore:

If :

Finally, for :

At this stage, we have to focus on the transition points. Firstly, let us consider :

where the hypergeometric functions in the numerator and denominator asymptotically behave as:

In conclusion:

Secondly, when we get:

and as we did before:

Hence:

Appendix D. CTRW on

Let us deal with a simple symmetric random walk, namely a nearest-neighbour (homogeneous and symmetric) random walk on the one-dimensional integer lattice, starting from the origin and ruled by waiting periods on the sites. We know [22] that the generating functions of the discrete-time model are:

Giving up the parametrization by the number of steps, thanks to Equations (15) and (21) we can write also the Laplace transforms:

Moreover, we can recast these formulas in the required convenient way:

where and are slowly-varying functions at infinity. If we further suppose, as in Section 3.1.4, that , then:

with .

As a final point, let us emphasize that these are acknowledged results in the literature, verified with different techniques. See, for instance, Reference [22].

References

- Hughes, B.D. Random Walks and Random Environments. Volume I: Random Walks; Clarendon Press: Oxford, UK, 1995. [Google Scholar]

- Menshikov, M.; Popov, S.; Wade, A. Non-Homogeneous Random Walks. Lyapunov Function Methods for Near-Critical Stochastic Systems; Cambridge University Press: Cambridge, UK, 2017. [Google Scholar]

- Klages, R.; Radons, G.; Sokolov, I.M. Anomalous Transport: Foundations and Applications; Wiley–VHC: Berlin, Germany, 2008. [Google Scholar]

- Bouchaud, J.P.; Georges, A. Anomalous diffusion in disordered media: Statistical mechanisms, models and physical applications. Phys. Rep. 1990, 195, 127–293. [Google Scholar] [CrossRef]

- Barthelemy, P.; Bertolotti, J.; Wiersma, D.S. A Lévy flight for light. Nature 2008, 453, 495–498. [Google Scholar] [CrossRef] [PubMed]

- Dentz, M.; Cortis, A.; Scher, H.; Berkowitz, B. Time behavior of solute transport in heterogeneous media: Transition from anomalous to normal transport. Adv. Water Resour. 2004, 27, 155–173. [Google Scholar] [CrossRef]

- Cortis, A.; Berkowitz, B. Anomalous transport in “classical” soil and sand columns. Soil Sci. Soc. Am. J. 2004, 68, 1539–1548. [Google Scholar] [CrossRef]

- Kühn, T.; Ihalainen, T.O.; Hyväluoma, J.; Dross, N.; Willman, S.F.; Langowski, J.; Vihinen-Ranta, M.; Timonen, J. Protein Diffusion in Mammalian Cell Cytoplasm. PLoS ONE 2011, 6, e22962. [Google Scholar] [CrossRef]

- Nissan, A.; Berkowitz, B. Inertial Effects on Flow and Transport in Heterogeneous Porous Media. Phys. Rev. Lett. 2018, 120, 054504. [Google Scholar] [CrossRef]

- Barkai, E.; Garini, Y.; Metzler, R. Strange kinetics of single molecules in living cells. Phys. Today 2012, 65, 29–35. [Google Scholar] [CrossRef]

- Metzler, R.; Jeon, J.H.; Cherstvy, A.G.; Barkai, E. Anomalous diffusion models and their properties: Non-stationarity, non-ergodicity, and ageing at the centenary of single particle tracking. Phys. Chem. Chem. Phys. 2014, 16, 24128–24164. [Google Scholar] [CrossRef]

- He, Y.; Burov, S.; Metzler, R.; Barkai, E. Random Time-Scale Invariant Diffusion and Transport Coefficients. Phys. Rev. Lett. 2008, 101, 058101. [Google Scholar] [CrossRef]

- Burov, S.; Jeon, J.H.; Metzler, R.; Barkai, E. Single particle tracking in systems showing anomalous diffusion: The role of weak ergodicity breaking. Phys. Chem. Chem. Phys. 2010, 13, 1800–1812. [Google Scholar] [CrossRef]

- Gillis, J. Centrally biased discrete random walk. Q. J. Math. 1956, 7, 144–152. [Google Scholar]

- Percus, O.E. Phase transition in one-dimensional random walk with partially reflecting boundaries. Adv. Appl. Probab. 1985, 17, 594–606. [Google Scholar] [CrossRef]

- Montroll, E.W. Random Walks on Lattices. III. Calculation of First-Passage Times with Application to Exciton Trapping on Photosynthetic Units. J. Math. Phys. 1969, 10, 153–165. [Google Scholar] [CrossRef]

- Hill, J.M.; Gulati, C.M. The random walk associated by the game of roulette. J. Appl. Probab. 1981, 18, 931–936. [Google Scholar] [CrossRef]

- Hughes, B.D.; Sahimi, M. Random walks on the Bethe lattice. J. Stat. Phys. 1982, 23, 1688–1692. [Google Scholar] [CrossRef]

- Zaburdaev, V.; Denisov, S.; Klafter, J. Lévy walks. Rev. Mod. Phys. 2015, 87, 483. [Google Scholar] [CrossRef]

- Shlesinger, M.F.; Klafter, J. Lévy Walks Versus Lévy Flights. In On Growth and Form. Fractal and Non–Fractal Patterns in Physics; Stanley, H.E., Ostrowsky, N., Eds.; NATO ASI Series E: Applied Sciences-No. 100; Martinus Nijhoff Publihers: Dordrecht, The Netherlands; Boston, MA, USA; Lancaster, UK, 1986; pp. 279–283. [Google Scholar]

- Montroll, E.W.; Weiss, G.H. Random walks on lattices, II. J. Math. Phys. 1965, 6, 167–181. [Google Scholar] [CrossRef]

- Klafter, J.; Sokolov, I.M. First Steps in Random Walks. From Tools to Applications; Oxford University Press Inc.: New York, NY, USA, 2011; pp. 1–51. [Google Scholar]

- Scalas, E. The application of continuous-time random walks in finance and economics. Physica A 2006, 362, 225–239. [Google Scholar] [CrossRef]

- Wolfgang, P.; Baschnagel, J. Stochastic Processes. From Physics to Finance; Springer: Berlin/Heidelberg, Germany, 2013. [Google Scholar]

- Scher, H.; Shlesinger, M.F.; Bendler, J.T. Time-scale invariance in transport and relaxation. Phys. Today 1991, 44, 26–34. [Google Scholar] [CrossRef]

- Berkowitz, B.; Cortis, A.; Dentz, M.; Scher, H. Modeling non-Fickian transport in geological formations as a continuous time random walk. Rev. Geophys. 2006, 44, RG2003. [Google Scholar] [CrossRef]

- Boano, F.; Packman, A.I.; Cortis, A.; Ridolfi, R.R.L. A continuous time random walk approach to the stream transport of solutes. Water Resour. Res. 2007, 43, W10425. [Google Scholar] [CrossRef]

- Geiger, S.; Cortis, A.; Birkholzer, J.T. Upscaling solute transport in naturally fractured porous media with the continuous time random walk method. Water Resour. Res. 2010, 46, W12530. [Google Scholar] [CrossRef]

- Onofri, M.; Pozzoli, G.; Radice, M.; Artuso, R. Exploring the Gillis model: a discrete approach to diffusion in logarithmic potentials. J. Stat. Mech. 2020, 2020, 113201. [Google Scholar] [CrossRef]

- Redner, S. A Guide to First-Passage Processes; Cambridge University Press: Cambridge, UK, 2001. [Google Scholar]

- Abramowitz, M.; Stegun, I.A. Handbook of Mathematical Functions; Dover Publications Inc.: New York, NY, USA, 1970. [Google Scholar]

- Hughes, B.D. On returns to the starting site in lattice random walks. Physica A 1986, 134, 443–457. [Google Scholar] [CrossRef]

- Castiglione, P.; Mazzino, A.; Muratore-Ginanneschi, P.; Vulpiani, A. On strong anomalous diffusion. Physica D 1999, 134, 75–93. [Google Scholar] [CrossRef]

- Hryniv, O.; Menshikov, M.V.; Wade, A.R. Excursions and path functionals for stochastic processes with asymptotically zero drifts. Stoch. Process. Their Appl. 2013, 123, 1891–1921. [Google Scholar] [CrossRef]

- Meerschaert, M.M.; Straka, P. Inverse Stable Subordinators. Math. Model. Nat. Phenom. 2013, 8, 1–16. [Google Scholar] [CrossRef]

- Janson, S. Stable Distributions. (Lecture Notes). arXiv 2011, arXiv:1112.0220v2. [Google Scholar]

- Feller, W. An Introduction to Probability Theory and Its Applications; John Wiley and Sons, Inc.: New York, NY, USA, 1971; Volume II. [Google Scholar]

- Sparre Andersen, E. On the fluctuations of sums of random variables II. Math. Scand. 1954, 2, 195–223. [Google Scholar]

- Mounaix, P.; Majumdar, S.N.; Schehr, G. Statistics of the number of records for random walks and Lévy Flights on a 1D Lattice. J. Phys. A Math. Theor. 2020, 53, 415003. [Google Scholar] [CrossRef]

- Feller, W. An Introduction to Probability Theory and Its Applications; John Wiley and Sons, Inc.: New York, NY, USA, 1968; Volume I. [Google Scholar]

- Artuso, R.; Cristadoro, G.; Degli Esposti, M.; Knight, G.S. Sparre-Andersen theorem with spatiotemporal correlations. Phys. Rev. E 2014, 89, 052111. [Google Scholar] [CrossRef] [PubMed]

- Radice, M.; Onofri, M.; Artuso, R.; Pozzoli, G. Statistics of occupation times and connection to local properties of nonhomogeneous random walks. Phys. Rev. E 2020, 101, 042103. [Google Scholar]

- Darling, D.A.; Kac, M. On occupation times for Markoff processes. Trans. Am. Math. Soc. 1957, 84, 444–458. [Google Scholar] [CrossRef]

- Lamperti, J. An occupation time theorem for a class of stochastic processes. Trans. Am. Math. Soc. 1958, 88, 380–387. [Google Scholar] [CrossRef]

- Godrèche, C.; Luck, J.M. Statistics of the occupation time for renewal processes. J. Stat. Phys. 2001, 104, 489–524. [Google Scholar] [CrossRef]

- Barkai, E. Residence time statistics for normal and fractional diffusion in a force field. J. Stat. Phys. 2006, 123, 883–907. [Google Scholar] [CrossRef]

- Bel, G.; Barkai, E. Occupation time and ergodicity breaking in biased continuous time random walks. J. Phys. Condens. Matter 2005, 17, S4287–S4304. [Google Scholar] [CrossRef][Green Version]

- Bel, G.; Barkai, E. Random walk to a nonergodic equilibrium concept. Phys. Rev. E 2006, 73, 16125. [Google Scholar] [CrossRef]

- Bel, G.; Barkai, E. Weak ergodicity breaking in continuous-time random walk. Phys. Rev. Lett. 2005, 94, 240602. [Google Scholar] [CrossRef]

- Widder, D.V. The Laplace Transform; Princeton University Press: London, UK, 1946. [Google Scholar]

- Dechant, A.; Lutz, E.; Barkai, E.; Kessler, D.A. Solution of the Fokker-Planck Equationation with a Logarithmic Potential. J. Stat. Phys. 2011, 145, 1524–1545. [Google Scholar] [CrossRef]

- Lamperti, J. Criteria for the Recurrence or Transience of Stochastic Process I. J. Math. Anal. Appl. 1960, 1, 314–330. [Google Scholar] [CrossRef]

- Lamperti, J. Criteria for Stochastic Processes II: Passage-Time Moments. J. Math. Anal. Appl. 1963, 7, 127–145. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).