Abstract

A load-sharing system is a parallel system in which the load is redistributed among the surviving components each time a component fails. Our focus is on statistical inference for the parameters of the lifetime distribution of each component in the system. In this paper, we introduce a methodology that builds on the conventional approach of modeling a load-sharing system through fundamental hypothetical latent random variables. We then develop an expectation-maximization (EM) algorithm for computing the maximum likelihood estimates of the parameters of a system with Lindley-distributed component lifetimes. Using several standard simulation techniques, we compare the performance of the proposed methodology with that of a Newton–Raphson-type algorithm for computing the maximum likelihood estimates. Numerical results indicate that the proposed method is more effective, consistently reaching the global maximum.

1. Introduction

We consider a load-sharing system consisting of several components connected in parallel. As the components fail one by one, the load is redistributed among the remaining surviving components.

Load-sharing systems have been studied since the 1940s [1] and have since been used in many engineering applications [2,3,4]. In particular, the studies in [5,6] made inferences on the load-sharing parameters of systems whose components have constant failure rates. Singh et al. [7] presented a more general load-sharing system in which the hazard rate is constant at the beginning and increases linearly after the failure of several components. Wang et al. [8] studied a more general load-sharing system with memory of its work history.

Park [9,10] considered the maximum likelihood estimate (MLE) of the parameters of systems whose component lifetimes follow exponential, Weibull, normal, or lognormal distributions. Singh et al. [7] analyzed a discrete-type multi-component load-sharing parallel system, and later Deshpandé et al. [11] presented a nonparametric-type load-sharing system.

Singh and Gupta [12,13] and Singh et al. [14] investigated load-sharing system models in which the failure rate of each component can be either constant or time-dependent. Bayesian analyses of load-sharing systems with several well-known component lifetime distributions have also been investigated in the literature [15,16,17]. For instance, Xu et al. [15] developed reliability demonstration tests for load-sharing systems with Weibull-distributed (including exponential) component lifetimes. More recently, Singh and Goel [16] studied a multi-component parallel system model by assuming a load-sharing tendency among its components, and Xu et al. [17] proposed an expectation-maximization (EM) algorithm for estimating the parameters of a load-sharing system subject to continuous degradation.

Based on accelerated testing models, MLE for the parameters of load-sharing systems under the classical latent variable approach has not been well studied when the component lifetimes are assumed to be normal or lognormal. As mentioned in [5], a direct generalization of the load-sharing system to these lifetime distributions is usually problematic for likelihood-based parametric inference for the following reasons: (a) it may be impossible or extremely difficult to obtain explicit MLEs; (b) standard numerical methods such as the Newton–Raphson-type method can perform poorly when the dimension of the parameter is high; and (c) if the likelihood function is not sharp enough, standard numerical methods may fail to reach the global maximizer.

In this paper, we extend the load-sharing model to Lindley-distributed component lifetimes. Since it was proposed by Lindley [18] as a counterexample in fiducial statistics, the Lindley distribution has been widely used in reliability engineering because, unlike the exponential distribution, it has a non-constant hazard rate. A natural question is how to make statistical inference on the parameters of this system. As mentioned above, the Newton–Raphson-type method can be sensitive to starting values, so it often fails to reach the correct solution, especially for high-dimensional parameters. An alternative, particularly popular in engineering applications, is the EM algorithm. For example, Albert and Baxter [19] derived EM sequences for the exponential model with several failure causes, partial masking, and right-censoring. Park [20] generalized their EM-type results to systems with different multi-modal strength distributions. Amari et al. [21] used the EM method to evaluate incomplete warranty data. Recently, Park [22] analyzed interval data using a quantile implementation of the EM algorithm, which improves the convergence and stability of the Monte Carlo EM (MCEM) algorithm, and Ouyang et al. [23] considered an interval-based model for analyzing incomplete data from a different viewpoint. These observations motivate us to develop an EM-type MLE for the parameters of the considered system that overcomes the difficulties of standard numerical methods mentioned above.

The rest of the paper is organized as follows. In Section 2, we discuss the construction of the likelihood of the system with Lindley-distributed component lifetimes. In Section 3, we develop an EM algorithm for computing the MLE for this system. In Section 4, we conduct numerical studies to investigate the performance of the proposed EM-type MLE method, and we give some concluding remarks in Section 5.

2. Likelihood Construction

In this section, we briefly review the construction of the likelihood function for the load-sharing system in Section 2.1 and then focus on the working likelihood for the load-sharing system with Lindley-distributed component lifetimes in Section 2.2.

2.1. Working Likelihood for the Load-Sharing Model

We provide here a brief description of the likelihood construction needed for a load-sharing framework and refer interested readers to [9,10] for details on various types of load-sharing models. We assume that in a load-sharing parallel system consisting of $J$ components, the failure rates of the surviving components may change after each failure, and the lifetimes of the surviving components at each failure step are independent and identically distributed (iid). Since the components fail one by one (without being replaced or repaired), the parameter of the surviving components changes from $\theta_0$ to $\theta_1$ after the first failure. Similarly, it changes from $\theta_{j-1}$ to $\theta_j$ after the $j$-th failure. Let $Y_j$ be the lifetime between the $j$-th and $(j+1)$-st failures for $j = 0, 1, \ldots, J-1$, where the 0-th failure refers to the start of operation. After the $j$-th failure, we observe $Y_j$, the time until the next failure. For notational simplicity, we assume that the components fail in the order of the $J$-th, the $(J-1)$-st, and so on.

When analyzing the lifetime distribution in a load-sharing system, standard methods are based on hypothetical latent random variables. After the $j$-th failure, there are $J-j$ surviving components in the system, whose latent lifetimes are denoted by $X_{j1}, X_{j2}, \ldots, X_{j,J-j}$, respectively. Following the traditional latent variable approach, we model

$$Y_j = \min\left(X_{j1}, X_{j2}, \ldots, X_{j,J-j}\right), \quad j = 0, 1, \ldots, J-1.$$
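To make the latent-variable model concrete, the following R sketch simulates load-sharing data under the stated assumptions: at stage $j$ the $J-j$ survivors receive fresh iid Lindley($\theta_j$) lifetimes, and $Y_j$ is their minimum. The names `rlindley_mix` and `simulate_load_sharing`, as well as the parameter values, are hypothetical; Lindley variates are drawn through the exponential/gamma mixture representation described in Appendix B.

```r
# Hypothetical helper: n Lindley(theta) variates via the mixture
# theta/(theta+1) * Exp(theta) + 1/(theta+1) * Gamma(shape = 2, rate = theta)
rlindley_mix <- function(n, theta) {
  from_exp <- runif(n) < theta / (theta + 1)
  ifelse(from_exp, rexp(n, rate = theta), rgamma(n, shape = 2, rate = theta))
}

# Hypothetical simulator: n systems of J = length(theta) components.
# At stage j the k = J - j survivors are iid Lindley(theta[j + 1]),
# and Y_j is the minimum of their latent lifetimes.
simulate_load_sharing <- function(n, theta) {
  J <- length(theta)
  Y <- matrix(NA_real_, nrow = n, ncol = J,
              dimnames = list(NULL, paste0("Y", 0:(J - 1))))
  for (j in 0:(J - 1)) {
    k <- J - j
    X <- matrix(rlindley_mix(n * k, theta[j + 1]), nrow = n, ncol = k)
    Y[, j + 1] <- apply(X, 1, min)   # Y_j = min(X_j1, ..., X_jk)
  }
  Y
}

set.seed(1)
Y_sim <- simulate_load_sharing(n = 10, theta = c(0.5, 0.8, 1.0))
Y_sim
```

Each row of `Y_sim` plays the role of one observed system $(y_{i0}, \ldots, y_{i,J-1})$.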

For a parametric model, each lifetime distribution usually involves unknown parameters, and we are particularly interested in obtaining their MLEs. Because analytic-form MLEs are often impossible to obtain, reliable numerical procedures are usually required. Of particular note is that the lifetimes of the components that do not fail at a given step are not observed and can be regarded as right-censored. For more details, the reader is directed to Equation (1.1) in [24], Section 5.3.1 in [25], Example 6.30 in [26], and the EM algorithm with right-censoring in Park [20]. This observation motivates us to construct the likelihood of the load-sharing system as follows.

We assume that for a random sample of size $n$, the $i$-th measurement consists of the $J$ inter-failure times $(Y_{i0}, Y_{i1}, \ldots, Y_{i,J-1})$, $i = 1, \ldots, n$.

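Let the realizations of these random variables be $y_{ij}$ for $i = 1, \ldots, n$ and $j = 0, \ldots, J-1$. Using the formulation in [9,10], we can construct the observed likelihood function from the $n$ observations as

$$L(\boldsymbol{\theta}) = \prod_{i=1}^{n} \prod_{j=0}^{J-1} f_{Y_j}(y_{ij}), \tag{1}$$

where $\boldsymbol{\theta} = (\theta_0, \theta_1, \ldots, \theta_{J-1})$ and $f_{Y_j}$ is the density of $Y_j$.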

Under the equal load-sharing rule, we consider parameter estimation for the system with Lindley-distributed component lifetimes. For completeness, we review the likelihood construction provided in [10]. We assume that for a specific value of $j$, the lifetimes $X_{j\ell}$ are iid for $\ell = 1, \ldots, J-j$. The cumulative distribution function (cdf) of $Y_j$ is then

$$F_{Y_j}(y) = 1 - P\left(X_{j1} > y, \ldots, X_{j,J-j} > y\right) = 1 - \left[S_j(y)\right]^{J-j}, \tag{2}$$

where $S_j(y) = S(y; \theta_j)$ is the survival function of $X_{j\ell}$ with parameter $\theta_j$, for $j = 0, 1, \ldots, J-1$. Note that we omit the index $\ell$ in $S_j$ because $X_{j1}, \ldots, X_{j,J-j}$ are iid.

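The probability density function (pdf) of $Y_j$ is obtained by differentiating Equation (2). Letting $f_j$ denote the pdf of $X_{j\ell}$ and $h_j(y) = f_j(y)/S_j(y)$ its hazard function, we have

$$f_{Y_j}(y) = (J-j)\, f_j(y) \left[S_j(y)\right]^{J-j-1} = (J-j)\, h_j(y) \left[S_j(y)\right]^{J-j}. \tag{3}$$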
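As a quick numerical sanity check of Equations (2) and (3), the sketch below verifies that the density of $Y_j$ integrates to one and matches a finite-difference derivative of its cdf. It assumes the Lindley lifetimes of Section 2.2 and arbitrary illustrative values $\theta_j = 0.7$ and $J - j = 3$; the function names are hypothetical.

```r
# Lindley pdf and survival function of a single component
lindley_pdf  <- function(x, theta) theta^2 / (theta + 1) * (1 + x) * exp(-theta * x)
lindley_surv <- function(x, theta) (theta + 1 + theta * x) / (theta + 1) * exp(-theta * x)

# Equation (3): pdf of Y_j = min of k = J - j iid lifetimes
dY <- function(y, theta, k) k * lindley_pdf(y, theta) * lindley_surv(y, theta)^(k - 1)
# Equation (2): cdf of Y_j
pY <- function(y, theta, k) 1 - lindley_surv(y, theta)^k

# The density should integrate to one over (0, Inf)
total <- integrate(dY, lower = 0, upper = Inf, theta = 0.7, k = 3, rel.tol = 1e-10)$value

# Central difference of the cdf at y = 1.2 should reproduce the density there
h <- 1e-6
slope <- (pY(1.2 + h, 0.7, 3) - pY(1.2 - h, 0.7, 3)) / (2 * h)
c(total = total, slope = slope, density = dY(1.2, 0.7, 3))
```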

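Then the likelihood and log-likelihood functions for the $n$ observations are, respectively, given by

$$L(\boldsymbol\theta) = \prod_{i=1}^{n}\prod_{j=0}^{J-1} (J-j)\, h_j(y_{ij}) \left[S_j(y_{ij})\right]^{J-j} \tag{4}$$

and

$$\ell(\boldsymbol\theta) = \sum_{i=1}^{n}\sum_{j=0}^{J-1} \left[\log(J-j) + \log h_j(y_{ij}) + (J-j)\log S_j(y_{ij})\right]. \tag{5}$$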

2.2. Likelihood Construction with the Lindley Distribution

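We now focus on the load-sharing system with Lindley-distributed component lifetimes. The pdf and cdf of the Lindley distribution with parameter $\theta > 0$ are given by

$$f(x;\theta) = \frac{\theta^2}{\theta+1}(1+x)\,e^{-\theta x} \quad\text{and}\quad F(x;\theta) = 1 - \frac{\theta+1+\theta x}{\theta+1}\,e^{-\theta x}, \tag{6}$$

respectively, for $x > 0$. The survival and hazard functions of the Lindley distribution are therefore $S(x;\theta) = \frac{\theta+1+\theta x}{\theta+1}e^{-\theta x}$ and $h(x;\theta) = \frac{\theta^2(1+x)}{\theta+1+\theta x}$, respectively. Hence, the survival and hazard functions of $X_{j\ell}$ are

$$S_j(x) = \frac{\theta_j+1+\theta_j x}{\theta_j+1}\,e^{-\theta_j x} \quad\text{and}\quad h_j(x) = \frac{\theta_j^2(1+x)}{\theta_j+1+\theta_j x}. \tag{7}$$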

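Thus, using Equations (3) and (7), it follows that

$$f_{Y_j}(y) = (J-j)\,\frac{\theta_j^2(1+y)}{\theta_j+1+\theta_j y}\left[\frac{\theta_j+1+\theta_j y}{\theta_j+1}\,e^{-\theta_j y}\right]^{J-j}, \quad y > 0. \tag{8}$$

Substituting Equation (8) into Equation (5) leads to

$$\ell(\boldsymbol\theta) = \sum_{i=1}^{n}\sum_{j=0}^{J-1}\Big[\log(J-j) + 2\log\theta_j + \log(1+y_{ij}) + (J-j-1)\log(\theta_j+1+\theta_j y_{ij}) - (J-j)\log(\theta_j+1) - (J-j)\,\theta_j y_{ij}\Big]. \tag{9}$$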

To the best of our knowledge, an analytic expression for the MLE maximizing Equation (9) cannot be derived. Thus, numerical procedures are required in this scenario; however, as mentioned earlier, the conventional Newton–Raphson-type method is very sensitive to starting values and often fails to reach the correct solution, especially for high-dimensional parameters. Because the likelihood in Equation (9) can easily be over-parameterized, we recommend an EM algorithm, discussed next, to overcome these difficulties in maximizing the likelihood in Equation (4).

3. The Proposed EM Algorithm

We first briefly discuss how to implement an EM algorithm based on the complete-data likelihood function in Section 3.1 and develop the EM-type MLEs for the load-sharing system with Lindley-distributed component lifetimes in Section 3.2.

3.1. An EM Algorithm with the Load-Sharing Model

The EM algorithm [24] is an iterative numerical algorithm for obtaining the MLE of parametric models. It consists of two steps: (i) an expectation step (E-step) and (ii) a maximization step (M-step). It is often employed to solve a difficult or complex likelihood problem by iterating these two easier steps. In the E-step, we calculate the conditional expectation of the complete-data log-likelihood function given the observed data and the current parameter estimate. In the M-step, we calculate the maximizer of this expected log-likelihood. Although the EM algorithm may be slower than gradient-based methods such as Newton–Raphson, it is very stable: an iteration never decreases the observed-data likelihood [24]. For the sake of completeness, we briefly discuss the EM algorithm when missing values such as censored or masked data are considered. For more details on missing values in terms of data coarsening, the reader is referred to [26,27,28].

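Let $\boldsymbol\theta$ denote the set of unknown parameters. We assume that the complete data can be reorganized as $(\mathbf{y}, \mathbf{z})$, where $\mathbf{y}$ represents the fully observed part and $\mathbf{z}$ stands for the missing part (the right-censored lifetimes in the load-sharing system) [24]. Then the observed-data likelihood function becomes

$$L(\boldsymbol\theta \mid \mathbf{y}) = \int L^c(\boldsymbol\theta \mid \mathbf{y}, \mathbf{z})\, d\mathbf{z},$$

where $L^c$ is the complete-data likelihood. This can also be rewritten as

$$\log L(\boldsymbol\theta \mid \mathbf{y}) = \log L^c(\boldsymbol\theta \mid \mathbf{y}, \mathbf{z}) - \log k(\mathbf{z} \mid \boldsymbol\theta, \mathbf{y}),$$

where $k(\mathbf{z} \mid \boldsymbol\theta, \mathbf{y})$ is the conditional density of the missing data given the observed data. For more details on the above, see Section 5.3.1 in [25] and Section 6.3.3 in [26]. Let $\boldsymbol\theta^{(s)}$ be the estimate at the $s$-th step of the algorithm and define

$$Q(\boldsymbol\theta \mid \boldsymbol\theta^{(s)}) = E_{\boldsymbol\theta^{(s)}}\left[\log L^c(\boldsymbol\theta \mid \mathbf{y}, \mathbf{Z}) \,\middle|\, \mathbf{y}\right].$$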

The two distinct steps of the EM algorithm can be summarized as follows:

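- E-step: compute $Q(\boldsymbol\theta \mid \boldsymbol\theta^{(s)})$.

- M-step: find $\boldsymbol\theta^{(s+1)} = \arg\max_{\boldsymbol\theta} Q(\boldsymbol\theta \mid \boldsymbol\theta^{(s)})$.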
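The E-step/M-step cycle above amounts to a fixed-point iteration $\boldsymbol\theta^{(s+1)} = M(\boldsymbol\theta^{(s)})$. The generic R driver below is a sketch, not the authors' code; `run_em` and `em_update` are hypothetical names. The usage example is a textbook right-censored exponential model, chosen only because its MLE (number of failures divided by total time on test) is known in closed form.

```r
# Generic EM driver: em_update performs one E-step followed by one M-step.
run_em <- function(theta_start, em_update, tol = 1e-10, maxit = 1000L) {
  theta <- theta_start
  for (s in seq_len(maxit)) {
    theta_new <- em_update(theta)
    if (max(abs(theta_new - theta)) < tol) {
      return(list(estimate = theta_new, iterations = s, converged = TRUE))
    }
    theta <- theta_new
  }
  list(estimate = theta, iterations = maxit, converged = FALSE)
}

# Usage: exponential lifetimes with right-censoring (hypothetical data)
obs  <- c(1, 2, 3)   # observed failure times
cens <- c(4, 5)      # right-censoring times
update_exp <- function(lambda) {
  # E-step: E[X | X > c] = c + 1 / lambda (memoryless property)
  # M-step: rate = number of units / expected total lifetime
  (length(obs) + length(cens)) / (sum(obs) + sum(cens + 1 / lambda))
}
fit <- run_em(1, update_exp)
fit$estimate   # close to 3 / 15 = 0.2, the closed-form MLE
```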

It remains to show how to implement the EM algorithm for computing the MLE of the considered load-sharing system. Because $Y_j$, the lifetime between the $j$-th and $(j+1)$-st failures, is the minimum lifetime among the remaining components, we can view this load-sharing system in the setting of competing risks [29]. That is, after the $j$-th failure, the lifetimes $X_{j1}, \ldots, X_{j,J-j}$ of the remaining surviving components compete with each other for the next failure. Moreover, because $Y_j$ is observed but the component that failed is unknown, we may view the system in the framework of masked data [30]; this is equivalent to assuming that the causes (failed components) are completely masked or missing [20,31]. Thus, analogous to the approach to complete masking in [20], we can construct the likelihood function of the system for implementing the EM algorithm as follows.

First, we introduce indicator variables that identify the failed component. Let $I(\cdot)$ be the indicator function, and let $\delta_{ij}$ be a variable such that $\delta_{ij} = \ell$ indicates that the failure ending $y_{ij}$ is the failure of the $\ell$-th remaining component. Then we define

$$Z_{ij\ell} = I(\delta_{ij} = \ell), \quad \ell = 1, \ldots, J-j. \tag{10}$$

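Since $Z_{ij\ell}$ is the indicator of the event $\{\delta_{ij} = \ell\}$ for $\ell = 1, \ldots, J-j$, the $Z_{ij\ell}$ are identically distributed Bernoulli random variables (not independent, because $\sum_{\ell} Z_{ij\ell} = 1$) with probability

$$p_{ij\ell} = P(Z_{ij\ell} = 1 \mid Y_{ij} = y_{ij}) = P(\delta_{ij} = \ell \mid Y_{ij} = y_{ij})$$

for $\ell = 1, \ldots, J-j$. Analogous to the approach in Section 8.2.2 of [32], this probability is obtained as

$$p_{ij\ell} = \frac{h_{j\ell}(y_{ij})}{\sum_{m=1}^{J-j} h_{jm}(y_{ij})} = \frac{1}{J-j}, \tag{11}$$

where $h_{j\ell}$ is the hazard function of $X_{j\ell}$ and $h_{j1} = \cdots = h_{j,J-j} = h_j$ because the $X_{j\ell}$ are iid. For the detailed derivation of Equation (11), one can refer to Appendix A in [10].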
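Equation (11) says that, given a failure at $y_{ij}$, each of the $J-j$ iid survivors is equally likely to be the one that failed; averaging over $y_{ij}$, the same holds unconditionally. A Monte Carlo check of this unconditional statement is sketched below, assuming arbitrary values $\theta = 0.6$ and $J - j = 4$ and drawing Lindley variates through the mixture of Appendix B; the frequencies of the index of the minimum should all be close to $1/4$.

```r
set.seed(42)
k     <- 4      # number of surviving components J - j (assumed)
theta <- 0.6    # common Lindley parameter (assumed)
m     <- 1e5    # number of simulated failure steps

# Lindley draws via the exponential/gamma mixture
rlindley <- function(n, th) {
  ifelse(runif(n) < th / (th + 1), rexp(n, rate = th), rgamma(n, shape = 2, rate = th))
}

X <- matrix(rlindley(m * k, theta), nrow = m, ncol = k)
failed <- max.col(-X, ties.method = "first")   # component attaining the minimum
freq <- tabulate(failed, nbins = k) / m
freq
```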

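Next, we incorporate the above Bernoulli random variables into the complete-data likelihood function. By Equation (4), the likelihood function is $L(\boldsymbol\theta) = \prod_{i=1}^{n}\prod_{j=0}^{J-1}(J-j)\,h_j(y_{ij})[S_j(y_{ij})]^{J-j}$, which can be rewritten as $L(\boldsymbol\theta) = \prod_{i=1}^{n}\prod_{j=0}^{J-1} L_{ij}$, where $L_{ij} = \sum_{\ell=1}^{J-j} f_j(y_{ij})[S_j(y_{ij})]^{J-j-1}$ sums over the $J-j$ components that could have failed at time $y_{ij}$. Using the Bernoulli random variables in Equation (10), we can rewrite $L_{ij}$ for the complete data as

$$L^c_{ij} = \prod_{\ell=1}^{J-j}\left[f_j(y_{ij})\right]^{Z_{ij\ell}}\left[S_j(y_{ij})\right]^{1-Z_{ij\ell}},$$

which results in the following likelihood function:

$$L^c(\boldsymbol\theta) = \prod_{i=1}^{n}\prod_{j=0}^{J-1}\prod_{\ell=1}^{J-j}\left[f_j(y_{ij})\right]^{Z_{ij\ell}}\left[S_j(y_{ij})\right]^{1-Z_{ij\ell}}. \tag{12}$$

For convenience, we rewrite the above likelihood function as

$$L^c(\boldsymbol\theta) = \prod_{i=1}^{n} L^c_i(\boldsymbol\theta), \tag{13}$$

where $L^c_i$ stands for the likelihood function of the $i$-th system, given by

$$L^c_i(\boldsymbol\theta) = \prod_{j=0}^{J-1}\prod_{\ell=1}^{J-j}\left[f_j(y_{ij})\right]^{Z_{ij\ell}}\left[S_j(y_{ij})\right]^{1-Z_{ij\ell}}. \tag{14}$$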

We observe that the complete-data likelihood function in (13) can now be constructed from the Bernoulli random variables in (10); however, it is still difficult to derive an explicit expression for the E-step directly from Equation (13). We may overcome this difficulty by treating the censored lifetimes as missing data. This idea avoids working with the survival function, which helps because in many practical cases a density is easier to handle than a survival function. We thus construct the complete-data likelihood from a density rather than a survival function, which eases the calculation of the E-step. Note that $S_j(y_{ij}) = \int_{y_{ij}}^{\infty} f_j(w)\,dw$. Thus, by introducing the random variable $W_{ij\ell} > y_{ij}$ for $\ell = 1, \ldots, J-j$, we can replace $S_j(y_{ij})$ in Equation (14) with $f_j(W_{ij\ell})$ in the E-step. That is, $L^c_i$ can be re-expressed as

$$L^c_i(\boldsymbol\theta) = \prod_{j=0}^{J-1}\prod_{\ell=1}^{J-j}\left[f_j(y_{ij})\right]^{Z_{ij\ell}}\left[f_j(W_{ij\ell})\right]^{1-Z_{ij\ell}}, \tag{15}$$

where the pdf of $W_{ij\ell}$ is defined as

$$f_{W_{ij\ell}}(w) = \frac{f_j(w)}{S_j(y_{ij})}, \quad w > y_{ij}. \tag{16}$$

This technique is widely used to simplify the calculation of the E-step; the reader is referred to Equation (1.1) in [24], Section 5.3.1 in [25], Example 6.30 in [26], and Park [20] for details. Using Equation (15), we obtain an explicit closed-form update, which is given in Equation (21). This greatly simplifies finding the MLE in the M-step.
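For Lindley lifetimes, the E-step only needs $E(W_{ij\ell})$ under the truncated density (16). The sketch below, with hypothetical helper names, compares numerical integration of (16) with the closed form $y + (\theta + \theta y + 2)/\{\theta(\theta + \theta y + 1)\}$, that is, $y$ plus the Lindley mean residual life, which is the quantity appearing inside `Lindley.LS.EM` in Appendix A. The test points are arbitrary.

```r
lindley_pdf  <- function(x, theta) theta^2 / (theta + 1) * (1 + x) * exp(-theta * x)
lindley_surv <- function(x, theta) (theta + 1 + theta * x) / (theta + 1) * exp(-theta * x)

# E(W) under the truncated density f(w) / S(y), w > y, by numerical integration
ew_numeric <- function(y, theta) {
  num <- integrate(function(w) w * lindley_pdf(w, theta),
                   lower = y, upper = Inf, rel.tol = 1e-8)$value
  num / lindley_surv(y, theta)
}

# Closed form: y plus the Lindley mean residual life at y
ew_closed <- function(y, theta) {
  y + (theta + theta * y + 2) / (theta * (theta + theta * y + 1))
}

c(numeric = ew_numeric(2, 0.5), closed = ew_closed(2, 0.5))   # both equal 4.8
```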

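Since $p_{ij\ell} = 1/(J-j)$ for all $i$ and $\ell$ as shown in Equation (11), we simply write $p_j = 1/(J-j)$, dropping $i$ and $\ell$ for notational simplicity. Then the complete-data likelihood function in Equation (15) can be written as

$$L^c(\boldsymbol\theta) = \prod_{i=1}^{n}\prod_{j=0}^{J-1}\prod_{\ell=1}^{J-j}\left[f_j(y_{ij})\right]^{Z_{ij\ell}}\left[f_j(W_{ij\ell})\right]^{1-Z_{ij\ell}}, \quad E(Z_{ij\ell}) = p_j. \tag{17}$$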

In what follows, using Equation (17), we implement the EM algorithm for finding the MLE of the parameters in the load-sharing system with Lindley-distributed component lifetimes.

3.2. The EM-Type Maximum Likelihood Estimates with the Lindley Distribution

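Since the component lifetimes follow the Lindley distribution with the pdf in Equation (6), the pdf of $W_{ij\ell}$ in Equation (16) becomes

$$f_{W_{ij\ell}}(w) = \frac{\theta_j^2(1+w)}{\theta_j+1+\theta_j y_{ij}}\, e^{-\theta_j (w - y_{ij})}, \quad w > y_{ij}. \tag{18}$$

Substituting the Lindley pdf into Equation (17) and taking the logarithm of $L^c(\boldsymbol\theta)$ leads to the complete-data log-likelihood function

$$\ell^c(\boldsymbol\theta) = \sum_{i=1}^{n}\sum_{j=0}^{J-1}\sum_{\ell=1}^{J-j}\Big[2\log\theta_j - \log(\theta_j+1) - \theta_j\left\{Z_{ij\ell}\, y_{ij} + (1-Z_{ij\ell})\, W_{ij\ell}\right\}\Big] + C, \tag{19}$$

where

$$C = \sum_{i=1}^{n}\sum_{j=0}^{J-1}\sum_{\ell=1}^{J-j}\Big[Z_{ij\ell}\log(1+y_{ij}) + (1-Z_{ij\ell})\log(1+W_{ij\ell})\Big]. \tag{20}$$

Note that $C$ does not involve $\boldsymbol\theta$. Thus, we can treat it as a constant in the M-step, where $\ell^c(\boldsymbol\theta)$ is differentiated with respect to $\theta_j$.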

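Let $\theta_j^{(s)}$ be the estimate of $\theta_j$ at the $s$-th EM iteration. The proposed EM algorithm can be summarized as follows.

- E-step: Because $E(Z_{ij\ell}) = p_j = 1/(J-j)$ and, by Equation (18), $E(W_{ij\ell}) = y_{ij} + m_j^{(s)}(y_{ij})$ with $$m_j^{(s)}(y) = \frac{\theta_j^{(s)} + \theta_j^{(s)} y + 2}{\theta_j^{(s)}\left(\theta_j^{(s)} + \theta_j^{(s)} y + 1\right)},$$ the expected complete-data log-likelihood is, up to a term free of $\boldsymbol\theta$, $$Q(\boldsymbol\theta \mid \boldsymbol\theta^{(s)}) = \sum_{j=0}^{J-1}\Big[n(J-j)\left\{2\log\theta_j - \log(\theta_j+1)\right\} - \theta_j \sum_{i=1}^{n}\left\{(J-j)\,y_{ij} + (J-j-1)\, m_j^{(s)}(y_{ij})\right\}\Big].$$

- M-step: We differentiate $Q(\boldsymbol\theta \mid \boldsymbol\theta^{(s)})$ with respect to $\theta_j$ and obtain $$\frac{\partial Q}{\partial \theta_j} = n(J-j)\left(\frac{2}{\theta_j} - \frac{1}{\theta_j+1}\right) - \sum_{i=1}^{n}\left\{(J-j)\,y_{ij} + (J-j-1)\, m_j^{(s)}(y_{ij})\right\}.$$ Finally, we set this derivative to zero, solve for $\theta_j$, and obtain the $(s+1)$-st EM iterate $$\theta_j^{(s+1)} = \frac{1 - \bar{w}_j^{(s)} + \sqrt{\left(\bar{w}_j^{(s)} - 1\right)^2 + 8\,\bar{w}_j^{(s)}}}{2\,\bar{w}_j^{(s)}}, \quad \bar{w}_j^{(s)} = \frac{1}{n}\sum_{i=1}^{n}\left\{y_{ij} + \frac{J-j-1}{J-j}\, m_j^{(s)}(y_{ij})\right\}. \tag{21}$$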
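The M-step update (21) is the positive root of $\bar w\,\theta^2 + (\bar w - 1)\theta - 2 = 0$, which is equivalent to the score equation $2/\theta - 1/(\theta + 1) = \bar w$. The sketch below (hypothetical names `m_step` and `em_step_stage`) codes one EM iteration for a single stage $j$ and checks that the root satisfies the score equation for a few arbitrary values of $\bar w$.

```r
# M-step (21): positive root of wbar * theta^2 + (wbar - 1) * theta - 2 = 0
m_step <- function(wbar) (1 - wbar + sqrt((wbar - 1)^2 + 8 * wbar)) / (2 * wbar)

# One EM iteration for stage j, given y = column of Y_j, k = J - j survivors,
# and the current value theta_s
em_step_stage <- function(y, k, theta_s) {
  # E-step: Lindley mean residual life m_j^(s)(y) at each observation
  mrl  <- (theta_s + theta_s * y + 2) / (theta_s * (theta_s + theta_s * y + 1))
  wbar <- mean(y + (k - 1) / k * mrl)
  m_step(wbar)
}

# The root should satisfy 2/theta - 1/(theta + 1) = wbar
sapply(c(0.1, 1, 27.98), function(w) { th <- m_step(w); 2 / th - 1 / (th + 1) })
```

For the last stage ($k = 1$) no component is censored, so the update no longer depends on $\theta_j^{(s)}$ and the iteration stops after one step; this matches the 2 iterations reported for $\theta_2$ in Appendix A.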

Based on the above EM iterations, we can easily obtain the MLE of the parameters of the system. In addition, to make our procedure accessible to practitioners, we provide an R function [33] in Appendix A.

4. Numerical Study

4.1. Real Data Analysis

We consider the real data set analyzed in [12,34] to illustrate the usefulness of the proposed procedure. We refer the readers to [12,34] for more details on the description of the data. Singh and Gupta [12] used the load-sharing model with the underlying Lindley distribution and estimated , , and based on the Newton–Raphson-type method. The corresponding log-likelihood and likelihood values are and , respectively.

We reanalyze the above real data with the EM algorithm proposed in Section 3. The R function for this analysis (Lindley.LS.EM) and the real data set are provided in Appendix A. Using the Lindley.LS.EM function, we obtained $\hat\theta_0 = 0.0362$, $\hat\theta_1 = 0.0410$, and $\hat\theta_2 = 0.0692$. The corresponding log-likelihood and likelihood values are and , respectively. Comparing the two results, we observe that the EM estimates attain a greater likelihood value, which indicates that the EM algorithm reached a higher point of the likelihood than the Newton–Raphson-type method used by Singh and Gupta [12].
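Because the log-likelihood (9) separates over stages and, under the Lindley model, each stage's term is concave in $\theta_j$, the EM fixed point should coincide with a direct one-dimensional maximization. The sketch below re-codes the EM updates of `Lindley.LS.EM` (as the hypothetical `em_stage`) on the Appendix A data and cross-checks them with `stats::optimize`; the search interval $(10^{-6}, 10)$ is an assumption.

```r
# Real data from Appendix A (28 three-component systems)
Y0 <- c(21.02, 24.25, 6.55, 15.35, 39.08, 16.20, 34.59, 19.10, 28.22,
        32.00, 11.25, 17.39, 28.47, 23.42, 42.06, 28.51, 34.56, 40.33,
        27.56, 9.54, 27.09, 40.36, 41.44, 32.23, 7.53, 28.34, 26.32, 30.47)
Y1 <- c(30.22, 45.54, 19.47, 16.37, 30.32, 4.16, 46.44, 38.40, 37.43,
        45.52, 19.09, 25.43, 31.15, 31.28, 23.21, 33.59, 32.53, 15.35,
        46.21, 36.21, 11.11, 33.21, 36.28, 8.17, 37.31, 35.58, 28.02, 40.4)
Y2 <- c(43.43, 17.19, 23.28, 25.40, 43.53, 39.52, 16.33, 20.17, 25.41,
        39.11, 11.59, 22.51, 2.41, 40.03, 45.36, 16.20, 40.44, 28.33,
        28.05, 28.12, 23.33, 17.04, 19.13, 41.27, 13.43, 41.48, 29.33, 42.13)
Y <- cbind(Y0, Y1, Y2)

# EM iterations for one stage (same E- and M-steps as Lindley.LS.EM)
em_stage <- function(y, k, theta = 1, tol = 1e-12, maxit = 10000L) {
  for (s in seq_len(maxit)) {
    mrl <- (theta + theta * y + 2) / (theta * (theta + theta * y + 1))
    w <- mean(y + (k - 1) / k * mrl)
    theta_new <- (1 - w + sqrt((w - 1)^2 + 8 * w)) / (2 * w)
    if (abs(theta_new - theta) < tol) return(theta_new)
    theta <- theta_new
  }
  theta
}

# Stage-j part of Equation (9), dropping terms free of theta
loglik_stage <- function(theta, y, k) {
  sum(2 * log(theta) + (k - 1) * log(theta + 1 + theta * y) -
        k * log(theta + 1) - k * theta * y)
}

J <- ncol(Y)
em_est <- sapply(0:(J - 1), function(j) em_stage(Y[, j + 1], k = J - j))
opt_est <- sapply(0:(J - 1), function(j)
  optimize(loglik_stage, interval = c(1e-6, 10), y = Y[, j + 1], k = J - j,
           maximum = TRUE, tol = 1e-12)$maximum)
rbind(EM = em_est, optimize = opt_est)
```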

4.2. Sensitivity of Parameter Estimation Due to Starting Values

We compare the sensitivity of the Newton–Raphson-type method and the EM algorithm to starting values. To this end, we generated ten lifetimes ($n = 10$) from a five-component parallel system with the parameter values of , , , , and . Note that Ghitany et al. [35] used a mixture model to generate Lindley random samples, whereas a sample can also be obtained directly by solving $F(x) = p$ for $x$, where $p$ is a uniform random number in $(0, 1)$. In Appendix B, we provide the quantile function of the Lindley distribution obtained by solving $F(x) = p$ for $x$. The generated data are given in Table 1.
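Solving $F(x) = p$ does not require special functions if one is willing to use a root finder. The sketch below is a base-R alternative to the Lambert W closed form of Appendix B, not the authors' implementation: the hypothetical `qlindley_num` brackets the root by doubling an upper bound and then calls `stats::uniroot`.

```r
# Lindley cdf, Equation (6)
plindley <- function(x, theta) 1 - (theta + 1 + theta * x) / (theta + 1) * exp(-theta * x)

# Solve F(x) = p for each p numerically
qlindley_num <- function(p, theta) {
  vapply(p, function(pp) {
    upper <- 1
    while (plindley(upper, theta) < pp) upper <- 2 * upper   # bracket the root
    uniroot(function(x) plindley(x, theta) - pp,
            lower = 0, upper = upper, tol = 1e-12)$root
  }, numeric(1))
}

# Inverse-transform sampling with uniform p in (0, 1)
rlindley_inv <- function(n, theta) qlindley_num(runif(n), theta)

set.seed(5)
x <- rlindley_inv(4000, theta = 1)
mean(x)   # near the Lindley mean (theta + 2) / (theta * (theta + 1)) = 1.5
```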

Table 1.

Lifetimes for the load-sharing sample of size $n = 10$.

We first need to choose starting values for both methods. We selected starting values at random and obtained the estimates based on the Newton–Raphson-type method and the EM algorithm. For the case of the proposed EM method, we obtained , , , and along with the corresponding log-likelihood value where regardless of starting values. On the other hand, for the Newton–Raphson-type method, the parameter estimates depend on the choice of starting values. For brevity, Table 2 reports only some non-convergent cases. These results clearly show that the Newton–Raphson-type method is sensitive to the choice of starting values.

Table 2.

Parameter estimates with corresponding log-likelihood values when the Newton–Raphson-type method is used.

We investigate the sensitivity of the estimates more thoroughly as follows.

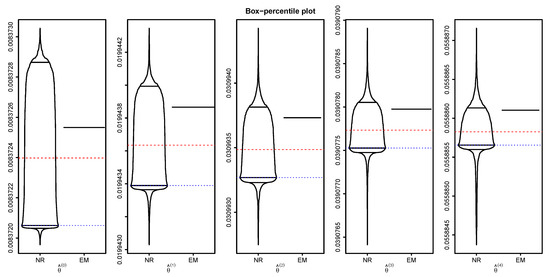

Here, we choose starting values by randomly selecting a value in and then estimate the five parameters using both methods. We repeated this procedure 1000 times, which resulted in 1000 sets of parameter estimates. Figure 1 shows box-percentile plots [36] of the estimates under each method. The Newton–Raphson-type estimates are widely spread, indicating that the method is sensitive to starting values, whereas the EM estimates show no spread at all, indicating that the EM algorithm is insensitive to starting values. The figure also shows the mean (red dashed line) and median (blue dotted line) of the Newton–Raphson-type estimates; both tend to fall below the true MLE, while the distributions of the estimates are skewed to the right.

Figure 1.

Box-percentile plots of the estimates based on the Newton–Raphson-type method (NR) and the EM algorithm.
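The EM side of this random-start experiment can be mimicked in a few lines of R. This is an illustrative sketch only: the data are simulated with hypothetical parameter values (the values behind Table 1 are not reproduced here), the starting values are drawn from an assumed range $(0.01, 5)$, and `em_stage` is a hypothetical re-implementation of the updates in `Lindley.LS.EM`. Because each stage's log-likelihood is concave, every start should lead to the same estimates up to the convergence tolerance.

```r
# EM for one stage (same updates as Lindley.LS.EM)
em_stage <- function(y, k, theta, tol = 1e-12, maxit = 10000L) {
  for (s in seq_len(maxit)) {
    mrl <- (theta + theta * y + 2) / (theta * (theta + theta * y + 1))
    w <- mean(y + (k - 1) / k * mrl)
    theta_new <- (1 - w + sqrt((w - 1)^2 + 8 * w)) / (2 * w)
    if (abs(theta_new - theta) < tol) return(theta_new)
    theta <- theta_new
  }
  theta
}
# Lindley sampler via the exponential/gamma mixture
rlindley <- function(n, th) {
  ifelse(runif(n) < th / (th + 1), rexp(n, rate = th), rgamma(n, shape = 2, rate = th))
}

set.seed(10)
J <- 5; n <- 10
theta_true <- c(0.2, 0.4, 0.6, 0.8, 1.0)   # hypothetical parameter values
Y <- sapply(0:(J - 1), function(j) {
  k <- J - j
  apply(matrix(rlindley(n * k, theta_true[j + 1]), n, k), 1, min)
})

starts <- matrix(runif(100 * J, min = 0.01, max = 5), ncol = J)  # 100 random starts
est <- t(apply(starts, 1, function(s)
  sapply(seq_len(J), function(col) em_stage(Y[, col], k = J - col + 1, theta = s[col]))))
spread <- apply(est, 2, function(v) diff(range(v)))
spread   # range of the 100 EM estimates for each parameter
```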

We obtained more extensive results, but due to space limitations, we report only the most important findings. As expected, as the number of parameters increases, the Newton–Raphson-type method becomes more unstable and, in some cases, even fails to provide reasonable results. On the other hand, the EM algorithm always provides reliable estimates. Interestingly, for a small number of parameters (e.g., five or fewer), the Newton–Raphson-type method performs reasonably well. In summary, when the number of parameters exceeds five, we highly recommend the use of the EM algorithm.

5. Conclusions

In this paper, we have introduced a methodology that builds on the conventional approach of modeling a load-sharing system through fundamental hypothetical latent random variables. In addition, we have investigated the problem of estimating the parameters of a load-sharing system with Lindley-distributed component lifetimes. To our knowledge, a closed-form MLE of the parameters is not available for this system. We thus developed an EM algorithm for finding the MLE of the load-sharing system under consideration. The EM algorithm is not only easy for practitioners to implement with the R program in Appendix A, but it also alleviates some potential issues faced by iterative numerical methods such as the Newton–Raphson-type method.

We have conducted extensive simulations to compare the performance of the EM algorithm with that of the Newton–Raphson-type method. Numerical results indicated that the Newton–Raphson-type method often fails to converge and is highly dependent on the starting values. Moreover, as the number of load-sharing parameters increases, it becomes increasingly ineffective because the chance of poor convergence grows. Consequently, we do not recommend this method for parameter estimation in the described load-sharing system. Instead, we prefer the proposed EM algorithm, because it consistently offers reliable results, even as the number of parameters becomes larger.

Author Contributions

C.P. developed methodology and R functions; M.W. investigated mathematical formulas and wrote the manuscript; and R.M.A. and H.R. carried out literature survey and review. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (NRF-2017R1A2B4004169). Also, this research was funded by the Deanship of Scientific Research at Princess Nourah bint Abdulrahman University through the Fast-track Research Funding Program.

Acknowledgments

The authors wish to thank the anonymous referees for their careful reading and suggestions for improvement of the original manuscript. The authors also thank Mark Leeds for his help in writing an earlier version of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. R Programs for the Proposed EM Algorithm

# Lindley.LS.EM function
# Y: n x J matrix; column j + 1 holds the inter-failure times Y_j
# start: starting value(s) for theta_0, ..., theta_{J-1}
Lindley.LS.EM <- function(Y, start = 1, maxits = 1000L, eps = 1.0E-15) {
  J = ncol(Y)
  converged = logical(J)
  para = numeric(J)
  THETA = start
  iter = numeric(J)
  if (length(start) < J) THETA = rep_len(start, J)
  for (j in 0L:(J-1L)) {
    thetaj = THETA[j+1L]
    V = (J-j-1L) / (J-j)   # fraction of the J - j components that are censored
    y = Y[, j+1L]
    while ((iter[j+1L] < maxits) && (!converged[j+1L])) {
      # E-step: observed lifetime plus V times the Lindley mean residual life
      w = mean(y + V*(thetaj+thetaj*y+2)/thetaj/(thetaj+thetaj*y+1))
      # M-step: positive root of w*theta^2 + (w-1)*theta - 2 = 0
      newtheta = (1-w+sqrt((w-1)^2+8*w))/(2*w)
      converged[j+1L] = abs(newtheta-thetaj) < eps
      iter[j+1L] = iter[j+1L] + 1L
      thetaj = newtheta
    }
    para[j+1L] = thetaj
  }
  list(para=para, iter=iter, conv=converged)
}
# Data
Y0 = c(21.02, 24.25, 6.55, 15.35, 39.08, 16.20, 34.59, 19.10, 28.22,
       32.00, 11.25, 17.39, 28.47, 23.42, 42.06, 28.51, 34.56, 40.33,
       27.56, 9.54, 27.09, 40.36, 41.44, 32.23, 7.53, 28.34, 26.32, 30.47)
Y1 = c(30.22, 45.54, 19.47, 16.37, 30.32, 4.16, 46.44, 38.40, 37.43,
       45.52, 19.09, 25.43, 31.15, 31.28, 23.21, 33.59, 32.53, 15.35,
       46.21, 36.21, 11.11, 33.21, 36.28, 8.17, 37.31, 35.58, 28.02, 40.4)
Y2 = c(43.43, 17.19, 23.28, 25.40, 43.53, 39.52, 16.33, 20.17, 25.41,
       39.11, 11.59, 22.51, 2.41, 40.03, 45.36, 16.20, 40.44, 28.33,
       28.05, 28.12, 23.33, 17.04, 19.13, 41.27, 13.43, 41.48, 29.33, 42.13)
Y = cbind(Y0, Y1, Y2)
# Use of the Lindley.LS.EM() function
Lindley.LS.EM(Y)
# $para
# [1] 0.03624714 0.04104211 0.06915810
# $iter
# [1] 58 37  2
# $conv
# [1] TRUE TRUE TRUE

Appendix B. Generating Lindley-Distributed Random Numbers

The pdf of the Lindley distribution with parameter $\theta > 0$ is defined as

$$f(x;\theta) = \frac{\theta^2}{\theta+1}(1+x)\,e^{-\theta x} = \frac{\theta}{\theta+1}\,\theta e^{-\theta x} + \frac{1}{\theta+1}\,\theta^2 x\, e^{-\theta x}$$

for $x > 0$. Thus, the Lindley distribution is a mixture of the exponential distribution with rate $\theta$ and the gamma distribution with shape 2 and rate $\theta$, with mixing proportions $\theta/(\theta+1)$ and $1/(\theta+1)$, respectively [35]. We can therefore generate a Lindley random sample based on this mixture model. An alternative is the direct use of the inverse cdf of the Lindley distribution, which depends on the Lambert W function. For the sake of completeness, we briefly introduce the Lambert W function in this appendix and then show how it can be used to generate a Lindley random sample.
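The mixture representation can be verified pointwise with base R densities. The sketch below (arbitrary $\theta = 1.3$; the sampler name `rlindley` is hypothetical) compares the Lindley pdf with the weighted sum of `dexp` and `dgamma` on a grid and then uses the same representation to draw a sample.

```r
theta <- 1.3
x <- seq(0, 10, by = 0.25)
lindley <- theta^2 / (theta + 1) * (1 + x) * exp(-theta * x)
mixture <- theta / (theta + 1) * dexp(x, rate = theta) +
  1 / (theta + 1) * dgamma(x, shape = 2, rate = theta)
max(abs(lindley - mixture))   # agreement up to floating-point rounding

# Sampling from the mixture: pick the component, then draw from it
rlindley <- function(n, theta) {
  ifelse(runif(n) < theta / (theta + 1),
         rexp(n, rate = theta),
         rgamma(n, shape = 2, rate = theta))
}
set.seed(7)
draws <- rlindley(50000, theta)
mean(draws)   # compare with (theta + 2) / (theta * (theta + 1))
```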

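Lambert [37] first considered Lambert's transcendental equation, whose solution is the Lambert W function, defined as the inverse of $w \mapsto w e^{w}$; that is, $W(z)$ satisfies

$$W(z)\, e^{W(z)} = z. \tag{A1}$$

The solution of the above equation was first recognized in [38].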

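Now we consider the cdf of the Lindley distribution in (6) and, for notational convenience, let $u = F(x;\theta)$. Then we have

$$(\theta+1+\theta x)\,e^{-\theta x} = (1-u)(\theta+1), \tag{A2}$$

which leads to

$$-(\theta+1+\theta x)\,e^{-(\theta+1+\theta x)} = -(1-u)(\theta+1)\,e^{-(\theta+1)}. \tag{A3}$$

If we let $z = -(\theta+1+\theta x)$, then we can rewrite (A2) as

$$z\, e^{z} = -(1-u)(\theta+1)\,e^{-(\theta+1)}, \tag{A4}$$

so that, by (A1),

$$z = W\!\left(-(1-u)(\theta+1)\,e^{-(\theta+1)}\right), \tag{A5}$$

that is,

$$x = -1 - \frac{1}{\theta} - \frac{1}{\theta}\, W\!\left(-(1-u)(\theta+1)\,e^{-(\theta+1)}\right). \tag{A6}$$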

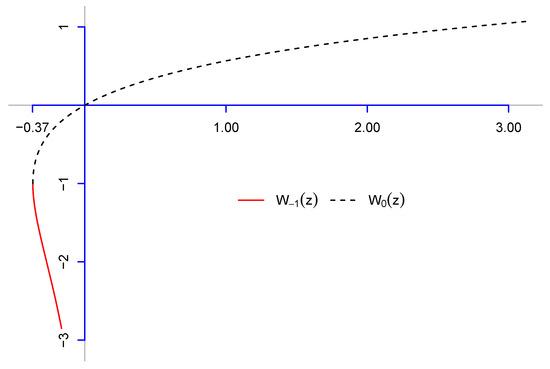

Figure A1.

Lambert W function. $W_{-1}$ denotes the lower branch and $W_0$ denotes the upper branch.

Note that the Lambert W function is not injective for $z \in (-1/e, 0)$ and has two real branches, as seen in Figure A1. The upper branch, on which $W(z) \ge -1$, is denoted by $W_0$, and the lower branch, on which $W(z) \le -1$, is denoted by $W_{-1}$. The $x$ value in Equation (A6) is always positive on the lower branch and always negative on the upper branch. Thus, the quantile function (inverse cdf) of the Lindley distribution is explicitly expressed as

$$Q(u) = -1 - \frac{1}{\theta} - \frac{1}{\theta}\, W_{-1}\!\left(-(1-u)(\theta+1)\,e^{-(\theta+1)}\right), \quad 0 < u < 1. \tag{A7}$$

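To ensure that the cdf possesses the standard properties of a cdf, we check that (A7) maps the endpoints $u \to 0$ and $u \to 1$ to $x = 0$ and $x = \infty$, respectively. As $u \to 0$, the argument of $W_{-1}$ tends to $-(\theta+1)e^{-(\theta+1)}$; since $\theta + 1 > 1$, we know $W_{-1}\!\left(-(\theta+1)e^{-(\theta+1)}\right) = -(\theta+1)$, and thus $Q(u) \to -1 - 1/\theta + (\theta+1)/\theta = 0$, as expected. When $u \to 1$, the argument tends to $0^-$, and we have

$$\lim_{z \to 0^-} W_{-1}(z) = -\infty.$$

It is immediate from $W_{-1}(z)\, e^{W_{-1}(z)} = z \to 0^-$ with $W_{-1}(z) \le -1$ that $W_{-1}(z) \to -\infty$, and thus $Q(u) \to \infty$, as expected. The Lambert W function is available in the lamW package [39] of the R language.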
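Equation (A7) can also be evaluated in base R without extra packages by computing $W_{-1}$ with a bracketed root search. The sketch below uses hypothetical helper names and the bracket $[2\log(-z), -1]$: on $w \le -1$ the map $w \mapsto w e^{w}$ is decreasing, it is below $z$ at $w = -1$ for every $z \in (-1/e, 0)$, and it is at least $z$ at $w = 2\log(-z)$, so the bracket contains the lower-branch root. If the lamW package [39] is installed, `lamW::lambertWm1()` could replace the helper.

```r
# W_{-1}(z) for -1/e < z < 0: root of w * exp(w) = z on w <= -1
lambert_wm1 <- function(z) {
  stopifnot(all(z > -exp(-1) & z < 0))
  vapply(z, function(zz) {
    uniroot(function(w) w * exp(w) - zz,
            lower = 2 * log(-zz), upper = -1, tol = 1e-14)$root
  }, numeric(1))
}

# Quantile function (A7) of the Lindley distribution
qlindley <- function(u, theta) {
  -1 - 1 / theta - lambert_wm1(-(1 - u) * (theta + 1) * exp(-(theta + 1))) / theta
}
# Lindley cdf, Equation (6), for checking the round trip F(Q(u)) = u
plindley <- function(x, theta) 1 - (theta + 1 + theta * x) / (theta + 1) * exp(-theta * x)

u <- c(0.05, 0.5, 0.95)
q <- qlindley(u, theta = 0.7)
cbind(u = u, q = q, F_of_q = plindley(q, 0.7))
```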

References

- Daniels, H.E. The Statistical Theory of the Strength of Bundles of Threads I. Proc. R. Soc. Lond. Ser. A 1945, 183, 405–435.

- Durham, S.D.; Lynch, J.D.; Padgett, W.J. A Theoretical Justification for an Increasing Average Failure Rate Strength Distribution in Fibrous Composites. Nav. Res. Logist. 1989, 36, 655–661.

- Durham, S.D.; Lynch, J.D.; Padgett, W.J.; Horan, T.J.; Owen, W.J.; Surles, J. Localized Load-Sharing Rules and Markov-Weibull Fibers: A Comparison of Microcomposite Failure Data with Monte Carlo Simulations. J. Compos. Mater. 1997, 31, 1856–1882.

- Durham, S.D.; Lynch, J.D. A Threshold Representation for the Strength Distribution of a Complex Load Sharing System. J. Stat. Plan. Inference 2000, 83, 25–46.

- Kim, H.; Kvam, P.H. Reliability Estimation Based on System Data with an Unknown Load Share Rule. Lifetime Data Anal. 2004, 10, 83–94.

- Kvam, P.H.; Peña, E.A. Estimating Load-Sharing Properties in a Dynamic Reliability System. J. Am. Stat. Assoc. 2005, 100, 262–272.

- Singh, B.; Sharma, K.K.; Kumar, A. A classical and Bayesian estimation of a k-components load-sharing parallel system. Comput. Stat. Data Anal. 2008, 52, 5175–5185.

- Wang, D.; Jiang, C.; Park, C. Reliability analysis of load-sharing systems with memory. Lifetime Data Anal. 2019, 25, 341–360.

- Park, C. Parameter Estimation for Reliability of Load Sharing Systems. IIE Trans. 2010, 42, 753–765.

- Park, C. Parameter Estimation from Load-Sharing System Data Using the Expectation-Maximization Algorithm. IIE Trans. 2013, 45, 147–163.

- Deshpandé, J.V.; Dewan, I.; Naik-Nimbalkar, U.V. A family of distributions to model load sharing systems. J. Stat. Plan. Inference 2010, 140, 1441–1451.

- Singh, B.; Gupta, P.K. Load-sharing system model and its application to the real data set. Math. Comput. Simul. 2012, 82, 1615–1629.

- Singh, B.; Gupta, P.K. Bayesian reliability estimation of a 1-out-of-k load-sharing system model. Int. J. Syst. Assur. Eng. Manag. 2014, 5, 562–576.

- Singh, B.; Rathi, S.; Kumar, S. Inferential statistics on the dynamic system model with time dependent failure-rate. J. Stat. Comput. Simul. 2013, 83, 1–24.

- Xu, J.; Hu, Q.; Yu, D.; Xie, M. Reliability demonstration test for load-sharing systems with exponential and Weibull components. PLoS ONE 2017, 12, e0189863.

- Singh, B.; Goel, R. MCMC estimation of multi-component load-sharing system model and its application. Iran. J. Sci. Technol. Trans. Sci. 2019, 43, 567–577.

- Xu, J.; Liu, B.; Zhao, X. Parameter estimation for load-sharing system subject to Wiener degradation process using the expectation-maximization algorithm. Qual. Reliab. Eng. Int. 2019, 35, 1010–1024.

- Lindley, D.V. Fiducial distributions and Bayes’ theorem. J. R. Stat. Soc. B 1958, 20, 102–107.

- Albert, J.R.G.; Baxter, L.A. Applications of the EM algorithm to the Analysis of life length data. Appl. Stat. 1995, 44, 323–341.

- Park, C. Parameter Estimation of Incomplete Data in Competing Risks Using the EM algorithm. IEEE Trans. Reliab. 2005, 54, 282–290.

- Amari, S.V.; Mohan, K.; Cline, B.; Xing, L. Expectation-Maximization Algorithm for Failure Analysis Using Incomplete Warranty Data. Int. J. Perform. Eng. 2009, 5, 403–417.

- Park, C. A Quantile Variant of the Expectation-Maximization Algorithm and its Application to Parameter Estimation with Interval Data. J. Algorithms Comput. Technol. 2018, 12, 253–272.

- Ouyang, L.; Zheng, W.; Zhu, Y.; Zhou, X. An interval probability-based FMEA model for risk assessment: A real-world case. Qual. Reliab. Eng. Int. 2020, 36, 125–143.

- Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. B 1977, 39, 1–22.

- Robert, C.P.; Casella, G. Monte Carlo Statistical Methods, 2nd ed.; Springer: New York, NY, USA, 2005.

- Little, R.J.A.; Rubin, D.B. Statistical Analysis with Missing Data, 2nd ed.; John Wiley & Sons: New York, NY, USA, 2002.

- Heitjan, D.F.; Rubin, D.B. Inference from coarse data via multiple imputation with application to age heaping. J. Am. Stat. Assoc. 1990, 85, 304–314.

- Heitjan, D.F.; Rubin, D.B. Ignorability and coarse data. Ann. Stat. 1991, 19, 2244–2253.

- Crowder, M.J. Classical Competing Risks; Chapman & Hall: London, UK, 2001.

- Miyakawa, M. Analysis of Incomplete Data in Competing Risks Model. IEEE Trans. Reliab. 1984, 33, 293–296.

- Park, C.; Padgett, W.J. Analysis of Strength Distributions of Multi-Modal Failures Using the EM Algorithm. J. Stat. Comput. Simul. 2006, 76, 619–636.

- Kalbfleisch, J.D.; Prentice, R.L. The Statistical Analysis of Failure Time Data; John Wiley & Sons: New York, NY, USA, 2002.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2020; Available online: http://www.r-project.org (accessed on 7 June 2020).

- Kvam, P.H.; Peña, E.A. Estimating Load-Sharing Properties in a Dynamic Reliability System; Technical Report; Department of Statistics, University of South Carolina: Columbia, SC, USA, 2003; Available online: https://people.stat.sc.edu/pena/TechReports/KvamPena2003.pdf (accessed on 11 November 2020).

- Ghitany, M.E.; Atieh, B.; Nadarajah, S. Lindley distribution and its application. Math. Comput. Simul. 2008, 78, 493–506.

- Esty, W.; Banfield, J. The Box-Percentile Plot. J. Stat. Softw. 2003, 8, 1–14.

- Lambert, J.H. Observationes variae in Mathesin Puram. Acta Helvetica Phys. Math. Anat. Bot. Medica 1758, 3, 128–168.

- Pólya, G.; Szegő, G. Aufgaben und Lehrsätze aus der Analysis; Springer: Berlin, Germany, 1925.

- Adler, A. lamW: Lambert-W Function, R Package Version 1.3.3. 2015. Available online: https://CRAN.R-project.org/package=lamW (accessed on 11 November 2020).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).